Musical Expertise Reshapes Cross-Domain Semantic Integration: ERP Evidence from Language and Music Processing

Abstract

1. Introduction

1.1. Language Semantics and Music Meaning

1.2. A Potential Modulator: Cognitive Control

1.3. The Present Study

- Do Mandarin Chinese sentences and chord sequences share neurocognitive mechanisms for semantic processing, and if so, how do these mechanisms operate?

- How does musical expertise modulate this underlying shared mechanism across both early and late processing stages?

2. Methods

2.1. Participants

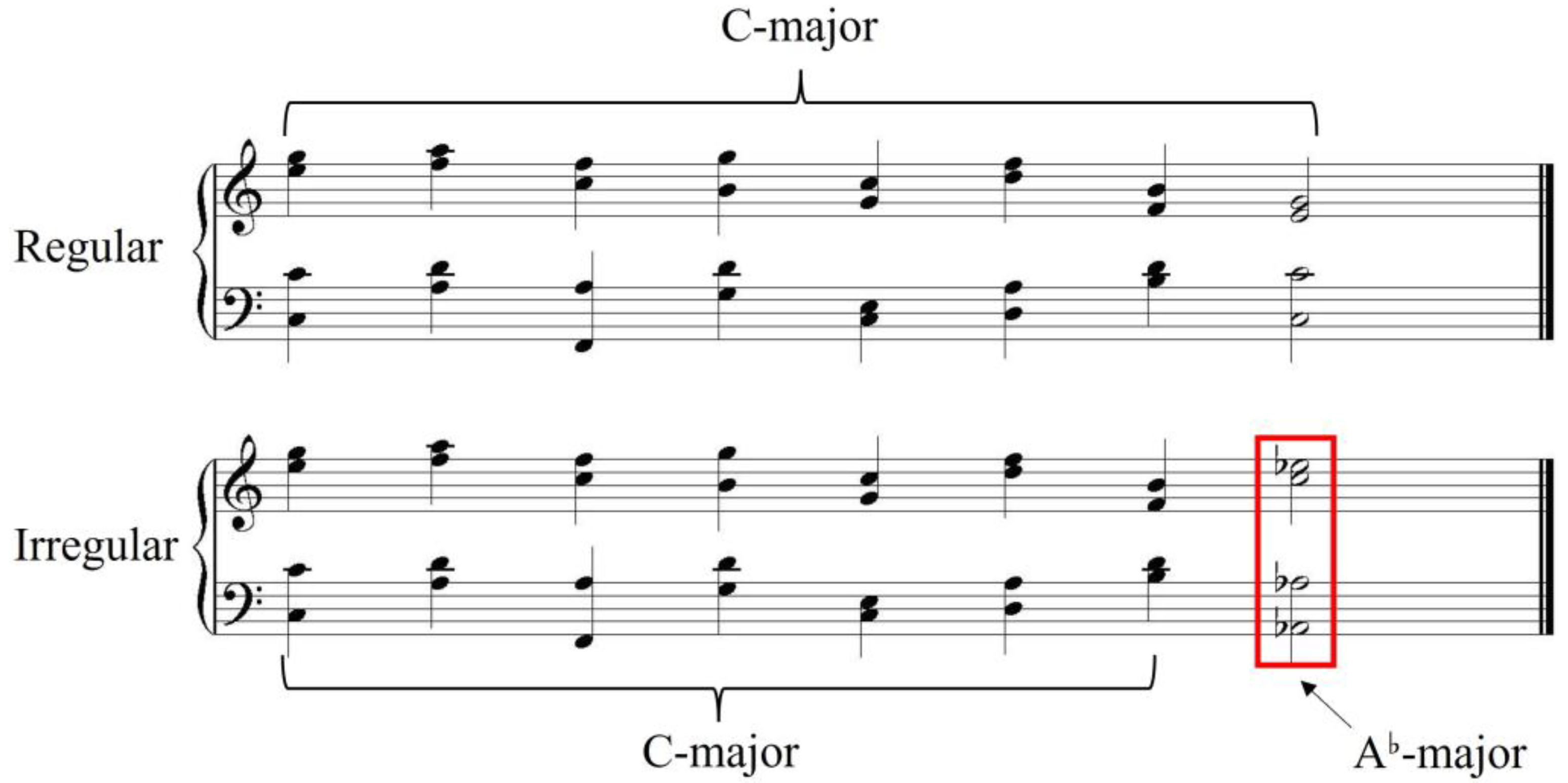

2.2. Stimuli

2.3. Procedure

2.4. EEG Recording and Preprocessing

2.5. Data Analysis

3. Results

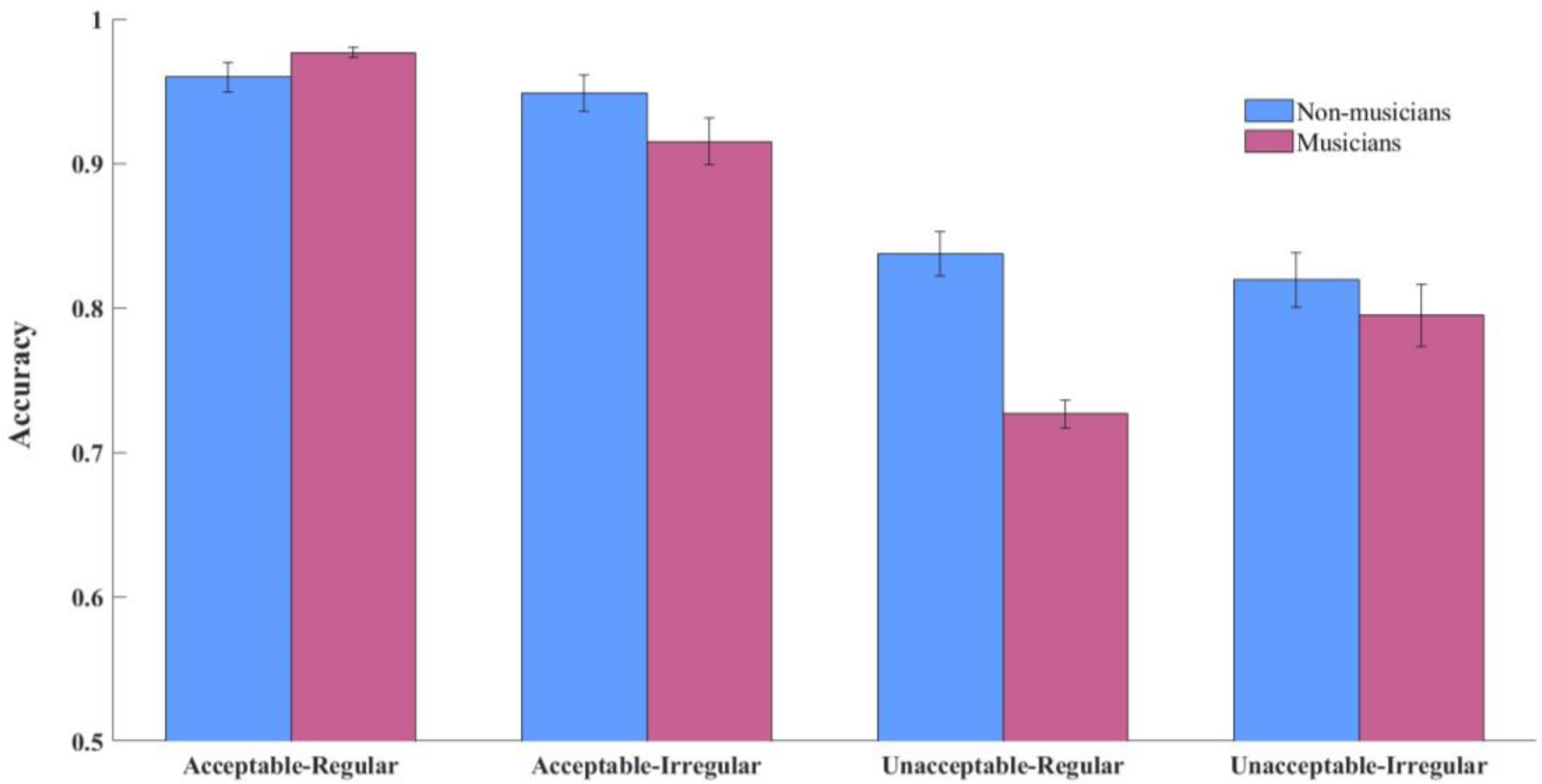

3.1. Behavioral Results

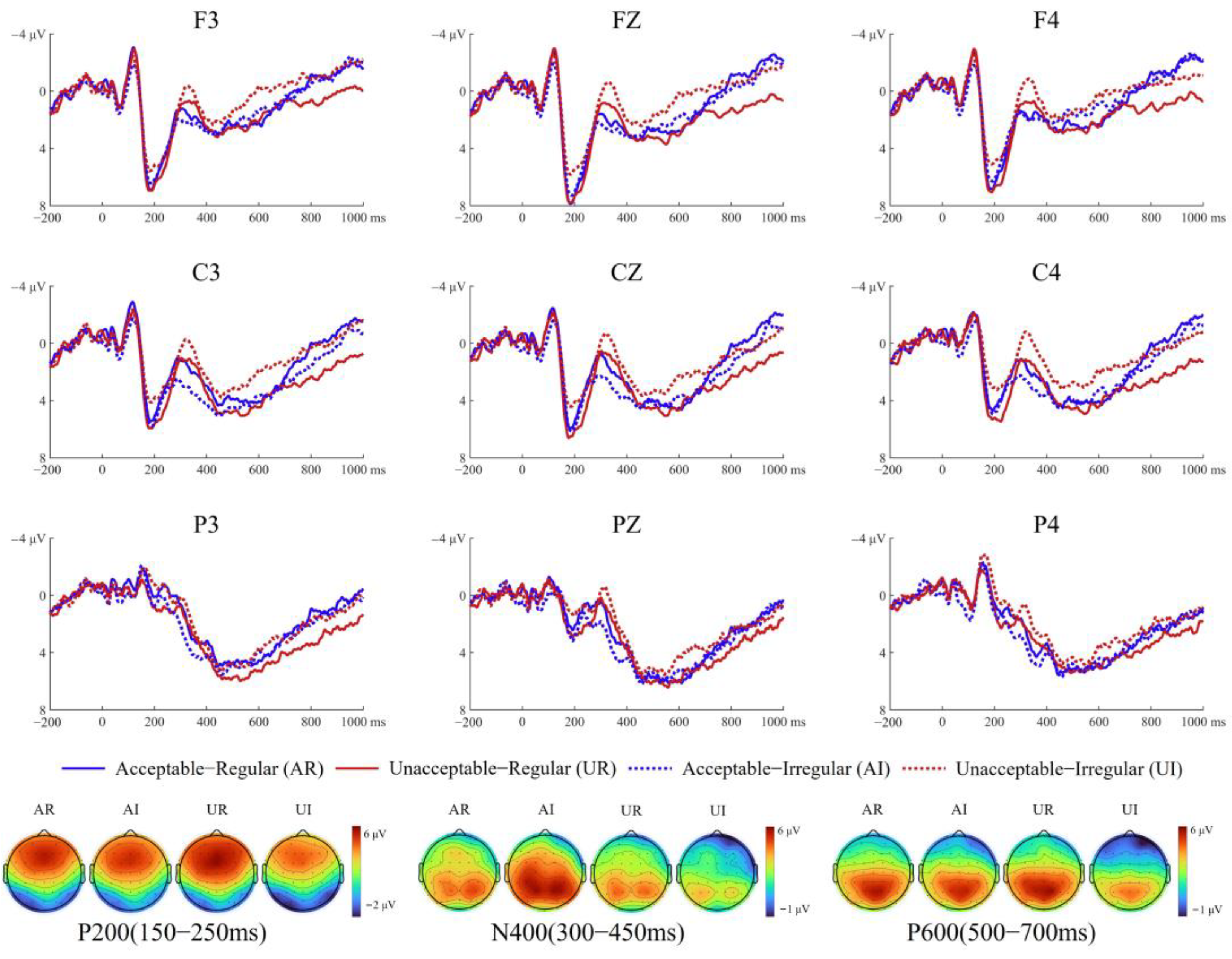

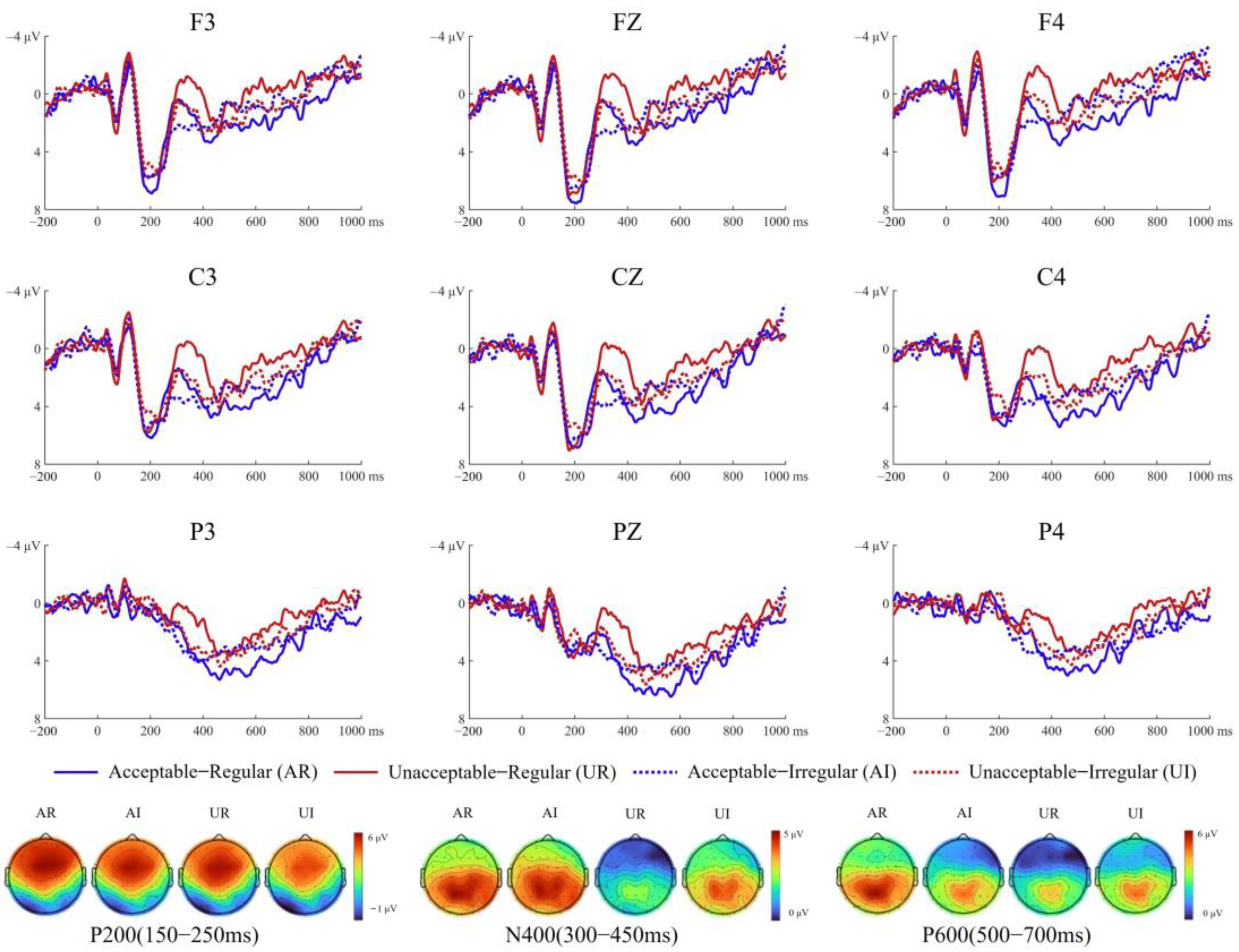

3.2. ERP Results

3.2.1. P200

3.2.2. N400

3.2.3. P600

4. Discussion

4.1. The Underlying Shared Mechanism at the Early Stage

4.2. The Underlying Shared Mechanisms at the Late Stage

4.3. The Potential Modulation of Shared Mechanisms by Musical Expertise

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | Musicians (Mean) | Musicians (SD) | Non-Musicians (Mean) | Non-Musicians (SD) | t-Test |
|---|---|---|---|---|---|
| Age | 21.73 | 1.67 | 21.83 | 1.69 | 0.214 |
| Years of education | 15.83 | 1.69 | 15.72 | 1.67 | 0.132 |
| Vocabulary | 71.45 | 4.30 | 69.92 | 3.67 | −1.324 |
| MBEA (%) | 94.87 | 2.29 | 98.09 | 1.30 | −5.802 *** |
| Years of musical training | 13.23 | 2.25 | - | - | - |
| Onset of musical training | 8.50 | 1.54 | - | - | - |

| Condition | Exemplar Sentence | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| Acceptable | 警察 | 捡到 | 了 | 一部 | 游客 | 丢失 | 的 | 手机 |
| | The policeman | picked | LE (a perfective aspect marker) | one BU (CL: classifying electric appliance) | tourist | lost | DE (a modification marker) | cell phone |
| | The policeman picked up a cell phone, which might have been left by a tourist. | | | | | | | |
| Unacceptable | 警察 | 捡到 | 了 | 一部 | 游客 | 丢失 | 的 | 钱包 |
| | The policeman | picked | LE (a perfective aspect marker) | one BU (CL: classifying electric appliance) | tourist | lost | DE (a modification marker) | wallet |
| | The policeman picked up a wallet, which might have been left by a tourist. | | | | | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, X.; Zeng, T. Musical Expertise Reshapes Cross-Domain Semantic Integration: ERP Evidence from Language and Music Processing. Brain Sci. 2025, 15, 401. https://doi.org/10.3390/brainsci15040401
