Prediction of Neurologically Intact Survival in Cardiac Arrest Patients without Pre-Hospital Return of Spontaneous Circulation: Machine Learning Approach

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design and Population

2.2. OHCA Registry and Definition

2.3. Methods (Machine Learning Algorithms)

2.4. Statistical Analysis

3. Results

3.1. Baseline Statistics

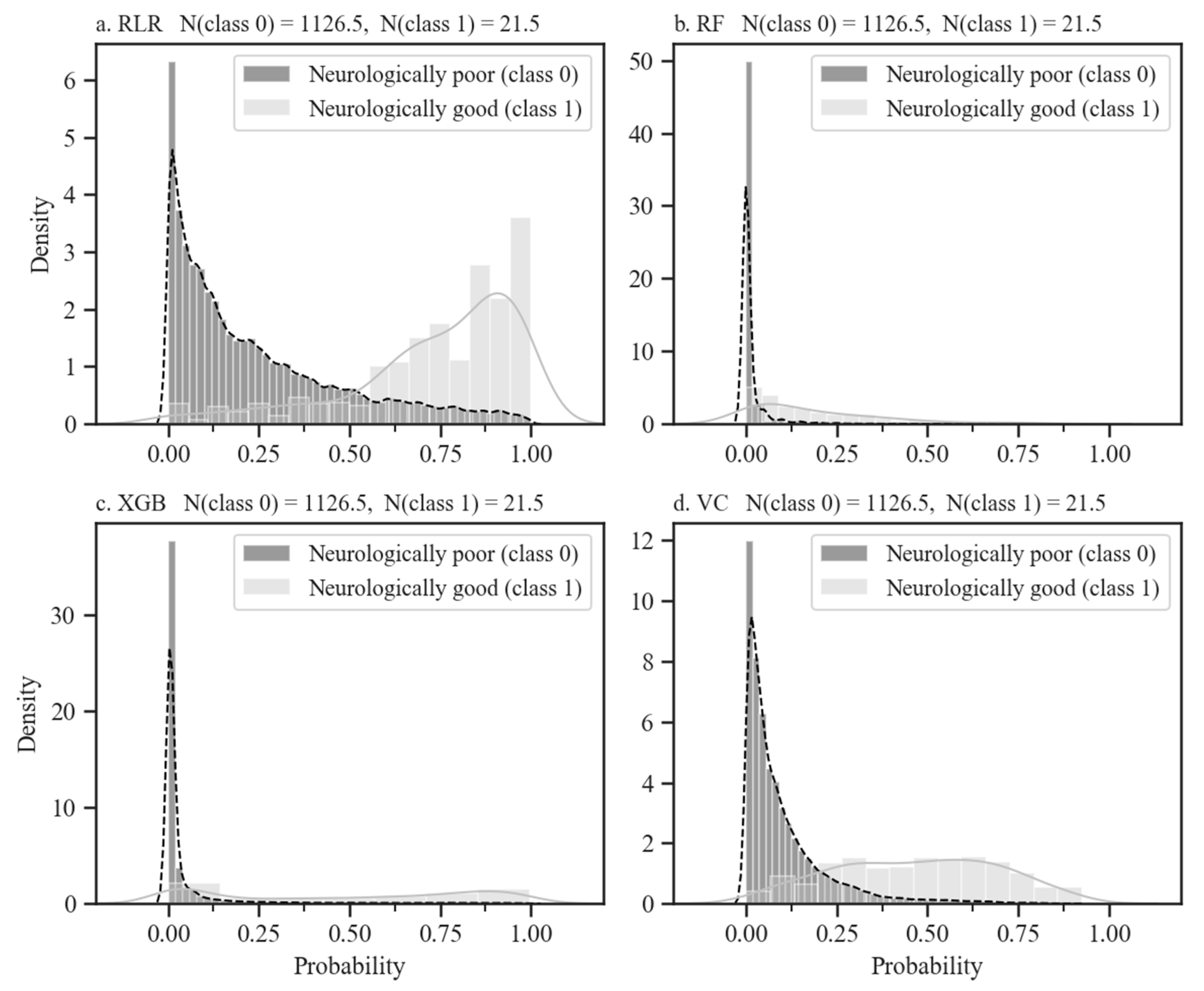

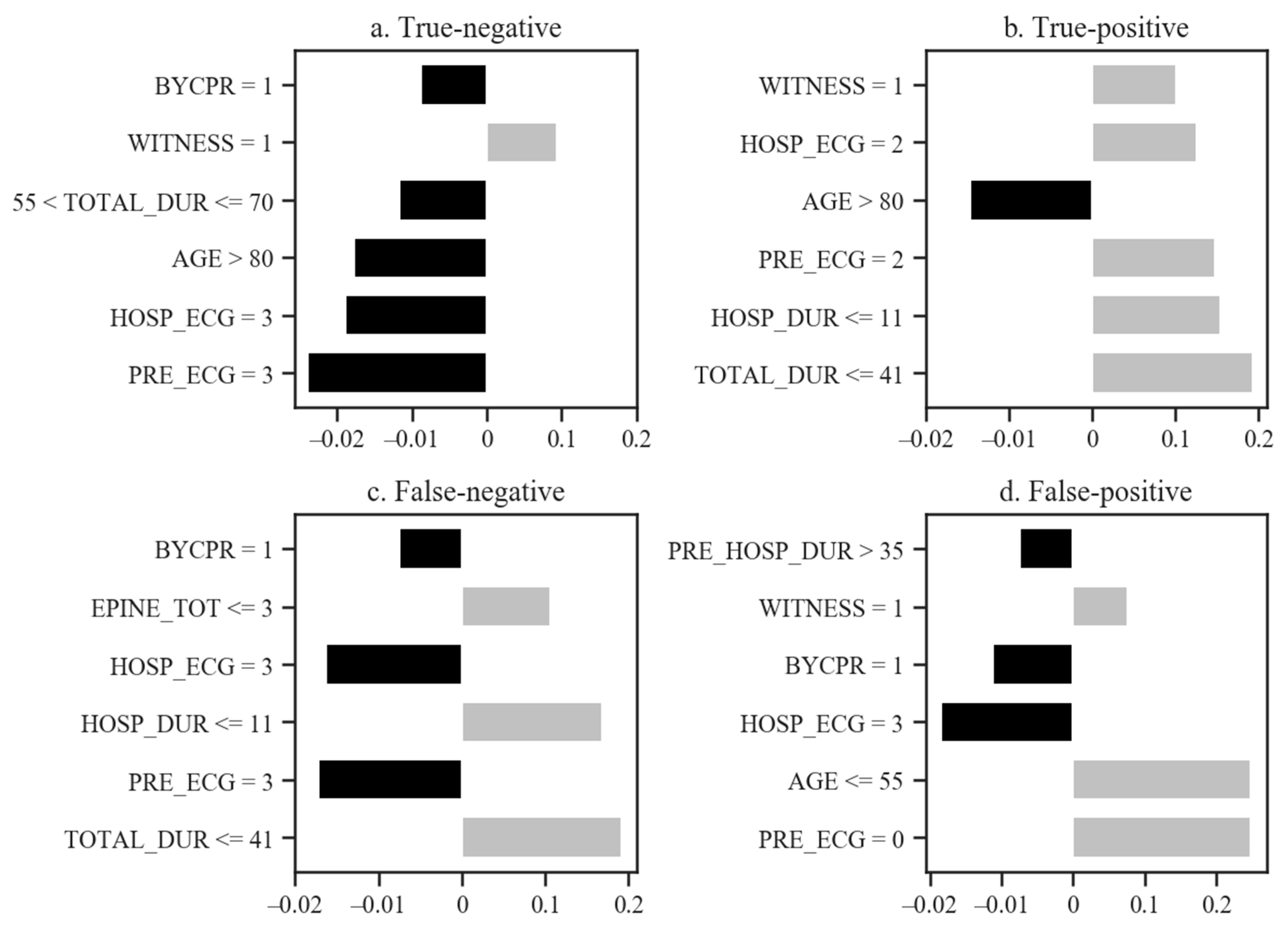

3.2. Model Performances and Validation

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Official Variable Names | Convenient Variable Names |
|---|---|
| Demographics, Age | AGE |
| Demographics, Male sex | SEX |
| Demographics, Hypertension | HTN |
| Demographics, Diabetes mellitus | DM |
| Demographics, Dyslipidemia | DYSLIPID |
| Pre-hospital, Witnessed | WITNESS |
| Pre-hospital, Occurrence at house | OCC_HOUSE |
| Pre-hospital, Bystander CPR | BYCPR |
| Pre-hospital, Automated external defibrillation use | BYAED |
| Pre-hospital, First ECG rhythm, ventricular fibrillation | PRE_ECG: 0 |
| Pre-hospital, First ECG rhythm, pulseless ventricular tachycardia | PRE_ECG: 1 |
| Pre-hospital, First ECG rhythm, pulseless electrical activity | PRE_ECG: 2 |
| Pre-hospital, First ECG rhythm, asystole | PRE_ECG: 3 |
| Pre-hospital, Defibrillation | PRE_DEFIB |
| Pre-hospital, Airway | PRE_AIRWAY |
| Hospital, Endotracheal intubation | ENDO_INTU |
| Hospital, First ECG rhythm, ventricular fibrillation | HOSP_ECG: 0 |
| Hospital, First ECG rhythm, pulseless ventricular tachycardia | HOSP_ECG: 1 |
| Hospital, First ECG rhythm, pulseless electrical activity | HOSP_ECG: 2 |
| Hospital, First ECG rhythm, asystole | HOSP_ECG: 3 |
| Hospital, Use of mechanical compressor | MECH_CPR |
| Hospital, Total epinephrine | EPINE_TOT |
| Hospital, Defibrillation number | DEFIB_N |
| Duration of resuscitation, Total | TOTAL_DUR |
| Duration of resuscitation, Pre-hospital | PRE_HOSP_DUR |
| Duration of resuscitation, Hospital | HOSP_DUR |
| Duration, No flow time | NO_FLOW_TIME |
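Appendix A maps each predictor to a short column name and stores both first-ECG-rhythm variables (PRE_ECG and HOSP_ECG) as integer codes 0 to 3. The sketch below shows one way such codes could be expanded into 0/1 indicator columns before model fitting. The one-hot scheme, the `PRE_ECG_0`-style column names, the helper functions, and the example patient are all hypothetical illustrations, not the article's preprocessing.

```python
from typing import Dict

# Integer codes shared by PRE_ECG and HOSP_ECG, as listed in Appendix A.
ECG_RHYTHM_CODES: Dict[int, str] = {
    0: "ventricular fibrillation",
    1: "pulseless ventricular tachycardia",
    2: "pulseless electrical activity",
    3: "asystole",
}

# Ventricular fibrillation and pulseless ventricular tachycardia are the shockable rhythms.
SHOCKABLE_CODES = {0, 1}


def is_shockable(code: int) -> bool:
    """Return True if the rhythm code denotes a shockable rhythm."""
    return code in SHOCKABLE_CODES


def one_hot_rhythm(prefix: str, code: int) -> Dict[str, int]:
    """Expand one integer rhythm code into 0/1 indicators (hypothetical column naming)."""
    if code not in ECG_RHYTHM_CODES:
        raise ValueError(f"unknown rhythm code: {code}")
    return {f"{prefix}_{c}": int(c == code) for c in ECG_RHYTHM_CODES}


def encode(record: Dict[str, int]) -> Dict[str, int]:
    """Replace the PRE_ECG/HOSP_ECG codes with indicators; keep other columns unchanged."""
    features = {k: v for k, v in record.items() if k not in ("PRE_ECG", "HOSP_ECG")}
    for prefix in ("PRE_ECG", "HOSP_ECG"):
        features.update(one_hot_rhythm(prefix, record[prefix]))
    return features


# Hypothetical patient: PEA as the first pre-hospital rhythm, VF as the first hospital rhythm.
patient = {"AGE": 57, "SEX": 1, "WITNESS": 1, "PRE_ECG": 2, "HOSP_ECG": 0}
features = encode(patient)
print(features)
```

Tree-based models can often consume the integer codes directly, whereas a linear model treats 0 to 3 as an ordered scale, which is one reason indicator columns are a common choice for nominal variables like these.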


| Category | Predictor Variables | Good Neurological Outcomes (n = 105) | Poor Neurological Outcomes (n = 5634) | p-Value |
|---|---|---|---|---|
| Demographics | Age (median (IQR)) | 57 (47–66) | 71 (58–80) | <0.001 |
| | Male sex (n, %) | 72 (68.6%) | 3596 (63.8%) | 0.367 |
| | Hypertension (n, %) | 45 (43.0%) | 2268 (40.3%) | 0.743 |
| | Diabetes mellitus (n, %) | 22 (21.0%) | 1438 (25.5%) | 0.099 |
| | Dyslipidemia (n, %) | 5 (4.76%) | 259 (4.60%) | 0.971 |
| Pre-hospital | Witnessed (n, %) | 85 (81.0%) | 3149 (55.9%) | <0.001 |
| | Occurrence at house (n, %) | 39 (37.1%) | 3687 (65.4%) | <0.001 |
| | Bystander CPR (n, %) | 39 (37.1%) | 2702 (48.0%) | 0.051 |
| | Automated external defibrillation use (n, %) | 3 (2.85%) | 55 (0.976%) | 0.152 |
| | First ECG rhythm (n, %) | | | <0.001 |
| | Ventricular fibrillation | 47 (44.8%) | 648 (11.5%) | |
| | Pulseless ventricular tachycardia | 2 (1.90%) | 26 (0.461%) | |
| | Pulseless electrical activity | 31 (29.5%) | 1150 (20.4%) | |
| | Asystole | 12 (11.4%) | 3451 (61.3%) | |
| | Airway (n, %) | 72 (68.6%) | 1083 (19.2%) | <0.001 |
| | | | 4483 (79.6%) | 0.007 |
| Hospital | Endotracheal intubation (n, %) | 99 (94.3%) | 5015 (89.0%) | 0.347 |
| | First ECG rhythm (n, %) | | | <0.001 |
| | Ventricular fibrillation | 37 (35.2%) | 290 (5.14%) | |
| | Pulseless ventricular tachycardia | 1 (0.952%) | 13 (0.231%) | |
| | Pulseless electrical activity | 38 (36.2%) | 1078 (19.1%) | |
| | Asystole | 24 (22.9%) | 4114 (73.0%) | |
| | Use of mechanical compressor (n, %) | 17 (16.2%) | 925 (16.4%) | 0.955 |
| | Total epinephrine (mg, median (IQR)) | 2 (1–4) | 6 (3–9) | <0.001 |
| | Defibrillation number (median (IQR)) | 0 (0–3) | 0 (0–0) | <0.001 |
| Duration | Duration of resuscitation (min, median (IQR)) | | | |
| | Total | 27 (15–43) | 55 (42–71) | <0.001 |
| | Pre-hospital | 17 (7–26) | 26 (19–36) | <0.001 |
| | Hospital | 6 (3–12) | 20 (11–30) | <0.001 |
| | No flow time (min, median (IQR)) | 0 (0–5) | 0 (0–8) | 0.016 |
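The baseline table reports a p-value per predictor but this section does not state which test produced them. As a minimal sketch, assuming Pearson's chi-square test on a 2×2 table of counts via SciPy's `chi2_contingency` (which applies Yates' continuity correction to 2×2 tables by default), a p-value for a binary predictor can be derived from the tabulated counts. The helper names are hypothetical, and this should not be read as the article's actual procedure.

```python
from scipy.stats import chi2_contingency

# Group sizes from the table header.
GOOD_N, POOR_N = 105, 5634


def two_by_two(good_yes: int, poor_yes: int) -> list:
    """2x2 contingency table: rows = outcome group, columns = predictor present/absent."""
    return [[good_yes, GOOD_N - good_yes],
            [poor_yes, POOR_N - poor_yes]]


def chi_square_p(good_yes: int, poor_yes: int) -> float:
    """Chi-square p-value; scipy applies Yates' correction to 2x2 tables by default."""
    chi2, p, dof, expected = chi2_contingency(two_by_two(good_yes, poor_yes))
    return float(p)


p_witnessed = chi_square_p(85, 3149)  # Witnessed: 85/105 vs 3149/5634
p_male = chi_square_p(72, 3596)       # Male sex: 72/105 vs 3596/5634
print(f"Witnessed: p = {p_witnessed:.2e}")
print(f"Male sex:  p = {p_male:.3f}")
```

With these counts the Witnessed comparison gives a p-value far below 0.001 and the male-sex comparison a p-value near 0.37, in line with the table; other rows (for example those with small cell counts) may not be reproduced by this particular test.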

| Model | Actual Survival | RLR | RF | XGB | VC |
|---|---|---|---|---|---|
| Predicted survival | 0.019 | 0.226 (0.218–0.234) | 0.156 (0.149–0.163) | 0.155 (0.153–0.158) | 0.0819 (0.0747–0.089) |
| AUC | n.a. | 0.893 (0.883–0.903) | 0.881 (0.869–0.892) | 0.925 (0.919–0.931) | 0.925 (0.917–0.933) |
| Brier score | n.a. | 0.389 (0.381–0.397) | 0.138 (0.124–0.151) | 0.107 (0.102–0.113) | 0.146 (0.143–0.149) |
| Log loss | n.a. | 0.119 (0.116–0.121) | 0.0153 (0.0149–0.0160) | 0.0302 (0.0283–0.0320) | 0.0318 (0.0308–0.0330) |
| Sensitivity | n.a. | 0.857 (0.842–0.872) | 0.827 (0.804–0.850) | 0.836 (0.804–0.868) | 0.857 (0.843–0.871) |
| Specificity | n.a. | 0.786 (0.778–0.793) | 0.857 (0.85–0.863) | 0.851 (0.836–0.866) | 0.865 (0.858–0.873) |
| PPV | n.a. | 0.0702 (0.0679–0.072) | 0.0983 (0.095–0.102) | 0.104 (0.0954–0.113) | 0.109 (0.104–0.114) |
| NPV | n.a. | 0.997 (0.996–0.997) | 0.996 (0.996–0.997) | 0.997 (0.996–0.997) | 0.997 (0.997–0.997) |
| F1-score | n.a. | 0.819 (0.811–0.826) | 0.839 (0.828–0.849) | 0.836 (0.823–0.848) | 0.86 (0.854–0.866) |
| Kappa | n.a. | 0.0991 (0.095–0.103) | 0.147 (0.142–0.153) | 0.155 (0.142–0.167) | 0.165 (0.158–0.173) |
| NRI | n.a. | n.a. | 0.0404 (0.0132–0.0680) | 0.0448 (0.0215–0.0680) | 0.0796 (0.0638–0.0960) |
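The performance table lists threshold-free metrics (AUC, Brier score, log loss) alongside threshold-dependent ones (sensitivity, specificity, PPV, NPV, F1, kappa). The sketch below, assuming scikit-learn, a 0.5 decision threshold, and toy labels and probabilities (all hypothetical), computes the same set of metrics for one model. Because F1 can be reported for the positive class or averaged over both classes, the sketch returns both; the table does not say which definition it uses. The hypothetical `two_category_nri` helper assumes the NRI row is the two-category net reclassification improvement relative to RLR (whose NRI cell is n.a.). Under that assumption NRI reduces to Δsensitivity + Δspecificity; for VC versus RLR that is (0.857 − 0.857) + (0.865 − 0.786) = 0.079, and for RF (0.827 − 0.857) + (0.857 − 0.786) = 0.041, both close to the tabulated values.

```python
import numpy as np
from sklearn.metrics import (brier_score_loss, cohen_kappa_score, confusion_matrix,
                             f1_score, log_loss, roc_auc_score)


def summarize(y_true, y_prob, threshold=0.5):
    """Table-style metrics for one model at one (assumed) decision threshold."""
    y_true = np.asarray(y_true)
    y_prob = np.asarray(y_prob, dtype=float)
    y_pred = (y_prob >= threshold).astype(int)
    tn, fp, fn, tp = confusion_matrix(y_true, y_pred, labels=[0, 1]).ravel()
    return {
        "AUC": roc_auc_score(y_true, y_prob),
        "Brier score": brier_score_loss(y_true, y_prob),
        "Log loss": log_loss(y_true, y_prob),
        "Sensitivity": tp / (tp + fn),
        "Specificity": tn / (tn + fp),
        "PPV": tp / (tp + fp),
        "NPV": tn / (tn + fn),
        "F1 (positive class)": f1_score(y_true, y_pred),
        "F1 (weighted)": f1_score(y_true, y_pred, average="weighted"),
        "Kappa": cohen_kappa_score(y_true, y_pred),
    }


def two_category_nri(y_true, pred_old, pred_new):
    """Two-category NRI of a new classifier versus a reference classifier (0/1 predictions)."""
    y = np.asarray(y_true)
    old, new = np.asarray(pred_old), np.asarray(pred_new)
    events, nonevents = y == 1, y == 0
    up = (new == 1) & (old == 0)    # reclassified as positive
    down = (new == 0) & (old == 1)  # reclassified as negative
    nri_events = up[events].mean() - down[events].mean()
    nri_nonevents = down[nonevents].mean() - up[nonevents].mean()
    return nri_events + nri_nonevents


# Hypothetical toy data: 1 = good neurological outcome.
y_true = np.array([0, 0, 0, 0, 0, 0, 0, 1, 1, 1])
y_prob = np.array([0.05, 0.1, 0.2, 0.3, 0.6, 0.1, 0.4, 0.7, 0.9, 0.45])

m = summarize(y_true, y_prob)
for name, value in m.items():
    print(f"{name}: {value:.3f}")

# Reference model thresholded at 0.5 versus a "new" model thresholded at 0.4.
nri = two_category_nri(y_true, (y_prob >= 0.5).astype(int), (y_prob >= 0.4).astype(int))
print(f"NRI: {nri:.3f}")
```

With roughly 2% positives, as in this cohort, PPV stays low even at high specificity, and any class-averaged F1 is dominated by the majority class, so the choice of averaging matters when comparing F1 values across studies.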

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Seo, D.-W.; Yi, H.; Bae, H.-J.; Kim, Y.-J.; Sohn, C.-H.; Ahn, S.; Lim, K.-S.; Kim, N.; Kim, W.-Y. Prediction of Neurologically Intact Survival in Cardiac Arrest Patients without Pre-Hospital Return of Spontaneous Circulation: Machine Learning Approach. J. Clin. Med. 2021, 10, 1089. https://doi.org/10.3390/jcm10051089
