Image-to-Patient Registration in Computer-Assisted Surgery of Head and Neck: State-of-the-Art, Perspectives, and Challenges

Abstract

1. Introduction

- Type of input: Preoperative images (e.g., MRI, CT scan, ultrasound (US)) provide information on the deep-seated structures and are generally acquired several hours or days before the procedure. This timing is due to the complexity of image acquisition and processing. Intraoperative imaging equipment (e.g., cone-beam computed tomography (CBCT), intraoperative CT (iCT), fluoroscopy, US, intraoperative MRI (iMRI), endoscopic cameras) or tracking devices with markers connected to the navigation system serve for the initial image-to-patient registration and also to correct navigational errors or the tissue shift during the procedure [12]. Accordingly, data could take the form of either an image or the spatial coordinates of the physical space.

- Transformations: Depending on the types of surrounding tissues, the registration could be rigid or non-rigid (i.e., deformable, local). Soft tissues require a non-rigid registration that considers all local deformations [13], while a rigid registration considers only global transformation and normally requires fewer degrees of freedom and lower computational costs.

- Techniques: For both rigid and non-rigid transformations, the registration can be manual [14], semi-automatic [15], or automatic [16]. Current studies focus on progressively removing human intervention from the loop and ultimately automating the whole procedure, which improves ergonomics and may also improve performance. Today, the most common scenario is a manual registration, in which a surgeon or a qualified operator selects a set of corresponding points in the patient’s physical space and in the target image based on a qualitative analysis. A semi-automatic procedure employs a program that assists the registration process to improve performance, accuracy, or computational cost, but still requires the intervention of an expert. The processing chain can include well-known algorithms such as the iterative closest point (ICP), normal iterative closest point (NICP) [17], or even learning-based algorithms [18]. Several other routine CAS systems are based on automatic registration. This process does not require operator intervention, but in some cases, invasive external fiducial markers and external tracking devices with bulky sensors attached to the body are required [19].

- Correspondence: Establishing a correspondence between the input data depends on the input modalities and their available features. The three common approaches for determining the correspondence are [20]: segmentation [21], sparse features (i.e., points, edges, objects) [22], or signal intensity (i.e., MRI or US signal, radiological density) [23,24]. Feature-based approaches are known to be computationally less complex, since the transformation matrix can be obtained directly from the correspondence of features between two modalities or by a simple algorithm (e.g., least squares [25]). Features can be physically available (fiducials) or extracted from images with image-processing techniques [26]. However, when the same features are not available in all situations (e.g., obstructed views, noisy images, anatomical deformations), reliability becomes an issue. As an alternative, intensity-based approaches start from default initial parameters and use an optimization algorithm to select the best model. Here, the key element is the selected metric, which underlies the integration of mutual information from both modalities [27].
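
The feature-based and ICP-style registrations mentioned above can be illustrated with a minimal sketch. It assumes NumPy, a rigid (rotation plus translation) model, and an SVD-based closed-form least-squares fit; the function names `rigid_fit` and `icp`, the brute-force nearest-neighbour search, and the synthetic grid of points are illustrative assumptions, not the implementation of any system reviewed here.

```python
import numpy as np


def rigid_fit(moving, fixed):
    """Least-squares rigid transform (R, t) mapping paired points moving -> fixed.

    Both arrays have shape (N, 3); row i of `moving` corresponds to row i of `fixed`.
    """
    moving_centroid = moving.mean(axis=0)
    fixed_centroid = fixed.mean(axis=0)
    # Cross-covariance of the centred point sets.
    H = (moving - moving_centroid).T @ (fixed - fixed_centroid)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection (determinant -1) in the SVD solution.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = fixed_centroid - R @ moving_centroid
    return R, t


def icp(source, target, iterations=10):
    """Point-to-point ICP: alternate nearest-neighbour matching and rigid_fit."""
    R_total, t_total = np.eye(3), np.zeros(3)
    moved = source.copy()
    for _ in range(iterations):
        # Brute-force nearest neighbour in `target` for every moved point.
        sq_dist = ((moved[:, None, :] - target[None, :, :]) ** 2).sum(axis=2)
        matches = target[sq_dist.argmin(axis=1)]
        R, t = rigid_fit(moved, matches)
        moved = moved @ R.T + t
        # Compose the incremental update with the running transform.
        R_total, t_total = R @ R_total, R @ t_total + t
    return R_total, t_total


# Hypothetical data: a small 3D grid (arbitrary units) and a known small motion.
axes = (np.arange(4) * 10.0, np.arange(3) * 10.0, np.arange(2) * 10.0)
source = np.stack(np.meshgrid(*axes, indexing="ij"), axis=-1).reshape(-1, 3)
angle = np.deg2rad(2.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle), np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.5, -0.3, 0.2])
target = source @ R_true.T + t_true

R_est, t_est = icp(source, target)
```

With known correspondences (fiducials or matched anatomical landmarks), `rigid_fit` alone is sufficient. `icp` is only needed when correspondences are unknown, and it generally requires a reasonable initial alignment, which is why the synthetic motion above is kept small.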

2. Methods

- R: register, registration.

- O: auditory, brain, canal, cavity, cephalic vein, cerebellar, cerebral, cerebrum, cheek, chorda, ciliary nerves, cochlea, cranial, cricoarytenoid muscle, cricoid, ear, eardrum, eye, facial, fossa, glossopharyngeal, head, hypoglossal, iris, jaw, jugular, laryngeal, larynx, lingual, lip, malleus, mandibular, masticatory, maxillary, meninges, muscles, nasal, nasolacrimal duct, neck, nerve, nose, occipital lobe, ocular, oculomotor, optic chiasm, optic nerve, oral cavity, palate, palpebral, peduncles, pharynx, retina, septum, sinus, sinuses, skull, submandibular gland, teeth, temporomandibular joint, tongue, tooth, trachea, trochlear nerve, tympani nerve.

- M: angiographic, angiography, CT, DPI, fluoroscopic, fluoroscopy, image, imaging, Laser, mesh, modality, MRI, portal image, surface, techniques, TRUS, ultrasound, US.

- N: 3D to 2D, AR, augmented reality, biopsy, endoscopic, endoscopy, FNA, guided, IGRT, image to patient, image-to-patient, implant, implantation, interventional, intra, intraoperative, intra-operative, invasive, macroscopic, macroscopy, navigation, non-invasive, radiosurgery, radiotherapy, real time, shift, surface, surgery, surgical, video.

- P: angle tumor, apex, cataract, cavernous, cerebellopontine, cervical spinal, clivus, cochlear, craniotomy, gamma-knife, glaucoma, jugular, keratectomy, labyrinthectomy, lasik, macula, myringotomy, neuroendoscopy, olfactory, ophthalmology, otologic, petrous, photorefractive, PRK, retinopathy, septoplasty, sinusitis, stereotactic, tracheostomy, turbinoplasty.

3. Registration Methods

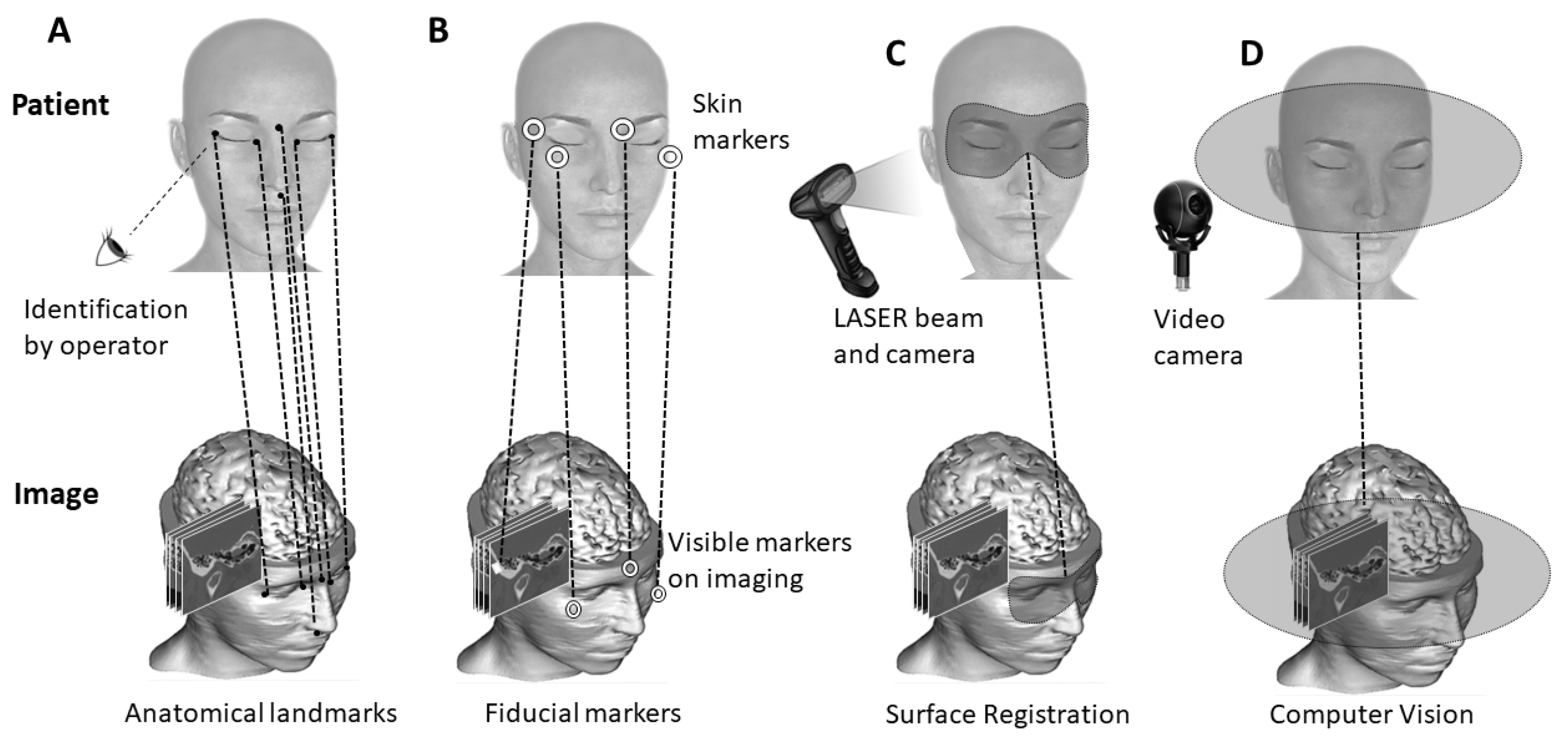

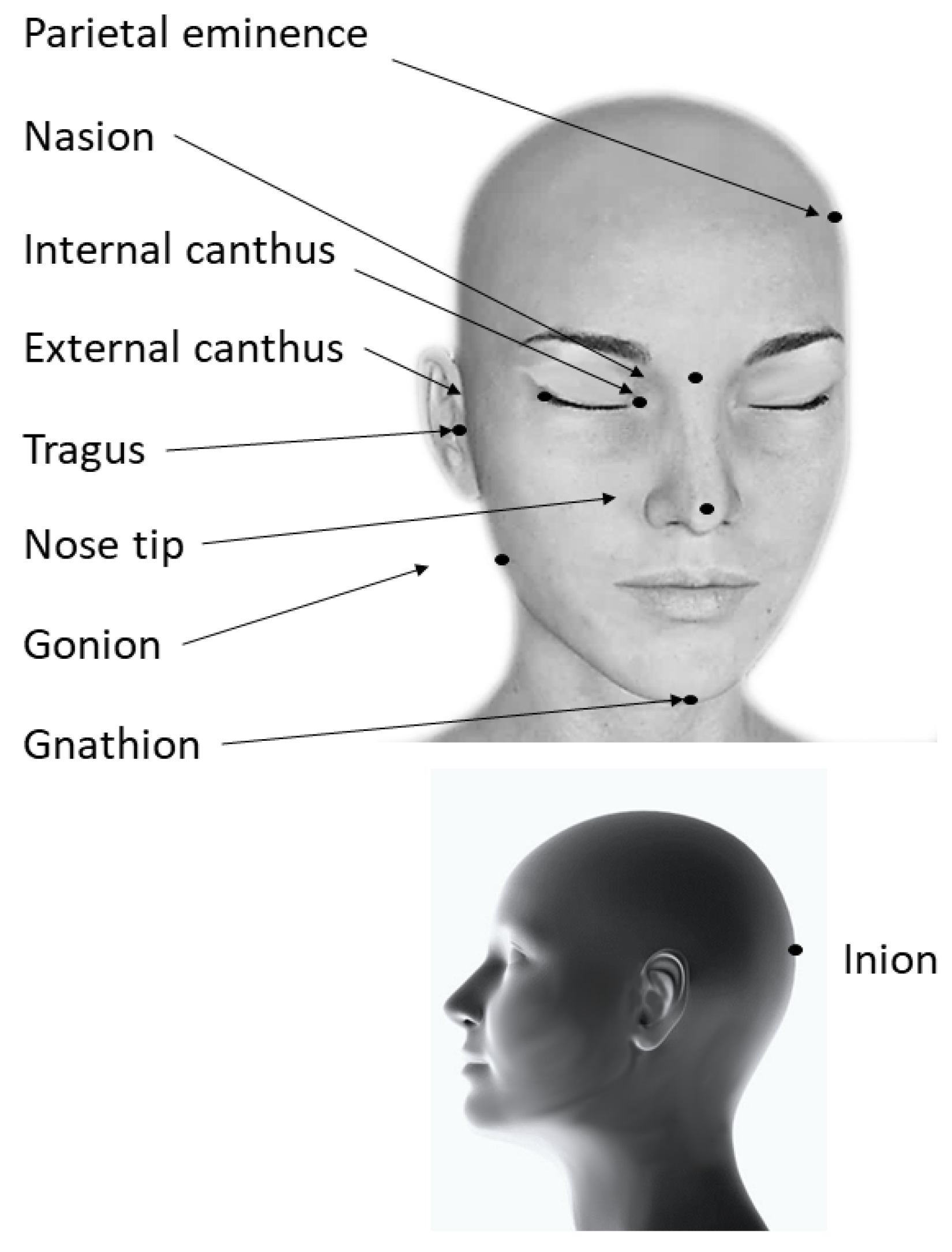

3.1. Anatomy-Based Methods

3.2. Surface-Based Methods

3.3. Marker-Based Methods

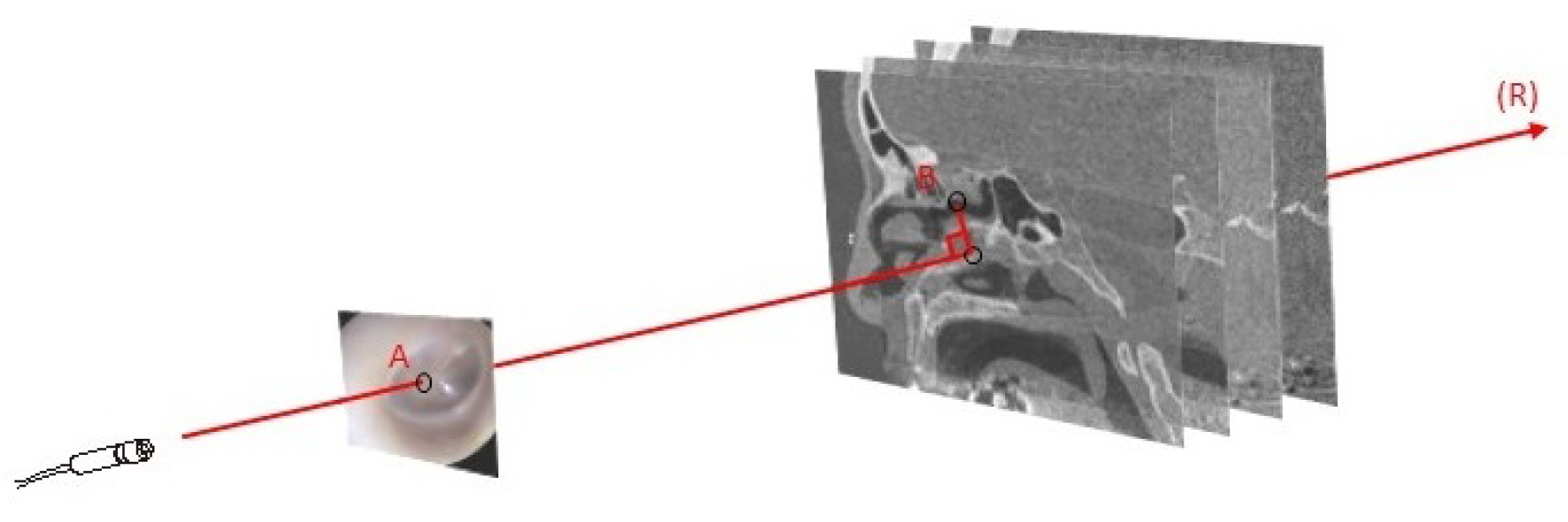

3.4. Computer-Vision-Based Methods

4. Accuracy

4.1. Target Registration Error

4.2. Fiducial Localization Error

4.3. Fiducial Registration Error

| Study | Procedure | Registration Landmarks | Test Subjects | Method | Description or Commercial Name | Target Landmarks | TRE (STD) [Min–Max] | Time |
|---|---|---|---|---|---|---|---|---|
| Gerber et al. [50] | Otology | Outer surface of the mastoid | 1 plastic temporal bone n = 32 | MB | Image-guided robotic microsurgery on the head | Cochlea and round window | 0.07 mm (0.019) | <4 min |
| Zhou et al. [89] | Otology | Mastoid bone | 13 patients | SB | Contact surface matching | Mastoid surface | 0.16 mm (0.09) | 3 min |
| Zhou et al. [89] | Otology | Mastoid bone | 13 patients | SB | Contact surface matching | Round window | 0.23 mm (0.1) | 3 min |
| Lavenir et al. [83] | Otology | Cochlea in HFUS images | 6 cadaveric guinea pig cochleae | AB + CVB | Registering micro CT scan to HFUS | Cochlear structures determined on 3D images | 0.32 mm (0.05) | NS |
| Schneider et al. [90] | Otology | Middle ear, auditory canal, mastoid cortex | 2 specimens | AB | Pair-point matching | Middle ear, auditory canal | 0.51 mm (0.28) | 3.8 min |
| Hauser et al. [88] | Rhinology | Nasion, outer ear, upper teeth | 1 plastic head n = 160 | MB | 1 dental face bow | 3 markers intranasal + 1 marker extranasal near the nose | 0.69 mm (0.2) | NS |
| Otake et al. [61] | Rhinology | Sinus endoscopic rendered image | 1 cadaver n = 7 | CVB | Rendering-based video CT | Sinus (2D to 3D TRE) | 0.83 mm | 4.4 s |
| Brouwer de Koning et al. [91] | Maxillofacial | Teeth | 1 phantom n = 45 | MB | 3D-printed dental splint | Mandibular | 0.83 mm [0.70–1.39] | >90 min |
| Broehan et al. [67] | Ophthalmology | Retinal vessels from ophthalmic microscope frames | 10 patient video sequences | CVB | CAS for laser photocoagulation system | Retinal vessels on ophthalmic microscope | (18.8) | Real time |
| Reaungamornrat et al. [92] | Laryngology | Region of tongue extending to hyoid bone on CBCT | 1 cadaver (25 images) | CVB | Deformable image registration for base-of-tongue surgery | Tongue surface | 1.7 mm (0.9) | 5 min |

| Study | Procedure | Registration Landmarks | Test Subjects | Method | Description or Commercial Name | Target Landmarks | TRE (STD) [Min–Max] | Time |
|---|---|---|---|---|---|---|---|---|
| Fu et al. [74] | Skull-base surgery | Intraoperative X-ray images | 1 head-and-neck phantom n = 49 | CVB | Intensity-based intraoperative X-ray to CT registration in radiosurgery | Skull surface | 0.34 mm (0.16) | <3 s |
| Ledderose et al. [93] | Skull-base surgery | Teeth | 1 cadaver n = 20 | MB | Dental splint for lateral skull surgery | Left lateral skull base | 0.55 mm (0.28) | NS |
| O’Reilly et al. [94] | Skull-base surgery | Skull surface by HFUS | 5 cadaveric human heads | CVB | Registration of human skull CT data to an ultrasound treatment space | Skull surface | 0.9 mm (0.2) | NS |
| Marmulla et al. [76] | Skull-base surgery | Auricle | 10 patients | SB | Laser surface registration for lateral skull-base surgery | Periauricular bow | 0.9 mm (0.3) | 2 s |
| Gooroochurn et al. [95] | Skull-base surgery | Ear tragus, canthi | 1 artificial skull | CVB | Facial recognition applied to registration of patients in the emergency room | 4 landmarks near the canthi and the ear tragi | 0.98 mm [0.52–1.31] | NS |
| Saß et al. [96] | Skull-base surgery | Skull surface | 30 patients | MB | Frameless stereotactic brain biopsy | Skull surface | 0.7 mm (0.32) | 36 min |
| Xu et al. [97] | Deep brain stimulation | Skull surface | 38 patients | MB | Registration in deep brain stimulation using ROSA robotic device | Implanted rod | 0.27 mm (0.07) | NS |
| Hunsche et al. [98] | Deep brain stimulation | 2D X-ray images | 15 patients | CVB | Intensity-based 2D to 3D registration for lead localization | Implanted rod | 0.7 mm (0.2) | <20 min |
| Jiang et al. [56] | Brain surgery | Brain texture surface + camera images | 5 porcine brains | CVB | Non-rigid registration integrating surface and vessel/sulci features | Brain surface | 0.9 mm (0.24) | 340 s |
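
As a rough illustration of how values such as those in the TRE (STD) [Min–Max] column are typically computed, the sketch below uses the usual definitions: FRE is the root-mean-square residual at the fiducials used for registration, and TRE is the distance at independent target points after the same transform is applied. It assumes NumPy and a rigid transform `(R, t)` mapping patient coordinates to image coordinates; the function names and the toy coordinates are hypothetical, and individual studies in the tables may report these quantities differently.

```python
import numpy as np


def registration_errors(R, t, patient_points, image_points):
    """Per-point distances (same unit as the inputs) after mapping patient -> image."""
    mapped = patient_points @ R.T + t
    return np.linalg.norm(mapped - image_points, axis=1)


def fre(R, t, fiducials_patient, fiducials_image):
    """Fiducial registration error: RMS residual over the registration fiducials."""
    d = registration_errors(R, t, fiducials_patient, fiducials_image)
    return float(np.sqrt(np.mean(d ** 2)))


def tre_summary(R, t, targets_patient, targets_image):
    """Mean, sample STD, min and max of the TRE over independent targets.

    The sample STD (ddof=1) needs at least two target points.
    """
    d = registration_errors(R, t, targets_patient, targets_image)
    return {"mean": float(d.mean()), "std": float(d.std(ddof=1)),
            "min": float(d.min()), "max": float(d.max())}


# Hypothetical example: identity transform and two targets (coordinates in mm).
R_id, t_zero = np.eye(3), np.zeros(3)
targets_patient = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0]])
targets_image = np.array([[0.3, 0.4, 0.0], [10.0, 0.0, 0.0]])
summary = tre_summary(R_id, t_zero, targets_patient, targets_image)
```

Keeping the targets separate from the registration fiducials matters: a low FRE only shows that the fiducials fit well and does not by itself guarantee a low TRE at the surgical target.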

5. Processing Time

- Surface-based methods require shorter registration procedures due to their simplicity in both the setup and process of acquisition. In two studies on patients undergoing endoscopic sinus surgery, scanning with optical and electromagnetic devices required an average of 3 min for the equipment setup and less than 50 s to perform the registration [101,102].

- Anatomy-based methods are highly dependent on the perception of the operator. The process might be repeated several times to achieve the optimal accuracy and is, consequently, longer [100].

- Marker-based methods require more complex and longer setups and can be further prolonged by sophisticated labeling and marker fixation into the bone [103]. For an inexperienced surgeon, an additional 15–30 min may be necessary for the overall process [104]. Even for non-invasive fiducial labels, setting up the environment to fix the markers might take several minutes to hours [91]. In a method described by Matsumoto et al. [105], building a customized template of the patient required a clinical visit 2 weeks before the surgery and, hence, a more complex logistical organization than other registration routines. This method is described in more detail in Section 6 (Invasiveness).

- Computer-vision-based methods require the least human intervention. The overall process might take several seconds to several minutes when running on commercial central processing units (CPUs) [106]. With the growing use of GPUs for these applications, heavy calculations can be completed within seconds [61].

6. Invasiveness


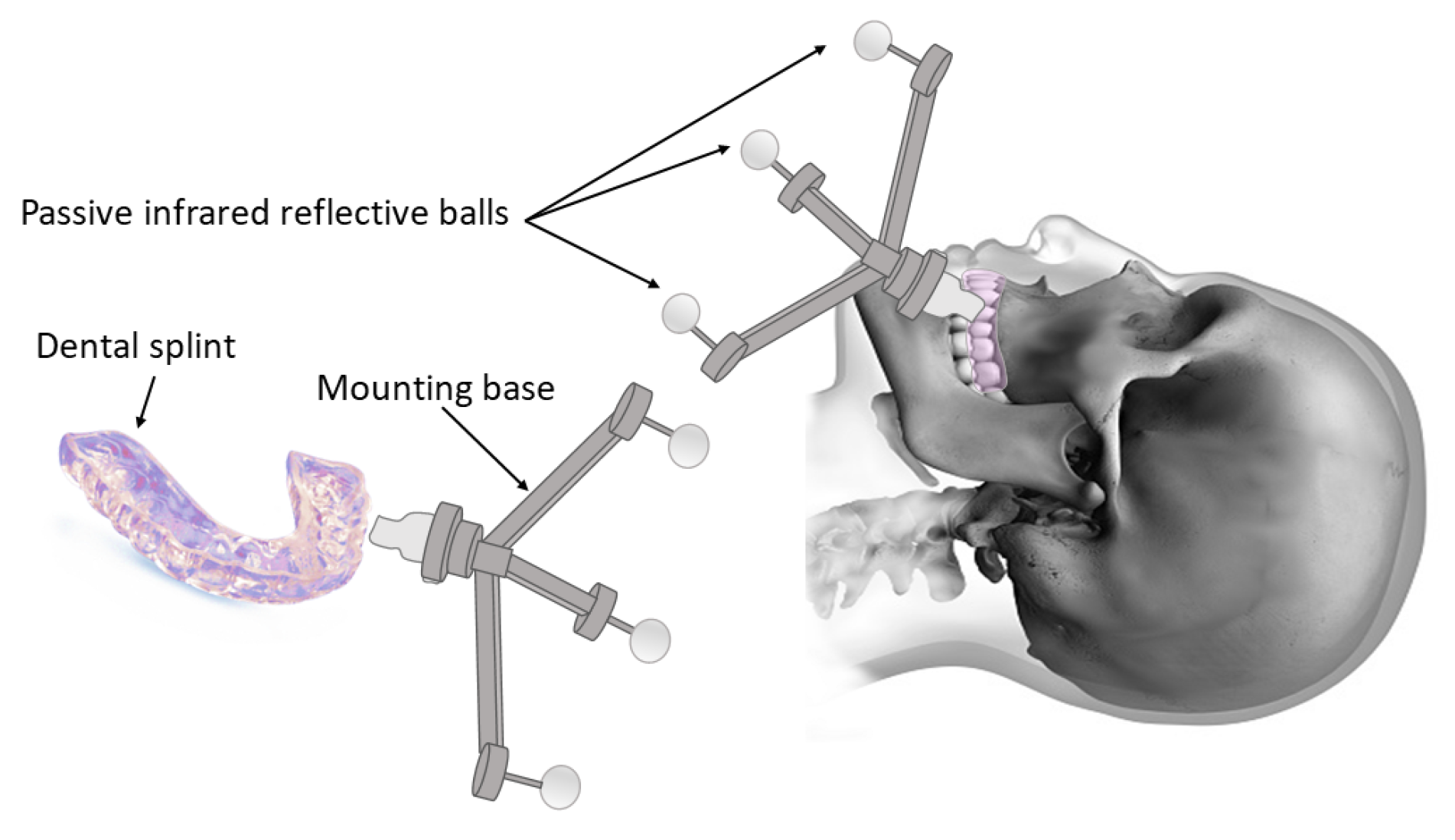

- One common approach is attaching the markers to the upper teeth or the maxillary bone as reference points [30,88,108]. The most widely implemented systems were designed with a reference frame to be mounted on a dental splint or a mouthpiece (Figure 5) [75,91,109,110,111,112]. For instance, a registration tool tightly attached to the upper teeth by means of a silicone rubber splint bearing automatically recognized markers was developed and validated for cochlear implantation surgery [65]. Although such methods eliminated the complications of penetrating the skull, their accuracy was slightly lower than that of invasive approaches [113,114].


- Alternatively, customized facial masks were designed to allow a relatively precise registration without an invasive marker. Ford et al. [115] proposed a facial mask (manufactured and provided by Xomed Corporation, Jacksonville, FL, USA) made of a radiolucent, low-melting-temperature polyester with ten radiopaque fiducial markers embedded permanently into it. This mask was used for a CAS dedicated to sinus surgery. The mask is placed in warm water until it is deformable enough and is then held on the patient’s face until it solidifies into shape. It fits on rigid structures with multiple contact points such as the frontal, nasal, maxillary, and parietal bones, and it can be securely strapped at the back of the head. However, a facial mask reduces access to many facial and nasal regions and therefore cannot be used in many procedures. In a similar manner, Hubley et al. [116] used a thermoplastic facial mask for Gamma Knife radiosurgery. The mask immobilized the patient while an onboard CBCT imaging system was used to define the stereotactic space. Similarly, Chen et al. [117] used a six-marker thermoplastic facial mask for an image-to-patient registration in stereotactic radiofrequency thermocoagulation.


- Surface template-assisted marker positioning (STAMP) is another marker-based method that works by building a template of the bony surface of the patient’s head from a preoperative CT scan [105]. Virtual markers are created on the CT scan and then transferred to the patient’s head template, which is manufactured by 3D printing. Virtual markers are represented by holes in the template. Intraoperatively, a sterile template is placed on the patient’s head, and the positions of the virtual markers are marked by a pen through the holes, establishing the correspondence between the CT scan and the patient’s head.


- Another way of securing the markers without tissue penetration is to place them in the nasal cavities. For an auditory brainstem implantation, the fiducial markers were mounted on a titanium mesh connected to a stent, which was placed through the nostrils into the rhinopharynx. This device was initially commercialized for the treatment of sleep apnea (AlaxoStent, Alaxo GmbH, Frechen, Germany) [119,120].

7. Discussion

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Nishihara, M.; Sasayama, T.; Kudo, H.; Kohmura, E. Morbidity of stereotactic biopsy for intracranial lesions. Kobe J. Med. Sci. 2011, 56, E148–E153.

- Miner, R.C. Image-guided neurosurgery. J. Med. Imaging Radiat. Sci. 2017, 48, 328–335.

- Püschel, A.; Schafmayer, C.; Groß, J. Robot-assisted techniques in vascular and endovascular surgery. Langenbeck’s Arch. Surg. 2022, 407, 1789–1795.

- Kazmitcheff, G.; Duriez, C.; Miroir, M.; Nguyen, Y.; Sterkers, O.; Bozorg Grayeli, A.; Cotin, S. Registration of a Validated Mechanical Atlas of Middle Ear for Surgical Simulation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2013: 16th International Conference, Nagoya, Japan, September 22–26, 2013, Proceedings, Part III 16; Mori, K., Sakuma, I., Sato, Y., Barillot, C., Navab, N., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 331–338.

- Dumitru, M.; Vrinceanu, D.; Banica, B.; Cergan, R.; Taciuc, I.A.; Manole, F.; Popa-Cherecheanu, M. Management of Aesthetic and Functional Deficits in Frontal Bone Trauma. Medicina 2022, 58, 1756.

- Yasin, R.; O’Connell, B.P.; Yu, H.; Hunter, J.B.; Wanna, G.B.; Rivas, A.; Simaan, N. Steerable robot-assisted micromanipulation in the middle ear: Preliminary feasibility evaluation. Otol. Neurotol. 2017, 38, 290–295.

- Turnbull, F., Jr.; Strelzow, V. Antro-ethmosphenoidectomy. Int. Surg. 1989, 74, 58–60.

- Pfeiffer, D.; Pfeiffer, F.; Rummeny, E. Advanced X-ray imaging technology. In Molecular Imaging in Oncology; Springer: Cham, Switzerland, 2020; pp. 3–30.

- Eggers, G.; Mühling, J.; Marmulla, R. Image-to-patient registration techniques in head surgery. Int. J. Oral Maxillofac. Surg. 2006, 35, 1081–1095.

- Enchev, Y. Neuronavigation: Geneology, reality, and prospects. Neurosurg. Focus 2009, 27, E11.

- Feng, W.; Wang, W.; Chen, S.; Wu, K.; Wang, H. O-arm navigation versus C-arm guidance for pedicle screw placement in spine surgery: A systematic review and meta-analysis. Int. Orthop. 2020, 44, 919–926.

- Zhang, X.; Wang, J.; Wang, T.; Ji, X.; Shen, Y.; Sun, Z.; Zhang, X. A markerless automatic deformable registration framework for augmented reality navigation of laparoscopy partial nephrectomy. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 1285–1294.

- Golse, N.; Petit, A.; Lewin, M.; Vibert, E.; Cotin, S. Augmented reality during open liver surgery using a markerless non-rigid registration system. J. Gastrointest. Surg. 2021, 25, 662–671.

- Hussain, R.; Lalande, A.; Marroquin, R.; Guigou, C.; Bozorg Grayeli, A. Video-based augmented reality combining CT scan and instrument position data to microscope view in middle ear surgery. Sci. Rep. 2020, 10, 6767.

- Sharp, G.C.; Kollipara, S.; Madden, T.; Jiang, S.B.; Rosenthal, S.J. Anatomic feature-based registration for patient set-up in head and neck cancer radiotherapy. Mach. Vis. Appl. 2005, 50, 4667–4679.

- Kristin, J.; Burggraf, M.; Mucha, D.; Malolepszy, C.; Anderssohn, S.; Schipper, J.; Klenzner, T. Automatic Registration for Navigation at the Anterior and Lateral Skull Base. Ann. Otol. Rhinol. Laryngol. 2019, 128, 894–902.

- Lee, J.; Thornhill, R.E.; Nery, P.; DeKemp, R.; Peña, E.; Birnie, D.; Adler, A.; Ukwatta, E. Left atrial imaging and registration of fibrosis with conduction voltages using LGE-MRI and electroanatomical mapping. Comput. Biol. Med. 2019, 111, 103341.

- Schneider, C.; Thompson, S.; Totz, J.; Song, Y.; Allam, M.; Sodergren, M.; Desjardins, A.; Barratt, D.; Ourselin, S.; Gurusamy, K.; et al. Comparison of manual and semi-automatic registration in augmented reality image-guided liver surgery: A clinical feasibility study. Surg. Endosc. 2020, 34, 4702–4711.

- Cuchet, E.; Knoplioch, J.; Dormont, D.; Marsault, C. Registration in neurosurgery and neuroradiotherapy applications. J. Image Guid. Surg. 1995, 1, 198–207.

- Brock, K. Image Registration in Intensity-Modulated, Image-Guided and Stereotactic Body Radiation Therapy. Front. Radiat. Ther. Oncol. 2007, 40, 94–115.

- Alam, F.; Rahman, S.U.; Khalil, A. An investigation towards issues and challenges in medical image registration. J. Postgrad. Med. Inst. 2017, 31, 224–233.

- Liu, Y.; Song, Z.; Wang, M. A new robust markerless method for automatic image-to-patient registration in image-guided neurosurgery system. Comput. Assist. Surg. 2017, 22, 319–325.

- Tan, W.; Alsadoon, A.; Prasad, P.; Al-Janabi, S.; Haddad, S.; Venkata, H.S.; Alrubaie, A. A novel enhanced intensity-based automatic registration: Augmented reality for visualization and localization cancer tumors. Int. J. Med. Robot. Comput. Assist. Surg. 2020, 16, e2043.

- Coupé, P.; Hellier, P.; Morandi, X.; Barillot, C. 3D rigid registration of intraoperative ultrasound and preoperative MR brain images based on hyperechogenic structures. J. Biomed. Imaging 2012, 2012, 1.

- Watson, G.S. Linear least squares regression. Ann. Math. Stat. 1967, 38, 1679–1699.

- Mirota, D.J.; Uneri, A.; Schafer, S.; Nithiananthan, S.; Reh, D.D.; Ishii, M.; Gallia, G.L.; Taylor, R.H.; Hager, G.D.; Siewerdsen, J.H. Evaluation of a system for high-accuracy 3D image-based registration of endoscopic video to C-arm cone-beam CT for image-guided skull base surgery. IEEE Trans. Med. Imaging 2013, 32, 1215–1226.

- Penney, G.P.; Weese, J.; Little, J.A.; Desmedt, P.; Hill, D.L.G.; Hawkes, D.J. A Comparison of Similarity Measures for use in 2D-3D Medical Image Registration. IEEE Trans. Med. Imaging 1998, 17, 586–595.

- Haouchine, N.; Juvekar, P.; Wells, W.M., III; Cotin, S.; Golby, A.; Frisken, S. Deformation aware augmented reality for craniotomy using 3d/2d non-rigid registration of cortical vessels. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2020: Proceedings of the 23rd International Conference, Lima, Peru, 4–8 October 2020; Proceedings, Part IV 23; Springer: Cham, Switzerland, 2020; pp. 735–744.

- Omara, A.; Wang, M.; Fan, Y.; Song, Z. Anatomical landmarks for point-matching registration in image-guided neurosurgery. Int. J. Med. Robot. Comput. Assist. Surg. MRCAS 2014, 10, 55–64.

- Kang, S.; Kim, M.; Kim, J.; Park, H.; Park, W. Marker-free registration for the accurate integration of CT images and the subject’s anatomy during navigation surgery of the maxillary sinus. Dentomaxillofacial Radiol. 2012, 41, 679–685.

- Hardy, S.M.; Melroy, C.; White, D.R.; Dubin, M.; Senior, B. A Comparison of Computer-Aided Surgery Registration Methods for Endoscopic Sinus Surgery. Am. J. Rhinol. 2006, 20, 48–52.

- Arun, K.S.; Huang, T.S.; Blostein, S.D. Least-squares fitting of two 3-D point sets. IEEE Trans. Pattern Anal. Mach. Intell. 1987, 698–700.

- Horn, B.K. Closed-form solution of absolute orientation using unit quaternions. J. Opt. Soc. Am. A 1987, 4, 629–642.

- Walker, M.W.; Shao, L.; Volz, R.A. Estimating 3-D location parameters using dual number quaternions. CVGIP Image Underst. 1991, 54, 358–367.

- Chen, Y.; Medioni, G. Object modelling by registration of multiple range images. Image Vis. Comput. 1992, 10, 145–155.

- Besl, P.; McKay, N.D. A method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256.

- Cho, S.S.; Teng, C.W.; Ramayya, A.; Buch, L.; Hussain, J.; Harsch, J.; Brem, S.; Lee, J.Y. Surface-registration frameless stereotactic navigation is less accurate during prone surgeries: Intraoperative near-infrared visualization using second window indocyanine green offers an adjunct. Mol. Imaging Biol. 2020, 22, 1572–1580.

- Marmulla, R.; Niederdellmann, H. Computer-assisted bone segment navigation. J. Cranio-Maxillofac. Surg. 1998, 26, 347–359.

- Marmulla, R.; Hoppe, H.; Mühling, J.; Hassfeld, S. New augmented reality concepts for craniofacial surgical procedures. Plast. Reconstr. Surg. 2005, 115, 1124–1128.

- Schicho, K.; Figl, M.; Seemann, R.; Donat, M.; Pretterklieber, M.L.; Birkfellner, W.; Reichwein, A.; Wanschitz, F.; Kainberger, F.; Bergmann, H.; et al. Comparison of laser surface scanning and fiducial marker–based registration in frameless stereotaxy. J. Neurosurg. 2007, 106, 704–709.

- Lee, H.; Park, J.M.; Kim, K.H.; Lee, D.H.; Sohn, M.J. Accuracy evaluation of surface registration algorithm using normal distribution transform in stereotactic body radiotherapy/radiosurgery: A phantom study. J. Appl. Clin. Med. Phys. 2022, 23, e13521.

- Wang, M.Y.; Maurer, C.R.; Fitzpatrick, J.M.; Maciunas, R.J. An automatic technique for finding and localizing externally attached markers in CT and MR volume images of the head. IEEE Trans. Biomed. Eng. 1996, 43, 627–637.

- Knott, P.D.; Maurer, C.R., Jr.; Gallivan, R.; Roh, H.J.; Citardi, M.J. The impact of fiducial distribution on headset-based registration in image-guided sinus surgery. Otolaryngol.—Head Neck Surg. 2004, 131, 666–672.

- Choi, J.W.; Jang, J.; Jeon, K.; Kang, S.; Kang, S.H.; Seo, J.K.; Lee, S.H. Three-dimensional measurement and registration accuracy of a third-generation optical tracking system for navigational maxillary orthognathic surgery. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2019, 128, 213–219.

- Duque, S.; Gorrepati, R.; Kesavabhotla, K.; Huang, C.; Boockvar, J. Endoscopic Endonasal Transphenoidal Surgery Using the BrainLAB (R) Headband for Navigation without Rigid Fixation. J. Neurol. Surgery. Part Cent. Eur. Neurosurg. 2013, 75, 267–269.

- Aoyama, T.; Uto, K.; Shimizu, H.; Ebara, M.; Kitagawa, T.; Tachibana, H.; Suzuki, K.; Kodaira, T. Development of a new poly-ε-caprolactone with low melting point for creating a thermoset mask used in radiation therapy. Sci. Rep. 2021, 11, 20409.

- Balachandran, R.; Fritz, M.A.; Dietrich, M.S.; Danilchenko, A.; Mitchell, J.E.; Oldfield, V.L.; Lipscomb, W.W.; Fitzpatrick, J.M.; Neimat, J.S.; Konrad, P.E.; et al. Clinical testing of an alternate method of inserting bone-implanted fiducial markers. Int. J. Comput. Assist. Radiol. Surg. 2014, 9, 913–920.

- Hosoki, M.; Nishigawa, K.; Miyamoto, Y.; Ohe, G.; Matsuka, Y. Allergic contact dermatitis caused by titanium screws and dental implants. J. Prosthodont. Res. 2016, 60, 213–219.

- Chen, M.; Gonzalez, G.; Lapeer, R. Intra-operative registration for image enhanced endoscopic sinus surgery using photo-consistency. Stud. Health Technol. Inform. 2007, 125, 67–72.

- Gerber, N.; Gavaghan, K.A.; Bell, B.J.; Williamson, T.M.; Weisstanner, C.; Caversaccio, M.D.; Weber, S. High-accuracy patient-to-image registration for the facilitation of image-guided robotic microsurgery on the head. IEEE Trans. Biomed. Eng. 2013, 60, 960–968.

- Chang, Y.Z.; Hou, J.F. Registration for frameless brain surgery based on stereo imaging. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; pp. 3998–4001.

- Zagorchev, L.; Brueck, M.; Fläschner, N.; Wenzel, F.; Hyde, D.; Ewald, A.; Peters, J. Patient-specific sensor registration for electrical source imaging using a deformable head model. IEEE Trans. Biomed. Eng. 2020, 68, 267–275.

- Russell, G.S.; Eriksen, K.J.; Poolman, P.; Luu, P.; Tucker, D.M. Geodesic photogrammetry for localizing sensor positions in dense-array EEG. Clin. Neurophysiol. 2005, 116, 1130–1140.

- Gurbani, S.S.; Wilkening, P.; Zhao, M.; Gonenc, B.; Cheon, G.W.; Iordachita, I.I.; Chien, W.; Taylor, R.H.; Niparko, J.K.; Kang, J.U. Robot-assisted three-dimensional registration for cochlear implant surgery using a common-path swept-source optical coherence tomography probe. J. Biomed. Opt. 2014, 19, 057004.

- Üneri, A.; Balicki, M.A.; Handa, J.; Gehlbach, P.; Taylor, R.H.; Iordachita, I. New steady-hand eye robot with micro-force sensing for vitreoretinal surgery. In Proceedings of the 2010 3rd IEEE RAS & EMBS International Conference on Biomedical Robotics and Biomechatronics, Tokyo, Japan, 26–29 September 2010; pp. 814–819.

- Jiang, J.; Nakajima, Y.; Sohma, Y.; Saito, T.; Kin, T.; Oyama, H.; Saito, N. Marker-less tracking of brain surface deformations by non-rigid registration integrating surface and vessel/sulci features. Int. J. Comput. Assist. Radiol. Surg. 2016, 11, 1687–1701.

- Wen, R.; Chui, C.K.; Ong, S.H.; Lim, K.B.; Chang, S.K.Y. Projection-based visual guidance for robot-aided RF needle insertion. Int. J. Comput. Assist. Radiol. Surg. 2013, 8, 1015–1025.

- Olesen, O.V.; Paulsen, R.R.; Hojgaard, L.; Roed, B.; Larsen, R. Motion tracking for medical imaging: A nonvisible structured light tracking approach. IEEE Trans. Med. Imaging 2011, 31, 79–87.

- Burschka, D.; Li, M.; Ishii, M.; Taylor, R.H.; Hager, G.D. Scale-invariant registration of monocular endoscopic images to CT scans for sinus surgery. Med. Image Anal. 2005, 9, 413–426.

- Lapeer, R.; Chen, M.; Gonzalez, G.; Linney, A.; Alusi, G. Image-enhanced surgical navigation for endoscopic sinus surgery: Evaluating calibration, registration and tracking. Int. J. Med. Robot. Comput. Assist. Surg. 2008, 4, 32–45.

- Otake, Y.; Léonard, S.; Reiter, A.; Rajan, P.; Siewerdsen, J.H.; Gallia, G.L.; Ishii, M.; Taylor, R.H.; Hager, G.D. Rendering-based video-CT registration with physical constraints for image-guided endoscopic sinus surgery. In Proceedings of the Medical Imaging 2015: Image-Guided Procedures, Robotic Interventions, and Modeling, Orlando, FL, USA, 21–26 February 2015; Volume 9415, pp. 64–69.

- Luo, X.; Takabatake, H.; Natori, H.; Mori, K. Robust real-time image-guided endoscopy: A new discriminative structural similarity measure for video to volume registration. In Information Processing in Computer-Assisted Interventions: Proceedings of the 4th International Conference, IPCAI 2013, Heidelberg, Germany, 26 June 2013; Proceedings 4; Springer: Berlin/Heidelberg, Germany, 2013; pp. 91–100.

- Farnia, P.; Najafzadeh, E.; Ahmadian, A.; Makkiabadi, B.; Alimohamadi, M.; Alirezaie, J. Co-sparse analysis model based image registration to compensate brain shift by using intra-operative ultrasound imaging. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018; pp. 1–4.

- Paul, P.; Morandi, X.; Jannin, P. A surface registration method for quantification of intraoperative brain deformations in image-guided neurosurgery. IEEE Trans. Inf. Technol. Biomed. 2009, 13, 976–983.

- Wang, J.; Liu, H.; Ke, J.; Hu, L.; Zhang, S.; Yang, B.; Sun, S.; Guo, N.; Ma, F. Image-guided cochlear access by non-invasive registration: A cadaveric feasibility study. Sci. Rep. 2020, 10, 18318.

- Samuel, H.T.; Lepcha, A.; Philip, A.; John, M.; Augustine, A.M. Dimensions of the Posterior Tympanotomy and Round Window Visibility Through the Facial Recess: Cadaveric Temporal Bone Study Using a Novel Digital Microscope. Indian J. Otolaryngol. Head Neck Surg. 2022, 74, 714–718.

- Broehan, A.M.; Rudolph, T.; Amstutz, C.A.; Kowal, J.H. Real-time multimodal retinal image registration for a computer-assisted laser photocoagulation system. IEEE Trans. Biomed. Eng. 2011, 58, 2816–2824.

- Kollias, A.N.; Ulbig, M.W. Diabetic retinopathy: Early diagnosis and effective treatment. Dtsch. Arztebl. Int. 2010, 107, 75.

- Kral, F.; Riechelmann, H.; Freysinger, W. Navigated surgery at the lateral skull base and registration and preoperative imagery: Experimental results. Arch. Otolaryngol.–Head Neck Surg. 2011, 137, 144–150.

- Fitzpatrick, J.M.; West, J.B.; Maurer, C.R. Predicting error in rigid-body point-based registration. IEEE Trans. Med. Imaging 1998, 17, 694–702.

- Shamir, R.R.; Freiman, M.; Joskowicz, L.; Spektor, S.; Shoshan, Y. Surface-based facial scan registration in neuronavigation procedures: A clinical study. J. Neurosurg. 2009, 111, 1201–1206. [Google Scholar] [CrossRef] [PubMed]

- Ledderose, G.; Stelter, K.; Leunig, A.; Hagedorn, H. Surface laser registration in ENT-surgery: Accuracy in the paranasal sinuses—A cadaveric study. Rhinology 2008, 45, 281–285. [Google Scholar]

- Ferrant, M.; Nabavi, A.; Macq, B.; Jolesz, F.A.; Kikinis, R.; Warfield, S.K. Registration of 3-D intraoperative MR images of the brain using a finite-element biomechanical model. IEEE Trans. Med. Imaging 2001, 20, 1384–1397. [Google Scholar] [CrossRef] [PubMed]

- Fu, D.; Kuduvalli, G. A fast, accurate, and automatic 2D–3D image registration for image-guided cranial radiosurgery. Med. Phys. 2008, 35, 2180–2194. [Google Scholar] [CrossRef]

- Eggers, G.; Mühling, J. Template-based registration for image-guided skull base surgery. Otolaryngol.–Head Neck Surg. 2007, 136, 907–913. [Google Scholar] [CrossRef]

- Marmulla, R.; Eggers, G.; Mühling, J. Laser surface registration for lateral skull base surgery. Minim. Invasive Neurosurg. 2005, 48, 181–185. [Google Scholar] [CrossRef]

- Bozorg Grayeli, A.; Esquia-Medina, G.; Nguyen, Y.; Mazalaigue, S.; Vellin, J.F.; Lombard, B.; Kalamarides, M.; Ferrary, E.; Sterkers, O. Use of anatomic or invasive markers in association with skin surface registration in image-guided surgery of the temporal bone. Acta Oto-Laryngol. 2009, 129, 405–410. [Google Scholar] [CrossRef]

- Eggers, G.; Kress, B.; Mühling, J. Automated registration of intraoperative CT image data for navigated skull base surgery. Minim. Invasive Neurosurg. 2008, 51, 15–20. [Google Scholar] [CrossRef] [PubMed]

- Labadie, R.F.; Davis, B.M.; Fitzpatrick, J.M. Image-guided surgery: What is the accuracy? Curr. Opin. Otolaryngol. Head Neck Surg. 2005, 13, 27–31. [Google Scholar] [CrossRef] [PubMed]

- Hamming, N.M.; Daly, M.J.; Irish, J.C.; Siewerdsen, J.H. Effect of fiducial configuration on target registration error in intraoperative cone-beam CT guidance of head-and-neck surgery. In Proceedings of the 2008 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Vancouver, BC, Canada, 20–25 August 2008; pp. 3643–3648. [Google Scholar]

- Smith, T.R.; Mithal, D.S.; Stadler, J.A.; Asgarian, C.; Muro, K.; Rosenow, J.M. Impact of fiducial arrangement and registration sequence on target accuracy using a phantom frameless stereotactic navigation model. J. Clin. Neurosci. 2014, 21, 1976–1980. [Google Scholar] [CrossRef] [PubMed]

- Alp, S.; Dujovny, M.; Misra, M.; Charbel, F.; Ausman, J. Head registration techniques for image-guided surgery. Neurol. Res. 1998, 20, 31–37. [Google Scholar] [CrossRef]

- Lavenir, L.; Zemiti, N.; Akkari, M.; Subsol, G.; Venail, F.; Poignet, P. HFUS Imaging of the Cochlea: A Feasibility Study for Anatomical Identification by Registration with MicroCT. Ann. Biomed. Eng. 2021, 49, 1308–1317. [Google Scholar] [CrossRef]

- Kral, F.; Url, C.; Widmann, G.; Riechelmann, H.; Freysinger, W. The learning curve of registration in navigated skull base surgery. Laryngo-Rhino-Otologie 2010, 90, 90–93. [Google Scholar] [CrossRef]

- Chu, Y.; Yang, J.; Ma, S.; Ai, D.; Li, W.; Song, H.; Li, L.; Chen, D.; Chen, L.; Wang, Y. Registration and fusion quantification of augmented reality based nasal endoscopic surgery. Med. Image Anal. 2017, 42, 241–256. [Google Scholar] [CrossRef] [PubMed]

- Mirota, D.J.; Wang, H.; Taylor, R.H.; Ishii, M.; Gallia, G.L.; Hager, G.D. A system for video-based navigation for endoscopic endonasal skull base surgery. IEEE Trans. Med. Imaging 2011, 31, 963–976. [Google Scholar] [CrossRef]

- Ingram, W.S.; Yang, J.; Wendt, R., III; Beadle, B.M.; Rao, A.; Wang, X.A.; Court, L.E. The influence of non-rigid anatomy and patient positioning on endoscopy-CT image registration in the head-and-neck. Med. Phys. 2017, 44, 4159–4168. [Google Scholar] [CrossRef] [PubMed]

- Hauser, R.; Westermann, B.; Probst, R. A non-invasive patient registration and reference system for interactive intraoperative localization in intranasal sinus surgery. Proc. Inst. Mech. Eng. Part H J. Eng. Med. 1997, 211, 327–334. [Google Scholar] [CrossRef] [PubMed]

- Zhou, C.; Anschuetz, L.; Weder, S.; Xie, L.; Caversaccio, M.; Weber, S.; Williamson, T. Surface matching for high-accuracy registration of the lateral skull base. Int. J. Comput. Assist. Radiol. Surg. 2016, 11, 2097–2103. [Google Scholar] [CrossRef] [PubMed]

- Schneider, D.; Hermann, J.; Gerber, K.; Ansó, J.; Caversaccio, M.; Weber, S.; Anschuetz, L. Noninvasive Registration Strategies and Advanced Image Guidance Technology for Submillimeter Surgical Navigation Accuracy in the Lateral Skull Base. Otol. Neurotol. 2018, 39, 1326–1335. [Google Scholar] [CrossRef] [PubMed]

- Brouwer de Koning, S.; Riksen, J.; ter Braak, T.P.; van Alphen, M.J.; van der Heijden, F.; Schreuder, W.H.; Karssemakers, L.; Karakullukcu, M.B.; van Veen, R.L.P. Utilization of a 3D printed dental splint for registration during electromagnetically navigated mandibular surgery. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 1997–2003. [Google Scholar] [CrossRef] [PubMed]

- Reaungamornrat, S.; Liu, W.; Wang, A.; Otake, Y.; Nithiananthan, S.; Uneri, A.; Schafer, S.; Tryggestad, E.; Richmon, J.; Sorger, J.; et al. Deformable image registration for cone-beam CT guided transoral robotic base-of-tongue surgery. Phys. Med. Biol. 2013, 58, 4951. [Google Scholar] [CrossRef] [PubMed]

- Ledderose, G.J.; Hagedorn, H.; Spiegl, K.; Leunig, A.; Stelter, K. Image guided surgery of the lateral skull base: Testing a new dental splint registration device. Comput. Aided Surg. 2012, 17, 13–20. [Google Scholar] [CrossRef] [PubMed]

- O’Reilly, M.A.; Jones, R.M.; Birman, G.; Hynynen, K. Registration of human skull computed tomography data to an ultrasound treatment space using a sparse high frequency ultrasound hemispherical array. Med. Phys. 2016, 43, 5063–5071. [Google Scholar] [CrossRef] [PubMed]

- Gooroochurn, M.; Kerr, D.; Bouazza-Marouf, K.; Ovinis, M. Facial recognition techniques applied to the automated registration of patients in the emergency treatment of head injuries. Proc. Inst. Mech. Eng. Part H J. Eng. Med. 2011, 225, 170–180. [Google Scholar] [CrossRef]

- Saß, B.; Pojskic, M.; Bopp, M.; Nimsky, C.; Carl, B. Comparing fiducial-based and intraoperative computed tomography-based registration for frameless stereotactic brain biopsy. Stereotact. Funct. Neurosurg. 2021, 99, 79–89. [Google Scholar] [CrossRef]

- Xu, F.; Jin, H.; Yang, X.; Sun, X.; Wang, Y.; Xu, M.; Tao, Y. Improved accuracy using a modified registration method of ROSA in deep brain stimulation surgery. Neurosurg. Focus 2018, 45, E18. [Google Scholar] [CrossRef]

- Hunsche, S.; Sauner, D.; El Majdoub, F.; Neudorfer, C.; Poggenborg, J.; Goßmann, A.; Maarouf, M. Intensity-based 2D 3D registration for lead localization in robot guided deep brain stimulation. Phys. Med. Biol. 2017, 62, 2417. [Google Scholar] [CrossRef]

- Van Krevelen, D.; Poelman, R. A survey of augmented reality technologies, applications and limitations. Int. J. Virtual Real. 2010, 9, 1–20. [Google Scholar] [CrossRef]

- Woodworth, B.; Davis, G.; Schlosser, R. Comparison of Laser versus Surface-Touch Registration for Image-Guided Sinus Surgery. Am. J. Rhinol. 2005, 19, 623–626. [Google Scholar] [CrossRef] [PubMed]

- Chang, C.M.; Fang, K.M.; Huang, T.; Wang, C.T.; Cheng, P.W. Three-dimensional analysis of the surface registration accuracy of electromagnetic navigation systems in live endoscopic sinus surgery. Rhinology 2013, 51, 343–348. [Google Scholar] [CrossRef] [PubMed]

- Chang, C.M.; Jaw, F.S.; Lo, W.C.; Fang, K.M.; Cheng, P.W. Three-dimensional analysis of the accuracy of optic and electromagnetic navigation systems using surface registration in live endoscopic sinus surgery. Rhinology 2016, 54, 88–94. [Google Scholar] [CrossRef] [PubMed]

- Ieiri, S.; Uemura, M.; Konishi, K.; Souzaki, R.; Nagao, Y.; Tsutsumi, N.; Akahoshi, T.; Ohuchida, K.; Ohdaira, T.; Tomikawa, M.; et al. Augmented reality navigation system for laparoscopic splenectomy in children based on preoperative CT image using optical tracking device. Pediatr. Surg. Int. 2012, 28, 341–346. [Google Scholar] [CrossRef] [PubMed]

- Metson, R.B.; Cosenza, M.J.; Cunningham, M.J.; Randolph, G.W. Physician experience with an optical image guidance system for sinus surgery. Laryngoscope 2000, 110, 972–976. [Google Scholar] [CrossRef] [PubMed]

- Matsumoto, N.; Hong, J.; Hashizume, M.; Komune, S. A minimally invasive registration method using surface template-assisted marker positioning (STAMP) for image-guided otologic surgery. Otolaryngol.–Head Neck Surg. 2009, 140, 96–102. [Google Scholar] [CrossRef]

- Berkels, B.; Cabrilo, I.; Haller, S.; Rumpf, M.; Schaller, K. Co-registration of intra-operative brain surface photographs and pre-operative MR images. Int. J. Comput. Assist. Radiol. Surg. 2014, 9, 387–400. [Google Scholar] [CrossRef]

- Mascott, C.R.; Sol, J.C.; Bousquet, P.; Lagarrigue, J.; Lazorthes, Y.; Lauwers-Cances, V. Quantification of true in vivo (application) accuracy in cranial image-guided surgery: Influence of mode of patient registration. Oper. Neurosurg. 2006, 59, ONS-146–ONS-156. [Google Scholar] [CrossRef] [PubMed]

- Yamamoto, S.; Taniike, N.; Takenobu, T. Application of an open position splint integrated with a reference frame and registration markers for mandibular navigation surgery. Int. J. Oral Maxillofac. Surg. 2020, 49, 686–690. [Google Scholar] [CrossRef] [PubMed]

- Hong, J.; Matsumoto, N.; Ouchida, R.; Komune, S.; Hashizume, M. Medical navigation system for otologic surgery based on hybrid registration and virtual intraoperative computed tomography. IEEE Trans. Biomed. Eng. 2008, 56, 426–432. [Google Scholar] [CrossRef]

- Bale, R.J.; Burtscher, J.; Eisner, W.; Obwegeser, A.A.; Rieger, M.; Sweeney, R.A.; Dessl, A.; Giacomuzzi, S.M.; Twerdy, K.; Jaschke, W. Computer-assisted neurosurgery by using a non-invasive vacuum-affixed dental cast that acts as a reference base: Another step toward a unified approach in the treatment of brain tumors. J. Neurosurg. 2000, 93, 208–213. [Google Scholar] [CrossRef] [PubMed]

- Meeks, S.L.; Bova, F.J.; Wagner, T.H.; Buatti, J.M.; Friedman, W.A.; Foote, K.D. Image localization for frameless stereotactic radiotherapy. Int. J. Radiat. Oncol. Biol. Phys. 2000, 46, 1291–1299. [Google Scholar] [CrossRef]

- Fenlon, M.R.; Jusczyzck, A.S.; Edwards, P.J.; King, A.P. Locking acrylic resin dental stent for image-guided surgery. J. Prosthet. Dent. 2000, 83, 482–485. [Google Scholar] [CrossRef]

- Hofer, M.; Dittrich, E.; Baumberger, C.; Strauß, M.; Dietz, A.; Lüth, T.; Strauß, G. The influence of various registration procedures upon surgical accuracy during navigated controlled petrous bone surgery. Otolaryngol.–Head Neck Surg. 2010, 143, 258–262. [Google Scholar] [CrossRef] [PubMed]

- Grauvogel, T.D.; Soteriou, E.; Metzger, M.C.; Berlis, A.; Maier, W. Influence of different registration modalities on navigation accuracy in ear, nose, and throat surgery depending on the surgical field. Laryngoscope 2010, 120, 881–888. [Google Scholar] [CrossRef] [PubMed]

- Albritton, F.D.; Kingdom, T.T.; DelGaudio, J.M. Malleable Registration Mask: Application of a Novel Registration Method in Image Guided Sinus Surgery. Am. J. Rhinol. Allergy 2001, 15, 219–224. [Google Scholar] [CrossRef]

- Hubley, E.; Mooney, K.; Schelin, M.; Shi, W.; Yu, Y.; Liu, H. Geometric and dosimetric effects of image co-registration workflows for Gamma Knife frameless radiosurgery. J. Radiosurg. SBRT 2020, 7, 47–55. [Google Scholar] [PubMed]

- Chen, M.J.; Gu, L.X.; Zhang, W.J.; Yang, C.; Zhao, J.; Shao, Z.Y.; Wang, B.L. Fixation, registration, and image-guided navigation using a thermoplastic facial mask in electromagnetic navigation–guided radiofrequency thermocoagulation. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. Endodontol. 2010, 110, e43–e48. [Google Scholar] [CrossRef] [PubMed]

- Yamamoto, S.; Hara, S.; Takenobu, T. A Splint-to-CT Data Registration Strategy for Maxillary Navigation Surgery. Case Rep. Dent. 2020, 2020, 8871148. [Google Scholar] [CrossRef] [PubMed]

- Traxdorf, M.; Hartl, M.; Angerer, F.; Bohr, C.; Grundtner, P.; Iro, H. A novel nasopharyngeal stent for the treatment of obstructive sleep apnea: A case series of nasopharyngeal stenting versus continuous positive airway pressure. Eur. Arch. Oto-Rhino-Laryngol. 2016, 273, 1307–1312. [Google Scholar] [CrossRef] [PubMed]

- Regodić, M.; Freyschlag, C.F.; Kerschbaumer, J.; Galijašević, M.; Hörmann, R.; Freysinger, W. Novel microscope-based visual display and nasopharyngeal registration for auditory brainstem implantation: A feasibility study in an ex vivo model. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 261–270. [Google Scholar] [CrossRef] [PubMed]

- Snyderman, C.; Zimmer, L.A.; Kassam, A. Sources of Registration Error with Image Guidance Systems During Endoscopic Anterior Cranial Base Surgery. Otolaryngol.–Head Neck Surg. 2004, 131, 145–149. [Google Scholar] [CrossRef] [PubMed]

- Bettschart, C.; Kruse, A.; Matthews, F.; Zemann, W.; Obwegeser, J.A.; Grätz, K.W.; Lübbers, H.T. Point-to-point registration with mandibulo-maxillary splint in open and closed jaw position. Evaluation of registration accuracy for computer-aided surgery of the mandible. J. Cranio-Maxillofac. Surg. 2012, 40, 592–598. [Google Scholar] [CrossRef]

- Kao, J.; Tarng, Y. The registration of CT image to the patient head by using an automated laser surface scanning system—A phantom study. Comput. Methods Programs Biomed. 2006, 83, 1–11. [Google Scholar] [CrossRef] [PubMed]

- Li, F.; Song, Z. Surface-based automatic coarse registration of head scans. Bio-Med. Mater. Eng. 2014, 24, 3207–3214. [Google Scholar] [CrossRef] [PubMed]

- Kim, Y.; Li, R.; Na, Y.H.; Lee, R.; Xing, L. Accuracy of surface registration compared to conventional volumetric registration in patient positioning for head-and-neck radiotherapy: A simulation study using patient data. Med. Phys. 2014, 41, 121701. [Google Scholar] [CrossRef]

- Drakopoulos, F.; Foteinos, P.; Liu, Y.; Chrisochoides, N.P. Toward a real time multi-tissue Adaptive Physics-Based Non-Rigid Registration framework for brain tumor resection. Front. Neuroinformatics 2014, 8, 11. [Google Scholar] [CrossRef] [PubMed]

- Clatz, O.; Delingette, H.; Talos, I.F.; Golby, A.J.; Kikinis, R.; Jolesz, F.A.; Ayache, N.; Warfield, S.K. Robust nonrigid registration to capture brain shift from intraoperative MRI. IEEE Trans. Med. Imaging 2005, 24, 1417–1427. [Google Scholar] [CrossRef] [PubMed]

- Cooper, M.D.; Restrepo, C.; Hill, R.; Hong, M.; Greene, R.; Weise, L.M. The accuracy of 3D fluoroscopy (XT) vs computed tomography (CT) registration in deep brain stimulation (DBS) surgery. Acta Neurochir. 2020, 162, 1871–1878. [Google Scholar] [CrossRef]

- Peng, T.; Kramer, D.R.; Lee, M.B.; Barbaro, M.F.; Ding, L.; Liu, C.Y.; Kellis, S.; Lee, B. Comparison of intraoperative 3-dimensional fluoroscopy with standard computed tomography for stereotactic frame registration. Oper. Neurosurg. 2020, 18, 698. [Google Scholar] [CrossRef]

- Jones, M.R.; Baskaran, A.B.; Nolt, M.J.; Rosenow, J.M. Intraoperative computed tomography for registration of stereotactic frame in frame-based deep brain stimulation. Oper. Neurosurg. 2021, 20, E186–E189. [Google Scholar] [CrossRef] [PubMed]

- Jermakowicz, W.J.; Diaz, R.J.; Cass, S.H.; Ivan, M.E.; Komotar, R.J. Use of a mobile intraoperative computed tomography scanner for navigation registration during laser interstitial thermal therapy of brain tumors. World Neurosurg. 2016, 94, 418–425. [Google Scholar] [CrossRef] [PubMed]

- Eggers, G.; Kress, B.; Rohde, S.; Mühling, J. Intraoperative computed tomography and automated registration for image-guided cranial surgery. Dentomaxillofacial Radiol. 2009, 38, 28–33. [Google Scholar] [CrossRef] [PubMed]

- Shah, K.H.; Slovis, B.H.; Runde, D.; Godbout, B.; Newman, D.H.; Lee, J. Radiation exposure among patients with the highest CT scan utilization in the emergency department. Emerg. Radiol. 2013, 20, 485–491. [Google Scholar] [CrossRef] [PubMed]

- Granger, C.; Alexander, J.; McMurray, J. New England Journal of Medicine. NEJM 2011, 365, 981–992. [Google Scholar] [CrossRef] [PubMed]

- Frane, N.; Megas, A.; Stapleton, E.; Ganz, M.; Bitterman, A.D. Radiation exposure in orthopaedics. JBJS Rev. 2020, 8, e0060. [Google Scholar] [CrossRef] [PubMed]

- Carlson, J.D. Stereotactic registration using cone-beam computed tomography. Clin. Neurol. Neurosurg. 2019, 182, 107–111. [Google Scholar] [CrossRef]

- Carl, B.; Bopp, M.; Saß, B.; Nimsky, C. Intraoperative computed tomography as reliable navigation registration device in 200 cranial procedures. Acta Neurochir. 2018, 160, 1681–1689. [Google Scholar] [CrossRef]

- Carl, B.; Bopp, M.; Saß, B.; Pojskic, M.; Gjorgjevski, M.; Voellger, B.; Nimsky, C. Reliable navigation registration in cranial and spine surgery based on intraoperative computed tomography. Neurosurg. Focus 2019, 47, E11. [Google Scholar] [CrossRef] [PubMed]

- Zhou, C.; Cha, T.; Peng, Y.; Li, G. Transfer learning from an artificial radiograph-landmark dataset for registration of the anatomic skull model to dual fluoroscopic X-ray images. Comput. Biol. Med. 2021, 138, 104923. [Google Scholar] [CrossRef] [PubMed]

- Su, Y.; Sun, Y.; Hosny, M.; Gao, W.; Fu, Y. Facial landmark-guided surface matching for image-to-patient registration with an RGB-D camera. Int. J. Med. Robot. Comput. Assist. Surg. 2022, 18, e2373. [Google Scholar] [CrossRef] [PubMed]

- Duay, V.; Sinha, T.K.; D’Haese, P.F.; Miga, M.I.; Dawant, B.M. Non-rigid registration of serial intra-operative images for automatic brain shift estimation. In Biomedical Image Registration: Proceedings of the Second International Workshop, WBIR 2003, Philadelphia, PA, USA, 23–24 June 2003; Revised Papers 2; Springer: Berlin/Heidelberg, Germany, 2003; pp. 61–70. [Google Scholar]

- Arbel, T.; Morandi, X.; Comeau, R.M.; Collins, D.L. Automatic non-linear MRI-ultrasound registration for the correction of intra-operative brain deformations. Comput. Aided Surg. 2004, 9, 123–136. [Google Scholar] [CrossRef] [PubMed]

- Reinertsen, I.; Descoteaux, M.; Siddiqi, K.; Collins, D.L. Validation of vessel-based registration for correction of brain shift. Med. Image Anal. 2007, 11, 374–388. [Google Scholar] [CrossRef] [PubMed]

- Teske, H.; Bartelheimer, K.; Meis, J.; Bendl, R.; Stoiber, E.M.; Giske, K. Construction of a biomechanical head-and-neck motion model as a guide to evaluation of deformable image registration. Phys. Med. Biol. 2017, 62, N271. [Google Scholar] [CrossRef]

- Neylon, J.; Qi, X.; Sheng, K.; Staton, R.; Pukala, J.; Manon, R.; Low, D.; Kupelian, P.; Santhanam, A. A GPU based high-resolution multilevel biomechanical head-and-neck model for validating deformable image registration. Med. Phys. 2015, 42, 232–243. [Google Scholar] [CrossRef]

- Mohammadi, A.; Ahmadian, A.; Rabbani, S.; Fattahi, E.; Shirani, S. A combined registration and finite element analysis method for fast estimation of intraoperative brain shift; phantom and animal model study. Int. J. Med. Robot. Comput. Assist. Surg. 2017, 13, e1792. [Google Scholar] [CrossRef] [PubMed]

- Wittek, A.; Miller, K.; Kikinis, R.; Warfield, S.K. Patient-specific model of brain deformation: Application to medical image registration. J. Biomech. 2007, 40, 919–929. [Google Scholar] [CrossRef]

- Hagemann, A.; Rohr, K.; Stiehl, H.S.; Spetzger, U.; Gilsbach, J.M. Biomechanical modeling of the human head for physically based, nonrigid image registration. IEEE Trans. Med. Imaging 1999, 18, 875–884. [Google Scholar] [CrossRef]

- Constantin, B.N.; Marina, T.C.; Eugen, S.H.; Ileana, E.; Adrian, G. Tongue Base Ectopic Thyroid Tissue—Is It a Rare Encounter? Medicina 2023, 59, 313. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Taleb, A.; Guigou, C.; Leclerc, S.; Lalande, A.; Bozorg Grayeli, A. Image-to-Patient Registration in Computer-Assisted Surgery of Head and Neck: State-of-the-Art, Perspectives, and Challenges. J. Clin. Med. 2023, 12, 5398. https://doi.org/10.3390/jcm12165398