Image-Based Artificial Intelligence Technology for Diagnosing Middle Ear Diseases: A Systematic Review

Abstract

1. Introduction

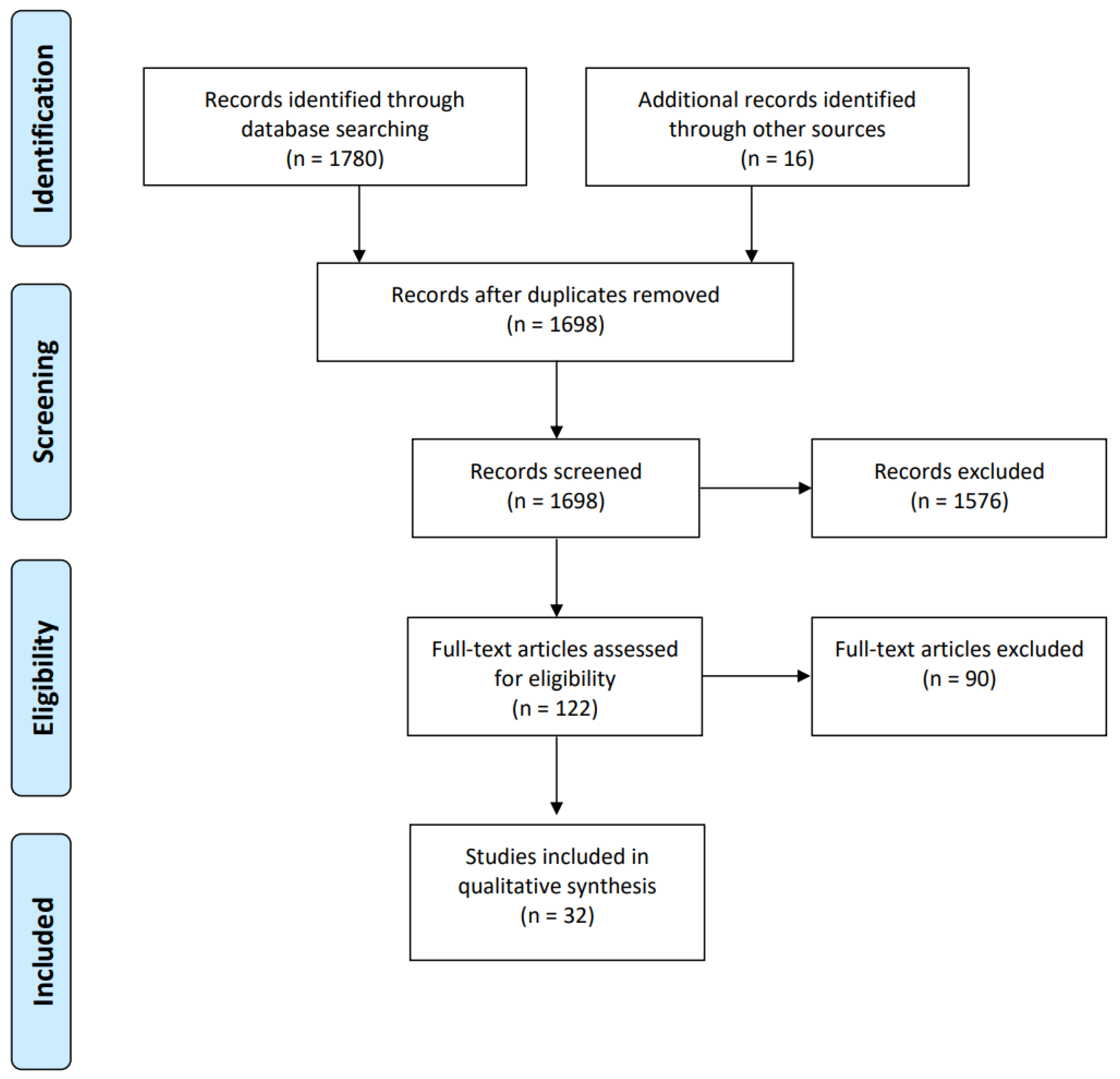

2. Materials and Methods

2.1. Search Strategy

2.2. Article Appraisal Method

2.3. Inclusion and Exclusion Criteria

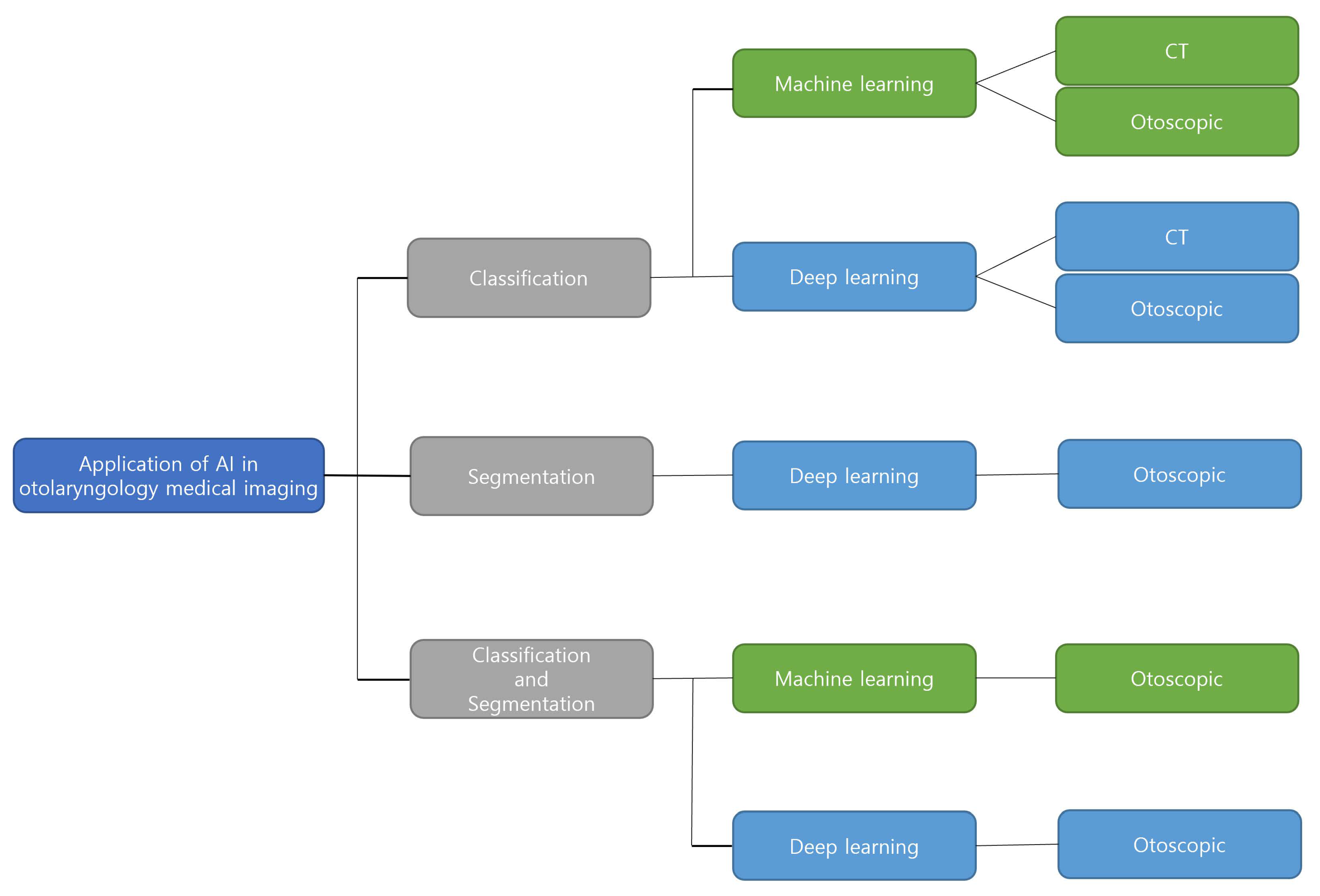

3. Results

3.1. Classification

3.2. Segmentation

3.3. Classification and Segmentation

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Boruk, M.; Lee, P.; Faynzilbert, Y.; Rosenfeld, R.M. Caregiver well-being and child quality of life. Otolaryngol.-Head Neck Surg. 2007, 136, 159–168.

2. Tran, T.T.; Fang, T.Y.; Pham, V.T.; Lin, C.; Wang, P.C.; Lo, M.T. Development of an automatic diagnostic algorithm for pediatric otitis media. Otol. Neurotol. 2018, 39, 1060–1065.

3. Berman, S. Otitis media in children. N. Engl. J. Med. 1995, 332, 1560–1565.

4. DeAntonio, R.; Yarzabal, J.P.; Cruz, J.P.; Schmidt, J.E.; Kleijnen, J. Epidemiology of otitis media in children from developing countries: A systematic review. Int. J. Pediatr. Otorhinolaryngol. 2016, 85, 65–74.

5. Kenyon, G. Social otitis media: Ear infection and disparity in Australia. Lancet Infect. Dis. 2017, 17, 375–376.

6. Vanneste, P.; Page, C. Otitis media with effusion in children: Pathophysiology, diagnosis, and treatment. A review. J. Otol. 2019, 14, 33–39.

7. Crowson, M.G.; Hartnick, C.J.; Diercks, G.R.; Gallagher, T.Q.; Fracchia, M.S.; Setlur, J.; Cohen, M.S. Machine learning for accurate intraoperative pediatric middle ear effusion diagnosis. Pediatrics 2021, 147, e2020034546.

8. Wu, Z.; Lin, Z.; Li, L.; Pan, H.; Chen, G.; Fu, Y.; Qiu, Q. Deep learning for classification of pediatric otitis media. Laryngoscope 2021, 131, E2344–E2351.

9. Granath, A. Recurrent acute otitis media: What are the options for treatment and prevention? Curr. Otorhinolaryngol. Rep. 2017, 5, 93–100.

10. Blomgren, K.; Pitkäranta, A. Is it possible to diagnose acute otitis media accurately in primary health care? Fam. Pract. 2003, 20, 524–527.

11. Pichichero, M.E.; Poole, M.D. Assessing diagnostic accuracy and tympanocentesis skills in the management of otitis media. Arch. Pediatr. Adolesc. Med. 2001, 155, 1137–1142.

12. Shen, D.; Wu, G.; Suk, H.I. Deep learning in medical image analysis. Annu. Rev. Biomed. Eng. 2017, 19, 221–248.

13. Latif, J.; Xiao, C.; Imran, A.; Tu, S. Medical imaging using machine learning and deep learning algorithms: A review. In Proceedings of the 2019 2nd International Conference on Computing, Mathematics and Engineering Technologies (iCoMET), Sukkur, Pakistan, 30–31 January 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–5.

14. Jiang, F.; Jiang, Y.; Zhi, H.; Dong, Y.; Li, H.; Ma, S.; Wang, Y.; Dong, Q.; Shen, H.; Wang, Y. Artificial intelligence in healthcare: Past, present and future. Stroke Vasc. Neurol. 2017, 2, 230–243.

15. Monroy, G.L.; Won, J.; Dsouza, R.; Pande, P.; Hill, M.C.; Porter, R.G.; Novak, M.A.; Spillman, D.R.; Boppart, S.A. Automated classification platform for the identification of otitis media using optical coherence tomography. NPJ Digit. Med. 2019, 2, 22.

16. Rong, G.; Mendez, A.; Assi, E.B.; Zhao, B.; Sawan, M. Artificial intelligence in healthcare: Review and prediction case studies. Engineering 2020, 6, 291–301.

17. Ngombu, S.; Binol, H.; Gurcan, M.N.; Moberly, A.C. Advances in artificial intelligence to diagnose otitis media: State of the art review. Otolaryngol.-Head Neck Surg. 2023, 168, 635–642.

18. Bur, A.M.; Shew, M.; New, J. Artificial intelligence for the otolaryngologist: A state of the art review. Otolaryngol.-Head Neck Surg. 2019, 160, 603–611.

19. Habib, A.R.; Kajbafzadeh, M.; Hasan, Z.; Wong, E.; Gunasekera, H.; Perry, C.; Sacks, R.; Kumar, A.; Singh, N. Artificial intelligence to classify ear disease from otoscopy: A systematic review and meta-analysis. Clin. Otolaryngol. 2022, 47, 401–413.

20. Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; The PRISMA Group. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. PLoS Med. 2009, 6, e1000097.

21. Seok, J.; Song, J.J.; Koo, J.W.; Kim, H.C.; Choi, B.Y. The semantic segmentation approach for normal and pathologic tympanic membrane using deep learning. bioRxiv 2019, 515007.

22. Shie, C.K.; Chang, H.T.; Fan, F.C.; Chen, C.J.; Fang, T.Y.; Wang, P.C. A hybrid feature-based segmentation and classification system for the computer aided self-diagnosis of otitis media. In Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Chicago, IL, USA, 26–30 August 2014; pp. 4655–4658.

23. Wang, W.; Liang, D.; Chen, Q.; Iwamoto, Y.; Han, X.H.; Zhang, Q.; Hu, H.; Lin, L.; Chen, Y.W. Medical image classification using deep learning. In Deep Learning in Healthcare; Springer: Cham, Switzerland, 2020; pp. 33–51.

24. Myburgh, H.C.; Jose, S.; Swanepoel, D.W.; Laurent, C. Towards low cost automated smartphone- and cloud-based otitis media diagnosis. Biomed. Signal Process. Control 2018, 39, 34–52.

25. Zeng, X.; Jiang, Z.; Luo, W.; Li, H.; Li, H.; Li, G.; Shi, J.; Wu, K.; Liu, T.; Lin, X.; et al. Efficient and accurate identification of ear diseases using an ensemble deep learning model. Sci. Rep. 2021, 11, 10839.

26. Khan, M.A.; Kwon, S.; Choo, J.; Hong, S.M.; Kang, S.H.; Park, I.H.; Kim, S.K.; Hong, S.J. Automatic detection of tympanic membrane and middle ear infection from oto-endoscopic images via convolutional neural networks. Neural Netw. 2020, 126, 384–394.

27. Eroğlu, O.; Eroğlu, Y.; Yıldırım, M.; Karlıdag, T.; Çınar, A.; Akyiğit, A.; Kaygusuz, İ.; Yıldırım, H.; Keleş, E.; Yalçın, Ş. Is it useful to use computerized tomography image-based artificial intelligence modelling in the differential diagnosis of chronic otitis media with and without cholesteatoma? Am. J. Otolaryngol. 2022, 43, 103395.

28. Cha, D.; Pae, C.; Seong, S.B.; Choi, J.Y.; Park, H.J. Automated diagnosis of ear disease using ensemble deep learning with a big otoendoscopy image database. EBioMedicine 2019, 45, 606–614.

29. Habib, A.; Wong, E.; Sacks, R.; Singh, N. Artificial intelligence to detect tympanic membrane perforations. J. Laryngol. Otol. 2020, 134, 311–315.

30. Byun, H.; Yu, S.; Oh, J.; Bae, J.; Yoon, M.S.; Lee, S.H.; Chung, J.H.; Kim, T.H. An assistive role of a machine learning network in diagnosis of middle ear diseases. J. Clin. Med. 2021, 10, 3198.

31. Mironică, I.; Vertan, C.; Gheorghe, D.C. Automatic pediatric otitis detection by classification of global image features. In Proceedings of the 2011 IEEE E-Health and Bioengineering Conference (EHB), Iasi, Romania, 24–26 November 2011; pp. 1–4.

32. Wang, X.; Valdez, T.A.; Bi, J. Detecting tympanostomy tubes from otoscopic images via offline and online training. Comput. Biol. Med. 2015, 61, 107–118.

33. Myburgh, H.C.; Van Zijl, W.H.; Swanepoel, D.; Hellström, S.; Laurent, C. Otitis media diagnosis for developing countries using tympanic membrane image-analysis. EBioMedicine 2016, 5, 156–160.

34. Lee, J.Y.; Choi, S.H.; Chung, J.W. Automated classification of the tympanic membrane using a convolutional neural network. Appl. Sci. 2019, 9, 1827.

35. Livingstone, D.; Talai, A.S.; Chau, J.; Forkert, N.D. Building an otoscopic screening prototype tool using deep learning. J. Otolaryngol.-Head Neck Surg. 2019, 48, 1–5.

36. Başaran, E.; Şengür, A.; Cömert, Z.; Budak, Ü.; Çelik, Y.; Velappan, S. Normal and acute tympanic membrane diagnosis based on gray level co-occurrence matrix and artificial neural networks. In Proceedings of the 2019 IEEE International Artificial Intelligence and Data Processing Symposium (IDAP), Malatya, Turkey, 21–22 September 2019; pp. 1–6.

37. Livingstone, D.; Chau, J. Otoscopic diagnosis using computer vision: An automated machine learning approach. Laryngoscope 2020, 130, 1408–1413.

38. Camalan, S.; Niazi, M.K.K.; Moberly, A.C.; Teknos, T.; Essig, G.; Elmaraghy, C.; Taj-Schaal, N.; Gurcan, M.N. OtoMatch: Content-based eardrum image retrieval using deep learning. PLoS ONE 2020, 15, e0232776.

39. Won, J.; Monroy, G.L.; Dsouza, R.I.; Spillman, D.R., Jr.; McJunkin, J.; Porter, R.G.; Shi, J.; Aksamitiene, E.; Sherwood, M.; Stiger, L.; et al. Handheld briefcase optical coherence tomography with real-time machine learning classifier for middle ear infections. Biosensors 2021, 11, 143.

40. Tsutsumi, K.; Goshtasbi, K.; Risbud, A.; Khosravi, P.; Pang, J.C.; Lin, H.W.; Djalilian, H.R.; Abouzari, M. A web-based deep learning model for automated diagnosis of otoscopic images. Otol. Neurotol. 2021, 42, e1382–e1388.

41. Sundgaard, J.V.; Harte, J.; Bray, P.; Laugesen, S.; Kamide, Y.; Tanaka, C.; Paulsen, R.R.; Christensen, A.N. Deep metric learning for otitis media classification. Med. Image Anal. 2021, 71, 102034.

42. Singh, A.; Dutta, M.K. Diagnosis of ear conditions using deep learning approach. In Proceedings of the 2021 IEEE International Conference on Communication, Control and Information Sciences (ICCISc), Idukki, India, 16–18 June 2021; Volume 1, pp. 1–5.

43. Miwa, T.; Minoda, R.; Yamaguchi, T.; Kita, S.I.; Osaka, K.; Takeda, H.; Kanemaru, S.I.; Omori, K. Application of artificial intelligence using a convolutional neural network for detecting cholesteatoma in endoscopic enhanced images. Auris Nasus Larynx 2022, 49, 11–17.

44. Binol, H.; Niazi, M.K.K.; Elmaraghy, C.; Moberly, A.C.; Gurcan, M.N. OtoXNet: Automated identification of eardrum diseases from otoscope videos: A deep learning study for video-representing images. Neural Comput. Appl. 2022, 34, 12197–12210.

45. Habib, A.R.; Crossland, G.; Patel, H.; Wong, E.; Kong, K.; Gunasekera, H.; Richards, B.; Caffery, L.; Perry, C.; Sacks, R.; et al. An artificial intelligence computer-vision algorithm to triage otoscopic images from Australian Aboriginal and Torres Strait Islander children. Otol. Neurotol. 2022, 43, 481–488.

46. Ibekwe, T.; Nwaorgu, O. Otitis Media: Focusing on the Developing World; Irrua Specialist Teaching Hospital, Division of ENT Surgery: Irrua, Nigeria, 2010.

47. Hughes, C.A.; McMenamin, P.; Mehta, V.; Pillsbury, H.; Kennedy, D. Otolaryngology workforce analysis. Laryngoscope 2016, 126, S5–S11.

48. Norouzi, A.; Rahim, M.S.M.; Altameem, A.; Saba, T.; Rad, A.E.; Rehman, A.; Uddin, M. Medical image segmentation methods, algorithms, and applications. IETE Tech. Rev. 2014, 31, 199–213.

49. Pham, V.T.; Tran, T.T.; Wang, P.C.; Chen, P.Y.; Lo, M.T. EAR-UNet: A deep learning-based approach for segmentation of tympanic membranes from otoscopic images. Artif. Intell. Med. 2021, 115, 102065.

50. Binol, H.; Moberly, A.C.; Niazi, M.K.K.; Essig, G.; Shah, J.; Elmaraghy, C.; Teknos, T.; Taj-Schaal, N.; Yu, L.; Gurcan, M.N. SelectStitch: Automated frame segmentation and stitching to create composite images from otoscope video clips. Appl. Sci. 2020, 10, 5894.

51. Hsu, C.Y.; Chen, Y.S.; Hwang, J.H.; Liu, T.C. A computer program to calculate the size of tympanic membrane perforations. Clin. Otolaryngol. Allied Sci. 2004, 29, 340–342.

52. Ibekwe, T.; Adeosun, A.; Nwaorgu, O. Quantitative analysis of tympanic membrane perforation: A simple and reliable method. J. Laryngol. Otol. 2009, 123, e2.

53. Ribeiro, F.d.A.Q.; Gaudino, V.R.R.; Pinheiro, C.D.; Marçal, G.J.; Mitre, E.I. Objective comparison between perforation and hearing loss. Braz. J. Otorhinolaryngol. 2014, 80, 386–389.

54. Xie, X.; Mirmehdi, M.; Maw, R.; Hall, A. Detecting abnormalities in tympanic membrane images. In Medical Image Understanding and Analysis; BMVA Press: Bristol, UK, 2005; pp. 19–22.

55. Başaran, E.; Cömert, Z.; Çelik, Y. Convolutional neural network approach for automatic tympanic membrane detection and classification. Biomed. Signal Process. Control 2020, 56, 101734.

56. Viscaino, M.; Maass, J.C.; Delano, P.H.; Torrente, M.; Stott, C.; Auat Cheein, F. Computer-aided diagnosis of external and middle ear conditions: A machine learning approach. PLoS ONE 2020, 15, e0229226.

57. Teele, D.W.; Klein, J.O.; Rosner, B.; Greater Boston Otitis Media Study Group. Epidemiology of otitis media during the first seven years of life in children in greater Boston: A prospective, cohort study. J. Infect. Dis. 1989, 160, 83–94.

58. Goggin, L.S.; Eikelboom, R.H.; Atlas, M.D. Clinical decision support systems and computer-aided diagnosis in otology. Otolaryngol.-Head Neck Surg. 2007, 136, S21–S26.

59. Ning, A.Y.; Cabrera, C.I.; D’Anza, B. Telemedicine in otolaryngology: A systematic review of image quality, diagnostic concordance, and patient and provider satisfaction. Ann. Otol. Rhinol. Laryngol. 2021, 130, 195–204.

60. Kelly, C.J.; Karthikesalingam, A.; Suleyman, M.; Corrado, G.; King, D. Key challenges for delivering clinical impact with artificial intelligence. BMC Med. 2019, 17, 195.

61. Schwartz, R.H.; Rodriguez, W.J.; McAveney, W.; Grundfast, K.M. Cerumen removal: How necessary is it to diagnose acute otitis media? Am. J. Dis. Child. 1983, 137, 1064–1065.

62. Fairey, A.; Freer, C.; Machin, D. Ear wax and otitis media in children. Br. Med. J. 1985, 291, 387–388.

| No. | Author | Number of Classes | Model | Image Type | Number of Images | Outcomes |
|---|---|---|---|---|---|---|
| 1 | Tran, T.T. et al. (2018) [2] | 2 | Multitask joint sparse representation-based classification (MTJSRC) | Otoscopic | 214 | Accuracy: 91.41% |
| 2 | Crowson, M.G. et al. (2021) [7] | 2 | ResNet-34 | Endoscopic | 338 | Accuracy: 83.8% |
| 3 | Wu, Z. et al. (2021) [8] | 3 | Xception | Otoendoscopic | 12,203 | Accuracy: 97.45% |
| 4 | Monroy, G.L. et al. (2019) [15] | 3 | Twenty-two classifiers in MATLAB, random forest classifier | Optical coherence tomography (OCT) | 25,497 | Accuracy: 99.16% |
| 5 | Myburgh, H.C. et al. (2018) [24] | 5 | Neural network, decision tree | Commercial video-otoscopes | 389 | Neural network accuracy: 86.84%, decision tree accuracy: 81.58% |
| 6 | Zeng, X. et al. (2021) [25] | 8 | DenseNet169, DenseNet1615 | Endoscopic | 20,542 | Accuracy: 95.59% |
| 7 | Khan, M.A. et al. (2020) [26] | 3 | DenseNet161 | Otoendoscopic | 2,484 | Accuracy: 94.9% |
| 8 | Eroğlu, O. et al. (2022) [27] | 3 | (AlexNet, GoogLeNet, DenseNet201) + SVM | CT | 3,093 | Accuracy: 95.4% |
| 9 | Cha, D. et al. (2019) [28] | 6 | InceptionV3, ResNet101 | Otoscopic | 10,544 | Accuracy: 93.73% |
| 10 | Habib, A.R. et al. (2020) [29] | 4 | InceptionV3 | Otoscopic | 233 | Accuracy: 76.0% |
| 11 | Byun, H. et al. (2021) [30] | 4 | ResNet18 + Shuffle | Endoscopic | 2,272 | Accuracy: 97.18% |
| 12 | Mironică, I., Vertan, C., Gheorghe, D.C. (2011) [31] | 2 | Neural networks | Otoscopic | 186 | Accuracy: 73.11% |
| 13 | Wang, X., Valdez, T.A., Bi, J. (2015) [32] | 2 | Cascaded classifier, SVM | Otoscopic | 215 | Accuracy: 90% |
| 14 | Myburgh, H.C. et al. (2016) [33] | 5 | Decision tree | Commercial video-otoscopes, low-cost custom-made video-otoscope | 489 | Commercial video-otoscopes accuracy: 80.6%, low-cost custom-made video-otoscope accuracy: 78.7% |
| 15 | Lee, J.Y., Choi, S.H., Chung, J.W. (2019) [34] | 2, 2 | Neural networks | Endoscopic | 1,338 | Tympanic membrane direction accuracy: 97.9%, perforation accuracy: 91.0% |
| 16 | Livingstone, D. et al. (2019) [35] | 3 | Neural networks | Otoscopic | 734 | Accuracy: 84.4% |
| 17 | Başaran, E. et al. (2019) [36] | 2 | Gray-level co-occurrence matrix (GLCM) and artificial neural network (ANN) | Otoscopic | 223 | Accuracy: 76.14% |
| 18 | Livingstone, D., Chau, J. (2020) [37] | 14 | Multilabel classifier architecture | Otoscopic | 1,366 | Accuracy: 88.7% |
| 19 | Camalan, S. et al. (2020) [38] | 3 | Content-based image retrieval (CBIR) system | Otoscopic | 454 | Accuracy: 80.58% |
| 20 | Won, J. et al. (2021) [39] | 2 | Random forest | A-scan OCT | 25,479 | Accuracy: 91.5% |
| 21 | Tsutsumi, K. et al. (2021) [40] | 5 | MobileNet-V2 | Otoscopic | 400 | Accuracy: 77.0% |
| 22 | Sundgaard, J.V. et al. (2021) [41] | 3 | InceptionV3 | Otoscopic | 1,336 | Accuracy: 86% |
| 23 | Singh, A., Dutta, M.K. (2021) [42] | 4 | Neural networks | Otoscopic | 880 | Accuracy: 96% |
| 24 | Miwa, T. et al. (2022) [43] | 3 | Single Shot MultiBox Detector (SSD) | Endoscopic enhanced images (CLARA + CHROMA, SPECTRA A, SPECTRA B) | 826 | Accuracy: 48.7% |
| 25 | Binol, H. et al. (2022) [44] | 4 | OtoXNet | Otoscopic | 765 | Accuracy: 84.8% |
| 26 | Habib, A.R. et al. (2022) [45] | 5 | ResNet backbone | Endoscopic | 6,527 | Accuracy: 74.5% |
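Most studies in the classification table report a single overall accuracy. The sketch below assumes that accuracy means the fraction of correctly classified images (individual studies may define or compute it differently) and shows how that figure follows from a confusion matrix, alongside per-class recall. The function names and the three-class counts are hypothetical and are not taken from any reviewed study.

```python
from typing import List, Sequence

# Hypothetical helpers for illustration only; not the method of any reviewed study.


def _check_square(confusion: Sequence[Sequence[int]]) -> int:
    """Validate that the confusion matrix is square and non-empty; return its size."""
    n = len(confusion)
    if n == 0 or any(len(row) != n for row in confusion):
        raise ValueError("confusion matrix must be square and non-empty")
    return n


def overall_accuracy(confusion: Sequence[Sequence[int]]) -> float:
    """Overall accuracy in percent.

    confusion[i][j] counts images whose true class is i and predicted class is j,
    so correct predictions lie on the diagonal.
    """
    n = _check_square(confusion)
    correct = sum(confusion[i][i] for i in range(n))
    total = sum(sum(row) for row in confusion)
    if total == 0:
        raise ValueError("confusion matrix contains no images")
    return 100.0 * correct / total


def per_class_recall(confusion: Sequence[Sequence[int]]) -> List[float]:
    """Recall (sensitivity) in percent for each true class, i.e. row-wise accuracy."""
    n = _check_square(confusion)
    recalls = []
    for i in range(n):
        row_total = sum(confusion[i])
        if row_total == 0:
            raise ValueError(f"class {i} has no images")
        recalls.append(100.0 * confusion[i][i] / row_total)
    return recalls


if __name__ == "__main__":
    # Hypothetical 3-class counts (rows = true class, columns = predicted class).
    cm = [
        [50, 3, 2],  # e.g. normal tympanic membrane
        [4, 40, 6],  # e.g. otitis media with effusion
        [1, 5, 39],  # e.g. acute otitis media
    ]
    print(overall_accuracy(cm))                          # 86.0
    print([round(r, 1) for r in per_class_recall(cm)])   # [90.9, 80.0, 86.7]
```

Because overall accuracy is weighted by how many images each class contains, it can look favorable even when a smaller class is recognized less reliably; per-class figures make that visible.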

| No. | Author | Model | Image Type | Number of Images | Outcomes |
|---|---|---|---|---|---|
| 1 | Seok, J. et al. (2019) [21] | Mask R-CNN (ResNet-50 backbone) | Endoscopic | 920 | Accuracy: 92.9% |
| 2 | Pham, V.T. et al. (2021) [49] | EAR-UNet | Otoscopic | 1,012 | Accuracy: 95.8% |
| 3 | Binol, H. et al. (2020) [50] | UNet | Otoscopic | 900 | Kendall’s coefficient: 83.9% |

| No. | Author | Number of Classes | Model | Image Type | Number of Images | Outcomes |
|---|---|---|---|---|---|---|
| 1 | Shie, C.K. et al. (2014) [22] | 4 | Segmentation: active contour models; classification: AdaBoost (adaptive boosting) | Otoscopic | 865 | Accuracy: 88.06% |
| 2 | Başaran, E., Cömert, Z., Çelik, Y. (2020) [55] | 7 | Segmentation: Faster R-CNN; classification: VGG16 | Otoscopic | 282 | Accuracy: 90.45% |
| 3 | Viscaino, M. et al. (2020) [56] | 4 | Segmentation: Hough transform; classification: SVM | Otoscopic | 720 | Accuracy: 93.9% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Song, D.; Kim, T.; Lee, Y.; Kim, J. Image-Based Artificial Intelligence Technology for Diagnosing Middle Ear Diseases: A Systematic Review. J. Clin. Med. 2023, 12, 5831. https://doi.org/10.3390/jcm12185831
