Generative Adversarial Networks (GANs) in the Field of Head and Neck Surgery: Current Evidence and Prospects for the Future—A Systematic Review

Abstract

1. Introduction

1.1. Background

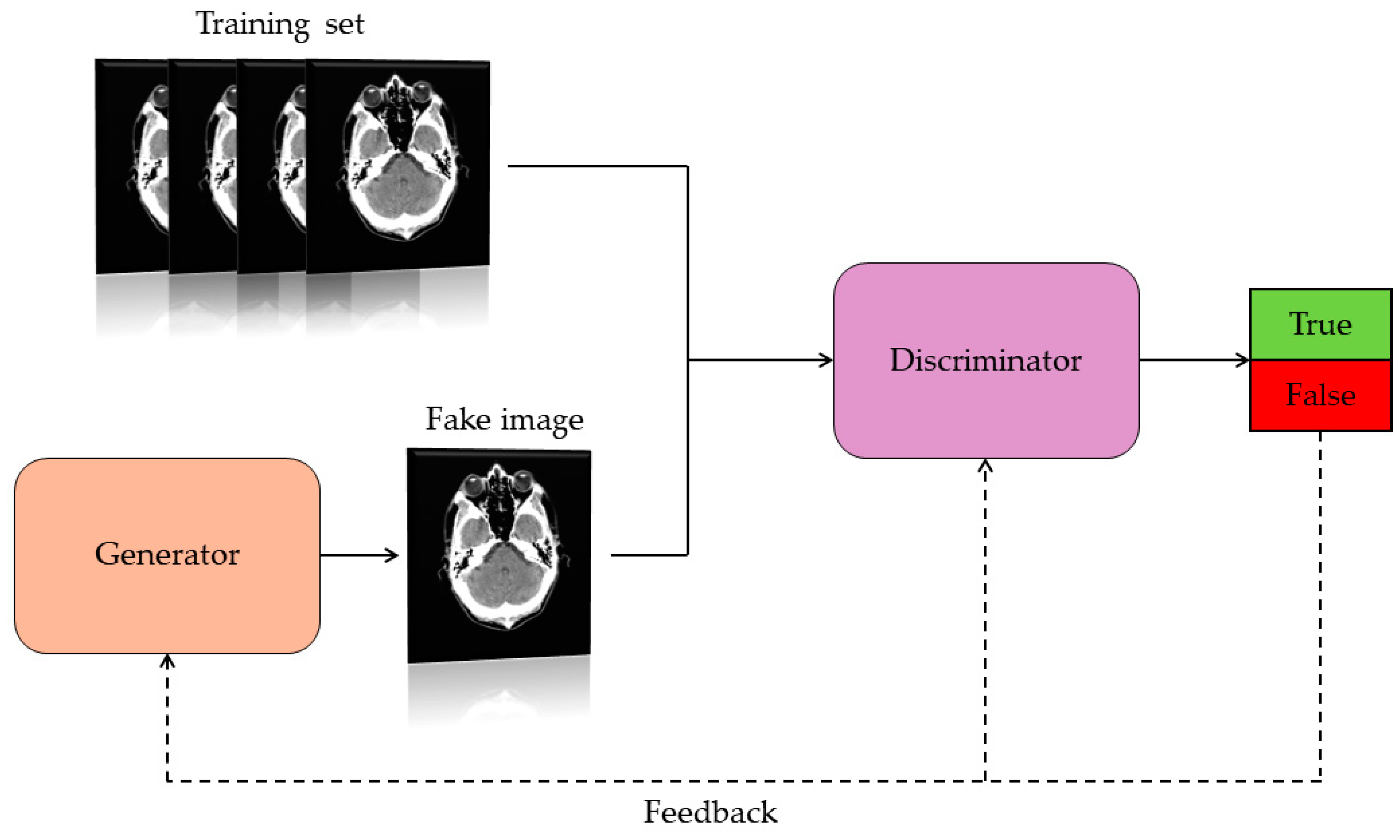

1.2. Generative Adversarial Network (GAN)

1.3. Objective of the Study

2. Materials and Methods

2.1. Literature Search

2.2. Data Collection

2.3. Inclusion and Exclusion Criteria Applied in the Collected Studies

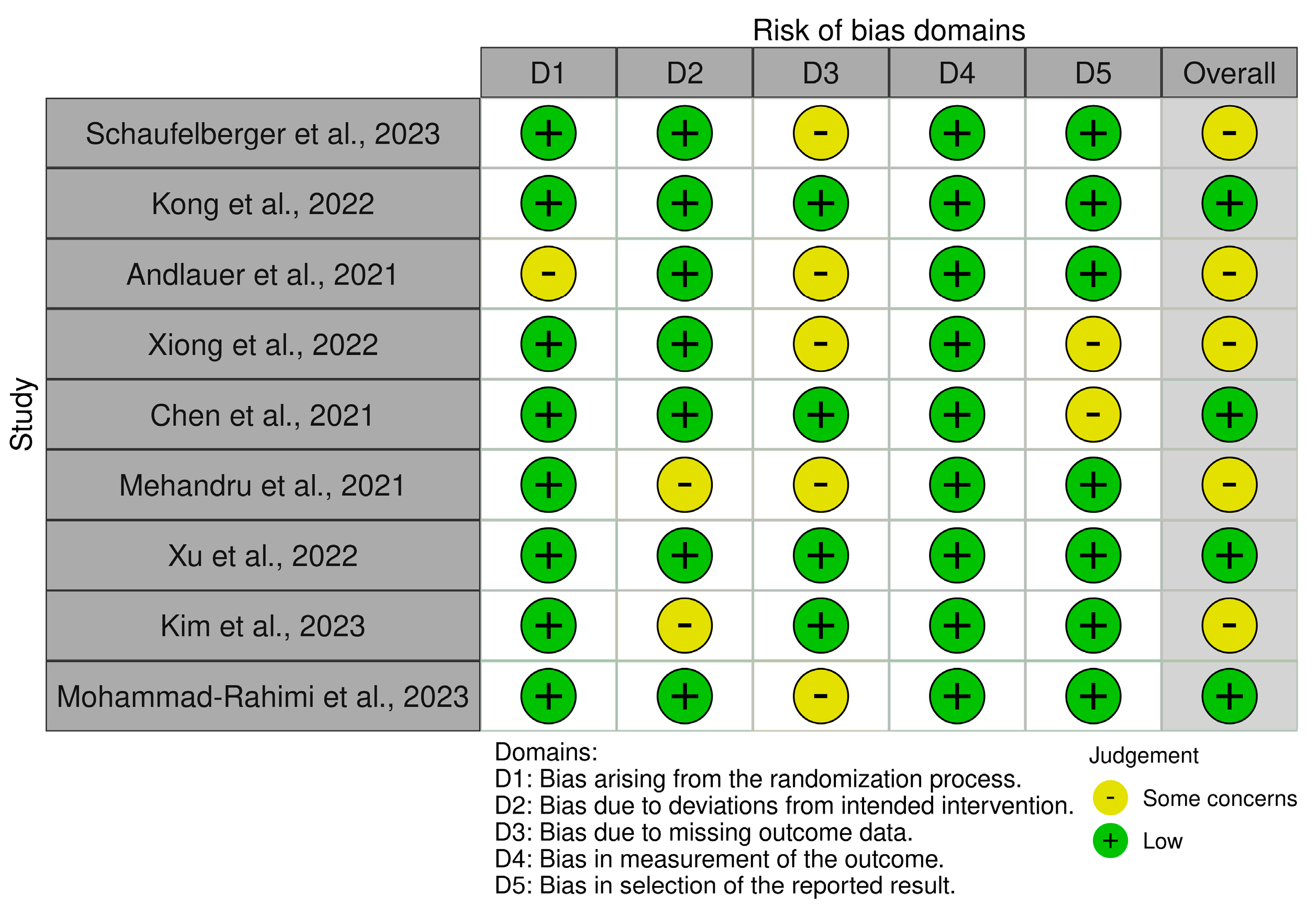

2.4. Bias Assessment

3. Results

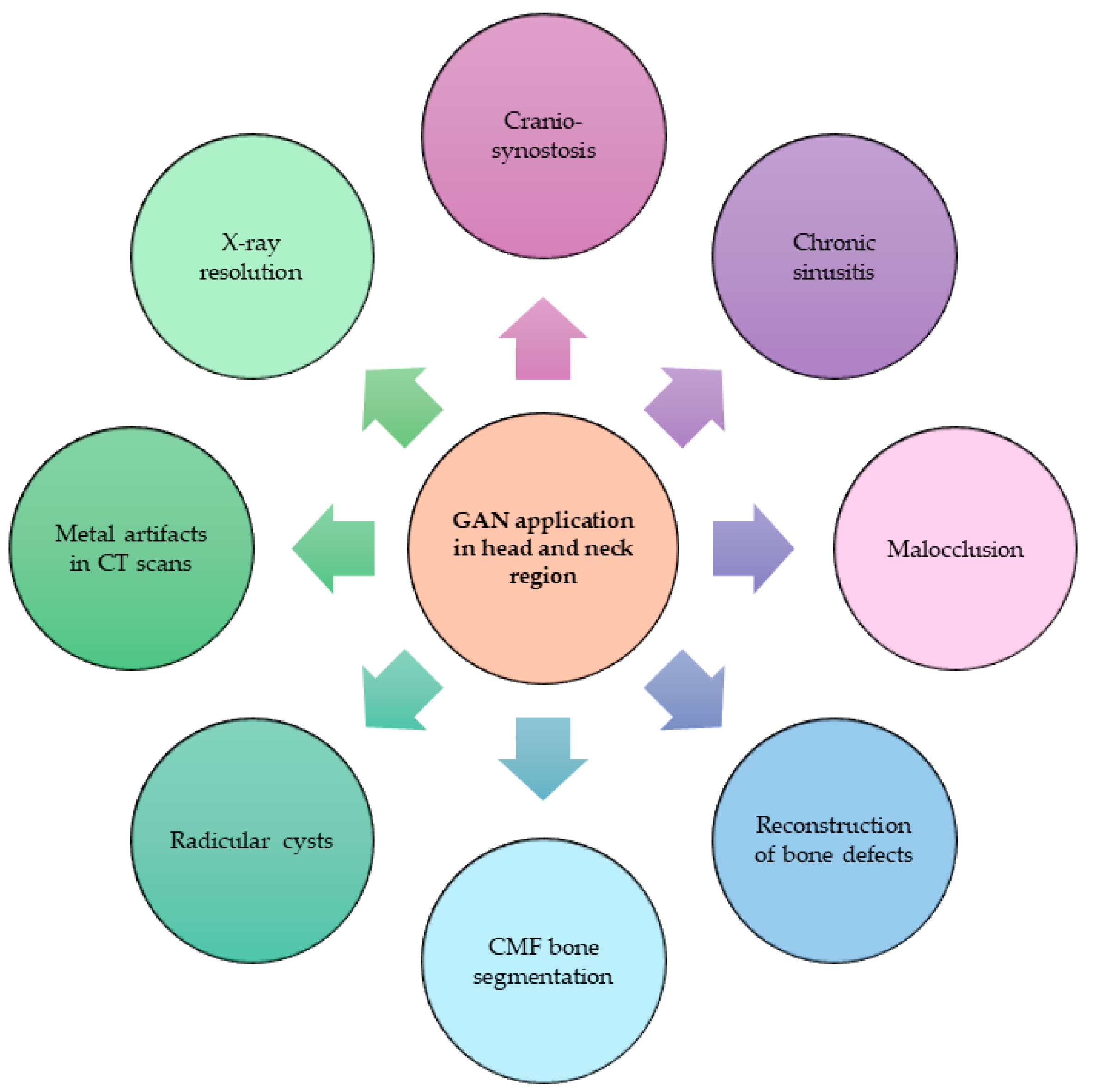

3.1. Topics

3.2. Reasons Why GANs Have Been Applied

3.3. GAN Architectures Used

| GAN Architecture | Description |
|---|---|
| cDC-WGAN-GP | A conditional deep convolutional model that combines the Wasserstein GAN (WGAN) with a gradient penalty (GP). The WGAN loss tends to produce higher-quality samples, while the gradient penalty penalizes discriminator (critic) gradients whose norm deviates from 1, which helps prevent vanishing or exploding gradients and further improves training stability. Because the model is conditional, it can generate images of a specified class, making it an advanced model for synthetic image generation. |
| AC-GAN [20] | An extension of the conditional GAN (cGAN), which is itself an extension of the original GAN architecture. In a cGAN, the generator and the discriminator both receive the class label as an additional input. In an AC-GAN, the generator is conditioned on the class label, while the discriminator, besides distinguishing real from synthetic images, predicts the class label of each image through an auxiliary classifier. |
| Cycle-GAN [21] | An image-to-image translation model that does not require paired training examples. Image-to-image translation means creating a new, artificial version of an image with specific modifications. Cycle-GAN trains two generators, one for each translation direction, with a cycle-consistency constraint so that translating an image and translating it back recovers the original. |
| DDA-GAN [22] | A model that segments bone structures by exploiting synthetic data generated from an annotated domain to improve the segmentation of images from an unannotated domain. |
| CD-GAN [23] | A model for image-to-image transformation, converting an image from one domain to another. It is based on the Cycle-GAN architecture but, unlike Cycle-GAN, adds cyclic discriminators that also assess the quality of the cycled (reconstructed) images, making the synthetic images more realistic. |
| MAR-GAN | A GAN model capable of removing metal artifacts from CT scans. |
| Pix2pix-GAN [24] | A conditional GAN (cGAN) model used for image-to-image translation. Its generator is based on the U-Net architecture, and its discriminator is a PatchGAN classifier. |
| SR-GAN [25] | A model for super-resolution imaging: it generates high-resolution images from low-resolution inputs. |
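For reference, the training objectives behind several architectures in the table can be written in their standard textbook forms, with G the generator, D the discriminator (called the critic in WGAN), p_data the real-data distribution, and p_z the noise prior. Conditional variants such as cDC-WGAN-GP, AC-GAN, and Pix2pix additionally feed a class label or an input image to G and D; those terms are omitted here, and the reviewed studies may have used modified losses.

```latex
% Original GAN minimax objective (Goodfellow et al. [12])
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]

% WGAN-GP critic loss, with gradient-penalty weight \lambda
\mathcal{L}_D = \mathbb{E}_{z \sim p_z}\left[D(G(z))\right] - \mathbb{E}_{x \sim p_{\text{data}}}\left[D(x)\right] + \lambda \, \mathbb{E}_{\hat{x}}\left[\left(\lVert \nabla_{\hat{x}} D(\hat{x}) \rVert_2 - 1\right)^2\right],
\qquad \hat{x} = \epsilon x + (1 - \epsilon)\, G(z), \quad \epsilon \sim U[0, 1]

% Cycle-GAN cycle-consistency loss, with generators G: X \to Y and F: Y \to X
\mathcal{L}_{\text{cyc}}(G, F) = \mathbb{E}_{x}\left[\lVert F(G(x)) - x \rVert_1\right] + \mathbb{E}_{y}\left[\lVert G(F(y)) - y \rVert_1\right]
```

In Cycle-GAN, the cycle-consistency term is added, with a weighting factor, to the adversarial losses of the two translation directions.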

3.4. Type of Data Analyzed

3.5. Parameters Adopted to Evaluate GANs

3.6. Results Obtained for the Single Studies Included

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Mahmood, H.; Shaban, M.; Indave, B.I.; Santos-Silva, A.R.; Rajpoot, N.; Khurram, S.A. Use of Artificial Intelligence in Diagnosis of Head and Neck Precancerous and Cancerous Lesions: A Systematic Review. Oral Oncol. 2020, 110, 104885.

2. Resteghini, C.; Trama, A.; Borgonovi, E.; Hosni, H.; Corrao, G.; Orlandi, E.; Calareso, G.; De Cecco, L.; Piazza, C.; Mainardi, L.; et al. Big Data in Head and Neck Cancer. Curr. Treat. Options Oncol. 2018, 19, 62.

3. Mäkitie, A.A.; Alabi, R.O.; Ng, S.P.; Takes, R.P.; Robbins, K.T.; Ronen, O.; Shaha, A.R.; Bradley, P.J.; Saba, N.F.; Nuyts, S.; et al. Artificial Intelligence in Head and Neck Cancer: A Systematic Review of Systematic Reviews. Adv. Ther. 2023, 40, 3360–3380.

4. Abdel Razek, A.A.K.; Khaled, R.; Helmy, E.; Naglah, A.; AbdelKhalek, A.; El-Baz, A. Artificial Intelligence and Deep Learning of Head and Neck Cancer. Magn. Reson. Imaging Clin. N. Am. 2022, 30, 81–94.

5. Peng, Z.; Wang, Y.; Wang, Y.; Jiang, S.; Fan, R.; Zhang, H.; Jiang, W. Application of Radiomics and Machine Learning in Head and Neck Cancers. Int. J. Biol. Sci. 2021, 17, 475–486.

6. Chen, M.M.; Terzic, A.; Becker, A.S.; Johnson, J.M.; Wu, C.C.; Wintermark, M.; Wald, C.; Wu, J. Artificial Intelligence in Oncologic Imaging. Eur. J. Radiol. Open 2022, 9, 100441.

7. Choi, R.Y.; Coyner, A.S.; Kalpathy-Cramer, J.; Chiang, M.F.; Campbell, J.P. Introduction to Machine Learning, Neural Networks, and Deep Learning. Transl. Vis. Sci. Technol. 2020, 9, 14.

8. LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444.

9. Shen, D.; Wu, G.; Suk, H.-I. Deep Learning in Medical Image Analysis. Annu. Rev. Biomed. Eng. 2017, 19, 221–248.

10. Romeo, V.; Cuocolo, R.; Ricciardi, C.; Ugga, L.; Cocozza, S.; Verde, F.; Stanzione, A.; Napolitano, V.; Russo, D.; Improta, G.; et al. Prediction of Tumor Grade and Nodal Status in Oropharyngeal and Oral Cavity Squamous-Cell Carcinoma Using a Radiomic Approach. Anticancer Res. 2020, 40, 271–280.

11. Waters, M.R.; Inkman, M.; Jayachandran, K.; Kowalchuk, R.M.; Robinson, C.; Schwarz, J.K.; Swamidass, S.J.; Griffith, O.L.; Szymanski, J.J.; Zhang, J. GAiN: An Integrative Tool Utilizing Generative Adversarial Neural Networks for Augmented Gene Expression Analysis. Patterns 2024, 5, 100910.

12. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. In Proceedings of the 27th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; Curran Associates, Inc.: New York, NY, USA, 2014; Volume 27.

13. Ferreira, A.; Li, J.; Pomykala, K.L.; Kleesiek, J.; Alves, V.; Egger, J. GAN-Based Generation of Realistic 3D Volumetric Data: A Systematic Review and Taxonomy. Med. Image Anal. 2024, 93, 103100.

14. Osuala, R.; Kushibar, K.; Garrucho, L.; Linardos, A.; Szafranowska, Z.; Klein, S.; Glocker, B.; Diaz, O.; Lekadir, K. Data Synthesis and Adversarial Networks: A Review and Meta-Analysis in Cancer Imaging. Med. Image Anal. 2023, 84, 102704.

15. Loey, M.; Smarandache, F.; M. Khalifa, N.E. Within the Lack of Chest COVID-19 X-Ray Dataset: A Novel Detection Model Based on GAN and Deep Transfer Learning. Symmetry 2020, 12, 651.

16. Fujioka, T.; Kubota, K.; Mori, M.; Kikuchi, Y.; Katsuta, L.; Kimura, M.; Yamaga, E.; Adachi, M.; Oda, G.; Nakagawa, T.; et al. Efficient Anomaly Detection with Generative Adversarial Network for Breast Ultrasound Imaging. Diagnostics 2020, 10, 456.

17. Maniaci, A.; Fakhry, N.; Chiesa-Estomba, C.; Lechien, J.R.; Lavalle, S. Synergizing ChatGPT and General AI for Enhanced Medical Diagnostic Processes in Head and Neck Imaging. Eur. Arch. Otorhinolaryngol. 2024, 281, 3297–3298.

18. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. BMJ 2021, 372, n71.

19. McGuinness, L.A.; Higgins, J.P.T. Risk-of-bias VISualization (Robvis): An R Package and Shiny Web App for Visualizing Risk-of-bias Assessments. Res. Synth. Methods 2021, 12, 55–61.

20. GitHub. ChenKaiXuSan/ACGAN-PyTorch: PyTorch Implements of Auxiliary Classifier GAN. Available online: https://github.com/ChenKaiXuSan/ACGAN-PyTorch (accessed on 1 April 2024).

21. GitHub. Junyanz/CycleGAN: Software That Can Generate Photos from Paintings, Turn Horses into Zebras, Perform Style Transfer, and More. Available online: https://github.com/junyanz/CycleGAN (accessed on 1 April 2024).

22. Huang, C.-E.; Li, Y.-H.; Aslam, M.S.; Chang, C.-C. Super-Resolution Generative Adversarial Network Based on the Dual Dimension Attention Mechanism for Biometric Image Super-Resolution. Sensors 2021, 21, 7817.

23. GitHub. KishanKancharagunta/CDGAN: CDGAN: Cyclic Discriminative Generative Adversarial Networks for Image-to-Image Transformation. Available online: https://github.com/KishanKancharagunta/CDGAN (accessed on 1 April 2024).

24. GitHub. 4vedi/Pix2Pix_GAN: Image-to-Image Translation with Conditional Adversarial Nets. Available online: https://github.com/4vedi/Pix2Pix_GAN (accessed on 1 April 2024).

25. GitHub. Tensorlayer/SRGAN: Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. Available online: https://github.com/tensorlayer/srgan (accessed on 1 April 2024).

26. Kong, H.-J.; Kim, J.Y.; Moon, H.-M.; Park, H.C.; Kim, J.-W.; Lim, R.; Woo, J.; Fakhri, G.E.; Kim, D.W.; Kim, S. Automation of Generative Adversarial Network-Based Synthetic Data-Augmentation for Maximizing the Diagnostic Performance with Paranasal Imaging. Sci. Rep. 2022, 12, 18118.

27. Andlauer, R.; Wachter, A.; Schaufelberger, M.; Weichel, F.; Kuhle, R.; Freudlsperger, C.; Nahm, W. 3D-Guided Face Manipulation of 2D Images for the Prediction of Post-Operative Outcome after Cranio-Maxillofacial Surgery. IEEE Trans. Image Process. 2021, 30, 7349–7363.

28. Chen, X.; Lian, C.; Wang, L.; Deng, H.; Kuang, T.; Fung, S.H.; Gateno, J.; Shen, D.; Xia, J.J.; Yap, P.-T. Diverse Data Augmentation for Learning Image Segmentation with Cross-Modality Annotations. Med. Image Anal. 2021, 71, 102060.

29. Schaufelberger, M.; Kühle, R.P.; Wachter, A.; Weichel, F.; Hagen, N.; Ringwald, F.; Eisenmann, U.; Hoffmann, J.; Engel, M.; Freudlsperger, C.; et al. Impact of Data Synthesis Strategies for the Classification of Craniosynostosis. Front. Med. Technol. 2023, 5, 1254690.

30. Mehandru, N.; Hicks, W.L.; Singh, A.K.; Hsu, L.; Markiewicz, M.R.; Seshadri, M. Detection of Pathology in Panoramic Radiographs via Machine Learning Using Neural Networks for Dataset Size Augmentation. J. Oral Maxillofac. Surg. 2021, 79, e6–e7.

31. Xiong, Y.-T.; Zeng, W.; Xu, L.; Guo, J.-X.; Liu, C.; Chen, J.-T.; Du, X.-Y.; Tang, W. Virtual Reconstruction of Midfacial Bone Defect Based on Generative Adversarial Network. Head Face Med. 2022, 18, 19.

32. Xu, L.; Zhou, S.; Guo, J.; Tian, W.; Tang, W.; Yi, Z. Metal Artifact Reduction for Oral and Maxillofacial Computed Tomography Images by a Generative Adversarial Network. Appl. Intell. 2022, 52, 13184–13194.

33. Kim, H.-S.; Ha, E.-G.; Lee, A.; Choi, Y.J.; Jeon, K.J.; Han, S.-S.; Lee, C. Refinement of Image Quality in Panoramic Radiography Using a Generative Adversarial Network. Dentomaxillofac. Radiol. 2023, 52, 20230007.

34. Mohammad-Rahimi, H.; Vinayahalingam, S.; Mahmoudinia, E.; Soltani, P.; Bergé, S.J.; Krois, J.; Schwendicke, F. Super-Resolution of Dental Panoramic Radiographs Using Deep Learning: A Pilot Study. Diagnostics 2023, 13, 996.

35. Park, J.E.; Eun, D.; Kim, H.S.; Lee, D.H.; Jang, R.W.; Kim, N. Generative Adversarial Network for Glioblastoma Ensures Morphologic Variations and Improves Diagnostic Model for Isocitrate Dehydrogenase Mutant Type. Sci. Rep. 2021, 11, 9912.

36. Michelutti, L.; Tel, A.; Zeppieri, M.; Ius, T.; Sembronio, S.; Robiony, M. The Use of Artificial Intelligence Algorithms in the Prognosis and Detection of Lymph Node Involvement in Head and Neck Cancer and Possible Impact in the Development of Personalized Therapeutic Strategy: A Systematic Review. J. Pers. Med. 2023, 13, 1626.

37. Chinnery, T.; Arifin, A.; Tay, K.Y.; Leung, A.; Nichols, A.C.; Palma, D.A.; Mattonen, S.A.; Lang, P. Utilizing Artificial Intelligence for Head and Neck Cancer Outcomes Prediction From Imaging. Can. Assoc. Radiol. J. 2021, 72, 73–85.

38. Chen, H. Challenges and Corresponding Solutions of Generative Adversarial Networks (GANs): A Survey Study. J. Phys. Conf. Ser. 2021, 1827, 012066.

39. Lechien, J.R.; Maniaci, A.; Gengler, I.; Hans, S.; Chiesa-Estomba, C.M.; Vaira, L.A. Validity and Reliability of an Instrument Evaluating the Performance of Intelligent Chatbot: The Artificial Intelligence Performance Instrument (AIPI). Eur. Arch. Otorhinolaryngol. 2024, 281, 2063–2079.

| GAN Task | Description |
|---|---|
| Classification | GANs used to classify input data by outputting class labels; in this setting, the discriminator acts as a classification network. |
| Segmentation | GANs used to classify voxels in order to identify objects, typically with two GANs: the first produces synthetic scans, while the second combines a segmentation network (trained on synthetic and real data) with a discriminator. |
| Reconstruction | GANs trained to reconstruct incomplete objects by learning the shape and appearance of real, complete objects. |
| Denoising | GANs trained to restore the true appearance of an object by removing noise, artifacts, and random data. |
| Image translation | GANs that convert an input image into an artificial version of it in another form, for example, transforming a CT scan into an MRI image. |
| General activities | GANs that generate synthetic data without applying it to a specific task. |
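Several of the included classification studies (for example, Schaufelberger et al. [29] and Mehandru et al. [30]) trained a classifier on GAN-generated images alongside, or instead of, real ones. The workflow can be sketched as follows: split the real data first, fit the generator only on the real training split, and keep the test set purely real. This is a minimal illustration, not the code of any reviewed study; `split_then_augment`, `fit_generator`, and `generate` are hypothetical names, and `fit_generator` stands in for training a conditional GAN.

```python
import random
from typing import Callable, List, Tuple

# Hypothetical sample type: (image identifier, class label).
Sample = Tuple[str, int]
# Hypothetical generator interface: generate(label, n) returns n synthetic samples of that class.
Generator = Callable[[int, int], List[Sample]]


def split_then_augment(
    real: List[Sample],
    fit_generator: Callable[[List[Sample]], Generator],
    n_synthetic_per_class: int,
    test_fraction: float = 0.25,
    include_real: bool = True,
    seed: int = 0,
) -> Tuple[List[Sample], List[Sample]]:
    """Hold out a real-only test set, then build an augmented training set.

    `fit_generator` is a placeholder for training a (conditional) GAN; it only
    ever receives the real training split, never the test images.
    """
    rng = random.Random(seed)
    shuffled = list(real)
    rng.shuffle(shuffled)

    # 1. Split the real data first (e.g. 75% training / 25% test).
    n_test = round(len(shuffled) * test_fraction)
    test, train_real = shuffled[:n_test], shuffled[n_test:]

    # 2. Fit the generator on the real training split only.
    generate = fit_generator(train_real)

    # 3. Generate synthetic samples for every class present in the training split.
    labels = sorted({label for _, label in train_real})
    synthetic = [s for label in labels for s in generate(label, n_synthetic_per_class)]

    # 4. Train on real + synthetic data (or synthetic only); test on real data only.
    train = (train_real if include_real else []) + synthetic
    return train, test
```

Setting `include_real=False` mirrors the purely synthetic training set described for the craniosynostosis classifier, while the default corresponds to mixing real and GAN-generated images.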

| PICOS Component | Criterion |
|---|---|
| Participants | Patients with oncological or non-oncological diseases of the head and neck region |
| Interventions | Evaluation of the effects of applying GANs |
| Comparators | N/A |
| Outcomes | AUC, sensitivity, specificity |
| Study design | Research articles, original articles, cohort studies, clinical trials, randomized clinical trials |
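The outcomes listed above have standard definitions. Below is a minimal, dependency-free Python sketch of them, assuming binary labels (1 = pathological, 0 = normal) and a continuous classifier score. The function names are illustrative rather than taken from any included study, and in practice a tested library routine (for example scikit-learn's `roc_auc_score`) would normally be used.

```python
from typing import Sequence, Tuple


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[int, int, int, int]:
    """Return (TP, FN, TN, FP) for binary labels, where 1 = pathological and 0 = normal."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    return tp, fn, tn, fp


def sensitivity(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """True positive rate: TP / (TP + FN)."""
    tp, fn, _, _ = confusion_counts(y_true, y_pred)
    return tp / (tp + fn)


def specificity(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """True negative rate: TN / (TN + FP)."""
    _, _, tn, fp = confusion_counts(y_true, y_pred)
    return tn / (tn + fp)


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """Area under the ROC curve via its rank interpretation: the probability that a
    randomly chosen positive case scores higher than a randomly chosen negative one
    (ties count as 0.5). Quadratic in the number of cases; fine for small examples."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))
```

For instance, with labels `[1, 1, 1, 0, 0, 0]` and scores `[0.9, 0.8, 0.4, 0.5, 0.2, 0.1]`, thresholding at 0.5 gives a sensitivity and a specificity of 2/3 each, while the threshold-free AUC is 8/9. Sensitivity and specificity depend on the chosen threshold; AUC summarizes performance over all thresholds.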

| Study | Topic | Reason for Applying GANs | GAN Architecture Used | Data Used | Method | Results |
|---|---|---|---|---|---|---|
| Schaufelberger et al., 2023 [29] | Craniosynostosis classification | Generation of synthetic data to train a CNN to classify craniosynostosis, given the paucity of clinical data | cDC-WGAN-GP | 75% of the clinical data were used to train the generative models, whose synthetic output was then used to train the CNN; the remaining 25% served as the CNN test set. | A CNN was trained purely on synthetic data to classify craniosynostosis. The synthetic data were produced by three different generative models: a GAN, a statistical shape model (SSM), and principal component analysis (PCA). | A classifier trained on synthetic data alone performed similarly to one trained on clinical data. |
| Kong et al., 2022 [26] | Imaging data generation for paranasal pathology (chronic sinusitis) | Synthetic data generation to improve the diagnostic performance of a DL model, given the difficulties of procuring clinical data: limited availability, the need for experienced radiologists, and privacy issues | AC-GAN | Patients diagnosed with chronic sinusitis who underwent both radiography and CT within 14 days of each other were included. The 389 paranasal radiographs of the internal data set (212 with sinusitis and 177 normal) were divided into training (80%), validation (10%), and test (20%) sets. | Experienced rhinologists labeled every conventional radiograph as "sinusitis" or "normal" based on the corresponding CT scan. A DL model, CheXNet, was trained in several ways, including with the original training data only and with GAN-generated data added. | Of the models analyzed, the GBC + OMGDA model performed best in terms of AUC, accuracy, sensitivity, specificity, F1 score, PPV, and NPV. The DL model achieved better diagnostic performance when trained on both original and GAN-generated synthetic data. |
| Andlauer et al., 2021 [27] | Postoperative prediction for temporomandibular disorders and malocclusion | Predicting the postoperative face of patients undergoing bimaxillary surgery for class II and class III malocclusion from preoperative 2D images and a 3D simulation of postoperative soft tissue, without requiring difficult-to-acquire preoperative 3D images | Cycle-GAN | Preoperative 2D images and postoperative 3D simulations derived from the preoperative facial CT scans of four patients with malocclusion | CT scans were used to simulate bimaxillary surgery, and bone and soft-tissue segmentations were performed on them. These 3D simulations, together with the preoperative 2D images, were used to train the GAN. | Cycle-GAN predicted realistic chin and nose changes on selected examples. Prediction accuracy was evaluated as the Euclidean distance between facial landmarks. Some prediction errors were found in the nose region, whereas none appeared in the chin region. According to the authors, this is probably because the nose, whose appearance changes with head pose, requires more detailed representations. Nevertheless, the method appears promising for predicting postoperative outcomes. |
| Xiong et al., 2022 [31] | Reconstruction of midfacial bone defects | Training a GAN to reconstruct midfacial defects. Existing reconstruction methods such as the mirroring technique cannot be applied to midline and bilateral defects, and training a DL model requires a large amount of data, which clinical data alone cannot always provide. | GAN | CT scans with midline (median) and unilateral defects | For GAN training, spherical, cuboid, and half-cylindrical artificial defects were manually inserted into 5 structural regions of real, normal CT scans to simulate bone defects. GAN performance was assessed with cosine similarity (an index of the similarity between two objects). The reconstructed images were compared with the real ones, and surgeons evaluated the quality of the rebuilt area. | The GAN achieved a cosine similarity of 0.97 for the reconstruction of artificial defects and 0.96 for the reconstruction of unilateral defects. |
| Chen et al., 2021 [28] | Craniomaxillofacial bone segmentation | Synthetic data generation to train a DL model to segment craniomaxillofacial CT scans, since the paucity of available clinical data hinders reliable segmentation models. The authors also investigated whether CT scans can be used to train segmentation models for MRI. | DDA-GAN | 50 CT scans as the training set, plus 50 MRI scans | MRI and CT data were used to train the GAN to perform segmentation, and MRI scans were used for validation. GAN performance was evaluated with the Dice similarity coefficient (DSC) and the average symmetric surface distance (ASSD) and compared with other image segmentation methods, including PnP-AdaNet, SIFA, and SynSeg-Net. | DDA-GAN outperformed SynSeg-Net, a Cycle-GAN-based method used to train segmentation models, because it avoids geometric distortions and improves segmentation performance. |
| Mehandru et al., 2021 [30] | Pathology detection in panoramic radiographs (root cysts) | Synthetic data generation to train a CNN to recognize root cysts in panoramic radiographs | CD-GAN | 34 panoramic radiographs with root cysts and 34 normal ones were divided into training (75%) and test (25%) sets; synthetic images generated by the GAN were added to these. | Two CNNs were compared for recognizing root cysts on panoramic radiographs: the first was trained without GAN-generated data, while the second also used synthetic images. Both CNNs were evaluated in terms of AUC. | The proposed model performed better in terms of area under the curve (AUC), sensitivity, and specificity. Comparing the receiver operating characteristic (ROC) curves, the CNN trained with synthetic images outperformed the CNN trained only on real images (95.1% vs. 89.3%). |
| Xu et al., 2022 [32] | Reduction of metal artifacts in oral and maxillofacial CT | Building a GAN-based model to reduce artifacts and improve image quality | MAR-GAN | CT scans in which metal artifacts were artificially simulated | GAN performance was evaluated with the root mean square error (RMSE) and the structural similarity index measure (SSIM). | The GAN performed well, effectively reducing the artificially inserted metal artifacts. |
| Kim et al., 2023 [33] | Image quality refinement of panoramic radiographs | Creating a GAN model that improves image quality | Pix2Pix-GAN | Panoramic radiographs, from which degraded, poor-quality images were derived to test the Pix2Pix-GAN's ability to improve image quality | The quality of the generated images was evaluated by a radiologist experienced in maxillofacial pathology. | The model performed very well on images blurred in the anterior tooth region but was less effective on images affected by both blur and noise. |
| Mohammad-Rahimi et al., 2023 [34] | Image resolution refinement of panoramic radiographs | Comparing different DL-based super-resolution (SR) models for improving the resolution of panoramic radiographs | SR-GAN | 888 dental panoramic radiographs | Five super-resolution models (SRCNN, SRGAN, U-Net, SwinIR, and LTE) were compared with each other and with conventional bicubic interpolation, using MSE, PSNR, SSIM, and MOS. | The GAN included in this study (SR-GAN) showed strong performance in improving the resolution of panoramic radiographs in terms of mean opinion score (MOS). |
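Several evaluation metrics named in the table (MSE, RMSE, PSNR, cosine similarity, DSC, and the Euclidean landmark distance) have simple closed forms. The sketch below implements their common textbook definitions on flattened pixel or voxel lists; it assumes 8-bit images for PSNR (peak value 255) and does not reproduce the exact implementations used in the reviewed studies. SSIM (which relies on local windows), ASSD, and MOS (a human rating) are omitted, and all function names are illustrative.

```python
import math
from typing import Sequence, Tuple


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared error between two equally sized, flattened images."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def rmse(a: Sequence[float], b: Sequence[float]) -> float:
    """Root mean square error."""
    return math.sqrt(mse(a, b))


def psnr(a: Sequence[float], b: Sequence[float], max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; max_value is the largest possible intensity
    (255 assumed here for 8-bit images). Identical images give infinity."""
    err = mse(a, b)
    if err == 0:
        return math.inf
    return 10 * math.log10(max_value ** 2 / err)


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two intensity vectors (1 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def dice(mask_a: Sequence[int], mask_b: Sequence[int]) -> float:
    """Dice similarity coefficient between binary masks: 2|A and B| / (|A| + |B|)."""
    inter = sum(1 for x, y in zip(mask_a, mask_b) if x and y)
    total = sum(1 for x in mask_a if x) + sum(1 for y in mask_b if y)
    return 2 * inter / total if total else 1.0  # two empty masks count as a perfect match


def mean_landmark_distance(
    pred: Sequence[Tuple[float, ...]], ref: Sequence[Tuple[float, ...]]
) -> float:
    """Mean Euclidean distance between corresponding landmark coordinates."""
    return sum(math.dist(p, r) for p, r in zip(pred, ref)) / len(pred)
```

Lower MSE, RMSE, and landmark distance indicate a closer match, whereas higher PSNR, cosine similarity, and DSC do; this is why the table reports, for example, cosine similarities close to 1 as good reconstructions.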

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Michelutti, L.; Tel, A.; Zeppieri, M.; Ius, T.; Agosti, E.; Sembronio, S.; Robiony, M. Generative Adversarial Networks (GANs) in the Field of Head and Neck Surgery: Current Evidence and Prospects for the Future—A Systematic Review. J. Clin. Med. 2024, 13, 3556. https://doi.org/10.3390/jcm13123556
