Enhancing Ovarian Tumor Diagnosis: Performance of Convolutional Neural Networks in Classifying Ovarian Masses Using Ultrasound Images

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset

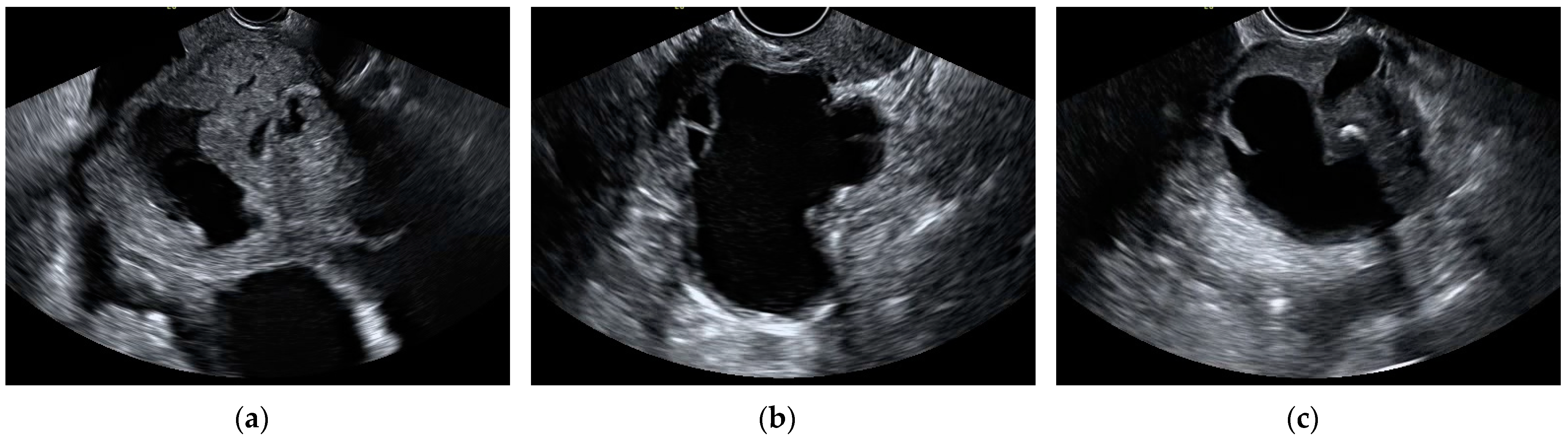

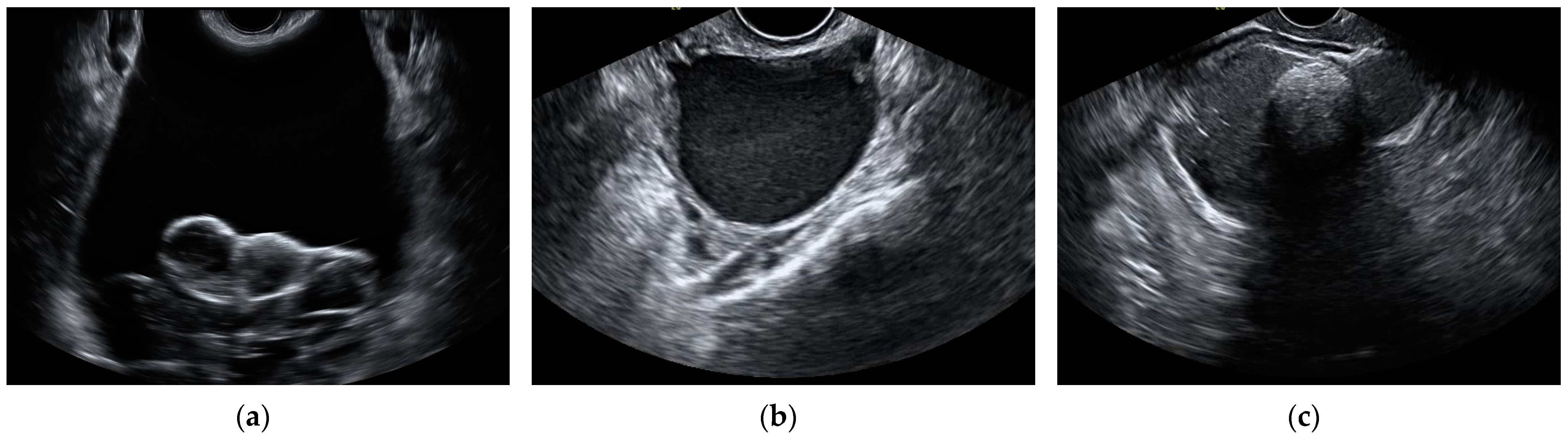

2.2. Ultrasound Assessment

2.3. Data Processing

2.4. Model Building

2.5. Training Process

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| | Histopathology | N (%) |
|---|---|---|
| Benign | Cystadenoma (serous, mucinous, and sero-mucinous) | 153 (26.2) |
| | Endometrioma | 72 (12.3) |
| | Mature teratoma | 60 (10.3) |
| | Benign tubal | 45 (7.7) |
| | Corpus luteum | 25 (4.2) |
| | Cystadenofibroma (serous and mucinous) | 18 (3.1) |
| | Thecoma | 12 (2.1) |
| | Fibroma | 2 (0.3) |
| | Other benign | 3 (0.5) |
| | Total benign | 390 (66.7) |
| Malignant | Serous carcinoma | 101 (17.3) |
| | Metastatic | 26 (4.4) |
| | Germ cell malignant tumor | 15 (2.6) |
| | Mucinous carcinoma | 15 (2.6) |
| | Endometrioid carcinoma | 12 (2.0) |
| | Clear cell carcinoma | 9 (1.5) |
| | Sex cord malignant tumor | 6 (1.0) |
| | Carcinosarcoma | 4 (0.7) |
| | Other malignant tumors | 7 (1.2) |
| | Total malignant | 195 (33.3) |

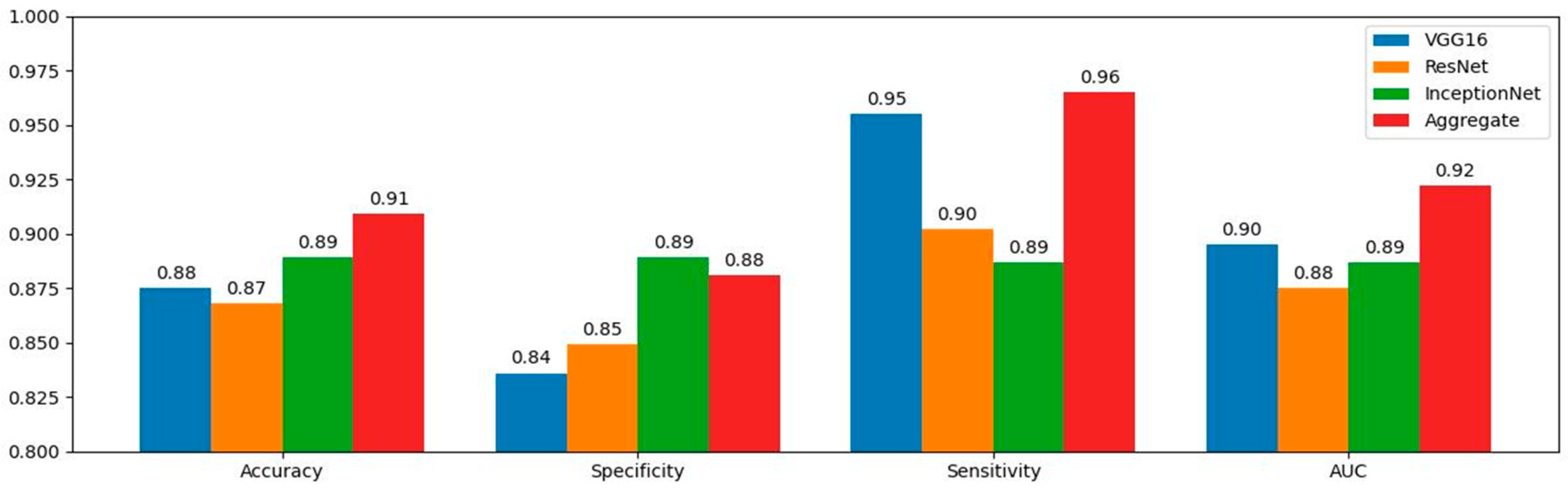

| Metrics | VGG16, % (95% CI) | ResNet50, % (95% CI) | InceptionNet, % (95% CI) | Aggregate, % (95% CI) | SA, % (95% CI) |
|---|---|---|---|---|---|
| Accuracy | 87.50 (82.3–91.9) | 86.80 (82.6–89.6) | 88.90 (83.7–93.5) | 90.90 (85.6–93.1) | 94.2 (92.3–98.3) |
| Sensitivity | 95.50 (91.1–97.3) | 90.20 (86.2–93.2) | 88.70 (83.9–91.9) | 96.50 (91.2–98.5) | 95.90 (93.9–99.1) |
| Specificity | 83.60 (78.7–85.6) | 84.90 (79.7–88.1) | 88.90 (83.7–93.5) | 88.10 (85.1–90.2) | 93.60 (88.6–95.9) |
| AUC | 89.50 (84.8–91.9) | 87.50 (80.1–90.3) | 88.70 (83.9–91.9) | 92.20 (90.8–97.1) | - |
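The accuracy, sensitivity, and specificity rows above are proportions taken from a confusion matrix, each reported with a 95% confidence interval. A minimal sketch of that arithmetic follows. The table does not say how its intervals were computed, so the Wilson score interval used here is an assumption, and the counts in the usage lines are hypothetical placeholders rather than the study's data.

```python
import math


def diagnostic_metrics(tp, fp, tn, fn):
    """Return accuracy, sensitivity, and specificity from confusion counts."""
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),  # malignant cases correctly flagged
        "specificity": tn / (tn + fp),  # benign cases correctly cleared
    }


def wilson_interval(successes, total, z=1.96):
    """Wilson score interval for a proportion; z=1.96 gives roughly 95% coverage.

    Assumption: the source table does not name its interval method.
    """
    if total <= 0:
        raise ValueError("total must be positive")
    p = successes / total
    denom = 1 + z ** 2 / total
    centre = (p + z ** 2 / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z ** 2 / (4 * total ** 2)) / denom
    return centre - half, centre + half


# Hypothetical counts, chosen only to exercise the functions.
counts = {"tp": 33, "fp": 8, "tn": 74, "fn": 2}
metrics = diagnostic_metrics(**counts)
sens_ci = wilson_interval(counts["tp"], counts["tp"] + counts["fn"])

print({name: round(value * 100, 1) for name, value in metrics.items()})
print(tuple(round(bound * 100, 1) for bound in sens_ci))
```

The Wilson interval is used in this sketch because it stays inside the 0 to 1 range even when a proportion is close to 100%, as several sensitivities in the table are; other interval methods (for example bootstrap resampling) would give somewhat different bounds.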

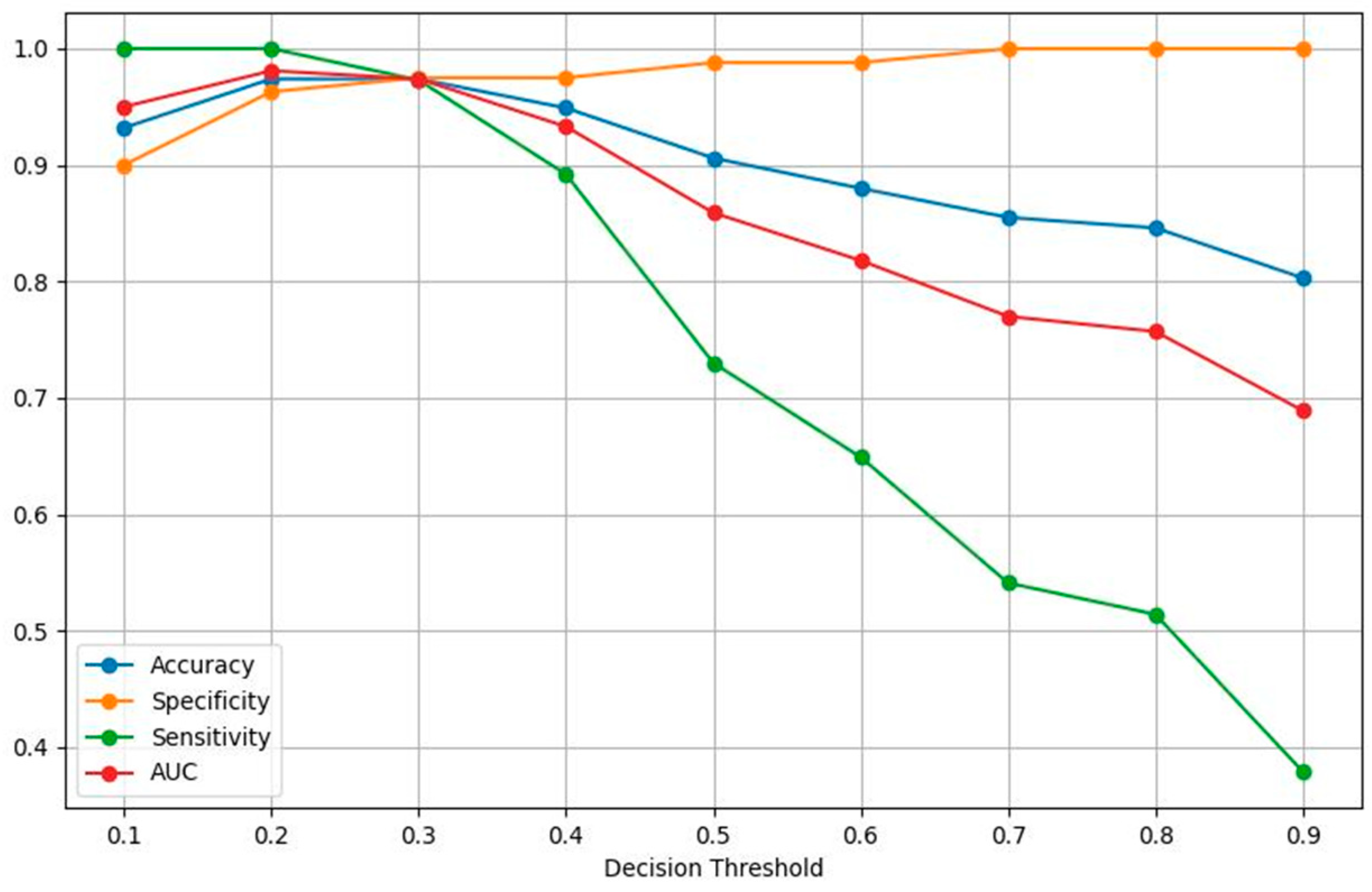

| Threshold | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 |
|---|---|---|---|---|---|---|---|---|---|
| Accuracy | 0.932 | 0.974 | 0.974 | 0.949 | 0.906 | 0.880 | 0.855 | 0.846 | 0.803 |
| Specificity | 0.900 | 0.963 | 0.975 | 0.975 | 0.988 | 0.988 | 1.000 | 1.000 | 1.000 |
| Sensitivity | 1.000 | 1.000 | 0.973 | 0.892 | 0.730 | 0.649 | 0.541 | 0.514 | 0.379 |
| AUC | 0.950 | 0.981 | 0.974 | 0.933 | 0.859 | 0.818 | 0.770 | 0.757 | 0.689 |
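The threshold table can be read as a sweep over the probability cut-off at which a case is called malignant: raising the cut-off trades sensitivity for specificity. The sketch below illustrates such a sweep under stated assumptions. The function name, the toy probabilities, and the labels are all hypothetical and are not the study's data. In the table, the AUC row equals the mean of sensitivity and specificity at each threshold (for example, (1.000 + 0.900) / 2 = 0.950 at 0.1), which is what the area under the ROC curve reduces to for a single hard cut-off; the sketch reports that quantity as `balanced`.

```python
def sweep_thresholds(probs, labels, thresholds):
    """Score binary predictions at each probability cut-off.

    probs  : predicted probability of malignancy for each case
    labels : 1 for malignant, 0 for benign (needs at least one of each)
    """
    rows = []
    for t in thresholds:
        preds = [1 if p >= t else 0 for p in probs]
        pairs = list(zip(labels, preds))
        tp = sum(1 for y, yhat in pairs if y == 1 and yhat == 1)
        tn = sum(1 for y, yhat in pairs if y == 0 and yhat == 0)
        fp = sum(1 for y, yhat in pairs if y == 0 and yhat == 1)
        fn = sum(1 for y, yhat in pairs if y == 1 and yhat == 0)
        sens = tp / (tp + fn)
        spec = tn / (tn + fp)
        rows.append({
            "threshold": t,
            "accuracy": (tp + tn) / len(labels),
            "sensitivity": sens,
            "specificity": spec,
            "balanced": (sens + spec) / 2,  # single-cut-off ROC area
        })
    return rows


# Hypothetical scores: four benign cases followed by four malignant cases.
toy_probs = [0.05, 0.15, 0.35, 0.55, 0.25, 0.45, 0.75, 0.95]
toy_labels = [0, 0, 0, 0, 1, 1, 1, 1]
thresholds = [round(0.1 * k, 1) for k in range(1, 10)]

rows = sweep_thresholds(toy_probs, toy_labels, thresholds)
for row in rows:
    print({key: round(value, 3) for key, value in row.items()})
```

A threshold-free AUC would instead be computed from the ranking of the raw probabilities across all cut-offs, so it yields one value per model rather than one per threshold.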

| VGG16 weight | ResNet weight | InceptionNet weight | Accuracy | Specificity | Sensitivity | AUC | FP | FN |
|---|---|---|---|---|---|---|---|---|
| 0.1 | 0.45 | 0.45 | 0.915 | 0.902 | 0.943 | 0.923 | 8 | 2 |
| 0.2 | 0.4 | 0.4 | 0.915 | 0.89 | 0.943 | 0.931 | 9 | 2 |
| 0.3 | 0.35 | 0.35 | 0.915 | 0.89 | 0.943 | 0.931 | 9 | 2 |
| 0.4 | 0.3 | 0.3 | 0.915 | 0.89 | 0.943 | 0.931 | 9 | 2 |
| 0.5 | 0.25 | 0.25 | 0.906 | 0.878 | 0.971 | 0.925 | 10 | 1 |
| 0.6 | 0.2 | 0.2 | 0.897 | 0.866 | 0.971 | 0.919 | 11 | 1 |
| 0.7 | 0.15 | 0.15 | 0.88 | 0.841 | 0.971 | 0.906 | 13 | 1 |
| 0.8 | 0.1 | 0.1 | 0.863 | 0.829 | 0.943 | 0.886 | 14 | 2 |
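The weight settings in this table follow one pattern: VGG16 receives a weight w between 0.1 and 0.8, and ResNet and InceptionNet share the remainder equally, (1 - w) / 2 each. The table does not state how the weights are applied, so the sketch below assumes a weighted average of the three models' malignancy probabilities (soft voting) with a 0.5 decision cut-off. The function names and the example probabilities are hypothetical.

```python
def weight_grid(vgg_weights):
    """Give VGG16 weight w and split the remaining 1 - w equally between the other two."""
    return [(w, (1 - w) / 2, (1 - w) / 2) for w in vgg_weights]


def weighted_vote(p_vgg, p_resnet, p_inception, weights, threshold=0.5):
    """Combine three malignancy probabilities by weighted averaging.

    Assumptions: soft voting and a 0.5 cut-off; neither is stated in the table.
    Returns the combined score and the predicted label (1 = malignant).
    """
    w_vgg, w_resnet, w_inception = weights
    if abs(w_vgg + w_resnet + w_inception - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    score = w_vgg * p_vgg + w_resnet * p_resnet + w_inception * p_inception
    return score, int(score >= threshold)


# The eight weight settings listed in the table.
grid = weight_grid([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8])

# Hypothetical case on which VGG16 disagrees with the other two models.
case = {"p_vgg": 0.9, "p_resnet": 0.3, "p_inception": 0.4}
for weights in grid:
    score, label = weighted_vote(weights=weights, **case)
    print([round(w, 2) for w in weights], round(score, 3), label)
```

With these example inputs the combined call moves from benign to malignant as the VGG16 weight grows, which is the kind of shift the FP and FN columns of the table track.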

| Histopathology | Aggregate Model | SA |
|---|---|---|
| Benign (Total) | 46 | 26 |
| Cystadenoma | 14 | 10 |
| Endometrioma | 14 | 5 |
| Mature teratoma | 6 | - |
| Abscess | 3 | 1 |
| Corpus Luteum | 3 | 1 |
| Hydrosalpinx | 2 | - |
| Cystadenofibroma | 2 | 5 |
| Serous Cyst | 1 | 1 |
| Rete ovarii | 1 | - |
| Brenner tumor | - | 2 |
| Thecoma | - | 2 |
| Malignant (Total) | 7 | 8 |
| Serous carcinoma | 4 | 1 |
| Endometrioid carcinoma | 2 | 2 |
| Metastatic | 1 | 2 |
| Ovarian Schwannoma | - | 1 |
| Immature teratoma | - | 2 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Giourga, M.; Petropoulos, I.; Stavros, S.; Potiris, A.; Gerede, A.; Sapantzoglou, I.; Fanaki, M.; Papamattheou, E.; Karasmani, C.; Karampitsakos, T.; Topis, S.; Zikopoulos, A.; Daskalakis, G.; Domali, E. Enhancing Ovarian Tumor Diagnosis: Performance of Convolutional Neural Networks in Classifying Ovarian Masses Using Ultrasound Images. J. Clin. Med. 2024, 13, 4123. https://doi.org/10.3390/jcm13144123