The Correlation of Computerized Scoring in Home Sleep Apnea Tests with Technician Visual Scoring for Assessing the Severity of Obstructive Sleep Apnea

Abstract

1. Introduction

2. Methods

2.1. Selection Criteria and Diagnostic Categories

2.2. Statistical Analysis

2.3. Polysmith Version 11 Scoring

3. Results

3.1. Patient Characteristics Classified by Disease Severity

3.2. Comparison of the Interpretation of Sleep Events by Software vs. Technicians

3.3. Software Diagnoses vs. Technician Diagnoses

3.4. Correlation of REI between the Technicians and Software

3.5. Sensitivity, Specificity, PPV, and NPV

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Variable | Total (n = 120) | Snoring (n = 30) | Mild (n = 30) | Moderate (n = 30) | Severe (n = 30) | p-Value |
|---|---|---|---|---|---|---|
| Age, mean (SD) | 55.7 (13.3) | 48.5 (15.3) | 52.9 (11.9) | 60.8 (10.6) | 60.6 (11.2) | 0.0002 |
| Sex, n (%) | | | | | | 0.0256 |
| Male | 66 (55.0) | 14 (46.7) | 11 (36.7) | 20 (66.7) | 21 (70.0) | |
| Female | 54 (45.0) | 16 (53.3) | 19 (63.3) | 10 (33.3) | 9 (30.0) | |
| TRT (min), mean (SD) | 440.2 (95.4) | 455.8 (99.3) | 462.0 (74.0) | 430.5 (116.5) | 412.5 (82.4) | 0.1570 |
| Mean oxygen saturation (%), mean (SD) | 93.7 (2.0) | 95.2 (1.7) | 94.1 (1.7) | 93.2 (2.1) | 92.5 (1.7) | <0.0001 |
| Nadir SpO2 (%), mean (SD) | 81.2 (8.3) | 87.5 (6.7) | 81.8 (4.5) | 80.8 (7.7) | 74.6 (8.4) | <0.0001 |
| t89 (min), mean (SD) | 56.5 (83.2) | 37.1 (99.8) | 65.0 (79.0) | 61.0 (99.3) | 63.0 (42.8) | <0.0001 |
| t89 (%), mean (SD) | 13.4 (17.4) | 7.1 (17.2) | 14.8 (16.0) | 16.2 (22.7) | 15.4 (10.9) | <0.0001 |
| Average heart rate (bpm), mean (SD) | 65.1 (9.9) | 63.1 (9.4) | 66.2 (10.7) | 64.8 (9.8) | 66.5 (9.7) | 0.5255 |
| Scoring criterion (desaturation threshold), n (%) | | | | | | 0.1901 |
| 3% | 36 (30.0) | 6 (20.0) | 7 (23.3) | 13 (43.3) | 10 (33.3) | |
| 4% | 84 (70.0) | 24 (80.0) | 23 (76.7) | 17 (56.7) | 20 (66.7) | |

| Variable | Total (n = 120) | Snoring (n = 30) | Mild (n = 30) | Moderate (n = 30) | Severe (n = 30) | p-Value |
|---|---|---|---|---|---|---|
| REI (events/h), technician, median (IQR) | 14.7 (25.2) | 2.1 (1.9) | 7.6 (5.1) | 20.1 (7.5) | 44.0 (18.0) | <0.0001 |
| REI (events/h), Polysmith Version 11, median (IQR), n = 119 | 12.2 (24.5) | 2.8 (2.3) | 7.0 (4.2) | 21.2 (8.7) | 42.6 (16.7) | <0.0001 |
| Apnea events (count), technician, mean (SD) | 63.4 (111.3) | 5.0 (6.6) | 16.4 (13.3) | 51.9 (38.4) | 180.5 (170.2) | <0.0001 |
| Apnea events (count), software, mean (SD) | 83.5 (96.0) | 11.9 (13.8) | 32.2 (20.9) | 87.5 (53.9) | 202.2 (108.1) | <0.0001 |
| Hypopnea events (count), technician, mean (SD) | 70.9 (62.4) | 9.7 (7.9) | 47.2 (27.2) | 93.1 (45.6) | 133.5 (64.0) | <0.0001 |
| Hypopnea events (count), software, mean (SD) | 41.5 (42.9) | 6.7 (7.1) | 22.7 (13.0) | 52.2 (36.2) | 84.2 (49.3) | <0.0001 |
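Because the REI counts apneas and hypopneas together, two scorers can disagree on event type yet agree on the index. The table above shows this pattern on average: the software scores more apneas and fewer hypopneas than the technicians, while the group median REIs stay close. The sketch below assumes the conventional definition of the REI as (apneas + hypopneas) per hour of monitoring time; the time base actually used by Polysmith Version 11 and by the technicians is not stated in this section, and the function name and event counts are hypothetical.

```python
def respiratory_event_index(apneas: int, hypopneas: int, monitoring_minutes: float) -> float:
    """Return the REI: scored apneas plus hypopneas per hour of monitoring time (assumed definition)."""
    if monitoring_minutes <= 0:
        raise ValueError("monitoring time must be positive")
    hours = monitoring_minutes / 60.0
    return (apneas + hypopneas) / hours


# Hypothetical night: two scorers split the same 150 events differently between
# apneas and hypopneas over one 450-minute (7.5 h) recording.
technician_rei = respiratory_event_index(apneas=40, hypopneas=110, monitoring_minutes=450)
software_rei = respiratory_event_index(apneas=70, hypopneas=80, monitoring_minutes=450)
print(technician_rei, software_rei)  # 20.0 20.0
```

Reclassifying an event from hypopnea to apnea leaves the sum, and therefore the REI, unchanged; only events that one scorer counts and the other omits move the index.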

| Variable | Total (n = 120) | Snoring (n = 30) | Mild (n = 30) | Moderate (n = 30) | Severe (n = 30) | p-Value |
|---|---|---|---|---|---|---|
| REI difference (technician − software), n = 119, median (IQR) | 0.5 (4.1) | −0.2 (1.4) | 0.75 (4.0) | 1.56 (5.6) | 1.60 (4.9) | 0.0318 |
| Hypopnea count difference (technician − software), median (IQR) | 19.0 (45.0) | 4.0 (7.0) | 23.0 (24.0) | 43.0 (40.0) | 48.0 (60.0) | <0.0001 |
| Apnea count difference (technician − software), median (IQR) | −16.5 (37.5) | −4.0 (8.0) | −15.0 (14.0) | −39.5 (39.0) | −38.0 (93.0) | <0.0001 |
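The median of per-patient differences is not the same quantity as the difference between two group medians, and the two can even differ in sign. In the moderate group, for example, the technician median REI is 20.1 and the software median is 21.2, yet the median paired REI difference is 1.56. The sketch below illustrates this with three hypothetical patients and Python's standard `statistics` module; it assumes differences were taken per patient as technician minus software, which the section does not state explicitly.

```python
import statistics

# Hypothetical paired REI values (events/h) for three patients; not study data.
technician = [18.0, 20.0, 25.0]
software = [17.0, 22.0, 24.0]

# Difference of the group medians: 20.0 - 22.0 = -2.0
difference_of_medians = statistics.median(technician) - statistics.median(software)

# Median of the per-patient differences (technician - software): median(1.0, -2.0, 1.0) = 1.0
paired = [t - s for t, s in zip(technician, software)]
median_of_differences = statistics.median(paired)

# IQR of the paired differences. statistics.quantiles defaults to the "exclusive"
# method, which may not match the interpolation used by the authors' software.
q1, _, q3 = statistics.quantiles(paired, n=4)
iqr = q3 - q1

print(difference_of_medians, median_of_differences, iqr)
```

Summarizing paired differences directly is the appropriate choice for agreement between two scorers, because it preserves which patient each pair of scores came from.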

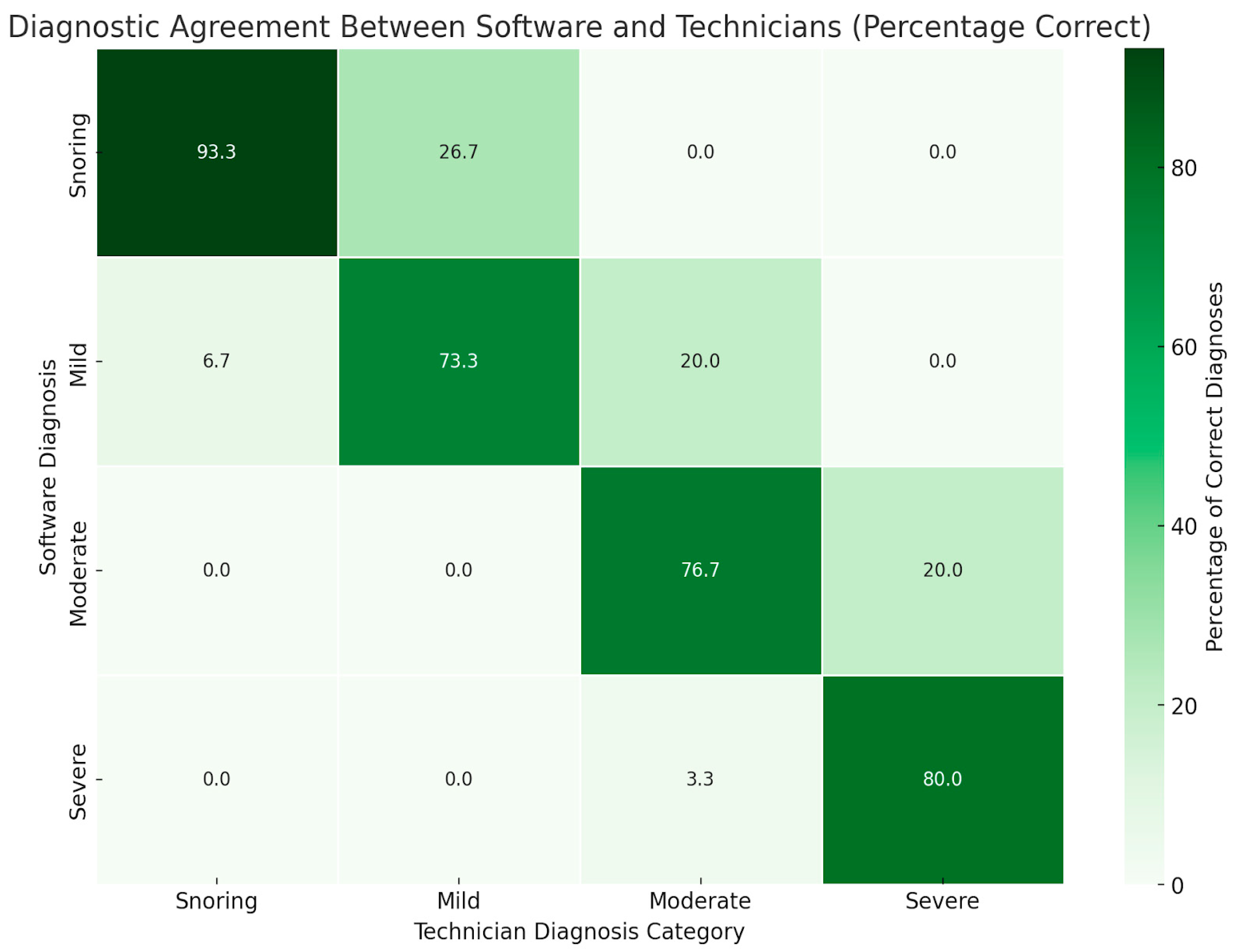

| Software Diagnosis, n (%) | Technician: Snoring | Technician: Mild | Technician: Moderate | Technician: Severe | Total |
|---|---|---|---|---|---|
| Snoring | 28 (93.3) | 8 (26.7) | 0 (0.0) | 0 (0.0) | 36 (30.0) |
| Mild | 2 (6.7) | 22 (73.3) | 6 (20.0) | 0 (0.0) | 30 (25.0) |
| Moderate | 0 (0.0) | 0 (0.0) | 23 (76.7) | 6 (20.0) | 29 (24.2) |
| Severe | 0 (0.0) | 0 (0.0) | 1 (3.3) | 24 (80.0) | 25 (20.8) |
| Total | 30 (25.0) | 30 (25.0) | 30 (25.0) | 30 (25.0) | 120 (100.0) |
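A cross-tabulation like the one above can be produced by mapping each paired REI to a severity category and counting the (software, technician) pairs. The sketch below assumes the conventional thresholds of 5, 15, and 30 events/h (below 5 labeled snoring); these cutoffs are not quoted in this section, although the group medians in the REI table fall within them. The function names and REI values are hypothetical.

```python
from collections import Counter

# Assumed REI cutoffs (events/h): <5 snoring, 5 to <15 mild, 15 to <30 moderate,
# >=30 severe. Conventional thresholds, not taken from this study's methods.
CATEGORIES = ("Snoring", "Mild", "Moderate", "Severe")


def severity(rei: float) -> str:
    """Map an REI value to a diagnostic category under the assumed cutoffs."""
    if rei < 5:
        return "Snoring"
    if rei < 15:
        return "Mild"
    if rei < 30:
        return "Moderate"
    return "Severe"


def cross_tabulate(software_rei, technician_rei):
    """Count patients by (software category, technician category)."""
    if len(software_rei) != len(technician_rei):
        raise ValueError("REI lists must be paired patient by patient")
    counts = Counter(
        (severity(s), severity(t)) for s, t in zip(software_rei, technician_rei)
    )
    # Rows: software diagnosis; columns: technician diagnosis, as in the table above.
    return {row: {col: counts[(row, col)] for col in CATEGORIES} for row in CATEGORIES}


def exact_agreement(table) -> float:
    """Fraction of patients placed in the same category by both scorers."""
    total = sum(sum(row.values()) for row in table.values())
    return sum(table[c][c] for c in CATEGORIES) / total


# Hypothetical paired REI values, not study data.
table = cross_tabulate([3.1, 6.0, 16.0, 33.0], [5.5, 6.2, 14.0, 35.0])
print(table["Snoring"]["Mild"], exact_agreement(table))  # 1 0.5
```

Applied to the counts in the table above, the diagonal holds 28 + 22 + 23 + 24 = 97 of 120 studies (about 80.8% exact agreement), and every discordant study falls in a category adjacent to the technician's.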

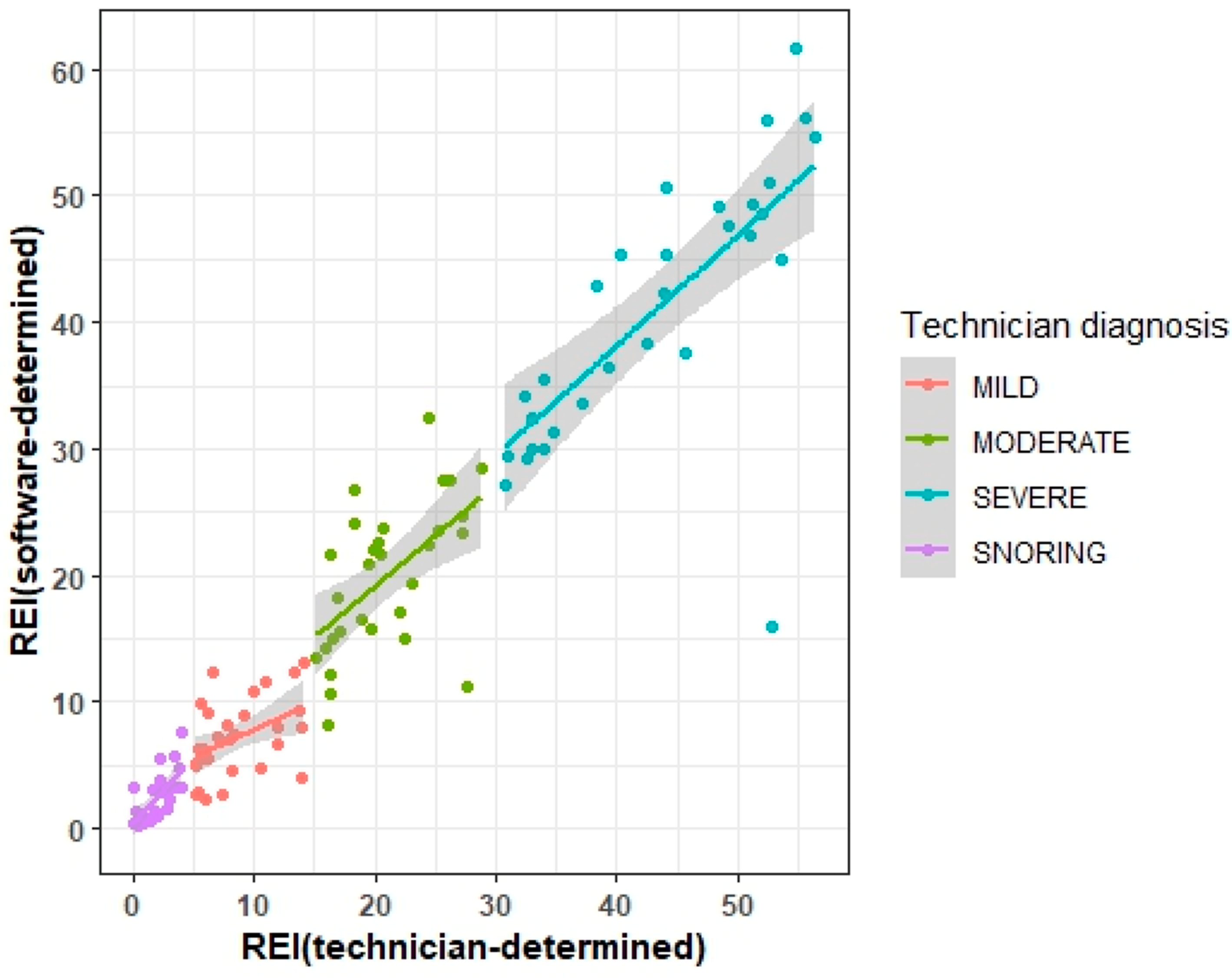

| Group | r (p-Value) | Wilcoxon Signed-Rank Test, p-Value |
|---|---|---|
| All (n = 119) | 0.96 (<0.0001) | 0.0134 |
| Mild (n = 30) | 0.45 (0.0129) | 0.0197 |
| Moderate (n = 30) | 0.56 (0.0012) | 0.2350 |
| Severe (n = 30) | 0.71 (<0.0001) | 0.0694 |
| Snoring (n = 29) | 0.69 (<0.0001) | 0.1798 |

| Metric (by OSA diagnostic category) | Snoring | Mild | Moderate | Severe | Moderate/Severe Combined |
|---|---|---|---|---|---|
| Sensitivity | 93% | 73% | 77% | 80% | 90% |
| Specificity | 91% | 91% | 93% | 99% | 100% |
| PPV | 78% | 73% | 79% | 96% | 100% |
| NPV | 98% | 91% | 92% | 94% | 91% |
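Every percentage in the table above can be recovered from the software-versus-technician counts by scoring each category one-versus-rest, with the technician diagnosis as the reference standard. That the recomputed values match each cell suggests this is how the table was built, although the section does not state the procedure. The sketch below is a minimal standard-library reconstruction; `diagnostic_metrics` and `as_percent` are hypothetical helper names.

```python
# Counts from the software-vs-technician table above.
# Rows: software diagnosis; columns: technician diagnosis (assumed reference standard).
CATEGORIES = ["Snoring", "Mild", "Moderate", "Severe"]
MATRIX = [
    [28, 8, 0, 0],
    [2, 22, 6, 0],
    [0, 0, 23, 6],
    [0, 0, 1, 24],
]


def diagnostic_metrics(matrix, positive):
    """One-vs-rest metrics, treating the category indices in `positive` as the positive class."""
    tp = fp = fn = tn = 0
    for row, counts in enumerate(matrix):       # row = software call
        for col, count in enumerate(counts):    # col = technician call
            software_pos = row in positive
            technician_pos = col in positive
            if software_pos and technician_pos:
                tp += count
            elif software_pos:
                fp += count
            elif technician_pos:
                fn += count
            else:
                tn += count
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }


def as_percent(metrics):
    """Round each proportion to a whole percentage, as reported in the table."""
    return {name: round(100 * value) for name, value in metrics.items()}


for index, name in enumerate(CATEGORIES):
    print(name, as_percent(diagnostic_metrics(MATRIX, {index})))
print("Moderate/Severe", as_percent(diagnostic_metrics(MATRIX, {2, 3})))
```

Passing `{2, 3}` pools moderate and severe into one positive class, which is why the combined column reaches 100% specificity and PPV: no patient the technician classified as snoring or mild was placed in the moderate or severe range by the software.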

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hawco, C.; Bonthu, A.; Pasek, T.; Sarna, K.; Smolley, L.; Hadeh, A. The Correlation of Computerized Scoring in Home Sleep Apnea Tests with Technician Visual Scoring for Assessing the Severity of Obstructive Sleep Apnea. J. Clin. Med. 2024, 13, 4204. https://doi.org/10.3390/jcm13144204
