Navigating the Metaverse: A New Virtual Tool with Promising Real Benefits for Breast Cancer Patients

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

3. Metaverse and Its Influence on BC Operations

3.1. MeTAI

3.2. MIoT

3.3. Digital Twins Technology

3.4. Privacy, Security, and Ethical Considerations

4. Metaverse for Training

5. Online Support Groups: Patient Coalition in the Metaverse

5.1. Telehealth

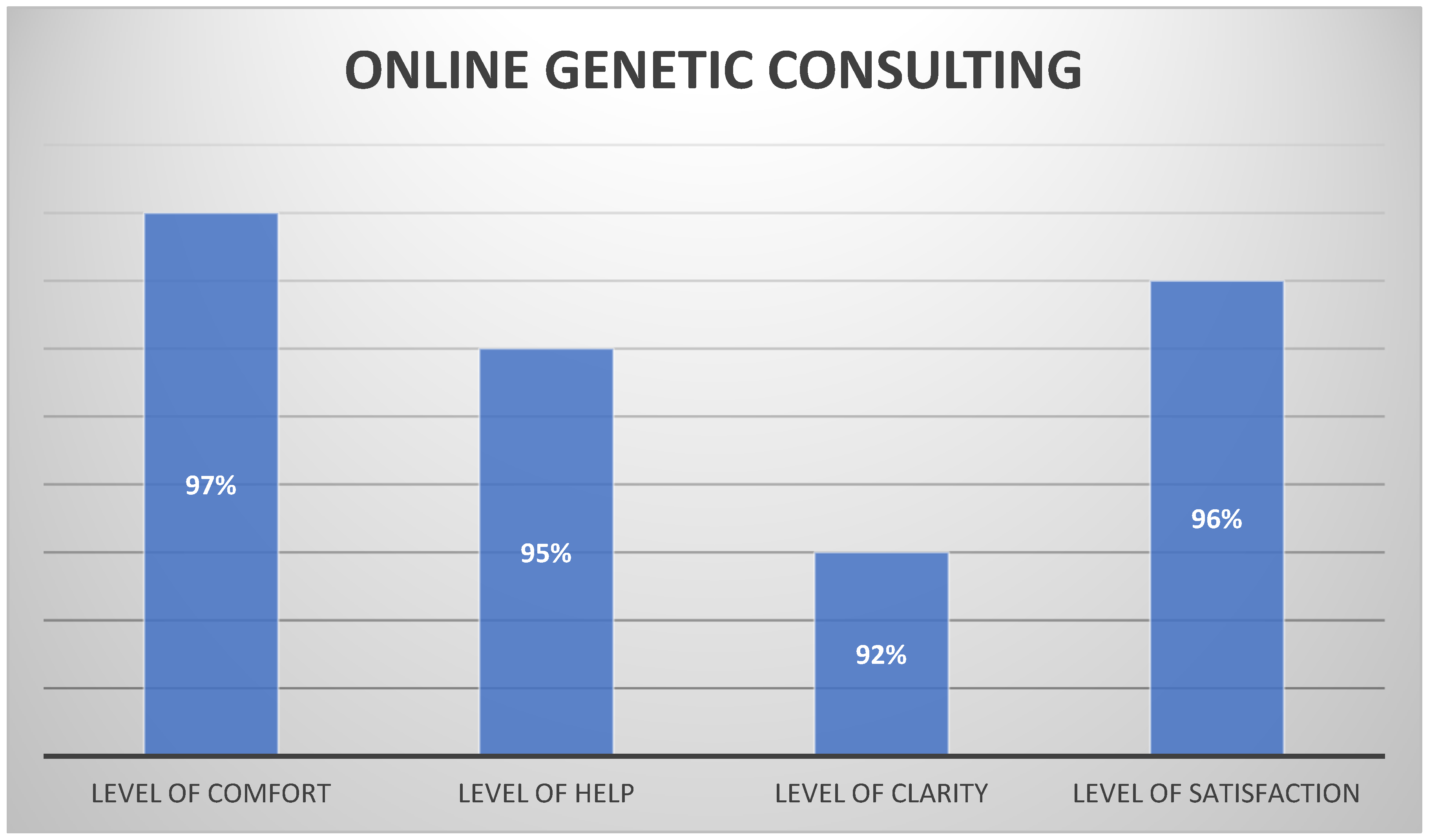

5.2. Online Genetic Consulting

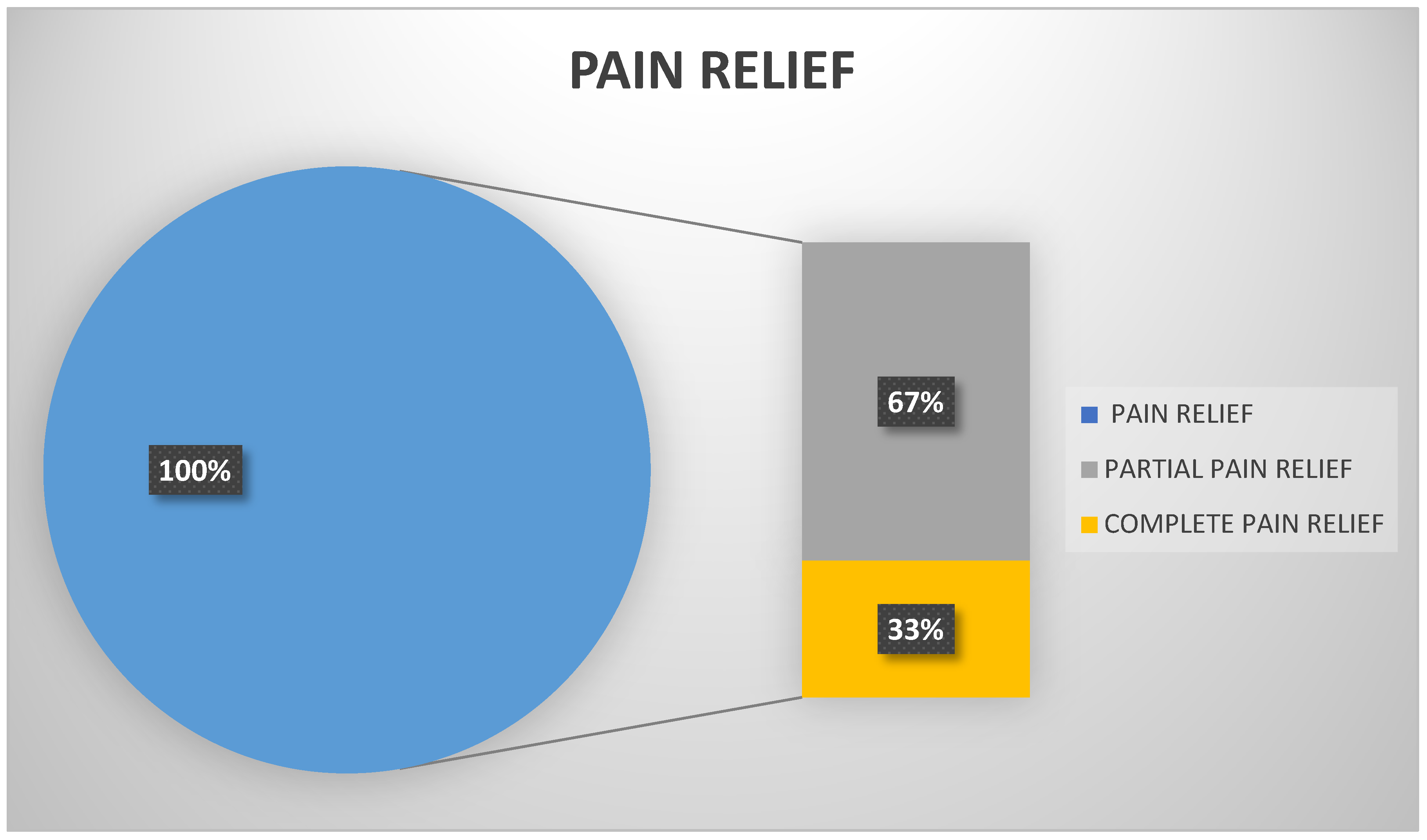

5.3. Online Patient Therapies

5.4. Financial Awareness Support

6. Dr. Meta

7. Future Research

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Daneshfar, F.; Jamshidi, M. An Octonion-Based Nonlinear Echo State Network for Speech Emotion Recognition in Metaverse. Neural Netw. 2023, 163, 108–121. [Google Scholar] [CrossRef]

- Moztarzadeh, O.; Jamshidi, M.; Sargolzaei, S.; Jamshidi, A.; Baghalipour, N.; Malekzadeh Moghani, M.; Hauer, L. Metaverse and Healthcare: Machine Learning-Enabled Digital Twins of Cancer. Bioengineering 2023, 10, 455. [Google Scholar] [CrossRef] [PubMed]

- Koo, H. Training in Lung Cancer Surgery through the Metaverse, Including Extended Reality, in the Smart Operating Room of Seoul National University Bundang Hospital, Korea. J. Educ. Eval. Health Prof. 2021, 18, 33. [Google Scholar] [CrossRef] [PubMed]

- Venkatesh, K.P.; Raza, M.M.; Kvedar, J.C. Health Digital Twins as Tools for Precision Medicine: Considerations for Computation, Implementation, and Regulation. NPJ Digit. Med. 2022, 5, 150. [Google Scholar] [CrossRef]

- Ghaednia, H.; Fourman, M.S.; Lans, A.; Detels, K.; Dijkstra, H.; Lloyd, S.; Sweeney, A.; Oosterhoff, J.H.F.; Schwab, J.H. Augmented and Virtual Reality in Spine Surgery, Current Applications and Future Potentials. Spine J. 2021, 21, 1617–1625. [Google Scholar] [CrossRef]

- Lungu, A.J.; Swinkels, W.; Claesen, L.; Tu, P.; Egger, J.; Chen, X. A Review on the Applications of Virtual Reality, Augmented Reality and Mixed Reality in Surgical Simulation: An Extension to Different Kinds of Surgery. Expert. Rev. Med. Devices 2021, 18, 47–62. [Google Scholar] [CrossRef]

- Yang, Y.; Sun, Y. Smart Health: Intelligent Healthcare Systems in the Metaverse, Artificial Intelligence, and Data Science Era. J. Organ. End User Comput. 2022, 34, 1–14. [Google Scholar] [CrossRef]

- Wang, G.; Badal, A.; Jia, X.; Maltz, J.S.; Mueller, K.; Myers, K.J.; Niu, C.; Vannier, M.; Yan, P.; Yu, Z.; et al. Development of Metaverse for Intelligent Healthcare. Nat. Mach. Intell. 2022, 2022, 4. [Google Scholar] [CrossRef] [PubMed]

- Wang, G.; Ye, J.C.; De Man, B. Deep Learning for Tomographic Image Reconstruction. Nat. Mach. Intell. 2020, 2, 737–748. [Google Scholar] [CrossRef]

- Yoo, B.; Kim, A.; Moon, H.S.; So, M.K.; Jeong, T.D.; Lee, K.E.; Moon, B.I.; Huh, J. Evaluation of Group Genetic Counseling Sessions via a Metaverse-Based Application. Ann. Lab. Med. 2024, 44, 82. [Google Scholar] [CrossRef]

- Yang, D.; Zhou, J.; Chen, R.; Song, Y.; Song, Z.; Zhang, X.; Wang, Q.; Wang, K.; Zhou, C.; Sun, J.; et al. Expert Consensus on the Metaverse in Medicine. Clin. eHealth 2022, 5, 1–9. [Google Scholar] [CrossRef]

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.W.M.; van Ginneken, B.; Sánchez, C.I. A Survey on Deep Learning in Medical Image Analysis. Med. Image Anal. 2017, 42, 60–88. [Google Scholar] [CrossRef] [PubMed]

- Ruiz, N.; Li, Y.; Jampani, V.; Pritch, Y.; Rubinstein, M.; Aberman, K. DreamBooth: Fine Tuning Text-to-Image Diffusion Models for Subject-Driven Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 22500–22510. [Google Scholar] [CrossRef]

- Fuller, A.; Fan, Z.; Day, C.; Barlow, C. Digital Twin: Enabling Technologies, Challenges and Open Research. IEEE Access 2020, 8, 108952–108971. [Google Scholar] [CrossRef]

- Badano, A.; Graff, C.G.; Badal, A.; Sharma, D.; Zeng, R.; Samuelson, F.W.; Glick, S.J.; Myers, K.J. Evaluation of Digital Breast Tomosynthesis as Replacement of Full-Field Digital Mammography Using an In Silico Imaging Trial. JAMA Netw. Open 2018, 1, e185474. [Google Scholar] [CrossRef] [PubMed]

- Center for Devices and Radiological Health. Assessing the Credibility of Computational Modeling and Simulation in Medical Device Submissions—Guidance for Industry and Food and Drug Administration Staff; U.S. Food and Drug Administration: Silver Spring, MD, USA, 2021. Available online: https://www.fda.gov/media/87534/download (accessed on 2 April 2024).

- Cè, M.; Caloro, E.; Pellegrino, M.E.; Basile, M.; Sorce, A.; Fazzini, D.; Oliva, G.; Cellina, M. Artificial Intelligence in Breast Cancer Imaging: Risk Stratification, Lesion Detection and Classification, Treatment Planning and Prognosis—A Narrative Review. Explor. Target. Antitumor Ther. 2022, 3, 795. [Google Scholar] [CrossRef] [PubMed]

- Schaffter, T.; Buist, D.S.M.; Lee, C.I.; Nikulin, Y.; Ribli, D.; Guan, Y.; Lotter, W.; Jie, Z.; Du, H.; Wang, S.; et al. Evaluation of Combined Artificial Intelligence and Radiologist Assessment to Interpret Screening Mammograms. JAMA Netw. Open 2020, 3, E200265. [Google Scholar] [CrossRef]

- Lång, K.; Hofvind, S.; Rodríguez-Ruiz, A.; Andersson, I. Can Artificial Intelligence Reduce the Interval Cancer Rate in Mammography Screening? Eur. Radiol. 2021, 31, 5940–5947. [Google Scholar] [CrossRef] [PubMed]

- Larsen, M.; Aglen, C.F.; Lee, C.I.; Hoff, S.R.; Lund-Hanssen, H.; Lång, K.; Nygård, J.F.; Ursin, G.; Hofvind, S. Artificial Intelligence Evaluation of 122969 Mammography Examinations from a Population-Based Screening Program. Radiology 2022, 303, 502–511. [Google Scholar] [CrossRef] [PubMed]

- Kim, H.E.; Kim, H.H.; Han, B.K.; Kim, K.H.; Han, K.; Nam, H.; Lee, E.H.; Kim, E.K. Changes in Cancer Detection and False-Positive Recall in Mammography Using Artificial Intelligence: A Retrospective, Multireader Study. Lancet Digit. Health 2020, 2, e138–e148. [Google Scholar] [CrossRef]

- Kim, H.J.; Kim, H.H.; Kim, K.H.; Choi, W.J.; Chae, E.Y.; Shin, H.J.; Cha, J.H.; Shim, W.H. Mammographically Occult Breast Cancers Detected with AI-Based Diagnosis Supporting Software: Clinical and Histopathologic Characteristics. Insights Imaging 2022, 13, 57. [Google Scholar] [CrossRef]

- Lång, K.; Josefsson, V.; Larsson, A.M.; Larsson, S.; Högberg, C.; Sartor, H.; Hofvind, S.; Andersson, I.; Rosso, A. Artificial Intelligence-Supported Screen Reading versus Standard Double Reading in the Mammography Screening with Artificial Intelligence Trial (MASAI): A Clinical Safety Analysis of a Randomised, Controlled, Non-Inferiority, Single-Blinded, Screening Accuracy Study. Lancet Oncol. 2023, 24, 936–944. [Google Scholar] [CrossRef] [PubMed]

- Bai, J.; Posner, R.; Wang, T.; Yang, C.; Nabavi, S. Applying Deep Learning in Digital Breast Tomosynthesis for Automatic Breast Cancer Detection: A Review. Med. Image Anal. 2021, 71, 102049. [Google Scholar] [CrossRef] [PubMed]

- Geras, K.J.; Mann, R.M.; Moy, L. Artificial Intelligence for Mammography and Digital Breast Tomosynthesis: Current Concepts and Future Perspectives. Radiology 2019, 293, 246–259. [Google Scholar] [CrossRef] [PubMed]

- van Winkel, S.L.; Rodríguez-Ruiz, A.; Appelman, L.; Gubern-Mérida, A.; Karssemeijer, N.; Teuwen, J.; Wanders, A.J.T.; Sechopoulos, I.; Mann, R.M. Impact of Artificial Intelligence Support on Accuracy and Reading Time in Breast Tomosynthesis Image Interpretation: A Multi-Reader Multi-Case Study. Eur. Radiol. 2021, 31, 8682–8691. [Google Scholar] [CrossRef]

- Conant, E.F.; Toledano, A.Y.; Periaswamy, S.; Fotin, S.V.; Go, J.; Boatsman, J.E.; Hoffmeister, J.W. Improving Accuracy and Efficiency with Concurrent Use of Artificial Intelligence for Digital Breast Tomosynthesis. Radiol. Artif. Intell. 2019, 1, e180096. [Google Scholar] [CrossRef] [PubMed]

- Buda, M.; Saha, A.; Walsh, R.; Ghate, S.; Li, N.; Święcicki, A.; Lo, J.Y.; Mazurowski, M.A. A Data Set and Deep Learning Algorithm for the Detection of Masses and Architectural Distortions in Digital Breast Tomosynthesis Images. JAMA Netw. Open 2021, 4, E2119100. [Google Scholar] [CrossRef] [PubMed]

- Jiang, Y.; Edwards, A.V.; Newstead, G.M. Artificial Intelligence Applied to Breast MRI for Improved Diagnosis. Radiology 2021, 298, 38–46. [Google Scholar] [CrossRef] [PubMed]

- Korreman, S.; Eriksen, J.G.; Grau, C. The Changing Role of Radiation Oncology Professionals in a World of AI—Just Jobs Lost—Or a Solution to the under-Provision of Radiotherapy? Clin. Transl. Radiat. Oncol. 2021, 26, 104. [Google Scholar] [CrossRef] [PubMed]

- Ruprecht, N.A.; Singhal, S.; Schaefer, K.; Panda, O.; Sens, D.; Singhal, S.K. A Review: Multi-Omics Approach to Studying the Association between Ionizing Radiation Effects on Biological Aging. Biology 2024, 13, 98. [Google Scholar] [CrossRef]

- Rezayi, S.; Niakan Kalhori, S.R.; Saeedi, S. Effectiveness of Artificial Intelligence for Personalized Medicine in Neoplasms: A Systematic Review. Biomed. Res. Int. 2022, 2022, 7842566. [Google Scholar] [CrossRef]

- Kashyap, D.; Kaur, H. Cell-Free MiRNAs as Non-Invasive Biomarkers in Breast Cancer: Significance in Early Diagnosis and Metastasis Prediction. Life Sci. 2020, 246, 117417. [Google Scholar] [CrossRef]

- Cheng, K.; Wang, J.; Liu, J.; Zhang, X.; Shen, Y.; Su, H.; Cheng, K.; Wang, J.; Liu, J.; Zhang, X.; et al. Public Health Implications of Computer-Aided Diagnosis and Treatment Technologies in Breast Cancer Care. AIMS Public. Health 2023, 10, 867–895. [Google Scholar] [CrossRef]

- Hong, J.C.; Rahimy, E.; Gross, C.P.; Shafman, T.; Hu, X.; Yu, J.B.; Ross, R.; Finkelstein, S.E.; Dosoretz, A.; Park, H.S.; et al. Radiation Dose and Cardiac Risk in Breast Cancer Treatment: An Analysis of Modern Radiation Therapy Including Community Settings. Pract. Radiat. Oncol. 2018, 8, e79–e86. [Google Scholar] [CrossRef]

- Sager, O.; Dincoglan, F.; Uysal, B.; Demiral, S.; Gamsiz, H.; Elcim, Y.; Gundem, E.; Dirican, B.; Beyzadeoglu, M. Evaluation of Adaptive Radiotherapy (ART) by Use of Replanning the Tumor Bed Boost with Repeated Computed Tomography (CT) Simulation after Whole Breast Irradiation (WBI) for Breast Cancer Patients Having Clinically Evident Seroma. Jpn J. Radiol. 2018, 36, 401–406. [Google Scholar] [CrossRef]

- Jung, J.W.; Lee, C.; Mosher, E.G.; Mille, M.M.; Yeom, Y.S.; Jones, E.C.; Choi, M.; Lee, C. Automatic Segmentation of Cardiac Structures for Breast Cancer Radiotherapy. Phys. Imaging Radiat. Oncol. 2019, 12, 44–48. [Google Scholar] [CrossRef]

- Enderling, H.; Alfonso, J.C.L.; Moros, E.; Caudell, J.J.; Harrison, L.B. Integrating Mathematical Modeling into the Roadmap for Personalized Adaptive Radiation Therapy. Trends Cancer 2019, 5, 467–474. [Google Scholar] [CrossRef]

- Duanmu, H.; Huang, P.B.; Brahmavar, S.; Lin, S.; Ren, T.; Kong, J.; Wang, F.; Duong, T.Q. Prediction of Pathological Complete Response to Neoadjuvant Chemotherapy in Breast Cancer Using Deep Learning with Integrative Imaging, Molecular and Demographic Data. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2020; Volume 12262, pp. 242–252. [Google Scholar] [CrossRef]

- Byra, M.; Dobruch-Sobczak, K.; Klimonda, Z.; Piotrzkowska-Wroblewska, H.; Litniewski, J. Early Prediction of Response to Neoadjuvant Chemotherapy in Breast Cancer Sonography Using Siamese Convolutional Neural Networks. IEEE J. Biomed. Health Inform. 2021, 25, 797–805. [Google Scholar] [CrossRef]

- Yang, L.; Shami, A. On Hyperparameter Optimization of Machine Learning Algorithms: Theory and Practice. Neurocomputing 2020, 415, 295–316. [Google Scholar] [CrossRef]

- Xi, N.; Chen, J.; Gama, F.; Riar, M.; Hamari, J. The Challenges of Entering the Metaverse: An Experiment on the Effect of Extended Reality on Workload. Inf. Syst. Front. 2022, 25, 659–680. [Google Scholar] [CrossRef] [PubMed]

- Chen, R.; Rodrigues Armijo, P.; Krause, C.; Siu, K.C.; Oleynikov, D. A Comprehensive Review of Robotic Surgery Curriculum and Training for Residents, Fellows, and Postgraduate Surgical Education. Surg. Endosc. 2020, 34, 361–367. [Google Scholar] [CrossRef] [PubMed]

- Cleveland Clinic Creates E-Anatomy with Virtual Reality. Available online: https://newsroom.clevelandclinic.org/2018/08/23/cleveland-clinic-creates-e-anatomy-with-virtual-reality (accessed on 18 May 2024).

- Duan, J.; Yu, S.; Tan, H.L.; Zhu, H.; Tan, C. A Survey of Embodied AI: From Simulators to Research Tasks. IEEE Trans. Emerg. Top. Comput. Intell. 2021, 6, 230–244. [Google Scholar] [CrossRef]

- Sharma, S.; Rawal, R.; Shah, D. Addressing the Challenges of AI-Based Telemedicine: Best Practices and Lessons Learned. J. Educ. Health Promot. 2023, 12, 338. [Google Scholar] [CrossRef] [PubMed]

- Krishnan, G.; Singh, S.; Pathania, M.; Gosavi, S.; Abhishek, S.; Parchani, A.; Dhar, M. Artificial Intelligence in Clinical Medicine: Catalyzing a Sustainable Global Healthcare Paradigm. Front. Artif. Intell. 2023, 6, 1227091. [Google Scholar] [CrossRef] [PubMed]

- Skalidis, I.; Muller, O.; Fournier, S. The Metaverse in Cardiovascular Medicine: Applications, Challenges, and the Role of Non-Fungible Tokens. Can. J. Cardiol. 2022, 38, 1467–1468. [Google Scholar] [CrossRef]

- Ogundokun, R.O.; Misra, S.; Douglas, M.; Damaševičius, R.; Maskeliūnas, R. Medical Internet-of-Things Based Breast Cancer Diagnosis Using Hyperparameter-Optimized Neural Networks. Future Internet 2022, 14, 153. [Google Scholar] [CrossRef]

- Thong, B.K.S.; Loh, G.X.Y.; Lim, J.J.; Lee, C.J.L.; Ting, S.N.; Li, H.P.; Li, Q.Y. Telehealth Technology Application in Enhancing Continuous Positive Airway Pressure Adherence in Obstructive Sleep Apnea Patients: A Review of Current Evidence. Front. Med. 2022, 9, 877765. [Google Scholar] [CrossRef]

- Al-Turjman, F.; Alturjman, S. Context-Sensitive Access in Industrial Internet of Things (IIoT) Healthcare Applications. IEEE Trans. Ind. Inform. 2018, 14, 2736–2744. [Google Scholar] [CrossRef]

- Federal Register: Strategy for American Innovation. Available online: https://www.federalregister.gov/documents/2014/07/29/2014-17761/strategy-for-american-innovation (accessed on 19 May 2024).

- Chan, Y.F.Y.; Wang, P.; Rogers, L.; Tignor, N.; Zweig, M.; Hershman, S.G.; Genes, N.; Scott, E.R.; Krock, E.; Badgeley, M.; et al. The Asthma Mobile Health Study, a Large-Scale Clinical Observational Study Using ResearchKit. Nat. Biotechnol. 2017, 35, 354–362. [Google Scholar] [CrossRef]

- Center for Aging Services Technologies (CAST). Available online: https://leadingage.org/topic/technology-cast/ (accessed on 19 May 2024).

- Anliker, U.; Ward, J.A.; Lukowicz, P.; Tröster, G.; Dolveck, F.; Baer, M.; Keita, F.; Schenker, E.B.; Catarsi, F.; Coluccini, L.; et al. AMON: A Wearable Multiparameter Medical Monitoring and Alert System. IEEE Trans. Inf. Technol. Biomed. 2004, 8, 415–427. [Google Scholar] [CrossRef]

- Siontis, K.C.; Noseworthy, P.A.; Attia, Z.I.; Friedman, P.A. Artificial Intelligence-Enhanced Electrocardiography in Cardiovascular Disease Management. Nat. Rev. Cardiol. 2021, 18, 465–478. [Google Scholar] [CrossRef]

- Global Top Page|Toshiba. Available online: https://www.global.toshiba/ww/top.html (accessed on 19 May 2024).

- Saroğlu, H.E.; Shayea, I.; Saoud, B.; Azmi, M.H.; El-Saleh, A.A.; Saad, S.A.; Alnakhli, M. Machine Learning, IoT and 5G Technologies for Breast Cancer Studies: A Review. Alex. Eng. J. 2024, 89, 210–223. [Google Scholar] [CrossRef]

- Memon, M.H.; Li, J.P.; Haq, A.U.; Memon, M.H.; Zhou, W.; Lacuesta, R. Breast Cancer Detection in the IOT Health Environment Using Modified Recursive Feature Selection. Wirel. Commun. Mob. Comput. 2019, 2019, 5176705. [Google Scholar] [CrossRef]

- Salvi, S.; Kadam, A. Breast Cancer Detection Using Deep Learning and IoT Technologies. J. Phys. Conf. Ser. 2021, 1831, 012030. [Google Scholar] [CrossRef]

- Gopal, V.N.; Al-Turjman, F.; Kumar, R.; Anand, L.; Rajesh, M. Feature Selection and Classification in Breast Cancer Prediction Using IoT and Machine Learning. Measurement 2021, 178, 109442. [Google Scholar] [CrossRef]

- Lamba, M.; Munja, G.; Gigras, Y. Supervising Healthcare Schemes Using Machine Learning in Breast Cancer and Internet of Things (SHSMLIoT). Internet Healthc. Things Mach. Learn. Secur. Priv. 2022, 241–263. [Google Scholar] [CrossRef]

- Jahnke, P.; Limberg, F.R.P.; Gerbl, A.; Pardo, G.L.A.; Braun, V.P.B.; Hamm, B.; Scheel, M. Radiopaque Three-Dimensional Printing: A Method to Create Realistic CT Phantoms. Radiology 2016, 282, 569–575. [Google Scholar] [CrossRef]

- Faisal, S.; Ivo, J.; Patel, T. A Review of Features and Characteristics of Smart Medication Adherence Products. CPJ 2021, 154, 312. [Google Scholar] [CrossRef]

- Shajari, S.; Kuruvinashetti, K.; Komeili, A.; Sundararaj, U. The Emergence of AI-Based Wearable Sensors for Digital Health Technology: A Review. Sensors 2023, 23, 9498. [Google Scholar] [CrossRef]

- Bian, Y.; Xiang, Y.; Tong, B.; Feng, B.; Weng, X. Artificial Intelligence–Assisted System in Postoperative Follow-up of Orthopedic Patients: Exploratory Quantitative and Qualitative Study. J. Med. Internet Res. 2020, 22, e16896. [Google Scholar] [CrossRef] [PubMed]

- Seth, I.; Lim, B.; Joseph, K.; Gracias, D.; Xie, Y.; Ross, R.J.; Rozen, W.M. Use of Artificial Intelligence in Breast Surgery: A Narrative Review. Gland. Surg. 2024, 13, 395–411. [Google Scholar] [CrossRef] [PubMed]

- Wu, S.-C.; Chuang, C.-W.; Liao, W.-C.; Li, C.-F.; Shih, H.-H. Using Virtual Reality in a Rehabilitation Program for Patients With Breast Cancer: Phenomenological Study. JMIR Serious Games 2024, 12, e44025. [Google Scholar] [CrossRef]

- Garrett, B.; Taverner, T.; Gromala, D.; Tao, G.; Cordingley, E.; Sun, C. Virtual Reality Clinical Research: Promises and Challenges. JMIR Serious Games 2018, 6, e10839. [Google Scholar] [CrossRef]

- Yang, Z.; Rafiei, M.H.; Hall, A.; Thomas, C.; Midtlien, H.A.; Hasselbach, A.; Adeli, H.; Gauthier, L.V. A Novel Methodology for Extracting and Evaluating Therapeutic Movements in Game-Based Motion Capture Rehabilitation Systems. J. Med. Syst. 2018, 42, 255. [Google Scholar] [CrossRef]

- Jimenez, Y.A.; Cumming, S.; Wang, W.; Stuart, K.; Thwaites, D.I.; Lewis, S.J. Patient Education Using Virtual Reality Increases Knowledge and Positive Experience for Breast Cancer Patients Undergoing Radiation Therapy. Support. Care Cancer 2018, 26, 2879–2888. [Google Scholar] [CrossRef]

- Feyzioğlu, Ö.; Dinçer, S.; Akan, A.; Algun, Z.C. Is Xbox 360 Kinect-Based Virtual Reality Training as Effective as Standard Physiotherapy in Patients Undergoing Breast Cancer Surgery? Support. Care Cancer 2020, 28, 4295–4303. [Google Scholar] [CrossRef]

- Sligo, F.X.; Jameson, A.M. The Knowledge—Behavior Gap in Use of Health Information. J. Am. Soc. Inf. Sci. 2000, 51, 858–869. [Google Scholar] [CrossRef]

- Pazzaglia, C.; Imbimbo, I.; Tranchita, E.; Minganti, C.; Ricciardi, D.; Lo Monaco, R.; Parisi, A.; Padua, L. Comparison of Virtual Reality Rehabilitation and Conventional Rehabilitation in Parkinson’s Disease: A Randomised Controlled Trial. Physiotherapy 2020, 106, 36–42. [Google Scholar] [CrossRef]

- Zasadzka, E.; Pieczyńska, A.; Trzmiel, T.; Hojan, K. Virtual Reality as a Promising Tool Supporting Oncological Treatment in Breast Cancer. Int. J. Environ. Res. Public. Health 2021, 18, 8768. [Google Scholar] [CrossRef]

- Bu, X.; Ng, P.H.F.; Xu, W.; Cheng, Q.; Chen, P.Q.; Cheng, A.S.K.; Liu, X. The Effectiveness of Virtual Reality-Based Interventions in Rehabilitation Management of Breast Cancer Survivors: Systematic Review and Meta-Analysis. JMIR Serious Games 2022, 10, e31395. [Google Scholar] [CrossRef] [PubMed]

- Panigutti, C.; Andrea Beretta, I.; Fadda, D.; Fosca Giannotti, I.; Normale Superiore, S.; Dino Pedreschi, I.; Beretta, A.; Fadda, D.; Rinzivillo, S.; Giannotti, F.; et al. Article 21. Rinzivillo. 2023. Co-Design of Human-Centered, Explainable AI for Clinical Decision Support. ACM Trans. Interact. Intell. Syst. 2023, 13, 1–35. [Google Scholar] [CrossRef]

- Hassan, M.A.; Malik, A.S.; Fofi, D.; Karasfi, B.; Meriaudeau, F. Towards Health Monitoring Using Remote Heart Rate Measurement Using Digital Camera: A Feasibility Study. Measurement 2020, 149, 106804. [Google Scholar] [CrossRef]

- Konopik, J.; Wolf, L.; Schöffski, O. Digital Twins for Breast Cancer Treatment—An Empirical Study on Stakeholders’ Perspectives on Potentials and Challenges. Health Technol. 2023, 13, 1003–1010. [Google Scholar] [CrossRef]

- Chang, H.C.; Gitau, A.M.; Kothapalli, S.; Welch, D.R.; Sardiu, M.E.; McCoy, M.D. Understanding the Need for Digital Twins’ Data in Patient Advocacy and Forecasting Oncology. Front. Artif. Intell. 2023, 6, 1260361. [Google Scholar] [CrossRef]

- Liu, Z.; Meyendorf, N.; Mrad, N. The Role of Data Fusion in Predictive Maintenance Using Digital Twin. AIP Conf. Proc. 2018, 1949, 1–7. [Google Scholar] [CrossRef]

- Madhavan, S.; Beckman, R.A.; McCoy, M.D.; Pishvaian, M.J.; Brody, J.R.; Macklin, P. Envisioning the Future of Precision Oncology Trials. Nat. Cancer 2021, 2, 9–11. [Google Scholar] [CrossRef]

- Stahlberg, E.A.; Abdel-Rahman, M.; Aguilar, B.; Asadpoure, A.; Beckman, R.A.; Borkon, L.L.; Bryan, J.N.; Cebulla, C.M.; Chang, Y.H.; Chatterjee, A.; et al. Exploring Approaches for Predictive Cancer Patient Digital Twins: Opportunities for Collaboration and Innovation. Front. Digit. Health 2022, 4, 1007784. [Google Scholar] [CrossRef]

- Alazab, M.; Khan, L.U.; Koppu, S.; Ramu, S.P.; Iyapparaja, M.; Boobalan, P.; Baker, T.; Maddikunta, P.K.R.; Gadekallu, T.R.; Aljuhani, A. Digital Twins for Healthcare 4.0—Recent Advances, Architecture, and Open Challenges. IEEE Consum. Electron. Mag. 2023, 12, 29–37. [Google Scholar] [CrossRef]

- De Benedictis, A.; Mazzocca, N.; Somma, A.; Strigaro, C. Digital Twins in Healthcare: An Architectural Proposal and Its Application in a Social Distancing Case Study. IEEE J. Biomed. Health Inform. 2023, 27, 5143–5154. [Google Scholar] [CrossRef]

- Sahal, R.; Alsamhi, S.H.; Brown, K.N. Personal Digital Twin: A Close Look into the Present and a Step towards the Future of Personalised Healthcare Industry. Sensors 2022, 22, 5918. [Google Scholar] [CrossRef] [PubMed]

- Kaul, R.; Ossai, C.; Forkan, A.R.M.; Jayaraman, P.P.; Zelcer, J.; Vaughan, S.; Wickramasinghe, N. The Role of AI for Developing Digital Twins in Healthcare: The Case of Cancer Care. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2023, 13, e1480. [Google Scholar] [CrossRef]

- Haleem, A.; Javaid, M.; Pratap Singh, R.; Suman, R. Exploring the Revolution in Healthcare Systems through the Applications of Digital Twin Technology. Biomed. Technol. 2023, 4, 28–38. [Google Scholar] [CrossRef]

- Hernandez-Boussard, T.; Macklin, P.; Greenspan, E.J.; Gryshuk, A.L.; Stahlberg, E.; Syeda-Mahmood, T.; Shmulevich, I. Digital Twins for Predictive Oncology Will Be a Paradigm Shift for Precision Cancer Care. Nat. Med. 2021, 27, 2065–2066. [Google Scholar] [CrossRef]

- Wickramasinghe, N.; Jayaraman, P.P.; Forkan, A.R.M.; Ulapane, N.; Kaul, R.; Vaughan, S.; Zelcer, J. A Vision for Leveraging the Concept of Digital Twins to Support the Provision of Personalized Cancer Care. IEEE Internet Comput. 2022, 26, 17–24. [Google Scholar] [CrossRef]

- Huang, P.H.; Kim, K.H.; Schermer, M. Ethical Issues of Digital Twins for Personalized Health Care Service: Preliminary Mapping Study. J. Med. Internet Res. 2022, 24, e33081. [Google Scholar] [CrossRef]

- Hassani, H.; Huang, X.; MacFeely, S. Impactful Digital Twin in the Healthcare Revolution. BDCC 2022, 6, 83. [Google Scholar] [CrossRef]

- Adnan, M.; Kalra, S.; Cresswell, J.C.; Taylor, G.W.; Tizhoosh, H.R. Federated Learning and Differential Privacy for Medical Image Analysis. Sci. Rep. 2022, 12, 1953. [Google Scholar] [CrossRef]

- Kaissis, G.A.; Makowski, M.R.; Rückert, D.; Braren, R.F. Secure, Privacy-Preserving and Federated Machine Learning in Medical Imaging. Nat. Mach. Intell. 2020, 2, 305–311. [Google Scholar] [CrossRef]

- Dayan, I.; Roth, H.R.; Zhong, A.; Harouni, A.; Gentili, A.; Abidin, A.Z.; Liu, A.; Costa, A.B.; Wood, B.J.; Tsai, C.S.; et al. Federated Learning for Predicting Clinical Outcomes in Patients with COVID-19. Nat. Med. 2021, 27, 1735–1743. [Google Scholar] [CrossRef]

- Regulation (EU) 2016/679; General Data Protection Regulation (GDPR). EUR-Lex: Luxembourg, 2016. Available online: https://eur-lex.europa.eu/eli/reg/2016/679/oj (accessed on 2 April 2024).

- ISO/IEC 27001:2013; Information Technology—Security Techniques—Information Security Management Systems—Requirements. International Organization for Standardization: Geneva, Switzerland, 2013. Available online: https://www.iso.org/standard/54534.html (accessed on 2 April 2024).

- Nadini, M.; Alessandretti, L.; Di Giacinto, F.; Martino, M.; Aiello, L.M.; Baronchelli, A. Mapping the NFT Revolution: Market Trends, Trade Networks, and Visual Features. Sci. Rep. 2021, 11, 20902. [Google Scholar] [CrossRef] [PubMed]

- Ghafur, S.; Grass, E.; Jennings, N.R.; Darzi, A. The Challenges of Cybersecurity in Health Care: The UK National Health Service as a Case Study. Lancet Digit. Health 2019, 1, e10–e12. [Google Scholar] [CrossRef] [PubMed]

- The Metaverse’s Dark Side: Here Come Harassment and Assaults—The New York Times. Available online: https://www.nytimes.com/2021/12/30/technology/metaverse-harassment-assaults.html (accessed on 21 May 2024).

- Wu, W.; Hu, D.; Cong, W.; Shan, H.; Wang, S.; Niu, C.; Yan, P.; Yu, H.; Vardhanabhuti, V.; Wang, G. Stabilizing Deep Tomographic Reconstruction: Part A. Hybrid Framework and Experimental Results. Patterns 2022, 3, 100474. [Google Scholar] [CrossRef]

- Wu, W.; Hu, D.; Cong, W.; Shan, H.; Wang, S.; Niu, C.; Yan, P.; Yu, H.; Vardhanabhuti, V.; Wang, G. Stabilizing Deep Tomographic Reconstruction: Part B. Convergence Analysis and Adversarial Attacks. Patterns 2022, 3, 100475. [Google Scholar] [CrossRef]

- Zhang, J.; Chao, H.; Kalra, M.K.; Wang, G.; Yan, P. Overlooked Trustworthiness of Explainability in Medical AI. medRxiv 2021. [Google Scholar] [CrossRef]

- Burr, C.; Leslie, D. Ethical Assurance: A Practical Approach to the Responsible Design, Development, and Deployment of Data-Driven Technologies. AI Ethics 2023, 3, 73–98. [Google Scholar] [CrossRef]

- Burrell, J. How the Machine ‘Thinks’: Understanding Opacity in Machine Learning Algorithms. Big Data Soc. 2016, 3. [Google Scholar] [CrossRef]

- Zhuk, A. Ethical Implications of AI in the Metaverse. AI Ethics 2024, 1–12. [Google Scholar] [CrossRef]

- Sap, M.; Card, D.; Gabriel, S.; Choi, Y.; Smith, N.A. The Risk of Racial Bias in Hate Speech Detection. In Proceedings of the ACL 2019—57th Annual Meeting of the Association for Computational Linguistics, Proceedings of the Conference, Florence, Italy, 28 July–2 August 2019; pp. 1668–1678. [Google Scholar] [CrossRef]

- Ahmet, E. The Impact of Artificial Intelligence on Social Problems and Solutions: An Analysis on the Context of Digital Divide and Exploitation. Yeni Medya 2022, 2022, 247–264. [Google Scholar]

- Mittelstadt, B.D.; Allo, P.; Taddeo, M.; Wachter, S.; Floridi, L. The Ethics of Algorithms: Mapping the Debate. Big Data Soc. 2016, 3. [Google Scholar] [CrossRef]

- Lee, N.; Resnick, P.; Barton, G. Algorithmic Bias Detection and Mitigation: Best Practices and Policies to Reduce Consumer Harms; Brookings Institution: Washington, DC, USA, 2019. [Google Scholar]

- Selbst, A.D.; Boyd, D.; Friedler, S.A.; Venkatasubramanian, S.; Vertesi, J. Fairness and Abstraction in Sociotechnical Systems. In Proceedings of the FAT* 2019—Proceedings of the 2019 Conference on Fairness, Accountability, and Transparency, Atlanta, GA, USA, 29–31 January 2019; pp. 59–68. [Google Scholar] [CrossRef]

- Felzmann, H.; Fosch-Villaronga, E.; Lutz, C.; Tamò-Larrieux, A. Towards Transparency by Design for Artificial Intelligence. Sci. Eng. Ethics 2020, 26, 3333–3361. [Google Scholar] [CrossRef]

- Brundage, M.; Avin, S.; Wang, J.; Belfield, H.; Krueger, G.; Hadfield, G.; Khlaaf, H.; Yang, J.; Toner, H.; Fong, R.; et al. Toward Trustworthy AI Development: Mechanisms for Supporting Verifiable Claims. arXiv 2020. [Google Scholar] [CrossRef]

- Ferrer, X.; Van Nuenen, T.; Such, J.M.; Cote, M.; Criado, N. Bias and Discrimination in AI: A Cross-Disciplinary Perspective. IEEE Technol. Soc. Mag. 2021, 40, 72–80. [Google Scholar] [CrossRef]

- Benjamins, R.; Rubio Viñuela, Y.; Alonso, C. Social and Ethical Challenges of the Metaverse: Opening the Debate. AI Ethics 2023, 3, 689–697. [Google Scholar] [CrossRef]

- Schiff, D.; Rakova, B.; Ayesh, A.; Fanti, A.; Lennon, M. Principles to Practices for Responsible AI: Closing the Gap. arXiv 2020, arXiv:2006.04707. [Google Scholar]

- Schmitt, L. Mapping Global AI Governance: A Nascent Regime in a Fragmented Landscape. AI Ethics 2022, 2, 303–314. [Google Scholar] [CrossRef]

- Bang, J.; Kim, J. Metaverse Ethics for Healthcare Using AI Technology Challenges and Risks; Springer: Cham, Switzerland, 2023; pp. 367–378. [Google Scholar]

- Habbal, A.; Ali, M.K.; Abuzaraida, M.A. Artificial Intelligence Trust, Risk and Security Management (AI TRiSM): Frameworks, Applications, Challenges and Future Research Directions. Expert. Syst. Appl. 2024, 240, 122442. [Google Scholar] [CrossRef]

- Díaz-Rodríguez, N.; Del Ser, J.; Coeckelbergh, M.; López de Prado, M.; Herrera-Viedma, E.; Herrera, F. Connecting the Dots in Trustworthy Artificial Intelligence: From AI Principles, Ethics, and Key Requirements to Responsible AI Systems and Regulation. Inform. Fusion. 2023, 99, 101896. [Google Scholar] [CrossRef]

- Li, Y. Performance Evaluation of Machine Learning Methods for Breast Cancer Prediction. Appl. Comput. Math. 2018, 7, 212. [Google Scholar] [CrossRef]

- Champalimaud Foundation: First Breast Cancer Surgery in the Metaverse—Der Große Neustart—Podcast. Available online: https://podtail.com/podcast/der-grosse-neustart/champalimaud-foundation-first-breast-cancer-surger/ (accessed on 2 April 2024).

- Antaki, F.; Doucet, C.; Milad, D.; Giguère, C.É.; Ozell, B.; Hammamji, K. Democratizing Vitreoretinal Surgery Training with a Portable and Affordable Virtual Reality Simulator in the Metaverse. Transl. Vis. Sci. Technol. 2024, 13, 5. [Google Scholar] [CrossRef] [PubMed]

- Lin, J.C.; Yu, Z.; Scott, I.U.; Greenberg, P.B. Virtual Reality Training for Cataract Surgery Operating Performance in Ophthalmology Trainees. Cochrane Database Syst. Rev. 2021, 12, CD014953. [Google Scholar] [CrossRef]

- Jamshidi, M.; Lalbakhsh, A.; Talla, J.; Peroutka, Z.; Hadjilooei, F.; Lalbakhsh, P.; Jamshidi, M.; La Spada, L.; Mirmozafari, M.; Dehghani, M.; et al. Artificial Intelligence and COVID-19: Deep Learning Approaches for Diagnosis and Treatment. IEEE Access 2020, 8, 109581–109595. [Google Scholar] [CrossRef]

- Hamet, P.; Tremblay, J. Artificial Intelligence in Medicine. Metabolism 2017, 69S, S36–S40. [Google Scholar] [CrossRef] [PubMed]

- Jin, M.L.; Brown, M.M.; Patwa, D.; Nirmalan, A.; Edwards, P.A. Telemedicine, Telementoring, and Telesurgery for Surgical Practices. Curr. Probl. Surg. 2021, 58, 100986. [Google Scholar] [CrossRef] [PubMed]

- Erlandson, R.F. Universal and Accessible Design for Products, Services, and Processes; CRC Press: Boca Raton, FL, USA, 2007; pp. 1–259. [Google Scholar] [CrossRef]

- Cipresso, P.; Giglioli, I.A.C.; Raya, M.A.; Riva, G. The Past, Present, and Future of Virtual and Augmented Reality Research: A Network and Cluster Analysis of the Literature. Front Psychol 2018, 9, 2086. [Google Scholar] [CrossRef] [PubMed]

- Ciccone, B.A.; Bailey, S.K.T.; Lewis, J.E. The Next Generation of Virtual Reality: Recommendations for Accessible and Ergonomic Design. Ergon. Des. 2023, 31, 24–27. Available online: https://journals.sagepub.com/doi/abs/10.1177/10648046211002578 (accessed on 22 May 2024). [CrossRef]

- Birch, J. Worldwide Prevalence of Red-Green Color Deficiency. J. Opt. Soc. Am. A 2012, 29, 313. [Google Scholar] [CrossRef] [PubMed]

- Stevens, G.; Flaxman, S.; Brunskill, E.; Mascarenhas, M.; Mathers, C.D.; Finucane, M. Global and Regional Hearing Impairment Prevalence: An Analysis of 42 Studies in 29 Countries. Eur. J. Public Health 2013, 23, 146–152. [Google Scholar] [CrossRef] [PubMed]

- Fulvio, J.; Rokers, B. Sensitivity to Sensory Cues Predicts Motion Sickness in Virtual Reality. J. Vis. 2018, 18, 1066. [Google Scholar] [CrossRef]

- Dodgson, N.A. Variation and Extrema of Human Interpupillary Distance. Stereosc. Disp. Virtual Real. Syst. XI 2004, 5291, 36–46. [Google Scholar] [CrossRef]

- Chihara, T.; Seo, A. Evaluation of Physical Workload Affected by Mass and Center of Mass of Head-Mounted Display. Appl. Ergon. 2018, 68, 204–212. [Google Scholar] [CrossRef]

- Yan, Y.; Chen, K.; Xie, Y.; Song, Y.; Liu, Y. The Effects of Weight on Comfort of Virtual Reality Devices. Adv. Intell. Syst. Comput. 2019, 777, 239–248. [Google Scholar] [CrossRef]

- Hynes, J.; MacMillan, A.; Fernandez, S.; Jacob, K.; Carter, S.; Predham, S.; Etchegary, H.; Dawson, L. Group plus “Mini” Individual Pre-Test Genetic Counselling Sessions for Hereditary Cancer Shorten Provider Time and Improve Patient Satisfaction. Hered. Cancer Clin. Pract. 2020, 18, 3. [Google Scholar] [CrossRef] [PubMed]

- Benusiglio, P.R.; Di Maria, M.; Dorling, L.; Jouinot, A.; Poli, A.; Villebasse, S.; Le Mentec, M.; Claret, B.; Boinon, D.; Caron, O. Hereditary Breast and Ovarian Cancer: Successful Systematic Implementation of a Group Approach to Genetic Counselling. Fam. Cancer 2017, 16, 51–56. [Google Scholar] [CrossRef]

- Hoffman, H.G.; Patterson, D.R.; Carrougher, G.J.; Sharar, S.R. Effectiveness of Virtual Reality-Based Pain Control with Multiple Treatments. Clin. J. Pain 2001, 17, 229–235. [Google Scholar] [CrossRef]

- Epidemic: Responding to America’s Prescription Drug Abuse Crisis | Office of Justice Programs. Available online: https://www.ojp.gov/ncjrs/virtual-library/abstracts/epidemic-responding-americas-prescription-drug-abuse-crisis (accessed on 19 May 2024).

- Van Twillert, B.; Bremer, M.; Faber, A.W. Computer-Generated Virtual Reality to Control Pain and Anxiety in Pediatric and Adult Burn Patients during Wound Dressing Changes. J. Burn Care Res. 2007, 28, 694–702. [Google Scholar] [CrossRef] [PubMed]

- Gershon, J.; Zimand, E.; Pickering, M.; Rothbaum, B.O.; Hodges, L. A Pilot and Feasibility Study of Virtual Reality as a Distraction for Children with Cancer. J. Am. Acad. Child Adolesc. Psychiatry 2004, 43, 1243–1249. [Google Scholar] [CrossRef]

- Jones, T.; Moore, T.; Choo, J. The Impact of Virtual Reality on Chronic Pain. PLoS ONE 2016, 11, e0167523. [Google Scholar] [CrossRef]

- Furlan, A.D.; Sandoval, J.A.; Mailis-Gagnon, A.; Tunks, E. Opioids for Chronic Noncancer Pain: A Meta-Analysis of Effectiveness and Side Effects. CMAJ 2006, 174, 1589–1594. [Google Scholar] [CrossRef]

- Chirico, A.; Lucidi, F.; De Laurentiis, M.; Milanese, C.; Napoli, A.; Giordano, A. Virtual Reality in Health System: Beyond Entertainment. A Mini-Review on the Efficacy of VR During Cancer Treatment. J. Cell. Physiol. 2016, 231, 275–287. [Google Scholar] [CrossRef]

- Yazdipour, A.B.; Saeedi, S.; Bostan, H.; Masoorian, H.; Sajjadi, H.; Ghazisaeedi, M. Opportunities and Challenges of Virtual Reality-Based Interventions for Patients with Breast Cancer: A Systematic Review. BMC Med. Inform. Decis. Mak. 2023, 23, 17. [Google Scholar] [CrossRef] [PubMed]

- Caserman, P.; Garcia-Agundez, A.; Gámez Zerban, A.; Göbel, S. Cybersickness in Current-Generation Virtual Reality Head-Mounted Displays: Systematic Review and Outlook. Virtual Real. 2021, 25, 1153–1170. [Google Scholar] [CrossRef]

- Weech, S.; Kenny, S.; Barnett-Cowan, M. Presence and Cybersickness in Virtual Reality Are Negatively Related: A Review. Front. Psychol. 2019, 10. [Google Scholar] [CrossRef] [PubMed]

- Chirico, A.; Maiorano, P.; Indovina, P.; Milanese, C.; Giordano, G.G.; Alivernini, F.; Iodice, G.; Gallo, L.; De Pietro, G.; Lucidi, F.; et al. Virtual Reality and Music Therapy as Distraction Interventions to Alleviate Anxiety and Improve Mood States in Breast Cancer Patients during Chemotherapy. J. Cell. Physiol. 2020, 235, 5353–5362. [Google Scholar] [CrossRef] [PubMed]

- Ames, S.L.; Wolffsohn, J.S.; McBrien, N.A. The Development of a Symptom Questionnaire for Assessing Virtual Reality Viewing Using a Head-Mounted Display. Optom. Vis. Sci. 2005, 82, 168–176. [Google Scholar] [CrossRef] [PubMed]

- Menekli, T.; Yaprak, B.; Doğan, R. The Effect of Virtual Reality Distraction Intervention on Pain, Anxiety, and Vital Signs of Oncology Patients Undergoing Port Catheter Implantation: A Randomized Controlled Study. Pain Manag. Nurs. 2022, 23, 585–590. [Google Scholar] [CrossRef] [PubMed]

- Zeng, Y.; Zhang, J.E.; Cheng, A.S.K.; Cheng, H.; Wefel, J.S. Meta-Analysis of the Efficacy of Virtual Reality–Based Interventions in Cancer-Related Symptom Management. Integr. Cancer Ther. 2019, 18. [Google Scholar] [CrossRef] [PubMed]

- Bani Mohammad, E.; Ahmad, M. Virtual Reality as a Distraction Technique for Pain and Anxiety among Patients with Breast Cancer: A Randomized Control Trial. Palliat. Support. Care 2019, 17, 29–34. [Google Scholar] [CrossRef] [PubMed]

- Cieślik, B.; Mazurek, J.; Rutkowski, S.; Kiper, P.; Turolla, A.; Szczepańska-Gieracha, J. Virtual Reality in Psychiatric Disorders: A Systematic Review of Reviews. Complement. Ther. Med. 2020, 52, 102480. [Google Scholar] [CrossRef] [PubMed]

- Tian, N.; Lopes, P.; Boulic, R. A Review of Cybersickness in Head-Mounted Displays: Raising Attention to Individual Susceptibility. Virtual Real. 2022, 26, 1409–1441. [Google Scholar] [CrossRef]

- Ventura, S.; Baños, R.M.; Botella, C. Virtual and Augmented Reality: New Frontiers for Clinical Psychology. In State of the Art Virtual Reality and Augmented Reality Knowhow; BoD–Books on Demand: Norderstedt, Germany, 2018. [Google Scholar]

- Breast Cancer Information | Susan G. Komen®. Available online: https://www.komen.org/breast-cancer/ (accessed on 19 May 2024).

- Meet Susan G. Komen Partners | Susan G. Komen®. Available online: https://www.komen.org/how-to-help/support-our-partners/meet-our-partners/ (accessed on 19 May 2024).

- Kim, S.; Jung, T.; Sohn, D.K.; Chae, Y.; Kim, Y.A.; Kang, S.H.; Park, Y.; Chang, Y.J. The Multidomain Metaverse Cancer Care Digital Platform: Development and Usability Study. JMIR Serious Games 2023, 11, e46242. [Google Scholar] [CrossRef]

- Marzaleh, M.A.; Peyravi, M.; Shaygani, F.A. Revolution in Health: Opportunities and Challenges of the Metaverse. EXCLI J. 2022, 21, 791. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Żydowicz, W.M.; Skokowski, J.; Marano, L.; Polom, K. Navigating the Metaverse: A New Virtual Tool with Promising Real Benefits for Breast Cancer Patients. J. Clin. Med. 2024, 13, 4337. https://doi.org/10.3390/jcm13154337
