Abstract

Cattle behaviour is a significant indicator of cattle welfare. With advances in electronic equipment, monitoring and classifying multiple cattle behaviour patterns is becoming increasingly important in precision livestock management. The aim of this study was to detect important cattle physiological states using a neural network model and wearable electronic sensors. A novel long short-term memory (LSTM) recurrent neural network model that uses information from both directions of the time series was developed to classify cattle behaviour accurately and was compared with a baseline LSTM. The deep residual bidirectional LSTM and the baseline LSTM were used to classify six behavioural patterns of cows with window sizes of 64, 128 and 256 samples (6.4 s, 12.8 s and 25.6 s, respectively). With the deep residual bidirectional LSTM and a window size of 128, the four classification performance indicators, namely accuracy, precision, recall, and F1-score, reached their best values of 94.9%, 95.1%, 94.9%, and 94.9%, respectively. These results show that the deep residual bidirectional LSTM model can classify time-series data collected from twelve cows using inertial measurement unit collars, and that the six target behaviour patterns can be classified with high accuracy. This method can be used to quickly detect whether a cow is suffering from bovine dermatomycosis. Furthermore, it can be used to implement automated and precise cattle behaviour classification for precision livestock farming.

1. Introduction

Cattle behaviour detection plays a significant role in smart livestock management. Several studies in this area have focused on automatic, precise, multiple cattle behaviour pattern classifications. Cattle behaviour classification technology is a potential way to provide additional information on animal health, production, and livestock precision management.

Behaviour is an important indicator of animal welfare. Monitoring based on videos or images and monitoring based on wearable sensors are two important approaches to livestock behaviour monitoring that have emerged with the development of modern technology. To recognize cattle behaviours (e.g., lying, standing, and walking), video-based monitoring with the Rexnet 3D network and other deep learning (DL) methods has been proposed [1,2]. Wearable approaches have used neck [3], leg [4] or ear-tag accelerometers [5], and an unsupervised machine learning model [6] has been introduced to assess multiple behaviour patterns or detect anomalies in cows’ walking patterns.

Cattle are often affected by skin diseases, and infected cattle have difficulties feeding and resting, which has a negative impact on growth, production performance, coat, and body condition [7]. An increasing number of studies on cattle skin diseases have been conducted to improve animal welfare and farm economics [8,9]. An important, ongoing line of research is the early detection of cattle behaviour using computer vision and wearable motion sensors. A long short-term memory recurrent neural network (LSTM-RNN) was found to be suitable for processing time-series data such as cattle behaviour in a previous study [10], but there is still room for improvement. To this end, novel methods for accurate and comprehensive behaviour classification should be investigated. DL has been used in many fields, including animal activity recognition, and has become a popular research area for scientists and engineers [11,12,13].

This study aims to develop a deep residual bidirectional LSTM (Bidir-LSTM) model to classify cattle behaviour patterns, especially skin-disease-related behaviours, accurately and comprehensively. To analyse the feasibility of time-series activity classification, inertial measurement unit (IMU) data from 12 cows were classified using a baseline LSTM-RNN model and a deep residual Bidir-LSTM model. To evaluate the classification performance, both models were trained with window sizes of 64, 128 and 256. The goal of this study is to propose a method that can be applied to the quick detection of bovine dermatomycosis and used to implement automated and precise cattle behaviour classification for precision livestock farming.

2. Materials and Methods

2.1. Animals and Feeding

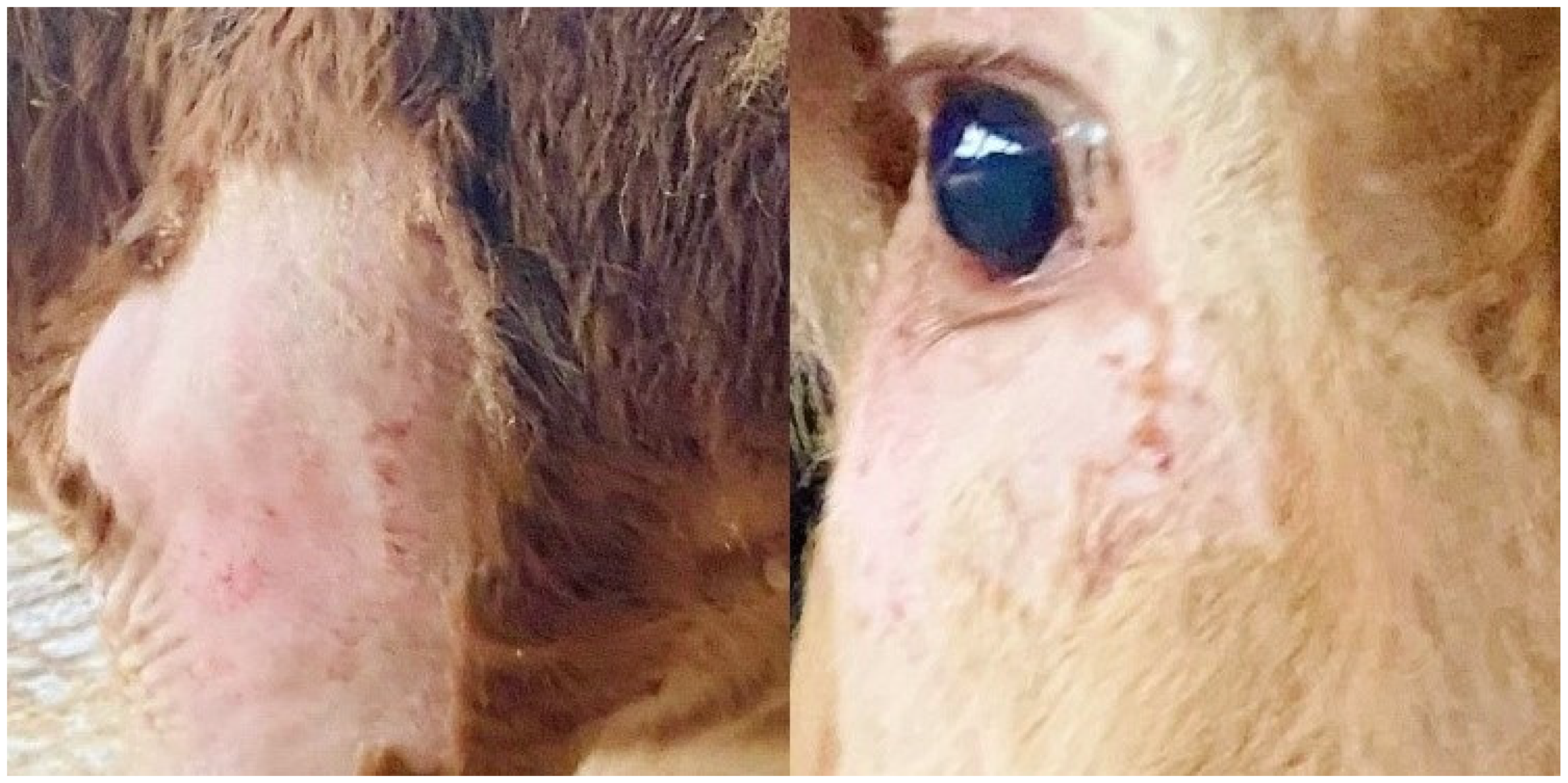

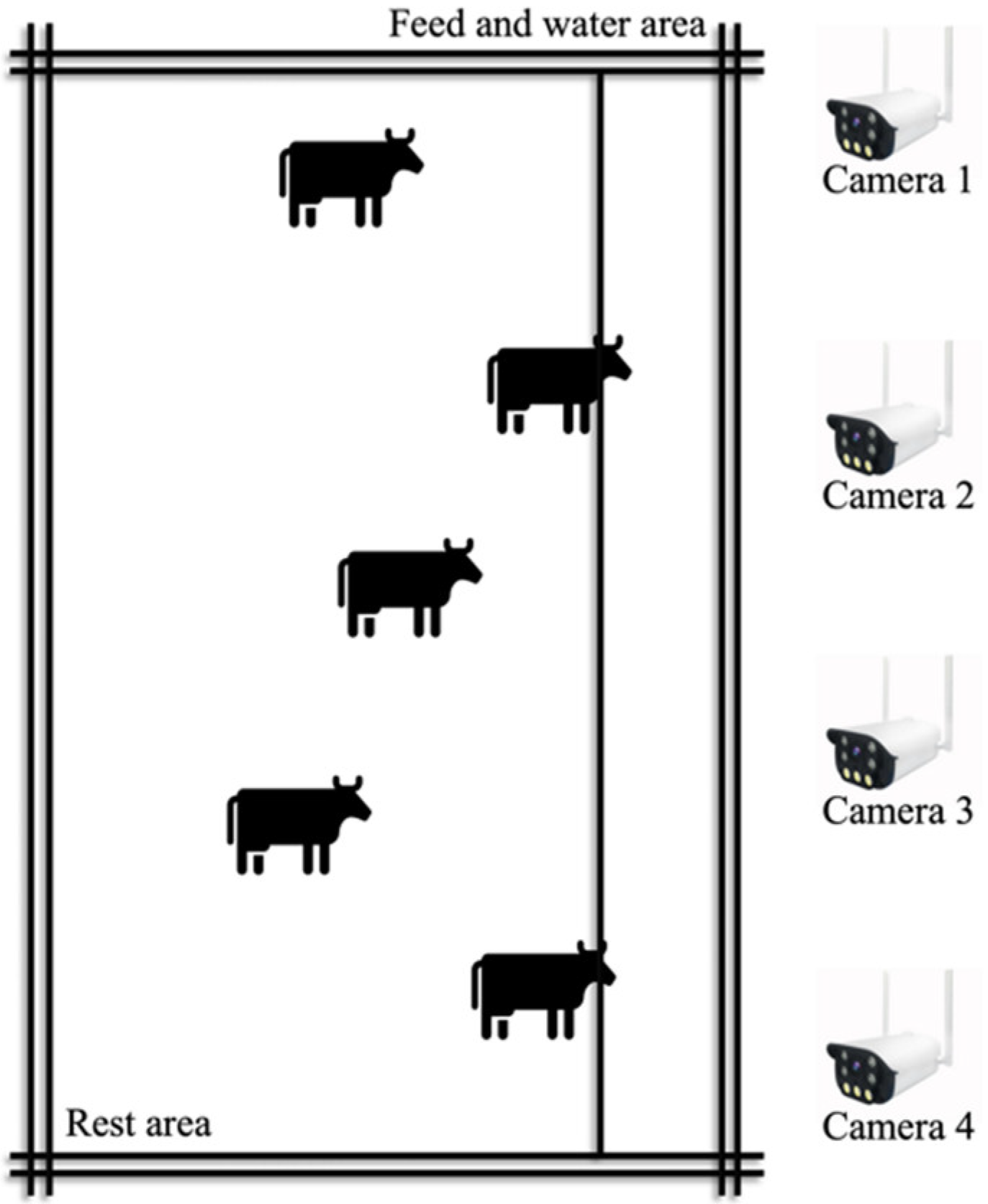

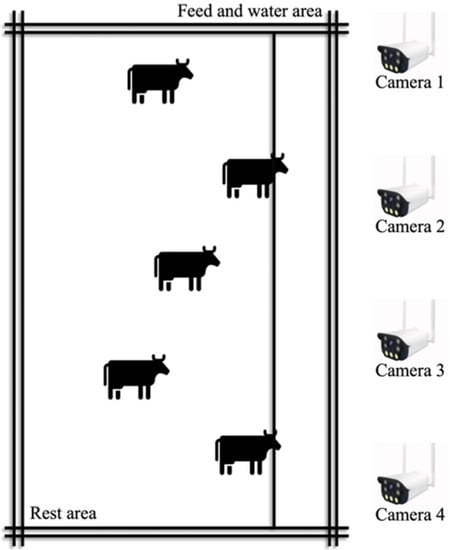

The experiment was conducted at an animal feeding farm in Sichuan, China, from 20 November 2020 to 20 July 2021. The behaviour data of 12 pregnant cows were recorded synchronously using four cameras and, for each cow, a nine-axis IMU with an SD card. These indigenous southern Sichuan mountainous cattle (Junlian yellow cattle) were between three and four years of age; two of the cows suffered from bovine dermatomycosis (Figure 1). Bovine dermatomycosis is usually caused by certain species of Trichophyton and Microsporum. Infected cattle often rub the itching area, which causes subcutaneous haemorrhages, and the dermatosis may eventually lead to irregular feeding and emaciation. The cows were fed total mixed rations, with the main ingredients being oat grass, corn, wheat bran, rapeseed meal, and soybean meal. Each cow had an independent feeding position, and water intake was ad libitum. Figure 2 depicts the barn layout and additional devices. The Animal Welfare Committee of Sichuan Agricultural University reviewed this experiment, and the approval number regarding animal ethics and welfare was No. 20200057.

Figure 1. Signs of dermatomycosis in cows.

Figure 2. Layout of the barn.

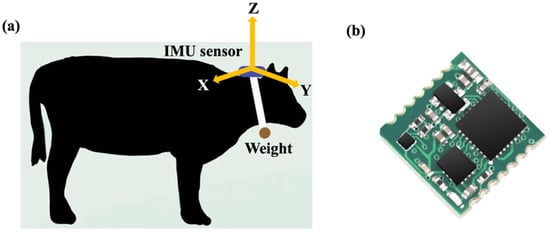

2.2. Collar and IMU Systems

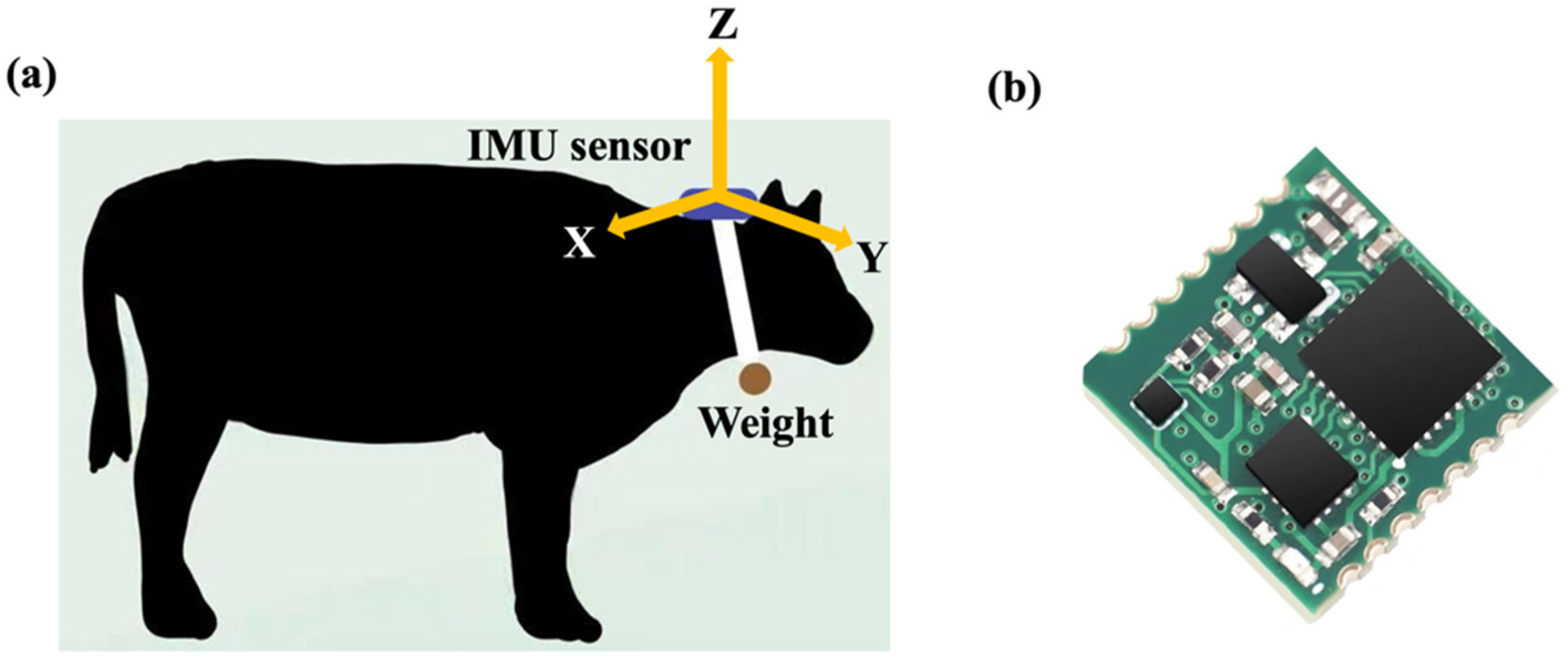

A collar with a nine-axis IMU (WT901SDCL, Shenzhen Vite Intelligent Technology Co., Ltd., Shenzhen, China) was placed on each cow’s neck to collect the behaviour data. Placing the IMU sensor on the top of the cow’s neck allowed more behaviour data to be recorded on the microSD card with only one device attached to the cow. The accelerometers were configured to sample at 10 Hz. To verify the cows’ behaviour, four cameras (MVM3150-B11, Shenzhen Weiduan Trading Co., Ltd., Shenzhen, China) were set up in the barn to record synchronously with the IMU. Figure 3 shows the positions of the collar and IMU sensor. Before data collection, the video and IMU sensor recordings were manually synchronized. The videos recorded during the experiment were used to supplement behaviour verification and to label the IMU data. The battery used for the IMU sensor had a capacity of 7000 mAh, allowing it to operate for approximately 10 days. IMU data and videos were collected 24 h a day, seven days a week, to provide continuous monitoring throughout the experiment. At each battery replacement (approximately once per week), the previous week’s data were downloaded.

Figure 3. (a) Position of the collar and IMU sensor and (b) WT901 IMU sensor.

2.3. Data Analysis

2.3.1. Behaviour Definition

Six behaviour patterns were defined in this study based on previous studies [14,15,16]. According to our own observations and related studies [17,18], abnormal rubbing and scratching are the key behaviours associated with cattle skin disease; these are defined here as rub itching (leg) and rub itching (neck). Each behaviour pattern is defined in Table 1.

Table 1. Definitions of cattle behaviours.

Among these six cattle behaviour patterns, rub itching (leg), social licking, and rub itching (neck) were the three behaviour patterns related to bovine dermatomycosis. The intensity of animal activity can be described by ODBA [19,20] which is the sum of the absolute dynamic acceleration from all three orthogonal directions, as follows:

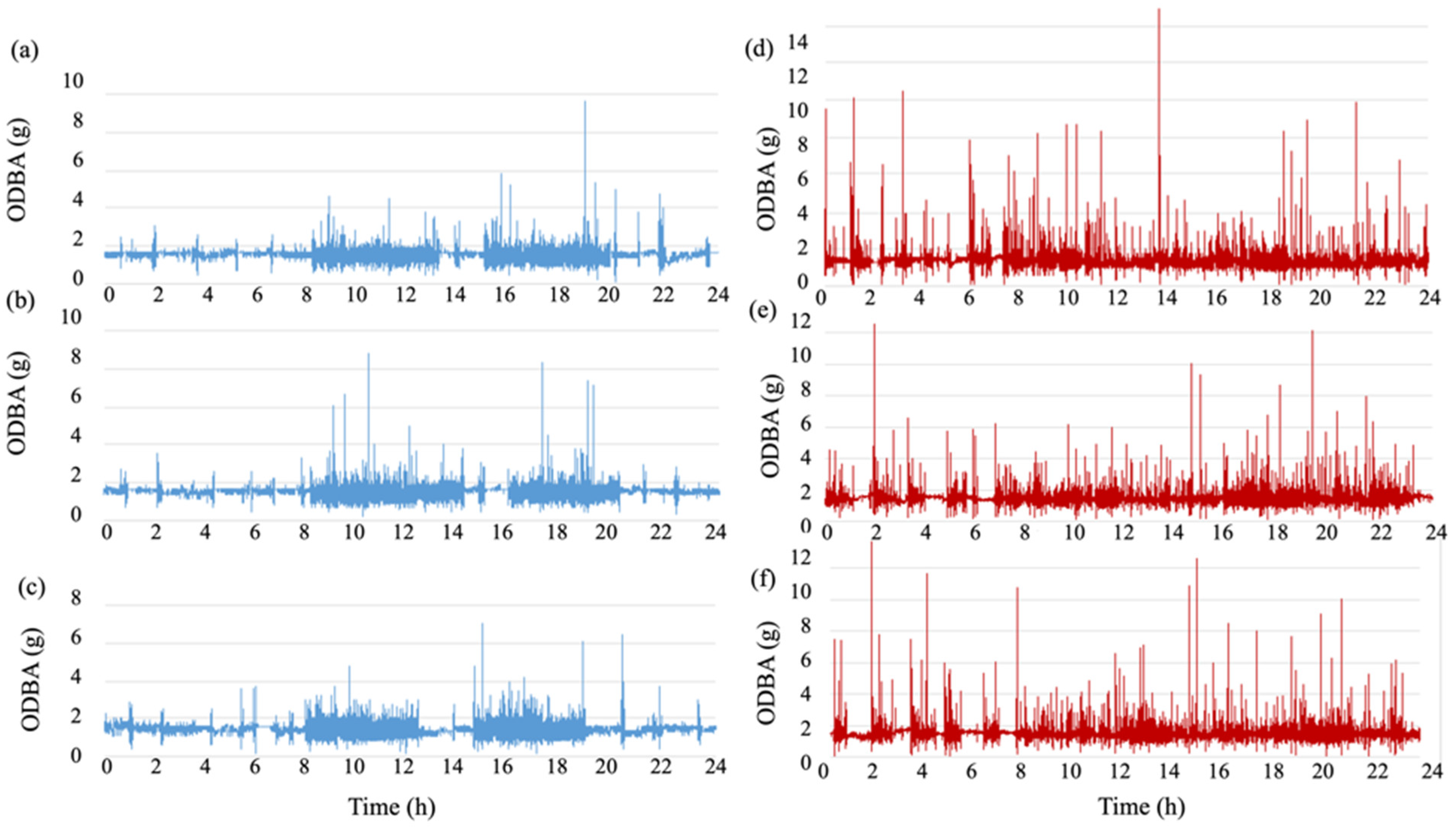

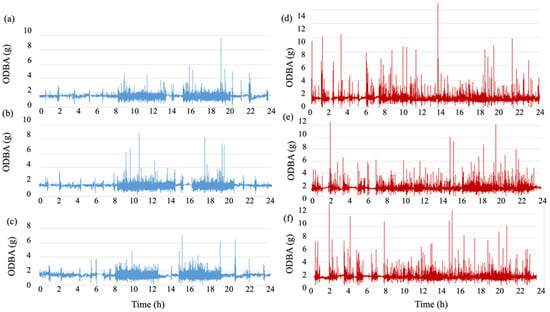

(g), (g) and (g) are the acceleration in each axis. Compared with healthy cows, the overall dynamic body acceleration (ODBA) of 24 h distribution of cows infected with skin disease was different regarding certain rules. Figure 4 presents the ODBA of two groups from cows with skin disease and healthy cows. There was a clear difference between infected and healthy cows regarding the resting state at night (20:00) and the active state during the day (08:00) cows. High ODBA values were observed in infected cows at compared to healthy cows, due to the high frequency of skin-disease-related behaviours.
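The ODBA calculation can be sketched in a few lines of standard-library Python. This is a minimal illustration, not the authors' code: the function names `running_mean` and `odba` are hypothetical, and the static (gravitational) component is estimated with a centred running mean over 20 samples (2 s at 10 Hz), which is an assumption because the smoothing window is not stated in the text.

```python
from statistics import fmean


def running_mean(values, window):
    """Centred running mean; near the edges only the available neighbours are used."""
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo = max(0, i - half)
        hi = min(len(values), i + half + 1)
        smoothed.append(fmean(values[lo:hi]))
    return smoothed


def odba(ax, ay, az, window=20):
    """Per-sample ODBA: sum over the three axes of |raw - static| acceleration (g).

    `window` (in samples) is an assumed smoothing length used to estimate the
    static component; it is not a value taken from the study.
    """
    total = [0.0] * len(ax)
    for axis in (ax, ay, az):
        static = running_mean(axis, window)
        for i, (raw, base) in enumerate(zip(axis, static)):
            total[i] += abs(raw - base)
    return total
```

A motionless collar (constant readings on every axis) gives an ODBA of zero, while a brief jolt on any axis produces a positive value at that sample.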

Figure 4. ODBA values over a 24 h period for (a–c) healthy cows and (d–f) infected cows.

2.3.2. Baseline LSTM-RNN

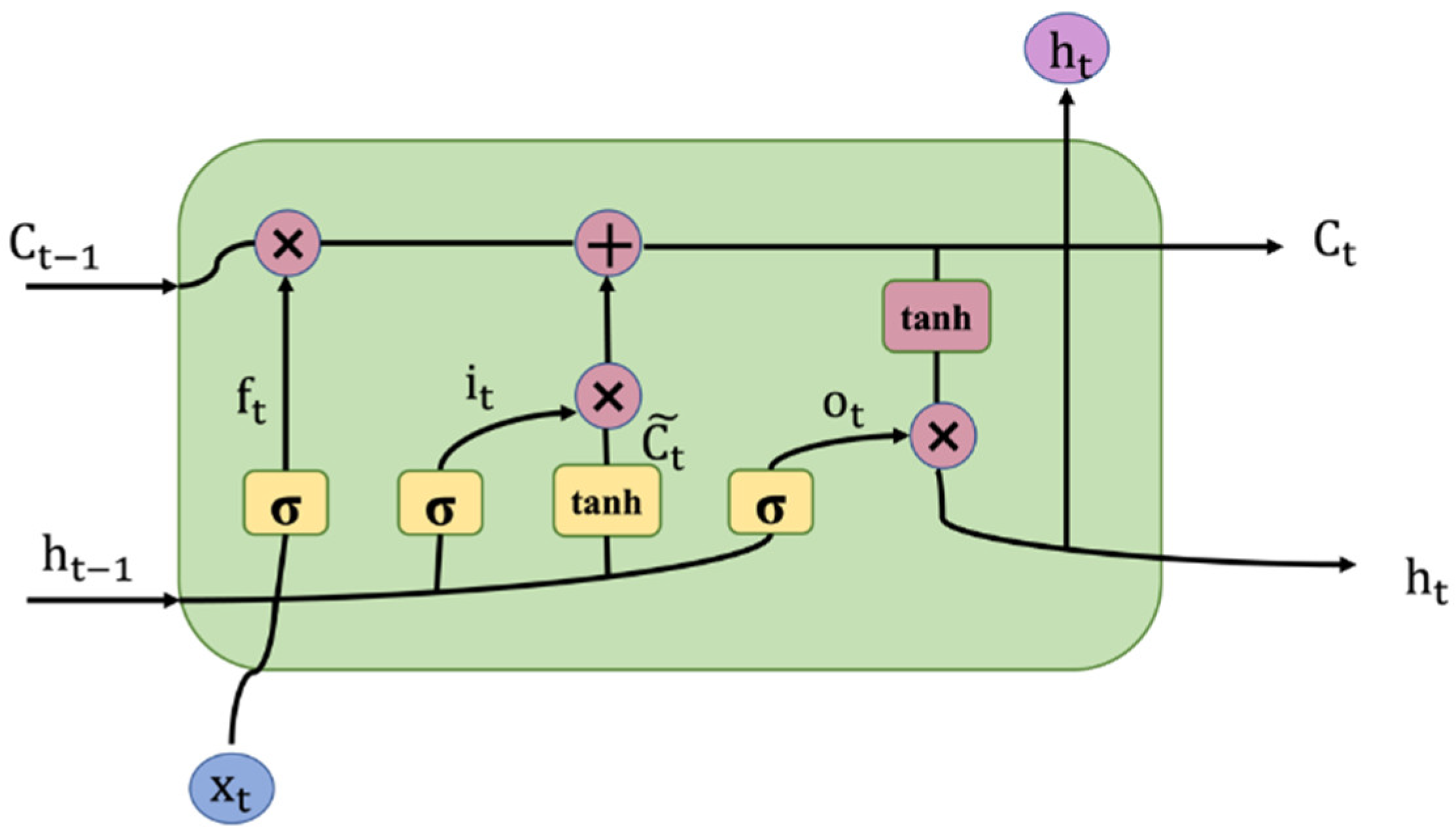

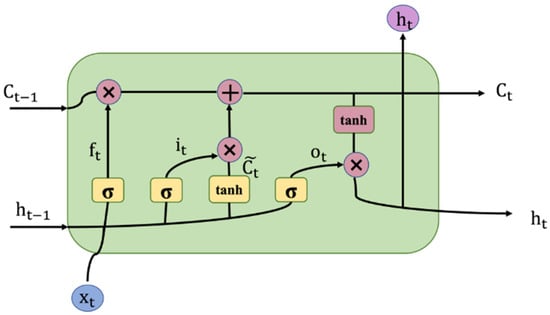

An LSTM is an RNN that uses additional memory cells together with input and output gates to address the vanishing gradient problem of vanilla RNNs [21]. Additive updates of the cell state and forget gate activations allow gradients to flow through the network without quickly vanishing. Figure 5 shows an LSTM cell, where σ and tanh are the activation functions, and i, f, o and c are the input gate, forget gate, output gate and cell activation vectors, all of which are the same size as the hidden vector h; $x_t$ is the input. An LSTM-RNN classifier was used in a study on classifying specific cow behaviour before calving [10] and was found to be suitable for classifying cows’ activity data.
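To make the roles of the gates concrete, the following is a minimal sketch of a single LSTM time step in plain Python. It assumes the commonly used formulation without peephole connections, which may differ in detail from the cell in Figure 5; the names `lstm_step`, `affine` and the `params` layout (gate name mapped to an input weight matrix, a recurrent weight matrix and a bias) are illustrative only.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, x, U, h, b):
    """Compute W @ x + U @ h + b for matrices stored as lists of rows."""
    return [
        sum(w * xi for w, xi in zip(w_row, x))
        + sum(u * hi for u, hi in zip(u_row, h))
        + bias
        for w_row, u_row, bias in zip(W, U, b)
    ]


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM step; params maps 'i', 'f', 'o', 'g' to (W, U, b) tuples."""

    def gate(name, activation):
        W, U, b = params[name]
        return [activation(z) for z in affine(W, x, U, h_prev, b)]

    i = gate("i", sigmoid)    # input gate: how much new information to write
    f = gate("f", sigmoid)    # forget gate: how much of the old cell state to keep
    o = gate("o", sigmoid)    # output gate: how much of the cell state to expose
    g = gate("g", math.tanh)  # candidate values for the cell state
    # The additive update below is what lets gradients pass through many steps.
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c
```

With all weights set to zero every gate outputs 0.5, so the cell state is simply halved at each step, which makes the effect of the forget gate easy to check by hand.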

Figure 5. Basic architecture of LSTM.

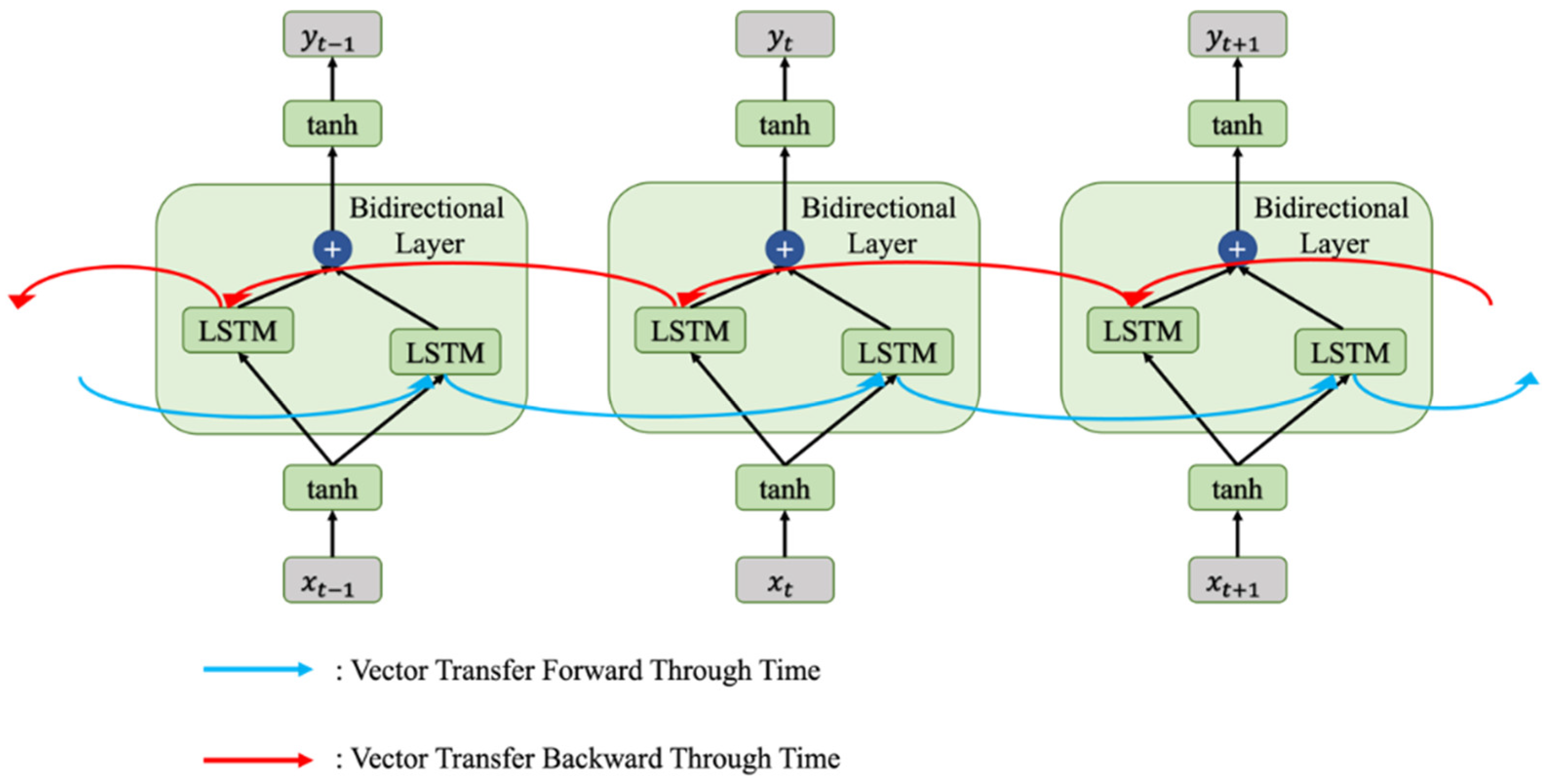

2.3.3. Bidirectional LSTM

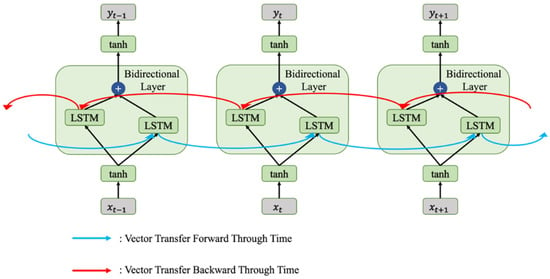

Bidir-LSTM is an improved version of earlier LSTM networks that is built on two LSTMs [22,23]. Cattle activity trajectories are continuous; a baseline LSTM cell runs in one direction only, so only previous information can be used to predict the current state and some valuable information may be lost. In Bidir-LSTM, the current output depends on both previous and subsequent information: it is a sequence processing model that consists of two LSTM cells, one that takes the input in the forward direction and the other in the backward direction [23,24]. This increases the amount of information available to the network and improves the context available to the algorithm. Figure 6 shows a standard Bidir-LSTM structure, where $x_t$ is the input and tanh is the activation function. The forward and backward sequences in the hidden layer are denoted $\overrightarrow{h}$ and $\overleftarrow{h}$, respectively. The hidden layer, $h_t$, and the output layer, $y_t$, can be defined as follows:

$$\overrightarrow{h}_t = \tanh\left(W_{x\overrightarrow{h}}\,x_t + W_{\overrightarrow{h}\overrightarrow{h}}\,\overrightarrow{h}_{t-1} + b_{\overrightarrow{h}}\right)$$

$$\overleftarrow{h}_t = \tanh\left(W_{x\overleftarrow{h}}\,x_t + W_{\overleftarrow{h}\overleftarrow{h}}\,\overleftarrow{h}_{t+1} + b_{\overleftarrow{h}}\right)$$

$$y_t = W_{\overrightarrow{h}y}\,\overrightarrow{h}_t + W_{\overleftarrow{h}y}\,\overleftarrow{h}_t + b_y$$

where $W$ denotes the weight matrices between the layers and $b$ is the bias. A bidirectional layer receives information from both the vertical direction (lower layer) and the horizontal direction (past and future), and outputs the processed information to the upper layer [25].
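The bidirectional idea can be illustrated independently of the cell type. The sketch below runs one recurrent step function over the sequence from past to future and another from future to past, then joins the two hidden states at each time step. The helper names `rnn_pass` and `bidirectional` are hypothetical, and any step function with the signature `step(x, h) -> h` (an LSTM cell in the study) can be plugged in.

```python
def rnn_pass(xs, step, h0):
    """Apply `step(x, h) -> h` along xs and return the hidden state at every time step."""
    states, h = [], h0
    for x in xs:
        h = step(x, h)
        states.append(h)
    return states


def bidirectional(xs, fwd_step, bwd_step, h0):
    """Join forward and backward hidden states so each step sees past and future."""
    forward = rnn_pass(xs, fwd_step, h0)
    # Run over the reversed sequence, then flip back so index t lines up with xs[t].
    backward = rnn_pass(xs[::-1], bwd_step, h0)[::-1]
    return [f + b for f, b in zip(forward, backward)]  # list concatenation


# Toy step that keeps a running sum, used only to make the information flow visible.
def running_sum(x, h):
    return [h[0] + x]


states = bidirectional([1, 2, 3], running_sum, running_sum, [0])
print(states)  # [[1, 6], [3, 5], [6, 3]]
```

In the printed result, the first entry of each pair summarises everything up to that time step and the second summarises everything from that time step onwards, which is the extra context a one-directional LSTM does not have.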

Figure 6. Standard Bidir-LSTM structure.

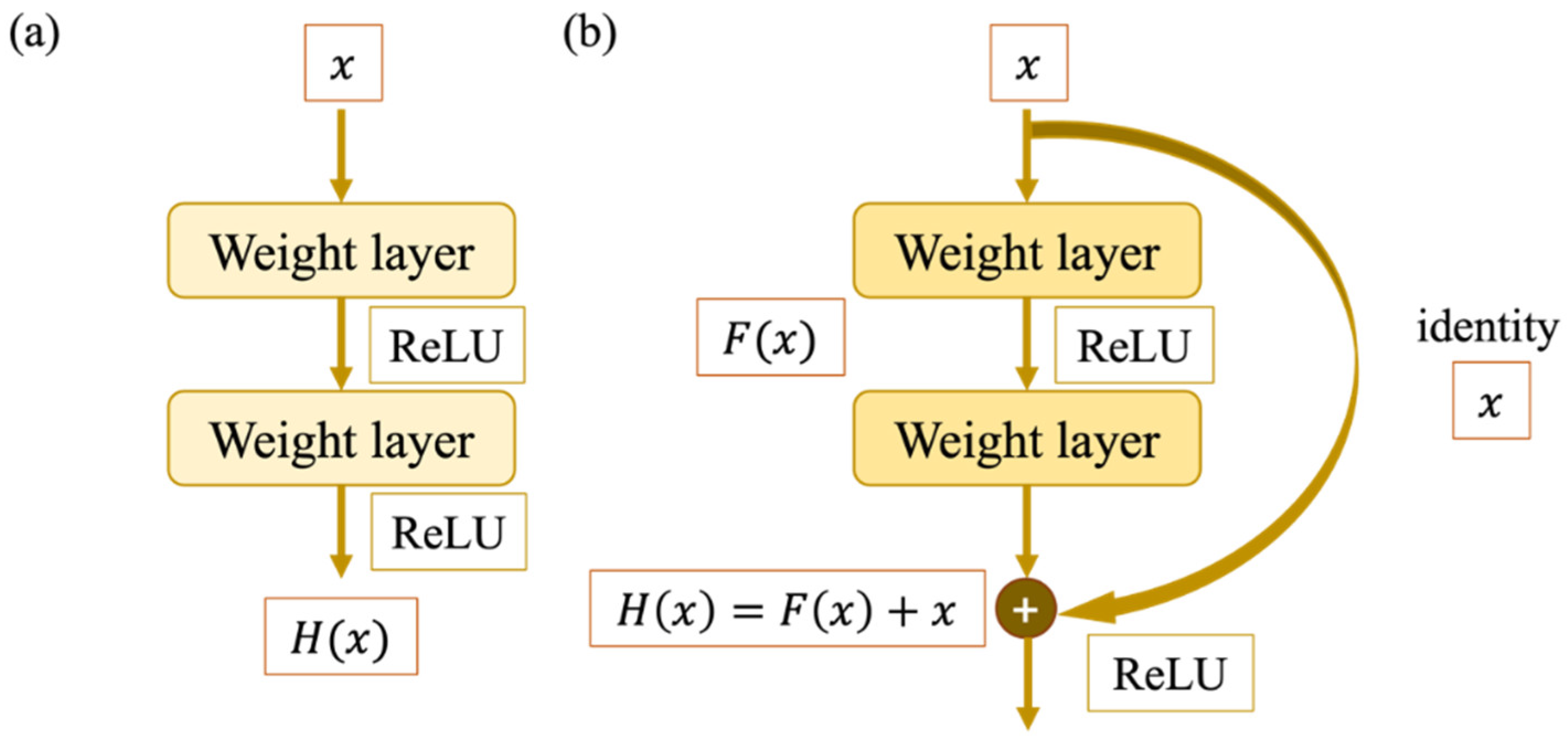

2.3.4. Deep Residual Bidir-LSTM

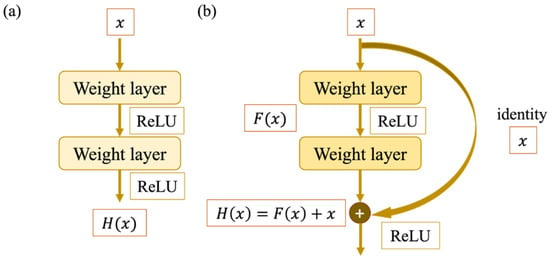

Deep residual Bidir-LSTM differs from Bidir-LSTM in that it contains residual blocks. Residual blocks are skip-connection blocks in the ResNet architecture that learn residual functions with reference to the layer inputs, instead of learning unreferenced functions [26]. Formally, denoting the desired underlying mapping as $\mathcal{H}(x)$, the stacked nonlinear layers fit another mapping, $\mathcal{F}(x) = \mathcal{H}(x) - x$. The original mapping is recast into $\mathcal{F}(x) + x$, so the stacked layers only have to learn the residual, hence the name “residual block” [28]. Figure 7 shows two stacked layers of a plain net and of a residual net. Figure 7b depicts the basic unit of the residual network; the largest difference from Figure 7a is the additional identity connection that runs directly to the output. In Figure 7b, $x$ is the input. The input first passes through the stacked weight layers and an activation function, as in a conventional neural network. The output of the stack is then added to the input and passed through the activation function; this is the basic unit of the residual network, with the “identity” line acting as a shortcut or highway. A building block that allows for identity mapping by shortcut is defined as follows:

$$y = \mathcal{F}(x, \{W_i\}) + x$$

where $x$ and $y$ are the input and output, respectively, and $\mathcal{F}(x, \{W_i\})$ is the residual mapping that has to be learned. Figure 7b shows a two-layer residual network, for which

$$\mathcal{F} = W_2\,\sigma(W_1 x)$$

where $\sigma$ represents the ReLU [27] and $W$ is a weight matrix.
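A two-layer residual unit of the kind described for Figure 7b can be sketched as follows: the input passes through two weight layers with a ReLU in between, the result is added to the unchanged input, and the sum is activated again. This is a minimal plain-Python illustration with biases omitted; the names `matvec` and `residual_block` are hypothetical, and the weight matrices are assumed to be square so that the shortcut can be added without a projection.

```python
def relu(v):
    return [max(0.0, a) for a in v]


def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * a for w, a in zip(row, x)) for row in W]


def residual_block(x, W1, W2):
    """Two stacked layers plus an identity shortcut (biases omitted for brevity)."""
    fx = matvec(W2, relu(matvec(W1, x)))         # residual mapping learned by the layers
    return relu([f + a for f, a in zip(fx, x)])  # add the shortcut, then activate


identity = [[1.0, 0.0], [0.0, 1.0]]
zero = [[0.0, 0.0], [0.0, 0.0]]
print(residual_block([1.0, -2.0], identity, identity))  # [2.0, 0.0]
print(residual_block([1.0, 2.0], zero, zero))           # [1.0, 2.0]
```

The second call shows why the shortcut helps deep networks: when the stacked layers contribute nothing, the block still passes its (non-negative) input through unchanged instead of wiping it out.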

Figure 7. (a) Any two stacked layers of a plain net. (b) Any two stacked layers of a residual net.

The “highway” eased the optimization of the deeper network and prevented the vanishing gradient problem [22,25,28]. Adding residual modules from ResNet to the Bidir-LSTM improved learning efficiency and accuracy as the number of layers increased and the network deepened [29]. Therefore, deep residual Bidir-LSTM was used, and its classification performance was compared with that of the baseline LSTM.

2.4. Classifying Behaviour Using Baseline LSTM and Deep Residual Bidir-LSTM

The data were analysed in TensorFlow 2.3 with Python 3.8. For both the baseline LSTM and the deep residual Bidir-LSTM classification models, the batch size was set to 1500. The learning rates of the two models were finally set to 0.0025 and 0.001, respectively. Regarding the internal structures of the two neural networks, the hidden layer number was 32 for the baseline LSTM and 28 for the Bidir-LSTM.

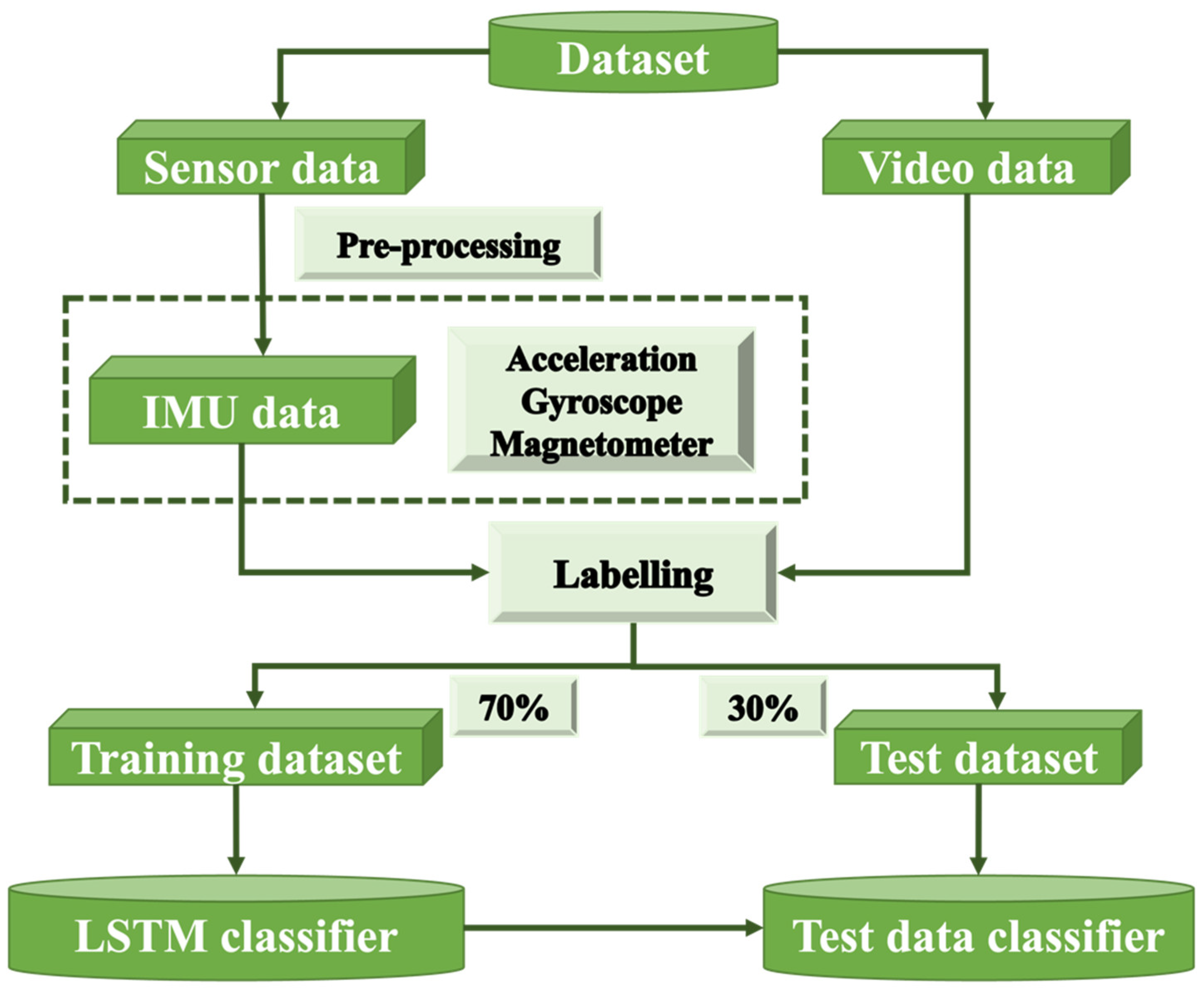

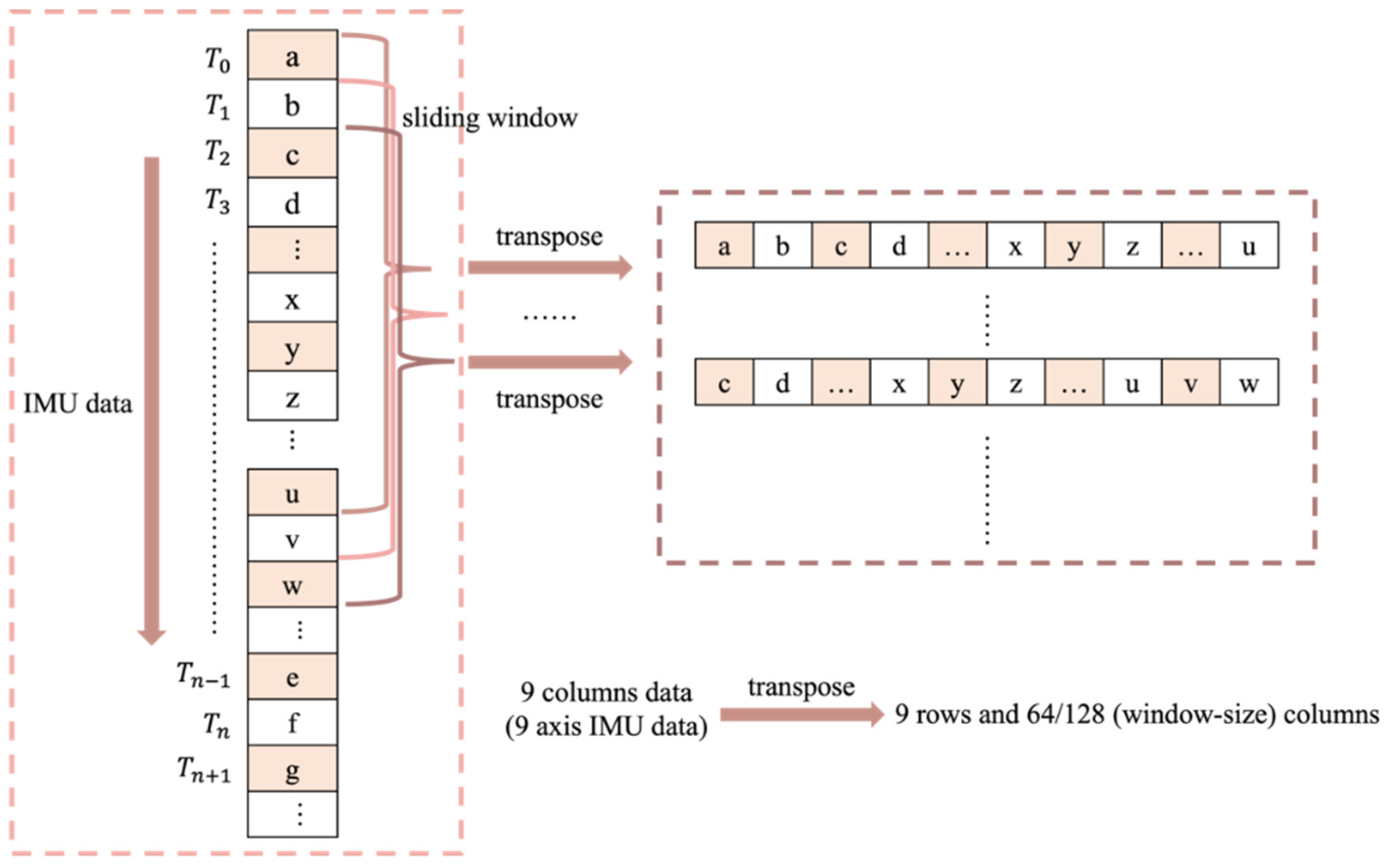

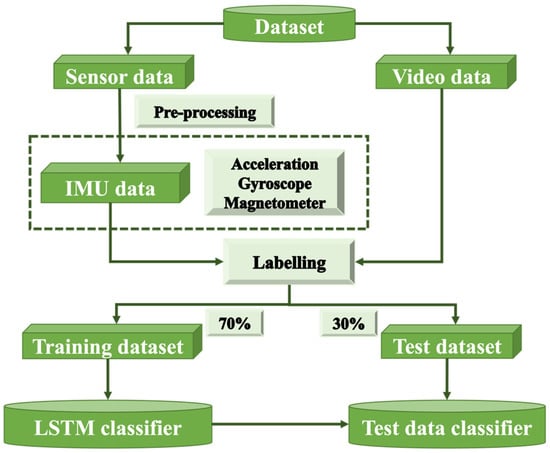

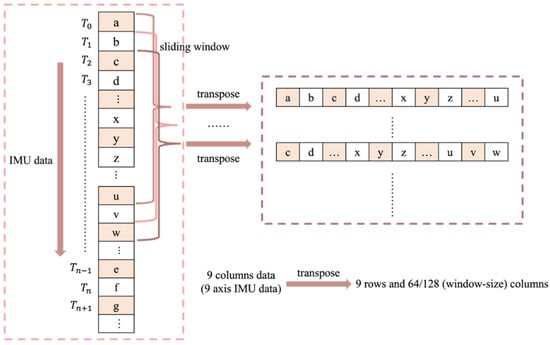

Figure 8 shows the entire data analysis process, from pre-processing to cattle behaviour pattern classification. The dataset consisted of the IMU data coupled with the collected cow activity videos. Using the videos, all IMU data were manually labelled with a target behaviour class based on the six behaviour pattern definitions given above. After labelling, the ‘ground truth’ data amounted to about 1066 h. As Figure 9 shows, each sample matrix was transposed during data pre-processing from nine columns (nine-axis IMU data) to nine rows, so that every sample was a matrix with nine rows and 64, 128 or 256 columns. Table 2 shows the total sample size and the sample size of each behaviour class. The training dataset comprised 70% of the total data, while the testing dataset comprised the remaining 30%.
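The transpose step described for Figure 9 amounts to swapping the time and channel axes of each window. A minimal sketch, assuming each raw window is stored as a list of time steps with nine channel readings per step (the function name `transpose_sample` and the storage layout are illustrative assumptions):

```python
def transpose_sample(sample):
    """Turn a window shaped (time steps, 9 channels) into (9 channels, time steps)."""
    return [list(channel) for channel in zip(*sample)]


# A dummy 64-step window; the value encodes (time step, channel) for easy checking.
window = [[t * 10 + c for c in range(9)] for t in range(64)]
sample = transpose_sample(window)
print(len(sample), len(sample[0]))  # 9 64
```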

Figure 8. Data analysis process.

Figure 9. IMU data pre-processing.

Table 2. Total sample size and individual behaviour class sample size.

The labelled data for all behaviour patterns were input into the baseline LSTM model and the deep residual Bidir-LSTM model to classify the cow behaviour patterns. To compare the classification performance of different window sizes, the labelled IMU data of each behaviour class were sliced into windows of 6.4 s, 12.8 s and 25.6 s, referred to as window sizes of 64, 128 and 256. After all labelled data on the six behaviour patterns were mixed and randomly shuffled 20 times, 70% of the IMU data were used for training and the remaining 30% were used for testing. The training and test datasets are distinct from each other, and both contain randomly arranged behaviour data from all 12 cows.
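The slicing and splitting procedure can be sketched as below. Several details are assumptions rather than facts from the study: the windows are taken as non-overlapping unless a `step` is given, the random seed is arbitrary, and the function names `slice_windows` and `shuffle_split` are hypothetical.

```python
import random


def slice_windows(records, window_size, step=None):
    """Cut one labelled recording into fixed-length windows.

    Assumption: windows do not overlap unless a smaller `step` is supplied.
    Trailing records that do not fill a whole window are dropped.
    """
    step = step or window_size
    last_start = len(records) - window_size
    return [records[s:s + window_size] for s in range(0, last_start + 1, step)]


def shuffle_split(samples, train_fraction=0.7, rounds=20, seed=0):
    """Shuffle the pooled samples `rounds` times, then split into train and test."""
    rng = random.Random(seed)
    pooled = list(samples)
    for _ in range(rounds):
        rng.shuffle(pooled)
    cut = round(len(pooled) * train_fraction)
    return pooled[:cut], pooled[cut:]


recording = list(range(300))               # 30 s of dummy data at 10 Hz
print(len(slice_windows(recording, 64)))   # 4
print(len(slice_windows(recording, 128)))  # 2
train, test = shuffle_split(range(10))
print(len(train), len(test))               # 7 3
```

At 10 Hz, a 30 s recording yields four 6.4 s windows but only two 12.8 s windows, which illustrates how the number of available samples shrinks as the window grows.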

3. Results

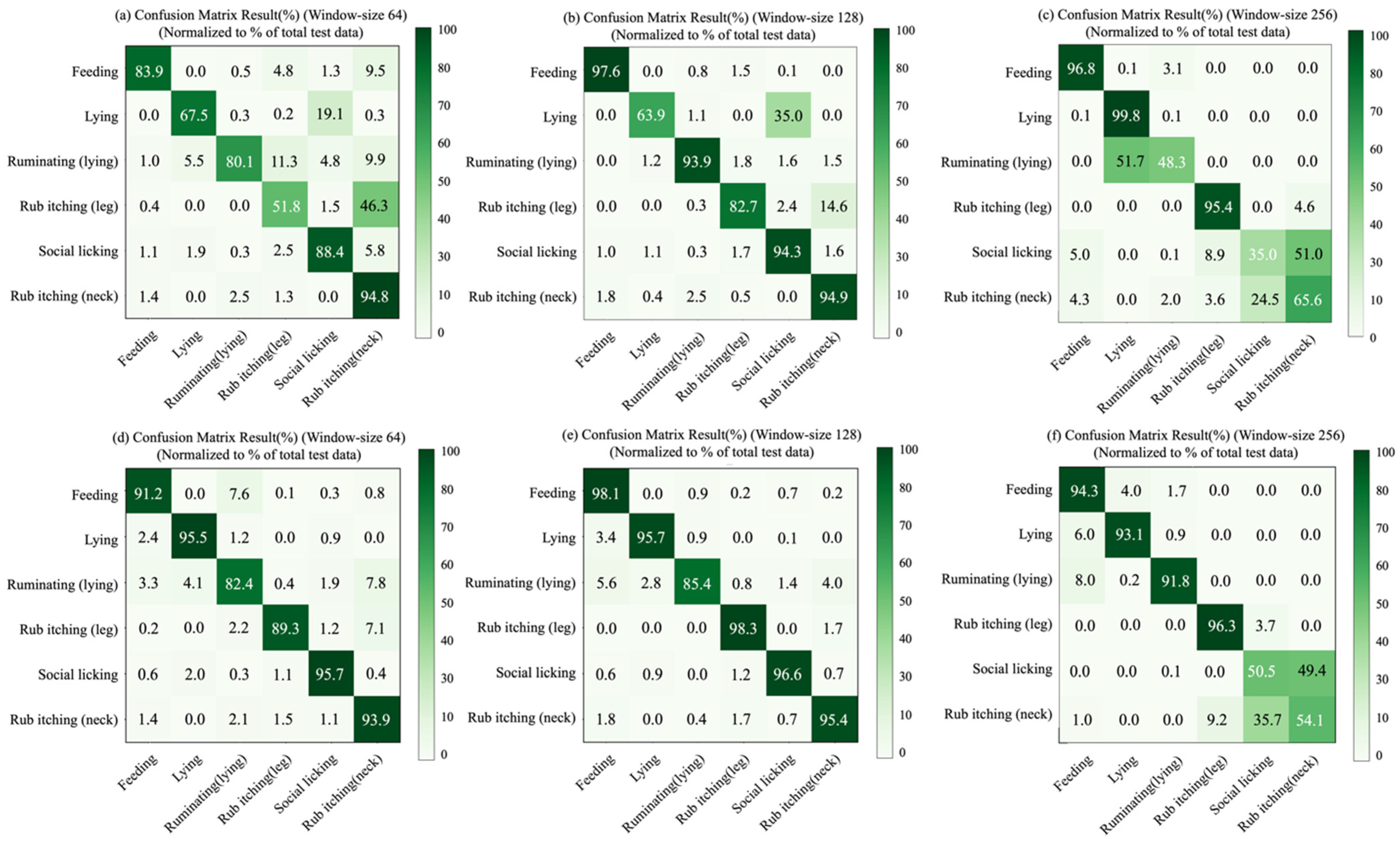

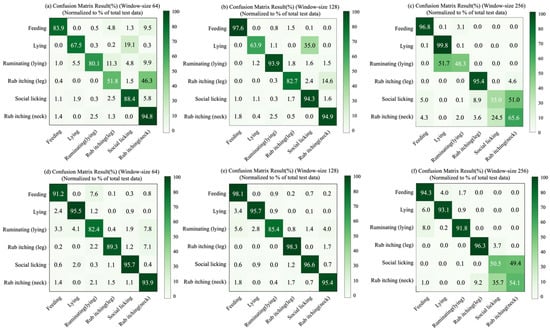

Three basic cattle behaviour patterns (feeding, lying, and ruminating (lying)) and three bovine-dermatomycosis-related behaviour patterns (social licking, rub itching (neck), and rub itching (leg)) were classified using the baseline LSTM and deep residual Bidir-LSTM models with window sizes of 64, 128 and 256. Table 3 shows the accuracy, precision, recall, and F1-score obtained for each model and window size. The deep residual Bidir-LSTM with a window size of 128 performed best, with an accuracy of 94.9%. For the deep residual Bidir-LSTM, the difference in results between window sizes 64 and 128 was minor. With a window size of 256, the baseline LSTM and the deep residual Bidir-LSTM achieved accuracies of only 73.5% and 80.0%, respectively.
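For reference, the four indicators can be computed from a multi-class confusion matrix as sketched below. The averaging scheme is not stated in the text; this sketch assumes support-weighted averaging over classes, under which recall equals accuracy by construction (consistent with the identical 94.9% reported for both). The function name `weighted_metrics` is hypothetical.

```python
def weighted_metrics(cm):
    """Accuracy and support-weighted precision, recall and F1.

    cm[i][j] is the number of samples of true class i predicted as class j.
    Weighted averaging is an assumption, not a detail taken from the study.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[k][k] for k in range(n)) / total
    precision = recall = f1 = 0.0
    for k in range(n):
        tp = cm[k][k]
        support = sum(cm[k])                         # true samples of class k
        predicted = sum(cm[i][k] for i in range(n))  # samples predicted as class k
        p = tp / predicted if predicted else 0.0
        r = tp / support if support else 0.0
        f = 2 * p * r / (p + r) if p + r else 0.0
        weight = support / total
        precision += weight * p
        recall += weight * r
        f1 += weight * f
    return accuracy, precision, recall, f1


acc, prec, rec, f1 = weighted_metrics([[8, 2], [1, 9]])
print(round(acc, 3), round(rec, 3))  # 0.85 0.85
```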

Table 3. Classification performance with three window sizes of baseline LSTM and deep residual Bidir-LSTM.

The classification accuracy of the six cow behaviour patterns is displayed in the confusion matrices (see Figure 10). The classification accuracy of the deep residual Bidir-LSTM-based approach with a window size of 128 was over 90% for feeding, lying, rub itching (leg), social licking, and rub itching (neck), which was higher than the accuracy of the deep residual Bidir-LSTM with a window size of 64. Under the same window size, the baseline LSTM gave lower classification results for the six behaviour patterns than the deep residual Bidir-LSTM.

Figure 10. Confusion matrices of classification accuracy of six cow behaviour patterns: (a–c) behaviour classification accuracy using baseline LSTM with window sizes 64, 128 and 256; (d–f) behaviour classification accuracy using deep residual Bidir-LSTM with window sizes 64, 128 and 256. The diagonal represents the classification accuracy for each behaviour, and darker colours indicate higher values.
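A per-class percentage view like the one in Figure 10 can be obtained by counting (true, predicted) label pairs and normalising each row. The sketch below assumes that rows correspond to true classes and that each row is scaled to sum to 100%, which is a common convention but is not confirmed by the text; the function names are hypothetical.

```python
def confusion_matrix(y_true, y_pred, labels):
    """Count how often each true label (row) was predicted as each label (column)."""
    index = {label: k for k, label in enumerate(labels)}
    cm = [[0] * len(labels) for _ in labels]
    for truth, guess in zip(y_true, y_pred):
        cm[index[truth]][index[guess]] += 1
    return cm


def row_normalise(cm):
    """Convert counts to percentages so each non-empty row sums to 100."""
    return [[100.0 * v / sum(row) if sum(row) else 0.0 for v in row] for row in cm]


labels = ["feeding", "lying"]
y_true = ["feeding"] * 4 + ["lying"] * 2
y_pred = ["feeding", "feeding", "feeding", "lying", "lying", "lying"]
counts = confusion_matrix(y_true, y_pred, labels)
print(counts)                 # [[3, 1], [0, 2]]
print(row_normalise(counts))  # [[75.0, 25.0], [0.0, 100.0]]
```

With this convention the diagonal entry of a row is the share of that behaviour classified correctly, and the off-diagonal entries give figures of the kind quoted in the text, such as the percentage of feeding mistaken for another behaviour.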

Rub itching (neck) had the highest classification accuracy of all behaviours for both the deep residual Bidir-LSTM and the baseline LSTM with window sizes of 64 and 128. Feeding yielded high classification accuracy in all six classification models except the baseline LSTM with a window size of 64. Accuracy was high for the baseline LSTM with a window size of 128 and for the Bidir-LSTM with window sizes of 64 and 128.

With the deep residual Bidir-LSTM method and window sizes of 64 and 128, a major part of the misclassification occurred for feeding, with 4.8% and 9.5% of feeding being incorrectly identified as rub itching (leg) and rub itching (neck), because these three behaviour patterns involve similar head movements. With a window size of 256, both classification models confused social licking and rub itching (neck) because of the high similarity of these two behaviour patterns. For social licking, due to the irregular and intensive movements of the cow’s neck, 5.8% and 1.6% of the samples were misclassified as rub itching (neck) using the baseline LSTM with window sizes of 64 and 128, respectively. The deep residual Bidir-LSTM improved on the baseline LSTM for window sizes 64 and 128. With a window size of 256, for both the baseline LSTM and the Bidir-LSTM, the larger window meant that data from multiple behaviours were included in one slice, which also reduced classification accuracy.

Compared with the baseline LSTM using a window size of 128, the deep residual Bidir-LSTM improved the classification of lying and rub itching (leg), reaching 95.7% and 98.3%, respectively, compared with 63.9% and 82.7%. These results indicate that, through its Bidir-LSTM structure, the deep residual Bidir-LSTM extracted more information from the vertical and horizontal directions of cow behaviour. In the baseline LSTM, lying was misclassified as social licking, and a major portion of rub itching (leg) was misclassified as rub itching (neck) because of the variable and fluctuating movements involved. With the baseline LSTM with a window size of 64 and with the deep residual Bidir-LSTM with both window sizes, the classification accuracy of ruminating (lying) was over 80%. With the baseline LSTM with a window size of 128, the classification accuracy of ruminating (lying) was 93.9%.

Table 3 also shows the effect of window size on the behaviour classification accuracy. For the baseline LSTM and deep residual Bidir-LSTM models, the overall classification accuracies with a window size of 128 were 87.9% and 94.9%, respectively, which exceeded the 77.7% and 91.3% obtained with a window size of 64. The classification results were poor with a window size of 256. The deep residual Bidir-LSTM achieved a better classification performance with window sizes 64 and 128, with the highest misclassification occurring in ruminating (lying). Due to the similarities between the chewing action and a cow’s stationary body, 5.6% and 2.8% of ruminating (lying) were incorrectly classified as feeding and lying when using a window size of 128, and 3.3% and 4.1% were misclassified as feeding and lying with a window size of 64. In the deep residual Bidir-LSTM method, 4.0% and 7.8% of ruminating (lying) were incorrectly classified as rub itching (neck) with window sizes 128 and 64, respectively. Additionally, compared with the baseline LSTM, the window size had little effect on the classification performance of the deep residual Bidir-LSTM, except for a window size of 256. For the baseline LSTM, the classification results clearly differed between window sizes because it processes information in one direction only. In principle, when slicing IMU data with a sliding window, a larger window can capture more useful feature information. In this study, however, larger window sizes, such as 256, did not improve classification performance and even resulted in more misclassifications. Moreover, for some dynamic behaviour patterns that may last for a relatively long period, such as social licking, a complete movement is more likely to be contained in a window of size 128 (12.8 s) than in a window of size 64 (6.4 s).

4. Discussion

Here, we used a DL framework that combines the residual network and Bidir-LSTM to monitor and classify six cow behaviour patterns, including three bovine-dermatomycosis-related behaviour patterns. The deep residual Bidir-LSTM with a window size of 128 outperformed the other models in terms of classification. The results revealed that both the learning algorithm and the window size affected the classification performance. Bidir-LSTM increased the amount of information available to the network and the context connections for time-continuous cattle behaviour. Additionally, adding residual blocks to Bidir-LSTM improved learning efficiency and accuracy by easing the optimization of the deeper network.

In previous studies, analytic models based on accelerometers or videos were used to classify certain cattle behaviour patterns, such as lameness [30] and drinking behaviour [31]; only a few studies focused on the recognition and classification of multiple behaviour patterns [16,32]. To date, the LSTM-RNN has been used for sequence data in activity recognition [10,33]. To the best of our knowledge, this is the first study in which deep residual Bidir-LSTM is employed to classify cattle behaviour patterns. Compared with our previous, unpublished work, the classification accuracy of the six cow behaviour patterns improved when using deep residual Bidir-LSTM. The previous classification results for the behaviour patterns were 77.9% (ruminating (lying)), 84.0% (lying), 91.9% (feeding), 60.2% (rub itching (leg)), 69.6% (social licking), and 93.7% (rub itching (neck)). Owing to its Bidir-LSTM structure, the deep residual Bidir-LSTM is a sequence processing model that learns from both previous and subsequent information. However, the high similarity of some ranges of motion and the insufficient sample size resulted in lower classification accuracy for ruminating (lying) and rub itching (leg). In the future, more behaviour patterns could be recognized and higher accuracy could be achieved by training classifiers on more types of cattle behaviour data from a dataset with a larger sample size.

5. Conclusions

Cattle behaviour monitoring is a way of increasing livestock fertility, production, and precision management efficiency. The early detection of skin disease in cows by applying automated technology can reduce the rate of transmission in cattle farms. To date, cattle behaviour research has been conducted at low cost using accelerometers or videos. In this study, two DL algorithms based on RNNs and IMU data were used to classify cattle behaviour patterns. Deep residual Bidir-LSTM outperformed baseline LSTM in terms of accuracy. This novel LSTM framework achieved an accuracy of 94.9%, precision of 95.1%, recall of 94.9%, and F1-score of 94.9% with a window size of 128. The experimental results showed that deep residual Bidir-LSTM can improve the classification ability by using information from the vertical and horizontal directions through a Bidir-LSTM structure and by learning residual functions with reference to the layer input through the ResNet architecture. The impact of the window size on classification performance was also investigated; the best classification accuracy was achieved with a window size of 128 (12.8 s). More behaviour patterns and longer periods of continuous cattle behaviour data could be classified, helping to achieve higher accuracy in practical applications. For future work, we plan to obtain acoustic information and to use a camera to gather further semantic information on cattle behaviour, in order to improve the classification accuracy of similar or transitional behaviour patterns. In the near future, more cattle behaviour and activity data should be collected from more cattle, and the set of target behaviour patterns could be expanded to verify the comprehensiveness of the behaviour classification models. We intend to collect cattle activity data using a collar with a solar panel to extend the battery life of the equipment and ensure data integrity, so that the classifier can accurately identify more complex cattle activities. We also intend to use edge cloud computing to simplify the data collected by the collar on the cattle farm and then upload the results to the cloud for later use.

Author Contributions

Conceptualization, Y.P.; methodology, Y.W. and M.L. (Mei Liu); software, Y.W. and M.L. (Mei Liu); validation, Y.W. and M.L. (Mei Liu); formal analysis, Y.W. and M.L. (Mei Liu); resources, Y.W., M.L. (Mei Liu), Z.P., M.L. (Meiqi Liu) and M.W.; data curation, Y.W., M.L. (Mei Liu) and Z.P.; writing—original draft preparation, Y.W. and M.L. (Mei Liu); writing—review and editing, Y.W., M.L. (Mei Liu) and Y.P.; visualization, Y.W. and M.L. (Mei Liu); supervision, Y.P.; project administration, Y.P.; funding acquisition, Y.P. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the National Key R&D Program of China (Grant Number 2021YFD1600200) and by the subject double support program of Sichuan Agricultural University (Grant Number T202107).

Institutional Review Board Statement

The animal study protocol was approved by Sichuan Agricultural University Institutional Animal Care and Use Committee (protocol code No. 20200057, 2020/11/10).

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Ma, S.; Zhang, Q.; Li, T.; Song, H. Basic Motion Behavior Recognition of Single Dairy Cow Based on Improved Rexnet 3D Network. Comput. Electron. Agric. 2022, 194, 106772.

2. Fuentes, A.; Yoon, S.; Park, J.; Park, D.S. Deep Learning-Based Hierarchical Cattle Behavior Recognition with Spatio-Temporal Information. Comput. Electron. Agric. 2020, 177, 105627.

3. Shen, W.; Cheng, F.; Zhang, Y.; Wei, X.; Fu, Q.; Zhang, Y. Automatic Recognition of Ingestive-Related Behaviors of Dairy Cows Based on Triaxial Acceleration. Inf. Process. Agric. 2020, 7, 427–443.

4. Benaissa, S.; Tuyttens, F.A.M.; Plets, D.; de Pessemier, T.; Trogh, J.; Tanghe, E.; Martens, L.; Vandaele, L.; Van Nuffel, A.; Joseph, W.; et al. On the Use of On-Cow Accelerometers for the Classification of Behaviours in Dairy Barns. Res. Vet. Sci. 2019, 125, 425–433.

5. Simanungkalit, G.; Barwick, J.; Cowley, F.; Dawson, B.; Dobos, R.; Hegarty, R. Use of an Ear-Tag Accelerometer and a Radio-Frequency Identification (RFID) System for Monitoring the Licking Behaviour in Grazing Cattle. Appl. Anim. Behav. Sci. 2021, 244, 105491.

6. Haladjian, J.; Haug, J.; Nüske, S.; Bruegge, B. A Wearable Sensor System for Lameness Detection in Dairy Cattle. Multimodal Technol. Interact. 2018, 2, 27.

7. Lund, A.; Bratberg, A.M.; Næss, B.; Gudding, R. Control of Bovine Ringworm by Vaccination in Norway. Vet. Immunol. Immunopathol. 2014, 158, 37–45.

8. Broom, D.M. Behaviour and Welfare in Relation to Pathology. Appl. Anim. Behav. Sci. 2006, 97, 73–83.

9. von Keyserlingk, M.A.G.; Weary, D.M. A 100-Year Review: Animal Welfare in the Journal of Dairy Science—The First 100 Years. J. Dairy Sci. 2017, 100, 10432–10444.

10. Peng, Y.; Kondo, N.; Fujiura, T.; Suzuki, T.; Ouma, S.; Wulandari; Yoshioka, H.; Itoyama, E. Dam Behavior Patterns in Japanese Black Beef Cattle Prior to Calving: Automated Detection Using LSTM-RNN. Comput. Electron. Agric. 2020, 169, 105178.

11. de Freslon, I.; Martínez-López, B.; Belkhiria, J.; Strappini, A.; Monti, G. Use of Social Network Analysis to Improve the Understanding of Social Behaviour in Dairy Cattle and Its Impact on Disease Transmission. Appl. Anim. Behav. Sci. 2019, 213, 47–54.

12. Matthews, S.G.; Miller, A.L.; Clapp, J.; Plötz, T.; Kyriazakis, I. Early Detection of Health and Welfare Compromises through Automated Detection of Behavioural Changes in Pigs. Vet. J. 2016, 217, 43–51.

13. Robert, B.; White, B.J.; Renter, D.G.; Larson, R.L. Evaluation of Three-Dimensional Accelerometers to Monitor and Classify Behavior Patterns in Cattle. Comput. Electron. Agric. 2009, 67, 80–84.

14. Arcidiacono, C.; Porto, S.M.C.; Mancino, M.; Cascone, G. Development of a Threshold-Based Classifier for Real-Time Recognition of Cow Feeding and Standing Behavioural Activities from Accelerometer Data. Comput. Electron. Agric. 2017, 134, 124–134.

15. Kour, H.; Corbet, N.J.; Patison, K.P.; Swain, D.L. Changes in the Suckling Behaviour of Beef Calves at 1 Month and 4 Months of Age and Effect on Cow Production Variables. Appl. Anim. Behav. Sci. 2021, 236, 105219.

16. Peng, Y.; Kondo, N.; Fujiura, T.; Suzuki, T.; Wulandari; Yoshioka, H.; Itoyama, E. Classification of Multiple Cattle Behavior Patterns Using a Recurrent Neural Network with Long Short-Term Memory and Inertial Measurement Units. Comput. Electron. Agric. 2019, 157, 247–253.

17. Agnetti, F.; Righi, C.; Scoccia, E.; Felici, A.; Crotti, S.; Moretta, I.; Moretti, A.; Maresca, C.; Troiani, L.; Papini, M. Trichophyton Verrucosum Infection in Cattle Farms of Umbria (Central Italy) and Transmission to Humans. Mycoses 2014, 57, 400–405.

18. Nenoff, P.; Handrick, W.; Krüger, C.; Vissiennon, T.; Wichmann, K.; Gräser, Y.; Tchernev, G. Dermatomykosen durch Haus-und Nutztiere: Vernachlässigte Infektionen? Hautarzt 2012, 63, 848–858.

19. Halsey, L.G.; Shepard, E.L.C.; Hulston, C.J.; Venables, M.C.; White, C.R.; Jeukendrup, A.E.; Wilson, R.P. Acceleration versus Heart Rate for Estimating Energy Expenditure and Speed during Locomotion in Animals: Tests with an Easy Model Species, Homo Sapiens. Zoology 2008, 111, 231–241.

20. Shiode, D.; Okamoto, J.; Shiozawa, M.; Uchida, K.; Miyamoto, Y.; Hu, F.; Tokai, T. Differences in the Behavioral Characteristics between Green and Loggerhead Turtles in a Setnet Bycatch Simulation. Fish. Res. 2021, 242, 106036.

21. Graves, A.; Fernández, S.; Gomez, F.; Schmidhuber, J. Connectionist Temporal Classification: Labelling Unsegmented Sequence Data with Recurrent Neural Networks. In Proceedings of the 23rd International Conference on Machine Learning—ICML ’06, Pittsburgh, PA, USA, 25–29 June 2006; ACM Press: Pittsburgh, PA, USA, 2006; pp. 369–376.

22. Ordóñez, F.; Roggen, D. Deep Convolutional and LSTM Recurrent Neural Networks for Multimodal Wearable Activity Recognition. Sensors 2016, 16, 115.

23. Tuncer, E.; Doğru Bolat, E. Classification of Epileptic Seizures from Electroencephalogram (EEG) Data Using Bidirectional Short-Term Memory (Bi-LSTM) Network Architecture. Biomed. Signal Process. Control 2022, 73, 103462.

24. Cui, Z.; Ke, R.; Pu, Z.; Wang, Y. Stacked Bidirectional and Unidirectional LSTM Recurrent Neural Network for Forecasting Network-Wide Traffic State with Missing Values. Transp. Res. Part C Emerg. Technol. 2020, 118, 102674.

25. Feng, K.; Fan, Z. A Novel Bidirectional LSTM Network Based on Scale Factor for Atrial Fibrillation Signals Classification. Biomed. Signal Process. Control 2022, 76, 103663.

26. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: Las Vegas, NV, USA, 2016; pp. 770–778.

27. Nair, V.; Hinton, G.E. Rectified linear units improve restricted boltzmann machines. In Proceedings of the 27th International Conference on International Conference on Machine Learning (ICML’10), Haifa, Israel, 21–24 June 2010; Omnipress: Madison, WI, USA; pp. 807–814.

28. Zhao, Y.; Yang, R.; Chevalier, G.; Xu, X.; Zhang, Z. Deep Residual Bidir-LSTM for Human Activity Recognition Using Wearable Sensors. Math. Probl. Eng. 2018, 2018, 7316954.

29. Li, X.; Zhang, J.; Xue, Y.; Qiu, L. Classification of Hops Image Based on ResNet-ConvLSTM and Research of Intelligent Liquor Picking System. Measurement 2022, 194, 110955.

30. Cernek, P.; Bollig, N.; Anklam, K.; Döpfer, D. Hot Topic: Detecting Digital Dermatitis with Computer Vision. J. Dairy Sci. 2020, 103, 9110–9115.

31. Williams, L.R.; Moore, S.T.; Bishop-Hurley, G.J.; Swain, D.L. A Sensor-Based Solution to Monitor Grazing Cattle Drinking Behaviour and Water Intake. Comput. Electron. Agric. 2020, 168, 105141.

32. Rahman, A.; Smith, D.V.; Little, B.; Ingham, A.B.; Greenwood, P.L.; Bishop-Hurley, G.J. Cattle Behaviour Classification from Collar, Halter, and Ear Tag Sensors. Inf. Process. Agric. 2018, 5, 124–133.

33. Andriamandroso, A.L.H.; Lebeau, F.; Beckers, Y.; Froidmont, E.; Dufrasne, I.; Heinesch, B.; Dumortier, P.; Blanchy, G.; Blaise, Y.; Bindelle, J. Development of an Open-Source Algorithm Based on Inertial Measurement Units (IMU) of a Smartphone to Detect Cattle Grass Intake and Ruminating Behaviors. Comput. Electron. Agric. 2017, 139, 126–137.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).