Optimization of Open-Access Optical and Radar Satellite Data in Google Earth Engine for Oil Palm Mapping in the Muda River Basin, Malaysia

Abstract

1. Introduction

2. Materials and Methods

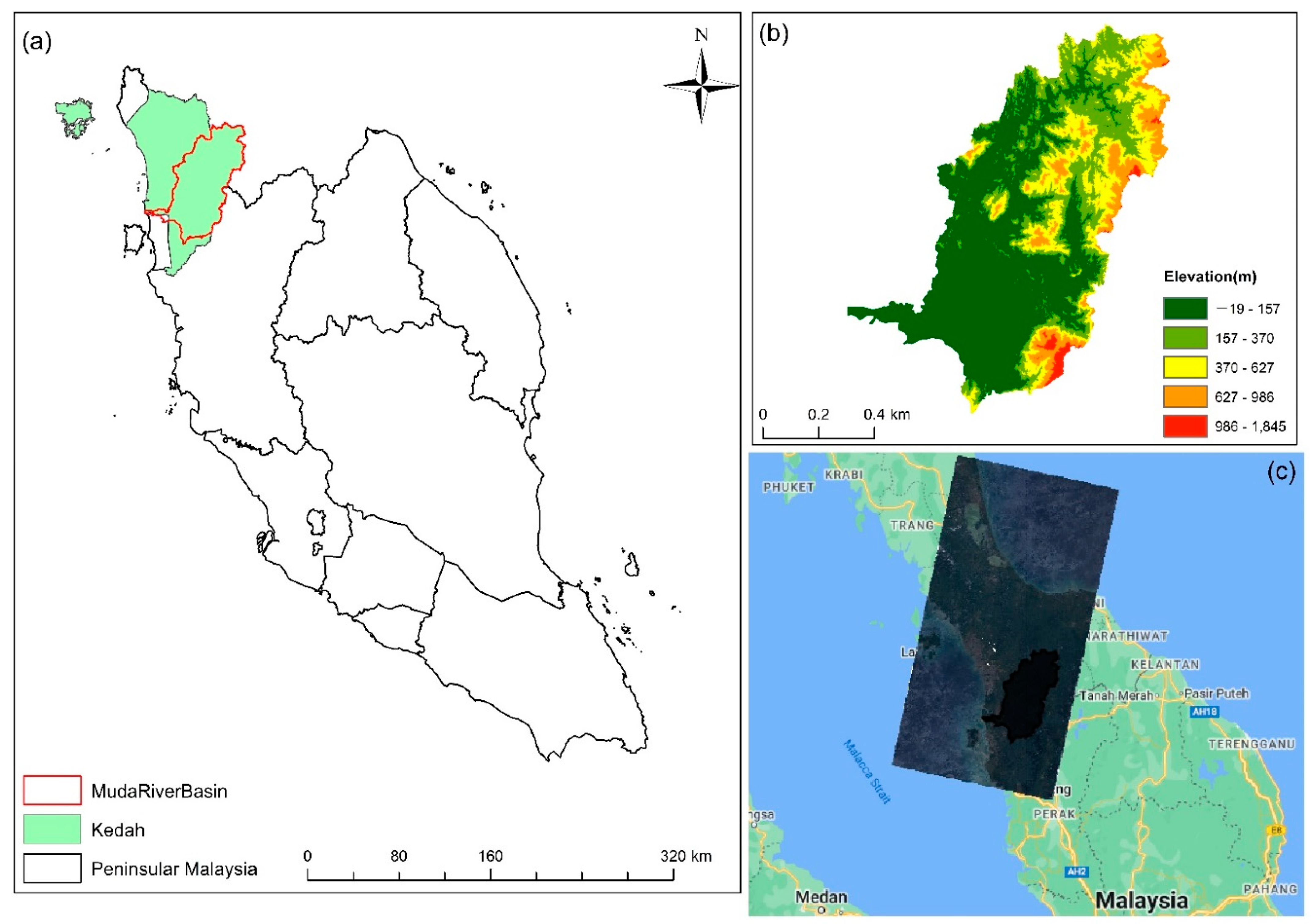

2.1. Study Area

2.2. Satellite Data

2.3. Preprocessing of Data

2.3.1. Preprocessing of Landsat 8 and Sentinel-2

2.3.2. Preprocessing of PALSAR-2 and Sentinel-1

2.4. Spectral and SAR Indices

2.5. Training and Validation Sample Data

2.6. Methods

2.6.1. Land Cover Land Use Mapping

2.6.2. Image Composition Creation

2.6.3. Oil Palm Area Change

3. Results

3.1. Accuracy Assessment

3.2. Oil Palm Area Changes

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- FAO. Food and Agriculture Organization of the UN. Available online: https://www.fao.org/faostat/en/#data (accessed on 1 May 2022).

- MPOB. Malaysian Palm Oil Board (MPOB). Available online: https://bepi.mpob.gov.my/index.php/en/ (accessed on 1 May 2022).

- Danylo, O.; Pirker, J.; Lemoine, G.; Ceccherini, G.; See, L.; McCallum, I.; Hadi; Kraxner, F.; Achard, F.; Fritz, S. A map of the extent and year of detection of oil palm plantations in Indonesia, Malaysia and Thailand. Sci. Data 2021, 8, 96.

- Kang, C.S.; Kanniah, K.D. Land use and land cover change and its impact on river morphology in Johor River Basin, Malaysia. J. Hydrol. Reg. Stud. 2022, 41, 101072.

- Tan, M.L.; Tew, Y.L.; Chun, K.P.; Samat, N.; Shaharudin, S.M.; Mahamud, M.A.; Tangang, F.T. Improvement of the ESA CCI Land cover maps for water balance analysis in tropical regions: A case study in the Muda River Basin, Malaysia. J. Hydrol. Reg. Stud. 2021, 36, 100837.

- Xu, Y.; Yu, L.; Li, W.; Ciais, P.; Cheng, Y.; Gong, P. Annual oil palm plantation maps in Malaysia and Indonesia from 2001 to 2016. Earth Syst. Sci. Data 2020, 12, 847–867.

- Sarzynski, T.; Giam, X.; Carrasco, L.; Lee, J.S.H. Combining Radar and Optical Imagery to Map Oil Palm Plantations in Sumatra, Indonesia, Using the Google Earth Engine. Remote Sens. 2020, 12, 1220.

- Poortinga, A.; Tenneson, K.; Shapiro, A.; Nquyen, Q.; San Aung, K.; Chishtie, F.; Saah, D. Mapping Plantations in Myanmar by Fusing Landsat-8, Sentinel-2 and Sentinel-1 Data along with Systematic Error Quantification. Remote Sens. 2019, 11, 831.

- Mohd Najib, N.E.; Kanniah, K.D.; Cracknell, A.P.; Yu, L. Synergy of Active and Passive Remote Sensing Data for Effective Mapping of Oil Palm Plantation in Malaysia. Forests 2020, 11, 858.

- Oon, A.; Ngo, K.D.; Azhar, R.; Ashton-Butt, A.; Lechner, A.M.; Azhar, B. Assessment of ALOS-2 PALSAR-2 L-band and Sentinel-1 C-band SAR backscatter for discriminating between large-scale oil palm plantations and smallholdings on tropical peatlands. Remote Sens. Appl. Soc. Environ. 2019, 13, 183–190.

- Cheng, Y.; Yu, L.; Cracknell, A.P.; Gong, P. Oil palm mapping using Landsat and PALSAR: A case study in Malaysia. Int. J. Remote Sens. 2016, 37, 5431–5442.

- Gutiérrez-Vélez, V.H.; DeFries, R. Annual multi-resolution detection of land cover conversion to oil palm in the Peruvian Amazon. Remote Sens. Environ. 2013, 129, 154–167.

- Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 2017, 202, 18–27.

- Tamiminia, H.; Salehi, B.; Mahdianpari, M.; Quackenbush, L.; Adeli, S.; Brisco, B. Google Earth Engine for geo-big data applications: A meta-analysis and systematic review. ISPRS J. Photogramm. Remote Sens. 2020, 164, 152–170.

- Chen, B.; Xiao, X.; Li, X.; Pan, L.; Doughty, R.; Ma, J.; Dong, J.; Qin, Y.; Zhao, B.; Wu, Z. A mangrove forest map of China in 2015: Analysis of time series Landsat 7/8 and Sentinel-1A imagery in Google Earth Engine cloud computing platform. ISPRS J. Photogramm. Remote Sens. 2017, 131, 104–120.

- Rembold, F.; Meroni, M.; Urbano, F.; Csak, G.; Kerdiles, H.; Perez-Hoyos, A.; Lemoine, G.; Leo, O.; Negre, T. ASAP: A new global early warning system to detect anomaly hot spots of agricultural production for food security analysis. Agric. Syst. 2019, 168, 247–257.

- Pickens, A.H.; Hansen, M.C.; Hancher, M.; Stehman, S.V.; Tyukavina, A.; Potapov, P.; Marroquin, B.; Sherani, Z. Mapping and sampling to characterize global inland water dynamics from 1999 to 2018 with full Landsat time-series. Remote Sens. Environ. 2020, 243, 111792.

- Coltin, B.; McMichael, S.; Smith, T.; Fong, T. Automatic boosted flood mapping from satellite data. Int. J. Remote Sens. 2016, 37, 993–1015.

- Tew, Y.L.; Tan, M.L.; Juneng, L.; Chun, K.P.; Hassan, M.H.b.; Osman, S.b.; Samat, N.; Chang, C.K.; Kabir, M.H. Rapid Extreme Tropical Precipitation and Flood Inundation Mapping Framework (RETRACE): Initial Testing for the 2021–2022 Malaysia Flood. ISPRS Int. J. Geo-Inf. 2022, 11, 378.

- Dong, J.; Xiao, X.; Menarguez, M.A.; Zhang, G.; Qin, Y.; Thau, D.; Biradar, C.; Moore, B., 3rd. Mapping paddy rice planting area in northeastern Asia with Landsat 8 images, phenology-based algorithm and Google Earth Engine. Remote Sens. Environ. 2016, 185, 142–154.

- Tew, Y.L.; Tan, M.L.; Samat, N.; Chan, N.W.; Mahamud, M.A.; Sabjan, M.A.; Lee, L.K.; See, K.F.; Wee, S.T. Comparison of Three Water Indices for Tropical Aquaculture Ponds Extraction using Google Earth Engine. Sains Malays. 2022, 51, 369–378.

- Amani, M.; Ghorbanian, A.; Ahmadi, S.A.; Kakooei, M.; Moghimi, A.; Mirmazloumi, S.M.; Moghaddam, S.H.A.; Mahdavi, S.; Ghahremanloo, M.; Parsian, S.; et al. Google Earth Engine Cloud Computing Platform for Remote Sensing Big Data Applications: A Comprehensive Review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 5326–5350.

- Lee, J.S.H.; Wich, S.; Widayati, A.; Koh, L.P. Detecting industrial oil palm plantations on Landsat images with Google Earth Engine. Remote Sens. Appl. Soc. Environ. 2016, 4, 219–224.

- Shaharum, N.S.N.; Shafri, H.Z.M.; Ghani, W.A.W.A.K.; Samsatli, S.; Al-Habshi, M.M.A.; Yusuf, B. Oil palm mapping over Peninsular Malaysia using Google Earth Engine and machine learning algorithms. Remote Sens. Appl. Soc. Environ. 2020, 17, 100287.

- Puttinaovarat, S.; Horkaew, P. Oil-Palm Plantation Identification from Satellite Images Using Google Earth Engine. Int. J. Adv. Sci. Eng. Inf. Technol. 2018, 8, 720–726.

- De Alban, J.; Connette, G.; Oswald, P.; Webb, E. Combined Landsat and L-Band SAR Data Improves Land Cover Classification and Change Detection in Dynamic Tropical Landscapes. Remote Sens. 2018, 10, 306.

- Torbick, N.; Ledoux, L.; Salas, W.; Zhao, M. Regional Mapping of Plantation Extent Using Multisensor Imagery. Remote Sens. 2016, 8, 236.

- Roy, D.P.; Wulder, M.A.; Loveland, T.R.; Woodcock, C.E.; Allen, R.G.; Anderson, M.C.; Helder, D.; Irons, J.R.; Johnson, D.M.; Kennedy, R.; et al. Landsat-8: Science and product vision for terrestrial global change research. Remote Sens. Environ. 2014, 145, 154–172.

- van der Meer, F.D.; van der Werff, H.M.A.; van Ruitenbeek, F.J.A. Potential of ESA’s Sentinel-2 for geological applications. Remote Sens. Environ. 2014, 148, 124–133.

- Shimada, M.; Itoh, T.; Motooka, T.; Watanabe, M.; Shiraishi, T.; Thapa, R.; Lucas, R. New global forest/non-forest maps from ALOS PALSAR data (2007–2010). Remote Sens. Environ. 2014, 155, 13–31.

- Farr, T.G.; Rosen, P.A.; Caro, E.; Crippen, R.; Duren, R.; Hensley, S.; Kobrick, M.; Paller, M.; Rodriguez, E.; Roth, L.; et al. The Shuttle Radar Topography Mission. Rev. Geophys. 2007, 45, RG2004.

- Miettinen, J.; Shi, C.; Liew, S.C. Towards automated 10–30 m resolution land cover mapping in insular South-East Asia. Geocarto Int. 2017, 34, 443–457.

- Rosenqvist, A.; Shimada, M.; Ito, N.; Watanabe, M. ALOS PALSAR: A Pathfinder Mission for Global-Scale Monitoring of the Environment. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3307–3316.

- Carrasco, L.; O’Neil, A.; Morton, R.; Rowland, C. Evaluating Combinations of Temporally Aggregated Sentinel-1, Sentinel-2 and Landsat 8 for Land Cover Mapping with Google Earth Engine. Remote Sens. 2019, 11, 288.

- Nomura, K.; Mitchard, E. More Than Meets the Eye: Using Sentinel-2 to Map Small Plantations in Complex Forest Landscapes. Remote Sens. 2018, 10, 1693.

- Li, W.; Fu, D.; Su, F.; Xiao, Y. Spatial–Temporal Evolution and Analysis of the Driving Force of Oil Palm Patterns in Malaysia from 2000 to 2018. ISPRS Int. J. Geo-Inf. 2020, 9, 280.

- Kou, W.; Xiao, X.; Dong, J.; Gan, S.; Zhai, D.; Zhang, G.; Qin, Y.; Li, L. Mapping Deciduous Rubber Plantation Areas and Stand Ages with PALSAR and Landsat Images. Remote Sens. 2015, 7, 1048–1073.

- Chen, B.; Li, X.; Xiao, X.; Zhao, B.; Dong, J.; Kou, W.; Qin, Y.; Yang, C.; Wu, Z.; Sun, R.; et al. Mapping tropical forests and deciduous rubber plantations in Hainan Island, China by integrating PALSAR 25-m and multi-temporal Landsat images. Int. J. Appl. Earth Obs. Geoinf. 2016, 50, 117–130.

- Chong, K.L.; Kanniah, K.D.; Pohl, C.; Tan, K.P. A review of remote sensing applications for oil palm studies. Geo-Spat. Inf. Sci. 2017, 20, 184–200.

- Rakwatin, P.; Longépé, N.; Isoguchi, O.; Shimada, M.; Uryu, Y.; Takeuchi, W. Using multiscale texture information from ALOS PALSAR to map tropical forest. Int. J. Remote Sens. 2012, 33, 7727–7746.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, 6, 610–621.

- Dong, X.; Quegan, S.; Yumiko, U.; Hu, C.; Zeng, T. Feasibility Study of C- and L-band SAR Time Series Data in Tracking Indonesian Plantation and Natural Forest Cover Changes. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 3692–3699.

- Dong, J.; Xiao, X.; Sheldon, S.; Biradar, C.; Xie, G. Mapping tropical forests and rubber plantations in complex landscapes by integrating PALSAR and MODIS imagery. ISPRS J. Photogramm. Remote Sens. 2012, 74, 20–33.

- Miettinen, J.; Liew, S.C. Separability of insular Southeast Asian woody plantation species in the 50 m resolution ALOS PALSAR mosaic product. Remote Sens. Lett. 2010, 2, 299–307.

- Wulder, M.A.; Franklin, S.E.; White, J.C.; Linke, J.; Magnussen, S. An accuracy assessment framework for large-area land cover classification products derived from medium-resolution satellite data. Int. J. Remote Sens. 2007, 27, 663–683.

- Praticò, S.; Solano, F.; Di Fazio, S.; Modica, G. Machine Learning Classification of Mediterranean Forest Habitats in Google Earth Engine Based on Seasonal Sentinel-2 Time-Series and Input Image Composition Optimisation. Remote Sens. 2021, 13, 586.

- de Sousa, C.; Fatoyinbo, L.; Neigh, C.; Boucka, F.; Angoue, V.; Larsen, T. Cloud-computing and machine learning in support of country-level land cover and ecosystem extent mapping in Liberia and Gabon. PLoS ONE 2020, 15, e0227438.

- Gyamfi-Ampadu, E.; Gebreslasie, M.; Mendoza-Ponce, A. Mapping natural forest cover using satellite imagery of Nkandla forest reserve, KwaZulu-Natal, South Africa. Remote Sens. Appl. Soc. Environ. 2020, 18, 100302.

- Jin, Y.; Liu, X.; Chen, Y.; Liang, X. Land-cover mapping using Random Forest classification and incorporating NDVI time-series and texture: A case study of central Shandong. Int. J. Remote Sens. 2018, 39, 8703–8723.

- Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.

- Johnson, B.A.; Iizuka, K. Integrating OpenStreetMap crowdsourced data and Landsat time-series imagery for rapid land use/land cover (LULC) mapping: Case study of the Laguna de Bay area of the Philippines. Appl. Geogr. 2016, 67, 140–149.

- Phan, T.N.; Kuch, V.; Lehnert, L.W. Land Cover Classification using Google Earth Engine and Random Forest Classifier—The Role of Image Composition. Remote Sens. 2020, 12, 2411.

- Forkuor, G.; Dimobe, K.; Serme, I.; Tondoh, J.E. Landsat-8 vs. Sentinel-2: Examining the added value of sentinel-2’s red-edge bands to land-use and land-cover mapping in Burkina Faso. GIScience Remote Sens. 2017, 55, 331–354.

- Muhadi, N.A.; Mohd Kassim, M.S.; Abdullah, A.F. Improvement of Digital Elevation Model (DEM) using data fusion technique for oil palm replanting phase. Int. J. Image Data Fusion 2018, 10, 232–243.

- Li, G.; Lu, D.; Moran, E.; Dutra, L.; Batistella, M. A comparative analysis of ALOS PALSAR L-band and RADARSAT-2 C-band data for land-cover classification in a tropical moist region. ISPRS J. Photogramm. Remote Sens. 2012, 70, 26–38.

- Cheng, Y.; Yu, L.; Zhao, Y.; Xu, Y.; Hackman, K.; Cracknell, A.P.; Gong, P. Towards a global oil palm sample database: Design and implications. Int. J. Remote Sens. 2017, 38, 4022–4032.

- Cheng, Y.; Yu, L.; Xu, Y.; Liu, X.; Lu, H.; Cracknell, A.P.; Kanniah, K.; Gong, P. Towards global oil palm plantation mapping using remote-sensing data. Int. J. Remote Sens. 2018, 39, 5891–5906.

- Nurmasari, Y.; Wijayanto, A.W. Oil Palm Plantation Detection in Indonesia Using Sentinel-2 and Landsat-8 Optical Satellite Imagery (Case Study: Rokan Hulu Regency, Riau Province). Int. J. Remote Sens. Earth Sci. (IJReSES) 2021, 18, 1.

- Ju, Z.; Leong Tan, M.; Samat, N.; Kiat Chang, C. Comparison of Landsat 8, Sentinel-2 and spectral indices combinations for Google Earth Engine-based land use mapping in the Johor River Basin, Malaysia. Malays. J. Soc. Space 2021, 17, 30–46.

- Descals, A.; Szantoi, Z.; Meijaard, E.; Sutikno, H.; Rindanata, G.; Wich, S. Oil Palm (Elaeis guineensis) Mapping with Details: Smallholder versus Industrial Plantations and their Extent in Riau, Sumatra. Remote Sens. 2019, 11, 2590.

- Shafri, H.Z.; Anuar, M.I.; Seman, I.A.; Noor, N.M. Spectral discrimination of healthy and Ganoderma-infected oil palms from hyperspectral data. Int. J. Remote Sens. 2011, 32, 7111–7129.

- Teng, K.C.; Koay, J.Y.; Tey, S.H.; Lim, K.S.; Ewe, H.T.; Chuah, H.T. A dense medium microwave backscattering model for the remote sensing of oil palm. IEEE Trans. Geosci. Remote Sens. 2014, 53, 3250–3259.

- Li, L.; Dong, J.; Njeudeng Tenku, S.; Xiao, X. Mapping Oil Palm Plantations in Cameroon Using PALSAR 50-m Orthorectified Mosaic Images. Remote Sens. 2015, 7, 1206–1224.

- Olofsson, P.; Foody, G.M.; Herold, M.; Stehman, S.V.; Woodcock, C.E.; Wulder, M.A. Good practices for estimating area and assessing accuracy of land change. Remote Sens. Environ. 2014, 148, 42–57.

- Bai, Y.; Feng, M.; Jiang, H.; Wang, J.; Liu, Y. Validation of Land Cover Maps in China Using a Sampling-Based Labeling Approach. Remote Sens. 2015, 7, 10589–10606.

| Data | Sensor | Bands | Pixel Size (m) | Time of Images (Year) |
|---|---|---|---|---|
| Optical image | Landsat 8 | Blue, green, red, near-infrared (NIR), short-wave infrared 1 (SWIR1), short-wave infrared 2 (SWIR2) | 30 | 2015–2020 |
| | Sentinel-2 | Blue, green, red, near-infrared (NIR), short-wave infrared 1 (SWIR1), short-wave infrared 2 (SWIR2), Red Edge 1, Red Edge 2, Red Edge 3, Red Edge 4 | 10, 20 | 2015–2016, 2020 |
| SAR image | Global PALSAR-2/PALSAR yearly mosaic | HH, HV | 25 | 2015–2020 |
| | Sentinel-1 GRD | VV, VH | 10 | 2015–2020 |
| Topographic data | NASA SRTM digital elevation | Elevation | 30 | 2000 |

| Type | Index | Formula |
|---|---|---|
| Spectral indices | NDVI | NDVI = (NIR − RED)/(NIR + RED) |
| | NDWI | NDWI = (NIR − SWIR1)/(NIR + SWIR1) |
| | EVI | EVI = 2.5 × (NIR − RED)/(NIR + 6.0 × RED − 7.5 × BLUE + 1.0) |
| SAR indices | AVE | (HH + HV)/2; (VV + VH)/2 |
| | DIF | HH − HV; VV − VH |
| | RAT1 | HH/HV; VH/VV |
| | RAT2 | HV/HH; VV/VH |
| | ASM | |
| | AVG | |
| | CON | |
| | COR | |
| | DIS | |
| | ENT | |
| | IDM | |
| | VAR | |
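The index definitions above can be made concrete with a short plain-Python sketch. It is an illustration only, not the authors' Earth Engine code, and it rests on several assumptions not stated in this section: optical inputs are surface reflectance scaled to 0–1 (needed for EVI's +1.0 term), SAR inputs are linear backscatter (the unit is not given), NDWI uses SWIR1 in both numerator and denominator (matching the tabulated denominator), and AVG is taken as the GLCM mean (it may instead denote a sum average). The helpers `glcm` and `texture_measures` are hypothetical names; they build a symmetric, normalized grey-level co-occurrence matrix for one pixel offset and derive the eight Haralick-style measures listed in the table.

```python
import math

# --- Spectral indices; inputs assumed to be surface reflectance in 0-1 ---
def ndvi(nir, red):
    return (nir - red) / (nir + red)

def ndwi(nir, swir1):
    # SWIR1 in both terms (assumption; the tabulated numerator reads "SWIR")
    return (nir - swir1) / (nir + swir1)

def evi(nir, red, blue):
    return 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0)

# --- SAR indices; linear backscatter assumed ---
def palsar_indices(hh, hv):
    return {"AVE": (hh + hv) / 2, "DIF": hh - hv, "RAT1": hh / hv, "RAT2": hv / hh}

def sentinel1_indices(vv, vh):
    # RAT1/RAT2 follow the order tabulated for Sentinel-1 (VH/VV, VV/VH)
    return {"AVE": (vv + vh) / 2, "DIF": vv - vh, "RAT1": vh / vv, "RAT2": vv / vh}

# --- GLCM texture measures (hypothetical helpers) ---
def glcm(img, levels, dx=1, dy=0):
    """Symmetric, normalized GLCM for offset (dy, dx); pixel values must be < levels."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(img), len(img[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = img[r][c], img[r2][c2]
                counts[i][j] += 1
                counts[j][i] += 1  # count both directions -> symmetric matrix
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]

def texture_measures(p):
    cells = [(i, j, v) for i, row in enumerate(p) for j, v in enumerate(row)]
    mean = sum(i * v for i, _, v in cells)  # row and column means coincide (symmetric GLCM)
    var = sum((i - mean) ** 2 * v for i, _, v in cells)
    cov = sum((i - mean) * (j - mean) * v for i, j, v in cells)
    return {
        "ASM": sum(v * v for _, _, v in cells),
        "AVG": mean,  # assumption: GLCM mean
        "CON": sum((i - j) ** 2 * v for i, j, v in cells),
        "COR": cov / var if var > 0 else 0.0,  # undefined for a constant patch; 0.0 here by convention
        "DIS": sum(abs(i - j) * v for i, j, v in cells),
        "ENT": -sum(v * math.log(v) for _, _, v in cells if v > 0),
        "IDM": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "VAR": var,
    }

if __name__ == "__main__":
    print(round(ndvi(0.5, 0.1), 3))
    print(texture_measures(glcm([[0, 1], [1, 0]], levels=2)))
```

In Earth Engine the same texture measures would normally be computed per band over a moving window (for example with `ee.Image.glcmTexture` on integer-scaled backscatter); the pure-Python version only makes the definitions explicit on a toy 2 × 2 patch.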

| Year | Symbol | Name | Description | Bands |
|---|---|---|---|---|
| 2015, 2020 | C1 | PALSAR-2 | SAR data, SAR indices, and topographic data | 23 |
| | C2 | Sentinel-1 | SAR data, SAR indices, and topographic data | 23 |
| | C3 | Sentinel-2 | Optical data, spectral indices, and topographic data | 14 |
| | C4 | Landsat 8 | Optical data, spectral indices, and topographic data | 10 |
| | C5 | PALSAR-2 + Landsat 8 | Optical and SAR data, spectral and SAR indices, and topographic data | 32 |
| | C6 | PALSAR-2 + Sentinel-2 | Optical and SAR data, spectral and SAR indices, and topographic data | 36 |
| | C7 | Sentinel-1 + Sentinel-2 | Optical and SAR data, spectral and SAR indices, and topographic data | 36 |
| | C8 | Sentinel-1 + Landsat 8 | Optical and SAR data, spectral and SAR indices, and topographic data | 32 |
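The band totals in the composition table can be reproduced with simple bookkeeping. The sketch below is a consistency check, not the authors' code, and it assumes (this section does not say so explicitly) that each SAR sensor contributes its 2 polarizations, the 4 ratio/difference indices, and the 8 GLCM texture measures computed for both polarizations, and that the single SRTM elevation band is counted once per composition. The names `SAR`, `SENTINEL2`, `LANDSAT8`, and `band_count` are hypothetical.

```python
# Feature-group sizes read off the sensor and index tables; the texture count
# assumes the 8 GLCM measures are computed for each of the 2 polarizations.
SAR = {"backscatter": 2, "sar_indices": 4, "glcm_textures": 8 * 2}
SENTINEL2 = {"bands": 10, "spectral_indices": 3}
LANDSAT8 = {"bands": 6, "spectral_indices": 3}
ELEVATION = 1  # one SRTM band, shared by every composition

COMPOSITIONS = {
    "C1": [SAR],              # PALSAR-2
    "C2": [SAR],              # Sentinel-1
    "C3": [SENTINEL2],
    "C4": [LANDSAT8],
    "C5": [SAR, LANDSAT8],    # PALSAR-2 + Landsat 8
    "C6": [SAR, SENTINEL2],   # PALSAR-2 + Sentinel-2
    "C7": [SAR, SENTINEL2],   # Sentinel-1 + Sentinel-2
    "C8": [SAR, LANDSAT8],    # Sentinel-1 + Landsat 8
}

def band_count(groups):
    """Total number of input layers stacked for one composition."""
    return sum(sum(g.values()) for g in groups) + ELEVATION

counts = {name: band_count(groups) for name, groups in COMPOSITIONS.items()}
print(counts)
```

Under these assumptions every total matches the table (23, 23, 14, 10, 32, 36, 36, 32), which suggests that the elevation band is not duplicated when optical and SAR stacks are merged.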

| Class | Metric | C1 (SAR) | C2 (SAR) | C3 (Optical) | C4 (Optical) | C5 (SAR + Optical) | C6 (SAR + Optical) | C7 (SAR + Optical) | C8 (SAR + Optical) |
|---|---|---|---|---|---|---|---|---|---|
| FRSE | PA | 96% | 84% | 96% | 96% | 100% | 96% | 96% | 96% |
| | CA | 92% | 95% | 96% | 100% | 100% | 100% | 100% | 96% |
| URBN | PA | 70% | 65% | 100% | 91% | 91% | 100% | 100% | 95% |
| | CA | 57% | 83% | 96% | 88% | 100% | 96% | 96% | 96% |
| WATR | PA | 92% | 96% | 100% | 96% | 96% | 92% | 96% | 96% |
| | CA | 80% | 81% | 90% | 89% | 83% | 92% | 96% | 89% |
| OILP | PA | 46% | 88% | 92% | 96% | 96% | 96% | 96% | 92% |
| | CA | 67% | 74% | 96% | 93% | 100% | 96% | 96% | 92% |
| RICE | PA | 68% | 92% | 92% | 88% | 88% | 96% | 96% | 88% |
| | CA | 81% | 92% | 100% | 100% | 92% | 100% | 96% | 100% |
| RUBR | PA | 65% | 69% | 97% | 100% | 100% | 96% | 100% | 100% |
| | CA | 60% | 76% | 100% | 100% | 100% | 92% | 100% | 95% |
| Overall accuracy | | 73% | 83% | 96% | 95% | 95% | 96% | 97% | 95% |
| Kappa statistic | | 68% | 80% | 95% | 94% | 94% | 95% | 97% | 94% |

PA, producer's accuracy; CA, consumer's accuracy. FRSE, forest; URBN, urban; WATR, water; OILP, oil palm; RICE, rice; RUBR, rubber.
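The accuracy measures in this table follow standard confusion-matrix definitions. The sketch below shows how they can be derived, assuming rows of the matrix are mapped classes and columns are reference (validation) classes, and using Cohen's kappa. The 2 × 2 matrix is an invented toy example, not the study's validation data, and `accuracy_report` is a hypothetical helper name.

```python
def accuracy_report(matrix, labels):
    """matrix[i][j] = samples mapped as class i whose reference class is j (assumed layout).

    Returns producer's accuracy, consumer's (user's) accuracy, overall accuracy, and kappa.
    Every class is assumed to have at least one mapped and one reference sample.
    """
    k = len(matrix)
    n = sum(map(sum, matrix))
    row_tot = [sum(matrix[i]) for i in range(k)]                       # mapped totals
    col_tot = [sum(matrix[i][j] for i in range(k)) for j in range(k)]  # reference totals
    diag = [matrix[i][i] for i in range(k)]
    pa = {labels[i]: diag[i] / col_tot[i] for i in range(k)}  # 1 - omission error
    ca = {labels[i]: diag[i] / row_tot[i] for i in range(k)}  # 1 - commission error
    oa = sum(diag) / n
    pe = sum(row_tot[i] * col_tot[i] for i in range(k)) / n ** 2  # chance agreement
    kappa = (oa - pe) / (1 - pe)
    return pa, ca, oa, kappa

# Toy example (invented numbers): 100 validation points, two classes.
pa, ca, oa, kappa = accuracy_report([[45, 5], [10, 40]], ["OILP", "RUBR"])
print(pa, ca, round(oa, 2), round(kappa, 2))
```

For this toy matrix, OILP has a consumer's accuracy of 0.90 but a lower producer's accuracy (45/55), showing how the two measures separate commission from omission errors, as the PA and CA rows do for each class above.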

| Symbol | OILP Area, 2015 (km²) | OILP Area, 2020 (km²) | Data |
|---|---|---|---|
| C1 | 406.26 | 528.8 | SAR |
| C2 | 319.43 | 382.67 | SAR |
| C3 | 363.55 | 583.12 | Optical |
| C4 | 463.49 | 602.91 | Optical |
| C5 | 377.45 | 529.78 | Optical + SAR |
| C6 | 475.81 | 522.99 | Optical + SAR |
| C7 | 323.25 | 465.73 | Optical + SAR |
| C8 | 418.03 | 496.92 | Optical + SAR |
| Xu et al. [6] | 598 | | |
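The oil palm area changes implied by this table can be reproduced with simple arithmetic. The sketch below is a minimal illustration, not part of the original workflow: it copies the tabulated 2015 and 2020 areas and computes the absolute (km²) and relative (%) change per composition. The dictionary `AREA_KM2` and the function `area_change` are hypothetical names.

```python
# Oil palm (OILP) area in km² per composition: (2015, 2020), copied from the table.
AREA_KM2 = {
    "C1": (406.26, 528.8),
    "C2": (319.43, 382.67),
    "C3": (363.55, 583.12),
    "C4": (463.49, 602.91),
    "C5": (377.45, 529.78),
    "C6": (475.81, 522.99),
    "C7": (323.25, 465.73),
    "C8": (418.03, 496.92),
}

def area_change(a2015, a2020):
    """Absolute change (km²) and relative change (% of the 2015 area)."""
    delta = a2020 - a2015
    return delta, 100.0 * delta / a2015

for name, (a2015, a2020) in AREA_KM2.items():
    delta, pct = area_change(a2015, a2020)
    print(f"{name}: {delta:+.2f} km2 ({pct:+.1f}%)")
```

For example, C7, the composition with the highest overall accuracy in the accuracy table, implies an increase of about 142.5 km² (roughly 44%) between 2015 and 2020, whereas C6 implies only about 47 km² (under 10%), so the choice of input data affects the estimated expansion as well as the mapped extent.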

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zeng, J.; Tan, M.L.; Tew, Y.L.; Zhang, F.; Wang, T.; Samat, N.; Tangang, F.; Yusop, Z. Optimization of Open-Access Optical and Radar Satellite Data in Google Earth Engine for Oil Palm Mapping in the Muda River Basin, Malaysia. Agriculture 2022, 12, 1435. https://doi.org/10.3390/agriculture12091435
