Detection of Wheat Lodging by Binocular Cameras during Harvesting Operation

Abstract

1. Introduction

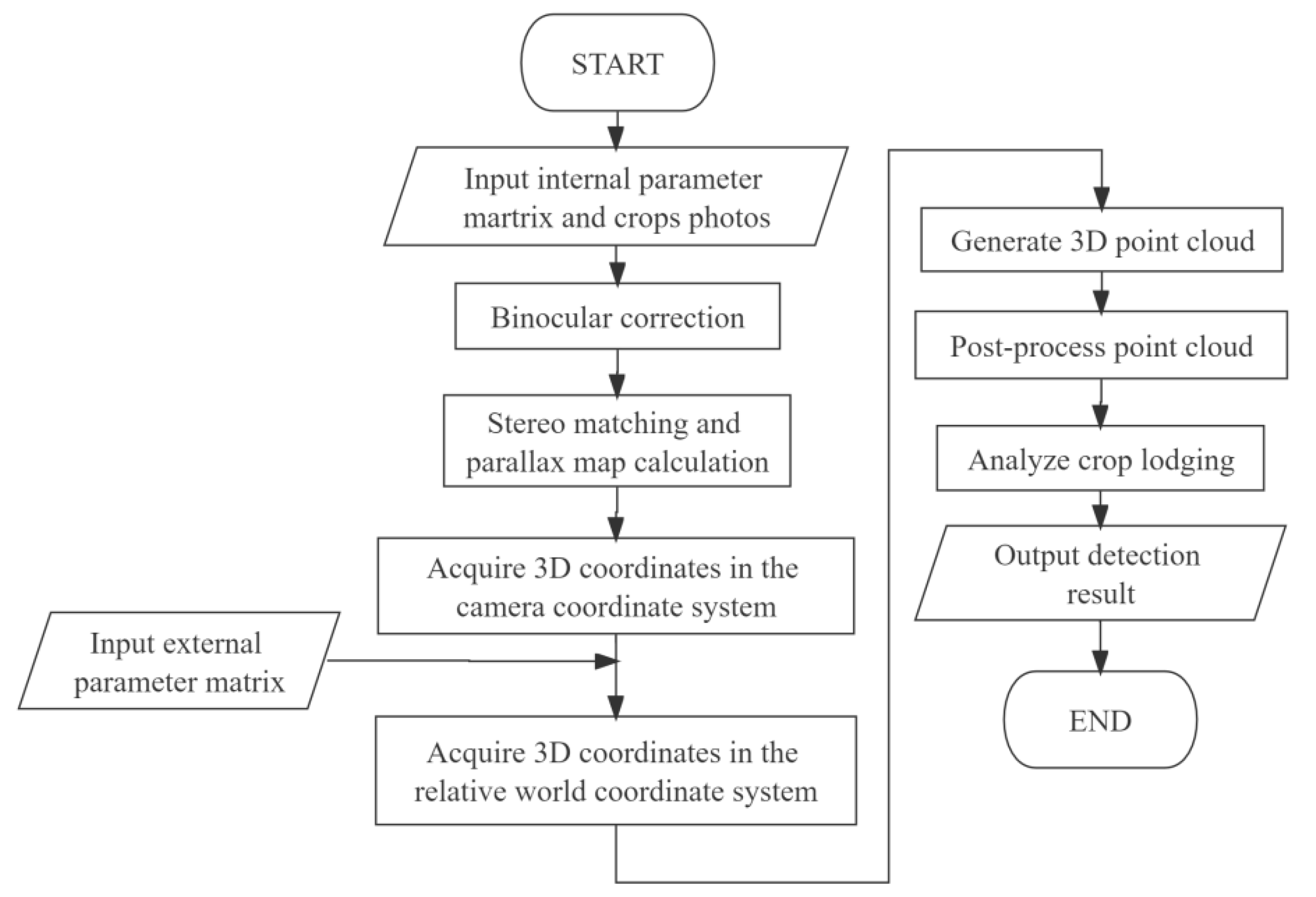

2. Materials and Methods

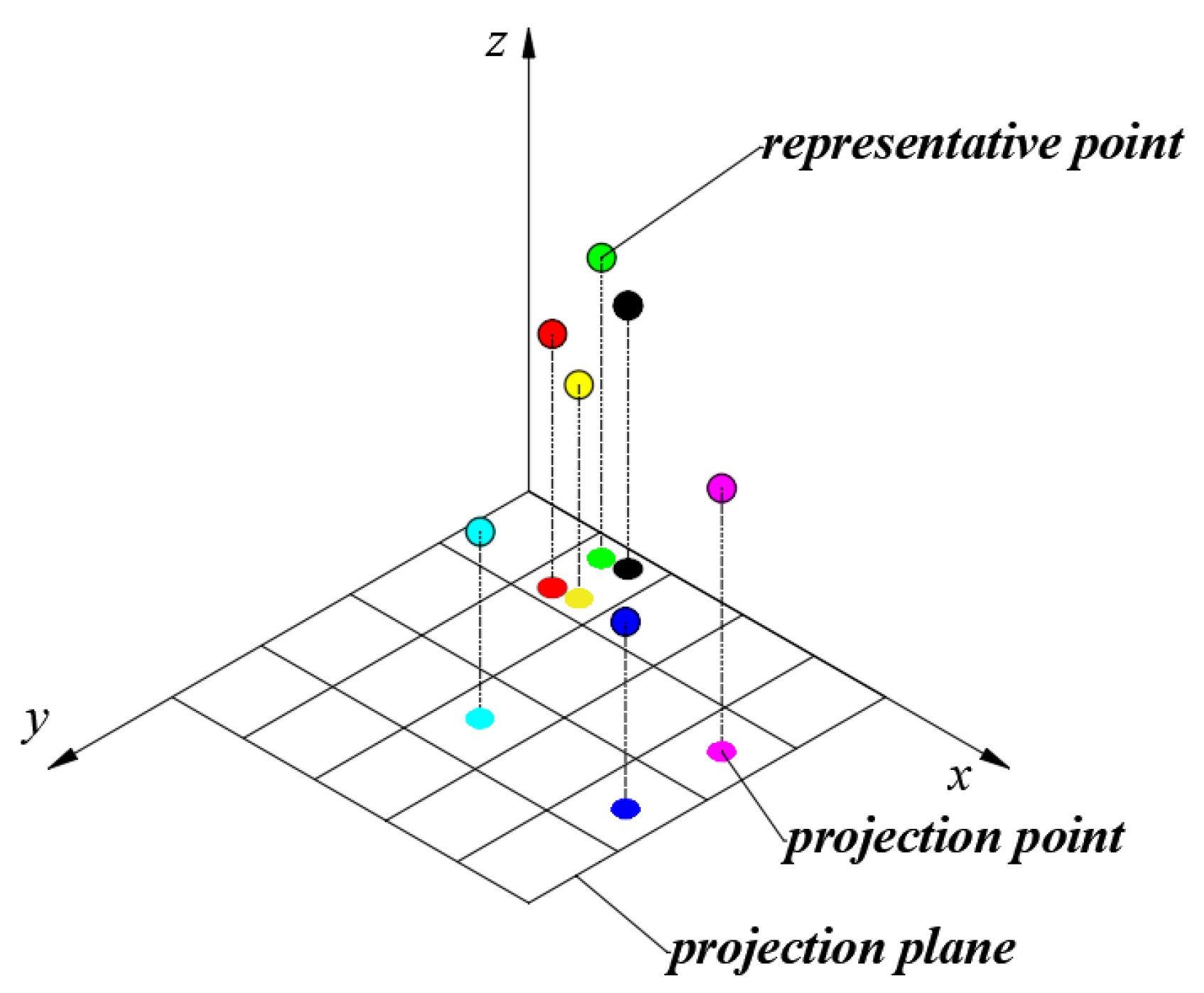

2.1. Visual Detection Method of Wheat Height
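A binocular camera recovers depth by triangulation. The block below is a minimal sketch of that idea, assuming a rectified stereo pair, for which the depth of a point is Z = f·B/d (f is the focal length in pixels, B the baseline between the two cameras, d the disparity in pixels). The downward-looking geometry, the function names `depth_from_disparity` and `crop_height_mm`, and all numeric values are hypothetical illustrations. They are not the calibration or the exact method used in this study.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_mm: float) -> float:
    """Depth Z (mm) of a point for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_mm / disparity_px


def crop_height_mm(disparity_px: float, focal_px: float, baseline_mm: float,
                   camera_height_mm: float) -> float:
    """Hypothetical geometry: optical axis vertical, camera mounted camera_height_mm
    above the ground, so canopy height = mounting height - depth to the canopy top."""
    return camera_height_mm - depth_from_disparity(disparity_px, focal_px, baseline_mm)


# Hypothetical calibration: f = 700 px, B = 120 mm, camera 2200 mm above the ground.
print(depth_from_disparity(60, 700, 120))   # 1400.0
print(crop_height_mm(60, 700, 120, 2200))   # 800.0
```

A larger disparity means a nearer point, so a taller (upright) canopy yields a larger disparity than a lodged one seen from the same mounting height.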

2.2. Discrimination Method of Wheat Lodging State

2.3. Wheat Lodging Image Processing Algorithm

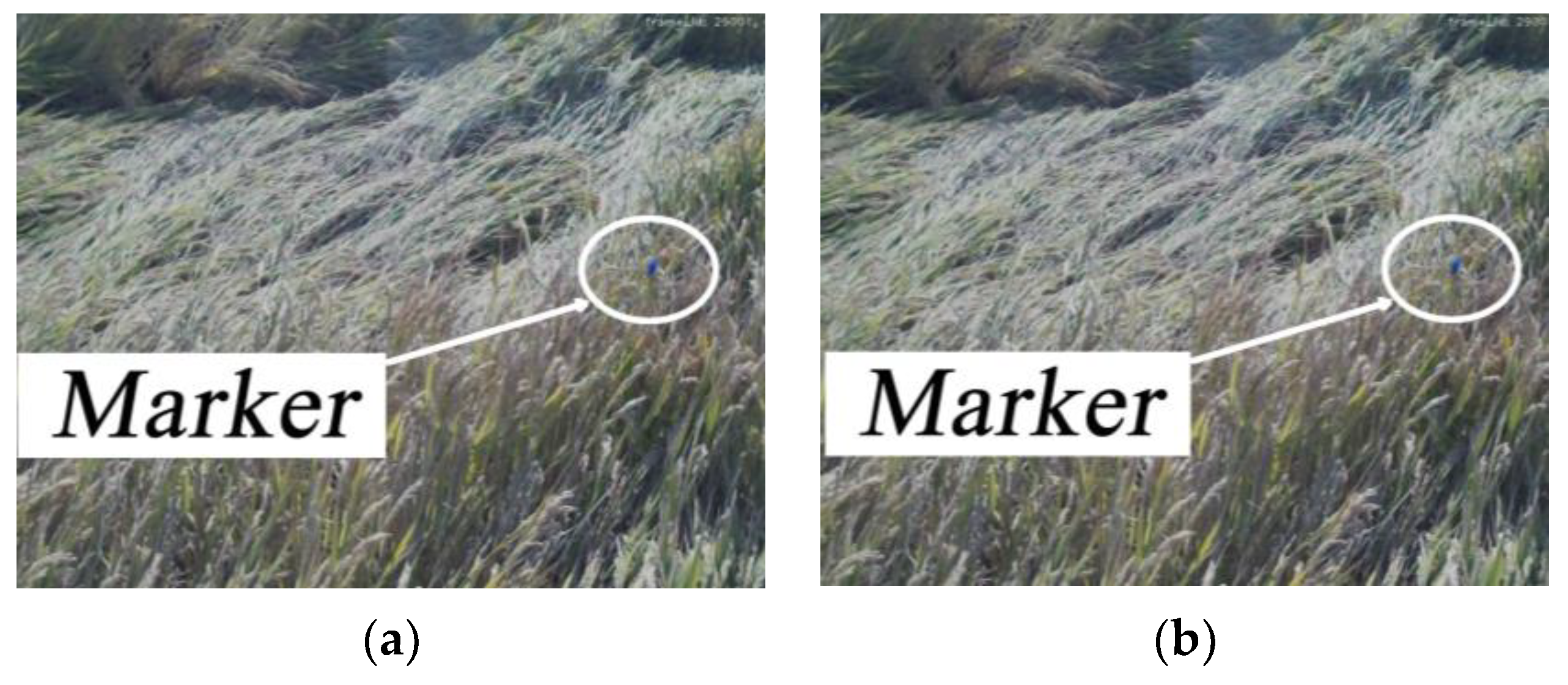

2.4. Field Experiment

3. Results and Discussion

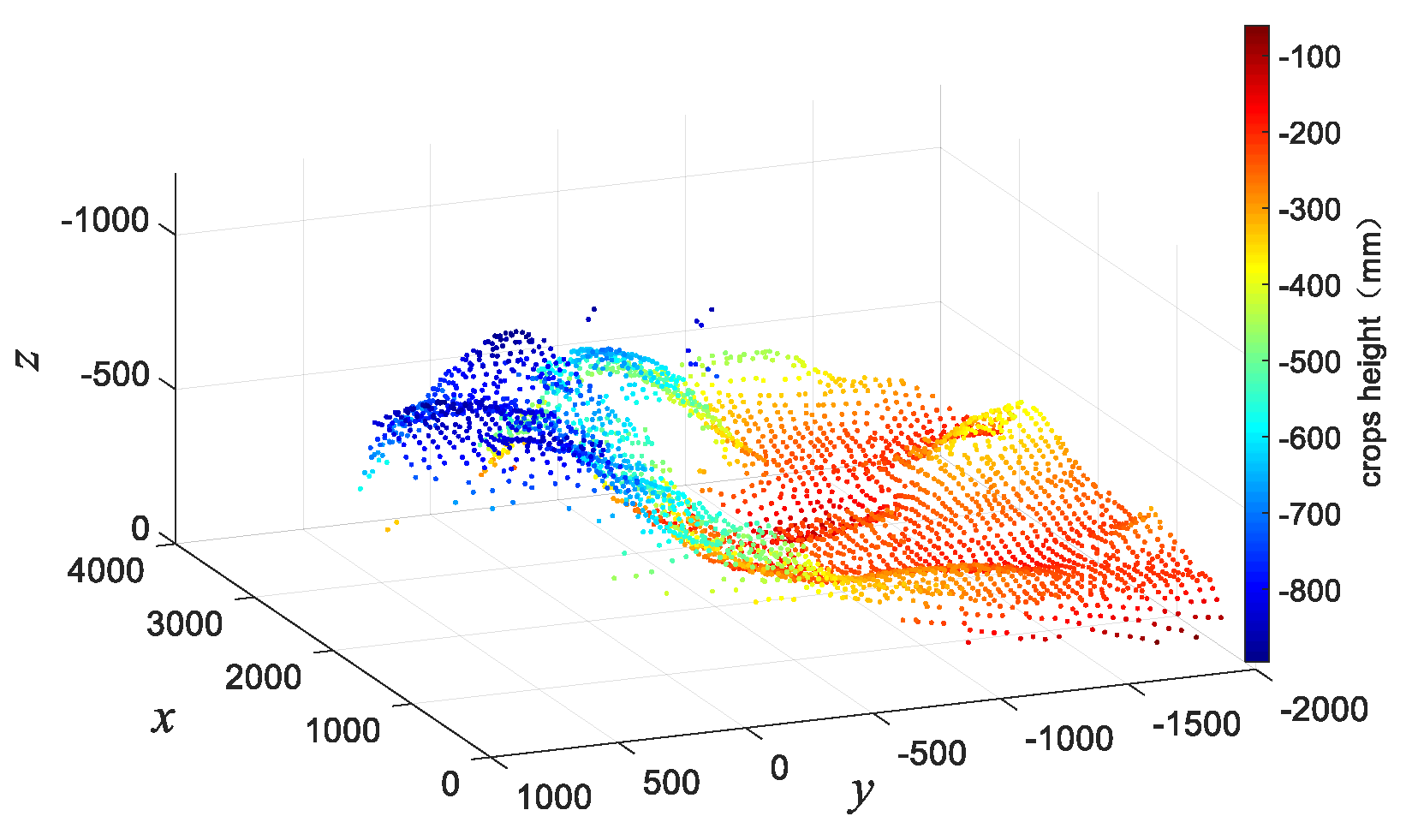

3.1. Wheat Height Detection Results

3.2. Lodging Area Detection Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| α | Lodging Grade |
|---|---|
| α = 0° | Grade I |
| 0° < α ≤ 30° | Grade II |
| 30° < α ≤ 45° | Grade III |
| 45° < α ≤ 60° | Grade IV |
| 60° < α ≤ 90° | Grade V |
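The grading table maps the angle α onto five lodging grades. The helper below is a minimal sketch of that lookup. The function name `lodging_grade` and the `tol` parameter are hypothetical. With the default `tol = 0` only an angle of exactly 0° is Grade I, as in the table; a measured angle would likely need a small tolerance.

```python
def lodging_grade(alpha_deg: float, tol: float = 0.0) -> str:
    """Map an angle alpha (degrees) to Grades I-V following the grading table.

    tol is a hypothetical tolerance: angles up to tol are treated as alpha = 0.
    """
    if not 0.0 <= alpha_deg <= 90.0:
        raise ValueError(f"alpha must lie in [0, 90] degrees, got {alpha_deg}")
    if alpha_deg <= tol:
        return "Grade I"
    if alpha_deg <= 30.0:
        return "Grade II"
    if alpha_deg <= 45.0:
        return "Grade III"
    if alpha_deg <= 60.0:
        return "Grade IV"
    return "Grade V"


for angle in (0, 15, 40, 55, 80):
    print(angle, lodging_grade(angle))
```

Each upper bound is inclusive (for example, α = 30° is Grade II), matching the "≤" in the table.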

| Lodging State |
|---|
| Upright |
| Inclined |
| Lodging |

| No. | 1 | 2 | 3 | 4 | 5 | 6 | 7 | Mean Value |
|---|---|---|---|---|---|---|---|---|
| Height (mm) | 900 | 850 | 900 | 880 | 850 | 820 | 860 | 865.73 |
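The mean in the height table can be checked directly. The seven listed heights sum to 6060 mm, so their average is 6060/7 ≈ 865.71 mm, slightly below the tabulated 865.73 mm. The gap may come from rounding of the individual readings, but that is an assumption.

```python
# Heights (mm) of the seven samples in the height table.
heights_mm = [900, 850, 900, 880, 850, 820, 860]

# Arithmetic mean: 6060 / 7
mean_height_mm = sum(heights_mm) / len(heights_mm)
print(f"{mean_height_mm:.2f} mm")   # 865.71 mm
```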

| No. | Actual Height (mm) | Measured Height (mm) | Absolute Error (mm) | Relative Error |
|---|---|---|---|---|
| 1 | 860 | 822.70 | 37.29033 | 0.0453 |
| 2 | 290 | 336.84 | 46.84467 | 0.1391 |
| 3 | 590 | 580.44 | 9.55433 | 0.0165 |
| 4 | 770 | 690.07 | 79.92233 | 0.1158 |
| 5 | 255 | 264.01 | 9.01267 | 0.0341 |
| 6 | 230 | 336.03 | 106.03667 | 0.3156 |
| 7 | 595 | 500.24 | 94.75533 | 0.1894 |
| 8 | 690 | 689.53 | 0.46333 | 0.0007 |
| 9 | 770 | 702.70 | 67.29233 | 0.0958 |
| 10 | 770 | 699.91 | 70.08633 | 0.1001 |
| 11 | 590 | 533.78 | 56.21633 | 0.1053 |
| 12 | 390 | 304.21 | 85.79 | 0.2820 |
| 13 | 510 | 462.06 | 47.939 | 0.1038 |
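The error columns can be recomputed from the actual and measured heights. Recomputing them shows that the listed relative errors are reproduced when the absolute error is divided by the measured height, not by the actual height. For row 2, for example, 46.84/336.84 ≈ 0.1391, whereas 46.84/290 ≈ 0.1615. Because the table keeps more decimals in the absolute error than in the measured height, values recomputed from the two-decimal heights can differ from the listed ones in the last digit. The names `ROWS` and `errors` are illustrative only.

```python
# (actual height mm, measured height mm, relative error as listed in the table)
ROWS = [
    (860, 822.70, 0.0453), (290, 336.84, 0.1391), (590, 580.44, 0.0165),
    (770, 690.07, 0.1158), (255, 264.01, 0.0341), (230, 336.03, 0.3156),
    (595, 500.24, 0.1894), (690, 689.53, 0.0007), (770, 702.70, 0.0958),
    (770, 699.91, 0.1001), (590, 533.78, 0.1053), (390, 304.21, 0.2820),
    (510, 462.06, 0.1038),
]


def errors(actual_mm: float, measured_mm: float) -> tuple[float, float]:
    """Absolute error (mm) and relative error for one sample."""
    abs_err = abs(actual_mm - measured_mm)
    # Dividing by the measured height is what reproduces the tabulated values.
    rel_err = abs_err / measured_mm
    return abs_err, rel_err


for actual, measured, listed in ROWS:
    abs_err, rel_err = errors(actual, measured)
    print(f"{actual:>4} {measured:>7.2f} {abs_err:>7.2f} {rel_err:.4f} (listed {listed:.4f})")
```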


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wen, J.; Yin, Y.; Zhang, Y.; Pan, Z.; Fan, Y. Detection of Wheat Lodging by Binocular Cameras during Harvesting Operation. Agriculture 2023, 13, 120. https://doi.org/10.3390/agriculture13010120


Chicago/Turabian Style: Wen, Jingqian, Yanxin Yin, Yawei Zhang, Zhenglin Pan, and Yindong Fan. 2023. "Detection of Wheat Lodging by Binocular Cameras during Harvesting Operation." Agriculture 13, no. 1: 120. https://doi.org/10.3390/agriculture13010120
