Beyond Trade-Off: An Optimized Binocular Stereo Vision Based Depth Estimation Algorithm for Designing Harvesting Robot in Orchards

Abstract

1. Introduction

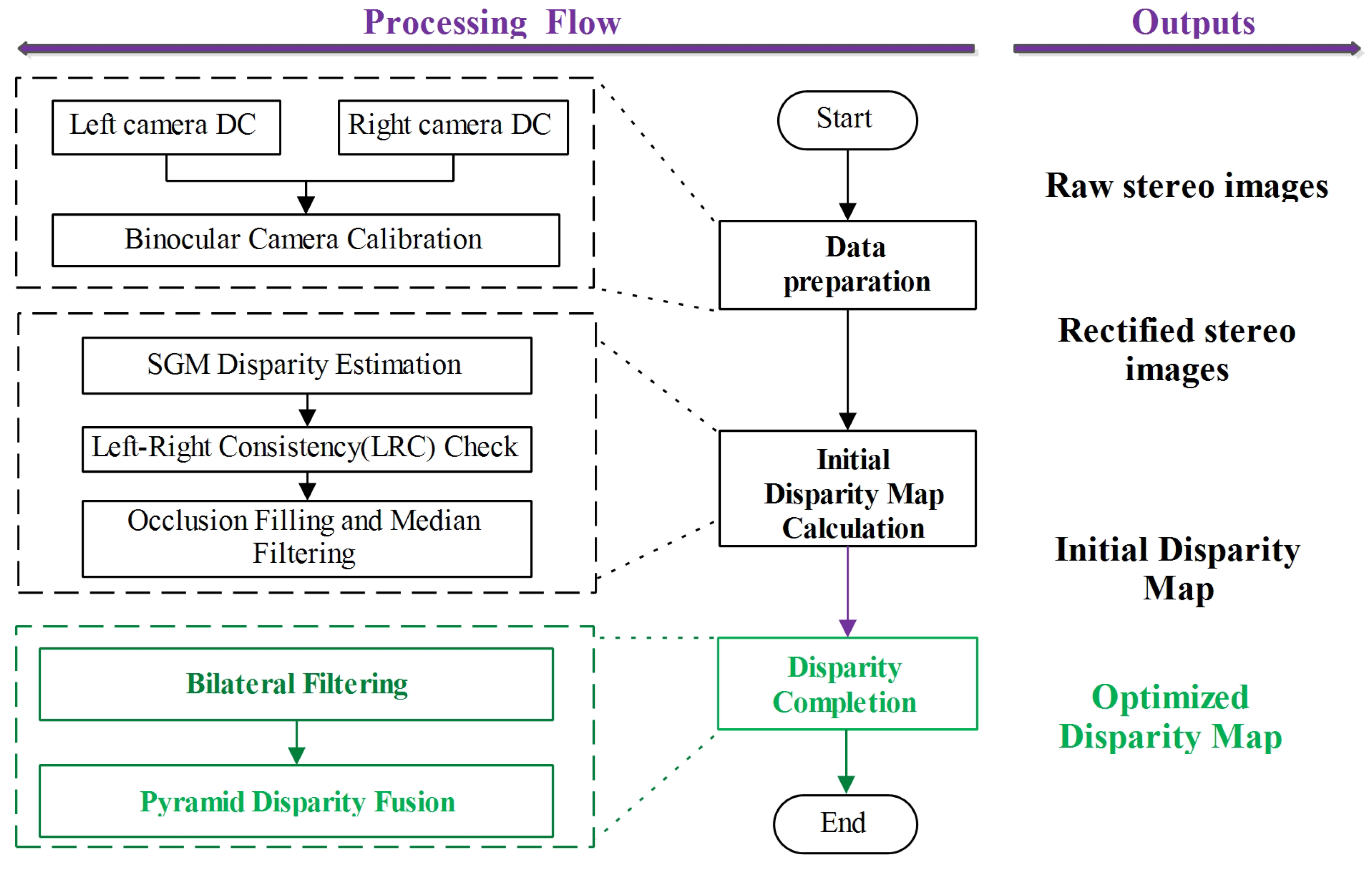

- We propose a completion method that applies a bilateral filtering (BF) algorithm to an optimized disparity map. The high-precision, sparse optimized disparity maps are first obtained with the semi-global matching algorithm, followed by left-right consistency (LRC) checks and median filtering. In this way, the discontinuous holes caused by missing or incorrectly matched pixels or regions between the reference image and the target image are effectively reduced.

- To reduce the time cost while preserving output accuracy as far as possible, we merge multiple low-resolution bilateral filtering outputs in a pyramid model. This mechanism improves the accuracy of the outputs while effectively reducing the time cost.

- A prototype harvesting robot was designed based on the proposed method. Comprehensive qualitative and quantitative experimental results show that the proposed method is valid for practical application.
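
The left-right consistency (LRC) check named in the first contribution can be illustrated on a single scanline. The following is a minimal sketch rather than the authors' implementation. It assumes integer disparities, the left image as the reference view, and a tolerance of one pixel; the function name `lrc_check`, the `INVALID` marker, and the sample values are hypothetical.

```python
INVALID = -1  # hypothetical marker for a rejected pixel (a hole to be completed later)


def lrc_check(disp_left, disp_right, tol=1):
    """Keep a left-view disparity only if the right view confirms it.

    For a pixel at column x with disparity d in the left view, the matching
    pixel in the right view sits at column x - d. The match is accepted when
    the right-view disparity at that column differs from d by at most `tol`.
    """
    width = len(disp_left)
    checked = []
    for x, d in enumerate(disp_left):
        xr = x - d  # column of the corresponding pixel in the right view
        if d < 0 or xr < 0 or xr >= width:
            # No valid counterpart inside the right scanline.
            checked.append(INVALID)
        elif abs(d - disp_right[xr]) <= tol:
            checked.append(d)
        else:
            # The two views disagree: treat the pixel as a mismatch.
            checked.append(INVALID)
    return checked


# Toy scanline: the value 4 in the left view is an outlier.
left = [2, 2, 2, 2, 4, 2]
right = [2, 2, 2, 2, 2, 2]
result = lrc_check(left, right)
print(result)
```

In this toy case the first two pixels are rejected because their counterparts fall outside the scanline, and the outlier is rejected because the right view reports a different disparity. Rejected pixels are the holes that the completion step is meant to fill.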

2. Related Work

3. Completion-BiPy-Disp Algorithm

3.1. Data Preparation

3.2. Optimize Initial Disparity Map

3.2.1. Initial Disparity Map

3.2.2. Optimize

3.3. Disparity Map Completion

3.3.1. Bilateral Filtering

3.3.2. Acquisition of Multiscale Disparity Maps

3.3.3. Fusion of Multi-Scale Disparity Maps

4. Experiments and Results Analysis

4.1. Data Acquisition Results

4.2. Optimized Results

4.3. Time Cost Comparison

4.4. The Qualitative Completion Results

4.4.1. Acquisition of Initial Disparity Map with Different Resolutions

4.4.2. Experimental Results of BF

4.4.3. Pyramid Fusion

4.5. Quantitative Results Analysis

4.6. Experimental Results of Multiple Samples

5. Conclusions

6. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

| Parameters | Left | Right |
|---|---|---|
| | 1056.6100 | 1055.6700 |
| | 1055.1899 | 1054.9200 |
| | 932.9600 | 979.3500 |
| | 582.5270 | 551.9710 |
| | −0.0406 | −0.0401 |
| | 0.0091 | 0.0083 |
| | −0.0047 | −0.0047 |
| | −0.0009 | −0.0009 |
| | 0.0001 | 0.0001 |

| Time | F | 1/2F | 1/4F | 1/5F |
|---|---|---|---|---|
| Cost | 3.14 | 0.72 | 0.16 | 0.10 |
| Aggregating | 247.03 | 51.26 | 13.72 | 8.17 |
| Disparity | 27.76 | 5.53 | 1.39 | 0.90 |
| LRC | 28.60 | 5.68 | 1.42 | 0.91 |
| Remove Speckles | 4.24 | 0.93 | 0.33 | 0.20 |
| Median Filter | 10.03 | 1.97 | 0.52 | 0.32 |
| Total time cost | 320.81 | 66.10 | 17.53 | 10.60 |
| Reduction (%) | – | 79.4 | 94.5 | 96.7 |
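
The reduction percentages in the last row follow from the total times as (1 − T_scaled / T_full) × 100. A short check using the totals listed above; the dictionary keys and the helper name `reduction_percent` are illustrative only.

```python
# Total time cost per resolution, copied from the table above.
totals = {"F": 320.81, "1/2F": 66.10, "1/4F": 17.53, "1/5F": 10.60}


def reduction_percent(full, scaled):
    """Percentage of the full-resolution time saved at a reduced resolution."""
    return (1.0 - scaled / full) * 100.0


reductions = {
    name: round(reduction_percent(totals["F"], t), 1)
    for name, t in totals.items()
    if name != "F"
}
print(reductions)
```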

| Name | F | 1/2F | 1/4F | 1/5F |
|---|---|---|---|---|
| Time cost (s) | 1038 | 179 | 50 | 26 |
| Reduction rate | – | 83% | 96% | 97% |

| ID | 1 | 2 | 3 | 4 | Mean Value |
|---|---|---|---|---|---|
| Left (pixel) | | | | | |
| Right (pixel) | | | | | |
| HOR (mm) | 159 | 184 | 193 | 171 | 176.75 |
| VERT (mm) | 6 | 6 | 2 | 4 | 4.5 |
| Estimated depth (mm) | 797.44 | 689.09 | 656.96 | 741.48 | 721.2425 |
| Measured (mm) | 800 | 700 | 650 | 750 | 725 |
| Absolute error (mm) | 2.56 | 10.91 | 6.96 | 8.52 | 7.2375 |
| Relative error | 0.32% | 1.56% | 1.07% | 1.14% | 1.10225% |
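
The estimated depths in this table are consistent with the standard rectified-stereo relation $Z = fB/d$. The sketch below rests on three assumptions: the focal length is f = 1056.61 pixels (the first Left entry of the camera parameter table), the baseline is B = 120 mm (not stated in this section), and the HOR row is read as the horizontal disparity d in pixels. The function names are hypothetical.

```python
FOCAL_PX = 1056.61   # assumed: first Left entry of the camera parameter table
BASELINE_MM = 120.0  # assumed baseline; not stated in this section


def depth_mm(disparity_px):
    """Depth of a point from its horizontal disparity, Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return FOCAL_PX * BASELINE_MM / disparity_px


def relative_error_percent(estimated, measured):
    """Absolute deviation from the measured depth, as a percentage of it."""
    return abs(estimated - measured) / measured * 100.0


disparities = [159, 184, 193, 171]  # HOR row, read as pixels (assumption)
measured = [800, 700, 650, 750]     # Measured (mm) row

estimated = [round(depth_mm(d), 2) for d in disparities]
errors = [
    round(relative_error_percent(e, m), 2)
    for e, m in zip(estimated, measured)
]
print(estimated)
print(errors)
```

Under these assumptions the per-sample values reproduce the "Estimated depth" and relative error rows for samples 1 to 4.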

| Name | Left (Pixel) | Right (Pixel) | Measured (mm) | Estimated (mm) | RE (%) |
|---|---|---|---|---|---|
| 1 | (442, 445) | (230, 445) | 610 | 598.08 | 1.95 |
| 2 | (583, 230) | (390, 226) | 660 | 656.96 | 0.46 |
| 3 | (801, 314) | (628, 314) | 740 | 732.91 | 0.96 |
| 4 | (707, 536) | (504, 531) | 630 | 624.60 | 0.86 |
| 5 | (432, 340) | (324, 342) | 1200 | 1174.01 | 2.17 |
| 6 | (312, 527) | (190, 528) | 1100 | 1039.29 | 5.52 |
| 7 | (455, 535) | (343, 537) | 1150 | 1132.08 | 1.56 |
| 8 | (597, 311) | (526, 311) | 1700 | 1785.82 | 5.05 |
| 9 | (500, 393) | (431, 396) | 1850 | 1837.58 | 0.67 |
| 10 | (583, 375) | (507, 375) | 1650 | 1668.33 | 1.11 |
| 11 | (598, 404) | (523, 404) | 1700 | 1690.58 | 0.55 |
| 12 | (646, 433) | (576, 435) | 1800 | 1811.33 | 0.63 |
| Avg | (554.7, 403.6) | (431.0, 403.7) | 1232.5 | 1229.3 | 1.79 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, L.; Hao, Q.; Mao, Y.; Su, J.; Cao, J. Beyond Trade-Off: An Optimized Binocular Stereo Vision Based Depth Estimation Algorithm for Designing Harvesting Robot in Orchards. Agriculture 2023, 13, 1117. https://doi.org/10.3390/agriculture13061117
