Evaluation of Maize Crop Damage Using UAV-Based RGB and Multispectral Imagery

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area and UAV Data Acquisition

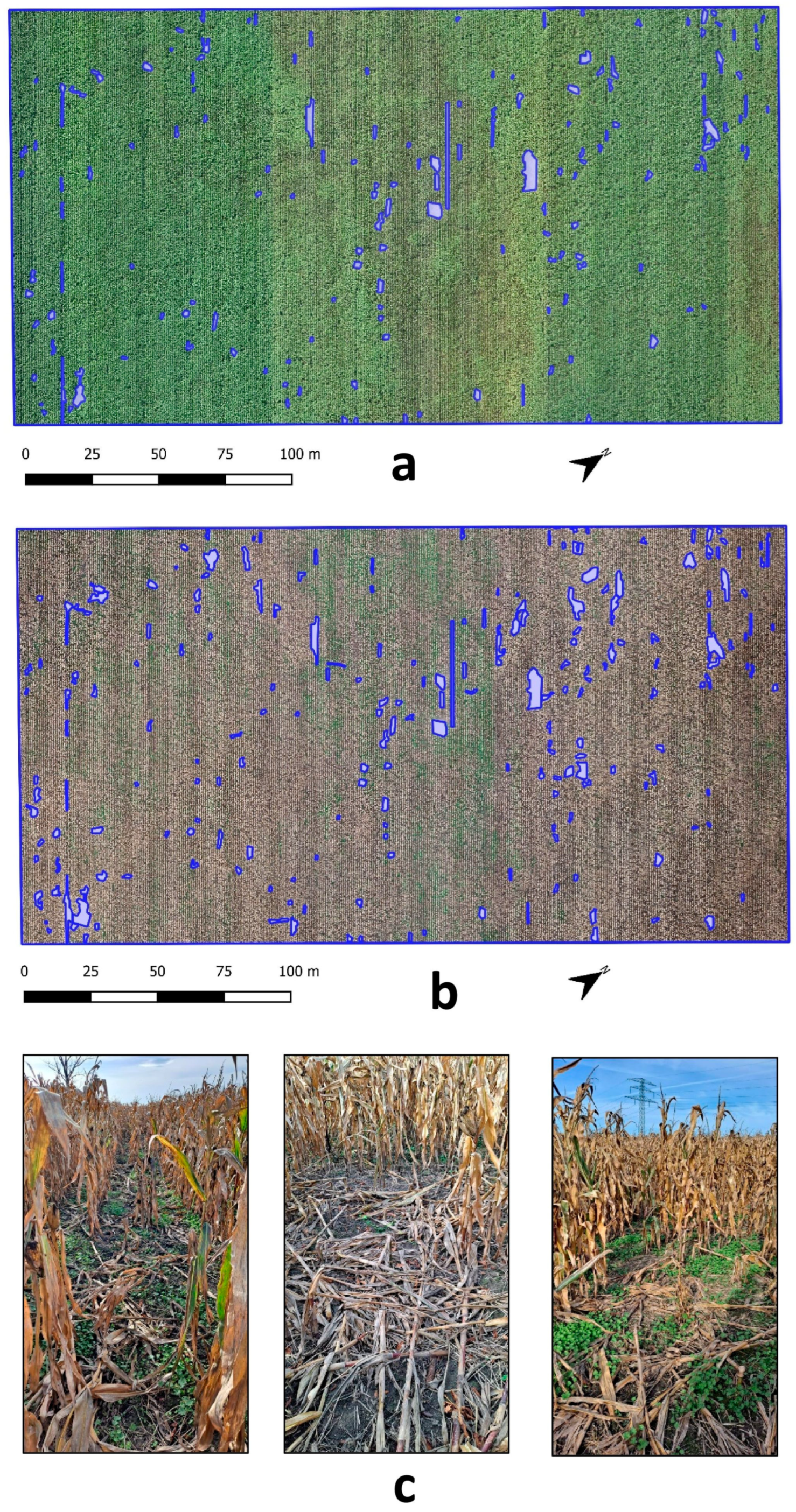

2.2. Reference Areas of Crop Damage

2.3. Statistical Analysis

3. Results

3.1. Evaluation of Crop Damage Areas Based on Vegetation Index (NDVI)

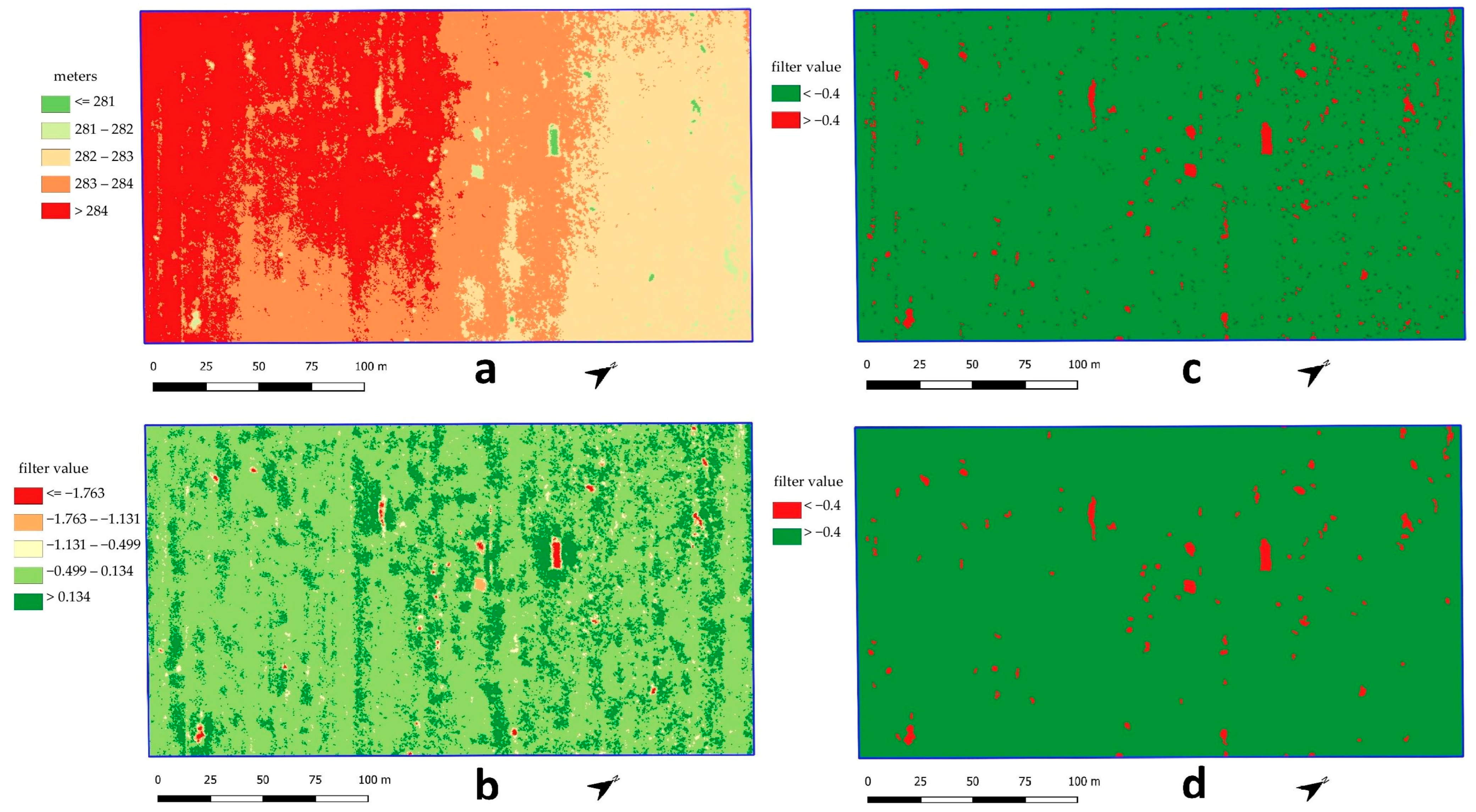

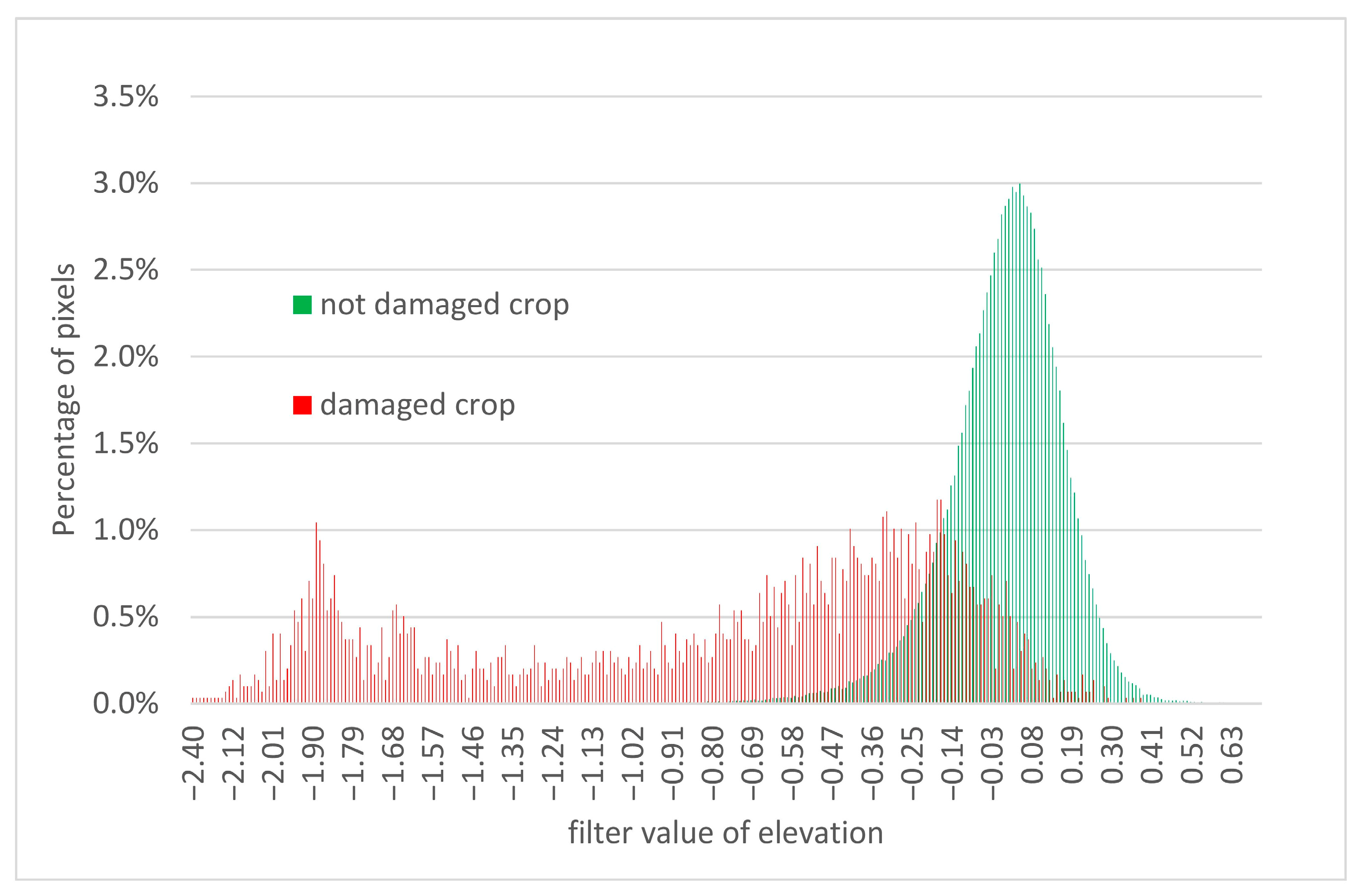

3.2. Evaluation of Crop Damage Areas Based on 3D Cloud

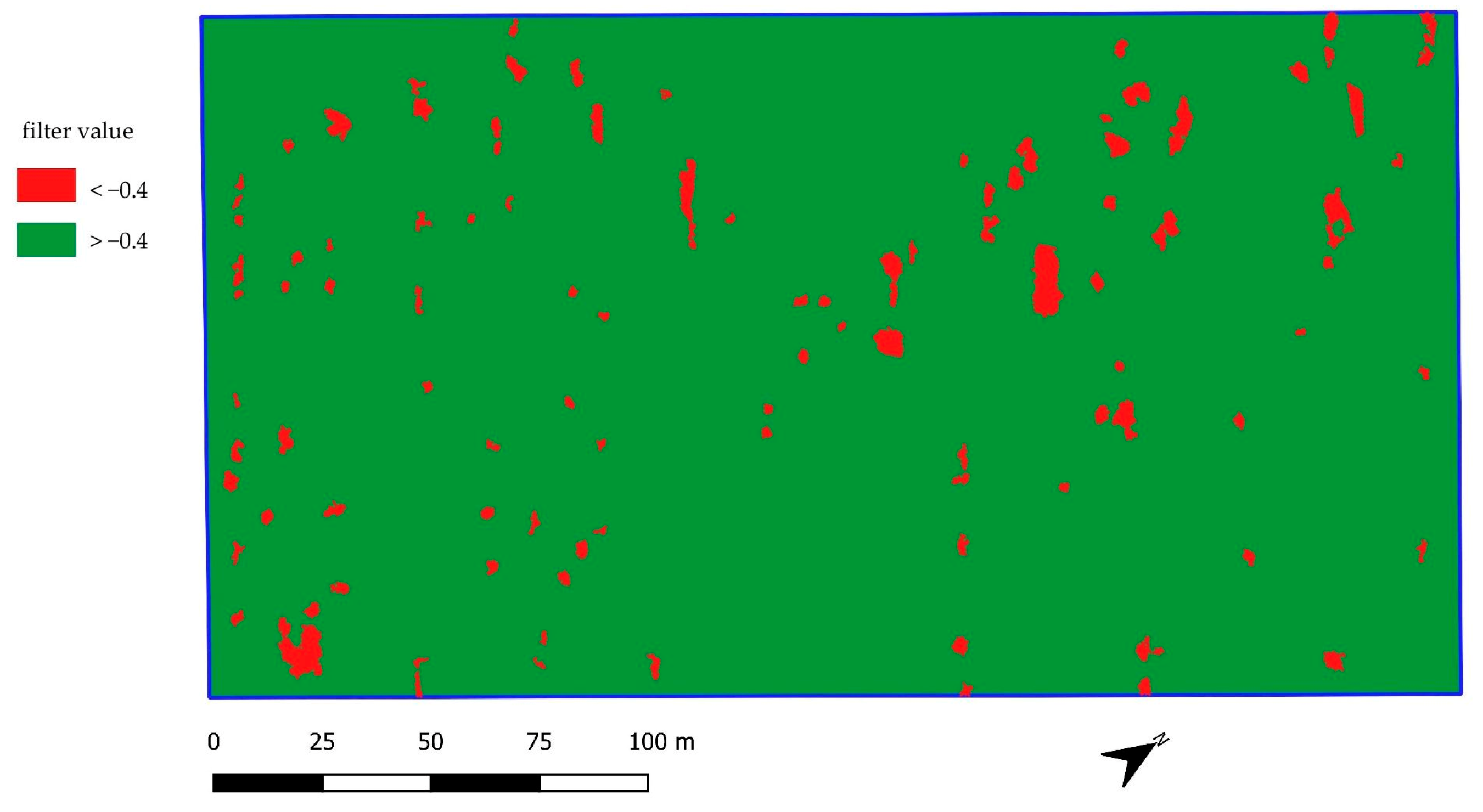

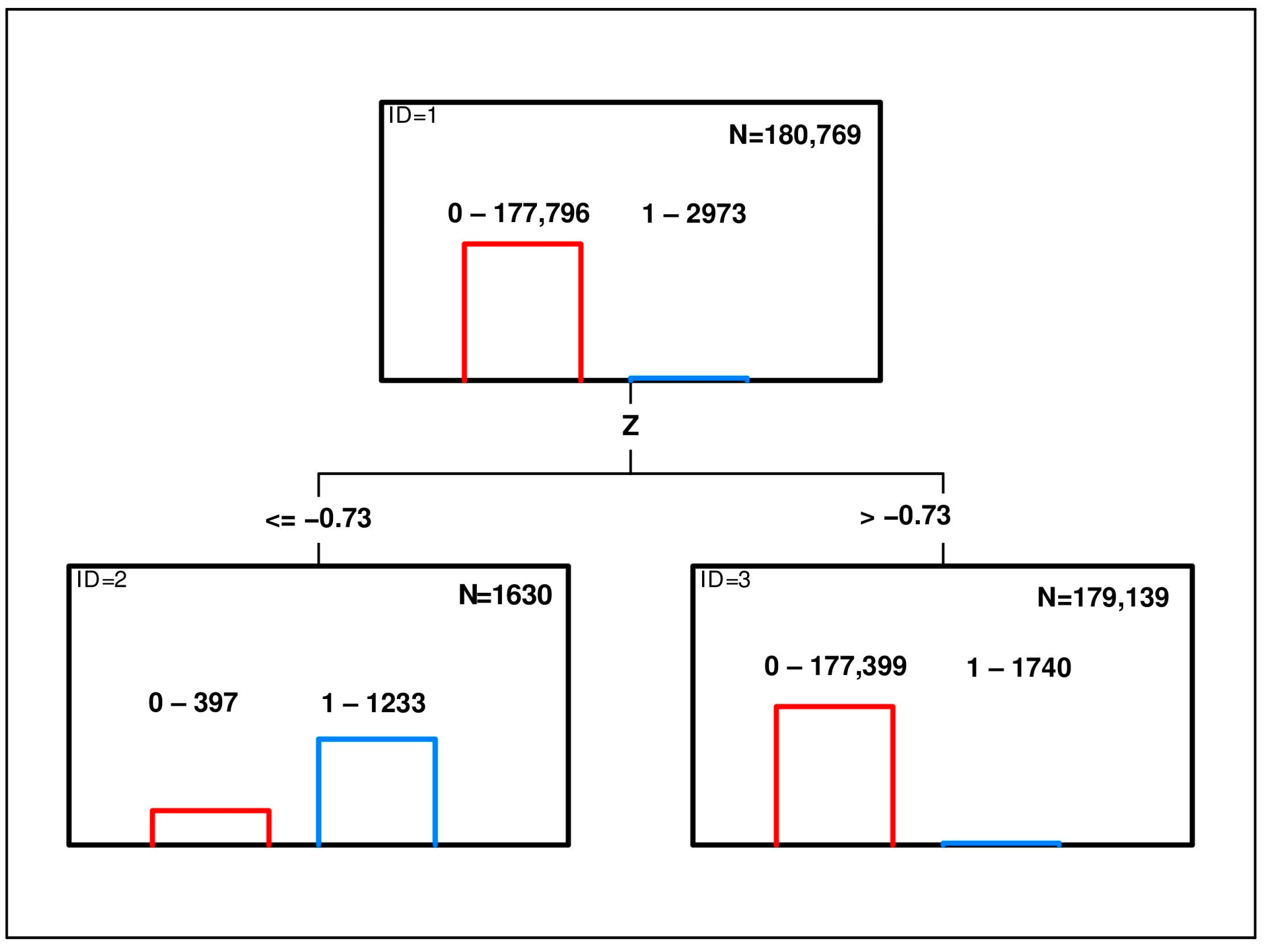

3.3. Evaluation of Crop Damage Areas Based on CART

3.4. Evaluation of Classification Accuracy of Crop Damage Areas Based on Different Methods

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| | Phantom 4 Pro | Phantom 4 Multispectral |
|---|---|---|
| Sensors | One 1-inch CMOS (complementary metal–oxide–semiconductor) RGB sensor | Six 1/2.9-inch CMOS (complementary metal–oxide–semiconductor) sensors: one RGB and five monochrome |
| Resolution | 20 MP (5472 × 3648 pixels) | 2.08 MP (1600 × 1300 pixels) |
| Wavelengths | Visible light (RGB) | Blue (B): 450 nm ± 16 nm; Green (G): 560 nm ± 16 nm; Red (R): 650 nm ± 16 nm; Red edge (RE): 730 nm ± 16 nm; Near-infrared (NIR): 840 nm ± 26 nm |
| Lenses | FOV (field of view): 84° | FOV (field of view): 62.7° |
| Photo format | JPEG | JPEG (visible light imaging) + TIFF (multispectral imaging) |
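The red (650 nm) and near-infrared (840 nm) bands listed for the Phantom 4 Multispectral are the two inputs of the standard NDVI formula, NDVI = (NIR − Red) / (NIR + Red). The following is a minimal sketch of that formula only, assuming the band values are already calibrated reflectances held in plain nested lists; the function names `ndvi` and `ndvi_grid` and the sample values are hypothetical, and this is not the authors' processing chain.

```python
def ndvi(nir: float, red: float) -> float:
    """Standard NDVI for one pixel: (NIR - Red) / (NIR + Red)."""
    total = nir + red
    if total == 0.0:
        # Assumption for this sketch: pixels with no signal map to 0.0.
        return 0.0
    return (nir - red) / total


def ndvi_grid(nir_band, red_band):
    """Apply ndvi() pixel by pixel to two equally sized 2D grids."""
    return [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]


# Hypothetical reflectance values, for illustration only.
nir_band = [[0.50, 0.45], [0.30, 0.20]]
red_band = [[0.10, 0.15], [0.20, 0.20]]

grid = ndvi_grid(nir_band, red_band)
for row in grid:
    print([round(value, 3) for value in row])
```

Dense, healthy canopy tends to give values closer to 1, while bare soil or flattened plants give values closer to 0, which is why the index can help separate damaged from undamaged maize.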

| Method | True Positive (Correctly Classified Crop Damage), m² (% of the Reference Area) | False Negative (Omissions), m² (% of the Reference Area) | False Positive (Commissions), m² (% of the Reference Area) | Estimated Crop Damage Area, m² (% of the Reference Area) |
|---|---|---|---|---|
| **Only areas larger than 3 m² (reference crop damage area 573 m²)** | | | | |
| NDVI-based, filter value threshold −0.12 | 162.1 (28.3% *) | 411.3 (71.8%) | 62.3 (10.9%) | 224.4 (39.2%) |
| DSM-based, filter value threshold −0.40 | 339.8 (59.3%) | 233.6 (40.8%) | 338.9 (59.1%) | 678.7 (118.4%) |
| CART-based, DSM filter value threshold −0.73 | 251.5 (43.9%) | 321.8 (56.2%) | 57.7 (10.1%) | 309.2 (54.0%) |
| **Only areas larger than 15 m² (reference crop damage area 222 m²)** | | | | |
| NDVI-based, filter value threshold −0.12 | 108.3 (48.8%) | 114.2 (51.5%) | 17.2 (7.7%) | 125.5 (56.5%) |
| DSM-based, filter value threshold −0.40 | 158.2 (71.3%) | 64.3 (29.0%) | 119.8 (54.0%) | 278.0 (125.2%) |
| CART-based, DSM filter value threshold −0.73 | 147.9 (66.6%) | 74.5 (33.6%) | 37.3 (16.8%) | 185.2 (83.4%) |
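The columns of the table above are linked by simple arithmetic: the estimated crop damage area is the sum of the true positive and false positive areas, and each percentage is the area divided by the reference area. The sketch below recomputes those derived columns for the first block of rows. It assumes the areas are in square metres and that percentages are rounded to one decimal; the names `DamageRow` and `summarize` are hypothetical and not taken from the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DamageRow:
    """One table row; all areas are assumed to be in square metres."""
    method: str
    true_positive: float
    false_negative: float
    false_positive: float


def summarize(row: DamageRow, reference_area: float) -> dict:
    """Recompute the derived columns of the table for one row."""
    # Estimated damage = correctly detected area + falsely detected area.
    estimated = row.true_positive + row.false_positive

    def pct(area: float) -> float:
        # Share of the reference damage area, rounded to one decimal.
        return round(100.0 * area / reference_area, 1)

    return {
        "method": row.method,
        "true_positive_pct": pct(row.true_positive),
        "false_negative_pct": pct(row.false_negative),
        "false_positive_pct": pct(row.false_positive),
        "estimated_area": round(estimated, 1),
        "estimated_pct": pct(estimated),
    }


# Values copied from the "larger than 3 m2" block (reference area 573 m2).
rows_3m2 = [
    DamageRow("NDVI-based", 162.1, 411.3, 62.3),
    DamageRow("DSM-based", 339.8, 233.6, 338.9),
    DamageRow("CART-based", 251.5, 321.8, 57.7),
]

for row in rows_3m2:
    print(summarize(row, reference_area=573.0))
```

Read this way, an estimated area above 100% of the reference (the DSM-based rows) reflects a large commission area rather than better detection, so the estimated-area column is best interpreted together with the false positive column.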


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dobosz, B.; Gozdowski, D.; Koronczok, J.; Žukovskis, J.; Wójcik-Gront, E. Evaluation of Maize Crop Damage Using UAV-Based RGB and Multispectral Imagery. Agriculture 2023, 13, 1627. https://doi.org/10.3390/agriculture13081627
