An Overview of the Application of Machine Vision in Recognition and Localization of Fruit and Vegetable Harvesting Robots

Abstract

1. Introduction

2. Vision Recognition and Positioning System for Fruit and Vegetable Harvesting Robots

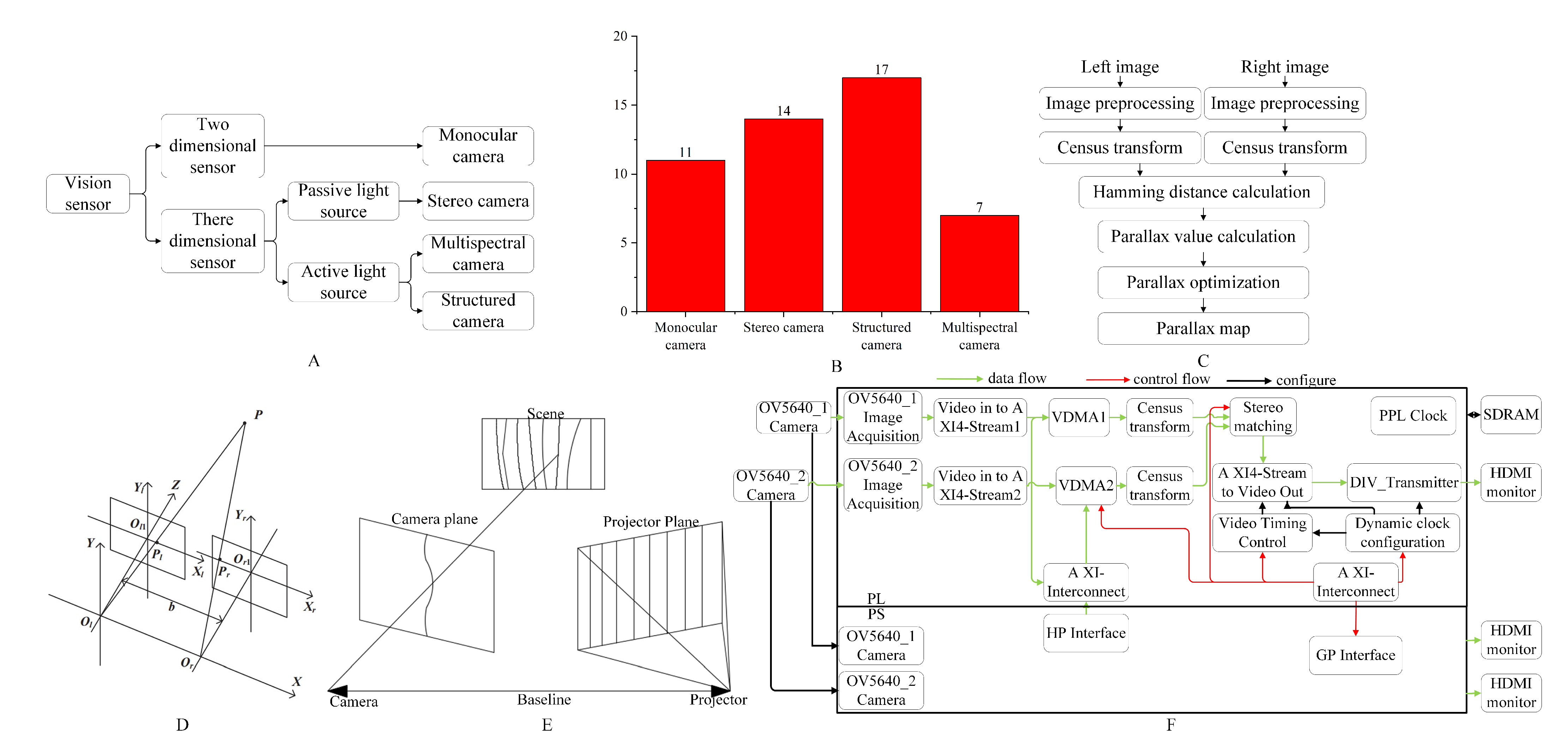

2.1. Visual Sensors

2.1.1. Monocular Camera

| Sensor Type | Principle and Application | Advantages | Disadvantages |
|---|---|---|---|
| Monocular camera | Recognizes targets from color, shape, texture, and other two-dimensional features | Simple structure and low cost; several monocular cameras can be combined into a multi-camera system | Captures only two-dimensional image information, has poor stability, and cannot be used in dark or low-light conditions [25] |
| Stereo camera | Uses texture, color, and other features, and obtains the spatial coordinates of the target by triangulation | Three-dimensional coordinates can be obtained, and matching efficiency can be improved with suitable algorithms | Requires highly accurate sensor calibration; stereo matching is computationally slow; the three-dimensional position of edge points is difficult to determine |
| Structured-light camera | Obtains three-dimensional features from the structured light reflected by the measured object | Three-dimensional features are less affected by background interference, giving better positioning accuracy | Strong sunlight can overwhelm most of the projected infrared pattern, and the cost is high |
| Multispectral camera | Identifies targets from differences in radiation characteristics across wavelength bands | Less susceptible to environmental interference | The heavy computational load makes it unsuitable for real-time picking operations |
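The color-feature route listed for monocular cameras can be illustrated with a minimal sketch. It assumes an RGB image stored as nested lists of `(R, G, B)` tuples and uses the red-minus-green difference with a hypothetical threshold of 40 to separate ripe red fruit from green foliage, then reports the centroid of the fruit pixels. The names `red_fruit_mask` and `mask_centroid` are illustrative, not taken from any cited system. The output is a pixel position only, which reflects the two-dimensional limitation noted in the table.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each in 0..255


def red_fruit_mask(image: List[List[Pixel]], threshold: int = 40) -> List[List[bool]]:
    """Mark pixels whose red channel exceeds green by more than `threshold`.

    The R - G difference is a simple color feature for separating ripe red
    fruit from green leaves; the default threshold is an illustrative assumption.
    """
    return [[(r - g) > threshold for (r, g, _b) in row] for row in image]


def mask_centroid(mask: List[List[bool]]) -> Optional[Tuple[float, float]]:
    """Return the (row, col) centroid of True pixels, or None if none are set.

    This is a 2D image position only: one monocular camera provides no depth.
    """
    coords = [(i, j) for i, row in enumerate(mask) for j, v in enumerate(row) if v]
    if not coords:
        return None
    n = len(coords)
    return (sum(i for i, _ in coords) / n, sum(j for _, j in coords) / n)


# Usage: a 3x3 toy image with a 2x2 "fruit" patch in the lower-right corner.
leaf = (60, 140, 50)
fruit = (200, 40, 30)
image = [[leaf, leaf, leaf], [leaf, fruit, fruit], [leaf, fruit, fruit]]
print(mask_centroid(red_fruit_mask(image)))  # (1.5, 1.5)
```

A fixed color threshold like this is exactly what tends to break under changing illumination, which is one reason later systems combine color with shape and texture cues.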

2.1.2. Stereo Camera
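The triangulation principle attributed to stereo cameras in the table can be sketched for the simplest case of a rectified image pair, where matching points lie on the same scanline. The sketch assumes equal-length scanlines, a focal length `focal_px` in pixels, a baseline `baseline_m` in metres, and a principal point `(cx, cy)`; it uses sum-of-absolute-differences (SAD) block matching, one common matching cost chosen here for illustration. Depth then follows from Z = f·B/d, with X and Y recovered by back-projection.

```python
from typing import List, Tuple


def match_disparity(left_row: List[int], right_row: List[int], u: int,
                    half_window: int = 1, max_disparity: int = 16) -> int:
    """Estimate the disparity at column u of a rectified scanline pair.

    A point at column u in the left image appears at column u - d in the
    right image; the candidate d with the lowest SAD over a small window wins.
    """
    if len(left_row) != len(right_row):
        raise ValueError("scanlines must have equal length")
    if u - half_window < 0 or u + half_window >= len(left_row):
        raise ValueError("matching window falls outside the left scanline")
    best_d, best_cost = 0, float("inf")
    for d in range(max_disparity + 1):
        if u - d - half_window < 0:
            break  # the shifted window would leave the right scanline
        cost = sum(abs(left_row[u + k] - right_row[u - d + k])
                   for k in range(-half_window, half_window + 1))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def triangulate(u: float, v: float, disparity: float, focal_px: float,
                baseline_m: float, cx: float, cy: float) -> Tuple[float, float, float]:
    """Back-project pixel (u, v) with a known disparity to camera coordinates."""
    if disparity <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    z = focal_px * baseline_m / disparity  # Z = f * B / d
    x = (u - cx) * z / focal_px
    y = (v - cy) * z / focal_px
    return x, y, z


# Usage: the bright feature centred at column 5 on the left appears at column 2 on the right.
left_row = [10, 10, 10, 10, 80, 90, 80, 10, 10, 10]
right_row = [10, 80, 90, 80, 10, 10, 10, 10, 10, 10]
print(match_disparity(left_row, right_row, u=5))  # 3

# With f = 700 px and B = 0.12 m, a disparity of 84 px gives Z of about 1.0 m.
print(triangulate(400, 300, 84, 700, 0.12, 330, 240))  # roughly (0.1, 0.086, 1.0)
```

Because depth varies as 1/d, a one-pixel matching error costs more depth accuracy at long range, and the per-pixel search over candidate disparities is why the table lists stereo matching as computationally slow.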

2.1.3. Structured-Light Camera
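One common way to turn reflected structured light into 3D data is to project a sequence of Gray-code stripe patterns, decode which projector column illuminated each camera pixel, and then triangulate between camera and projector. The sketch below assumes that technique (the table does not name a specific pattern), assumes each pixel's lit/unlit state has already been thresholded, and simplifies the geometry by treating the projector as a second rectified camera with the same focal length in pixels. The function names and the sign convention of the disparity are hypothetical; real systems calibrate the projector separately.

```python
from typing import List


def gray_to_binary(gray: int) -> int:
    """Convert a reflected-binary (Gray) code to its ordinary binary value."""
    binary = gray
    shift = gray >> 1
    while shift:
        binary ^= shift
        shift >>= 1
    return binary


def decode_projector_column(bits: List[bool]) -> int:
    """Decode the projector column observed by one camera pixel.

    `bits` holds the pixel's lit/unlit state under each projected pattern,
    most significant pattern first (an assumed ordering).
    """
    gray = 0
    for bit in bits:
        gray = (gray << 1) | int(bit)
    return gray_to_binary(gray)


def depth_from_correspondence(u_cam: float, u_proj: float,
                              focal_px: float, baseline_m: float) -> float:
    """Triangulate depth, treating the projector as a rectified second camera."""
    disparity = u_cam - u_proj  # sign convention assumed for this layout
    if disparity <= 0:
        raise ValueError("non-positive disparity: check calibration or decoding")
    return focal_px * baseline_m / disparity


# Usage: a pixel lit under patterns 1 and 2 but not 3 decodes to column 4.
print(decode_projector_column([True, True, False]))  # 4
print(depth_from_correspondence(500, 416, 700, 0.12))  # about 1.0 (metres)
```

The decoding step depends on reliably telling lit from unlit pixels, which is why strong sunlight that overwhelms the projected infrared pattern degrades these cameras outdoors.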

2.1.4. Multispectral Camera
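Recognition from differences between wavelength bands can be sketched with a normalized difference index, computed per pixel as (a − b)/(a + b). The example swaps in an NDVI-style near-infrared/red index as an illustration: healthy leaves reflect strongly in the near-infrared and absorb red light, so they score high, while pixels below a hypothetical threshold of 0.4 are kept as fruit candidates. The band pairing, threshold, and reflectance values are assumptions for demonstration, not measured data from the cited studies.

```python
from typing import List


def normalized_difference(band_a: float, band_b: float, eps: float = 1e-9) -> float:
    """Return (a - b) / (a + b), a per-pixel contrast between two bands."""
    return (band_a - band_b) / (band_a + band_b + eps)


def label_pixels(nir: List[float], red: List[float], threshold: float = 0.4) -> List[str]:
    """Label pixels 'foliage' or 'candidate' from an NDVI-style index.

    Pixels scoring above the (hypothetical) threshold are treated as leaves;
    the rest are kept as fruit candidates for later shape or size checks.
    """
    if len(nir) != len(red):
        raise ValueError("band images must have the same number of pixels")
    return ["foliage" if normalized_difference(a, b) > threshold else "candidate"
            for a, b in zip(nir, red)]


# Illustrative reflectance values: a leaf pixel followed by a fruit pixel.
print(label_pixels([0.50, 0.45], [0.05, 0.30]))  # ['foliage', 'candidate']
```

With many bands, this kind of per-pixel computation is repeated across every band combination considered, which is consistent with the heavy computational load the table attributes to multispectral cameras.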

2.2. Machine Vision Algorithms

2.2.1. Image Segmentation Algorithms

2.2.2. Object Detection Algorithms

2.2.3. 3D Reconstruction Algorithms for Object Models

3. The Challenges of Machine Vision in Recognition and Localization for Fruit and Vegetable Harvesting Robots

3.1. The Current Status of Machine Vision in Recognition, Localization, and Harvesting for Fruit and Vegetable Harvesting Robots

3.1.1. Recognition and Localization of Machine Vision in Greenhouse Environments

3.1.2. Recognition and Localization of Machine Vision in Outdoor Environments

3.2. The Significant Challenges Faced by Machine Vision in Recognition and Localization for Fruit and Vegetable Harvesting Robots

3.2.1. The Stability of Fast Recognition under Complex Background Interference

3.2.2. Recognition Stability under Different Lighting Conditions for the Same Crop

3.2.3. The Dependence of Recognition and Localization Functions on Prior Information When Fruits Overlap or Are Occluded by Leaves and Branches

3.2.4. Uncertainty in Fruit Picking Due to Complex Work Environments

4. Conclusions

5. Future Prospects

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Sa, I.; Ge, Z.; Dayoub, F.; Upcroft, B.; Perez, T.; McCool, C. DeepFruits: A Fruit Detection System Using Deep Neural Networks. Sensors 2016, 16, 1222. [Google Scholar] [CrossRef]

- Zheng, T.; Jiang, M.; Feng, M. Research overview of visual-based target recognition and localization methods for harvesting robots. Chin. J. Sci. Instrum. 2021, 42, 28–51. [Google Scholar]

- Ruan, S.J.; Chen, J.H. Title of presentation. In Proceedings of the 2022 IEEE 4th Global Conference on Life Sciences and Technologies, Osaka, Japan, 7–9 March 2022; pp. 431–432. [Google Scholar]

- Luo, G. Depth Perception and 3D Reconstruction Based on Binocular Stereo Vision. Ph.D. Thesis, Central South University, Changsha, China, 2012; pp. 6–15. [Google Scholar]

- Anwar, I.; Lee, S. High performance stand-alone structured light 3D camera for smart manipulators. In Proceedings of the 2017 14th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), Jeju, Republic of Korea, 28 June–1 July 2017; pp. 192–195. [Google Scholar]

- Zhang, B.H.; Huang, W.Q.; Li, J.B. Principles, developments and applications of computer vision for external quality inspection of fruits and vegetables: A review. Food Res. Int. 2014, 62, 326–343. [Google Scholar] [CrossRef]

- Xu, P.; Fang, N.; Liu, N. Visual recognition of cherry tomatoes in plant factory based on improved deep instance segmentation. Comput. Electron. Agric. 2022, 197, 106991. [Google Scholar] [CrossRef]

- Xiao, X.; Huang, J.; Li, M. Fast recognition method for citrus under complex environments based on improved YOLOv3. J. Eng. 2022, 2022, 148–159. [Google Scholar] [CrossRef]

- Ge, Y.; Xiong, Y.; Tenorio, G.L. Fruit localization and environment perception for strawberry harvesting robots. IEEE Access 2019, 7, 147642–147652. [Google Scholar] [CrossRef]

- Liu, J. Research progress analysis of robotic harvesting technologies in greenhouse. Trans. Chin. Soc. Agric. Mach. 2017, 48, 1–18. [Google Scholar]

- Liu, J.; Pi, J.; Xia, L. A novel and high precision tomato maturity recognition algorithm based on multi-level deep residual network. Multimed. Tools Appl. 2019, 79, 9403–9417. [Google Scholar] [CrossRef]

- Chen, J.; Zhang, H.; Wang, Z. An image restoration and detection method for picking robot based on convolutional auto-encoder. Comput. Electron. Agric. 2022, 196, 106896. [Google Scholar] [CrossRef]

- Mehta, S.S.; Ton, C.; Asundi, S.; Burks, T.F. Multiple Camera Fruit Localization Using a Particle Filter. Comput. Electron. Agric. 2017, 142, 139–154. [Google Scholar] [CrossRef]

- Hua, X.; Li, H.; Zeng, J.; Han, C.; Chen, T.; Tang, L.; Luo, Y. A review of target recognition technology for fruit picking robots: From digital image processing to deep learning. Appl. Sci. 2023, 13, 4160. [Google Scholar] [CrossRef]

- Xiao, F.; Wang, H.; Xu, Y.; Zhang, R. Fruit detection and recognition based on deep learning for automatic harvesting: An overview and review. Agronomy 2023, 13, 1625. [Google Scholar] [CrossRef]

- Li, Y.; Feng, Q.; Li, T.; Xie, F.; Liu, C.; Xiong, Z. Advance of target visual information acquisition technology for fresh fruit robotic harvesting: A review. Agronomy 2022, 12, 1336. [Google Scholar] [CrossRef]

- Wang, Z.; Xun, Y.; Wang, Y.; Yang, Q. Review of smart robots for fruit and vegetable picking in agriculture. Int. J. Agric. Biol. Eng. 2022, 15, 33–54. [Google Scholar]

- Tang, Y.; Chen, M.; Wang, C. Recognition and localization methods for vision-based fruit picking robots: A review. Front. Plant Sci. 2020, 11, 510. [Google Scholar] [CrossRef] [PubMed]

- Jiang, P.; Luo, L.; Zhang, B. Research on Target Localization and Recognition Based on Binocular Vision and Deep Learning with FPGA. J. Phys. Conf. Ser. 2022, 2284, 12009. [Google Scholar] [CrossRef]

- Feng, Q.C.; Zou, W.; Fan, P.F.; Zhang, C.F.; Wang, X. Design and Test of Robotic Harvesting System for Cherry Tomato. Int. J. Agric. Biol. Eng. 2018, 11, 96–100. [Google Scholar] [CrossRef]

- Baeten, J.; Donné, K.; Boedrij, S.; Beckers, W.; Claesen, E. Autonomous Fruit Picking Machine: A Robotic Apple Harvester. Field Serv. Robot. 2008, 42, 531–539. [Google Scholar]

- Bulanon, D.M.; Kataoka, T.; Ota, Y. A Machine Vision System for the Apple Harvesting Robot. Agric. Eng. Int. CIGR Ejournal 2001, 3, 1–11. [Google Scholar]

- Zhao, J.; Tow, J.; Katupitiya, J. On-tree Fruit Recognition Using Texture Properties and Color Data. In Proceedings of the 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems, Edmonton, AB, Canada, 2–6 August 2005; pp. 263–268. [Google Scholar]

- Mehta, S.S.; Burks, T.F. Vision-based Control of Robotic Manipulator for Citrus Harvesting. Comput. Electron. Agric. 2014, 102, 146–158. [Google Scholar] [CrossRef]

- Meng, L.; Yuan, L.; Qing, L.W. A Calibration Method for Mobile Omnidirectional Vision Based on Structured Light. IEEE Sens. J. 2021, 21, 11451–11460. [Google Scholar] [CrossRef]

- Cao, K.; Liu, R.; Wang, Z.; Peng, K.; Zhang, J.; Zheng, J.; Teng, Z.; Yang, K.; Stiefelhagen, R. Tightly-coupled LiDAR-visual SLAM based on geometric features for mobile agents. arXiv 2023, arXiv:2307.07763. [Google Scholar]

- Shu, C.F.; Luo, Y.T. Multi-modal feature constraint based tightly coupled monocular Visual-LiDAR odometry and mapping. IEEE Trans. Intell. Veh. 2023, 8, 3384–3393. [Google Scholar] [CrossRef]

- Zhang, L.; Yu, X.; Adu-Gyamfi, Y.; Sun, C. Spatio-temporal fusion of LiDAR and camera data for omnidirectional depth perception. Transp. Res. Rec. 2023, 1. [Google Scholar] [CrossRef]

- Cheng, X.; Qiu, S.; Zou, Z.; Pu, J.; Xue, X. Understanding depth map progressively: Adaptive distance interval separation for monocular 3D object detection. arXiv 2023, arXiv:2306.10921. [Google Scholar]

- Ma, R.; Yin, Y.; Chen, J.; Chang, R. Multi-modal information fusion for LiDAR-based 3D object detection framework. Multimed. Tools Appl. 2023, 13, 1731. [Google Scholar] [CrossRef]

- Guo, S.; Guo, J.; Bai, C. Semi-Direct Visual Odometry Based on Monocular Depth Estimation. In Proceedings of the 2019 IEEE International Conference on Unmanned Systems (ICUS), Beijing, China, 17–19 October 2019; pp. 720–724. [Google Scholar]

- Edan, Y.; Rogozin, D.; Flash, T. Robotic melon harvesting. IEEE Trans. Robot. Autom. 2000, 16, 831–834. [Google Scholar] [CrossRef]

- Xiong, J.; He, Z.; Lin, R.; Liu, Z.; Bu, R.; Yang, Z.; Peng, H.; Zou, X. Visual Positioning Technology of Picking Robots for Dynamic Litchi Clusters with Disturbance. Comput. Electron. Agric. 2018, 151, 226–237. [Google Scholar] [CrossRef]

- Wang, H.; Mao, W.; Liu, G.; Hu, X.; Li, S. Recognition and positioning of apple harvesting robot based on visual fusion. J. Agric. Mach. 2012, 43, 165–170. [Google Scholar]

- Mrovlje, J.; Vrancic, D. Distance measuring based on stereoscopic pictures. In Proceedings of the 9th International PhD Workshop on Systems and Control, Izola, Slovenia, 1–3 October 2008. [Google Scholar]

- Pal, B.; Khaiyum, S.; Kumaraswamy, Y.S. 3D point cloud generation from 2D depth camera images using successive triangulation. In Proceedings of the IEEE International Conference on Innovative Mechanisms for Industry Applications, Bangalore, India, 19–20 May 2017; pp. 129–133. [Google Scholar]

- Ji, W.; Meng, X.; Qian, Z.; Xu, B.; Zhao, D. Branch Localization Method Based on the Skeleton Feature Extraction and Stereo Matching for Apple Harvesting Robot. Int. J. Adv. Robot. Syst. 2017, 14, 172988141770527. [Google Scholar] [CrossRef]

- Guo, A.; Xiong, J.; Xiao, D.; Zou, X. Calculation and stereo matching of picking points for litchi using fused Harris and SIFT algorithm. J. Agric. Mach. 2015, 46, 11–17. [Google Scholar]

- Jiang, H.; Peng, Y.; Ying, Y. Measurement of 3-D locations of ripe tomato by binocular stereo vision for tomato harvesting. In Proceedings of the 2008 ASABE International Meeting, Providence, RI, USA, 29 June–2 July 2008; p. 084880. [Google Scholar]

- Van Henten, E.J.; Van Tuijl, B.A.J.; Hoogakker, G.-J.; Van Der Weerd, M.J.; Emming, J.; Kornet, J.G.; Bontsema, J. An Autonomous Robot for De-leafing Cucumber Plants Grown in a High-wire Cultivation System. Biosyst. Eng. 2006, 94, 317–323. [Google Scholar] [CrossRef]

- Van Henten, E.J.; Van Tuijl, B.A.J.; Hemming, J.; Kornet, J.G.; Bontsema, J.; Van Os, E.A. Field Test of an Autonomous Cucumber Picking Robot. Biosyst. Eng. 2003, 86, 305–313. [Google Scholar] [CrossRef]

- Yoshida, T.; Kawahara, T.; Fukao, T. Fruit Recognition Method for a Harvesting Robot with RGB-D Cameras. Robomech. J. 2022, 9, 1–10. [Google Scholar] [CrossRef]

- Yang, C.; Liu, Y.; Wang, Y.; Xiong, L.; Xu, H.; Zhao, W. Research on Recognition and Positioning System for Citrus Harvesting Robot in Natural Environment. Trans. Chin. Soc. Agric. Mach. 2019, 50, 14–22+72. [Google Scholar]

- Safren, Q.; Alchanatis, V.; Ostrovsky, V.; Levi, O. Detection of green apples in hyperspectral images of apple-tree foliage using machine vision. Trans. ASABE 2008, 50, 2303–2313. [Google Scholar] [CrossRef]

- Okamoto, H.; Lee, W.S. Green citrus detection using hyperspectral imaging. Comput. Electron. Agric. 2010, 66, 201–208. [Google Scholar] [CrossRef]

- Zhang, Q.; Chen, J.; Li, B.; Xu, C. Method for identifying and locating the picking points of tomato clusters based on RGB-D information fusion and object detection. Trans. Chin. Soc. Agric. Mach. 2021, 37, 143–152. [Google Scholar]

- McCool, C.; Sa, I.; Dayoub, F. Visual detection of occluded crop: For automated harvesting. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation, Stockholm, Sweden, 16–19 May 2016. [Google Scholar]

- Yan, B.; Fan, P.; Lei, X. A real-time apple targets detection method for picking robot based on improved YOLOv5. Remote Sens. 2021, 13, 1619. [Google Scholar] [CrossRef]

- Liang, C.; Xiong, J.; Zheng, Z. A visual detection method for nighttime litchi fruits and fruiting stems. Comput. Electron. Agric. 2020, 169, 105192. [Google Scholar] [CrossRef]

- Han, K.S.; Kim, S.-C.; Lee, Y.-B.; Kim, S.C.; Im, D.-H.; Choi, H.-K. Strawberry harvesting robot for bench-type cultivation. J. Biosyst. Eng. 2012, 37, 65–74. [Google Scholar] [CrossRef]

- Atif, M.; Lee, S. Adaptive Pattern Resolution for Structured Light 3D Camera System. In Proceedings of the 2018 IEEE SENSORS, New Delhi, India, 28–31 October 2018; pp. 1–4. [Google Scholar]

- Weinmann, M.; Schwartz, C.; Ruiters, R.; Klein, R. A Multicamera, Multi-projector Super-Resolution Framework for Structured Light. In Proceedings of the 2011 International Conference on 3D Imaging, Modeling, Processing, Visualization and Transmission, Hangzhou, China, 16–19 May 2011; pp. 397–404. [Google Scholar]

- Lee, S.; Atif, M.; Han, K. Stand-Alone Hand-Eye 3D Camera for Smart Modular Manipulator. In Proceedings of the IEEE/RSJ IROS Workshop on Robot Modularity, Daejeon, Republic of Korea, 9–14 October 2016. [Google Scholar]

- Hyun, J.S.; Chiu, G.T.-C.; Zhang, S. High-speed and high-accuracy 3D surface measurement using a mechanical projector. Opt. Express 2018, 26, 1474–1487. [Google Scholar] [CrossRef] [PubMed]

- Nevatia, R. Depth measurement by motion stereo. Comput. Graph. Image Process. 1976, 5, 203–214. [Google Scholar] [CrossRef]

- Subrata, I.D.M.; Fujiura, T.; Nakao, S. 3-D Vision Sensor for Cherry Tomato Harvesting Robot. Jpn. Agric. Res. Q. 1997, 31, 257–264. [Google Scholar]

- Wang, Z.; Walsh, K.B.; Verma, B. On-tree mango fruit size estimation using RGB-D images. Sensors 2017, 17, 2738. [Google Scholar] [CrossRef]

- Rong, J.; Dai, G.; Wang, P. A peduncle detection method of tomato for autonomous harvesting. Complex Intell. Syst. 2021, 8, 2955–2969. [Google Scholar] [CrossRef]

- Zheng, B.; Sun, G.; Meng, Z.; Nan, R. Vegetable Size Measurement Based on Stereo Camera and Keypoints Detection. Sensors 2022, 22, 1617. [Google Scholar] [CrossRef]

- Jin, X.; Tang, L.; Li, R.; Zhao, B.; Ji, J.; Ma, Y. Edge Recognition and Reduced Transplantation Loss of Leafy Vegetable Seedlings with Intel RealSense D415 Depth Camera. Comput. Electron. Agric. 2022, 198, 107030. [Google Scholar] [CrossRef]

- Tran, T.M. A Study on Determination of Simple Objects Volume Using ZED Stereo Camera Based on 3D-Points and Segmentation Images. Int. J. Emerg. Trends Eng. Res. 2020, 8, 1990–1995. [Google Scholar] [CrossRef]

- Pan, S.; Ahamed, T. Pear Recognition in an Orchard from 3D Stereo Camera Datasets to Develop a Fruit Picking Mechanism Using Mask R-CNN. Sensors 2022, 22, 4187. [Google Scholar] [CrossRef]

- Bac, C.W.; Hemming, J.; Van Henten, E.J. Robust pixel-based classification of obstacles for robotic harvesting of sweet-pepper. Comput. Electron. Agric. 2013, 96, 148–162. [Google Scholar] [CrossRef]

- Yuan, T.; Li, W.; Feng, Q. Spectral imaging for greenhouse cucumber fruit detection based on binocular stereovision. In Proceedings of the 2010 ASABE International Meeting, Pittsburgh, PA, USA, 20–23 June 2010. [Google Scholar]

- Ji, C.; Feng, Q.; Yuan, T. Development and performance analysis of greenhouse cucumber harvesting robot system. Robot 2011, 6, 726–730. [Google Scholar]

- Bao, G.; Cai, S.; Qi, L. Multi-template matching algorithm for cucumber recognition in natural environment. Comput. Electron. Agric. 2016, 127, 754–762. [Google Scholar] [CrossRef]

- Zheng, T. Research on tomato detection in natural environment based on RC-YOLOv4. Comput. Electron. Agric. 2022, 198, 107029. [Google Scholar] [CrossRef]

- Xu, W.; Zhao, L.; Li, J. Detection and classification of tea buds based on deep learning. Comput. Electron. Agric. 2022, 192, 106547. [Google Scholar] [CrossRef]

- Zhong, Z.; Xiong, J.; Zheng, Z. A method for litchi picking points calculation in natural environment based on main fruit bearing branch detection. Comput. Electron. Agric. 2021, 189, 106398. [Google Scholar] [CrossRef]

- Zhang, B.; Huang, W.; Wang, C. Computer vision recognition of stem and calyx in apples using near-infrared linear-array structured light and 3D reconstruction. Biosyst. Eng. 2015, 139, 25–34. [Google Scholar] [CrossRef]

- Feng, Q.; Zhao, C.; Wang, X. Measurement method for targeted measurement of cherry tomato fruit clusters based on visual servoing. Trans. Chin. Soc. Agric. Mach. 2015, 31, 206–212. [Google Scholar]

- Zhao, Y.; Gong, L.; Huang, Y.; Liu, C. Robust tomato recognition for robotic harvesting using feature images fusion. Sensors 2016, 16, 173. [Google Scholar] [CrossRef]

- Li, Y. Research on Target Recognition and Positioning Technology of Citrus Harvesting Robot Based on Binocular Vision. Master’s Thesis, Chongqing University of Technology, Chongqing, China, 2017. [Google Scholar]

- Yan, J.; Wang, P.; Wang, T. Identification and Localization of Optimal Picking Point for Truss Tomato Based on Mask R-CNN and Depth Threshold Segmentation. In Proceedings of the 2021 IEEE 11th Annual International Conference on CYBER Technology in Automation, Control, and Intelligent Systems (CYBER), Jiaxing, China, 27–31 July 2021; pp. 899–903. [Google Scholar]

- Yang, Q.; Chen, C.; Dai, J. Tracking and recognition algorithm for a robot harvesting oscillating apples. Int. J. Agric. Biol. Eng. 2020, 13, 163–170. [Google Scholar] [CrossRef]

- Zhang, J. Target extraction of fruit picking robot vision system. J. Phys. Conf. Ser. 2019, 1423, 012061. [Google Scholar] [CrossRef]

- Xiong, J.; Zou, X.; Peng, H. Real-time recognition and picking point determination technology for perturbed citrus harvesting. Trans. Chin. Soc. Agric. Mach. 2014, 45, 38–43. [Google Scholar]

- Xiong, J.; Lin, R.; Liu, Z. Recognition technology of litchi picking robot in natural environment at night. Trans. Chin. Soc. Agric. Mach. 2017, 48, 28–34. [Google Scholar]

- Zhu, Y.; Zhang, T.; Liu, L. Fast Location of Table Grapes Picking Point Based on Infrared Tube. Inventions 2022, 7, 27. [Google Scholar] [CrossRef]

- Wu, F.; Duan, J.; Ai, P. Rachis detection and three-dimensional localization of cut-off point for vision-based banana robot. Comput. Electron. Agric. 2022, 198, 107079. [Google Scholar] [CrossRef]

- Silwal, A.; Karkee, M.; Zhang, Q. A hierarchical approach to apple identification for robotic harvesting. Trans. ASABE 2016, 59, 1079–1086. [Google Scholar]

- Qi, X.; Dong, J.; Lan, Y. Method for Identifying Litchi Picking Position Based on YOLOv5 and PSPNet. Remote Sens. 2022, 14, 2004. [Google Scholar] [CrossRef]

- Feng, Q.; Cheng, W.; Li, Y.; Wang, B.; Chen, L. Localization method of tomato plant pruning points based on Mask R-CNN. Trans. Chin. Soc. Agric. Eng. 2022, 38, 128–135. [Google Scholar]

- Feng, Q.; Cheng, W.; Zhang, W. Visual Tracking Method of Tomato Plant Main-Stems for Robotic Harvesting. In Proceedings of the 2021 IEEE 11th Annual International Conference on CYBER Technology in Automation, Control, and Intelligent Systems (CYBER), Jiaxing, China, 27–31 July 2021; pp. 886–890. [Google Scholar]

- Tafuro, A.; Adewumi, A.; Parsa, S. Strawberry picking point localization, ripeness, and weight estimation. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 2295–2302. [Google Scholar]

- Yu, Y.; Zhang, K.; Yang, L.; Zhang, D. Fruit detection for strawberry harvesting robot in non-structural environment based on Mask R-CNN. Comput. Electron. Agric. 2019, 163, 104846. [Google Scholar] [CrossRef]

- Zhang, X.; Fu, L.; Karkee, M.; Whiting, M.D.; Zhang, Q. Canopy segmentation using ResNet for mechanical harvesting of apples. IFAC-PapersOnLine 2019, 52, 300–305. [Google Scholar] [CrossRef]

- Zhang, P.; Liu, X.; Yuan, J. YOLO5-spear: A robust and real-time spear tips locator by improving image augmentation and lightweight network for selective harvesting robot of white asparagus. Biosyst. Eng. 2022, 218, 43–61. [Google Scholar] [CrossRef]

- Gai, R.; Chen, N.; Yuan, H. A detection algorithm for cherry fruits based on the improved YOLO-v4 model. Neural Comput. Appl. 2023, 35, 13895–13906. [Google Scholar] [CrossRef]

- Cui, Z.; Sun, H.M.; Yu, J.T. Fast detection method of green peach for application of picking robot. Appl. Intell. 2022, 52, 1718–1739. [Google Scholar] [CrossRef]

- Peng, H.X.; Huang, B.; Shao, Y.Y. Generalized improved SSD model for multi-class fruit picking target recognition in natural environment. Trans. Chin. Soc. Agric. Eng. 2018, 34, 155–162. [Google Scholar]

- Su, F.; Zhao, Y.; Wang, G. Tomato Maturity Classification Based on SE-YOLOv3-MobileNetV1 Network under Nature Greenhouse Environment. Agronomy 2022, 12, 1638. [Google Scholar] [CrossRef]

- Wu, J.; Zhang, S.; Zou, T. A Dense Litchi Target Recognition Algorithm for Large Scenes. Math. Prob. Eng. 2022, 2022, 4648105. [Google Scholar] [CrossRef]

- Chen, J.; Wang, Z.; Wu, J. An improved Yolov3 based on dual path network for cherry tomatoes detection. J. Food Process Eng. 2021, 44, 13803. [Google Scholar] [CrossRef]

- Ji, W.; Pan, Y.; Xu, B. A real-time Apple targets detection method for picking robot based on ShufflenetV2-YOLOX. Agriculture 2022, 12, 856. [Google Scholar] [CrossRef]

- Zhao, D.A.; Wu, R.D.; Liu, X.Y.; Zhao, Y.Y. Localization of Apple Picking Under Complex Background Based on YOLO Deep Convolutional Neural Network. Trans. Chin. Soc. Agric. Eng. 2019, 35, 164–173. [Google Scholar]

- Zhang, Q.; Chen, J.M.; Li, B.; Xu, C. Tomato cluster picking point identification based on RGB-D fusion and object detection. Trans. Chin. Soc. Agric. Eng. 2021, 37, 143–152. [Google Scholar]

- Peng, H.; Xue, C.; Shao, Y. Litchi detection in the field using an improved YOLOv3 model. Int. J. Agric. Biol. Eng. 2022, 15, 211–220. [Google Scholar] [CrossRef]

- Sun, G.; Wang, X. Three-dimensional point cloud reconstruction and morphology measurement method for greenhouse plants based on the Kinect sensor self-calibration. Agronomy 2019, 9, 596. [Google Scholar] [CrossRef]

- Isachsen, U.J.; Theoharis, T.; Misimi, E. Fast and accurate GPU-accelerated, high-resolution 3D registration for the robotic 3D reconstruction of compliant food objects. Comput. Electron. Agric. 2021, 180, 105929. [Google Scholar] [CrossRef]

- Xu, Z.F.; Jia, R.S.; Liu, Y.B. Fast method of detecting tomatoes in a complex scene for picking robots. IEEE Access 2020, 8, 55289–55299. [Google Scholar] [CrossRef]

- Rong, D.; Wang, H.; Ying, Y.; Zhang, Z.; Zhang, Y. Peach variety detection using VIS-NIR spectroscopy and deep learning. Comput. Electron. Agric. 2020, 175, 105553. [Google Scholar] [CrossRef]

- Wang, C.; Wan, Y.; Wang, G. Development of control system for a picking robot used in plate flame cutting. Res. Explor. Lab. 2017, 36, 41–44. [Google Scholar]

- Tian, Y.N.; Yang, G.D.; Wang, Z.; Wang, H.; Li, E.; Liang, Z. Apple detection during different growth stages in orchards using the improved YOLO-V3 model. Comput. Electron. Agric. 2019, 157, 417–426. [Google Scholar] [CrossRef]

- Parvathi, S.; Selvi, S.T. Detection of maturity stages of coconuts in complex background using Faster R-CNN model. Biosyst. Eng. 2021, 202, 119–132. [Google Scholar] [CrossRef]

- Changhong, W.; Qiang, L.; Xin, C. Citrus recognition based on YOLOv4 neural network. J. Phys. Conf. Ser. 2021, 1820, 012163. [Google Scholar]

- Chu, P.Y.; Li, Z.J.; Lammers, K.; Lu, R.F.; Liu, X.M. Deep learning-based apple detection using a suppression mask R-CNN. Pattern Recognit. Lett. 2021, 147, 206–211. [Google Scholar] [CrossRef]

- Xiong, J.; Zheng, Z.; Liang, J. Orange recognition method in night environment based on improved YOLO V3 network. J. Agric. Mach. 2020, 51, 199–206. [Google Scholar]

- Jia, W.; Tian, Y.; Luo, R. Detection and Segmentation of Overlapped Fruits Based on Optimized Mask R-CNN Application in Apple Harvesting Robot. Comput. Electron. Agric. 2020, 172, 105380. [Google Scholar] [CrossRef]

- Bi, S.; Gao, F.; Chen, J. Citrus target recognition method based on deep convolutional neural network. J. Agric. Mach. 2019, 50, 181–186. [Google Scholar]

- Zhang, Z.; Jia, W.; Shao, W. Optimization of FCOS network for green apple detection in complex orchard environments. Spectrosc. Spectr. Anal. 2022, 42, 647–653. [Google Scholar]

- Ji, W.; Zhao, D.; Cheng, F. Automatic recognition vision system guided for apple harvesting robot. Comput. Electr. Eng. 2012, 38, 1186–1195. [Google Scholar] [CrossRef]

- Xiong, J.; Liu, Z.; Tang, L.; Lin, R.; Bu, R.; Peng, H. Research on visual detection technology for green citrus in natural environment. Trans. Chin. Soc. Agric. Mach. 2018, 49, 45–52. [Google Scholar]

- Liu, S.; Huang, D.; Wang, Y. Learning Spatial Fusion for Single-Shot Object Detection. arXiv 2019, arXiv:1911.09516. [Google Scholar]

- Liu, X.; Zhao, D.; Jia, W. Cucumber fruits detection in greenhouses based on instance segmentation. IEEE Access 2019, 7, 139635–139642. [Google Scholar] [CrossRef]

- Lv, J.; Zhao, D. An algorithm for rapid tracking and recognition of target fruit for apple picking robot. Trans. Chin. Soc. Agric. Mach. 2014, 45, 65–72. [Google Scholar]

- Wei, X.Q.; Ji, K.; Lan, J.H. Automatic method of fruit object extraction under complex agricultural background for vision system of fruit picking robot. Optik 2014, 125, 5684–5689. [Google Scholar] [CrossRef]

- Arefi, A.; Motlagh, A.M.; Mollazade, K.; Teimourlou, R.F.J. Recognition and localization of ripen tomato based on machine vision. Aust. J. Crop Sci. 2011, 5, 1144–1149. [Google Scholar]

- He, Z.-L.; Xiong, J.-T.; Lin, R.; Zou, X.; Tang, L.Y.; Yang, Z.G.; Liu, Z.; Song, G. A method of green litchi recognition in natural environment based on improved LDA classifier. Comput. Electron. Agric. 2017, 140, 159–167. [Google Scholar] [CrossRef]

- Sun, S.; Song, H.; He, D. An adaptive segmentation method combining MSRCR and mean shift algorithm with K-means correction of green apples in natural environment. Inf. Process. Agric. 2019, 6, 200–215. [Google Scholar] [CrossRef]

- Singh, N.; Tewari, V.K.; Biswas, P.K. Image processing algorithms for in-field cotton boll detection in natural lighting conditions. Artif. Intell. Agric. 2021, 5, 142–156. [Google Scholar] [CrossRef]

- Chen, Y.; Xu, Z.; Tang, W. Identification of various food residuals on denim based on hyperspectral imaging system and combination optimal strategy. Artif. Intell. Agric. 2021, 5, 125–132. [Google Scholar] [CrossRef]

- Bargoti, S.; Underwood, J.P. Image segmentation for fruit detection and yield estimation in apple orchards. J. Field Robot. 2017, 34, 1039. [Google Scholar] [CrossRef]

- Xiong, J.; Zou, X.; Chen, L. Visual positioning of a picking manipulator for perturbed litchi. Trans. Chin. Soc. Agric. Eng. 2012, 28, 36–41. [Google Scholar]

- Zhuang, J.; Hou, C.; Tang, Y. Computer vision-based localisation of picking points for automatic litchi harvesting applications towards natural scenarios. Biosyst. Eng. 2019, 187, 1–20. [Google Scholar] [CrossRef]

- Benavides, M.; Cantón-Garbín, M.; Sánchez-Molina, J.A. Automatic tomato and peduncle location system based on computer vision for use in robotized harvesting. Appl. Sci. 2020, 10, 5887. [Google Scholar] [CrossRef]

- Lü, Q.; Cai, J.; Zhao, J.; Wang, F.; Tang, M. Real-time Recognition of Citrus on Trees in Natural Scene. Trans. Chin. Soc. Agric. Mach. 2010, 41, 170–188. [Google Scholar]

- Bulanon, D.M.; Kataoka, T.; Ota, Y.; Hiroma, T. A segmentation algorithm for the automatic recognition of Fuji apples at harvest. Biosyst. Eng. 2002, 83, 405–412. [Google Scholar] [CrossRef]

- Humburg, D.S.; Reid, J.F. Field performance of machine vision for the selective harvest of asparagus. SAE Trans. 1991, 100, 81–92. [Google Scholar]

- Liu, X.; Dai, B.; He, H. Real-time object segmentation for visual object detection in dynamic scenes. In Proceedings of the 2011 International Conference of Soft Computing and Pattern Recognition (SoCPaR), Dalian, China, 14–16 October 2011; pp. 423–428. [Google Scholar]

- Khoshroo, A.; Arefi, A.; Khodaei, J. Detection of red tomato on plants using image processing techniques. Agric. Commun. 2014, 2, 9–15. [Google Scholar]

- Xiong, J.; Lin, R.; Liu, Z. The recognition of litchi clusters and the calculation of picking point in a nocturnal natural environment. Biosyst. Eng. 2018, 166, 44–57. [Google Scholar] [CrossRef]

- Fu, J.; Duan, X.; Zou, X. Banana detection based on color and texture features in the natural environment. Comput. Electron. Agric. 2019, 167, 105057. [Google Scholar] [CrossRef]

- Wang, D.; He, D.; Song, H. Combining SUN-based visual attention model and saliency contour detection algorithm for apple image segmentation. Multimed. Tools Appl. 2019, 78, 17391–17411. [Google Scholar] [CrossRef]

- He, B.; Zhang, Y.; Gong, J.; Fu, G.; Zhao, Y.; Wu, R. Rapid Identification of Tomato Fruits in Nighttime Greenhouses Based on Improved YOLO v5. Trans. Chin. Soc. Agric. Mach. 2022, 53, 201–208. [Google Scholar]

- Wachs, J.P.; Stern, H.I.; Burks, T. Low and high-level visual feature-based apple detection from multi-modal images. Precis. Agric. 2010, 11, 717–735. [Google Scholar] [CrossRef]

- Gené-Mola, J.; Vilaplana, V.; Rosell-Polo, J.R.; Morros, J.-R.; Ruiz-Hidalgo, J.; Gregorio, E. Multi-modal deep learning for Fuji apple detection using RGB-D cameras and their radiometric capabilities. Comput. Electron. Agric. 2019, 162, 689–698. [Google Scholar] [CrossRef]

- Fu, L.; Wang, B.; Cui, Y. Kiwifruit recognition at nighttime using artificial lighting based on machine vision. Int. J. Agric. Biol. Eng. 2015, 8, 52–59. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015. [Google Scholar]

- Zhao, D.; Liu, X.; Chen, Y. Nighttime recognition method for apple harvesting robots. J. Agric. Mach. 2015, 46, 15–22.

- Liu, X.; Zhao, D.; Jia, W.; Ruan, C.; Tang, S.; Shen, T. A method of segmenting apples at night based on color and position information. Comput. Electron. Agric. 2016, 122, 118–123.

- Kitamura, S.; Oka, K.; Ikutomo, K.; Kimura, Y.; Taniguchi, Y. A Distinction Method for Fruit of Sweet Pepper Using Reflection of LED Light. In Proceedings of the Annual Conference of the SICE, Chofu, Japan, 20–22 August 2008.

- Xiong, J.; Bu, R.; Guo, W.; Chen, S.; Yang, Z. Surface Shadow Removal Method for Fruit Recognition of Harvesting Robots Under Natural Lighting Conditions. Trans. Chin. Soc. Agric. Eng. 2018, 34, 147–154.

- Wu, T.P.; Tang, C.K. A bayesian approach for shadow extraction from a single image. In Proceedings of the Tenth IEEE International Conference on Computer Vision, Beijing, China, 20–26 June 2005; pp. 480–487.

- Han, G.; Cosker, D. User-assisted image shadow removal. Image Vis. Comput. 2017, 62, 19–27.

- Liu, Y.; Shi, J.; Zhang, Y. Shadow Removal Algorithm for Single Outdoor Image. J. Softw. 2012, 23, 168–175.

- Levine, M.D.; Bhattacharyya, J. Removing shadows. Pattern Recognit. Lett. 2005, 26, 251–265.

- Qu, L.; Tian, J.; Han, Z. Pixel-wise orthogonal decomposition for color illumination invariant and shadow-free image. Opt. Express 2015, 23, 2220–2239.

- Shen, L.; Tan, P.; Lin, S. Intrinsic image decomposition with non-local texture cues. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 24–26 July 2008; pp. 1–7.

- Shen, L.; Yeo, C. Intrinsic images decomposition using a local and global sparse representation of reflectance. In Proceedings of the Computer Vision and Pattern Recognition 2011, Colorado Springs, CO, USA, 21–23 July 2011; pp. 697–704.

- Laffont, P.Y.; Bousseau, A.; Paris, S. Coherent intrinsic images from photo collections. ACM Trans. Graph. 2012, 31, 1–11.

- Figov, Z.; Koppel, M. Detecting and removing shadows. In Proceedings of the International Conference on Computer Graphics and Imaging, Las Vegas, NV, USA, 30 June–3 July 2004.

- Baba, M.; Mukunoki, M.; Asada, N. Shadow removal from a real image based on shadow density. In Proceedings of the ACM SIGGRAPH, Los Angeles, CA, USA, 8–12 August 2004.

- Weiss, Y. Deriving intrinsic images from image sequences. In Proceedings of the Eighth IEEE International Conference on Computer Vision, Vancouver, BC, Canada, 14–17 July 2001; pp. 68–75.

- Matsushita, Y.; Lin, S.; Kang, S.B. Estimating intrinsic images from image sequences with biased illumination. In Proceedings of the European Conference on Computer Vision, Prague, Czech Republic, 11–14 May 2004; pp. 274–286.

- Guo, A.X.; Zou, X.J.; Zhu, M.S.; Chen, Y.; Xiong, J.; Chen, L. Analysis and recognition of color characteristics of litchi fruit and fruit clusters based on exploratory analysis. Trans. Chin. Soc. Agric. Eng. 2013, 29, 191–198.

- Xiong, J.T.; Zou, X.J.; Wang, H.J. Mature litchi identification under different lighting conditions based on Retinex image enhancement. Trans. Chin. Soc. Agric. Eng. 2013, 29, 170–178.

- Peng, H.X.; Zou, X.J.; Chen, L.J. Fast identification of multi-color targets of litchi in the field based on dual-threshold Otsu algorithm. Trans. Chin. Soc. Agric. Mach. 2014, 45, 61–68.

- Ding, Y.; Lee, W.S.; Li, M. Feature extraction of hyperspectral images for detecting immature green citrus fruit. Front. Agric. Sci. Eng. 2018, 5, 475–484.

- Zhuang, J.J.; Luo, S.M.; Hou, C.J.; Tang, Y.; He, Y.; Xue, X.Y. Detection of orchard citrus fruits using a monocular machine vision-based method for automatic fruit picking applications. Comput. Electron. Agric. 2018, 152, 64–73.

- Saedi, S.I.; Khosravi, H. A deep neural network approach towards real-time on-branch fruit recognition for precision horticulture. Expert Syst. Appl. 2020, 159, 113594.

- Xu, Y. Two-stage approach for detecting slightly overlapping strawberries using HOG descriptor. Biosyst. Eng. 2013, 115, 144–153.

- Lin, Y.; Lv, Z.; Yang, C.; Lin, P.; Chen, F.; Hong, J. Recognition and Experiment of Overlapping Honey Pomelo in Natural Scene Images. Trans. Chin. Soc. Agric. Eng. 2021, 37, 158–167.

- Abdulla, A.A.; Ahmed, M.W. An improved image quality algorithm for exemplar-based image inpainting. Multimed. Tools Appl. 2021, 80, 13143–13156.

- Hedjazi, M.A.; Genc, Y. Efficient texture-aware multi-GAN for image inpainting. Knowl.-Based Syst. 2021, 217, 106789.

- Arun, P.L.; Kumar, R.M.S. Non-linear sorenson-dice exemplar image inpainting based bayes probability for occlusion removal in remote traffic control. Multimed. Tools Appl. 2021, 80, 11523–11538.

- Lv, J.D.; Wang, F.; Xu, L.M.; Ma, Z.H.; Yang, B. A segmentation method of bagged green apple image. Sci. Hortic. 2019, 246, 411–417.

- Tian, Y.; Duan, H.; Luo, R.; Zhang, Y.; Jia, W.; Lian, J.; Zheng, Y.; Ruan, C.; Li, C. Fast recognition and location of target fruit based on depth information. IEEE Access 2019, 7, 170553–170563.

- Liang, X.; Jin, C.; Ni, M. Acquisition and Experiment of the Position Information of Tomato Fruit String Picking Points. Trans. Chin. Soc. Agric. Eng. 2018, 34, 163–169.

- Lin, S.; Wang, N. Cloud robotic grasping of Gaussian mixture model based on point cloud projection under occlusion. Assem. Autom. 2021, 41, 312–323.

- Chen, L. Research on the Strawberry Harvest Robot Picking System. Master’s Thesis, China Agricultural University, Beijing, China, 2005.

- Li, C.Y.; Fang, A.Q.; Tan, H. Elevated Strawberry Picking Robot System Research. Mach. Des. Manuf. 2017, 6, 245–247.

- Shiigi, T.; Kurita, M.; Kondo, N.; Ninomiya, K.; Rajendra, P.; Kamata, J. Strawberry harvesting robot for fruits grown on tabletop culture. In Proceedings of the American Society of Agricultural and Biological Engineers, Providence, RI, USA, 29 July–20 August 2008; p. 084046.

- Liu, Z.; Liu, G.; Qiao, J. Three-Dimensional Visual Sensor Design of Apple Harvesting Robot. Trans. Chin. Soc. Agric. Mach. 2010, 41, 171–175.

- Hayashi, S.; Shigematsu, K.; Yamamoto, S.; Kobayashi, K.; Kohno, Y.; Kamata, J. Evaluation of a strawberry-harvesting robot in a field test. Biosyst. Eng. 2010, 105, 160–171.

- Zou, X.J.; Ye, M.; Luo, C.H. Fault-tolerant design of a limited universal fruit-picking end-effector based on vision-positioning error. Appl. Eng. Agric. 2016, 32, 5–18.

- Africa, A.D.M.; Tabalan, A.R.V.; Tan, M.A.A. Ripe fruit detection and classification using machine learning. Int. J. 2020, 8, 60852020.

- Xiang, R.; Duan, P.F. Design and experiment of night lighting system for tomato harvesting robots. J. Agric. Mach. 2016, 47, 8–14.

- Lv, J.; Zhao, D.A.; Wei, J.; Ding, S.H. Recognition of Overlapping and Occluded Fruits in Natural Environment. Optik 2016, 127, 1354–1362.

- Wasaki, F.; Imamura, H. A robust recognition method for occlusion of mini tomatoes based on hue information and the curvature. Int. J. Image Graph. 2015, 15, 1540004.

| Category | Segmentation Algorithm | Method | References | Object | Detection Time | Detection Accuracy |
|---|---|---|---|---|---|---|
| Traditional segmentation | Depth thresholding segmentation | HSV thresholding | [71,72,73,74,75] | Tomato, orange | 2.34 s | 83.5–93% |
| | Similarity measure segmentation | NCC, K-means | [33,46,66,76,77,78] | Tomato, orange, lychee, cucumber | 0.054–7.58 s | 85–98% |
| | Image binarization segmentation | Otsu | [79] | Grape | 0.61 s | 90% |
| | Shape segmentation | Hough circle transform | [75,80] | Banana, apple | 0.009–0.13 s | 93.2% |
| Machine learning | Semantic segmentation | PSPNet, U-Net | [81,82] | Lychee, cucumber | - | 92.5–98% |
| | Instance segmentation | Mask R-CNN, YOLACT | [7,69,83,84,85,86] | Tomato, strawberry, lychee | 0.04–0.154 s | 89.7–95.78% |
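Of the traditional methods listed above, Otsu's method [79] is the simplest to show compactly. The sketch below is a generic pure-Python implementation written for illustration, not the code used in the cited grape study. It assumes the image has already been reduced to a single 8-bit channel (for fruit this is often a color-difference channel such as R − G, which is an assumption here) and picks the threshold that maximizes the between-class variance ω0ω1(μ0 − μ1)². The names `otsu_threshold` and `binarize` are hypothetical.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t that maximizes the between-class variance.

    Class 0 holds pixels with value <= t, class 1 holds pixels with value > t.
    `pixels` must be integers in [0, levels).
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels in class 0 so far
    sum0 = 0  # sum of intensities in class 0 so far
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, so this split separates nothing
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # Otsu's criterion w0*w1*(mu0 - mu1)^2 with counts instead of probabilities;
        # this only rescales the value by total^2, so the argmax is unchanged.
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t


def binarize(pixels, t):
    """Label pixels above the threshold as foreground (1), the rest as background (0)."""
    return [1 if p > t else 0 for p in pixels]
```

For example, `otsu_threshold([20] * 50 + [200] * 50)` returns 20, the first gray level that separates the two modes, so `binarize` maps the dark pixels to 0 and the bright ones to 1. Because the method only looks at the histogram, it is fast, but it assumes the fruit and background form two reasonably distinct intensity modes.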

| Specific Method | References | Object | Detection Time | Detection Accuracy |
|---|---|---|---|---|
| Introducing residual modules (ResNet) | [67,87] | Tomato, lychee | 0.017–0.093 s | 94.44–97.07% |
| Modifying or replacing the backbone feature-extraction network | [8,68,88,89,90,91] | Citrus, tea tooth, cherry, apple, green peach | 0.01–0.063 s | 86.57–97.8% |
| Applying K-means clustering to the predicted candidate boxes | [43,92,93,94] | Tomato, citrus, lychee, cherry tomato | 0.058 s | 79–94.29% |
| Incorporating attention-mechanism modules | [91,92,95] | Apple, tomato | 0.015–0.227 s | 86.57–97.5% |
| Enhancing the activation function | [89,91,96,97] | Apple, tomato, lychee, navel orange, Emperor orange | 0.467 s | 94.7% |
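The K-means row refers to clustering candidate boxes. A common way to do this in YOLO-family detectors (an assumption about what the cited works [43,92,93,94] did, not a detail taken from them) is to cluster the widths and heights of labeled fruit boxes using d = 1 − IoU as the distance, so that large and small boxes are treated comparably; the cluster centres then serve as anchor sizes. The pure-Python sketch below illustrates that idea; `kmeans_anchors` and `iou_wh` are hypothetical names.

```python
import random


def iou_wh(box, anchor):
    """IoU of two boxes described only by (width, height), aligned at the same corner."""
    w1, h1 = box
    w2, h2 = anchor
    inter = min(w1, w2) * min(h1, h2)
    union = w1 * h1 + w2 * h2 - inter
    return inter / union


def kmeans_anchors(boxes, k, iters=100, seed=0):
    """Cluster (w, h) pairs into k anchors using d = 1 - IoU as the distance.

    Requires k <= len(boxes). Returns anchors sorted by area, smallest first.
    """
    rng = random.Random(seed)
    anchors = rng.sample(boxes, k)  # initialize with k distinct boxes
    for _ in range(iters):
        # Assignment step: highest IoU is the same as lowest 1 - IoU distance.
        clusters = [[] for _ in range(k)]
        for b in boxes:
            best = max(range(k), key=lambda j: iou_wh(b, anchors[j]))
            clusters[best].append(b)
        # Update step: move each anchor to the mean (w, h) of its members.
        new_anchors = []
        for j, members in enumerate(clusters):
            if not members:
                new_anchors.append(anchors[j])  # keep an empty cluster's anchor unchanged
                continue
            mw = sum(w for w, _ in members) / len(members)
            mh = sum(h for _, h in members) / len(members)
            new_anchors.append((mw, mh))
        if new_anchors == anchors:
            break  # assignments are stable, so the anchors no longer move
        anchors = new_anchors
    return sorted(anchors, key=lambda a: a[0] * a[1])
```

With six toy boxes forming a small group around 11 × 11 px and a large group around 52 × 60 px, `kmeans_anchors(boxes, 2)` returns one anchor near each group. Using 1 − IoU rather than Euclidean distance on (w, h) keeps large boxes from dominating the clustering.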

| Specific Method | References | Object | Reconstruction Accuracy |
|---|---|---|---|
| Density-based point clustering and localization approximation | [9] | Strawberry | 74.1% |
| Iterative nearest-point algorithm | [99] | Apple | 85.49% |
| Delaunay triangulation | [70] | Apple | 97.5% |
| Three-dimensional reconstruction based on iterative closest point (ICP) | [100] | Apples, bananas, cabbage, pears | - |
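The ICP row [100] rests on a two-step loop: match each source point to its nearest target point, then solve in closed form for the rigid motion that best aligns the matched pairs, and repeat. The sketch below is a simplified 2D point-to-point version written for illustration only; fruit-reconstruction pipelines work on 3D point clouds, typically obtain the rotation from an SVD (the Kabsch solution) and use a k-d tree for the nearest-neighbour search. All function names are hypothetical.

```python
import math


def dist2(p, q):
    """Squared Euclidean distance between two 2D points."""
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2


def nearest(p, points):
    """Brute-force nearest neighbour of p among points (O(n) per query)."""
    return min(points, key=lambda q: dist2(p, q))


def best_rigid_2d(src, dst):
    """Closed-form least-squares rotation angle and translation mapping paired src onto dst."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    # Cross-covariance terms of the centred point sets.
    sxx = sxy = syx = syy = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay, bx, by = px - sx, py - sy, qx - dx, qy - dy
        sxx += ax * bx
        sxy += ax * by
        syx += ay * bx
        syy += ay * by
    theta = math.atan2(sxy - syx, sxx + syy)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the target centroid.
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return theta, tx, ty


def transform(points, theta, tx, ty):
    """Rotate points by theta about the origin, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def icp_2d(source, target, iters=50, tol=1e-12):
    """Point-to-point ICP; returns the aligned source points and their final mean squared error."""
    current = list(source)
    err = float("inf")
    for _ in range(iters):
        matches = [nearest(p, target) for p in current]  # 1. correspondence step
        theta, tx, ty = best_rigid_2d(current, matches)   # 2. closed-form alignment step
        current = transform(current, theta, tx, ty)
        new_err = sum(dist2(p, nearest(p, target)) for p in current) / len(current)
        converged = abs(err - new_err) < tol
        err = new_err
        if converged:
            break
    return current, err
```

In the check below, an L-shaped point set is rotated by 0.1 rad and shifted by (0.3, −0.2); because the initial nearest-neighbour matches are already correct, a single closed-form step recovers the motion. With larger initial misalignment ICP can settle in a local minimum, which is why it is usually seeded with a coarse initial pose.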

| Challenge in Recognition and Positioning | Solution | References | Object | Time | Accuracy |
|---|---|---|---|---|---|
| Complex background | Deep learning technology | [104,105,106,107,108,109,110,111,112,113,114] | Orange, apple, green apple, lime, cucumber | 0.06–0.352 s | 85.49–90.75% |
| | Based on color features | [115,116,117,118] | Apple, tomato | 0.017 s | 43.9% |
| | Limitations of color features | [68,115,119,120,121,122,123] | Cucumber | 0.346 s | 89.47% |
| | Based on spatial relationships | [124,125,126] | Lychee, tomato | 0.03 s | 80.8% |
| | Removing background interference | [118,127,128,129,130,131,132,133,134] | Lychee, banana | 0.343–0.516 s | 89.63–93.75% |
| Different lighting environments | Research in nighttime environments | [49,78,132,135,136,137,138,139] | Kiwi, lychee, tomato, green apple | 0.516 s | 74–96.2% |
| | Adding light sources | [140,141,142] | Apple, tomato, green pepper | - | 67.79–80.8% |
| | Removing shadows | [143,144,145,146,147,148,149,150,151,152,153,154,155] | - | - | 83.16% |
| | Research under natural lighting conditions | [11,63,96,156,157,158,159] | Green pepper, lychee, green orange, tomato | 0.105–0.2 s | 59.2–94.75% |
| | Handling uneven lighting | [49,160] | - | - | 86% |
| Overlap and occlusion | Directly detecting occluded and overlapping fruit in images | [1,86,161,162] | Strawberry | 0.008 s | 87–99.8% |
| | Classifying and recognizing obstacles and unoccluded fruit | [91,160,163] | Apples, citrus, pomelo | 0.015 s | 91.48–94.02% |
| | Image restoration | [160,164,165,166] | - | - | 95.96–99.3% |
| | Computation and multi-sensor detection | [167,168,169,170] | Apples, tomato, cherry tomato | - | 78.8–96.61% |
| Uncertainty in harvesting | Reducing overall vibrations | [50,171,172,173,174] | Strawberry | - | 38% |
| | Sensor interference | [175] | Strawberry | 11.5 s | 38.1% |
| | Establishing a fault-tolerant mathematical model | [176] | Lychee, citrus | - | 78% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hou, G.; Chen, H.; Jiang, M.; Niu, R. An Overview of the Application of Machine Vision in Recognition and Localization of Fruit and Vegetable Harvesting Robots. Agriculture 2023, 13, 1814. https://doi.org/10.3390/agriculture13091814

Hou G, Chen H, Jiang M, Niu R. An Overview of the Application of Machine Vision in Recognition and Localization of Fruit and Vegetable Harvesting Robots. Agriculture. 2023; 13(9):1814. https://doi.org/10.3390/agriculture13091814

Chicago/Turabian Style: Hou, Guangyu, Haihua Chen, Mingkun Jiang, and Runxin Niu. 2023. "An Overview of the Application of Machine Vision in Recognition and Localization of Fruit and Vegetable Harvesting Robots" Agriculture 13, no. 9: 1814. https://doi.org/10.3390/agriculture13091814

APA Style: Hou, G., Chen, H., Jiang, M., & Niu, R. (2023). An Overview of the Application of Machine Vision in Recognition and Localization of Fruit and Vegetable Harvesting Robots. Agriculture, 13(9), 1814. https://doi.org/10.3390/agriculture13091814