Key Technologies of Intelligent Weeding for Vegetables: A Review

Abstract

1. Introduction

1.1. Background and Motivation

1.2. Related Surveys

1.3. Literature Indexing Methods

1.4. Paper Organisation

2. Types of Vegetables and Weeds

3. Vegetable and Weed Identification Technology

3.1. Global Weed Detection (GWD)

3.2. Crop-Rows Detection (CWD)

3.3. Vegetable Weed Precise Identification

3.3.1. Machine Learning (ML)

Traditional Machine Learning (TML)

Deep Learning (DL)

3.3.2. Sensor Detection Technology

Spectral Detection

| Platforms | Crops | Weeds | Sensors | Spatial Resolution | Bands | Detection Algorithms | Precision | References |
|---|---|---|---|---|---|---|---|---|
| Handheld | Sorghum | Amaranthus, Chenopodium retusum, Malva sylvestris, Cyperus rotundus Linn., Alloteropsis cimicina | Hyperspectral | - | 20 bands, 325–1075 nm | Stepwise linear discriminant analysis (SLDA) | 80–100% | Che Ya et al. [209] |
| UAV | Sorghum | Amaranthus, Chenopodium retusum, Malva sylvestris, Cyperus rotundus Linn., Alloteropsis cimicina | Multispectral | 0.87 mm | 440, 560, 680, 710, 720, and 850 nm | Object-based image analysis (OBIA) | 93% | Che Ya et al. [209] |
| Aircraft | Corn | Sorghum halepense, Xanthium strumarium, Abutilon theophrasti | Hyperspectral | 2 m | 21 bands, 456–1650 nm | Spectral angle mapper and spectral mixture analysis | 60–80% | Martin et al. [210] |
| Tractor | Lettuce | Broadleaf herba | Multispectral | 0.95 mm | 525, 650, and 850 nm | Otsu’s multi-level threshold | >81% | Elstone et al. [211] |
| Flat bed truck | Field pea, spring wheat, canola | Avena fatua Linn., Amaranthus retroflexus L., Chenopodium retusum | Hyperspectral | 1.25 mm | 61 bands, 400–1000 nm | Modified Chlorophyll Absorptance Reflectance Index; principal component analysis and stepwise discriminant analysis | 88–94% | Eddy et al. [212] |
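The table lists the spectral angle mapper among the detection algorithms. As a minimal sketch of the general technique (not the implementation used in any of the cited studies), each pixel spectrum can be compared with labelled reference spectra by the angle between them, and the pixel is assigned to the closest reference. The function names and the three-band reflectance values below are hypothetical and chosen only for illustration.

```python
import math


def spectral_angle(pixel, reference):
    """Return the angle in radians between two reflectance spectra."""
    if len(pixel) != len(reference):
        raise ValueError("spectra must have the same number of bands")
    dot = sum(p * r for p, r in zip(pixel, reference))
    norm_p = math.sqrt(sum(p * p for p in pixel))
    norm_r = math.sqrt(sum(r * r for r in reference))
    if norm_p == 0 or norm_r == 0:
        raise ValueError("spectra must be non-zero")
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, dot / (norm_p * norm_r)))
    return math.acos(cos_angle)


def classify(pixel, references):
    """Return the label whose reference spectrum has the smallest angle."""
    return min(references, key=lambda label: spectral_angle(pixel, references[label]))


# Hypothetical three-band reflectance values, for illustration only.
references = {
    "crop": [0.05, 0.08, 0.45],
    "weed": [0.07, 0.12, 0.30],
}

# A brighter pixel with the same spectral shape as the crop reference.
print(classify([0.10, 0.16, 0.90], references))  # prints "crop"
```

Because the angle depends only on the shape of the spectrum and not on its magnitude, a uniformly brighter or darker pixel keeps the same label, which is one reason the method is often considered tolerant of illumination changes.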

Fluorescence Detection

Ultrasonic Detection

LiDAR Detection

Combination Sensors Detection

3.3.3. Plant Modification Technology

4. Weeding Actuators and Robots

4.1. Intelligent Chemical Weeding

4.2. Intelligent Mechanical Weeding

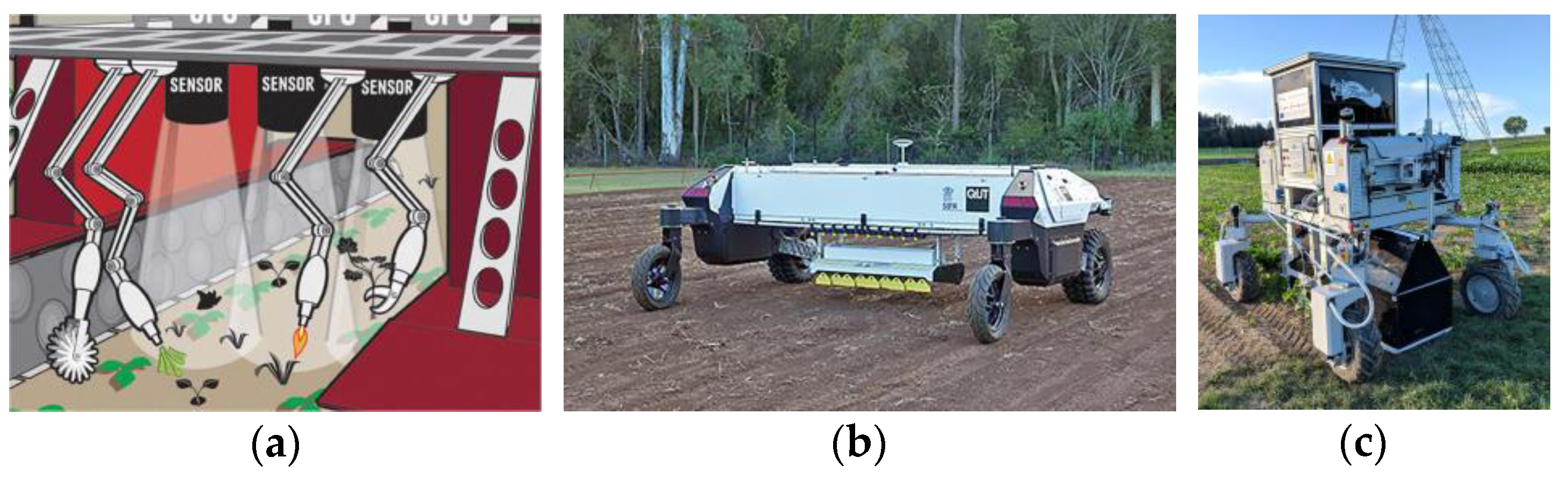

4.3. Intelligent Physical Weeding

4.4. Integrated Weed Management (IWM)

5. Challenges and Future Development

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Sultanbawa, Y.; Sivakumar, D. Enhanced nutritional and phytochemical profiles of selected underutilized fruits, vegetables, and legumes. Curr. Opin. Food Sci. 2022, 46, 100853.

- Appleton, K.; Dinnella, C.; Spinelli, S.; Morizet, D.; Saulais, L.; Hemingway, A.; Monteleone, E.; Depezay, L.; Perez-Cueto, F.; Hartwell, H. Consumption of a high quantity and a wide variety of vegetables are predicted by different food choice motives in older adults from France, Italy and the UK. Nutrients 2017, 9, 923.

- Mwadzingeni, L.; Afari-Sefa, V.; Shimelis, H.; N’Danikou, S.; Figlan, S.; Depenbusch, L.; Shayanowako, A.I.T.; Chagomoka, T.; Mushayi, M.; Schreinemachers, P.; et al. Unpacking the value of traditional African vegetables for food and nutrition security. Food Secur. 2021, 13, 1215–1226.

- Lee, S.; Choi, Y.; Jeong, H.S.; Lee, J.; Sung, J. Effect of different cooking methods on the content of vitamins and true retention in selected vegetables. Food Sci. Biotechnol. 2018, 27, 333–342.

- Li, X.; Guo, C.; Zhang, Y.; Yu, L.; Ma, F.; Wang, X.; Zhang, L.; Li, P. Contribution of different food types to vitamin A intake in the Chinese diet. Nutrients 2023, 15, 4028.

- Schreinemachers, P.; Simmons, E.B.; Wopereis, M.C.S. Tapping the economic and nutritional power of vegetables. Glob. Food Secur. 2018, 16, 36–45.

- Shinali, T.S.; Zhang, Y.; Altaf, M.; Nsabiyeze, A.; Han, Z.; Shi, S.; Shang, N. The valorization of wastes and byproducts from cruciferous vegetables: A review on the potential utilization of cabbage, cauliflower, and broccoli byproducts. Foods 2024, 13, 1163.

- Popovic-Djordjevic, J.B.; Kostic, A.Z.; Rajkovic, M.B.; Miljkovic, I.; Krstic, D.; Caruso, G.; Siavash, M.S.; Brceski, I. Organically vs. conventionally grown vegetables: Multi-elemental analysis and nutritional evaluation. Biol. Trace Elem. Res. 2022, 200, 426–436.

- Wang, H.; Zheng, Q.; Dong, A.; Wang, J.; Si, J. Chemical constituents, biological activities, and proposed biosynthetic pathways of steroidal saponins from healthy nutritious vegetable-Allium. Nutrients 2023, 15, 2233.

- Available online: https://www.fao.org/faostat/zh/#data/QCL (accessed on 20 June 2024).

- Fischer, G.; Patt, N.; Ochieng, J.; Mvungi, H. Participation in and gains from traditional vegetable value chains: A gendered analysis of perceptions of labour, income and expenditure in producers’ and traders’ households. Eur. J. Dev. Res. 2020, 32, 1080–1104.

- Liu, L.; Ross, H.; Ariyawardana, A. Building rural resilience through agri-food value chains and community interactions: A vegetable case study in Wuhan, China. J. Rural. Stud. 2023, 101, 103047.

- Ganesh, K.S.; Sridhar, A.; Vishali, S. Utilization of fruit and vegetable waste to produce value-added products: Conventional utilization and emerging opportunities-A review. Chemosphere 2022, 287, 132221.

- Velasco-Ramírez, A.P.; Velasco-Ramírez, A.; Hernández-Herrera, R.M.; Ceja-Esquivez, J.; Velasco-Ramírez, S.F.; Ramírez-Anguiano, A.C.; Torres-Morán, M.I. The impact of aqueous extracts of Verbesina sphaerocephala and Verbesina fastigiata on germination and growth in Solanum lycopersicum and Cucumis sativus seedlings. Horticulturae 2022, 8, 652.

- Gonzalez-Andujar, J.L.; Aguilera, M.J.; Davis, A.S.; Navarrete, L. Disentangling weed diversity and weather impacts on long-term crop productivity in a wheat-legume rotation. Field Crops Res. 2019, 232, 24–29.

- Tanveer, A.; Khaliq, A.; Javaid, M.M.; Chaudhry, M.N.; Awan, I. Implications of weeds of genus Euphorbia for crop production: A review. Planta Daninha 2013, 31, 723–731.

- Abdallah, I.S.; Atia, M.A.M.; Nasrallah, A.K.; El-Beltagi, H.S.; Kabil, F.F.; El-Mogy, M.M.; Abdeldaym, E.A. Effect of new pre-emergence herbicides on quality and yield of potato and its associated weeds. Sustainability 2021, 13, 9796.

- Cloyd, R.A.; Herrick, N.J. The case for sanitation as an insect pest management strategy in greenhouse production systems. J. Entomol. Sci. 2022, 57, 315–322.

- Madden, M.K.; Widick, I.V.; Blubaugh, C.K. Weeds impose unique outcomes for pests, natural enemies, and yield in two vegetable crops. Environ. Entomol. 2021, 50, 330–336.

- Thies, J.A. Grafting for managing vegetable crop pests. Pest Manag. Sci. 2021, 77, 4825–4835.

- Dentika, P.; Ozier-Lafontaine, H.; Penet, L. Weeds as pathogen hosts and disease risk for crops in the wake of a reduced use of herbicides: Evidence from yam (Dioscorea alata) fields and Colletotrichum pathogens in the tropics. J. Fungi 2021, 7, 283.

- Pumariño, L.; Sileshi, G.W.; Gripenberg, S.; Kaartinen, R.; Barrios, E.; Muchane, M.N.; Midega, C.; Jonsson, M. Effects of agroforestry on pest, disease and weed control: A meta-analysis. Basic Appl. Ecol. 2015, 16, 573–582.

- Tolman, J.H.; McLeod, D.G.R.; Harris, C.R. Cost of crop losses in processing tomato and cabbage in southwestern Ontario due to insects, weeds and/or diseases. Can. J. Plant Sci. 2004, 3, 915–921.

- Bloomer, D.J.; Harrington, K.C.; Ghanizadeh, H.; James, T.K. Robots and shocks: Emerging non-herbicide weed control options for vegetable and arable cropping. N. Z. J. Agric. Res. 2024, 67, 81–103.

- Abit, M.; Dimas, E.; Ramirez, A. Weed survey of small-scale vegetable farms in Ormoc City, Philippines with emphasis on altitude variation. Philipp. J. Crop Sci. 2022, 3, 40–48.

- Da, S.S.R.; Vechia, J.; Dos, S.C.; Almeida, D.P.; Da, C.F.M. Relationship of contact angle of spray solution on leaf surfaces with weed control. Sci. Rep. 2021, 11, 9886.

- Kaur, R.; Das, T.K.; Banerjee, T.; Raj, R.; Singh, R.; Sen, S. Impacts of sequential herbicides and residue mulching on weeds and productivity and profitability of vegetable pea in North-western Indo-Gangetic Plains. Sci. Hortic. 2020, 270, 109456.

- Raja, R.; Nguyen, T.T.; Slaughter, D.C.; Fennimore, S.A. Real-time weed-crop classification and localisation technique for robotic weed control in lettuce. Biosyst. Eng. 2020, 192, 257–274.

- Parkash, V.; Saini, R.; Singh, M.; Singh, S. Comparison of the effects of ammonium nonanoate and an essential oil herbicide on weed control efficacy and water use efficiency of pumpkin. Weed Technol. 2022, 36, 64–72.

- Asaf, E.; Rozenberg, G.; Shulner, I.; Eizenberg, H.; Lati, R.N. Evaluation of finger weeder safety and efficacy for intra-row weed removal in irrigated field crops. Weed Res. 2023, 63, 102–114.

- Jiao, J.; Wang, Z.; Luo, H.; Chen, G.; Liu, H.; Guan, J.; Hu, L.; Zang, Y. Development of a mechanical weeder and experiment on the growth, yield and quality of rice. Int. J. Agric. Biol. Eng. 2022, 15, 92–99.

- Jiao, J.; Hu, L.; Chen, G.; Chen, C.; Zang, Y. Development and experimentation of intra-row weeding device for organic rice. Agriculture 2024, 14, 146.

- Abdelaal, K.; Alsubeie, M.S.; Hafez, Y.; Emeran, A.; Moghanm, F.; Okasha, S.; Omara, R.; Basahi, M.A.; Darwish, D.B.E.; Ibrahim, M.F.M.; et al. Physiological and biochemical changes in vegetable and field crops under drought, salinity and weeds stresses: Control strategies and management. Agriculture 2022, 12, 2084.

- Lewis, D.G.; Cutulle, M.A.; Schmidt-Jeffris, R.A.; Blubaugh, C.K. Better together? Combining cover crop mulches, organic herbicides, and weed seed biological control in reduced-tillage systems. Environ. Entomol. 2020, 49, 1327–1334.

- Merfield, C.N.; Hampton, J.G.; Wratten, S.D. A direct-fired steam weeder. Weed Res. 2009, 49, 553–556.

- Sportelli, M.; Frasconi, C.; Fontanelli, M.; Pirchio, M.; Gagliardi, L.; Raffaelli, M.; Peruzzi, A.; Antichi, D. Innovative living mulch management strategies for organic conservation field vegetables: Evaluation of continuous mowing, flaming, and tillage performances. Agronomy 2022, 12, 622.

- Rastgordani, F.; Oveisi, M.; Mashhadi, H.R.; Naeimi, M.H.; Hosseini, N.M.; Asadian, N.; Bakhshian, A.; Müller-Schärer, H. Climate change impact on herbicide efficacy: A model to predict herbicide dose in common bean under different moisture and temperature conditions. Crop Prot. 2023, 163, 106097.

- Abdallah, I.S.; Abdelgawad, K.F.; El-Mogy, M.M.; El-Sawy, M.B.I.; Mahmoud, H.A.; Fahmy, M.A.M. Weed control, growth, nodulation, quality and storability of peas as affected by pre- and postemergence herbicides. Horticulturae 2021, 7, 307.

- Mosqueda, E.G.; Lim, C.A.; Sbatella, G.M.; Jha, P.; Lawrence, N.C.; Kniss, A.R. Effect of crop canopy and herbicide application on kochia (Bassia scoparia) density and seed production. Weed Sci. 2020, 68, 278–284.

- Colquhoun, J.B.; Heider, D.J.; Rittmeyer, R.A. Potato injury risk and weed control from reduced rates of PPO-inhibiting herbicides. Weed Technol. 2021, 35, 632–637.

- Buzanini, A.C.; Boyd, N.S. Tomato and bell pepper tolerance to preemergence herbicides applied posttransplant in plasticulture production. Weed Technol. 2023, 37, 67–70.

- Boyd, N.S.; Moretti, M.L.; Sosnoskie, L.M.; Singh, V.; Kanissery, R.; Sharpe, S.; Besançon, T.; Culpepper, S.; Nurse, R.; Hatterman-Valenti, H.; et al. Occurrence and management of herbicide resistance in annual vegetable production systems in North America. Weed Sci. 2022, 70, 515–528.

- Jhala, A.J.; Singh, M.; Shergill, L.; Singh, R.; Jugulam, M.; Riechers, D.E.; Ganie, Z.A.; Selby, T.P.; Werle, R.; Norsworthy, J.K. Very long chain fatty acid-inhibiting herbicides: Current uses, site of action, herbicide-resistant weeds, and future. Weed Technol. 2023, 38, e1.

- Rao, A.N.; Singh, R.G.; Mahajan, G.; Wani, S.P. Weed research issues, challenges, and opportunities in India. Crop Prot. 2020, 134, 104451.

- Martin, R.J. Weed research issues, challenges, and opportunities in Cambodia. Crop Prot. 2020, 134, 104288.

- Zawada, M.; Legutko, S.; Gościańska-Łowińska, J.; Szymczyk, S.; Nijak, M.; Wojciechowski, J.; Zwierzyński, M. Mechanical weed control systems: Methods and effectiveness. Sustainability 2023, 15, 15206.

- Zejak, D.; Popović, V.; Spalević, V.; Popović, D.; Radojević, V.; Ercisli, S.; Glišić, I. State and economical benefit of organic production: Fields crops and fruits in the world and Montenegro. Not. Bot. Horti Agrobot. Cluj-Napoca 2022, 50, 12815.

- Mazur-Włodarczyk, K.; Gruszecka-Kosowska, A. Conventional or organic? Motives and trends in Polish vegetable consumption. Int. J. Environ. Res. Public Health 2022, 19, 4667.

- Migliavada, R.; Ricci, F.Z.; Denti, F.; Haghverdian, D.; Torri, L. Is purchasing of vegetable dishes affected by organic or local labels? Empirical evidence from a university canteen. Appetite 2022, 173, 105995.

- Loera, B.; Murphy, B.; Fedi, A.; Martini, M.; Tecco, N.; Dean, M. Understanding the purchase intentions for organic vegetables across EU: A proposal to extend the TPB model. Br. Food J. 2022, 124, 4736–4754.

- de Lima, D.P.; Dos Santos Pinto Júnior, E.; de Menezes, A.V.; de Souza, D.A.; de São José, V.P.B.; Da Silva, B.P.; de Almeida, A.Q.; de Carvalho, I.M.M. Chemical composition, minerals concentration, total phenolic compounds, flavonoids content and antioxidant capacity in organic and conventional vegetables. Food Res. Int. 2024, 175, 113684.

- Imran; Amanullah. Assessment of chemical and manual weed control approaches for effective weed suppression and maize productivity enhancement under maize-wheat cropping system. Gesunde Pflanz. 2022, 74, 167–176.

- Awan, D.; Ahmad, F.; Ashraf, S. Effective weed control strategy in tomato kitchen gardens: Herbicides, mulching or manual weeding. Curr. Sci. India 2018, 6, 1325–1329.

- Gazoulis, I.; Kanatas, P.; Antonopoulos, N. Cultural practices and mechanical weed control for the management of a low-diversity weed community in spinach. Diversity 2021, 13, 616.

- Pandey, H.S.; Tiwari, G.S.; Sharma, A.K. Design and development of an e-powered inter row weeder for small farm mechanization. J. Sci. Ind. Res. 2023, 82, 671–682.

- Baidhe, E.; Kigozi, J.; Kambugu, R.K. Design, construction and performance evaluation for a maize weeder attachable to an ox-plough frame. J. Biosyst. Eng. 2020, 45, 65–70.

- Richard, D.; Leimbrock-Rosch, L.; Keßler, S.; Stoll, E.; Zimmer, S. Soybean yield response to different mechanical weed control methods in organic agriculture in Luxembourg. Eur. J. Agron. 2023, 147, 126842.

- Jiao, J.K.; Hu, L.; Chen, G.L.; Tu, T.P.; Wang, Z.M.; Zang, Y. Design and experiment of an inter-row weeding equipment applied in paddy field. Trans. Chin. Soc. Agric. Eng. (Trans. CSAE) 2023, 39, 11–22.

- Ghorai, A.K. “Agricultural weeder with nail assembly” for weed control, soil moisture conservation, soil aeration and increasing crop productivity. Int. J. Environ. Clim. Chang. 2022, 12, 3056–3068.

- Robačer, M.; Canali, S.; Kristensen, H.L.; Bavec, F.; Mlakar, S.G.; Jakop, M.; Bavec, M. Cover crops in organic field vegetable production. Sci. Hortic. 2016, 208, 104–110.

- Greer, L.; Dole, J.M. Aluminum foil, aluminium-painted, plastic, and degradable mulches increase yields and decrease insect-vectored viral diseases of vegetables. Hort. Technol. 2003, 2, 276–284.

- McCollough, M.R.; Poulsen, F.; Melander, B. Informing the operation of intelligent automated intra-row weeding machines in direct-sown sugar beet (Beta vulgaris L.): Crop effects of hoeing and flaming across early growth stages, tool working distances, and intensities. Crop Prot. 2024, 177, 106562.

- Morselli, N.; Puglia, M.; Pedrazzi, S.; Muscio, A.; Tartarini, P.; Allesina, G. Energy, environmental and feasibility evaluation of tractor-mounted biomass gasifier for flame weeding. Sustain. Energy Technol. Assess. 2022, 50, 101823.

- Borowy, A.; Kapłan, M. Evaluating glufosinate-ammonium and flame weeding for weed control in sweet marjoram (Origanum majorana L.) cultivation. Acta Sci. Pol. Hortorum Cultus 2022, 21, 71–83.

- Rajković, M.; Malidža, G.; Tomaš Simin, M.; Milić, D.; Glavaš-Trbić, D.; Meseldžija, M.; Vrbničanin, S. Sustainable organic corn production with the use of flame weeding as the most sustainable economical solution. Sustainability 2021, 13, 572.

- Galbraith, C.G. Electrical Weed Control in Integrated Weed Management: Impacts on Vegetable Production, Weed Seed Germination, and Soil Microbial Communities. Master’s Thesis, Michigan State University, East Lansing, MI, USA, 2023.

- Moore, L.D.; Jennings, K.M.; Monks, D.W.; Boyette, M.D.; Leon, R.G.; Jordan, D.L.; Ippolito, S.J.; Blankenship, C.D.; Chang, P. Evaluation of electrical and mechanical Palmer amaranth (Amaranthus palmeri) management in cucumber, peanut, and sweetpotato. Weed Technol. 2023, 37, 53–59.

- Matsuda, Y.; Kakutani, K.; Toyoda, H. Unattended electric weeder (UEW): A novel approach to control floor weeds in orchard nurseries. Agronomy 2023, 13, 1954.

- Bloomer, D.J.; Harrington, K.C.; Ghanizadeh, H.; James, T.K. Micro electric shocks control broadleaved and grass weeds. Agronomy 2022, 12, 2039.

- Guerra, N.; Fennimore, S.A.; Siemens, M.C.; Goodhue, R.E. Band steaming for weed and disease control in leafy greens and carrots. HortScience 2022, 57, 1453–1459.

- Zhang, Y.; Staab, E.S.; Slaughter, D.C.; Giles, D.K.; Downey, D. Automated weed control in organic row crops using hyperspectral species identification and thermal micro-dosing. Crop Prot. 2012, 41, 96–105.

- Rasmussen, J.; Griepentrog, H.W.; Nielsen, J.; Henriksen, C.B. Automated intelligent rotor tine cultivation and punch planting to improve the selectivity of mechanical intra-row weed control. Weed Res. 2012, 52, 327–337.

- Zhu, H.; Zhang, Y.; Mu, D.; Bai, L.; Zhuang, H.; Li, H. YOLOX-based blue laser weeding robot in corn field. Front. Plant Sci. 2022, 13, 1017803.

- Kennedy, H.; Fennimore, S.A.; Slaughter, D.C.; Nguyen, T.T.; Vuong, V.L.; Raja, R.; Smith, R.F. Crop signal markers facilitate crop detection and weed removal from lettuce and tomato by an intelligent cultivator. Weed Technol. 2020, 34, 342–350.

- Mennan, H.; Jabran, K.; Zandstra, B.H.; Pala, F. Non-chemical weed management in vegetables by using cover crops: A review. Agronomy 2020, 10, 257.

- Merfield, C.N. Could the dawn of Level 4 robotic weeders facilitate a revolution in ecological weed management? Weed Res. 2023, 63, 83–87.

- Cutulle, M.A.; Maja, J.M. Determining the utility of an unmanned ground vehicle for weed control in specialty crop systems. Ital. J. Agron. 2021, 16, 1865.

- Stenchly, K.; Lippmann, S.; Waongo, A.; Nyarko, G.; Buerkert, A. Weed species structural and functional composition of okra fields and field periphery under different management intensities along the rural-urban gradient of two West African cities. Agric. Ecosyst. Environ. 2017, 237, 213–223.

- Cruz-Garcia, G.S.; Price, L.L. Weeds as important vegetables for farmers. Acta Soc. Bot. Pol. 2012, 81, 397–403.

- Roberts, J.; Florentine, S. Advancements and developments in the detection and control of invasive weeds: A global review of the current challenges and future opportunities. Weed Sci. 2024, 72, 205–215.

- Xiang, M.; Qu, M.; Wang, G.; Ma, Z.; Chen, X.; Zhou, Z.; Qi, J.; Gao, X.; Li, H.; Jia, H. Crop detection technologies, mechanical weeding executive parts and working performance of intelligent mechanical weeding: A review. Front. Plant Sci. 2024, 15, 1361002.

- Coleman, M.J.; Kristiansen, P.; Sindel, B.M.; Fyfe, C. Imperatives for integrated weed management in vegetable production: Evaluating research and adoption. Weed Biol. Manag. 2024, 24, 3–14.

- Li, Y.; Guo, Z.; Shuang, F.; Zhang, M.; Li, X. Key technologies of machine vision for weeding robots: A review and benchmark. Comput. Electron. Agric. 2022, 196, 106880.

- Zhang, W.; Miao, Z.; Li, N.; He, C.; Sun, T. Review of current robotic approaches for precision weed management. Curr. Robot. Rep. 2022, 3, 139–151.

- Murad, N.Y.; Mahmood, T.; Forkan, A.; Morshed, A.; Jayaraman, P.P.; Siddiqui, M.S. Weed detection using deep learning: A systematic literature review. Sensors 2023, 23, 3670.

- Esposito, M.; Crimaldi, M.; Cirillo, V.; Sarghini, F.; Maggio, A. Drone and sensor technology for sustainable weed management: A review. Chem. Biol. Technol. Agric. 2021, 8, 18.

- Fernández Quintanilla, C.; Peña, J.M.; Andújar, D.; Dorado, J.; Ribeiro, A.; López Granados, F. Is the current state of the art of weed monitoring suitable for site-specific weed management in arable crops? Weed Res. 2018, 58, 259–272.

- Singh, V.; Rana, A.; Bishop, M.; Filippi, A.M.; Cope, D.; Rajan, N.; Bagavathiannan, M. Chapter Three: Unmanned aircraft systems for precision weed detection and management: Prospects and challenges. Adv. Agron. 2020, 159, 93–134.

- Bolch, E.A. Comparing Mapping Capabilities of Small Unmanned Aircraft and Manned Aircraft for Monitoring Invasive Plants in a Wetland Environment. Master’s Thesis, University of California, Merced, CA, USA, 2020.

- Mohidem, N.A.; Che’Ya, N.N.; Juraimi, A.S.; Fazlil Ilahi, W.F.; Mohd Roslim, M.H.; Sulaiman, N.; Saberioon, M.; Mohd Noor, N. How can unmanned aerial vehicles be used for detecting weeds in agricultural fields? Agriculture 2021, 10, 1004.

- Huang, Y.; Reddy, K.N.; Fletcher, R.S.; Pennington, D. UAV low-altitude remote sensing for precision weed management. Weed Technol. 2018, 32, 2–6.

- Su, J.; Yi, D.; Coombes, M.; Liu, C.; Zhai, X.; McDonald-Maier, K.; Chen, W. Spectral analysis and mapping of blackgrass weed by leveraging machine learning and UAV multispectral imagery. Comput. Electron. Agric. 2022, 192, 106621.

- de Camargo, T.; Schirrmann, M.; Landwehr, N.; Dammer, K.; Pflanz, M. Optimized deep learning model as a basis for fast UAV mapping of weed species in winter wheat crops. Remote Sens. 2021, 13, 1704.

- Sonobe, R.; Yamaya, Y.; Tani, H.; Wang, X.; Kobayashi, N.; Mochizuki, K. Assessing the suitability of data from Sentinel-1A and 2A for crop classification. GISci. Remote Sens. 2017, 54, 918–938.

- Anderegg, J.; Tschurr, F.; Kirchgessner, N.; Treier, S.; Schmucki, M.; Streit, B.; Walter, A. On-farm evaluation of UAV-based aerial imagery for season-long weed monitoring under contrasting management and pedoclimatic conditions in wheat. Comput. Electron. Agric. 2023, 204, 107558.

- Fraccaro, P.; Butt, J.; Edwards, B.; Freckleton, R.P.; Childs, D.Z.; Reusch, K.; Comont, D. A deep learning application to map weed spatial extent from unmanned aerial vehicles imagery. Remote Sens. 2022, 14, 4197.

- Lambert, J.P.T.; Hicks, H.L.; Childs, D.Z.; Freckleton, R.P. Evaluating the potential of Unmanned Aerial Systems for mapping weeds at field scales: A case study with Alopecurus myosuroides. Weed Res. 2018, 58, 35–45.

- Lambert, J.P.T.; Childs, D.Z.; Freckleton, R.P. Testing the ability of unmanned aerial systems and machine learning to map weeds at subfield scales: A test with the weed Alopecurus myosuroides (Huds). Pest Manag. Sci. 2019, 75, 2283–2294.

- Castaldi, F.; Pelosi, F.; Pascucci, S.; Casa, R. Assessing the potential of images from unmanned aerial vehicles (UAV) to support herbicide patch spraying in maize. Precis. Agric. 2017, 18, 76–94.

- Gao, J.; Liao, W.; Nuyttens, D.; Lootens, P.; Vangeyte, J.; Pižurica, A.; He, Y.; Pieters, J.G. Fusion of pixel and object-based features for weed mapping using unmanned aerial vehicle imagery. Int. J. Appl. Earth Obs. 2018, 67, 43–53.

- Garcia-Ruiz, F.J.; Wulfsohn, D.; Rasmussen, J. Sugar beet (Beta vulgaris L.) and thistle (Cirsium arvensis L.) discrimination based on field spectral data. Biosyst. Eng. 2015, 139, 1–15.

- Sa, I.; Popovic, M.; Khanna, R.; Chen, Z.; Lottes, P.; Liebisch, F.; Nieto, J.; Stachniss, C.; Walter, A.; Siegwart, R. WeedMap: A large-scale semantic weed mapping framework using aerial multispectral imaging and deep neural network for precision farming. Remote Sens. 2018, 10, 1423.

- Zou, K.; Chen, X.; Zhang, F.; Zhou, H.; Zhang, C. A field weed density evaluation method based on UAV imaging and modified. Remote Sens. 2021, 13, 310.

- Khan, S.; Tufail, M.; Khan, M.T.; Khan, Z.A.; Iqbal, J.; Alam, M. A novel semi-supervised framework for UAV based crop/weed classification. PLoS ONE 2021, 16, e0251008.

- Gašparović, M.; Zrinjski, M.; Barković, Đ.; Radočaj, D. An automatic method for weed mapping in oat fields based on UAV imagery. Comput. Electron. Agric. 2020, 173, 105385.

- de Castro, A.; Torres-Sánchez, J.; Peña, J.; Jiménez-Brenes, F.; Csillik, O.; López-Granados, F. An automatic random forest-OBIA algorithm for early weed mapping between and within crop rows using UAV imagery. Remote Sens. 2018, 10, 285.

- López-Granados, F.; Torres-Sánchez, J.; Serrano-Pérez, A.; de Castro, A.I.; Mesas-Carrascosa, F.J.; Peña, J. Early season weed mapping in sunflower using UAV technology: Variability of herbicide treatment maps against weed thresholds. Precis. Agric. 2016, 17, 183–199.

- Pérez-Ortiz, M.; Peña, J.M.; Gutiérrez, P.A.; Torres-Sánchez, J.; Hervás-Martínez, C.; López-Granados, F. Selecting patterns and features for between and within crop-row weed mapping using UAV-imagery. Expert Syst. Appl. 2016, 47, 85–94.

- Rozenberg, G.; Kent, R.; Blank, L. Consumer-grade UAV utilized for detecting and analyzing late-season weed spatial distribution patterns in commercial onion fields. Precis. Agric. 2021, 22, 1317–1332.

- Genze, N.; Wirth, M.; Schreiner, C.; Ajekwe, R.; Grieb, M.; Grimm, D.G. Improved weed segmentation in UAV imagery of sorghum fields with a combined deblurring segmentation model. Plant Methods 2023, 19, 87.

- Zhang, Y.; Slaughter, D.C.; Staab, E.S. Robust hyperspectral vision-based classification for multi-season weed mapping. ISPRS J. Photogramm. Remote Sens. 2012, 69, 65–73.

- Huang, H.; Deng, J.; Lan, Y.; Yang, A.; Deng, X.; Zhang, L. A fully convolutional network for weed mapping of unmanned aerial vehicle (UAV) imagery. PLoS ONE 2018, 13, e0196302.

- Huang, H.; Deng, J.; Lan, Y.; Yang, A.; Deng, X.; Wen, S.; Zhang, H.; Zhang, Y. Accurate weed mapping and prescription map generation based on fully convolutional networks using UAV imagery. Sensors 2018, 18, 3299.

- de Castro, A.I.; Jurado-Expósito, M.; Peña-Barragán, J.M.; López-Granados, F. Airborne multi-spectral imagery for mapping cruciferous weeds in cereal and legume crops. Precis. Agric. 2012, 13, 302–321.

- de Castro, A.I.; López-Granados, F.; Jurado-Expósito, M. Broad-scale cruciferous weed patch classification in winter wheat using QuickBird imagery for in-season site-specific control. Precis. Agric. 2013, 14, 392–413.

- Castillejo-González, I.L.; Peña-Barragán, J.M.; Jurado-Expósito, M.; Mesas-Carrascosa, F.J.; López-Granados, F. Evaluation of pixel- and object-based approaches for mapping wild oat (Avena sterilis) weed patches in wheat fields using QuickBird imagery for site-specific management. Eur. J. Agron. 2014, 59, 57–66.

- Revathy, R.; Setia, R.; Jain, S.; Das, S.; Gupta, S.; Pateriya, B. Classification of potato in Indian Punjab using time-series Sentinel-2 images. In Artificial Intelligence and Machine Learning in Satellite Data Processing and Services; Lecture Notes in Electrical Engineering; Springer Nature: Singapore, 2023; Volume 970, pp. 193–201.

- Mudereri, B.T.; Dube, T.; Niassy, S.; Kimathi, E.; Landmann, T.; Khan, Z.; Abdel-Rahman, E.M. Is it possible to discern Striga weed (Striga hermonthica) infestation levels in maize agro-ecological systems using in-situ spectroscopy? Int. J. Appl. Earth Obs. 2020, 85, 102008.

- Mudereri, B.T.; Abdel-Rahman, E.M.; Dube, T.; Niassy, S.; Khan, Z.; Tonnang, H.E.Z.; Landmann, T. A two-step approach for detecting Striga in a complex agroecological system using Sentinel-2 data. Sci. Total Environ. 2021, 762, 143151.

- Mkhize, Y.; Madonsela, S.; Cho, M.; Nondlazi, B.; Main, R.; Ramoelo, A. Mapping weed infestation in maize fields using Sentinel-2 data. Phys. Chem. Earth Parts A/B/C 2024, 134, 103571.

- Mudereri, B.T.; Dube, T.; Abdel-Rahman, E.M.; Niassy, S.; Kimathi, E.; Khan, Z.; Landmann, T. A comparative analysis of PlanetScope and Sentinel-2 space-borne sensors in mapping Striga weed using guided regularised random forest classification ensemble. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W13, 701–708.

- He, J.; Zang, Y.; Luo, X.; Zhao, R.; He, J.; Jiao, J. Visual detection of rice rows based on Bayesian decision theory and robust regression least squares method. Int. J. Agric. Biol. Eng. 2021, 14, 199–206.

- Wang, S.; Su, D.; Jiang, Y.; Tan, Y.; Qiao, Y.; Yang, S.; Feng, Y.; Hu, N. Fusing vegetation index and ridge segmentation for robust vision based autonomous navigation of agricultural robots in vegetable farms. Comput. Electron. Agric. 2023, 213, 108235.

- Suh, H.K.; Hofstee, J.W.; IJsselmuiden, J.; van Henten, E.J. Sugar beet and volunteer potato classification using Bag-of-Visual-Words model, Scale-Invariant Feature Transform, or Speeded Up Robust Feature descriptors and crop row information. Biosyst. Eng. 2018, 166, 210–226. [Google Scholar] [CrossRef]

- Bah, M.; Hafiane, A.; Canals, R. Deep learning with unsupervised data labeling for weed detection in line crops in UAV images. Remote Sens. 2018, 10, 1690. [Google Scholar] [CrossRef]

- Shi, J.; Bai, Y.; Diao, Z.; Zhou, J.; Yao, X.; Zhang, B. Row detection BASED navigation and guidance for agricultural robots and autonomous vehicles in row-crop fields: Methods and applications. Agronomy 2023, 13, 1780. [Google Scholar] [CrossRef]

- Ronchetti, G.; Mayer, A.; Facchi, A.; Ortuani, B.; Sona, G. Crop row detection through UAV surveys to optimize on-farm irrigation management. Remote Sens. 2020, 12, 1967. [Google Scholar] [CrossRef]

- Bah, M.D.; Hafiane, A.; Canals, R. CRowNet: Deep network for crop row detection in UAV images. IEEE Access 2020, 8, 5189–5200. [Google Scholar] [CrossRef]

- de Silva, R.; Cielniak, G.; Gao, J. Vision based crop row navigation under varying field conditions in arable fields. Comput. Electron. Agric. 2024, 217, 108581. [Google Scholar] [CrossRef]

- Han, C.J.; Zheng, K.; Zhao, X.G.; Zheng, S.Y.; Fu, H.; Zhai, C.Y. Design and experiment of row identification and row-oriented spray control system for field cabbage crops. Trans. Chin. Soc. Agric. Mach. 2022, 53, 89–101. [Google Scholar]

- de Silva, R.; Cielniak, G.; Wang, G.; Gao, J. Deep learning-based crop row detection for infield navigation of agri-robots. J. Field Robot. 2023, 40, 1–23. [Google Scholar] [CrossRef]

- Winterhalter, W.; Fleckenstein, F.V.; Dornhege, C.; Burgard, W. Crop row detection on tiny plants with the pattern Hough transform. IEEE Robot. Autom. Lett. 2018, 3, 3394–3401. [Google Scholar] [CrossRef]

- Winterhalter, W.; Fleckenstein, F.; Dornhege, C.; Burgard, W. Localization for precision navigation in agricultural fields—Beyond crop row following. J. Field Robot. 2021, 38, 429–451. [Google Scholar] [CrossRef]

- Chen, J.; Qiang, H.; Wu, J.; Xu, G.; Wang, Z. Navigation path extraction for greenhouse cucumber-picking robots using the prediction-point Hough transform. Comput. Electron. Agric. 2021, 180, 105911. [Google Scholar] [CrossRef]

- Cruz Ulloa, C.; Krus, A.; Barrientos, A.; Cerro, J.D.; Valero, C. Robotic fertilization in strip cropping using a CNN vegetables detection-characterization method. Comput. Electron. Agric. 2022, 193, 106684. [Google Scholar] [CrossRef]

- Wendel, A.; Underwood, J. Self-supervised weed detection in vegetable crops using ground based hyperspectral imaging. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 5128–5135. [Google Scholar]

- Ospina, R.; Noguchi, N. Simultaneous mapping and crop row detection by fusing data from wide angle and telephoto images. Comput. Electron. Agric. 2019, 162, 602–612. [Google Scholar] [CrossRef]

- Tian, Z.; Junfang, X.; Gang, W.; Jianbo, Z. Automatic navigation path detection method for tillage machines working on high crop stubble fields based on machine vision. Int. J. Agric. Biol. Eng. 2014, 7, 29. [Google Scholar]

- Shi, J.; Bai, Y.; Zhou, J.; Zhang, B. Multi-crop navigation line extraction based on improved YOLO-v8 and threshold-DBSCAN under complex agricultural environments. Agriculture 2024, 14, 45. [Google Scholar] [CrossRef]

- Yang, R.; Zhai, Y.; Zhang, J.; Zhang, H.; Tian, G.; Huang, P.; Li, L. Potato visual navigation line detection based on deep learning and feature midpoint adaptation. Agriculture 2022, 12, 1363. [Google Scholar] [CrossRef]

- Louargant, M.; Jones, G.; Faroux, R.; Paoli, J.; Maillot, T.; Gée, C.; Villette, S. Unsupervised classification algorithm for early weed detection in row-crops by combining spatial and spectral information. Remote Sens. 2018, 10, 718–761. [Google Scholar] [CrossRef]

- Bah, M.D.; Hafiane, A.; Canals, R. Hierarchical graph representation for unsupervised crop row detection in images. Expert Syst. Appl. 2023, 216, 119478. [Google Scholar] [CrossRef]

- Zhao, R.; Yuan, X.; Yang, Z.; Zhang, L. Image-based crop row detection utilizing the Hough transform and DBSCAN clustering analysis. IET Image Process 2024, 18, 1161–1177. [Google Scholar] [CrossRef]

- Rabab, S.; Badenhorst, P.; Chen, Y.P.; Daetwyler, H.D. A template-free machine vision-based crop row detection algorithm. Precis. Agric. 2021, 22, 124–153. [Google Scholar] [CrossRef]

- Vidović, I.; Cupec, R.; Hocenski, Ž. Crop row detection by global energy minimization. Pattern Recogn. 2016, 55, 68–86. [Google Scholar] [CrossRef]

- Wang, A.C.; Zhang, M.; Liu, Q.S.; Wang, L.L.; Wei, X.H. Seedling crop row extraction method based on regional growth and mean shift clustering. Trans. Chin. Soc. Agric. Eng. (Trans. CSAE) 2021, 37, 202–210. [Google Scholar]

- Chen, Z.W.; Li, W.; Zhang, W.Q.; Li, Y.W.; Li, M.S.; Li, H. Vegetable crop row extraction method based on accumulation threshold of Hough Transformation. Trans. Chin. Soc. Agric. Eng. (Trans. CSAE) 2019, 35, 314–322. [Google Scholar]

- Hamuda, E.; Mc Ginley, B.; Glavin, M.; Jones, E. Improved image processing-based crop detection using Kalman filtering and the Hungarian algorithm. Comput. Electron. Agric. 2018, 148, 37–44. [Google Scholar] [CrossRef]

- Singh, K.; Agrawal, K.N.; Bora, G.C. Advanced techniques for weed and crop identification for site specific weed management. Biosyst. Eng. 2011, 109, 52–64. [Google Scholar] [CrossRef]

- Saleem, M.H.; Potgieter, J.; Arif, K.M. Automation in agriculture by machine and deep learning techniques: A review of recent developments. Precis. Agric. 2021, 22, 2053–2091. [Google Scholar] [CrossRef]

- Wu, Z.; Chen, Y.; Zhao, B.; Kang, X.; Ding, Y. Review of weed detection methods based on computer vision. Sensors 2021, 21, 3647. [Google Scholar] [CrossRef] [PubMed]

- Al-Badri, A.H.; Ismail, N.A.; Al-Dulaimi, K.; Salman, G.A.; Khan, A.R.; Al-Sabaawi, A.; Salam, M.S.H. Classification of weed using machine learning techniques: A review—Challenges, current and future potential techniques. J. Plant Dis. Protect 2022, 129, 745–768. [Google Scholar] [CrossRef]

- Wang, A.; Zhang, W.; Wei, X. A review on weed detection using ground-based machine vision and image processing techniques. Comput. Electron. Agric. 2019, 158, 226–240. [Google Scholar] [CrossRef]

- Hu, W.; Wane, S.O.; Zhu, J.; Li, D.; Zhang, Q.; Bie, X.; Lan, Y. Review of deep learning-based weed identification in crop fields. Int. J. Agric. Biol. Eng. 2023, 16, 1–10. [Google Scholar] [CrossRef]

- Hu, K.; Wang, Z.; Coleman, G.; Bender, A.; Yao, T.; Zeng, S.; Song, D.; Schumann, A.; Walsh, M. Deep Learning Techniques for in-Crop Weed Identification: A Review; Cornell University Library: Ithaca, NY, USA, 2024. [Google Scholar]

- Qu, H.; Su, W. Deep learning-based weed–crop recognition for smart agricultural equipment: A review. Agronomy 2024, 14, 363. [Google Scholar] [CrossRef]

- Rico-Fernández, M.P.; Rios-Cabrera, R.; Castelán, M.; Guerrero-Reyes, H.I.; Juarez-Maldonado, A. A contextualized approach for segmentation of foliage in different crop species. Comput. Electron. Agric. 2019, 156, 378–386. [Google Scholar] [CrossRef]

- Kamath, R.; Balachandra, M.; Prabhu, S. Crop and weed discrimination using Laws’ texture masks. Int. J. Agric. Biol. Eng. 2020, 13, 191–197. [Google Scholar] [CrossRef]

- Liu, B.; Li, R.; Li, H.; You, G.; Yan, S.; Tong, Q. Crop/weed discrimination using a field imaging spectrometer system. Sensors 2019, 19, 5154. [Google Scholar] [CrossRef] [PubMed]

- Kazmi, W.; Garcia-Ruiz, F.; Nielsen, J.; Rasmussen, J.; Andersen, H.J. Exploiting affine invariant regions and leaf edge shapes for weed detection. Comput. Electron. Agric. 2015, 118, 290–299. [Google Scholar] [CrossRef]

- Lottes, P.; Hörferlin, M.; Sander, S.; Stachniss, C. Effective vision-based classification for separating sugar beets and weeds for precision farming. J. Field Robot. 2017, 34, 1160–1178. [Google Scholar] [CrossRef]

- Pulido-Rojas, C.A.; Molina-Villa, M.A.; Solaque-Guzmán, L.E. Machine vision system for weed detection using image filtering in vegetables crops. Rev. Fac. Ing. Univ. Antioq. 2016, 80, 124–130. [Google Scholar] [CrossRef]

- Zhang, L.; Zhang, Z.; Wu, C.; Sun, L. Segmentation algorithm for overlap recognition of seedling lettuce and weeds based on SVM and image blocking. Comput. Electron. Agric. 2022, 201, 107284. [Google Scholar] [CrossRef]

- Espejo-Garcia, B.; Mylonas, N.; Athanasakos, L.; Fountas, S.; Vasilakoglou, I. Towards weeds identification assistance through transfer learning. Comput. Electron. Agric. 2020, 171, 105306. [Google Scholar] [CrossRef]

- Gai, J.; Tang, L.; Steward, B.L. Automated crop plant detection based on the fusion of colour and depth images for robotic weed control. J. Field Robot. 2020, 37, 35–52. [Google Scholar] [CrossRef]

- Pallottino, F.; Menesatti, P.; Figorilli, S.; Antonucci, F.; Tomasone, R.; Colantoni, A.; Costa, C. Machine vision retrofit system for mechanical weed control in precision agriculture applications. Sustainability 2018, 10, 2209. [Google Scholar] [CrossRef]

- Li, N.; Zhang, C.; Chen, Z.; Ma, Z.; Sun, Z.; Yuan, T.; Li, W.; Zhang, J. Crop positioning for robotic intra-row weeding based on machine vision. Int. J. Agric. Biol. Eng. 2015, 8, 20. [Google Scholar] [CrossRef]

- Jin, X.; Sun, Y.; Che, J.; Bagavathiannan, M.; Yu, J.; Chen, Y. A novel deep learning-based method for detection of weeds in vegetables. Pest Manag. Sci. 2022, 78, 1861–1869. [Google Scholar] [CrossRef]

- Ma, Z.; Wang, G.; Yao, J.; Huang, D.; Tan, H.; Jia, H.; Zou, Z. An improved U-Net model based on multi-scale input and attention mechanism: Application for recognition of Chinese cabbage and weed. Sustainability 2023, 15, 5764. [Google Scholar] [CrossRef]

- Hussain, N.; Farooque, A.A.; Schumann, A.W.; Abbas, F.; Acharya, B.; McKenzie-Gopsill, A.; Barrett, R.; Afzaal, H.; Zaman, Q.U.; Cheema, M.J.M. Application of deep learning to detect Lamb’s quarters (Chenopodium album L.) in potato fields of Atlantic Canada. Comput. Electron. Agric. 2021, 182, 106040. [Google Scholar] [CrossRef]

- Sabzi, S.; Abbaspour-Gilandeh, Y.; Arribas, J.I. An automatic visible-range video weed detection, segmentation and classification prototype in potato field. Heliyon 2020, 6, e03685. [Google Scholar] [CrossRef]

- Zhao, J.; Tian, G.; Qiu, C.; Gu, B.; Zheng, K.; Liu, Q. Weed detection in potato fields based on improved YOLOv4: Optimal speed and accuracy of weed detection in potato fields. Electronics 2022, 11, 3709. [Google Scholar] [CrossRef]

- Abouzahir, S.; Sadik, M.; Sabir, E. Bag-of-visual-words-augmented Histogram of Oriented Gradients for efficient weed detection. Biosyst. Eng. 2021, 202, 179–194. [Google Scholar] [CrossRef]

- Nnadozie, E.C.; Iloanusi, O.; Ani, O.; Yu, K. Cassava detection from UAV images using YOLOv5 object detection model: Towards weed control in a cassava farm. BioRxiv 2022, 2011–2022. [Google Scholar] [CrossRef]

- Khan, S.; Tufail, M.; Khan, M.T.; Khan, Z.A.; Anwar, S. Deep learning-based identification system of weeds and crops in strawberry and pea fields for a precision agriculture sprayer. Precis. Agric. 2021, 22, 1711–1727. [Google Scholar] [CrossRef]

- Sun, H.; Liu, T.; Wang, J.; Zhai, D.; Yu, J. Evaluation of two deep learning-based approaches for detecting weeds growing in cabbage. Pest Manag. Sci. 2024, 80, 2817–2826. [Google Scholar] [CrossRef]

- Suh, H.K.; IJsselmuiden, J.; Hofstee, J.W.; van Henten, E.J. Transfer learning for the classification of sugar beet and volunteer potato under field conditions. Biosyst. Eng. 2018, 174, 50–65. [Google Scholar] [CrossRef]

- Ruigrok, T.; van Henten, E.; Booij, J.; van Boheemen, K.; Kootstra, G. Application-specific evaluation of a weed-detection algorithm for plant-specific spraying. Sensors 2020, 20, 7262. [Google Scholar] [CrossRef]

- Moazzam, S.I.; Khan, U.S.; Qureshi, W.S.; Tiwana, M.I.; Rashid, N.; Alasmary, W.S.; Iqbal, J.; Hamza, A. A patch-image based classification approach for detection of weeds in sugar beet crop. IEEE Access 2021, 9, 121698–121715. [Google Scholar] [CrossRef]

- Bakhshipour, A.; Jafari, A. Evaluation of support vector machine and artificial neural networks in weed detection using shape features. Comput. Electron. Agric. 2018, 145, 153–160. [Google Scholar] [CrossRef]

- Zhang, C.; Liu, J.; Li, H.; Chen, H.; Xu, Z.; Ou, Z. Weed detection method based on lightweight and contextual information fusion. Appl. Sci. 2023, 13, 13074. [Google Scholar] [CrossRef]

- Guo, Z.; Goh, H.H.; Li, X.; Zhang, M.; Li, Y. WeedNet-R: A sugar beet field weed detection algorithm based on enhanced RetinaNet and context semantic fusion. Front. Plant Sci. 2023, 14, 1226329. [Google Scholar] [CrossRef] [PubMed]

- Wang, A.; Peng, T.; Cao, H.; Xu, Y.; Wei, X.; Cui, B. TIA-YOLOv5: An improved YOLOv5 network for real-time detection of crop and weed in the field. Front. Plant Sci. 2022, 13, 1091655. [Google Scholar] [CrossRef] [PubMed]

- Jin, X.; Che, J.; Chen, Y. Weed identification using deep learning and image processing in vegetable plantation. IEEE Access 2021, 9, 10940–10950. [Google Scholar] [CrossRef]

- López-Correa, J.M.; Moreno, H.; Ribeiro, A.; Andújar, D. Intelligent weed management based on object detection neural networks in tomato crops. Agronomy 2022, 12, 2953. [Google Scholar] [CrossRef]

- Bender, A.; Whelan, B.; Sukkarieh, S. A high-resolution, multimodal data set for agricultural robotics: A Ladybird’s-eye view of Brassica. J. Field Robot. 2020, 37, 73–96. [Google Scholar] [CrossRef]

- Moreno, H.; Gómez, A.; Altares-López, S.; Ribeiro, A.; Andújar, D. Analysis of Stable Diffusion-derived fake weeds performance for training Convolutional Neural Networks. Comput. Electron. Agric. 2023, 214, 108324. [Google Scholar] [CrossRef]

- Patel, J.; Ruparelia, A.; Tanwar, S.; Alqahtani, F.; Tolba, A.; Sharma, R.; Raboaca, M.S.; Neagu, B.C. Deep learning-based model for detection of brinjal weed in the era of precision agriculture. Comput. Mater. Contin. 2023, 77, 1281–1301. [Google Scholar] [CrossRef]

- Gallo, I.; Rehman, A.U.; Dehkordi, R.H.; Landro, N.; La Grassa, R.; Boschetti, M. Deep object detection of crop weeds: Performance of YOLOv7 on a real case dataset from UAV images. Remote Sens. 2023, 15, 539. [Google Scholar] [CrossRef]

- Fatima, H.S.; Ul Hassan, I.; Hasan, S.; Khurram, M.; Stricker, D.; Afzal, M.Z. Formation of a lightweight, deep learning-based weed detection system for a commercial autonomous laser weeding robot. Appl. Sci. 2023, 13, 3997. [Google Scholar] [CrossRef]

- Sharpe, S.M.; Schumann, A.W.; Boyd, N.S. Goosegrass detection in strawberry and tomato using a convolutional neural network. Sci. Rep. 2020, 10, 9548. [Google Scholar] [CrossRef] [PubMed]

- Albraikan, A.A.; Aljebreen, M.; Alzahrani, J.S.; Othman, M.; Mohammed, G.P.; Ibrahim Alsaid, M. Modified barnacles mating optimization with deep learning based weed detection model for smart agriculture. Appl. Sci. 2022, 12, 12828. [Google Scholar] [CrossRef]

- Janneh, L.L.; Zhang, Y.; Cui, Z.; Yang, Y. Multi-level feature re-weighted fusion for the semantic segmentation of crops and weeds. J. King Saud Univ.—Comput. Inf. Sci. 2023, 35, 101545. [Google Scholar] [CrossRef]

- Madanan, M.; Muthukumaran, N.; Tiwari, S.; Vijay, A.; Saha, I. RSA based improved YOLOv3 network for segmentation and detection of weed species. Multimed. Tools Appl. 2024, 83, 34913–34942. [Google Scholar] [CrossRef]

- Reedha, R.; Dericquebourg, E.; Canals, R.; Hafiane, A. Transformer neural network for weed and crop classification of high resolution UAV images. Remote Sens. 2022, 14, 592. [Google Scholar] [CrossRef]

- Ying, B.; Xu, Y.; Zhang, S.; Shi, Y.; Liu, L. Weed detection in images of carrot fields based on improved YOLO v4. Trait. Signal 2021, 38, 341–348. [Google Scholar] [CrossRef]

- Hamuda, E.; Mc Ginley, B.; Glavin, M.; Jones, E. Automatic crop detection under field conditions using the HSV colour space and morphological operations. Comput. Electron. Agric. 2017, 133, 97–107. [Google Scholar] [CrossRef]

- Rai, N.; Zhang, Y.; Ram, B.G.; Schumacher, L.; Yellavajjala, R.K.; Bajwa, S.; Sun, X. Applications of deep learning in precision weed management: A review. Comput. Electron. Agric. 2023, 206, 107698. [Google Scholar] [CrossRef]

- Su, W. Advanced machine learning in point spectroscopy, RGB and hyperspectral-imaging for automatic discriminations of crops and weeds: A review. Smart Cities 2020, 3, 767–792. [Google Scholar] [CrossRef]

- Deng, B.; Lu, Y.; Xu, J. Weed database development: An updated survey of public weed datasets and cross-season weed detection adaptation. Ecol. Inform. 2024, 81, 102546. [Google Scholar] [CrossRef]

- Available online: https://github.com/vicdxxx/Weed-Datasets-Survey-2023 (accessed on 25 June 2024).

- Lu, Y. 2seasonweeddet8: A Two-season, 8-class Dataset for Cross-season Weed Detection Generalization Evaluation. Zenodo, 2024. [CrossRef]

- Hamuda, E.; Glavin, M.; Jones, E. A survey of image processing techniques for plant extraction and segmentation in the field. Comput. Electron. Agric. 2016, 125, 184–199. [Google Scholar] [CrossRef]

- Hasan, A.S.M.M.; Sohel, F.; Diepeveen, D.; Laga, H.; Jones, M.G.K. A survey of deep learning techniques for weed detection from images. Comput. Electron. Agric. 2021, 184, 106067. [Google Scholar] [CrossRef]

- Pallottino, F.; Antonucci, F.; Costa, C.; Bisaglia, C.; Figorilli, S.; Menesatti, P. Optoelectronic proximal sensing vehicle-mounted technologies in precision agriculture: A review. Comput. Electron. Agric. 2019, 162, 859–873. [Google Scholar] [CrossRef]

- Mohammadi, V.; Gouton, P.; Rossé, M.; Katakpe, K.K. Design and development of large-band Dual-MSFA sensor camera for precision agriculture. Sensors 2023, 24, 64. [Google Scholar] [CrossRef]

- Tao, T.; Wu, S.; Li, L.; Li, J.; Bao, S.; Wei, X. Design and experiments of weeding teleoperated robot spectral sensor for winter rape and weed identification. Adv. Mech. Eng. 2018, 10, 2072046762. [Google Scholar] [CrossRef]

- Duncan, L.; Miller, B.; Shaw, C.; Graebner, R.; Moretti, M.L.; Walter, C.; Selker, J.; Udell, C. Weed Warden: A low-cost weed detection device implemented with spectral triad sensor for agricultural applications. HardwareX 2022, 11, e00303. [Google Scholar] [CrossRef]

- Che Ya, N.N.; Dunwoody, E.; Gupta, M. Assessment of weed classification using hyperspectral reflectance and optimal multispectral UAV imagery. Agronomy 2021, 11, 1435. [Google Scholar] [CrossRef]

- Martín, M.P.; Ponce, B.; Echavarría, P.; Dorado, J.; Fernández-Quintanilla, C. Early-season mapping of Johnsongrass (Sorghum halepense), Common Cocklebur (Xanthium strumarium) and Velvetleaf (Abutilon theophrasti) in corn fields using airborne hyperspectral imagery. Agronomy 2023, 13, 528. [Google Scholar] [CrossRef]

- Elstone, L.; How, K.Y.; Brodie, S.; Ghazali, M.Z.; Heath, W.P.; Grieve, B. High speed crop and weed identification in lettuce fields for precision weeding. Sensors 2020, 20, 455. [Google Scholar] [CrossRef] [PubMed]

- Eddy, P.R.; Smith, A.M.; Hill, B.D.; Peddle, D.R.; Coburn, C.A.; Blackshaw, R.E. Weed and crop discrimination using hyperspectral image data and reduced bandsets. Can. J. Remote Sens. 2014, 39, 481–490. [Google Scholar] [CrossRef]

- Longchamps, L.; Panneton, B.; Samson, G.; Leroux, G.D.; Thériault, R. Discrimination of corn, grasses and dicot weeds by their UV-induced fluorescence spectral signature. Precis. Agric. 2010, 11, 181–197. [Google Scholar] [CrossRef]

- Panneton, B.; Guillaume, S.; Roger, J.M.; Samson, G. Improved discrimination between monocotyledonous and dicotyledonous plants for weed control based on the blue-green region of ultraviolet-induced fluorescence spectra. Appl. Spectrosc. 2010, 64, 30–36. [Google Scholar] [CrossRef] [PubMed]

- Wang, A.; Li, W.; Men, X.; Gao, B.; Xu, Y.; Wei, X. Vegetation detection based on spectral information and development of a low-cost vegetation sensor for selective spraying. Pest Manag. Sci. 2022, 78, 2467–2476. [Google Scholar] [CrossRef]

- Wang, A.C.; Gao, B.J.; Zhao, C.J.; Xu, Y.F.; Wang, M.L.; Yan, S.G.; Li, L.; Wei, X.H. Detecting green plants based on fluorescence spectroscopy. Spectrosc. Spectr. Anal. 2022, 42, 788–794. [Google Scholar] [CrossRef]

- Wang, P.; Peteinatos, G.; Li, H.; Gerhards, R. Rapid in-season detection of herbicide resistant Alopecurus myosuroides using a mobile fluorescence imaging sensor. Crop Prot. 2016, 89, 170–177. [Google Scholar] [CrossRef]

- Lednev, V.N.; Grishin, M.Y.; Sdvizhenskii, P.A.; Kurbanov, R.K.; Litvinov, M.A.; Gudkov, S.V.; Pershin, S.M. Fluorescence mapping of agricultural fields utilizing drone-based LIDAR. Photonics 2022, 9, 963. [Google Scholar] [CrossRef]

- Zhao, X.; Zhai, C.; Wang, S.; Dou, H.; Yang, S.; Wang, X.; Chen, L. Sprayer boom height measurement in wheat field using ultrasonic sensor: An exploratory study. Front. Plant Sci. 2022, 13, 1008122. [Google Scholar] [CrossRef] [PubMed]

- Wei, Z.; Xue, X.; Salcedo, R.; Zhang, Z.; Gil, E.; Sun, Y.; Li, Q.; Shen, J.; He, Q.; Dou, Q.; et al. Key Technologies for an orchard variable-rate sprayer: Current status and future prospects. Agronomy 2023, 13, 59. [Google Scholar] [CrossRef]

- Lin, Y. LiDAR: An important tool for next-generation phenotyping technology of high potential for plant phenomics? Comput. Electron. Agric. 2015, 119, 61–73. [Google Scholar] [CrossRef]

- Rivera, G.; Porras, R.; Florencia, R.; Sánchez-Solís, J.P. LiDAR applications in precision agriculture for cultivating crops: A review of recent advances. Comput. Electron. Agric. 2023, 207, 107737. [Google Scholar] [CrossRef]

- Krus, A.; van Apeldoorn, D.; Valero, C.; Ramirez, J.J. Acquiring plant features with optical sensing devices in an organic strip-cropping system. Agronomy 2020, 10, 197. [Google Scholar] [CrossRef]

- Shahbazi, N.; Ashworth, M.B.; Callow, J.N.; Mian, A.; Beckie, H.J.; Speidel, S.; Nicholls, E.; Flower, K.C. Assessing the capability and potential of LiDAR for weed detection. Sensors 2021, 21, 2328. [Google Scholar] [CrossRef] [PubMed]

- Cai, S.; Gou, W.; Wen, W.; Lu, X.; Fan, J.; Guo, X. Design and development of a low-cost UGV 3D phenotyping platform with integrated LiDAR and electric slide rail. Plants 2023, 12, 483. [Google Scholar] [CrossRef]

- Reiser, D.; Vázquez-Arellano, M.; Paraforos, D.S.; Garrido-Izard, M.; Griepentrog, H.W. Iterative individual plant clustering in maize with assembled 2D LiDAR data. Comput. Ind. 2018, 99, 42–52. [Google Scholar] [CrossRef]

- Forero, M.G.; Murcia, H.F.; Méndez, D.; Betancourt-Lozano, J. LiDAR platform for acquisition of 3D plant phenotyping database. Plants 2022, 11, 2199. [Google Scholar] [CrossRef]

- Jayakumari, R.; Nidamanuri, R.R.; Ramiya, A.M. Object-level classification of vegetable crops in 3D LiDAR point cloud using deep learning convolutional neural networks. Precis. Agric. 2021, 22, 1617–1633. [Google Scholar] [CrossRef]

- Martínez-Guanter, J.; Garrido-Izard, M.; Valero, C.; Slaughter, D.C.; Pérez-Ruiz, M. Optical sensing to determine tomato plant spacing for precise agrochemical application: Two scenarios. Sensors 2017, 17, 1096. [Google Scholar] [CrossRef]

- Guo, Q.; Wu, F.; Pang, S.; Zhao, X.; Chen, L.; Liu, J.; Xue, B.; Xu, G.; Li, L.; Jing, H.; et al. Crop 3D—A LiDAR based platform for 3D high-throughput crop phenotyping. Sci. China Life Sci. 2018, 61, 328–339. [Google Scholar] [CrossRef] [PubMed]

- Zhang, F.; Hassanzadeh, A.; Kikkert, J.; Pethybridge, S.J.; van Aardt, J. Evaluation of leaf area index (LAI) of broadacre crops using UAS-based LiDAR point clouds and multispectral imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 4027–4044. [Google Scholar] [CrossRef]

- Weis, M.; Andújar, D.; Peteinatos, G.G.; Gerhards, R. Improving the determination of plant characteristics by fusion of four different sensors. In Precision Agriculture’13; Wageningen Academic Publishers: Wageningen, The Netherlands, 2013; pp. 63–69. [Google Scholar]

- Maldaner, L.F.; Molin, J.P.; Canata, T.F.; Martello, M. A system for plant detection using sensor fusion approach based on machine learning model. Comput. Electron. Agric. 2021, 189, 106382. [Google Scholar] [CrossRef]

- Wang, G.; Huang, D.; Zhou, D.; Liu, H.; Qu, M.; Ma, Z. Maize (Zea mays L.) seedling detection based on the fusion of a modified deep learning model and a novel Lidar points projecting strategy. Int. J. Agric. Biol. Eng. 2022, 15, 172–180. [Google Scholar] [CrossRef]

- Liu, X.; Bo, Y. Object-based crop species classification based on the combination of airborne hyperspectral images and LiDAR data. Remote Sens. 2015, 7, 922–950. [Google Scholar] [CrossRef]

- Chen, B.; Shi, S.; Gong, W.; Xu, Q.; Tang, X.; Bi, S.; Chen, B. Wavelength selection of dual-mechanism LiDAR with reflection and fluorescence spectra for plant detection. Opt. Express 2023, 31, 3660. [Google Scholar] [CrossRef] [PubMed]

- Su, W. Crop plant signaling for real-time plant identification in smart farm: A systematic review and new concept in artificial intelligence for automated weed control. Artif. Intell. Agric. 2020, 4, 262–271. [Google Scholar] [CrossRef]

- Jiang, B.; Zhang, H.Y.; Su, W.H. Automatic localization of soybean seedlings based on crop signaling and multi-view imaging. Sensors 2024, 24, 3066. [Google Scholar] [CrossRef]

- Raja, R.; Slaughter, D.C.; Fennimore, S.A.; Nguyen, T.T.; Vuong, V.L.; Sinha, N.; Tourte, L.; Smith, R.F.; Siemens, M.C. Crop signalling: A novel crop recognition technique for robotic weed control. Biosyst. Eng. 2019, 187, 278–291. [Google Scholar] [CrossRef]

- Raja, R.; Nguyen, T.T.; Slaughter, D.C.; Fennimore, S.A. Real-time robotic weed knife control system for tomato and lettuce based on geometric appearance of plant labels. Biosyst. Eng. 2020, 194, 152–164. [Google Scholar] [CrossRef]

- Raja, R.; Nguyen, T.T.; Vuong, V.L.; Slaughter, D.C.; Fennimore, S.A. RTD-SEPs: Real-time detection of stem emerging points and classification of crop-weed for robotic weed control in producing tomato. Biosyst. Eng. 2020, 195, 152–171. [Google Scholar] [CrossRef]

- Su, W.; Sheng, J.; Huang, Q. Development of a three-dimensional plant localization technique for automatic differentiation of soybean from intra-row weeds. Agriculture 2022, 12, 195. [Google Scholar] [CrossRef]

- Li, J.; Su, W.; Zhang, H.; Peng, Y. A real-time smart sensing system for automatic localization and recognition of vegetable plants for weed control. Front. Plant Sci. 2023, 14, 1133969. [Google Scholar] [CrossRef]

- Su, W.; Fennimore, S.A.; Slaughter, D.C. Computer vision technology for identification of snap bean crops using Systemic Rhodamine B. In Proceedings of the 2019 ASABE Annual International Meeting, Boston, MA, USA, 7–10 July 2019; p. 1900075. [Google Scholar] [CrossRef]

- Su, W.; Fennimore, S.A.; Slaughter, D.C. Development of a systemic crop signalling system for automated real-time plant care in vegetable crops. Biosyst. Eng. 2020, 193, 62–74. [Google Scholar] [CrossRef]

- Su, W.; Fennimore, S.A.; Slaughter, D.C. Fluorescence imaging for rapid monitoring of translocation behaviour of systemic markers in snap beans for automated crop/weed discrimination. Biosyst. Eng. 2019, 186, 156–167. [Google Scholar] [CrossRef]

- Su, W.; Slaughter, D.C.; Fennimore, S.A. Non-destructive evaluation of photostability of crop signaling compounds and dose effects on celery vigor for precision plant identification using computer vision. Comput. Electron. Agric. 2020, 168, 105155. [Google Scholar] [CrossRef]

- Fennimore, S.A.; Siemens, M.C. Mechanized Weed Management in Vegetable Crops. In Encyclopedia of Digital Agricultural Technologies; Zhang, Q., Ed.; Springer International Publishing: Cham, Switzerland, 2023; pp. 807–817. [Google Scholar]

- Fennimore, S.A.; Slaughter, D.C.; Siemens, M.C.; Leon, R.G.; Saber, M.N. Technology for automation of weed control in specialty crops. Weed Technol. 2016, 30, 823–837. [Google Scholar] [CrossRef]

- Fennimore, S.A.; Cutulle, M. Robotic weeders can improve weed control options for specialty crops. Pest Manag. Sci. 2019, 75, 1767–1774. [Google Scholar] [CrossRef] [PubMed]

- Gerhards, R.; Risser, P.; Spaeth, M.; Saile, M.; Peteinatos, G. A comparison of seven innovative robotic weeding systems and reference herbicide strategies in sugar beet (Beta vulgaris subsp. vulgaris L.) and rapeseed (Brassica napus L.). Weed Res. 2024, 64, 42–53. [Google Scholar] [CrossRef]

- Allmendinger, A.; Spaeth, M.; Saile, M.; Peteinatos, G.G.; Gerhards, R. Precision chemical weed management strategies: A review and a design of a new CNN-based modular spot sprayer. Agronomy 2022, 12, 1620. [Google Scholar] [CrossRef]

- Özlüoymak, Ö.B. Development and assessment of a novel camera-integrated spraying needle nozzle design for targeted micro-dose spraying in precision weed control. Comput. Electron. Agric. 2022, 199, 107134. [Google Scholar] [CrossRef]

- Özlüoymak, Ö.B. Design and development of a servo-controlled target-oriented robotic micro-dose spraying system in precision weed control. Semin. Ciências Agrárias 2021, 42, 635–656. [Google Scholar] [CrossRef]

- Hussain, N.; Farooque, A.; Schumann, A.; McKenzie-Gopsill, A.; Esau, T.; Abbas, F.; Acharya, B.; Zaman, Q. Design and development of a smart variable rate sprayer using deep learning. Remote Sens. 2020, 12, 4091. [Google Scholar] [CrossRef]

- Zhang, X.; Cao, C.; Luo, K.; Wu, Z.; Qin, K.; An, M.; Ding, W.; Xiang, W. Design and operation of a Peucedani Radix weeding device based on YOLOv5 and a parallel manipulator. Front. Plant Sci. 2023, 14, 1171737. [Google Scholar] [CrossRef] [PubMed]

- Raja, R.; Slaughter, D.C.; Fennimore, S.A.; Siemens, M.C. Real-time control of high-resolution micro-jet sprayer integrated with machine vision for precision weed control. Biosyst. Eng. 2023, 228, 31–48. [Google Scholar] [CrossRef]

- Dammer, K. Real-time variable-rate herbicide application for weed control in carrots. Weed Res. 2016, 56, 237–246. [Google Scholar] [CrossRef]

- Utstumo, T.; Urdal, F.; Brevik, A.; Dørum, J.; Netland, J.; Overskeid, Ø.; Berge, T.W.; Gravdahl, J.T. Robotic in-row weed control in vegetables. Comput. Electron. Agric. 2018, 154, 36–45. [Google Scholar] [CrossRef]

- Spaeth, M.; Sökefeld, M.; Schwaderer, P.; Gauer, M.E.; Sturm, D.J.; Delatrée, C.C.; Gerhards, R. Smart sprayer a technology for site-specific herbicide application. Crop Prot. 2024, 177, 106564. [Google Scholar] [CrossRef]

- Parasca, S.C.; Spaeth, M.; Rusu, T.; Bogdan, I. Mechanical weed control: Sensor-based inter-row hoeing in sugar beet (Beta vulgaris L.) in the Transylvanian Depression. Agronomy 2024, 14, 176. [Google Scholar] [CrossRef]

- Ye, S.; Xue, X.; Si, S.; Xu, Y.; Le, F.; Cui, L.; Jin, Y. Design and testing of an elastic comb reciprocating a soybean plant-to-plant seedling avoidance and weeding device. Agriculture 2023, 13, 2157.

- Chang, C.; Xie, B.; Chung, S. Mechanical control with a deep learning method for precise weeding on a farm. Agriculture 2021, 11, 1049.

- Quan, L.; Jiang, W.; Li, H.; Li, H.; Wang, Q.; Chen, L. Intelligent intra-row robotic weeding system combining deep learning technology with a targeted weeding mode. Biosyst. Eng. 2022, 216, 13–31.

- Fennimore, S.A.; Smith, R.F.; Tourte, L.; LeStrange, M.; Rachuy, J.S. Evaluation and economics of a rotating cultivator in bok choy, celery, lettuce, and radicchio. Weed Technol. 2014, 28, 176–188.

- Tillett, N.D.; Hague, T.; Grundy, A.C.; Dedousis, A.P. Mechanical within-row weed control for transplanted crops using computer vision. Biosyst. Eng. 2008, 99, 171–178.

- Van Der Weide, R.Y.; Bleeker, P.O.; Achten, V.T.J.M.; Lotz, L.A.P.; Fogelberg, F.; Melander, B. Innovation in mechanical weed control in crop rows. Weed Res. 2008, 48, 215–224.

- Lati, R.N.; Rosenfeld, L.; David, I.B.; Bechar, A. Power on! Low-energy electrophysical treatment is an effective new weed control approach. Pest Manag. Sci. 2021, 77, 4138–4147.

- Xiong, Y.; Ge, Y.; Liang, Y.; Blackmore, S. Development of a prototype robot and fast path-planning algorithm for static laser weeding. Comput. Electron. Agric. 2017, 142, 494–503.

- Young, S.L. Beyond precision weed control: A model for true integration. Weed Technol. 2018, 32, 7–10.

- Bawden, O.; Kulk, J.; Russell, R.; McCool, C.; English, A.; Dayoub, F.; Lehnert, C.; Perez, T. Robot for weed species plant-specific management. J. Field Robot. 2017, 34, 1179–1199.

- Wu, X.; Aravecchia, S.; Lottes, P.; Stachniss, C.; Pradalier, C. Robotic weed control using automated weed and crop classification. J. Field Robot. 2020, 37, 322–340.

- Merfield, C.N. Robotic weeding’s false dawn? Ten requirements for fully autonomous mechanical weed management. Weed Res. 2016, 56, 340–344.

| Categories | Description | Examples |

|---|---|---|

| Root vegetables | Vegetables with tuberous roots and tubers as edible portions | Carrots, turnips, root beets, white radishes, potatoes, taro, yams, sweet potatoes, winter bamboo shoots, etc. |

| Leafy vegetables | Vegetables with fresh and tender green leaves, petioles, and tender stems as edible portions | Lettuce, spinach, coriander, water spinach, sunflower, celery, chrysanthemum, amaranth, rapeseed, etc. |

| Cabbages | Vegetables with leaf bulbs, tender stems, flower bulbs, and tender leaf clusters as edible portions | Chinese cabbage, head cabbage, bulbous cabbage, cauliflower, baby cabbage, broccoli, pickled cabbage, head mustard, etc. |

| Fruits and melons | Vegetables with fruit as edible portions | Pumpkin, golden pumpkin, winter melon, bitter gourd, breast gourd, cucumber, luffa, bergamot, watermelon, Hu gua, gourd, tomato, eggplant, etc. |

| Scallions and garlics | Vegetables with bulbs, pseudostems, tubular leaves, or strip-shaped leaves as edible portions | Onions, scallions, garlic, chives, etc. |

| Sprouted vegetables | Edible “sprouts” cultivated from the seeds of various grains, beans, and trees | Pea sprouts, Chinese toon sprouts, radish sprouts, buckwheat sprouts, peanut sprouts, ginger sprouts, soybean sprouts, mung bean sprouts, bean sprouts, etc. |

| Beans | Vegetables with bean kernels or tender pods as edible portions | Kidney beans, cowpeas, peas, string beans, knife beans, lentils, green beans, mung beans, broad beans, eyebrow beans, four winged beans, etc. |

| Aquatic vegetables | Vegetables that grow in water and are edible | Lotus root, water chestnut, water celery, cigu, water bamboo, etc. |

| Fungi | Fungi that are non-toxic to the human body and can be safely consumed | Black fungus, white fungus, ground fungus, stone fungus, shiitake mushroom, monkey head mushroom, etc. |

| Platforms | Crops | Weeds | Sensors | Resolution (mm·pixel⁻¹) | Recognition Algorithms | Precision | References |

|---|---|---|---|---|---|---|---|

| UAV | Wheat | Broadleaf herba, Gramineae | RGB | 2.7 | Random forest (RF) | 72% | Anderegg et al. [95] |

| UAV | Wheat | Gahnia tristis | RGB/Multispectral | 10/30 | UNET-ResNet | >90% | Fraccaro et al. [96] |

| UAV | Wheat | Gahnia tristis | Multispectral | 11.6 | RF | >93% | Su et al. [92] |

| UAV | Wheat | Alopecurus myosuroides | RGB, Multispectral | 32 | RF | 87% (RGB); 61% (Multispectral) | Lambert et al. [97] |

| UAV | Wheat | Alopecurus myosuroides | Multispectral | 82.7 | Convolutional neural network (CNN) | 82.5% | Lambert et al. [98] |

| UAV | Wheat | Matricaria chamomilla L., Papaver rhoeas L., Veronica hederifolia L., and Viola arvensis ssp. arvensis | RGB | - | Deep residual convolutional neural network (ResNet-18) | 94% | Camargo et al. [93] |

| UAV | Maize | Cyperus rotundus L., Cynodon dactylon L., Malva sylvestris L., Artemisia vulgaris L., Polygonum aviculare L. | Multispectral | 50/80/90 | Support vector machine (SVM) | 61% | Castaldi et al. [99] |

| UAV | Maize | Convolvulus canariensis, Chenopodium album, Digitaria sanguinalis | RGB | 1.78 | Hough transform (HT) and object-based image analysis (OBIA) | 94.5% | Gao et al. [100] |

| UAV | Sugar beet | Cirsium arvensis L. | Multispectral | 5.2 | PLS-DA analysis model | 95% (weeds); 89% (sugar beet) | Garcia-Ruiz et al. [101] |

| UAV | Sugar beet | Galinsoga spec., Amaranthus retroflexus, Atriplex spec., Polygonum spec., Gramineae, Convolvulus arvensis, Stellaria media, Taraxacum spec. | Multispectral | 82 | Improved deep neural network (DNN) | >78% | Sa et al. [102] |

| UAV | Marigold | Setaria viridis, Asclepias syriaca, Cistothorus stellaris | RGB | 5 | U-net | >93% | Zou et al. [103] |

| UAV | Pea, Strawberry | Eleusine indica | RGB | 3 | Semi-supervised generative adversarial network (SGAN) | 90% | Khan et al. [104] |

| UAV | Oat | Chamaemelum nobile, Cirsium arvense | RGB | 35 | RF, K-means | >87% | Gašparović et al. [105] |

| UAV | Cotton, Sunflower | Conyza crispa, Fallopia japonica, Chenopodium retusum, Phalaris canariensis, Convolvulus arvensis | RGB | 12–24 | OBIA | 81% (Cotton); 84% (Sunflower) | de Castro et al. [106] |

| UAV | Sunflower | Chenopodium retusum, Brassica juncea var. megarrhiza, Convolvulus arvensis, Chenopodium album Linn. | RGB, Multispectral | - | OBIA | >85% | López-Granados et al. [107] |

| UAV | Sunflower, maize | Chenopodium retusum, Brassica juncea var. megarrhiza, Convolvulus arvensis, Batis maritime | RGB | 14 | OBIA | 95% (Sunflower); 79% (maize) | Pérez-Ortiz et al. [108] |

| UAV | Onion | Cyperus rotundus, Sorghum halepense, Chenopodium album, Xanthium strumarium, Amaranth, Conyza bonariensis L., Solanum L. | RGB | 5 | Maximum Likelihood and SVM | >85% | Rozenberg et al. [109] |

| UAV | Sorghum | Chenopodium album L., Cirsium arvense L. | RGB | 1 | Deblurring and segmentation models | 83.7% | Genze et al. [110] |

| UAV | Tomato | Solanum nigrum, Amaranthus retroflexus | Hyperspectral | - | Multiclassifier architecture | 95.8% | Zhang et al. [111] |

| UAV | Rice | Leptochloa chinensis Nees, Cyperus L. | RGB | 3/5 | Fully convolutional network (FCN) | >90% | Huang et al. [112,113] |

| Aviation aircraft | Pea, broad bean and winter wheat | Diplotaxis spp., Sinapis spp. | RGB, NIR | 250 | Vegetation indices, Maximum Likelihood | >85% | de Castro et al. [114] |

| QuickBird satellite | Wheat | Brassica napus | Multispectral | 2.4 × 10³ | Vegetation indices, Maximum Likelihood | >89% | de Castro et al. [115] |

| QuickBird satellite | Wheat | Avena sterilis | Multispectral | 2.4 × 10³ | Maximum Likelihood, SVM | >91% | Castillejo-González et al. [116] |

| Sentinel-1A and Sentinel-2A | Beans, beetroot, maize, potato, and wheat | - | Multispectral, MSI | 10/20/60 × 10³ | Kernel-based extreme learning machine (KELM) | 96.8% | Sonobe et al. [94] |

| Sentinel-2 satellite | Potato | Triticum aestivum | Multispectral, NIR, SWIR | - | Unsupervised classification | 86% | Revathy et al. [117] |

| Sentinel-2 satellite | Maize | Davilla strigosa | Multispectral | 10 × 10³ | Guided regularized random forest (GRRF)/Machine learning discriminant | >85% | Mudereri et al. [118,119] |

| Sentinel-2 satellite | Maize | Davilla strigosa | Multispectral | 10 × 10³ | RF/Subpixel multiple endmember spectral mixture analysis (MESMA) | 88% | Mudereri et al. [118,119] |

| Sentinel-2 satellite | Maize | Richardia brasiliensis, Chenopodium album, Cyperus esculentus, Megathyrsus maximus | Multispectral | 10/20/60 × 10³ | RF | 95% | Mkhize et al. [120] |

| PlanetScope and Sentinel-2 | Maize | Davilla strigosa | Multispectral | 3/10 × 10³ | GRRF | 92% (PS); 88% (S2) | Mudereri et al. [121] |

| Platforms | Crops | Sensors | Resolution | Detection Algorithms | Precision | References |

|---|---|---|---|---|---|---|

| UAV | Grapevine, pear, and tomato | RGB | 0.1 m | Thresholding algorithms, classification algorithms, and Bayesian segmentation | >90% | Ronchetti et al. [127] |

| UAV | Sugar beet, maize | Multispectral | 2.5 cm | CRowNet, a model formed with SegNet (S-SegNet) and a CNN-based Hough transform (HoughCNet) | 93.58% | Bah et al. [128] |

| UAV | Spinach, legumes | RGB | 0.35 cm | Hough transform; simple linear iterative clustering (SLIC); ResNet-18 (CNN) | >90% | Bah et al. [125] |

| Robot | Sugar beet, maize | RGB | - | Deep learning (DL) | 90.25% | Silva et al. [129] |

| Robot | Cabbage | RGB | 1920 × 1080 px | Cabbage crop row localization and multi-row adaptive ROI extraction based on the limited threshold vertical projection | 95.75% | Han et al. [130] |

| Robot | Sugar beet | RGB-D | 1280 × 720 px | Semantic segmentation approach for crop row detection based on U-Net | 85% | Silva et al. [131] |

| Robot | Sugar beet, canola, and leek | 3D laser and vision camera | - | Pattern Hough transform | - | Winterhalter et al. [132] |

| Robot | Kohlrabi, Chinese cabbage, and pointed cabbage | GNSS and colour camera | 5-megapixel | Localization beyond crop row following | 83% | Winterhalter et al. [133] |

| Robot | Cucumber | RGB | 5-megapixel | Prediction point Hough transform algorithm | - | Chen et al. [134] |

| Robot | Cabbage and red cabbage | RGB/Multispectral | 1280 × 960 px | CNN vegetable detection and characterization method | 90.5% | Cruz Ulloa et al. [135] |

| Robot | Maize | Hyperspectral | 2 nm | Self-supervised method | >80% | Wendel et al. [136] |

| Tractor | Soybean | RGB | 1280 × 480 px | Image analysis method | - | Ospinad et al. [137] |

| Tractor | Rice, rape, and wheat | RGB | 480 × 640 px | Shear-binary-image algorithm; least square method | 96.7% | Zhang et al. [138] |

| Handheld camera/UAV | Cabbage, kohlrabi, and rice | RGB | 4032 × 3024 px | Improved YOLO-v8 and threshold-DBSCAN | 98.9%/97.9%/100% | Shi et al. [139] |

| Mobile phone/Moving vehicle | Potato | RGB | 1280 × 720 px | Deep learning (VGG16) and feature midpoint adaptation | >90% | Yang et al. [140] |

| Handheld camera | Sugar beet, maize | Multispectral | 6 mm | Unsupervised classification algorithm based on spatial information (Hough transform) and spectral information | 100% | Louargant et al. [141] |

| Public data | Sugar beet, legumes | Multispectral | - | CNN (autoencoder) and simple linear iterative clustering (SLIC) | 88% | Bah et al. [142] |

| Public data | Corn, celery, potato, onion, sunflower, and soybean | RGB | - | Hough transform and DBSCAN clustering analysis | 63.3% | Zhao et al. [143] |

| Public data | Maize, celery, potato, onion, sunflower, and soybean | RGB | 2560 × 1920 px | New crop row detection algorithm | 84% | Rabab et al. [144] |

| Public data | Maize, celery, potato, onion, sunflower, and soybean | RGB | 2560 × 1920 px | New method | 74.7% | Vidović et al. [145] |

| Public data | Garlic, corn, oilseed rape, rice, and wheat | RGB | - | Seedling crop row extraction method based on regional growth and mean shift clustering | 98.18% | Wang et al. [146] |

| Public data | Lettuce and cabbage | RGB | 768 × 1024 px | Automatic accumulation threshold of Hough transform; K-means clustering method | 97.1% | Chen et al. [147] |

| Public data | Cauliflower | RGB | 2704 × 1520 px | Kalman filtering and Hungarian algorithm | 99.34% | Hamuda et al. [148] |

| Crops | Weeds | Platforms | Sensors | Image Pre-Processing Methods | Image Segmentation Methods | Feature Extraction Methods | Classification Algorithms | Accuracy | Precision | References |

|---|---|---|---|---|---|---|---|---|---|---|

| Carrot, maize, and tomato | - | Robot | RGB | Colour space transformation from RGB to CIE Luv | Based on learning method | Neighbourhood pixel information | SVM | - | 91%/88%/89% | Rico-Fernández et al. [157] |

| Carrot | - | Public data | RGB | Technique of contrast limited adaptive histogram equalisation (CLAHE); dimensionality reduction | - | Laws’ texture masks | RF | 94% | - | Kamath et al. [158] |

| Carrot | Impatiens balsamina Linn., Humifuse Euphorbia Herb, Eleusine indica | - | Hyperspectral | Data normalization, soil background removal | Normalized difference vegetation index (NDVI) | Band selection, wavelet transform | Fisher linear discriminant analysis (LDA) and SVM | - | >85% | Liu et al. [159] |

| Sugar beet | Thistle | Ground vehicle | RGB | - | Modified excess green (ExG) index | Local features based on affine invariant regions and scale invariant keypoints, colour vegetation indices | Bag-of-Visual-Word scheme with SVM | 99.07% | - | Kazmi et al. [160] |

| Sugar beet | - | Robot | RGB + NIR | Normalization processing | Near-infrared information | Statistical and shape features | RF | - | >90% | Lottes et al. [161] |

| Spinach | - | Robot | RGB | - | ExG | Image filtering to extract colour and area features | A classification based on area | - | >80% | Pulido-Rojas et al. [162] |

| Lettuce | Chenopodium serotinum, Polygonum lapathifolium | Robot | RGB | - | Based on colour features | Histogram of oriented gradient (HOG), local binary pattern (LBP), and grey-level co-occurrence matrix (GLCM) | SVM and image blocking | - | 94.73% | Zhang et al. [163] |

| Tomato, cotton | Solanum nigrum, Abutilon theophrasti | - | RGB | Random rotation and zooming; Gaussian, Poisson, and salt-and-pepper noise addition; contrast and brightness shifts | Normalized difference vegetation index (NDVI), Otsu threshold | - | Fine-tuned DenseNet and SVM | - | 99.29% (F1) | Espejo-Garcia et al. [164] |

| Broccoli, lettuce | Bromus, Chenopodium retusum, Chenopodium album Linn., Dysophylla stellata Benth., Echinochloa crusgalli L., Convolvulus arvensis, Impatiens balsamina Linn., Trifolium repens | Remote-controlled ground vehicle | RGB-D | Removal of invalid and noise pixels in point clouds | Colour and depth information | Canopy and leaf features | ML; colour-depth fusion segmentation algorithm | - | 96.6%/92.4% | Gai et al. [165] |