A Method for Cropland Layer Extraction in Complex Scenes Integrating Edge Features and Semantic Segmentation

Abstract

1. Introduction

2. Materials and Methods

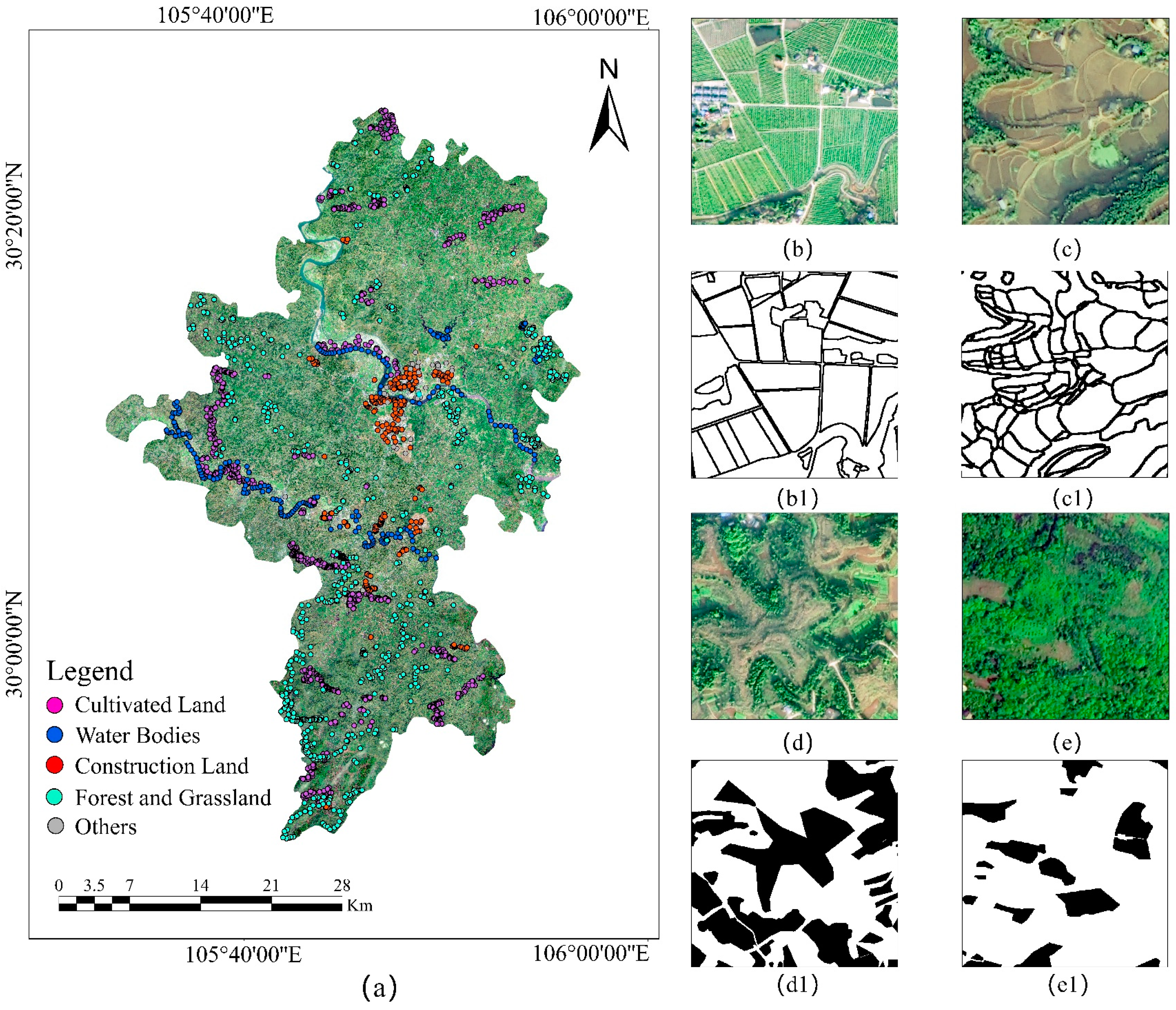

2.1. Study Area and Data

2.1.1. Study Area

2.1.2. Data and Processing

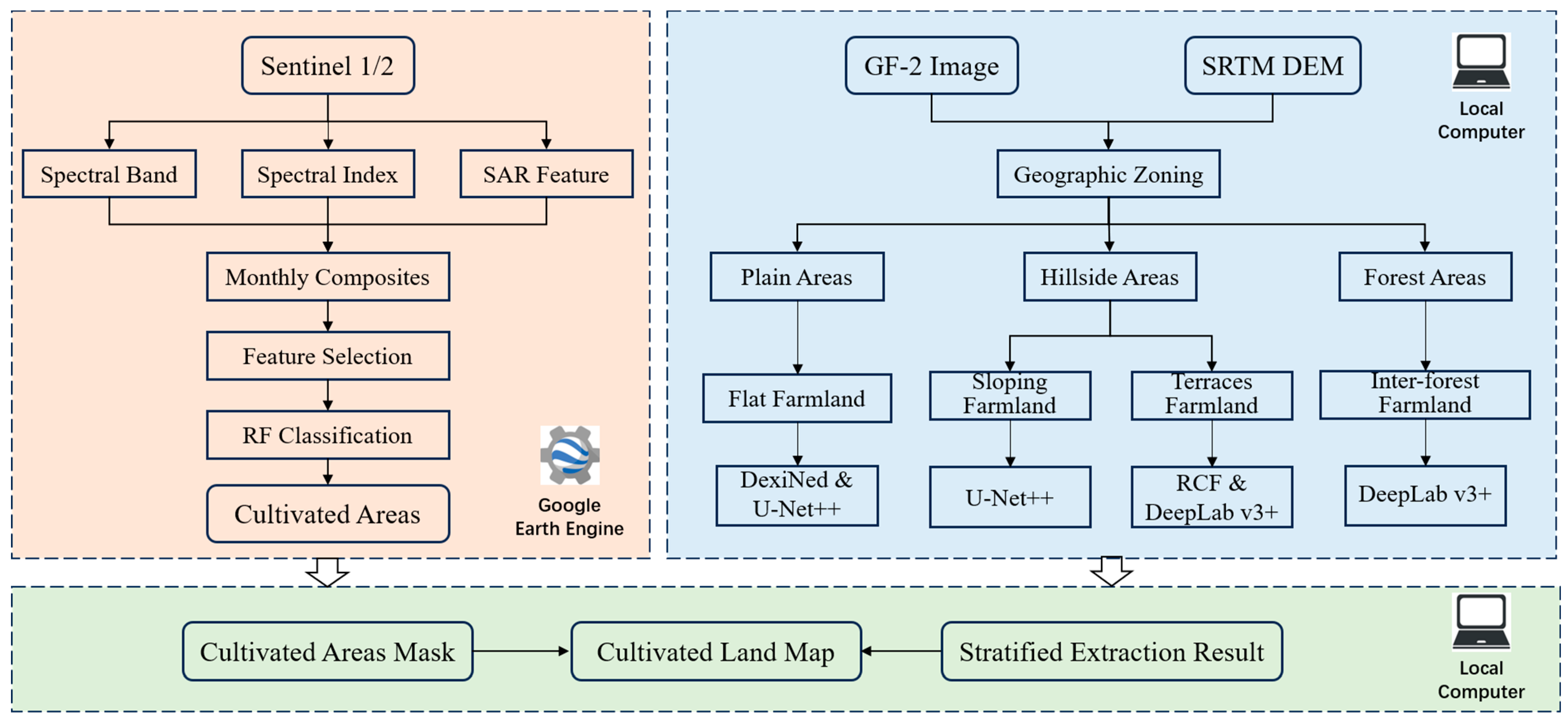

2.2. Research Methodology

2.2.1. Extraction of Cultivated Land Areas

2.2.2. Regional Partitioning and Cultivated Land Layering

2.2.3. Layered Extraction of Cultivated Land

2.2.4. Integration of Extraction Results

3. Results

3.1. Overall Distribution Mapping of Cultivated Land

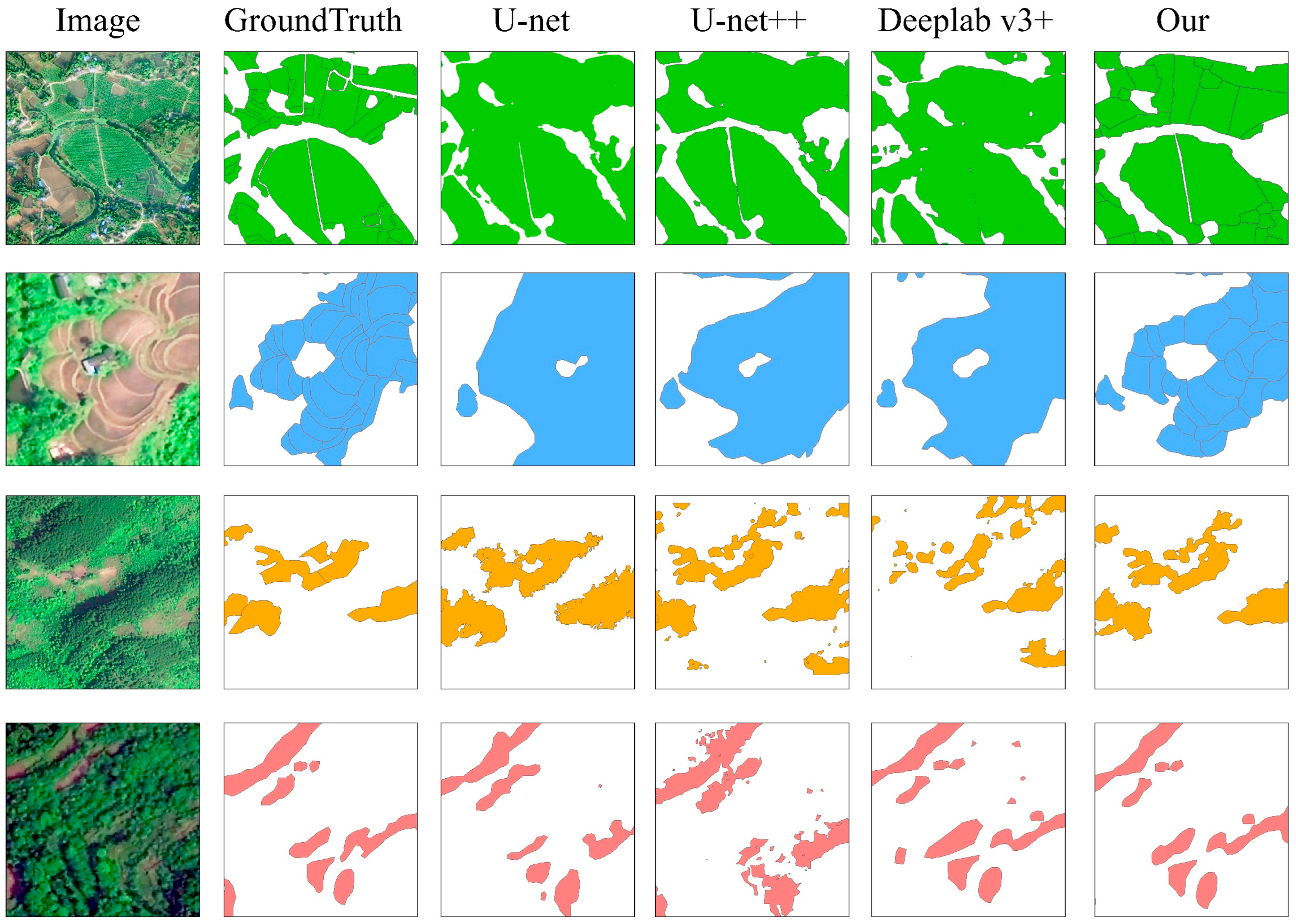

3.2. Analysis of Different Types of Cultivated Land Extraction

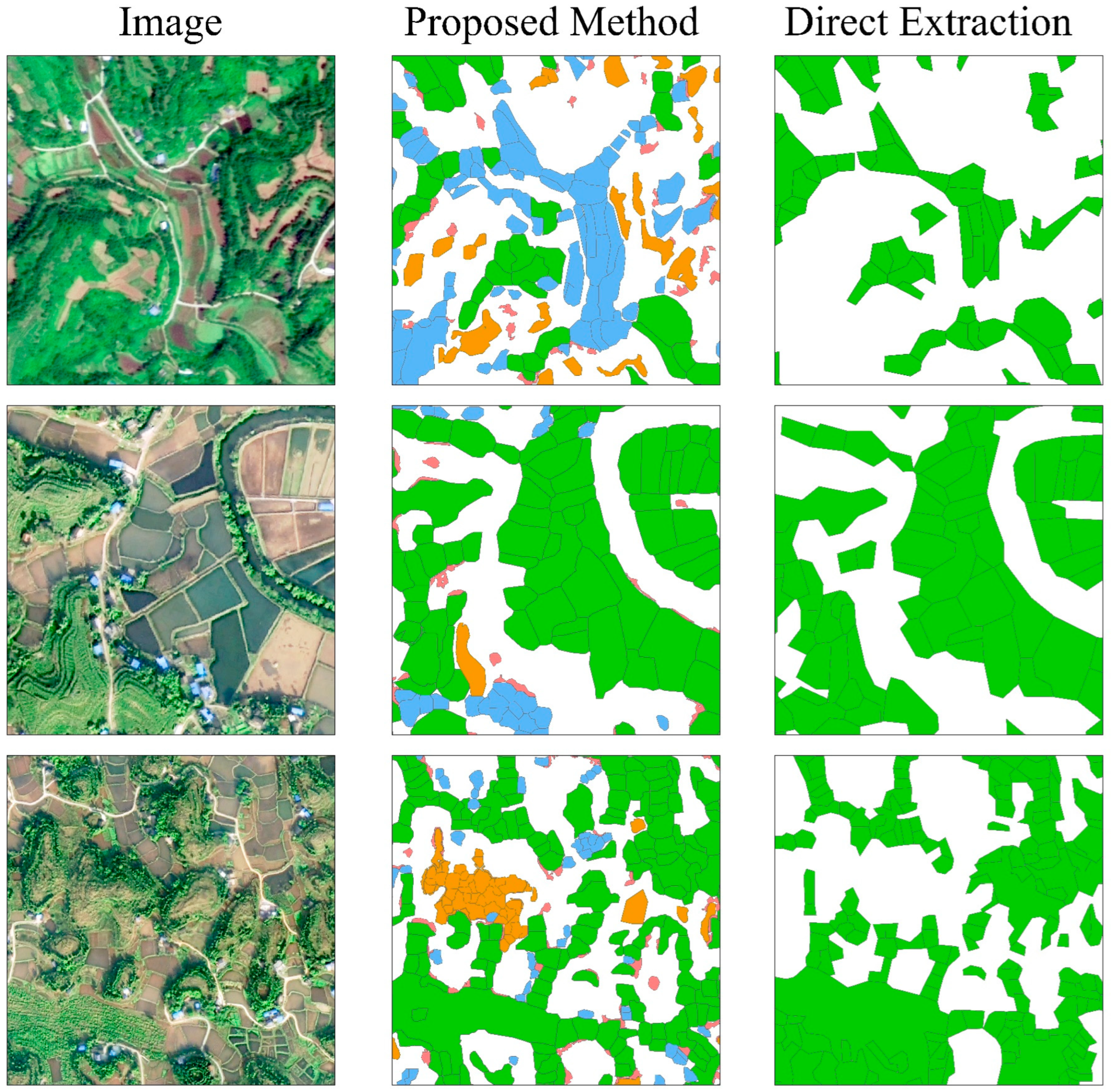

3.3. Analysis of the Role of Partitioning and Layering

3.4. Efficiency Analysis of Cultivated Land Extraction

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| No. | Satellite | Resolution (m) | Time Range | Composition Frequency |
|---|---|---|---|---|
| 1 | GF-2 | 0.8 (panchromatic band); 3.24 (multispectral bands) | 1 January 2022–1 July 2022 | Annual Composition |
| 2 | Sentinel-1 | 10 | 1 March 2022–1 October 2022 | Monthly Composition |
| 3 | Sentinel-2 | 10 | 1 March 2022–1 October 2022 | Monthly Composition |

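| Feature Category | Feature Name |
|---|---|
| Spectral Bands | Red Band |
| Spectral Bands | Green Band |
| Spectral Bands | Blue Band |
| Spectral Bands | Near-Infrared Band |
| Spectral Bands | SWIR1 |
| Spectral Bands | SWIR2 |
| Spectral Indexes | NDVI |
| Spectral Indexes | NDYI |
| Spectral Indexes | NDSI |
| Spectral Indexes | MNDWI |
| Spectral Indexes | LSWI |
| SAR Features | VH |
| SAR Features | VV |
| SAR Features | VH/VV |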
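Several of the spectral indexes in the feature table above are normalized differences of two bands. The sketch below shows the commonly used forms of NDVI, NDYI, MNDWI, and LSWI for a single pixel. It is an illustration under assumptions, not the authors' processing chain: the function names and the reflectance values are hypothetical, and NDSI is left out because its band pairing varies across the literature and is not specified here.

```python
def normalized_difference(a, b):
    """Return (a - b) / (a + b); fall back to 0.0 when the denominator is zero."""
    total = a + b
    return (a - b) / total if total != 0 else 0.0


def spectral_indices(blue, green, red, nir, swir1):
    """Compute common normalized-difference indices from surface reflectance.

    Assumed standard definitions (not taken from this article's text):
      NDVI  = (NIR - Red)     / (NIR + Red)
      NDYI  = (Green - Blue)  / (Green + Blue)
      MNDWI = (Green - SWIR1) / (Green + SWIR1)
      LSWI  = (NIR - SWIR1)   / (NIR + SWIR1)
    """
    return {
        "NDVI": normalized_difference(nir, red),
        "NDYI": normalized_difference(green, blue),
        "MNDWI": normalized_difference(green, swir1),
        "LSWI": normalized_difference(nir, swir1),
    }


# Hypothetical reflectance values for one vegetated pixel.
pixel = spectral_indices(blue=0.04, green=0.08, red=0.06, nir=0.42, swir1=0.20)
for name, value in pixel.items():
    print(f"{name}: {value:.3f}")
```

The same function applies unchanged to array inputs only if the zero-denominator guard is replaced with an element-wise check, so an array implementation would need a small adaptation.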

| Cultivated Land Type | Number of Plots | Proportion of Plots (%) | Area (km²) | Area Proportion (%) |
|---|---|---|---|---|
| Flat Cultivated Land | 302,609 | 46.93 | 593.134 | 72.42 |
| Terraced Cultivated Land | 164,382 | 25.49 | 111.376 | 13.60 |
| Sloping Cultivated Land | 31,959 | 4.96 | 81.602 | 9.96 |
| Forest Intercrop Land | 145,902 | 22.62 | 32.931 | 4.02 |

| Cultivated Land Type | Method | IoU | OA (%) | Kappa |
|---|---|---|---|---|
| Flat Cultivated Land | Proposed Method | 0.8788 | 92.49 | 0.8461 |
| Flat Cultivated Land | U-net | 0.7732 | 84.21 | 0.6653 |
| Flat Cultivated Land | U-net++ | 0.8082 | 87.40 | 0.7345 |
| Flat Cultivated Land | DeepLab v3+ | 0.7906 | 84.46 | 0.6722 |
| Terraced Cultivated Land | Proposed Method | 0.8831 | 96.18 | 0.9112 |
| Terraced Cultivated Land | U-net | 0.6821 | 85.66 | 0.7052 |
| Terraced Cultivated Land | U-net++ | 0.7119 | 87.22 | 0.7337 |
| Terraced Cultivated Land | DeepLab v3+ | 0.7636 | 90.18 | 0.7901 |
| Sloping Cultivated Land | Proposed Method | 0.7335 | 93.80 | 0.8017 |
| Sloping Cultivated Land | U-net | 0.6363 | 89.01 | 0.7023 |
| Sloping Cultivated Land | U-net++ | 0.6236 | 90.05 | 0.7048 |
| Sloping Cultivated Land | DeepLab v3+ | 0.5044 | 82.70 | 0.5538 |
| Forest Intercrop Land | Proposed Method | 0.7164 | 78.83 | 0.5668 |
| Forest Intercrop Land | U-net | 0.5260 | 77.54 | 0.4709 |
| Forest Intercrop Land | U-net++ | 0.4654 | 75.17 | 0.4051 |
| Forest Intercrop Land | DeepLab v3+ | 0.7057 | 78.57 | 0.5280 |
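The three accuracy measures in the table above (IoU, OA, and Kappa) can all be derived from a binary confusion matrix of cropland versus non-cropland pixels. The following is a minimal sketch using the usual textbook definitions; the function name and the pixel counts are hypothetical, and the authors' exact evaluation protocol is not given in this section.

```python
def binary_metrics(tp, fp, fn, tn):
    """Return (IoU, overall accuracy, Cohen's kappa) for one target class.

    tp, fp, fn, tn are pixel counts from a binary confusion matrix.
    Assumes at least one target pixel is present or predicted, and that
    chance agreement is below 1 (otherwise the ratios are undefined).
    """
    n = tp + fp + fn + tn
    iou = tp / (tp + fp + fn)          # intersection over union
    oa = (tp + tn) / n                 # observed agreement
    # Chance agreement computed from the row and column marginals.
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / (n * n)
    kappa = (oa - pe) / (1 - pe)
    return iou, oa, kappa


# Hypothetical counts for a 100-pixel validation patch.
iou, oa, kappa = binary_metrics(tp=50, fp=10, fn=5, tn=35)
print(f"IoU={iou:.4f}  OA={oa * 100:.2f}%  Kappa={kappa:.4f}")
```

Because OA counts correctly rejected background pixels while IoU does not, a method can score a high OA and a much lower IoU on a sparse class, which is one way to read rows where the two measures diverge.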

| Method | IoU | OA (%) | Kappa |
|---|---|---|---|
| Proposed Method | 0.8181 | 95.07 | 0.9004 |
| Direct Extraction Method | 0.6654 | 79.29 | 0.5686 |

| Extraction Method | Step | Time (hours) | Total Time (hours) |
|---|---|---|---|
| Proposed Method | Personnel Training | 8 | 304 |
| Proposed Method | Sample Preparation | 72 | |
| Proposed Method | Model Training | 144 | |
| Proposed Method | Model Prediction | 30 | |
| Proposed Method | Post-processing | 50 | |
| Manual Interpretation | Personnel Training | 8 | 2118 |
| Manual Interpretation | Feature Mapping | 2110 | |
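The step times in the efficiency table above can be checked and compared with a few lines of arithmetic. The sketch below sums the per-step hours for each method and derives the hours saved, the relative reduction, and the speed-up factor. The variable names are illustrative, and the derived ratios are computed here from the table values rather than quoted from the article.

```python
# Per-step times in hours, copied from the efficiency table.
proposed = {
    "Personnel Training": 8,
    "Sample Preparation": 72,
    "Model Training": 144,
    "Model Prediction": 30,
    "Post-processing": 50,
}
manual = {
    "Personnel Training": 8,
    "Feature Mapping": 2110,
}

proposed_total = sum(proposed.values())   # matches the reported 304 hours
manual_total = sum(manual.values())       # matches the reported 2118 hours

saved = manual_total - proposed_total     # hours saved by the proposed method
reduction = saved / manual_total          # fraction of manual time avoided
speedup = manual_total / proposed_total   # how many times faster

print(f"Proposed: {proposed_total} h, manual: {manual_total} h")
print(f"Saved: {saved} h ({reduction:.1%} reduction, {speedup:.2f}x faster)")
```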

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lu, Y.; Li, L.; Dong, W.; Zheng, Y.; Zhang, X.; Zhang, J.; Wu, T.; Liu, M. A Method for Cropland Layer Extraction in Complex Scenes Integrating Edge Features and Semantic Segmentation. Agriculture 2024, 14, 1553. https://doi.org/10.3390/agriculture14091553
