Emerging Technologies for Precision Crop Management Towards Agriculture 5.0: A Comprehensive Overview

Abstract

1. Introduction

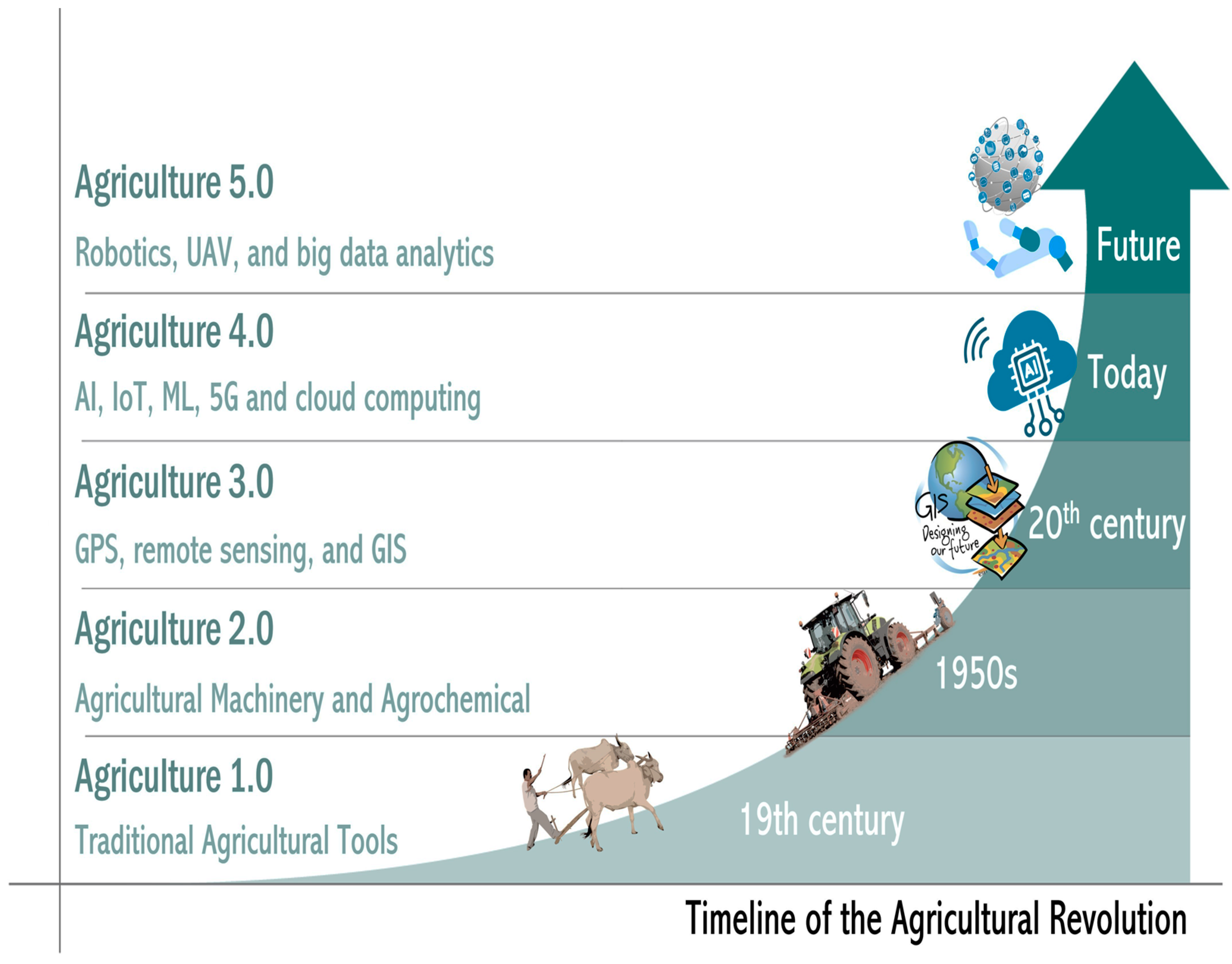

2. From Traditional Agriculture to Smart Farming and Agriculture 5.0

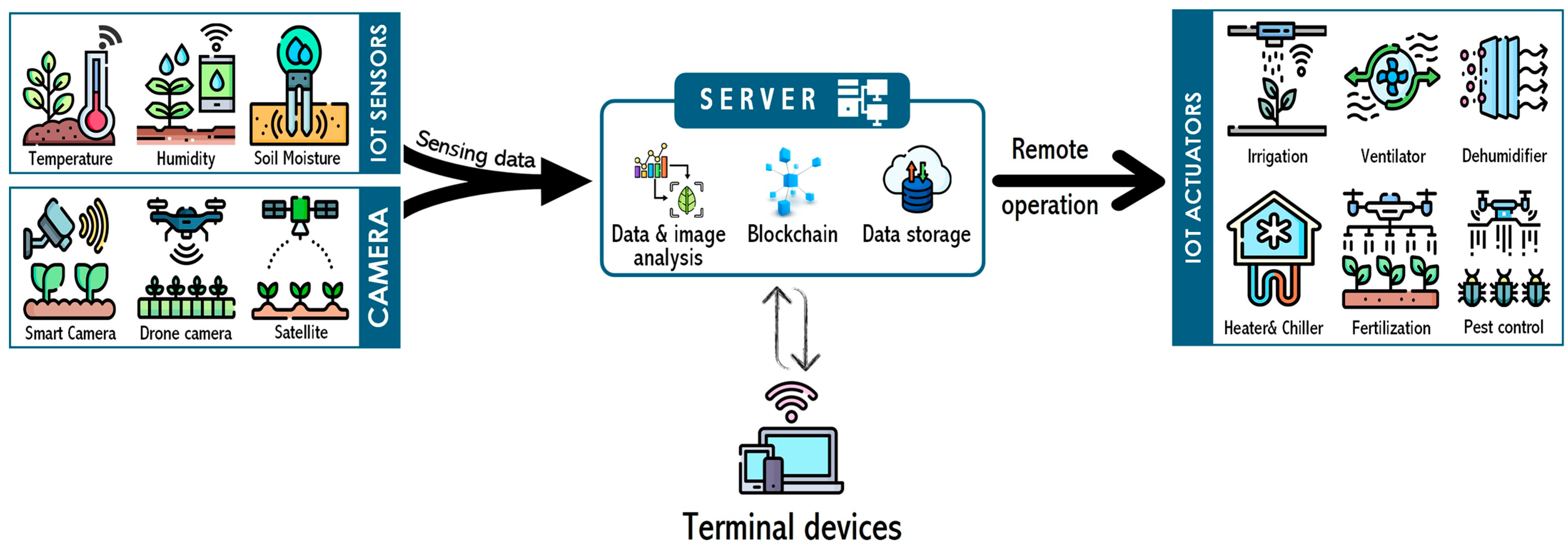

3. Enabling Technologies for Agriculture 5.0

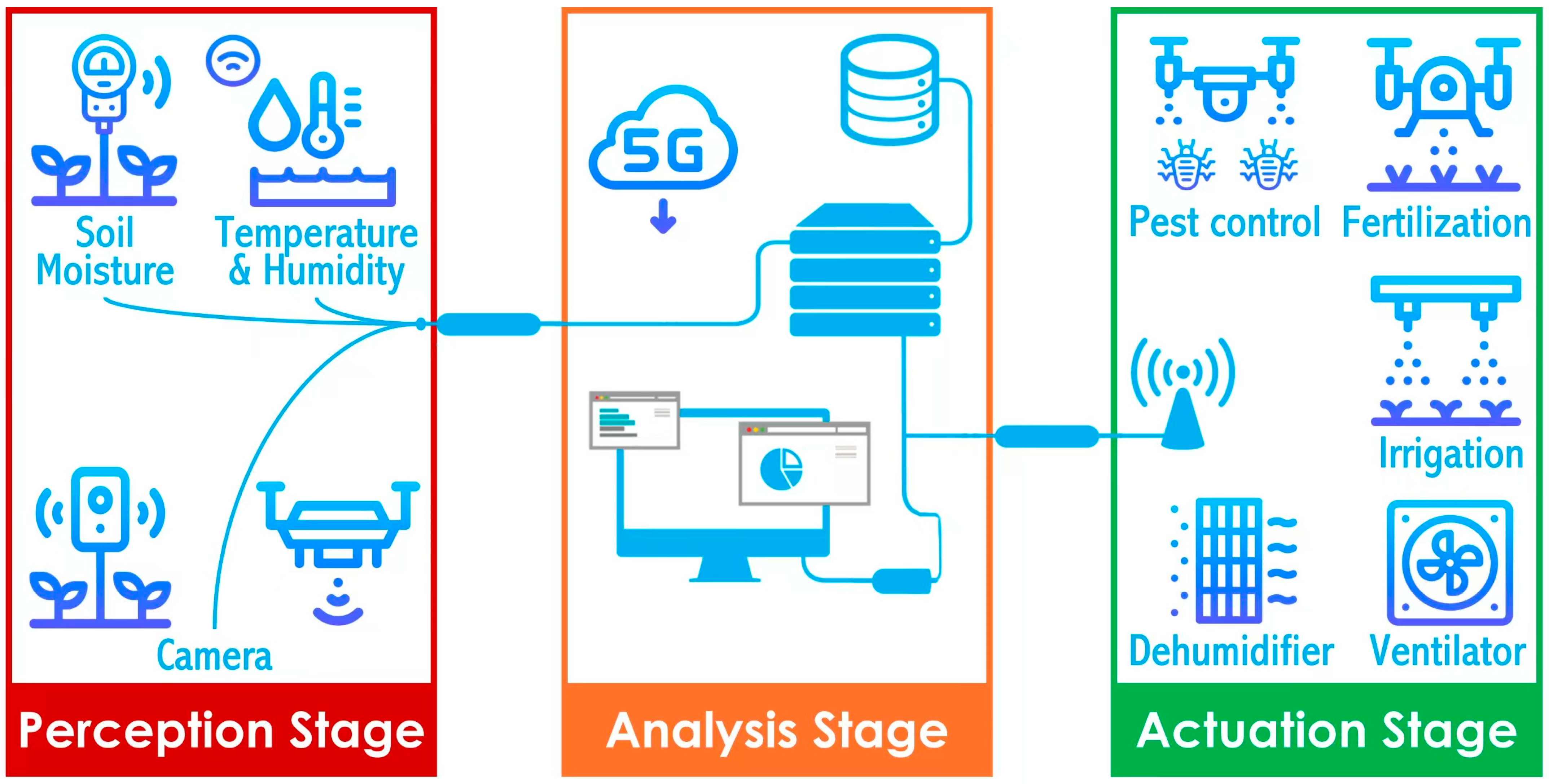

4. Perception, Analysis, and Actuation in Precision Crop Monitoring

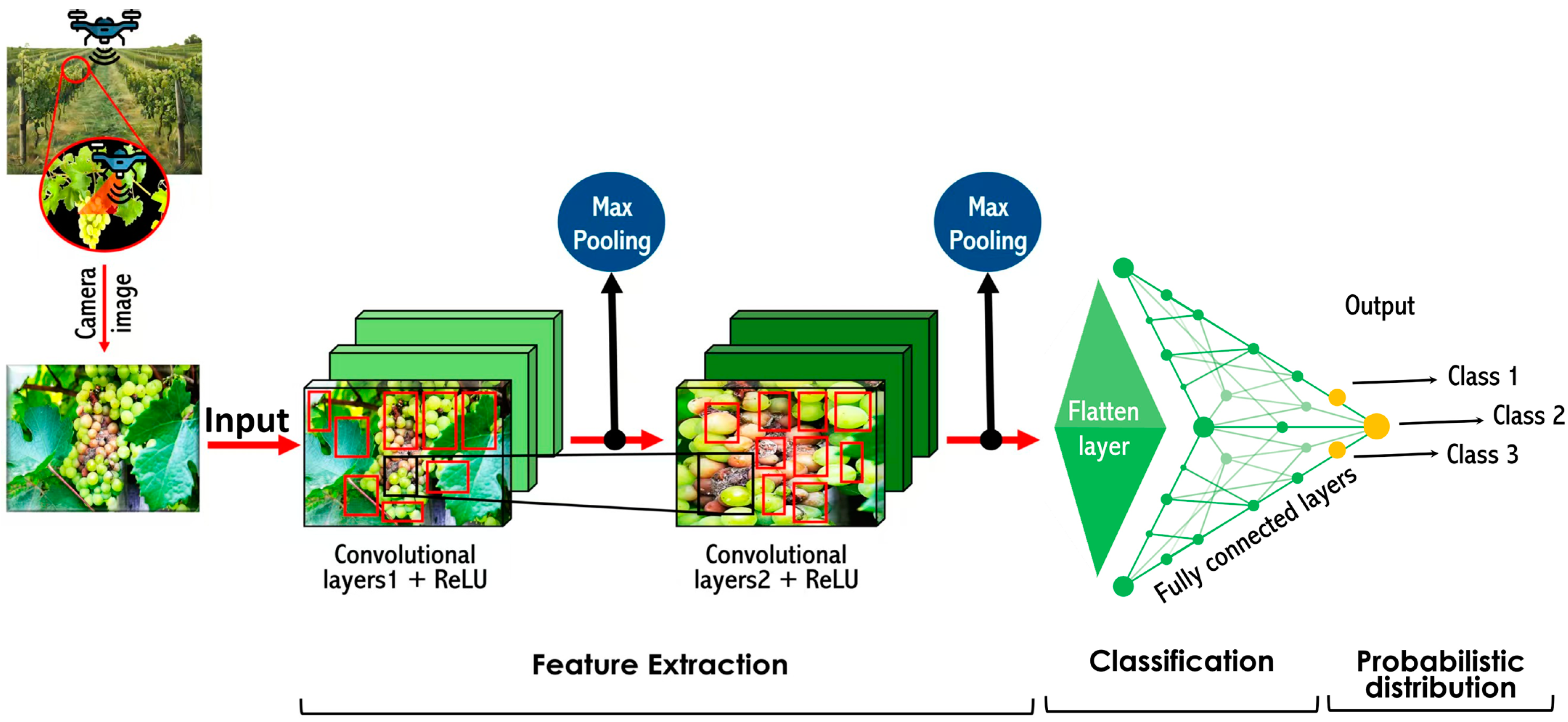

5. Machine Learning Applications for Precision Crop Monitoring

5.1. Germination Assessment

5.2. Disease Detection and Crop Protection

5.3. Weed Detection

5.4. Nutrient Stress Detection and Chlorophyll Estimation

5.5. Water Status

5.6. Prediction of Crop Yield

6. Innovative Technologies Associated with Ag5.0 for Precision Crop Monitoring

6.1. Innovative Hardware-Based Crop Monitoring

6.2. Crop Monitoring Through Communication Technology

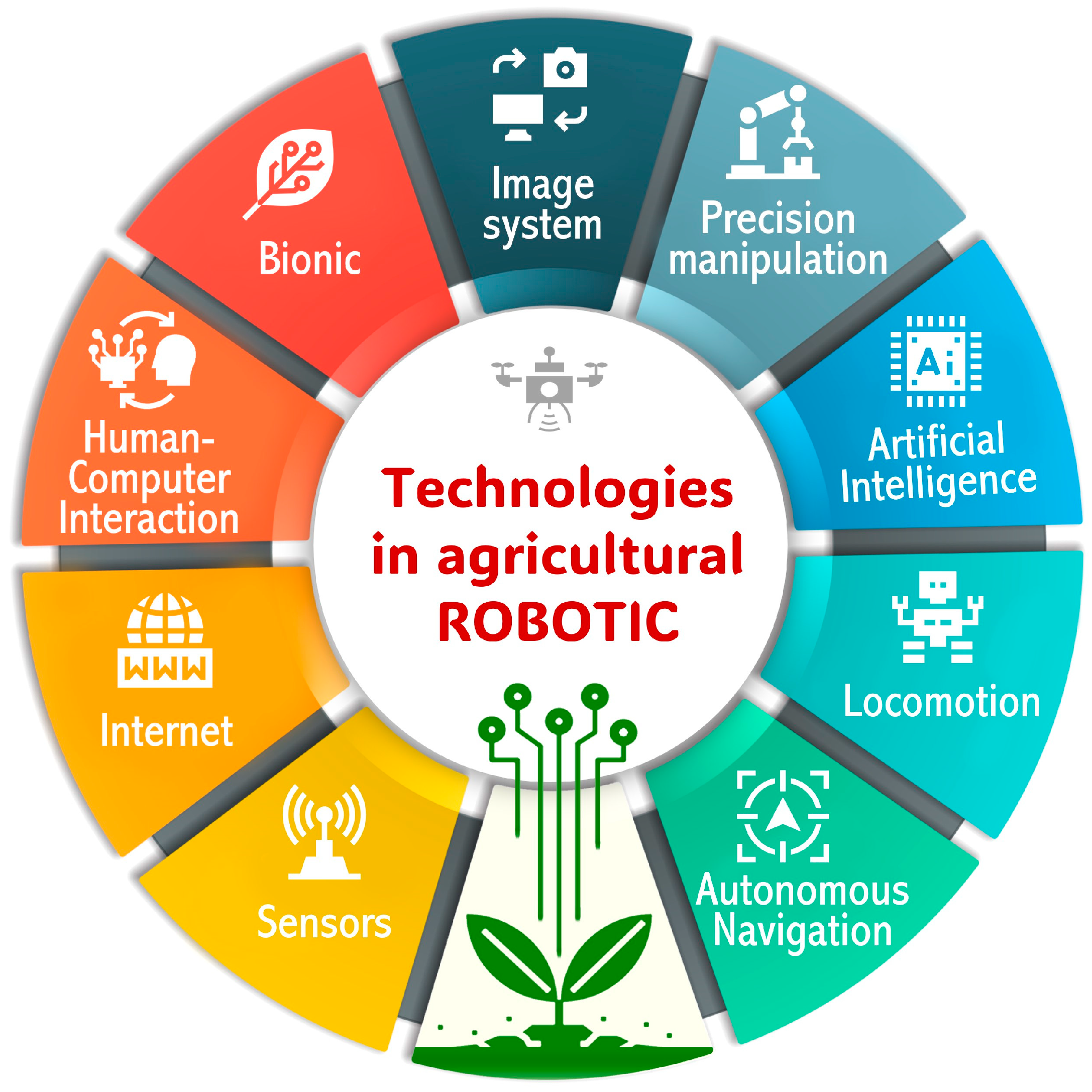

6.3. Advancements in Robotics Towards Ag5.0

7. Opportunities and Challenges Towards Ag5.0

8. Future Trends and Research Needs

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| Ag5.0 | Agriculture 5.0 |
| AI | Artificial Intelligence |
| ML | Machine Learning |
| IoT | Internet of Things |
| cobots | Collaborative Robots |
| GNSS | Global Navigation Satellite Systems |
| GIS | Geographic Information Systems |
| LiDAR | Light Detection and Ranging |
| RNN | Recurrent Neural Network |
| GANs | Generative Adversarial Networks |
| PCA | Principal Component Analysis |
| SVMs | Support Vector Machines |
| GNN | Graph Neural Network |
| ISP | Image Signal Processor |
| GPU | Graphics Processing Unit |
| TPU | Tensor Processing Unit |
| NVMe | Non-Volatile Memory Express |
| FPGAs | Field-Programmable Gate Arrays |
| ASICs | Application-Specific Integrated Circuits |
| ANNs | Artificial Neural Networks |
| SNNs | Spiking Neural Networks |
| WSNs | Wireless Sensor Networks |
| LPWAN | Low-Power Wide-Area Network |
| AMRs | Autonomous Mobile Robots |
| UGVs | Unmanned Ground Vehicles |
| UAV | Unmanned Aerial Vehicle |
| MFS | Multirobot Fleet Systems |

References

- Eltohamy, K.M.; Taha, M.F. Use of Inductively Coupled Plasma Mass Spectrometry (ICP-MS) to Assess the Levels of Phosphorus and Cadmium in Lettuce. In Plant Chemical Compositions and Bioactivities; Springer: New York, NY, USA, 2024; pp. 231–248. [Google Scholar]

- Maja, M.M.; Ayano, S.F. The Impact of Population Growth on Natural Resources and Farmers’ Capacity to Adapt to Climate Change in Low-Income Countries. Earth Syst. Environ. 2021, 5, 271–283. [Google Scholar] [CrossRef]

- Miyake, Y.; Kimoto, S.; Uchiyama, Y.; Kohsaka, R. Income Change and Inter-Farmer Relations through Conservation Agriculture in Ishikawa Prefecture, Japan: Empirical Analysis of Economic and Behavioral Factors. Land 2022, 11, 245. [Google Scholar] [CrossRef]

- Nowak, B. Precision Agriculture: Where Do We Stand? A Review of the Adoption of Precision Agriculture Technologies on Field Crops Farms in Developed Countries. Agric. Res. 2021, 10, 515–522. [Google Scholar] [CrossRef]

- Zinke-Wehlmann, C.; Charvát, K. Introduction of Smart Agriculture. In Big Data in Bioeconomy; Springer International Publishing: Cham, Switzerland, 2021; pp. 187–190. [Google Scholar]

- Ramachandran, K.K.; Apsara, A.; Hawladar, S.; Asokk, D.; Bhaskar, B.; Pitroda, J.R. Machine Learning and Role of Artificial Intelligence in Optimizing Work Performance and Employee Behavior. Mater. Today Proc. 2022, 51, 2327–2331. [Google Scholar] [CrossRef]

- Rajendra, P.; Kumari, M.; Rani, S.; Dogra, N.; Boadh, R.; Kumar, A.; Dahiya, M. Impact of Artificial Intelligence on Civilization: Future Perspectives. Mater. Today Proc. 2022, 56, 252–256. [Google Scholar] [CrossRef]

- Bensoussan, A.; Li, Y.; Nguyen, D.P.C.; Tran, M.-B.; Yam, S.C.P.; Zhou, X. Machine Learning and Control Theory. In Handbook of Numerical Analysis; Elsevier: Amsterdam, The Netherlands, 2022; pp. 531–558. [Google Scholar]

- Mustafa, M.A.A.; Alshaibi, A.J.; Kostyuchenko, E.; Shelupanov, A. A Review of Artificial Intelligence Based Malware Detection Using Deep Learning. Mater. Today Proc. 2023, 80, 2678–2683. [Google Scholar] [CrossRef]

- Song, J.; Rondao, D.; Aouf, N. Deep Learning-Based Spacecraft Relative Navigation Methods: A Survey. Acta Astronaut. 2022, 191, 22–40. [Google Scholar] [CrossRef]

- Liang, N.; Sun, S.; Zhou, L.; Zhao, N.; Taha, M.F.; He, Y.; Qiu, Z. High-Throughput Instance Segmentation and Shape Restoration of Overlapping Vegetable Seeds Based on Sim2real Method. Measurement 2023, 207, 112414. [Google Scholar] [CrossRef]

- Liang, N.; Sun, S.; Yu, J.; Farag Taha, M.; He, Y.; Qiu, Z. Novel Segmentation Method and Measurement System for Various Grains with Complex Touching. Comput. Electron. Agric. 2022, 202, 107351. [Google Scholar] [CrossRef]

- Zhou, L.; Wang, X.; Zhang, C.; Zhao, N.; Taha, M.F.; He, Y.; Qiu, Z. Powdery Food Identification Using NIR Spectroscopy and Extensible Deep Learning Model. Food Bioprocess Technol. 2022, 15, 2354–2362. [Google Scholar] [CrossRef]

- Taha, M.F.; Mao, H.; Mousa, S.; Zhou, L.; Wang, Y.; Elmasry, G.; Al-Rejaie, S.; Elwakeel, A.E.; Wei, Y.; Qiu, Z. Deep Learning-Enabled Dynamic Model for Nutrient Status Detection of Aquaponically Grown Plants. Agronomy 2024, 14, 2290. [Google Scholar] [CrossRef]

- Niu, Z.; Huang, T.; Xu, C.; Sun, X.; Taha, M.F.; He, Y.; Qiu, Z. A Novel Approach to Optimize Key Limitations of Azure Kinect DK for Efficient and Precise Leaf Area Measurement. Agriculture 2025, 15, 173. [Google Scholar] [CrossRef]

- Wang, Y.; Yang, N.; Ma, G.; Farag Taha, M.; Mao, H.; Zhang, X.; Shi, Q. Detection of Spores Using Polarization Image Features and BP Neural Network. Int. J. Agric. Biol. Eng. 2024, 17, 213–221. [Google Scholar] [CrossRef]

- Elsherbiny, O.; Fan, Y.; Zhou, L.; Qiu, Z. Fusion of Feature Selection Methods and Regression Algorithms for Predicting the Canopy Water Content of Rice Based on Hyperspectral Data. Agriculture 2021, 11, 51. [Google Scholar] [CrossRef]

- Elsherbiny, O.; Zhou, L.; He, Y.; Qiu, Z. A Novel Hybrid Deep Network for Diagnosing Water Status in Wheat Crop Using IoT-Based Multimodal Data. Comput. Electron. Agric. 2022, 203, 107453. [Google Scholar] [CrossRef]

- Galal, H.; Elsayed, S.; Elsherbiny, O.; Allam, A.; Farouk, M. Using RGB Imaging, Optimized Three-Band Spectral Indices, and a Decision Tree Model to Assess Orange Fruit Quality. Agriculture 2022, 12, 1558. [Google Scholar] [CrossRef]

- Fan, H. The Digital Asset Value and Currency Supervision under Deep Learning and Blockchain Technology. J. Comput. Appl. Math. 2022, 407, 114061. [Google Scholar] [CrossRef]

- Doshi, M.; Varghese, A. Smart Agriculture Using Renewable Energy and AI-Powered IoT. In AI, Edge and IoT-Based Smart Agriculture; Elsevier: Amsterdam, The Netherlands, 2022; pp. 205–225. [Google Scholar]

- Mourtzis, D.; Angelopoulos, J.; Panopoulos, N. A Literature Review of the Challenges and Opportunities of the Transition from Industry 4.0 to Society 5.0. Energies 2022, 15, 6276. [Google Scholar] [CrossRef]

- King, T.; Cole, M.; Farber, J.M.; Eisenbrand, G.; Zabaras, D.; Fox, E.M.; Hill, J.P. Food Safety for Food Security: Relationship between Global Megatrends and Developments in Food Safety. Trends Food Sci. Technol. 2017, 68, 160–175. [Google Scholar] [CrossRef]

- Rapela, M.A. Fostering Innovation for Agriculture 4.0; Springer International Publishing: Cham, Switzerland, 2019; ISBN 978-3-030-32492-6. [Google Scholar]

- Liu, Y.; Ma, X.; Shu, L.; Hancke, G.P.; Abu-Mahfouz, A.M. From Industry 4.0 to Agriculture 4.0: Current Status, Enabling Technologies, and Research Challenges. IEEE Trans. Ind. Inform. 2021, 17, 4322–4334. [Google Scholar] [CrossRef]

- Mavridou, E.; Vrochidou, E.; Papakostas, G.A.; Pachidis, T.; Kaburlasos, V.G. Machine Vision Systems in Precision Agriculture for Crop Farming. J. Imaging 2019, 5, 89. [Google Scholar] [CrossRef] [PubMed]

- Zhai, Z.; Martínez, J.F.; Beltran, V.; Martínez, N.L. Decision Support Systems for Agriculture 4.0: Survey and Challenges. Comput. Electron. Agric. 2020, 170, 105256. [Google Scholar] [CrossRef]

- Saiz-Rubio, V.; Rovira-Más, F. From Smart Farming towards Agriculture 5.0: A Review on Crop Data Management. Agronomy 2020, 10, 207. [Google Scholar] [CrossRef]

- Ragazou, K.; Garefalakis, A.; Zafeiriou, E.; Passas, I. Agriculture 5.0: A New Strategic Management Mode for a Cut Cost and an Energy Efficient Agriculture Sector. Energies 2022, 15, 3113. [Google Scholar] [CrossRef]

- Zambon, I.; Cecchini, M.; Egidi, G.; Saporito, M.G.; Colantoni, A. Revolution 4.0: Industry vs. Agriculture in a Future Development for SMEs. Processes 2019, 7, 36. [Google Scholar] [CrossRef]

- Mulla, S.; Singh, S.K.; Singh, K.K.; Praveen, B. Climate Change and Agriculture: A Review of Crop Models. In Global Climate Change and Environmental Policy; Springer: Singapore, 2020; pp. 423–435. [Google Scholar]

- van Dijk, M.; Gramberger, M.; Laborde, D.; Mandryk, M.; Shutes, L.; Stehfest, E.; Valin, H.; Faradsch, K. Stakeholder-Designed Scenarios for Global Food Security Assessments. Glob. Food Secur. 2020, 24, 100352. [Google Scholar] [CrossRef]

- Fraser, E.D.G.; Campbell, M. Agriculture 5.0: Reconciling Production with Planetary Health. One Earth 2019, 1, 278–280. [Google Scholar] [CrossRef]

- Maddikunta, P.K.R.; Pham, Q.-V.; Prabadevi, B.; Deepa, N.; Dev, K.; Gadekallu, T.R.; Ruby, R.; Liyanage, M. Industry 5.0: A Survey on Enabling Technologies and Potential Applications. J. Ind. Inf. Integr. 2022, 26, 100257. [Google Scholar] [CrossRef]

- Cesco, S.; Sambo, P.; Borin, M.; Basso, B.; Orzes, G.; Mazzetto, F. Smart Agriculture and Digital Twins: Applications and Challenges in a Vision of Sustainability. Eur. J. Agron. 2023, 146, 126809. [Google Scholar] [CrossRef]

- Pandrea, V.-A.; Ciocoiu, A.-O.; Machedon-Pisu, M. IoT-Based Irrigation System for Agriculture 5.0. In Proceedings of the 2023 17th International Conference on Engineering of Modern Electric Systems (EMES), Oradea, Romania, 9–10 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–4. [Google Scholar]

- Behmann, J.; Mahlein, A.-K.; Rumpf, T.; Römer, C.; Plümer, L. A Review of Advanced Machine Learning Methods for the Detection of Biotic Stress in Precision Crop Protection. Precis. Agric. 2015, 16, 239–260. [Google Scholar] [CrossRef]

- Pätzold, S.; Hbirkou, C.; Dicke, D.; Gerhards, R.; Welp, G. Linking Weed Patterns with Soil Properties: A Long-Term Case Study. Precis. Agric. 2020, 21, 569–588. [Google Scholar] [CrossRef]

- Shafi, U.; Mumtaz, R.; García-Nieto, J.; Hassan, S.A.; Zaidi, S.A.R.; Iqbal, N. Precision Agriculture Techniques and Practices: From Considerations to Applications. Sensors 2019, 19, 3796. [Google Scholar] [CrossRef]

- Sarker, I.H. Deep Learning: A Comprehensive Overview on Techniques, Taxonomy, Applications and Research Directions. SN Comput. Sci. 2021, 2, 420. [Google Scholar] [CrossRef]

- Hong, S.-J.; Kim, S.-Y.; Kim, E.; Lee, C.-H.; Lee, J.-S.; Lee, D.-S.; Bang, J.; Kim, G. Moth Detection from Pheromone Trap Images Using Deep Learning Object Detectors. Agriculture 2020, 10, 170. [Google Scholar] [CrossRef]

- Yamashita, R.; Nishio, M.; Do, R.K.G.; Togashi, K. Convolutional Neural Networks: An Overview and Application in Radiology. Insights Imaging 2018, 9, 611–629. [Google Scholar] [CrossRef]

- Debnath, O.; Saha, H.N. An IoT-Based Intelligent Farming Using CNN for Early Disease Detection in Rice Paddy. Microprocess. Microsyst. 2022, 94, 104631. [Google Scholar] [CrossRef]

- Wang, Y.; Wang, H.; Peng, Z. Rice Diseases Detection and Classification Using Attention Based Neural Network and Bayesian Optimization. Expert Syst. Appl. 2021, 178, 114770. [Google Scholar] [CrossRef]

- Genze, N.; Bharti, R.; Grieb, M.; Schultheiss, S.J.; Grimm, D.G. Accurate Machine Learning-Based Germination Detection, Prediction and Quality Assessment of Three Grain Crops. Plant Methods 2020, 16, 157. [Google Scholar] [CrossRef]

- ElMasry, G.; Mandour, N.; Al-Rejaie, S.; Belin, E.; Rousseau, D. Recent Applications of Multispectral Imaging in Seed Phenotyping and Quality Monitoring—An Overview. Sensors 2019, 19, 1090. [Google Scholar] [CrossRef]

- ElMasry, G.; Mandour, N.; Ejeez, Y.; Demilly, D.; Al-Rejaie, S.; Verdier, J.; Belin, E.; Rousseau, D. Multichannel Imaging for Monitoring Chemical Composition and Germination Capacity of Cowpea (Vigna unguiculata) Seeds during Development and Maturation. Crop J. 2022, 10, 1399–1411. [Google Scholar] [CrossRef]

- Peng, Q.; Tu, L.; Wu, Y.; Yu, Z.; Tang, G.; Song, W. Automatic Monitoring System for Seed Germination Test Based on Deep Learning. J. Electr. Comput. Eng. 2022, 2022, 4678316. [Google Scholar] [CrossRef]

- Awty-Carroll, D.; Clifton-Brown, J.; Robson, P. Using K-NN to Analyse Images of Diverse Germination Phenotypes and Detect Single Seed Germination in Miscanthus Sinensis. Plant Methods 2018, 14, 5. [Google Scholar] [CrossRef] [PubMed]

- Ahmad, A.; Saraswat, D.; El Gamal, A. A Survey on Using Deep Learning Techniques for Plant Disease Diagnosis and Recommendations for Development of Appropriate Tools. Smart Agric. Technol. 2023, 3, 100083. [Google Scholar] [CrossRef]

- Oerke, E.-C.; Dehne, H.-W. Safeguarding Production—Losses in Major Crops and the Role of Crop Protection. Crop Prot. 2004, 23, 275–285. [Google Scholar] [CrossRef]

- Saleem, M.H.; Potgieter, J.; Arif, K.M. Automation in Agriculture by Machine and Deep Learning Techniques: A Review of Recent Developments. Precis. Agric. 2021, 22, 2053–2091. [Google Scholar] [CrossRef]

- Abdalla, A.; Wheeler, T.A.; Dever, J.; Lin, Z.; Arce, J.; Guo, W. Assessing Fusarium Oxysporum Disease Severity in Cotton Using Unmanned Aerial System Images and a Hybrid Domain Adaptation Deep Learning Time Series Model. Biosyst. Eng. 2024, 237, 220–231. [Google Scholar] [CrossRef]

- Ahmed, I.; Yadav, P.K. A Systematic Analysis of Machine Learning and Deep Learning Based Approaches for Identifying and Diagnosing Plant Diseases. Sustain. Oper. Comput. 2023, 4, 96–104. [Google Scholar] [CrossRef]

- Orchi, H.; Sadik, M.; Khaldoun, M.; Sabir, E. Automation of Crop Disease Detection through Conventional Machine Learning and Deep Transfer Learning Approaches. Agriculture 2023, 13, 352. [Google Scholar] [CrossRef]

- Nagachandrika, B.; Prasath, R.; Praveen Joe, I.R. An Automatic Classification Framework for Identifying Type of Plant Leaf Diseases Using Multi-Scale Feature Fusion-Based Adaptive Deep Network. Biomed. Signal Process. Control 2024, 95, 106316. [Google Scholar] [CrossRef]

- Guerrero-Ibañez, A.; Reyes-Muñoz, A. Monitoring Tomato Leaf Disease through Convolutional Neural Networks. Electronics 2023, 12, 229. [Google Scholar] [CrossRef]

- Tamilvizhi, T.; Surendran, R.; Anbazhagan, K.; Rajkumar, K. Quantum Behaved Particle Swarm Optimization-Based Deep Transfer Learning Model for Sugarcane Leaf Disease Detection and Classification. Math. Probl. Eng. 2022, 2022, 3452413. [Google Scholar] [CrossRef]

- Zhao, N.; Zhou, L.; Huang, T.; Taha, M.F.; He, Y.; Qiu, Z. Development of an Automatic Pest Monitoring System Using a Deep Learning Model of DPeNet. Measurement 2022, 203, 111970. [Google Scholar] [CrossRef]

- Lee, S.; Choi, G.; Park, H.-C.; Choi, C. Automatic Classification Service System for Citrus Pest Recognition Based on Deep Learning. Sensors 2022, 22, 8911. [Google Scholar] [CrossRef] [PubMed]

- Dai, M.; Shen, Y.; Li, X.; Liu, J.; Zhang, S.; Miao, H. Digital Twin System of Pest Management Driven by Data and Model Fusion. Agriculture 2024, 14, 1099. [Google Scholar] [CrossRef]

- Routis, G.; Michailidis, M.; Roussaki, I. Plant Disease Identification Using Machine Learning Algorithms on Single-Board Computers in IoT Environments. Electronics 2024, 13, 1010. [Google Scholar] [CrossRef]

- Ma, X.; Zhang, X.; Guan, H.; Wang, L. Recognition Method of Crop Disease Based on Image Fusion and Deep Learning Model. Agronomy 2024, 14, 1518. [Google Scholar] [CrossRef]

- Wang, Y.; Li, T.; Chen, T.; Zhang, X.; Taha, M.F.; Yang, N.; Mao, H.; Shi, Q. Cucumber Downy Mildew Disease Prediction Using a CNN-LSTM Approach. Agriculture 2024, 14, 1155. [Google Scholar] [CrossRef]

- Balaji, V.; Anushkannan, N.K.; Narahari, S.C.; Rattan, P.; Verma, D.; Awasthi, D.K.; Pandian, A.A.; Veeramanickam, M.R.M.; Mulat, M.B. Deep Transfer Learning Technique for Multimodal Disease Classification in Plant Images. Contrast Media Mol. Imaging 2023, 2023, 5644727. [Google Scholar] [CrossRef]

- Wang, Y.; Zhang, X.; Taha, M.F.; Chen, T.; Yang, N.; Zhang, J.; Mao, H. Detection Method of Fungal Spores Based on Fingerprint Characteristics of Diffraction–Polarization Images. J. Fungi 2023, 9, 1131. [Google Scholar] [CrossRef]

- Liu, B.; Zhang, Y.; He, D.; Li, Y. Identification of Apple Leaf Diseases Based on Deep Convolutional Neural Networks. Symmetry 2017, 10, 11. [Google Scholar] [CrossRef]

- Ramcharan, A.; Baranowski, K.; McCloskey, P.; Ahmed, B.; Legg, J.; Hughes, D.P. Deep Learning for Image-Based Cassava Disease Detection. Front. Plant Sci. 2017, 8, 1852. [Google Scholar] [CrossRef] [PubMed]

- Lu, J.; Hu, J.; Zhao, G.; Mei, F.; Zhang, C. An In-Field Automatic Wheat Disease Diagnosis System. Comput. Electron. Agric. 2017, 142, 369–379. [Google Scholar] [CrossRef]

- Fuentes, S.; Tongson, E.; Unnithan, R.R.; Gonzalez Viejo, C. Early Detection of Aphid Infestation and Insect-Plant Interaction Assessment in Wheat Using a Low-Cost Electronic Nose (E-Nose), Near-Infrared Spectroscopy and Machine Learning Modeling. Sensors 2021, 21, 5948. [Google Scholar] [CrossRef] [PubMed]

- Feng, Z.-H.; Wang, L.-Y.; Yang, Z.-Q.; Zhang, Y.-Y.; Li, X.; Song, L.; He, L.; Duan, J.-Z.; Feng, W. Hyperspectral Monitoring of Powdery Mildew Disease Severity in Wheat Based on Machine Learning. Front. Plant Sci. 2022, 13, 828454. [Google Scholar] [CrossRef]

- DeChant, C.; Wiesner-Hanks, T.; Chen, S.; Stewart, E.L.; Yosinski, J.; Gore, M.A.; Nelson, R.J.; Lipson, H. Automated Identification of Northern Leaf Blight-Infected Maize Plants from Field Imagery Using Deep Learning. Phytopathology 2017, 107, 1426–1432. [Google Scholar] [CrossRef] [PubMed]

- Kaneda, Y.; Shibata, S.; Mineno, H. Multi-Modal Sliding Window-Based Support Vector Regression for Predicting Plant Water Stress. Knowl. Based Syst. 2017, 134, 135–148. [Google Scholar] [CrossRef]

- Fuentes, A.; Yoon, S.; Kim, S.; Park, D. A Robust Deep-Learning-Based Detector for Real-Time Tomato Plant Diseases and Pests Recognition. Sensors 2017, 17, 2022. [Google Scholar] [CrossRef]

- Krishnaswamy Rangarajan, A.; Purushothaman, R. Disease Classification in Eggplant Using Pre-Trained VGG16 and MSVM. Sci. Rep. 2020, 10, 2322. [Google Scholar] [CrossRef]

- Too, E.C.; Yujian, L.; Njuki, S.; Yingchun, L. A Comparative Study of Fine-Tuning Deep Learning Models for Plant Disease Identification. Comput. Electron. Agric. 2019, 161, 272–279. [Google Scholar] [CrossRef]

- Rançon, F.; Bombrun, L.; Keresztes, B.; Germain, C. Comparison of SIFT Encoded and Deep Learning Features for the Classification and Detection of Esca Disease in Bordeaux Vineyards. Remote Sens. 2018, 11, 1. [Google Scholar] [CrossRef]

- Cruz, A.; Ampatzidis, Y.; Pierro, R.; Materazzi, A.; Panattoni, A.; De Bellis, L.; Luvisi, A. Detection of Grapevine Yellows Symptoms in Vitis Vinifera L. with Artificial Intelligence. Comput. Electron. Agric. 2019, 157, 63–76. [Google Scholar] [CrossRef]

- Liang, Q.; Xiang, S.; Hu, Y.; Coppola, G.; Zhang, D.; Sun, W. PD2SE-Net: Computer-Assisted Plant Disease Diagnosis and Severity Estimation Network. Comput. Electron. Agric. 2019, 157, 518–529. [Google Scholar] [CrossRef]

- Gold, K.M.; Townsend, P.A.; Herrmann, I.; Gevens, A.J. Investigating Potato Late Blight Physiological Differences across Potato Cultivars with Spectroscopy and Machine Learning. Plant Sci. 2020, 295, 110316. [Google Scholar] [CrossRef]

- Abdulridha, J.; Ampatzidis, Y.; Ehsani, R.; de Castro, A.I. Evaluating the Performance of Spectral Features and Multivariate Analysis Tools to Detect Laurel Wilt Disease and Nutritional Deficiency in Avocado. Comput. Electron. Agric. 2018, 155, 203–211. [Google Scholar] [CrossRef]

- Lu, J.; Ehsani, R.; Shi, Y.; de Castro, A.I.; Wang, S. Detection of Multi-Tomato Leaf Diseases (Late Blight, Target and Bacterial Spots) in Different Stages by Using a Spectral-Based Sensor. Sci. Rep. 2018, 8, 2793. [Google Scholar] [CrossRef] [PubMed]

- Lu, J.; Ehsani, R.; Shi, Y.; Abdulridha, J.; de Castro, A.I.; Xu, Y. Field Detection of Anthracnose Crown Rot in Strawberry Using Spectroscopy Technology. Comput. Electron. Agric. 2017, 135, 289–299. [Google Scholar] [CrossRef]

- Barreto, A.; Paulus, S.; Varrelmann, M.; Mahlein, A.-K. Hyperspectral Imaging of Symptoms Induced by Rhizoctonia Solani in Sugar Beet: Comparison of Input Data and Different Machine Learning Algorithms. J. Plant Dis. Prot. 2020, 127, 441–451. [Google Scholar] [CrossRef]

- Polder, G.; Blok, P.M.; de Villiers, H.A.C.; van der Wolf, J.M.; Kamp, J. Potato Virus Y Detection in Seed Potatoes Using Deep Learning on Hyperspectral Images. Front. Plant Sci. 2019, 10, 209. [Google Scholar] [CrossRef]

- Zhu, H.; Chu, B.; Zhang, C.; Liu, F.; Jiang, L.; He, Y. Hyperspectral Imaging for Presymptomatic Detection of Tobacco Disease with Successive Projections Algorithm and Machine-Learning Classifiers. Sci. Rep. 2017, 7, 4125. [Google Scholar] [CrossRef]

- de Carvalho Alves, M.; Pozza, E.A.; Sanches, L.; Belan, L.L.; de Oliveira Freitas, M.L. Insights for Improving Bacterial Blight Management in Coffee Field Using Spatial Big Data and Machine Learning. Trop. Plant Pathol. 2021, 47, 118–139. [Google Scholar] [CrossRef]

- Zarco-Tejada, P.J.; Camino, C.; Beck, P.S.A.; Calderon, R.; Hornero, A.; Hernández-Clemente, R.; Kattenborn, T.; Montes-Borrego, M.; Susca, L.; Morelli, M.; et al. Previsual Symptoms of Xylella Fastidiosa Infection Revealed in Spectral Plant-Trait Alterations. Nat. Plants 2018, 4, 432–439. [Google Scholar] [CrossRef]

- Ramos, A.P.M.; Gomes, F.D.G.; Pinheiro, M.M.F.; Furuya, D.E.G.; Gonçalvez, W.N.; Junior, J.M.; Michereff, M.F.F.; Blassioli-Moraes, M.C.; Borges, M.; Alaumann, R.A.; et al. Detecting the Attack of the Fall Armyworm (Spodoptera frugiperda) in Cotton Plants with Machine Learning and Spectral Measurements. Precis. Agric. 2022, 23, 470–491. [Google Scholar] [CrossRef]

- Garcia-Ruiz, F.; Sankaran, S.; Maja, J.M.; Lee, W.S.; Rasmussen, J.; Ehsani, R. Comparison of Two Aerial Imaging Platforms for Identification of Huanglongbing-Infected Citrus Trees. Comput. Electron. Agric. 2013, 91, 106–115. [Google Scholar] [CrossRef]

- Liu, L.; Wang, R.; Xie, C.; Yang, P.; Wang, F.; Sudirman, S.; Liu, W. PestNet: An End-to-End Deep Learning Approach for Large-Scale Multi-Class Pest Detection and Classification. IEEE Access 2019, 7, 45301–45312. [Google Scholar] [CrossRef]

- Xia, D.; Chen, P.; Wang, B.; Zhang, J.; Xie, C. Insect Detection and Classification Based on an Improved Convolutional Neural Network. Sensors 2018, 18, 4169. [Google Scholar] [CrossRef] [PubMed]

- Ding, Y.; Jiang, C.; Song, L.; Liu, F.; Tao, Y. RVDR-YOLOv8: A Weed Target Detection Model Based on Improved YOLOv8. Electronics 2024, 13, 2182. [Google Scholar] [CrossRef]

- Almalky, A.M.; Ahmed, K.R. Deep Learning for Detecting and Classifying the Growth Stages of Consolida Regalis Weeds on Fields. Agronomy 2023, 13, 934. [Google Scholar] [CrossRef]

- Mu, Y.; Feng, R.; Ni, R.; Li, J.; Luo, T.; Liu, T.; Li, X.; Gong, H.; Guo, Y.; Sun, Y.; et al. A Faster R-CNN-Based Model for the Identification of Weed Seedling. Agronomy 2022, 12, 2867. [Google Scholar] [CrossRef]

- Zhu, H.; Zhang, Y.; Mu, D.; Bai, L.; Zhuang, H.; Li, H. YOLOX-Based Blue Laser Weeding Robot in Corn Field. Front. Plant Sci. 2022, 13, 1017803. [Google Scholar] [CrossRef]

- Islam, N.; Rashid, M.M.; Wibowo, S.; Xu, C.-Y.; Morshed, A.; Wasimi, S.A.; Moore, S.; Rahman, S.M. Early Weed Detection Using Image Processing and Machine Learning Techniques in an Australian Chilli Farm. Agriculture 2021, 11, 387. [Google Scholar] [CrossRef]

- Tran, D.; Schouteten, J.J.; Degieter, M.; Krupanek, J.; Jarosz, W.; Areta, A.; Emmi, L.; De Steur, H.; Gellynck, X. European Stakeholders’ Perspectives on Implementation Potential of Precision Weed Control: The Case of Autonomous Vehicles with Laser Treatment. Precis. Agric. 2023, 24, 2200–2222. [Google Scholar] [CrossRef] [PubMed]

- Aravind, K.R.; Raja, P.; Pérez-Ruiz, M. Task-Based Agricultural Mobile Robots in Arable Farming: A Review. Span. J. Agric. Res. 2017, 15, e02R01. [Google Scholar] [CrossRef]

- Jiang, W.; Quan, L.; Wei, G.; Chang, C.; Geng, T. A Conceptual Evaluation of a Weed Control Method with Post-Damage Application of Herbicides: A Composite Intelligent Intra-Row Weeding Robot. Soil Tillage Res. 2023, 234, 105837. [Google Scholar] [CrossRef]

- Zhang, L.; Zhang, Z.; Wu, C.; Sun, L. Segmentation Algorithm for Overlap Recognition of Seedling Lettuce and Weeds Based on SVM and Image Blocking. Comput. Electron. Agric. 2022, 201, 107284. [Google Scholar] [CrossRef]

- Guo, X.; Ge, Y.; Liu, F.; Yang, J. Identification of Maize and Wheat Seedlings and Weeds Based on Deep Learning. Front. Earth Sci. 2023, 11, 1146558. [Google Scholar] [CrossRef]

- Eide, A.; Koparan, C.; Zhang, Y.; Ostlie, M.; Howatt, K.; Sun, X. UAV-Assisted Thermal Infrared and Multispectral Imaging of Weed Canopies for Glyphosate Resistance Detection. Remote Sens. 2021, 13, 4606. [Google Scholar] [CrossRef]

- Li, D.; Shi, G.; Li, J.; Chen, Y.; Zhang, S.; Xiang, S.; Jin, S. PlantNet: A Dual-Function Point Cloud Segmentation Network for Multiple Plant Species. ISPRS J. Photogramm. Remote Sens. 2022, 184, 243–263. [Google Scholar] [CrossRef]

- Fawakherji, M.; Potena, C.; Pretto, A.; Bloisi, D.D.; Nardi, D. Multi-Spectral Image Synthesis for Crop/Weed Segmentation in Precision Farming. Robot. Auton. Syst. 2021, 146, 103861. [Google Scholar] [CrossRef]

- Ashraf, T.; Khan, Y.N. Weed Density Classification in Rice Crop Using Computer Vision. Comput. Electron. Agric. 2020, 175, 105590. [Google Scholar] [CrossRef]

- Shen, Y.; Yin, Y.; Li, B.; Zhao, C.; Li, G. Detection of Impurities in Wheat Using Terahertz Spectral Imaging and Convolutional Neural Networks. Comput. Electron. Agric. 2021, 181, 105931. [Google Scholar] [CrossRef]

- Alam, M.S.; Alam, M.; Tufail, M.; Khan, M.U.; Güneş, A.; Salah, B.; Nasir, F.E.; Saleem, W.; Khan, M.T. TobSet: A New Tobacco Crop and Weeds Image Dataset and Its Utilization for Vision-Based Spraying by Agricultural Robots. Appl. Sci. 2022, 12, 1308. [Google Scholar] [CrossRef]

- Khan, S.; Tufail, M.; Khan, M.T.; Khan, Z.A.; Anwar, S. Deep Learning-Based Identification System of Weeds and Crops in Strawberry and Pea Fields for a Precision Agriculture Sprayer. Precis. Agric. 2021, 22, 1711–1727. [Google Scholar] [CrossRef]

- Ramirez, W.; Achanccaray, P.; Mendoza, L.F.; Pacheco, M.A.C. Deep Convolutional Neural Networks for Weed Detection in Agricultural Crops Using Optical Aerial Images. In Proceedings of the 2020 IEEE Latin American GRSS & ISPRS Remote Sensing Conference (LAGIRS), Santiago, Chile, 22–26 March 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 133–137. [Google Scholar]

- Milioto, A.; Lottes, P.; Stachniss, C. Real-Time Blob-Wise Sugar Beets Vs Weeds Classification For Monitoring Fields Using Convolutional Neural Networks. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 4, 41–48. [Google Scholar] [CrossRef]

- Anul Haq, M. CNN Based Automated Weed Detection System Using UAV Imagery. Comput. Syst. Sci. Eng. 2022, 42, 837–849. [Google Scholar] [CrossRef]

- Bah, M.D.; Hafiane, A.; Canals, R. Deep Learning with Unsupervised Data Labeling for Weed Detection in Line Crops in UAV Images. Remote Sens. 2018, 10, 1690. [Google Scholar] [CrossRef]

- Andy Lease, B.; Wong, W.; Gopal, L.; Chiong, W.R. Weed Pixel Level Classification Based on Evolving Feature Selection on Local Binary Pattern With Shallow Network Classifier. IOP Conf. Ser. Mater. Sci. Eng. 2020, 943, 012001. [Google Scholar] [CrossRef]

- Dhal, S.B.; Bagavathiannan, M.; Braga-Neto, U.; Kalafatis, S. Nutrient Optimization for Plant Growth in Aquaponic Irrigation Using Machine Learning for Small Training Datasets. Artif. Intell. Agric. 2022, 6, 68–76. [Google Scholar] [CrossRef]

- Ennaji, O.; Vergütz, L.; El Allali, A. Machine Learning in Nutrient Management: A Review. Artif. Intell. Agric. 2023, 9, 1–11. [Google Scholar] [CrossRef]

- Bera, A.; Bhattacharjee, D.; Krejcar, O. PND-Net: Plant Nutrition Deficiency and Disease Classification Using Graph Convolutional Network. Sci. Rep. 2024, 14, 15537. [Google Scholar] [CrossRef]

- Abdalla, A.; Cen, H.; Wan, L.; Mehmood, K.; He, Y. Nutrient Status Diagnosis of Infield Oilseed Rape via Deep Learning-Enabled Dynamic Model. IEEE Trans. Ind. Inform. 2021, 17, 4379–4389. [Google Scholar] [CrossRef]

- Taha, M.F.; Abdalla, A.; ElMasry, G.; Gouda, M.; Zhou, L.; Zhao, N.; Liang, N.; Niu, Z.; Hassanein, A.; Al-Rejaie, S.; et al. Using Deep Convolutional Neural Network for Image-Based Diagnosis of Nutrient Deficiencies in Plants Grown in Aquaponics. Chemosensors 2022, 10, 45. [Google Scholar] [CrossRef]

- Taha, M.F.; Mao, H.; Wang, Y.; ElManawy, A.I.; Elmasry, G.; Wu, L.; Memon, M.S.; Niu, Z.; Huang, T.; Qiu, Z. High-Throughput Analysis of Leaf Chlorophyll Content in Aquaponically Grown Lettuce Using Hyperspectral Reflectance and RGB Images. Plants 2024, 13, 392. [Google Scholar] [CrossRef] [PubMed]

- Kou, J.; Duan, L.; Yin, C.; Ma, L.; Chen, X.; Gao, P.; Lv, X. Predicting Leaf Nitrogen Content in Cotton with UAV RGB Images. Sustainability 2022, 14, 9259. [Google Scholar] [CrossRef]

- Yu, X.; Lu, H.; Liu, Q. Deep-Learning-Based Regression Model and Hyperspectral Imaging for Rapid Detection of Nitrogen Concentration in Oilseed Rape (Brassica napus L.) Leaf. Chemom. Intell. Lab. Syst. 2018, 172, 188–193. [Google Scholar] [CrossRef]

- Anami, B.S.; Malvade, N.N.; Palaiah, S. Deep Learning Approach for Recognition and Classification of Yield Affecting Paddy Crop Stresses Using Field Images. Artif. Intell. Agric. 2020, 4, 12–20. [Google Scholar] [CrossRef]

- Thompson, L.J.; Ferguson, R.B.; Kitchen, N.; Frazen, D.W.; Mamo, M.; Yang, H.; Schepers, J.S. Model and Sensor-Based Recommendation Approaches for In-Season Nitrogen Management in Corn. Agron. J. 2015, 107, 2020–2030. [Google Scholar] [CrossRef]

- Chlingaryan, A.; Sukkarieh, S.; Whelan, B. Machine Learning Approaches for Crop Yield Prediction and Nitrogen Status Estimation in Precision Agriculture: A Review. Comput. Electron. Agric. 2018, 151, 61–69. [Google Scholar] [CrossRef]

- Kusanur, V.; Chakravarthi, V.S. Using Transfer Learning for Nutrient Deficiency Prediction and Classification in Tomato Plant. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 784–790. [Google Scholar] [CrossRef]

- Song, Y.; Teng, G.; Yuan, Y.; Liu, T.; Sun, Z. Assessment of Wheat Chlorophyll Content by the Multiple Linear Regression of Leaf Image Features. Inf. Process. Agric. 2021, 8, 232–243. [Google Scholar] [CrossRef]

- Sharma, M.; Nath, K.; Sharma, R.K.; Kumar, C.J.; Chaudhary, A. Ensemble Averaging of Transfer Learning Models for Identification of Nutritional Deficiency in Rice Plant. Electronics 2022, 11, 148. [Google Scholar] [CrossRef]

- Ghosal, S.; Blystone, D.; Singh, A.K.; Ganapathysubramanian, B.; Singh, A.; Sarkar, S. An Explainable Deep Machine Vision Framework for Plant Stress Phenotyping. Proc. Natl. Acad. Sci. USA 2018, 115, 4613–4618. [Google Scholar] [CrossRef]

- Sethy, P.K.; Barpanda, N.K.; Rath, A.K.; Behera, S.K. Nitrogen Deficiency Prediction of Rice Crop Based on Convolutional Neural Network. J. Ambient Intell. Humaniz. Comput. 2020, 11, 5703–5711. [Google Scholar] [CrossRef]

- Watchareeruetai, U.; Noinongyao, P.; Wattanapaiboonsuk, C.; Khantiviriya, P.; Duangsrisai, S. Identification of Plant Nutrient Deficiencies Using Convolutional Neural Networks. In Proceedings of the 2018 International Electrical Engineering Congress (iEECON), Krabi, Thailand, 7–9 March 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–4. [Google Scholar]

- Joshi, P.; Das, D.; Udutalapally, V.; Pradhan, M.K.; Misra, S. RiceBioS: Identification of Biotic Stress in Rice Crops Using Edge-as-a-Service. IEEE Sens. J. 2022, 22, 4616–4624. [Google Scholar] [CrossRef]

- Chang, L.; Li, D.; Hameed, M.K.; Yin, Y.; Huang, D.; Niu, Q. Using a Hybrid Neural Network Model DCNN–LSTM for Image-Based Nitrogen Nutrition Diagnosis in Muskmelon. Horticulturae 2021, 7, 489. [Google Scholar] [CrossRef]

- Manoharan, S.; Sariffodeen, B.; Ramasinghe, K.T.; Rajaratne, L.H.; Kasthurirathna, D.; Wijekoon, J.L. Smart Plant Disorder Identification Using Computer Vision Technology. In Proceedings of the 2020 11th IEEE Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON), Vancouver, BC, Canada, 4–7 November 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 445–451. [Google Scholar]

- Azimi, S.; Kaur, T.; Gandhi, T.K. A Deep Learning Approach to Measure Stress Level in Plants Due to Nitrogen Deficiency. Measurement 2021, 173, 108650. [Google Scholar] [CrossRef]

- Yi, J.; Krusenbaum, L.; Unger, P.; Hüging, H.; Seidel, S.J.; Schaaf, G.; Gall, J. Deep Learning for Non-Invasive Diagnosis of Nutrient Deficiencies in Sugar Beet Using RGB Images. Sensors 2020, 20, 5893. [Google Scholar] [CrossRef]

- Ahsan, M.; Eshkabilov, S.; Cemek, B.; Küçüktopcu, E.; Lee, C.W.; Simsek, H. Deep Learning Models to Determine Nutrient Concentration in Hydroponically Grown Lettuce Cultivars (Lactuca sativa L.). Sustainability 2021, 14, 416. [Google Scholar] [CrossRef]

- Akbari, M.; Sabouri, H.; Sajadi, S.J.; Yarahmadi, S.; Ahangar, L. Classification and Prediction of Drought and Salinity Stress Tolerance in Barley Using GenPhenML. Sci. Rep. 2024, 14, 17420. [Google Scholar] [CrossRef]

- Gupta, A.; Kaur, L.; Kaur, G. Drought Stress Detection Technique for Wheat Crop Using Machine Learning. PeerJ Comput. Sci. 2023, 9, e1268. [Google Scholar] [CrossRef]

- Okyere, F.G.; Cudjoe, D.K.; Virlet, N.; Castle, M.; Riche, A.B.; Greche, L.; Mohareb, F.; Simms, D.; Mhada, M.; Hawkesford, M.J. Hyperspectral Imaging for Phenotyping Plant Drought Stress and Nitrogen Interactions Using Multivariate Modeling and Machine Learning Techniques in Wheat. Remote Sens. 2024, 16, 3446. [Google Scholar] [CrossRef]

- Wu, Y.; Jiang, J.; Zhang, X.; Zhang, J.; Cao, Q.; Tian, Y.; Zhu, Y.; Cao, W.; Liu, X. Combining Machine Learning Algorithm and Multi-Temporal Temperature Indices to Estimate the Water Status of Rice. Agric. Water Manag. 2023, 289, 108521. [Google Scholar] [CrossRef]

- Jin, K.; Zhang, J.; Wang, Z.; Zhang, J.; Liu, N.; Li, M.; Ma, Z. Application of Deep Learning Based on Thermal Images to Identify the Water Stress in Cotton under Film-Mulched Drip Irrigation. Agric. Water Manag. 2024, 299, 108901. [Google Scholar] [CrossRef]

- An, J.; Li, W.; Li, M.; Cui, S.; Yue, H. Identification and Classification of Maize Drought Stress Using Deep Convolutional Neural Network. Symmetry 2019, 11, 256. [Google Scholar] [CrossRef]

- Zhuang, S.; Wang, P.; Jiang, B.; Li, M. Learned Features of Leaf Phenotype to Monitor Maize Water Status in the Fields. Comput. Electron. Agric. 2020, 172, 105347. [Google Scholar] [CrossRef]

- Sun, H.; Feng, M.; Xiao, L.; Yang, W.; Wang, C.; Jia, X.; Zhao, Y.; Zhao, C.; Muhammad, S.K.; Li, D. Assessment of Plant Water Status in Winter Wheat (Triticum aestivum L.) Based on Canopy Spectral Indices. PLoS ONE 2019, 14, e0216890. [Google Scholar] [CrossRef]

- Zuo, Z.; Mu, J.; Li, W.; Bu, Q.; Mao, H.; Zhang, X.; Han, L.; Ni, J. Study on the Detection of Water Status of Tomato (Solanum lycopersicum L.) by Multimodal Deep Learning. Front. Plant Sci. 2023, 14, 1094142. [Google Scholar] [CrossRef]

- Li, Z.; Chen, Z.; Cheng, Q.; Fei, S.; Zhou, X. Deep Learning Models Outperform Generalized Machine Learning Models in Predicting Winter Wheat Yield Based on Multispectral Data from Drones. Drones 2023, 7, 505. [Google Scholar] [CrossRef]

- Li, D.; Wu, X. Individualized Indicators and Estimation Methods for Tiger Nut (Cyperus esculentus L.) Tubers Yield Using Light Multispectral UAV and Lightweight CNN Structure. Drones 2023, 7, 432. [Google Scholar] [CrossRef]

- Tanaka, Y.; Watanabe, T.; Katsura, K.; Tsujimoto, Y.; Takai, T.; Tanaka, T.S.T.; Kawamura, K.; Saito, H.; Homma, K.; Mairoua, S.G.; et al. Deep Learning Enables Instant and Versatile Estimation of Rice Yield Using Ground-Based RGB Images. Plant Phenomics 2023, 5, 0073. [Google Scholar] [CrossRef] [PubMed]

- Mia, M.S.; Tanabe, R.; Habibi, L.N.; Hashimoto, N.; Homma, K.; Maki, M.; Matsui, T.; Tanaka, T.S.T. Multimodal Deep Learning for Rice Yield Prediction Using UAV-Based Multispectral Imagery and Weather Data. Remote Sens. 2023, 15, 2511. [Google Scholar] [CrossRef]

- Zhou, S.; Xu, L.; Chen, N. Rice Yield Prediction in Hubei Province Based on Deep Learning and the Effect of Spatial Heterogeneity. Remote Sens. 2023, 15, 1361. [Google Scholar] [CrossRef]

- Sarr, A.B.; Sultan, B. Predicting Crop Yields in Senegal Using Machine Learning Methods. Int. J. Climatol. 2023, 43, 1817–1838. [Google Scholar] [CrossRef]

- Surana, R.; Khandelwal, R. Crop Yield Prediction Using Machine Learning: A Pragmatic Approach. 2024. Available online: https://www.researchgate.net/publication/381910719_Crop_Yield_Prediction_Using_Machine_Learning_A_Pragmatic_Approach (accessed on 10 June 2024).

- Kuradusenge, M.; Hitimana, E.; Hanyurwimfura, D.; Rukundo, P.; Mtonga, K.; Mukasine, A.; Uwitonze, C.; Ngabonziza, J.; Uwamahoro, A. Crop Yield Prediction Using Machine Learning Models: Case of Irish Potato and Maize. Agriculture 2023, 13, 225. [Google Scholar] [CrossRef]

- Maraveas, C.; Konar, D.; Michopoulos, D.K.; Arvanitis, K.G.; Peppas, K.P. Harnessing Quantum Computing for Smart Agriculture: Empowering Sustainable Crop Management and Yield Optimization. Comput. Electron. Agric. 2024, 218, 108680. [Google Scholar] [CrossRef]

- Nigam, A.; Garg, S.; Agrawal, A.; Agrawal, P. Crop Yield Prediction Using Machine Learning Algorithms. In Proceedings of the 2019 Fifth International Conference on Image Information Processing (ICIIP), Shimla, India, 15–17 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 125–130. [Google Scholar]

- Shen, Y.; Mercatoris, B.; Cao, Z.; Kwan, P.; Guo, L.; Yao, H.; Cheng, Q. Improving Wheat Yield Prediction Accuracy Using LSTM-RF Framework Based on UAV Thermal Infrared and Multispectral Imagery. Agriculture 2022, 12, 892. [Google Scholar] [CrossRef]

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean Yield Prediction from UAV Using Multimodal Data Fusion and Deep Learning. Remote Sens. Environ. 2020, 237, 111599. [Google Scholar] [CrossRef]

- Wang, Y.; Zhang, Z.; Feng, L.; Du, Q.; Runge, T. Combining Multi-Source Data and Machine Learning Approaches to Predict Winter Wheat Yield in the Conterminous United States. Remote Sens. 2020, 12, 1232. [Google Scholar] [CrossRef]

- Cao, J.; Zhang, Z.; Luo, Y.; Zhang, L.; Zhang, J.; Li, Z.; Tao, F. Wheat Yield Predictions at a County and Field Scale with Deep Learning, Machine Learning, and Google Earth Engine. Eur. J. Agron. 2021, 123, 126204. [Google Scholar] [CrossRef]

- Yang, W.; Nigon, T.; Hao, Z.; Dias Paiao, G.; Fernández, F.G.; Mulla, D.; Yang, C. Estimation of Corn Yield Based on Hyperspectral Imagery and Convolutional Neural Network. Comput. Electron. Agric. 2021, 184, 106092. [Google Scholar] [CrossRef]

- Nevavuori, P.; Narra, N.; Lipping, T. Crop Yield Prediction with Deep Convolutional Neural Networks. Comput. Electron. Agric. 2019, 163, 104859. [Google Scholar] [CrossRef]

- Chakraborty, M.; Pourreza, A.; Zhang, X.; Jafarbiglu, H.; Shackel, K.A.; DeJong, T. Early Almond Yield Forecasting by Bloom Mapping Using Aerial Imagery and Deep Learning. Comput. Electron. Agric. 2023, 212, 108063. [Google Scholar] [CrossRef]

- Shankar, P.; Johnen, A.; Liwicki, M. Data Fusion and Artificial Neural Networks for Modelling Crop Disease Severity. In Proceedings of the 2020 IEEE 23rd International Conference on Information Fusion (FUSION), Rustenburg, South Africa, 6–9 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–8. [Google Scholar]

- Picon, A.; Alvarez-Gila, A.; Seitz, M.; Ortiz-Barredo, A.; Echazarra, J.; Johannes, A. Deep Convolutional Neural Networks for Mobile Capture Device-Based Crop Disease Classification in the Wild. Comput. Electron. Agric. 2019, 161, 280–290. [Google Scholar] [CrossRef]

- Qiu, J.; Wang, J.; Yao, S.; Guo, K.; Li, B.; Zhou, E.; Yu, J.; Tang, T.; Xu, N.; Song, S.; et al. Going Deeper with Embedded FPGA Platform for Convolutional Neural Network. In Proceedings of the 2016 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 21–23 February 2016; ACM: New York, NY, USA, 2016; pp. 26–35. [Google Scholar]

- Shawahna, A.; Sait, S.M.; El-Maleh, A. FPGA-Based Accelerators of Deep Learning Networks for Learning and Classification: A Review. IEEE Access 2019, 7, 7823–7859. [Google Scholar] [CrossRef]

- Ibrahim, A.; Gebali, F. Compact Hardware Accelerator for Field Multipliers Suitable for Use in Ultra-Low Power IoT Edge Devices. Alex. Eng. J. 2022, 61, 13079–13087. [Google Scholar] [CrossRef]

- Oñate, W.; Sanz, R. Analysis of Architectures Implemented for IIoT. Heliyon 2023, 9, e12868. [Google Scholar] [CrossRef]

- Strukov, D.B.; Snider, G.S.; Stewart, D.R.; Williams, R.S. The Missing Memristor Found. Nature 2008, 453, 80–83. [Google Scholar] [CrossRef]

- Zidan, M.A.; Strachan, J.P.; Lu, W.D. The Future of Electronics Based on Memristive Systems. Nat. Electron. 2018, 1, 22–29. [Google Scholar] [CrossRef]

- Esser, S.K.; Merolla, P.A.; Arthur, J.V.; Cassidy, A.S.; Appuswamy, R.; Andreopoulos, A.; Berg, D.J.; McKinstry, J.L.; Melano, T.; Barch, D.R.; et al. Convolutional Networks for Fast, Energy-Efficient Neuromorphic Computing. Proc. Natl. Acad. Sci. USA 2016, 113, 11441–11446. [Google Scholar] [CrossRef]

- Zeadally, S.; Siddiqui, F.; Baig, Z. 25 Years of Bluetooth Technology. Future Internet 2019, 11, 194. [Google Scholar] [CrossRef]

- Castro, S.; Iñacasha, J.; Mesias, G.; Oñate, W. Prototype Based on a LoRaWAN Network for Storing Multivariable Data, Oriented to Agriculture with Limited Resources. In Proceedings of the Seventh International Congress on Information and Communication Technology, London, UK, 21–24 February 2022; Springer Nature: Singapore, 2023; pp. 245–255. [Google Scholar]

- Lavric, A. LoRa (Long-Range) High-Density Sensors for Internet of Things. J. Sens. 2019, 2019, 3502987. [Google Scholar] [CrossRef]

- Ullah, Z.; Al-Turjman, F.; Mostarda, L. Cognition in UAV-Aided 5G and Beyond Communications: A Survey. IEEE Trans. Cogn. Commun. Netw. 2020, 6, 872–891. [Google Scholar] [CrossRef]

- Yang, Y.; Lin, M.; Lin, Y.; Zhang, C.; Wu, C. A Survey of Blockchain Applications for Management in Agriculture and Livestock Internet of Things. Future Internet 2025, 17, 40. [Google Scholar] [CrossRef]

- Maniah; Abdurachman, E.; Gaol, F.L.; Soewito, B. Survey on Threats and Risks in the Cloud Computing Environment. Procedia Comput. Sci. 2019, 161, 1325–1332. [Google Scholar] [CrossRef]

- Sun, P. Security and Privacy Protection in Cloud Computing: Discussions and Challenges. J. Netw. Comput. Appl. 2020, 160, 102642. [Google Scholar] [CrossRef]

- García-Valls, M.; Escribano-Barreno, J.; García-Muñoz, J. An Extensible Collaborative Framework for Monitoring Software Quality in Critical Systems. Inf. Softw. Technol. 2019, 107, 3–17. [Google Scholar] [CrossRef]

- Zhou, Z.; Chen, X.; Li, E.; Zeng, L.; Luo, K.; Zhang, J. Edge Intelligence: Paving the Last Mile of Artificial Intelligence With Edge Computing. Proc. IEEE 2019, 107, 1738–1762. [Google Scholar] [CrossRef]

- Gao, W.; Zhou, P. Customized High Performance and Energy Efficient Communication Networks for AI Chips. IEEE Access 2019, 7, 69434–69446. [Google Scholar] [CrossRef]

- Cheng, C.; Fu, J.; Su, H.; Ren, L. Recent Advancements in Agriculture Robots: Benefits and Challenges. Machines 2023, 11, 48. [Google Scholar] [CrossRef]

- Panarin, R.N.; Khvorova, L.A. Software Development for Agricultural Tillage Robot Based on Technologies of Machine Intelligence. In Proceedings of the International Conference on High-Performance Computing Systems and Technologies in Scientific Research, Automation of Control and Production, Barnaul, Russia, 20–21 May 2022; Springer: Cham, Switzerland, 2022; pp. 354–367. [Google Scholar]

- Backman, J.; Linkolehto, R.; Lemsalu, M.; Kaivosoja, J. Building a Robot Tractor Using Commercial Components and Widely Used Standards. IFAC-PapersOnLine 2022, 55, 6–11. [Google Scholar] [CrossRef]

- Haibo, L.; Shuliang, D.; Zunmin, L.; Chuijie, Y. Study and Experiment on a Wheat Precision Seeding Robot. J. Robot. 2015, 2015, 696301. [Google Scholar] [CrossRef]

- Ghafar, A.S.A.; Hajjaj, S.S.H.; Gsangaya, K.R.; Sultan, M.T.H.; Mail, M.F.; Hua, L.S. Design and Development of a Robot for Spraying Fertilizers and Pesticides for Agriculture. Mater. Today Proc. 2023, 81, 242–248. [Google Scholar] [CrossRef]

- Terra, F.P.; Nascimento, G.H.D.; Duarte, G.A.; Drews, P.L.J., Jr. Autonomous Agricultural Sprayer Using Machine Vision and Nozzle Control. J. Intell. Robot. Syst. 2021, 102, 38. [Google Scholar] [CrossRef]

- Putu Devira Ayu Martini, N.; Tamami, N.; Husein Alasiry, A. Design and Development of Automatic Plant Robots with Scheduling System. In Proceedings of the 2020 International Electronics Symposium (IES), Surabaya, Indonesia, 29–30 September 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 302–307. [Google Scholar]

- An, Z.; Wang, C.; Raj, B.; Eswaran, S.; Raffik, R.; Debnath, S.; Rahin, S.A. Application of New Technology of Intelligent Robot Plant Protection in Ecological Agriculture. J. Food Qual. 2022, 2022, 1257015. [Google Scholar] [CrossRef]

- Ren, G.; Wu, T.; Lin, T.; Yang, L.; Chowdhary, G.; Ting, K.C.; Ying, Y. Mobile Robotics Platform for Strawberry Sensing and Harvesting within Precision Indoor Farming Systems. J. Field Robot. 2024, 41, 2047–2065. [Google Scholar] [CrossRef]

- Cubero, S.; Marco-Noales, E.; Aleixos, N.; Barbé, S.; Blasco, J. RobHortic: A Field Robot to Detect Pests and Diseases in Horticultural Crops by Proximal Sensing. Agriculture 2020, 10, 276. [Google Scholar] [CrossRef]

- Pooranam, N.; Vignesh, T. A Swarm Robot for Harvesting a Paddy Field. In Nature-Inspired Algorithms Applications; Wiley: Hoboken, NJ, USA, 2021; pp. 137–156. [Google Scholar]

- McCool, C.S.; Beattie, J.; Firn, J.; Lehnert, C.; Kulk, J.; Bawden, O.; Russell, R.; Perez, T. Efficacy of Mechanical Weeding Tools: A Study into Alternative Weed Management Strategies Enabled by Robotics. IEEE Robot. Autom. Lett. 2018, 3, 1184–1190. [Google Scholar] [CrossRef]

- Sembiring, A.; Budiman, A.; Lestari, Y.D. Design and Control of Agricultural Robot for Tomato Plants Treatment and Harvesting. J. Phys. Conf. Ser. 2017, 930, 012019. [Google Scholar] [CrossRef]

- Casseem, M.S.I.S.; Venkannah, S.; Bissessur, Y. Design of a Tomato Harvesting Robot for Agricultural Small and Medium Enterprises (SMEs). In Proceedings of the 2022 IST-Africa Conference (IST-Africa), Virtual, 16–20 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–8. [Google Scholar]

- Maurice, P.; Malaisé, A.; Amiot, C.; Paris, N.; Richard, G.-J.; Rochel, O.; Ivaldi, S. Human Movement and Ergonomics: An Industry-Oriented Dataset for Collaborative Robotics. Int. J. Robot. Res. 2019, 38, 1529–1537. [Google Scholar] [CrossRef]

- Machleb, J.; Peteinatos, G.G.; Kollenda, B.L.; Andújar, D.; Gerhards, R. Sensor-Based Mechanical Weed Control: Present State and Prospects. Comput. Electron. Agric. 2020, 176, 105638. [Google Scholar] [CrossRef]

- Zhang, K.; Lammers, K.; Chu, P.; Li, Z.; Lu, R. System Design and Control of an Apple Harvesting Robot. Mechatronics 2021, 79, 102644. [Google Scholar] [CrossRef]

- Giampieri, F.; Mazzoni, L.; Cianciosi, D.; Alvarez-Suarez, J.M.; Regolo, L.; Sánchez-González, C.; Capocasa, F.; Xiao, J.; Mezzetti, B.; Battino, M. Organic vs. Conventional Plant-Based Foods: A Review. Food Chem. 2022, 383, 132352. [Google Scholar] [CrossRef] [PubMed]

- Gonzalez-de-Santos, P.; Ribeiro, A.; Fernandez-Quintanilla, C.; Lopez-Granados, F.; Brandstoetter, M.; Tomic, S.; Pedrazzi, S.; Peruzzi, A.; Pajares, G.; Kaplanis, G.; et al. Fleets of Robots for Environmentally-Safe Pest Control in Agriculture. Precis. Agric. 2017, 18, 574–614. [Google Scholar] [CrossRef]

- Floreano, D.; Wood, R.J. Science, Technology and the Future of Small Autonomous Drones. Nature 2015, 521, 460–466. [Google Scholar] [CrossRef] [PubMed]

- Belhajem, I.; Ben Maissa, Y.; Tamtaoui, A. A Robust Low Cost Approach for Real Time Car Positioning in a Smart City Using Extended Kalman Filter and Evolutionary Machine Learning. In Proceedings of the 2016 4th IEEE International Colloquium on Information Science and Technology (CiSt), Tangier, Morocco, 24–26 October 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 806–811. [Google Scholar]

- Emmi, L.; Gonzalez-de-Soto, M.; Pajares, G.; Gonzalez-de-Santos, P. New Trends in Robotics for Agriculture: Integration and Assessment of a Real Fleet of Robots. Sci. World J. 2014, 2014, 404059. [Google Scholar] [CrossRef] [PubMed]

- Concepcion, R.; Josh Ramirez, T.; Alejandrino, J.; Janairo, A.G.; Jahara Baun, J.; Francisco, K.; Relano, R.-J.; Enriquez, M.L.; Grace Bautista, M.; Vicerra, R.R.; et al. A Look at the Near Future: Industry 5.0 Boosts the Potential of Sustainable Space Agriculture. In Proceedings of the 2022 IEEE 14th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management (HNICEM), Boracay Island, Philippines, 1–4 December 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–6. [Google Scholar]

- Polymeni, S.; Plastras, S.; Skoutas, D.N.; Kormentzas, G.; Skianis, C. The Impact of 6G-IoT Technologies on the Development of Agriculture 5.0: A Review. Electronics 2023, 12, 2651. [Google Scholar] [CrossRef]

- Humayun, M. Industrial Revolution 5.0 and the Role of Cutting Edge Technologies. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 605. [Google Scholar] [CrossRef]

- Rietsche, R.; Dremel, C.; Bosch, S.; Steinacker, L.; Meckel, M.; Leimeister, J.-M. Quantum Computing. Electron. Mark. 2022, 32, 2525–2536. [Google Scholar] [CrossRef]

- Siddiquee, K.N.-A.; Islam, M.S.; Singh, N.; Gunjan, V.K.; Yong, W.H.; Huda, M.N.; Naik, D.S.B. Development of Algorithms for an IoT-Based Smart Agriculture Monitoring System. Wirel. Commun. Mob. Comput. 2022, 2022, 7372053. [Google Scholar] [CrossRef]

| Version | Features | Focus and Major Issues | Major Driving Factors | Information and Cybersecurity Issues |
|---|---|---|---|---|
| Agriculture 1.0 | Traditional agriculture dominated by human and animal power | Human-centric, unsustainable, low performance, and not resilient | N/A | N/A |
| Agriculture 2.0 | Agricultural mechanization | Machine-focused; machinery and chemical usage; unsustainable and not resilient | Industrial revolution | N/A |
| Agriculture 3.0 | Automated agriculture with rapid development | Technology-focused; computers and programs; unsustainable, not resilient, and subject to cybersecurity issues | Invention of computers, robotics, and programming | Systems security, network security, device security |
| Agriculture 4.0 | Smart agriculture featuring AI and IoT | Automation-focused; smart systems/devices and renewable energies; sustainable and efficient but not resilient; cybersecurity issues | Introduction of AI, IoT, cloud computing, and big data | Data security, systems security, network security, device security, cloud security |
| Agriculture 5.0 | Human-focused agriculture featuring AI, IoT, robotics, and human–machine interaction | Human-centered, highly sustainable, resilient, and oriented toward societal well-being; cybersecurity issues | Chronic social issues, the need for resilience, increasing consumer demands, and unsustainable production | Data security, systems security, network security, device security, cloud security, and human–machine security |

| Application | ML Algorithm | Dataset Type | Acc. (%) | Ref. |
|---|---|---|---|---|
| Cotton diseases | CNN-BiLSTM | RGB | 89.7 | [53] |
| Disease detection | CNN | RGB | 94 | [56] |
| Diagnosing plant diseases | CNN | RGB | 90 | [62] |
| Adzuki bean rust disease | CNN, ResNet-ViT, RMT | Multi-source | 99 | [63] |
| Cucumber downy mildew prediction | CNN-LSTM | RGB | 91 | [64] |
| Diagnosing plant diseases | SVM and CNN | RGB | 99 | [54] |
| Detection of plant diseases | ML and DL models | RGB | 98 | [55] |
| Rice diseases | GA and CNN | RGB | 95 | [65] |
| Tomato leaf disease | CNN | RGB | 99 | [57] |
| Tomato gray mold, cucumber downy mold, and cucumber powdery mildew spores | SVM | Diffraction–polarized fingerprint characteristics | 95 | [66] |
| Brown spot detection in rice | CNN | RGB | 97 | [43] |
| Detection of apple diseases | CNN | RGB | 97 | [67] |
| Detection of cassava diseases | CNN | RGB | 98 | [68] |
| Wheat diseases | CNN | RGB | 97 | [69] |
| Detection of Fusarium head blight | CNN | Hyperspectral | 75 | [60] |
| Tomato spotted wilt virus | CNN | Hyperspectral | 96 | [60] |
| Diseases and pests in tomatoes | ANN for regression, SVM | Spectral | 99 | [70] |
| Powdery mildew in wheat | PLSR, SVM, RF | Hyperspectral | 85 | [71] |
| Northern leaf blight in maize | CNNs | RGB | 96 | [72] |
| Tomato water stress | DNNs | RGB | Performed well | [73] |
| Tomato diseases and pests | Faster R-CNN, R-FCN, SSD, ResNet | RGB | 90 | [74] |
| Disease in eggplant | CNN | RGB | 99 | [75] |
| Plant disease identification | CNN | RGB | 99 | [76] |
| Detection of grapevine esca disease | SIFT encoding and CNN | RGB | 90 | [77] |
| Grapevine yellows symptoms | CNN | RGB | 99 | [78] |
| Plant disease | CNN | RGB | 99 | [79] |
| Late blight in potato | RF and PLS-DA | Spectral | 83 | [80] |
| Laurel wilt | DT and MLP | Spectral | 100 | [81] |
| Bacterial spots in tomato | PCA and k-NN | Spectral | 100 | [82] |
| Anthracnose crown rot in strawberry | FDA, SDA, and k-NN | Spectral | 73 | [83] |
| Rhizoctonia root and crown rot in sugar beet | PLS, RF, k-NN, and SVM | Hyperspectral | 72 | [84] |
| Potato virus Y | CNN | Hyperspectral | 88 | [85] |
| Tobacco mosaic virus in tobacco | PLS-DA, RF, SVM, BPNN, and ELM | Hyperspectral | 95 | [86] |
| Bacterial blight in coffee | RF, SVM, and Naïve Bayes | Multispectral and thermal | 75 | [87] |
| Xylella fastidiosa infection in olive | LDA, SVM, RBF, and ensemble classifier | Hyperspectral and thermal | 80 | [88] |
| Fall armyworm (Spodoptera frugiperda) in cotton | Multiple ML algorithms | Hyperspectral | 91 | [89] |
| Pest monitoring | DPeNet, Faster R-CNN, SSD, and YOLOv3 | RGB | 93 | [59] |
| Citrus pest | EfficientNet-b0 | RGB | 97 | [60] |
| Spotted spider mite in cotton | SVM | Multispectral | 85 | [90] |
| Pest species | CNN | RGB | 75 | [91] |
| Pest species in insect images | CNN | RGB | 89 | [92] |

| Crop | ML Algorithm | Dataset Type | Acc. (%) | Ref. |
|---|---|---|---|---|
| Peanuts | YOLOv4-Tiny | RGB | 96.7 | [101] |
| Sunflower | U-Net | Multispectral | 90 | [102] |
| Soybean | ML | Thermal | 82 | [103] |
| Tobacco, tomato, and sorghum | PlantNet | High-precision 3D laser | >95 | [104] |
| Carrot | Ensemble ANN with 15 units | Multispectral | 83.5 | [105] |
| Chilli | RF and SVM | RGB | 96 and 94 | [97] |
| Rice | SVM | RGB | 73 | [106] |
| Wheat | Wheat-V2 | Spectral | >96.7 | [107] |
| Tobacco | Faster R-CNN and YOLOv5 | RGB | 98.43 and 94.45 | [108] |
| Pea and strawberry | Faster R-CNN | RGB | 95.3 | [109] |
| Sugar beet and oilseed | Encoder–decoder network with atrous separable convolution | RGB | 96.12 | [110] |
| Soybean | CNN | RGB and spectral | 99.66 | [111] |
| Soybean | CNNLVQ | RGB | 99.44 | [112] |
| Bean and spinach | RF | RGB | 96.99 | [113] |
| Carrot | ANN | Multispectral | 83.5 | [114] |

| Crop | Dataset | ML Algorithm | Detected Nutrient | Ref. |
|---|---|---|---|---|
| Banana, coffee, and potatoes | RGB | CNN and graph convolutional network (GCN) | Br, Ca, Fe, Mn, Mg, N, K, P, and other deficiencies | [117] |
| Oilseed rape | RGB | CNN-LSTM | N-P-K | [118] |
| Cotton | RGB | CNN-based regression | Nitrogen | [121] |
| Lettuce | RGB | CNN | NPK | [119] |
| Lettuce | Spectral data and RGB | SVM, PLSR, BPNN, RF, and AutoML | Chlorophyll content | [120] |
| Tomato | RGB | Pre-trained deep learning model | Ca and Mg | [126] |
| Wheat | RGB | BP-ANN, KNN, and stepwise-based ridge regression (SBRR) | Chlorophyll content | [127] |
| Rice | RGB | Ensemble of transfer learning (TL) architectures | Multiple deficiencies | [128] |
| Soybean | RGB | Deep CNN framework | Multiple stresses and potassium deficiency | [129] |
| Rice | RGB | CNN with SVM as the best-performing classifier | Nitrogen | [130] |
| Black gram | RGB | Image segmentation and CNN | Multiple deficiencies | [131] |
| Paddy | RGB | Deep CNN with pre-trained VGG16 | Various classes of biotic and abiotic stress | [123] |
| Rice | RGB | CNN with edge-as-a-service | Biotic stress | [132] |
| Muskmelon | RGB | CNN, BPNN, DCNN, LSTM | Nitrogen | [133] |
| Guava, groundnut | RGB | CNN, RCNN | N-P-K | [134] |
| Sorghum | RGB | Multilayered deep learning | Nitrogen | [135] |
| Sugar beet | RGB | CNN | N, P, K, calcium, and fertilization status | [136] |
| Lettuce | RGB | CNN | Nitrogen | [137] |
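Several entries above, such as the rice study [128], combine multiple transfer learning models into an ensemble. One common way to do this, assumed here for illustration rather than taken from the cited work, is soft voting: average the per-class probabilities produced by each model and pick the class with the highest mean. The class labels and probability vectors below are hypothetical.

```python
from typing import Sequence


def ensemble_average(prob_sets: Sequence[Sequence[float]]) -> list[float]:
    """Soft voting: average the per-class probabilities of several models."""
    if not prob_sets:
        raise ValueError("need at least one model output")
    n_classes = len(prob_sets[0])
    if any(len(p) != n_classes for p in prob_sets):
        raise ValueError("all models must score the same set of classes")
    return [sum(p[c] for p in prob_sets) / len(prob_sets) for c in range(n_classes)]


def predict(prob_sets: Sequence[Sequence[float]], labels: Sequence[str]) -> tuple[str, list[float]]:
    """Return the label with the highest averaged probability, plus the averages."""
    avg = ensemble_average(prob_sets)
    best = max(range(len(avg)), key=avg.__getitem__)
    return labels[best], avg


# Hypothetical softmax outputs of three fine-tuned CNNs for one rice leaf image.
labels = ["healthy", "nitrogen", "phosphorus", "potassium"]
outputs = [
    [0.10, 0.60, 0.20, 0.10],
    [0.05, 0.40, 0.45, 0.10],  # this model alone would pick "phosphorus"
    [0.15, 0.55, 0.20, 0.10],
]
label, avg = predict(outputs, labels)
print(label, [round(x, 3) for x in avg])
```

Here the second model on its own would predict phosphorus deficiency, but the averaged probabilities favor nitrogen deficiency. Averaging probabilities rather than counting hard votes lets a confident model outweigh a marginal disagreement.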

| Crop | ML Algorithm | Dataset Type | Acc. or Metric | Ref. |
|---|---|---|---|---|
| Barley | ANN | Phenotype and genotype features | R² = 0.99 | [138] |
| Wheat | RF | Fluorescence | 91% | [139] |
| Maize | ResNet50 | RGB | 98% | [143] |
| Wheat | SVM, RF, and DNN | Hyperspectral | 94% | [140] |
| Rice | RF | Thermal | R² = 0.78 | [141] |
| Maize | CNN+SVM | RGB | 94% | [144] |
| Rice | ML models | Spectral | R² = 0.87 | [145] |
| Cotton | Multiple CNN models | Thermal | F1 = 0.99 | [142] |
| Tomato | VGG-16 and ResNet-50 | RGB, NIR, and depth images | 99.18% | [146] |
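The water-status studies above report either accuracy or F1 score (e.g., F1 = 0.99 for cotton [142]), and the two are not interchangeable. The sketch below, using hypothetical labels, shows why: on an imbalanced set where a classifier never flags stressed plants, accuracy still looks high while the macro-averaged F1 exposes the failure. The function names are illustrative, not taken from any cited study.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_for_class(y_true, y_pred, cls):
    """F1 score (harmonic mean of precision and recall) for one class."""
    tp = sum(t == cls and p == cls for t, p in zip(y_true, y_pred))
    fp = sum(t != cls and p == cls for t, p in zip(y_true, y_pred))
    fn = sum(t == cls and p != cls for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0  # no true positives: precision or recall is zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 over all classes that appear."""
    classes = sorted(set(y_true) | set(y_pred))
    return sum(f1_for_class(y_true, y_pred, c) for c in classes) / len(classes)


# Hypothetical: 8 well-watered and 2 water-stressed plants,
# and a classifier that labels every plant "well-watered".
y_true = ["well-watered"] * 8 + ["stressed"] * 2
y_pred = ["well-watered"] * 10
print(f"accuracy = {accuracy(y_true, y_pred):.2f}, macro F1 = {macro_f1(y_true, y_pred):.2f}")
```

Accuracy comes out at 0.80 even though no stressed plant is detected, while macro F1 drops to about 0.44. This is one reason why scores reported under different metrics in the table should not be ranked against each other directly.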

| Crop | ML Algorithm | Dataset Type | Acc. (%) or Metric | Ref. |
|---|---|---|---|---|
| Wheat | Traditional ML and 1D-CNN | Multispectral | R² = 0.703 | [147] |
| Tiger nut | SqueezeNet | Multispectral | 78 | [148] |
| Rice | CNN | RGB | 68 | [149] |
| Rice | CNN (AlexNet) | Meteorological | 86 | [150] |
| Rice | CNN-LSTM | Meteorological | 93.4 | [151] |
| Multiple | LR, NB, and RF | Meteorological | 92.81 | [156] |
| Peanut, maize, millet, and sorghum | SVM, RF, and ANN | Meteorological | R² ≥ 0.50 | [152] |
| Multiple | RF, AdaBoost, Gradient Boosting, and SVM | Meteorological | 82 | [153] |
| Irish potatoes and maize | RF and SVM | Meteorological | 87.5 | [154] |
| Wheat | LSTM-RF | Multispectral | 71 | [157] |
| Soybean | PLSR, RF, SVM, DNN-F1, and DNN-F2 | RGB, multispectral, and thermal | 72 | [158] |
| Wheat | OLS, LASSO, SVM, RF, AdaBoost, and DNN | Satellite images, climate data, soil maps, and historical yield records | 84 | [159] |
| Wheat | RF, DNN, 1D-CNN, and LSTM | Climate, satellite, soil properties, and spatial information data | 90 | [160] |
| Corn | 1D-CNN | Hyperspectral | 75.50 | [161] |
| Wheat and barley | CNN | RGB | MAPE = 8.8% | [162] |
| Almond | CNN (U-Net) | RGB | 78 | [163] |
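Yield prediction is a regression task, so several studies above report the coefficient of determination (R²) or the mean absolute percentage error (MAPE) instead of a classification accuracy. The sketch below computes both from hypothetical observed and predicted yields. It assumes R² is defined as 1 − SS_res/SS_tot; some papers instead report the squared Pearson correlation, which can differ for biased predictions, so check each cited study's definition before comparing values.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(y_true) != len(y_pred) or len(y_true) < 2:
        raise ValueError("need at least two paired observations")
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("observed values have zero variance")
    return 1 - ss_res / ss_tot


def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent."""
    if any(t == 0 for t in y_true):
        raise ValueError("MAPE is undefined when an observed value is zero")
    return 100 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical plot-level yields in t/ha: observed vs. model predictions.
observed = [6.0, 7.0, 8.0, 9.0]
predicted = [6.5, 6.8, 8.4, 8.7]
print(f"R2 = {r_squared(observed, predicted):.3f}, MAPE = {mape(observed, predicted):.2f}%")
```

For these values, R² is 0.892 and MAPE is about 4.9%. A higher R² and a lower MAPE both indicate a better fit, but MAPE weights errors on low-yield plots more heavily because each error is divided by the observed value.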

| Application | Performance | Ref. |
|---|---|---|
| Tilling | Performed very well | [184] |
| Tractor | Performed very well | [185] |
| Wheat precision seeding | Qualified seeding rates exceeded 93% | [186] |
| Spraying fertilizers and pesticides | Performed very well | [187] |
| Spraying fertilizers and pesticides | Detects planting rows and can be used to retrofit conventional boom sprayers | [188] |
| Planting | Performed well | [189] |
| Plant protection | Path-planning accuracy of up to 97.8% | [190] |
| Strawberry sensing and harvesting | Trials showed an overall success rate of 78% on harvestable strawberries, with a 23% damage rate | [191] |
| Pest and disease inspection | Detection rate of 66.4% for laboratory images and 59.8% for field images | [192] |
| Paddy harvesting | Improved the harvesting process | [193] |
| Weed detection and classification | The system deployed on AgBot II was effective in controlling all weeds | [194] |
| Tomato treatment and harvesting | Performed well | [195] |
| Tomato harvesting | Performed well | [196] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Taha, M.F.; Mao, H.; Zhang, Z.; Elmasry, G.; Awad, M.A.; Abdalla, A.; Mousa, S.; Elwakeel, A.E.; Elsherbiny, O. Emerging Technologies for Precision Crop Management Towards Agriculture 5.0: A Comprehensive Overview. Agriculture 2025, 15, 582. https://doi.org/10.3390/agriculture15060582
