Correction: Liao et al. A Lightweight Cotton Verticillium Wilt Hazard Level Real-Time Assessment System Based on an Improved YOLOv10n Model. Agriculture 2024, 14, 1617

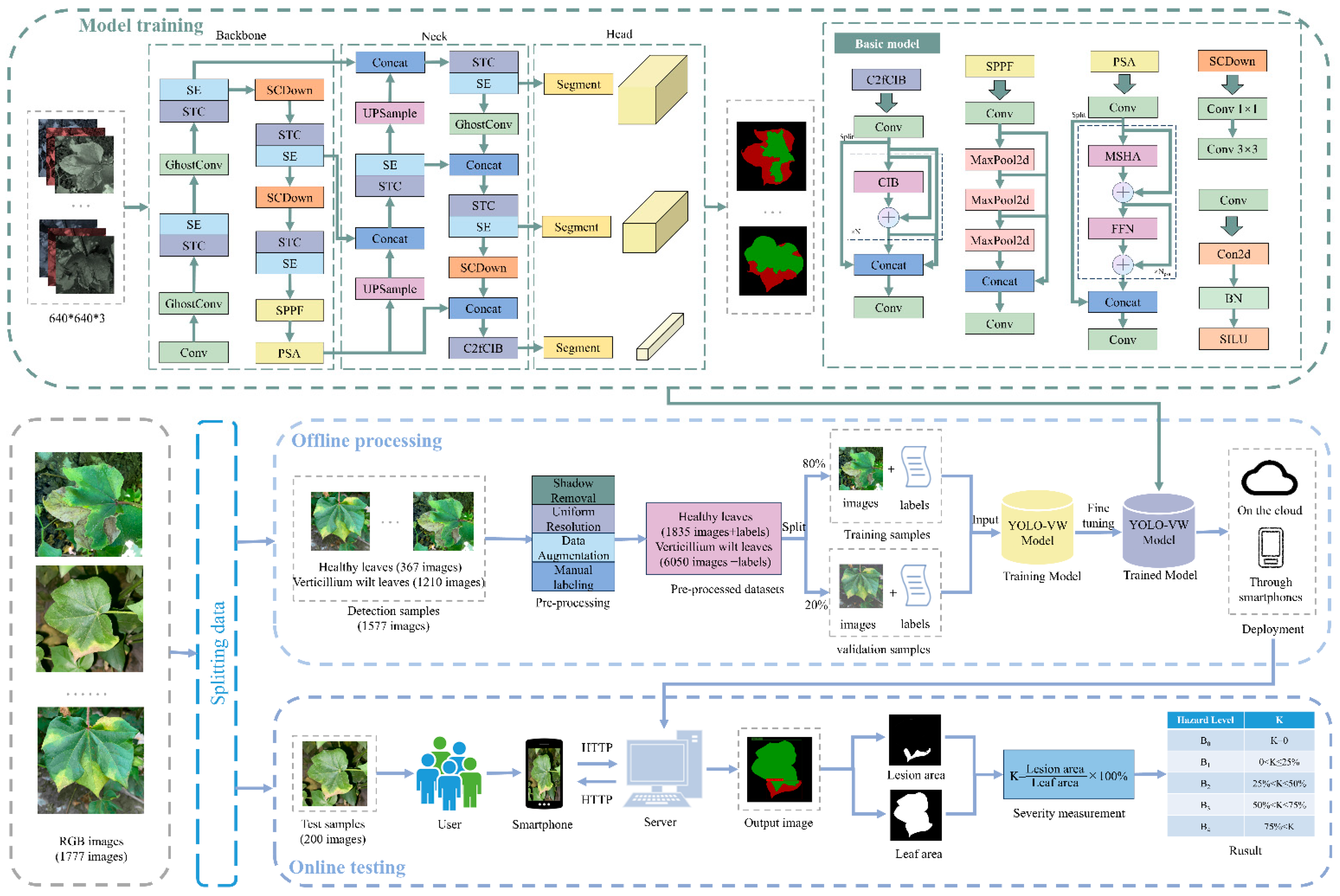

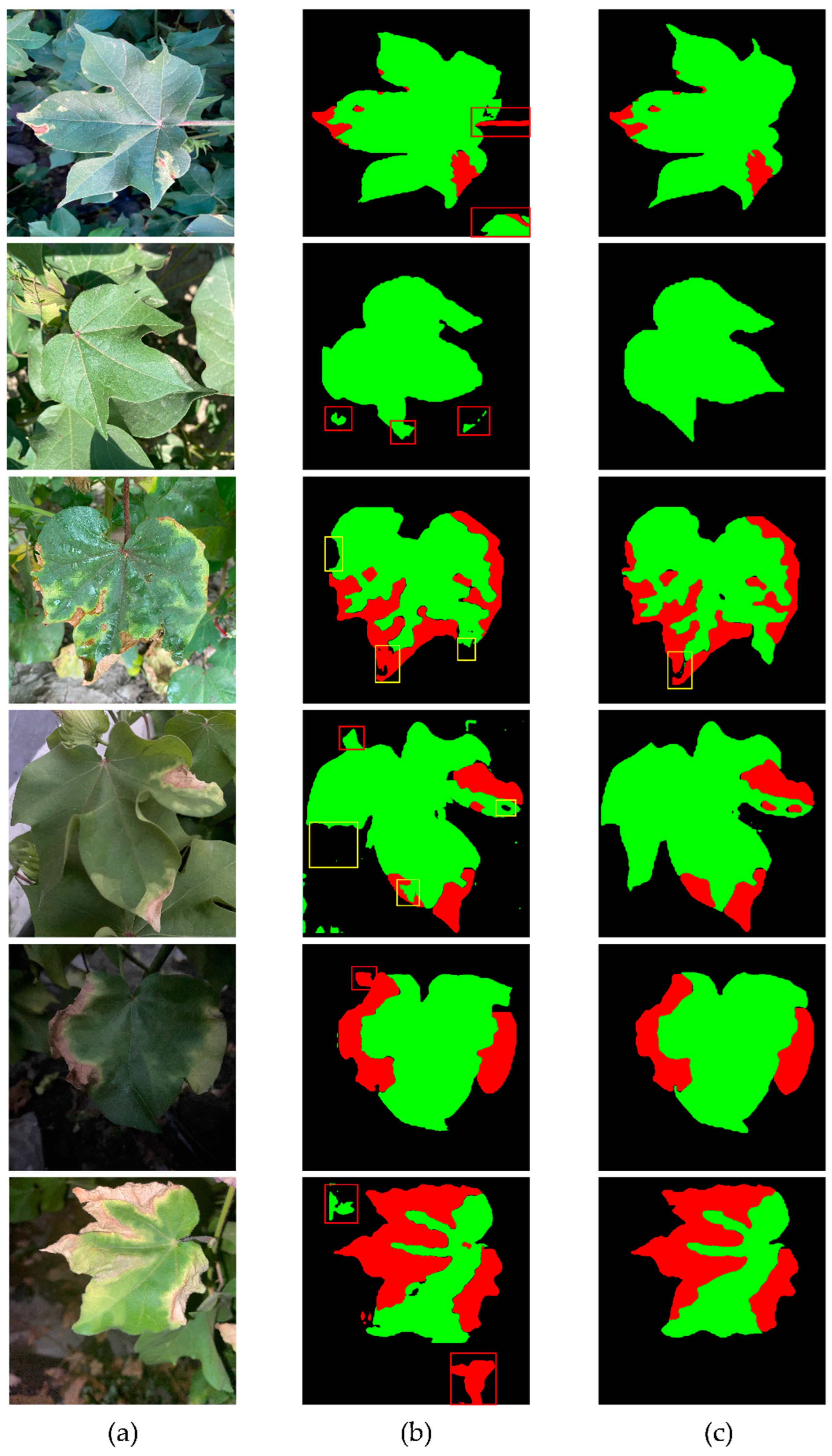

- Accuracy: YOLO-VW achieved a segmentation accuracy mAP(M) of 89.2%, which represents improvements of 3.9%, 2.9%, 4.2%, 2.9%, 12.8%, 1.9%, and 2.4% compared to YOLOv5s, YOLOv7-tiny, YOLOv8n, YOLOv9t, SOLOv2, Mask R-CNN, and the baseline model YOLOv10n, respectively.

- Lightweight Design: In terms of the weight, parameters, and FLOPs, YOLO-VW was compressed to 25.6%, 21.5%, and 30.4% of YOLOv5s; 29.5%, 24.8%, and 33.9% of YOLOv7-tiny; 56.6%, 48.8%, and 65% of YOLOv8n; 51.8%, 46.6%, and 17.8% of YOLOv9t; 2%, 3.4%, and 4% of SOLOv2; and 2.1%, 3.6%, and 3.8% of Mask R-CNN, respectively. Furthermore, relative to the YOLOv10n baseline model, YOLO-VW was compressed to 64.4%, 56%, and 66.1% of the original size on these three metrics, respectively.

- Detection Speed: YOLO-VW achieved 157.98 FPS, an improvement of 21.37 FPS over the original model. This reduction in computation time is attributable to the lightweight modules.

- The YOLO-VW model, which incorporates improvements such as STC, GhostConv, SE, and SGD, achieved higher detection accuracy while reducing the number of model parameters and the computation.

- The results showed that the F1 and mAP(M) of the YOLO-VW model were 88.89% and 89.2%, increases of 3.91% and 2.4%, respectively, over the YOLOv10n model. The number of parameters and the FLOPs were also reduced to 1.59 M and 7.8 G, respectively. Compared with the YOLOv5s, YOLOv7-tiny, YOLOv8n, YOLOv9t, SOLOv2, and Mask R-CNN models, the YOLO-VW model obtained the greatest accuracy in CVW segmentation with the smallest model size and the fewest parameters.

- The lightweight CVW hazard level assessment system was deployed on a client-server platform, and an Android smartphone app was developed for testing the YOLO-VW and YOLOv10n models. The YOLO-VW model showed a processing time of 2.42 s per image and an accuracy of 85.5%, which was 15% higher than that of the YOLOv10n model.
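The relative figures above can be turned back into absolute numbers with simple arithmetic. The following is a minimal sketch, not part of the published correction: the helper names `implied_baseline` and `frame_time_ms` are hypothetical, and the YOLOv10n values it prints are derived from the reported percentages and differences (and therefore carry their rounding error) rather than taken from the article.

```python
# Sketch: back out the YOLOv10n baseline values implied by the reported
# YOLO-VW figures. Derived values are approximate, not reported data.

def implied_baseline(compressed_value: float, ratio_percent: float) -> float:
    """Return the original size given the compressed size and the
    compressed size expressed as a percentage of the original."""
    return compressed_value / (ratio_percent / 100.0)


def frame_time_ms(fps: float) -> float:
    """Convert frames per second to milliseconds per frame."""
    return 1000.0 / fps


# Figures reported in the text for YOLO-VW.
YOLO_VW_PARAMS_M = 1.59   # parameters, millions
YOLO_VW_FLOPS_G = 7.8     # FLOPs, giga
YOLO_VW_FPS = 157.98      # detection speed
FPS_GAIN = 21.37          # improvement over the original model

# YOLO-VW is stated to be 56% (parameters) and 66.1% (FLOPs) of YOLOv10n.
baseline_params_m = implied_baseline(YOLO_VW_PARAMS_M, 56.0)
baseline_flops_g = implied_baseline(YOLO_VW_FLOPS_G, 66.1)
baseline_fps = YOLO_VW_FPS - FPS_GAIN

if __name__ == "__main__":
    print(f"Implied YOLOv10n parameters: {baseline_params_m:.2f} M")
    print(f"Implied YOLOv10n FLOPs:      {baseline_flops_g:.2f} G")
    print(f"Implied YOLOv10n speed:      {baseline_fps:.2f} FPS")
    print(f"YOLO-VW time per frame:      {frame_time_ms(YOLO_VW_FPS):.2f} ms")
    print(f"YOLOv10n time per frame:     {frame_time_ms(baseline_fps):.2f} ms")
```

Because FPS and per-frame time are reciprocals, a gain of 21.37 FPS corresponds to a saving of roughly one millisecond per frame at these speeds.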

Reference

- Liao, J.; He, X.; Liang, Y.; Wang, H.; Zeng, H.; Luo, X.; Li, X.; Zhang, L.; Xing, H.; Zang, Y. A Lightweight Cotton Verticillium Wilt Hazard Level Real-Time Assessment System Based on an Improved YOLOv10n Model. Agriculture 2024, 14, 1617.

| Model | Optimizer | P (%) | R (%) | F1 (%) | mAP(M)@0.5 (%) | Weight (MB) | Parameters (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|
| YOLO-VW | Adam | 90.4 | 85.3 | 87.78 | 88.3 | 3.70 | 1.59 | 7.8 |
| YOLO-VW | AdamW | 89.0 | 85.0 | 86.95 | 88.3 | 3.70 | 1.59 | 7.8 |
| YOLO-VW | SGD | 92.1 | 85.9 | 88.89 | 89.2 | 3.69 | 1.59 | 7.8 |
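As a consistency check on the table, the F1 column can be recomputed from the precision and recall columns using the standard definition F1 = 2PR / (P + R). The sketch below is illustrative only; the function name `f1_score` and the `ROWS` dictionary are hypothetical, and the numbers are copied from the table.

```python
# Sketch: recompute F1 from the P and R columns of the optimizer table
# and compare against the reported F1 values.

def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2.0 * precision * recall / (precision + recall)


# optimizer -> (P %, R %, reported F1 %), copied from the table.
ROWS = {
    "Adam": (90.4, 85.3, 87.78),
    "AdamW": (89.0, 85.0, 86.95),
    "SGD": (92.1, 85.9, 88.89),
}

if __name__ == "__main__":
    for optimizer, (p, r, reported) in ROWS.items():
        computed = f1_score(p, r)
        print(f"{optimizer:>5}: computed F1 = {computed:.2f}, reported = {reported:.2f}")
```

For all three optimizers the recomputed value agrees with the reported F1 to two decimal places.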

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liao, J.; He, X.; Liang, Y.; Wang, H.; Zeng, H.; Luo, X.; Li, X.; Zhang, L.; Xing, H.; Zang, Y. Correction: Liao et al. A Lightweight Cotton Verticillium Wilt Hazard Level Real-Time Assessment System Based on an Improved YOLOv10n Model. Agriculture 2024, 14, 1617. Agriculture 2025, 15, 911. https://doi.org/10.3390/agriculture15090911
