An AUV-Assisted Data Gathering Scheme Based on Deep Reinforcement Learning for IoUT

Abstract

1. Introduction

- An AUV-assisted autonomous underwater data collection system for the IoUT is designed. Specifically, a realistic scenario is built to describe the behavior of the AUV and the sensor nodes in challenging underwater environments.

- For the AUV path planning problem, DRL is used to dynamically adjust the AUV’s cruise path without prior knowledge of the environment model. In addition, the scheme combines multiple dimensions, such as the location of the nodes, the value of the data held in the nodes, and the AUV status, to plan a more reasonable data collection path for the AUV.

2. Related Work

3. System Model

3.1. Network Architecture

- Sensor Node: The sensor node performs underwater surveillance tasks and sends the monitored data to the central node to which it belongs. Sensor nodes are assumed to be static in the underwater environment and are constrained by limited battery resources, because they are difficult to replace or recharge. Based on existing time synchronization techniques [24] and positioning schemes [25], the sensor nodes are assumed to be clock synchronized and the position of each sensor is assumed to be known.

- Central Node: The central node acts as the data collection center of its cluster and transmits the aggregated data to the AUV, so it carries a high traffic load. If the central node kept using underwater acoustic communication for this transfer, the high power consumption (underwater acoustic communication usually consumes power at the watt level [26]) would quickly exhaust its limited battery energy, and the entire network would be paralyzed. In addition, because underwater acoustic communication has a limited data rate (tens of kbps), the AUV would need to stay at the central node for a long time while the data is transmitted. AUV-based data collection greatly reduces the data transmission distance of the central node. Therefore, to save the energy of the central node, improve the data transmission rate, and reduce the time the AUV dwells at the central node, optical communication with low latency, low power consumption, and a high data rate (Gbps) [27] is chosen as the communication mode between the central node and the AUV.

- AUV: The AUV obtains data from the central nodes over high-speed optical communication and offloads the collected data to the surface station. Because the AUV dives to the nodes, long-distance data transmission is replaced by short-range communication, which not only reduces the energy consumption of the underwater sensor nodes but also makes it possible to use short-range, high-data-rate underwater channels to transmit large amounts of data.
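To give a feel for why the data rate matters for the AUV's dwell time at a central node, the following minimal sketch compares ideal transfer times over an acoustic and an optical link. The payload size (100 MB) and the specific rates (20 kbps and 1 Gbps) are illustrative assumptions picked from the orders of magnitude quoted above, not values from the paper, and the calculation ignores protocol overhead, retransmissions, and link setup.

```python
def transfer_time_s(payload_bits: float, rate_bps: float) -> float:
    """Ideal transfer time in seconds: payload divided by link rate."""
    if rate_bps <= 0:
        raise ValueError("rate_bps must be positive")
    return payload_bits / rate_bps


# Hypothetical values, chosen only for illustration (not from the paper).
PAYLOAD_BITS = 100 * 8 * 10**6   # 100 MB of aggregated data, in bits
ACOUSTIC_RATE_BPS = 20 * 10**3   # "tens of kbps": 20 kbps assumed
OPTICAL_RATE_BPS = 1 * 10**9     # "Gbps": 1 Gbps assumed

acoustic_s = transfer_time_s(PAYLOAD_BITS, ACOUSTIC_RATE_BPS)
optical_s = transfer_time_s(PAYLOAD_BITS, OPTICAL_RATE_BPS)

print(f"acoustic: {acoustic_s / 3600:.1f} h, optical: {optical_s:.1f} s")
```

Under these assumed numbers the script prints `acoustic: 11.1 h, optical: 0.8 s`, which illustrates the gap of several orders of magnitude that motivates the optical link between the central node and the AUV.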

3.2. The Underwater Sensor Node Model

3.3. AUV Model

3.4. Problem Formulation

4. AUV Dynamic Data Collection Based on DRL

4.1. Reinforcement Learning

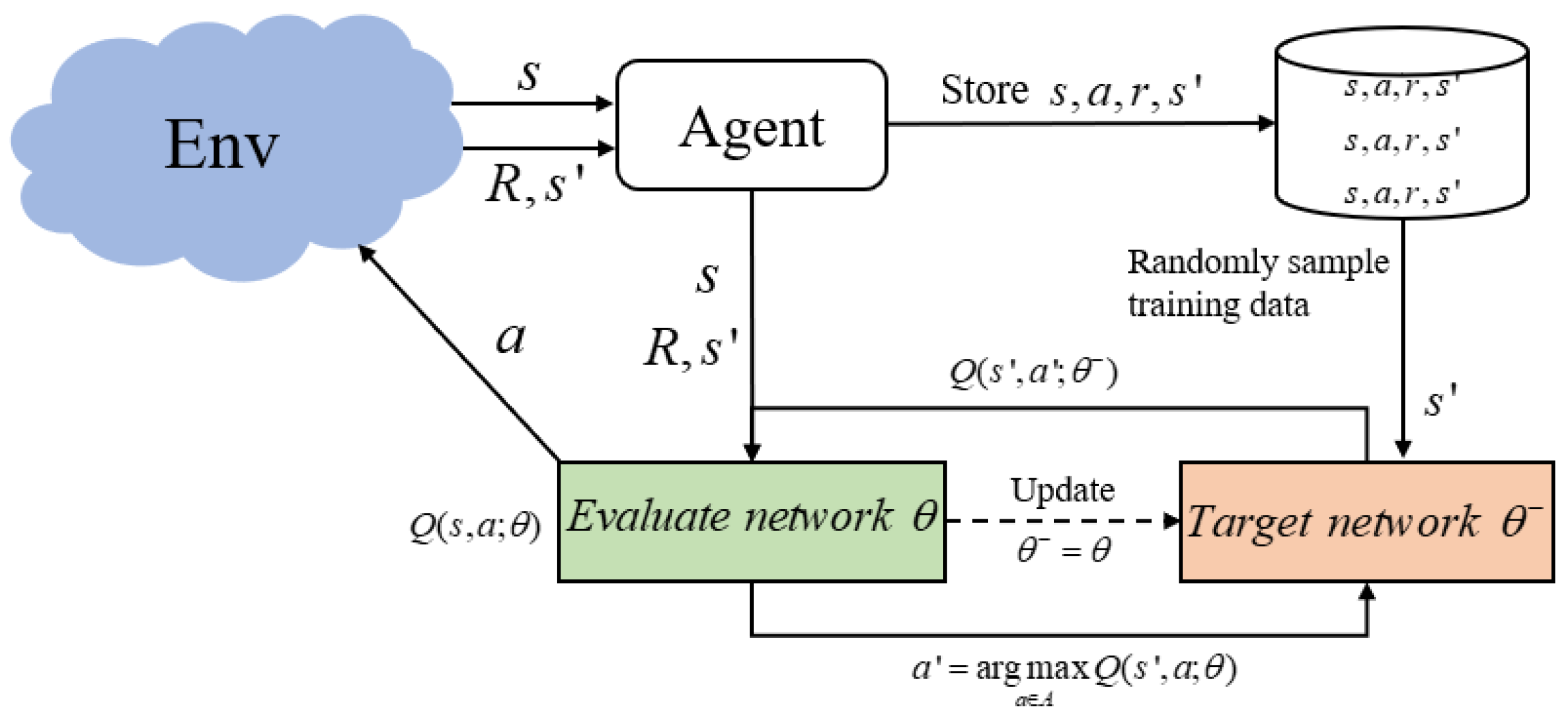

4.2. The Proposed Strategy Based on DDQN

- State: After the AUV has collected data from a central node, its observed state is defined in terms of the amount of data stored on all central nodes once collection at the current central node is complete.

- Action: After the AUV has collected data at the current central node, it performs an action based on the current state, namely the choice of the next node to traverse. Candidate nodes come from the set containing the water surface station node and all central nodes; the nodes that have already been traversed in round t are invalid choices. In existing work based on reinforcement learning, a negative reward (penalty) is usually used to discourage the agent from selecting invalid actions (in this paper, central nodes that have already been traversed in the current round). However, Ref. [30] shows that as the set of invalid actions grows, the invalid action penalty stops working, whereas invalid action masking still completes the task well. As the AUV cruise progresses, the set of invalid actions (the nodes already traversed) keeps growing, so this paper improves the action selection strategy. The improved action selection strategy is shown in Algorithm 1, and Formula (8), which the current network uses to estimate the Q value and select the optimal action, is modified to incorporate a masking vector of invalid actions in the current state.

- Reward: The reward function evaluates the action chosen by the AUV in the current state. For problem P1, the objective is efficient data collection, so the reward function is defined accordingly; it includes a term representing the reward for the AUV travelling from the current node to the surface station node.
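The idea of invalid action masking described in the Action item can be sketched as follows. This is a minimal illustration, not the paper's Algorithm 1: it assumes an epsilon-greedy policy, represents the traversed nodes as a set of indices, and omits the AUV-state input. The function name `masked_action` and its parameters are hypothetical.

```python
import math
import random
from typing import Sequence, Set


def masked_action(q_values: Sequence[float], visited: Set[int],
                  epsilon: float, rng: random.Random) -> int:
    """Pick the next node index, never choosing an already visited node."""
    valid = [a for a in range(len(q_values)) if a not in visited]
    if not valid:
        raise ValueError("no valid action left")
    # Exploration: sample uniformly among valid actions only.
    if rng.random() < epsilon:
        return rng.choice(valid)
    # Exploitation: mask invalid actions with -inf before the argmax.
    masked = [-math.inf if a in visited else q for a, q in enumerate(q_values)]
    return max(range(len(masked)), key=masked.__getitem__)


rng = random.Random(0)
q = [0.2, 1.5, 0.9, 1.1]   # illustrative Q values for four candidate nodes
greedy_choice = masked_action(q, visited={1}, epsilon=0.0, rng=rng)
print(greedy_choice)
```

With node 1 masked out, the greedy choice is node 3 (Q value 1.1) even though node 1 has the largest raw Q value. Unlike a penalty, the mask removes invalid nodes from both exploration and exploitation, so the agent never spends a step on them regardless of how large the invalid set becomes.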

Algorithm 1. Improved Action Selection Strategy

Input: Current state, AUV state.

Output: Next action a.

Algorithm 2. The AUV Dynamic Data Collection Based on DDQN
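As a rough illustration of the Double DQN (DDQN) update that this strategy builds on, the sketch below computes the standard DDQN learning target: the online (current) network selects the next action, and the target network evaluates it. Restricting the selection to valid next actions is an assumption made here for consistency with the masking idea. The function name `ddqn_target`, its parameters, and the numeric values are hypothetical and not taken from the paper.

```python
from typing import Sequence


def ddqn_target(reward: float, gamma: float,
                q_online_next: Sequence[float],
                q_target_next: Sequence[float],
                valid_next: Sequence[bool],
                done: bool) -> float:
    """Double DQN target: r + gamma * Q_target(s', argmax_a Q_online(s', a))."""
    # Terminal step, or no valid action remains: no bootstrap term.
    if done or not any(valid_next):
        return reward
    # Action selection uses the online network, restricted to valid actions.
    best = max((a for a, ok in enumerate(valid_next) if ok),
               key=lambda a: q_online_next[a])
    # Action evaluation uses the target network.
    return reward + gamma * q_target_next[best]


y = ddqn_target(
    reward=1.0, gamma=0.9,
    q_online_next=[0.5, 2.0, 1.0],
    q_target_next=[0.4, 0.3, 0.8],
    valid_next=[True, False, True],   # node 1 already traversed (assumed)
    done=False,
)
print(round(y, 2))
```

In this example the online network would prefer node 1, but it is masked, so node 2 is selected and evaluated by the target network, giving a target of about 1.72. Decoupling selection from evaluation is what distinguishes DDQN from plain DQN and reduces overestimation of Q values.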

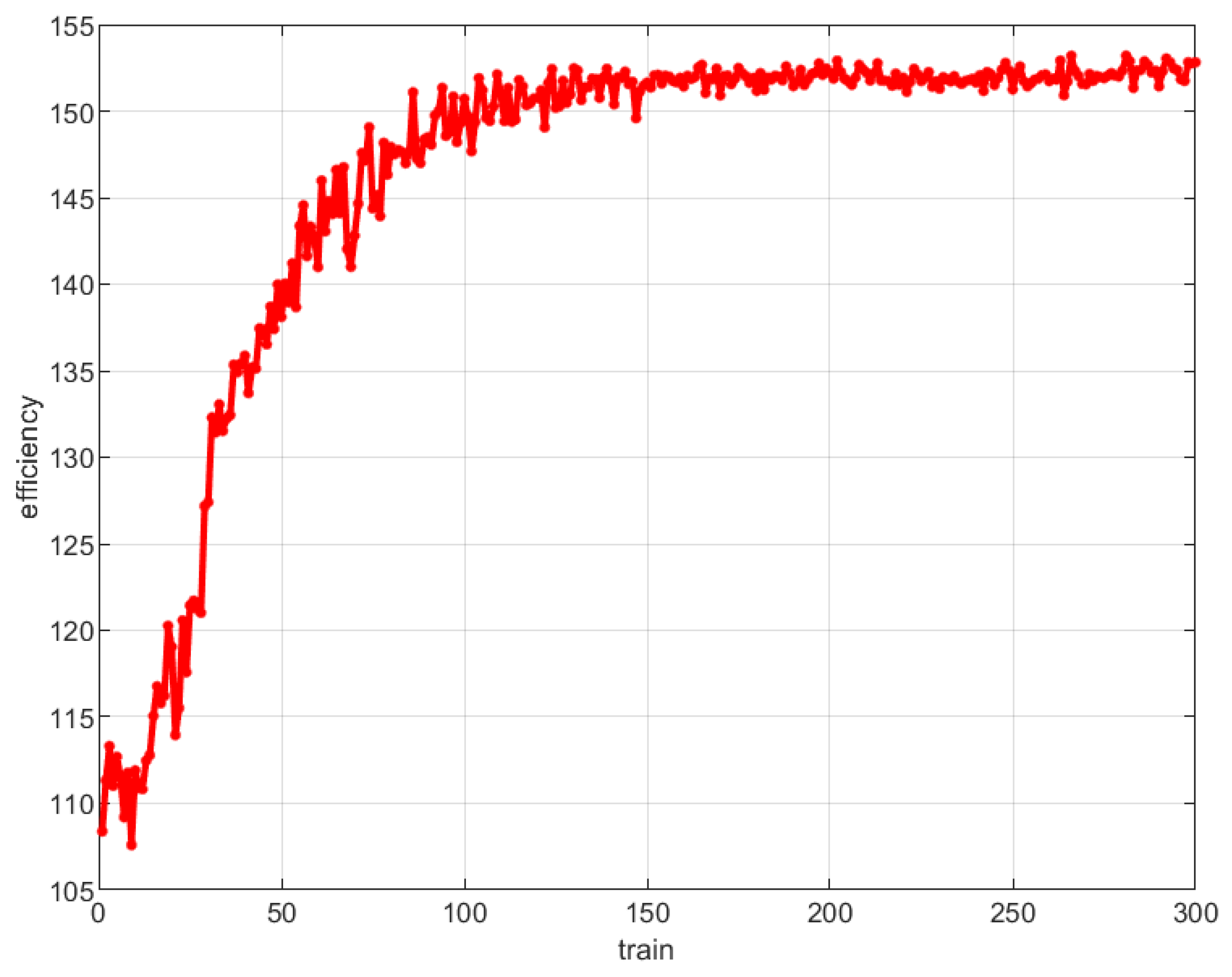

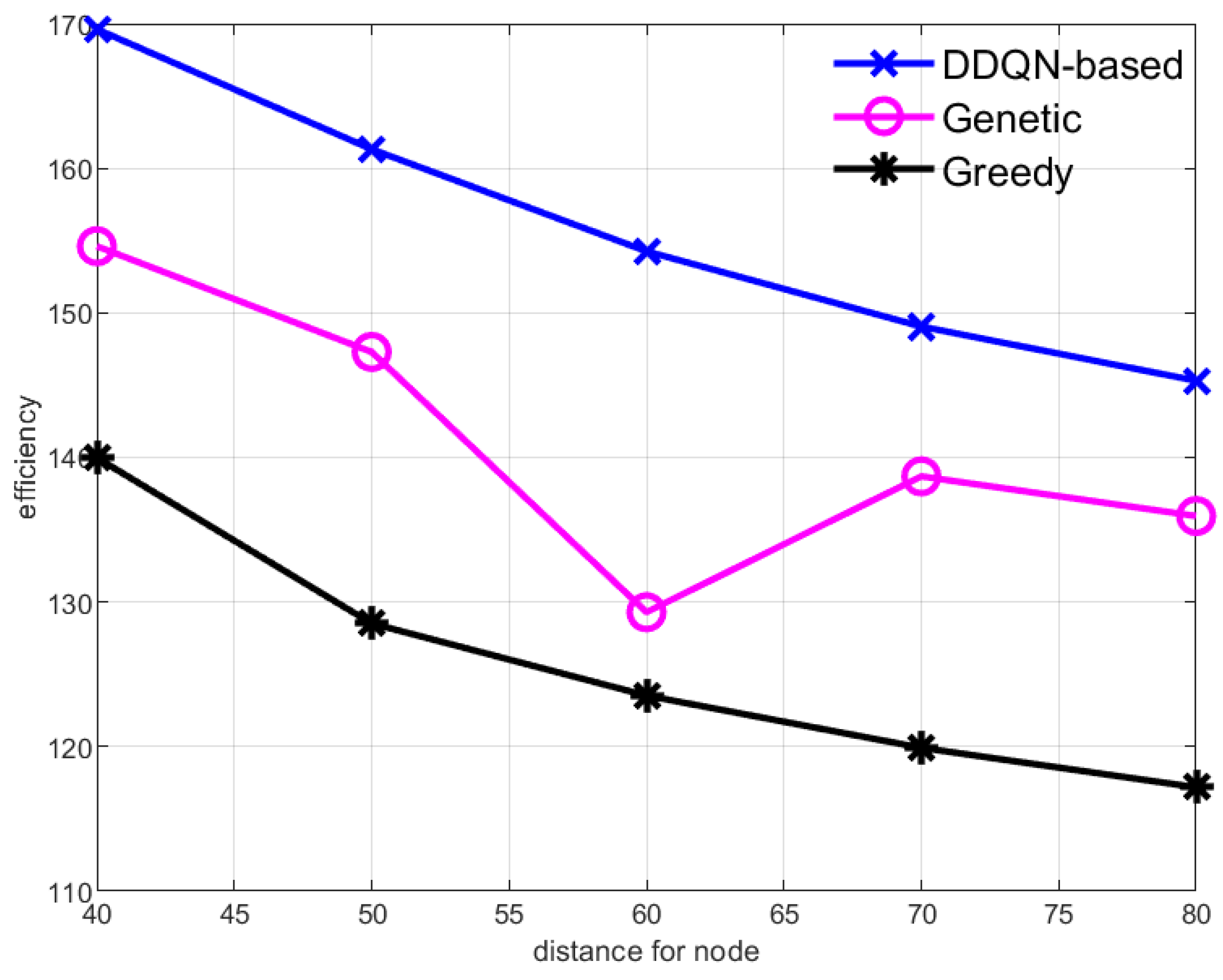

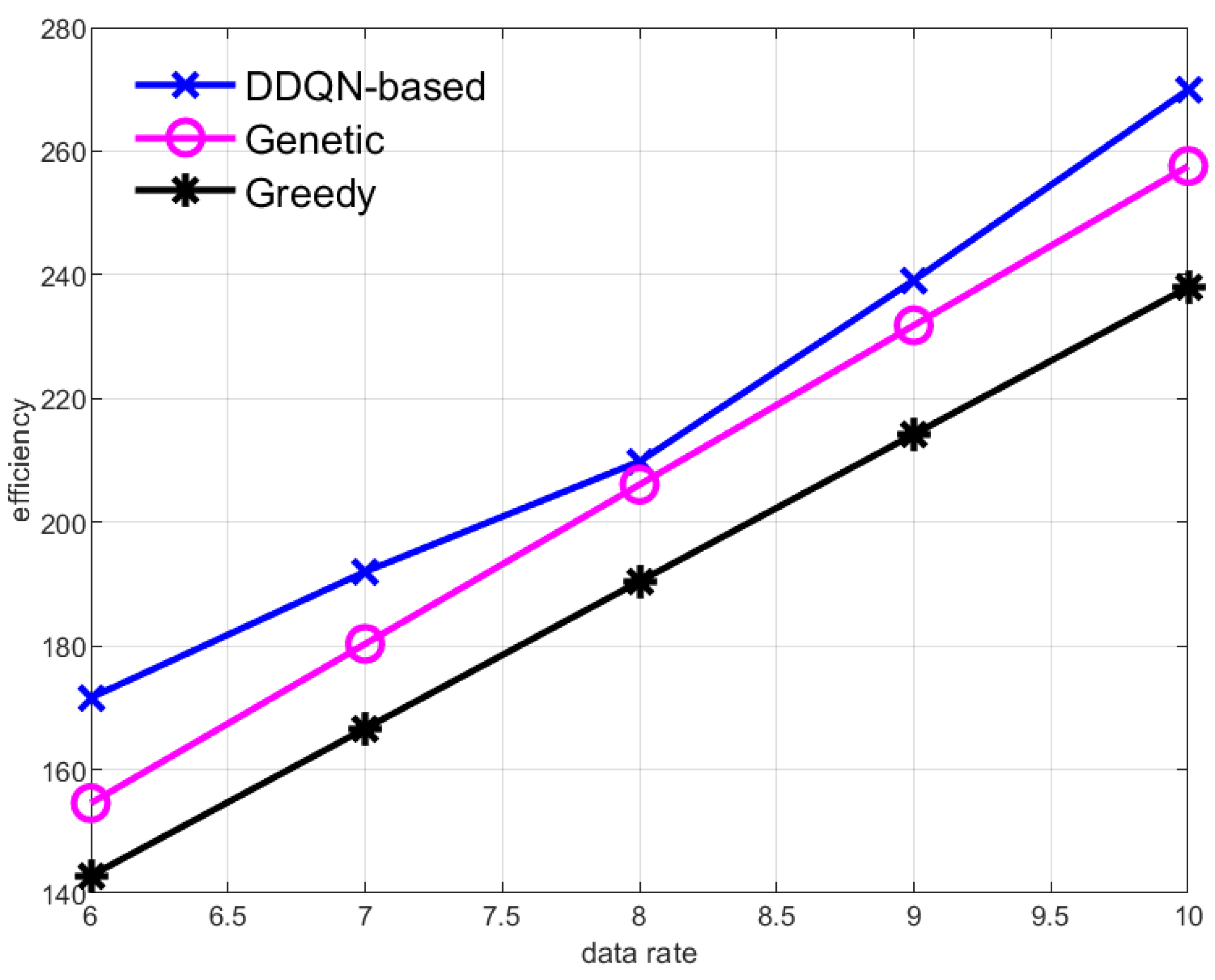

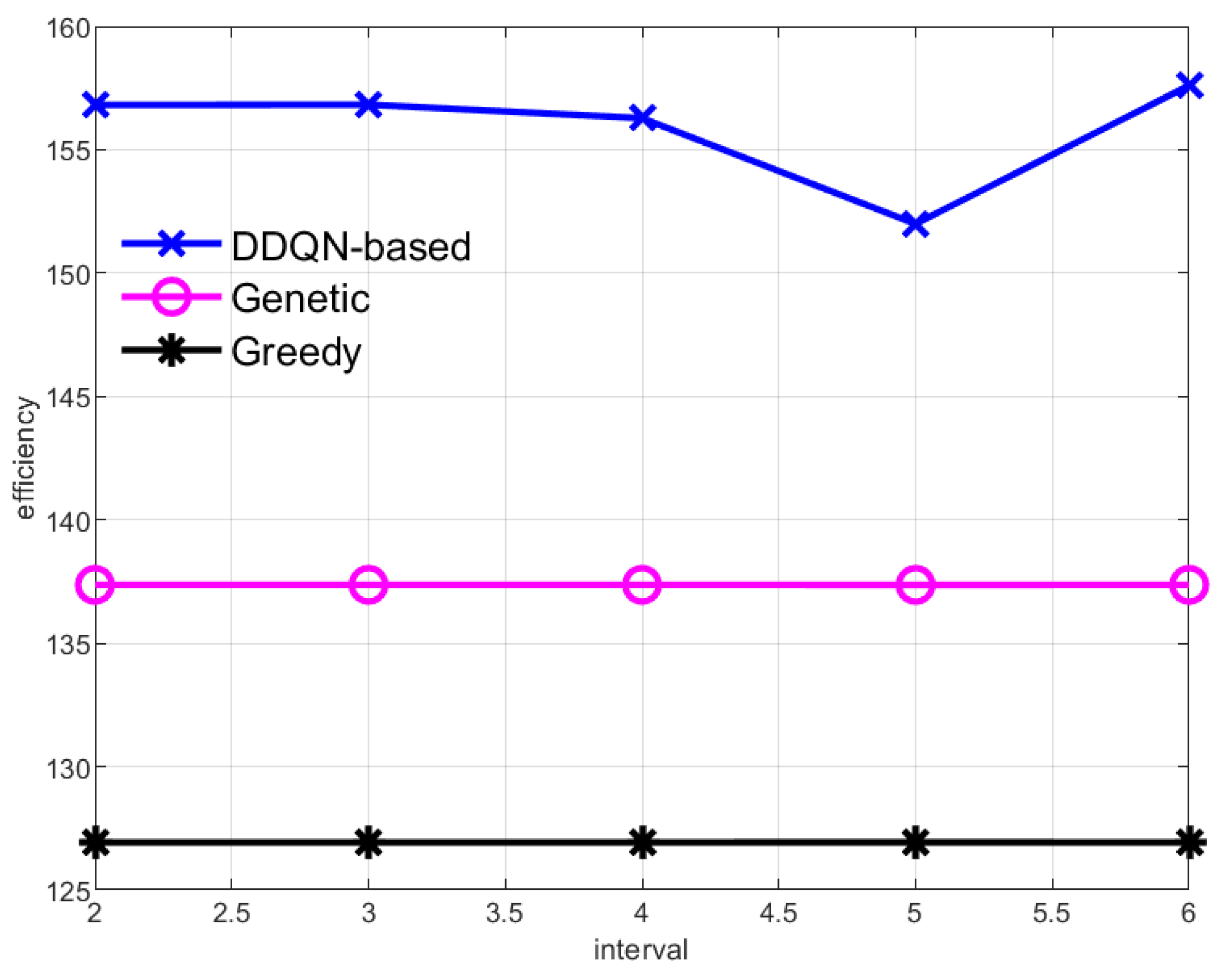

5. Simulation Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Glossary

| Symbol | Implication |
| --- | --- |
| n | The number of central nodes. |
|  | The set of the central nodes. |
|  | The number of the child nodes contained by the central node n. |
|  | The data transmission interval of the sensor nodes. |
|  | The data collection rate of child node i of central node n. |
|  | The energy expenditure of round t of the AUV. |
|  | The navigation energy expenditure of round t of the AUV. |
|  | The time spent on round t of the AUV. |
|  | The navigation time spent on round t of the AUV. |
|  | The value of the data collected at round t. |

References

1. Zhu, J.; Pan, X.; Peng, Z.; Liu, M.; Guo, J.; Zhang, T.; Gou, Y.; Cui, J.-H. A uw-cellular network: Design, implementation and experiments. J. Mar. Sci. Eng. 2023, 11, 827.

2. Pan, X.; Zhu, J.; Liu, M.; Wang, X.; Peng, Z.; Liu, J.; Cui, J. An on-demand scheduling-based mac protocol for uw-wifi networks. J. Mar. Sci. Eng. 2023, 11, 765.

3. Razzaq, A.; Mohsan, S.A.H.; Li, Y.; Alsharif, M.H. Architectural framework for underwater iot: Forecasting system for analyzing oceanographic data and observing the environment. J. Mar. Sci. Eng. 2023, 11, 368.

4. Li, Y.; Bai, J.; Chen, Y.; Lu, X.; Jing, P. High value of information guided data enhancement for heterogeneous underwater wireless sensor networks. J. Mar. Sci. Eng. 2023, 11, 1654.

5. Kabanov, A.; Kramar, V. Marine internet of things platforms for interoperability of marine robotic agents: An overview of concepts and architectures. J. Mar. Sci. Eng. 2022, 10, 1279.

6. Glaviano, F.; Esposito, R.; Cosmo, A.D.; Esposito, F.; Gerevini, L.; Ria, A.; Molinara, M.; Bruschi, P.; Costantini, M.; Zupo, V. Management and sustainable exploitation of marine environments through smart monitoring and automation. J. Mar. Sci. Eng. 2022, 10, 297.

7. Mohsan, S.A.H.; Li, Y.; Sadiq, M.; Liang, J.; Khan, M.A. Recent advances, future trends, applications and challenges of internet of underwater things (iout): A comprehensive review. J. Mar. Sci. Eng. 2023, 11, 124.

8. Du, J.; Gelenbe, E.; Jiang, C.; Zhang, H.; Ren, Y. Contract design for traffic offloading and resource allocation in heterogeneous ultra-dense networks. IEEE J. Sel. Areas Commun. 2017, 35, 2457–2467.

9. Yoon, S.; Qiao, C. Cooperative search and survey using autonomous underwater vehicles (auvs). IEEE Trans. Parallel Distrib. Syst. 2011, 22, 364–379.

10. Qiu, T.; Zhao, Z.; Zhang, T.; Chen, C.; Chen, C.L.P. Underwater internet of things in smart ocean: System architecture and open issues. IEEE Trans. Ind. Inform. 2020, 16, 4297–4307.

11. Liu, L.; Zhang, N.; Liu, Y. Topology control models and solutions for signal irregularity in mobile underwater wireless sensor networks. J. Netw. Comput. Appl. 2015, 51, 68–90.

12. Jurdak, R.; Lopes, C.; Baldi, P. Battery lifetime estimation and optimization for underwater sensor networks. IEEE Sens. Netw. Oper. 2004, 2006, 397–420.

13. Yoon, S.; Azad, A.K.; Oh, H.; Kim, S. Aurp: An auv-aided underwater routing protocol for underwater acoustic sensor networks. Sensors 2012, 12, 1827–1845.

14. Ahmad, A.; Wahid, A.; Kim, D. Aeerp: Auv aided energy efficient routing protocol for underwater acoustic sensor network. In Proceedings of the 8th ACM Workshop on Performance Monitoring and Measurement of Heterogeneous Wireless and Wired Networks, New York, NY, USA, 3–8 November 2013; pp. 53–60.

15. Ilyas, N.; Alghamdi, T.A.; Farooq, M.N.; Mehboob, B.; Sadiq, A.H.; Qasim, U.; Khan, Z.A.; Javaid, N. Aedg: Auv-aided efficient data gathering routing protocol for underwater wireless sensor networks. Procedia Comput. Sci. 2015, 52, 568–575.

16. Wang, X.; Wei, D.; Wei, X.; Cui, J.; Pan, M. Has4: A heuristic adaptive sink sensor set selection for underwater auv-aid data gathering algorithm. Sensors 2018, 18, 4110.

17. Qin, C.; Du, J.; Wang, J.; Ren, Y. A hierarchical information acquisition system for auv assisted internet of underwater things. IEEE Access 2020, 8, 176089–176100.

18. Han, G.; Shen, S.; Song, H.; Yang, T.; Zhang, W. A stratification-based data collection scheme in underwater acoustic sensor networks. IEEE Trans. Veh. Technol. 2018, 67, 10671–10682.

19. Huang, M.; Zhang, K.; Zeng, Z.; Wang, T.; Liu, Y. An auv-assisted data gathering scheme based on clustering and matrix completion for smart ocean. IEEE Internet Things J. 2020, 7, 9904–9918.

20. Zhuo, X.; Liu, M.; Wei, Y.; Yu, G.; Qu, F.; Sun, R. Auv-aided energy-efficient data collection in underwater acoustic sensor networks. IEEE Internet Things J. 2020, 7, 10010–10022.

21. Liu, Z.; Meng, X.; Liu, Y.; Yang, Y.; Wang, Y. Auv-aided hybrid data collection scheme based on value of information for internet of underwater things. IEEE Internet Things J. 2022, 9, 6944–6955.

22. Cai, S.; Zhu, Y.; Wang, T.; Xu, G.; Liu, A.; Liu, X. Data collection in underwater sensor networks based on mobile edge computing. IEEE Access 2019, 7, 65357–65367.

23. Yan, J.; Yang, X.; Luo, X.; Chen, C. Energy-efficient data collection over auv-assisted underwater acoustic sensor network. IEEE Syst. J. 2018, 12, 3519–3530.

24. Liu, J.; Wang, Z.; Zuba, M.; Peng, Z.; Cui, J.-H.; Zhou, S. Da-sync: A doppler-assisted time-synchronization scheme for mobile underwater sensor networks. IEEE Trans. Mob. Comput. 2014, 13, 582–595.

25. Yan, J.; Xu, Z.; Wan, Y.; Chen, C.; Luo, X. Consensus estimation-based target localization in underwater acoustic sensor networks. Int. J. Robust Nonlinear Control. 2017, 27, 1607–1627.

26. Wei, D.; Huang, C.; Li, X.; Lin, B.; Shu, M.; Wang, J.; Pan, M. Power-efficient data collection scheme for auv-assisted magnetic induction and acoustic hybrid internet of underwater things. IEEE Internet Things J. 2022, 9, 11675–11684.

27. Lv, Z.; He, G.; Qiu, C.; Liu, Z. Investigation of underwater wireless optical communications links with surface currents and tides for oceanic signal transmission. IEEE Photonics J. 2021, 13, 1–8.

28. Zhu, Z.; Hu, C.; Zhu, C.; Zhu, Y.; Sheng, Y. An improved dueling deep double-q network based on prioritized experience replay for path planning of unmanned surface vehicles. J. Mar. Sci. Eng. 2021, 9, 1267.

29. Xing, B.; Wang, X.; Yang, L.; Liu, Z.; Wu, Q. An algorithm of complete coverage path planning for unmanned surface vehicle based on reinforcement learning. J. Mar. Sci. Eng. 2023, 11, 645.

30. Huang, S.; Ontañón, S. A closer look at invalid action masking in policy gradient algorithms. arXiv 2022, arXiv:2006.14171.


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shi, W.; Tang, Y.; Jin, M.; Jing, L. An AUV-Assisted Data Gathering Scheme Based on Deep Reinforcement Learning for IoUT. J. Mar. Sci. Eng. 2023, 11, 2279. https://doi.org/10.3390/jmse11122279
