MSFE-UIENet: A Multi-Scale Feature Extraction Network for Marine Underwater Image Enhancement

Abstract

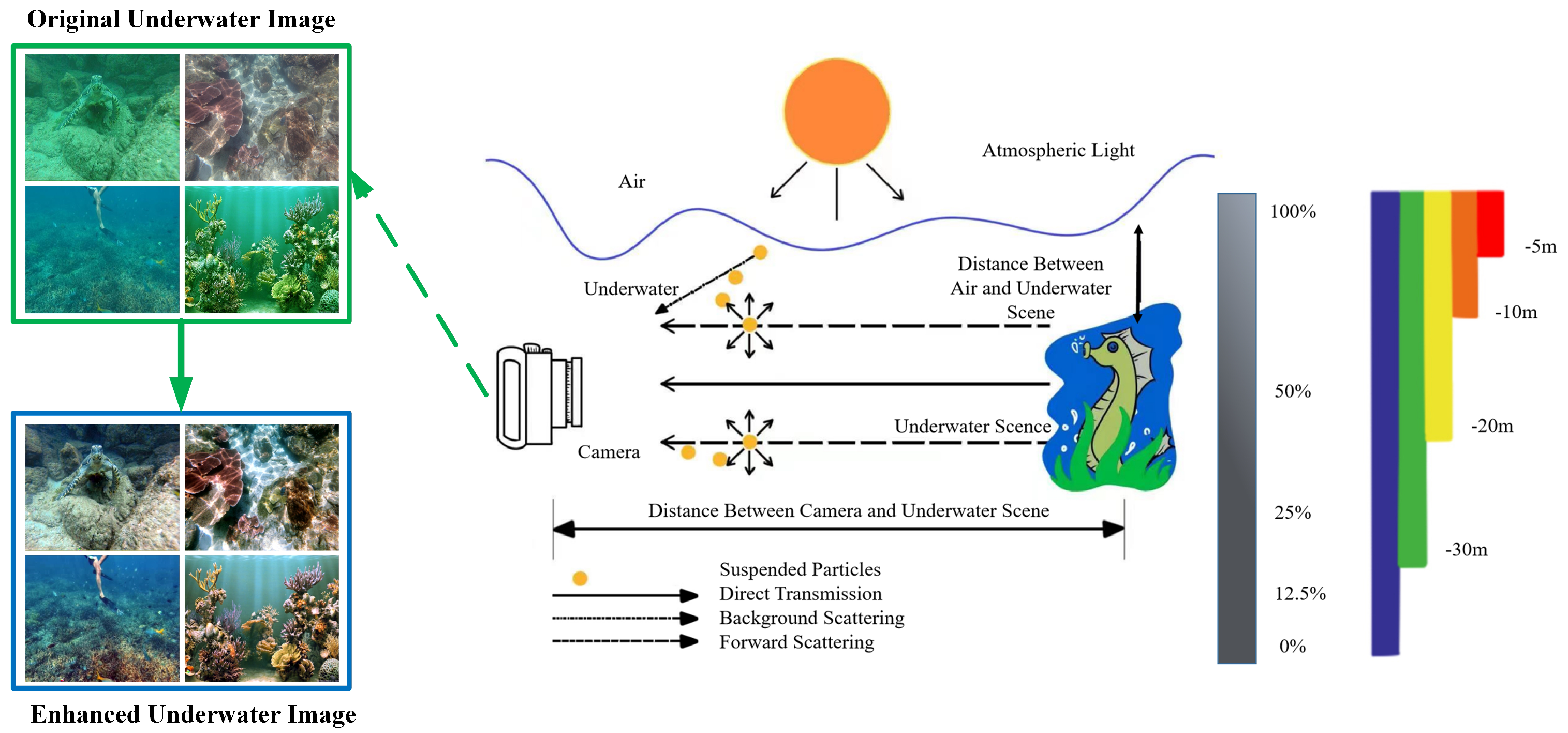

1. Introduction

- 1.

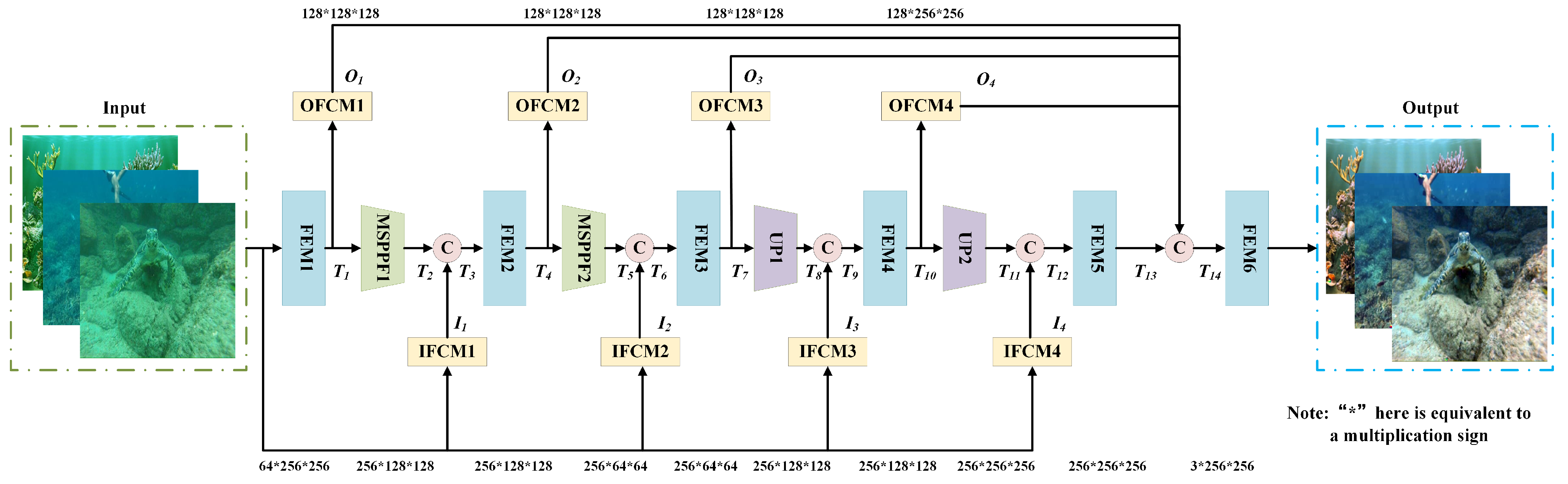

- This study introduces a high-performance UIE network based on multi-scale feature extraction. The network incorporates two fundamental modules: the feature extraction module (FEM) and the multi-scale spatial pyramid pooling features block (MSPPF). These modules effectively amplify the feature extraction capability of the network and minimize the insufficient enhancement effects typically observed in traditional enhancement networks.

- 2.

- Forward and backward branches are incorporated to improve the gradient flow of the network. After processing the source feature using this module, the desired shape can be attained, thereby facilitating the integration of the target feature.

- 3.

- Comprehensive evaluations indicate that the proposed network outperforms several state-of-the-art methods in terms of enhancement effects and computational complexity on widely utilized public underwater datasets.

2. Related Works

2.1. Model-Free Methods

2.2. Model-Based Methods

2.3. Deep-Learning-Based Methods

3. Method

3.1. Framework of MSFE-UIENet

3.2. Feature Extraction Module (FEM)

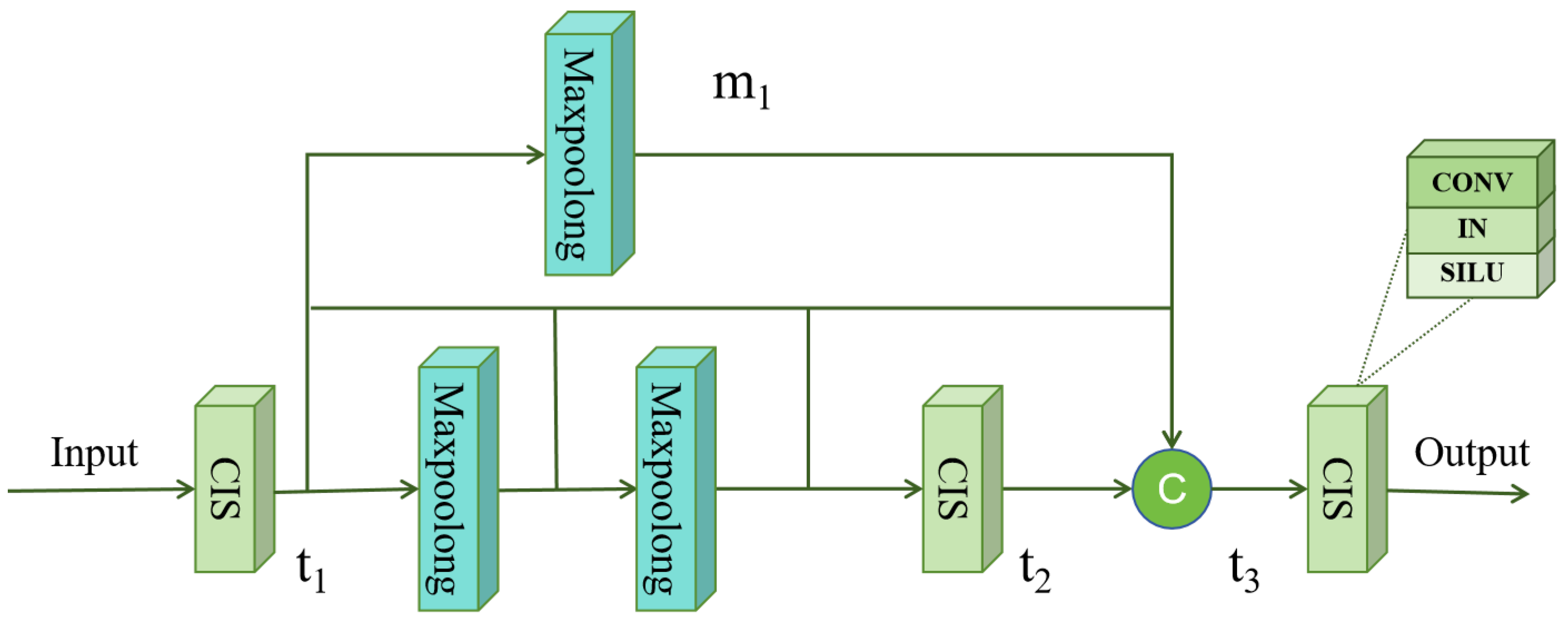

3.3. Multi-Scale Spatial Pyramid Pooling Features (MSPPF)

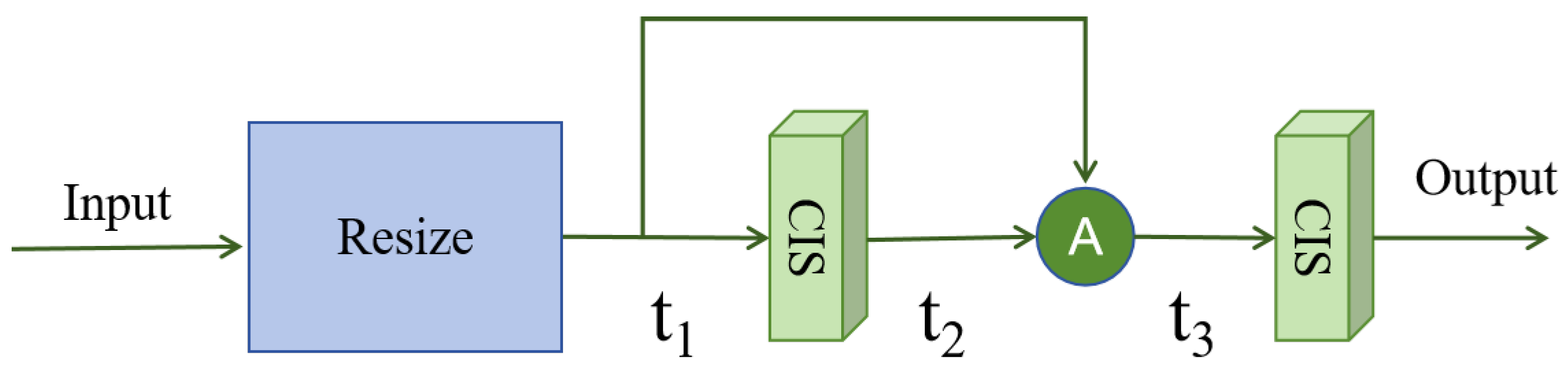

3.4. Forward and Backward Branches

3.4.1. Forward Calculation Module (FCM)

3.4.2. Backward Calculation Module (BCM)

3.5. Loss Function

4. Experiments and Discussion

4.1. Datasets and Settings

4.2. Comparison with State-of-the-Art Methods for UIE

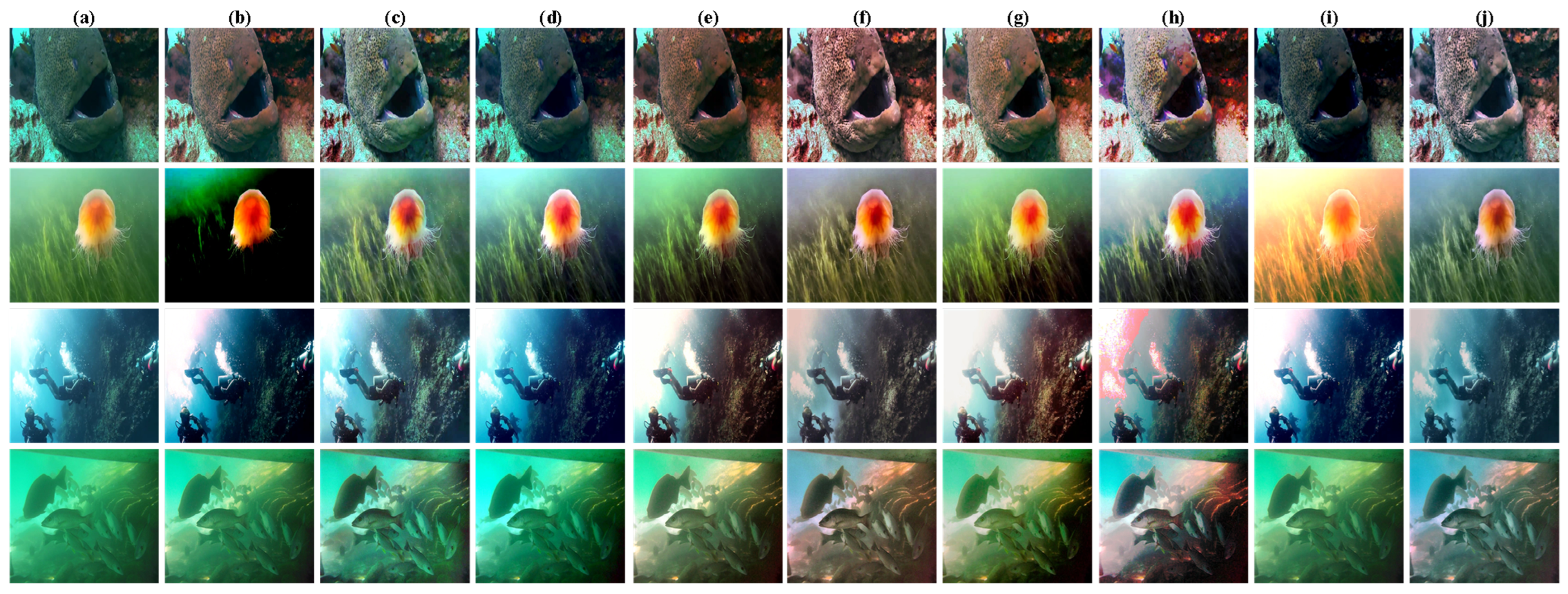

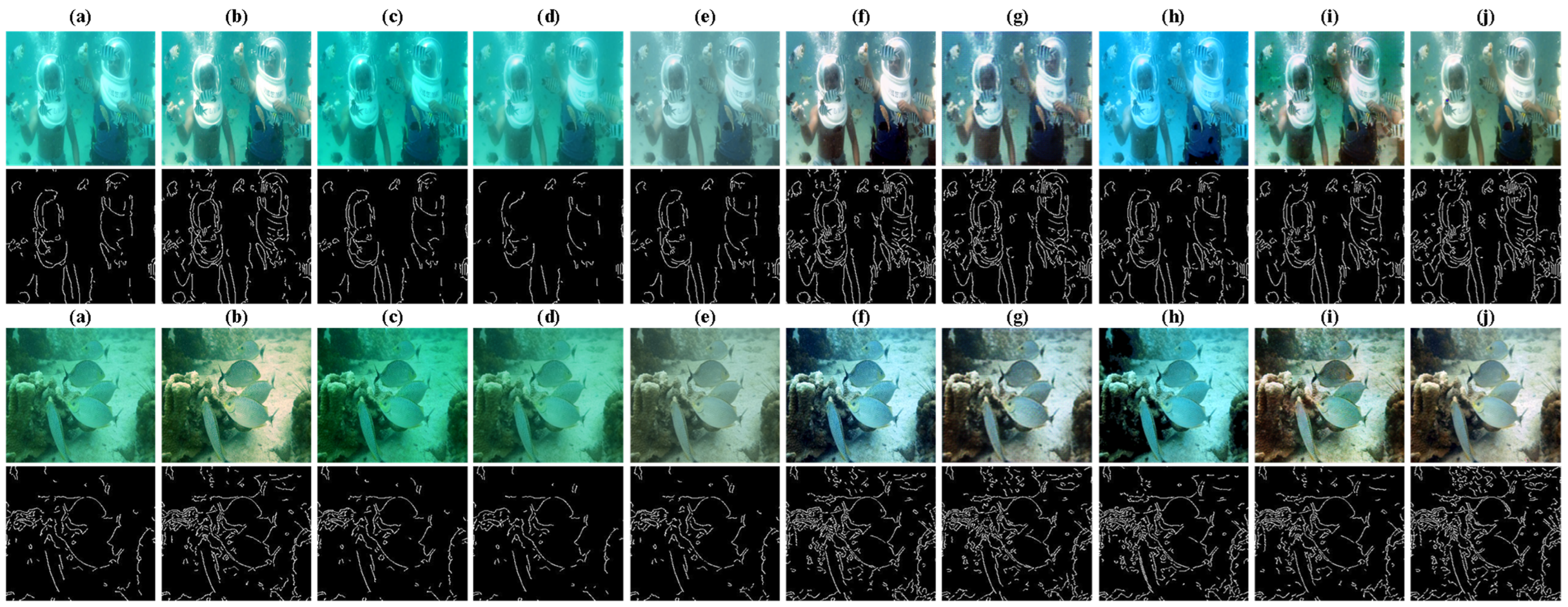

4.2.1. Qualitative Evaluations

4.2.2. Quantitative Evaluation

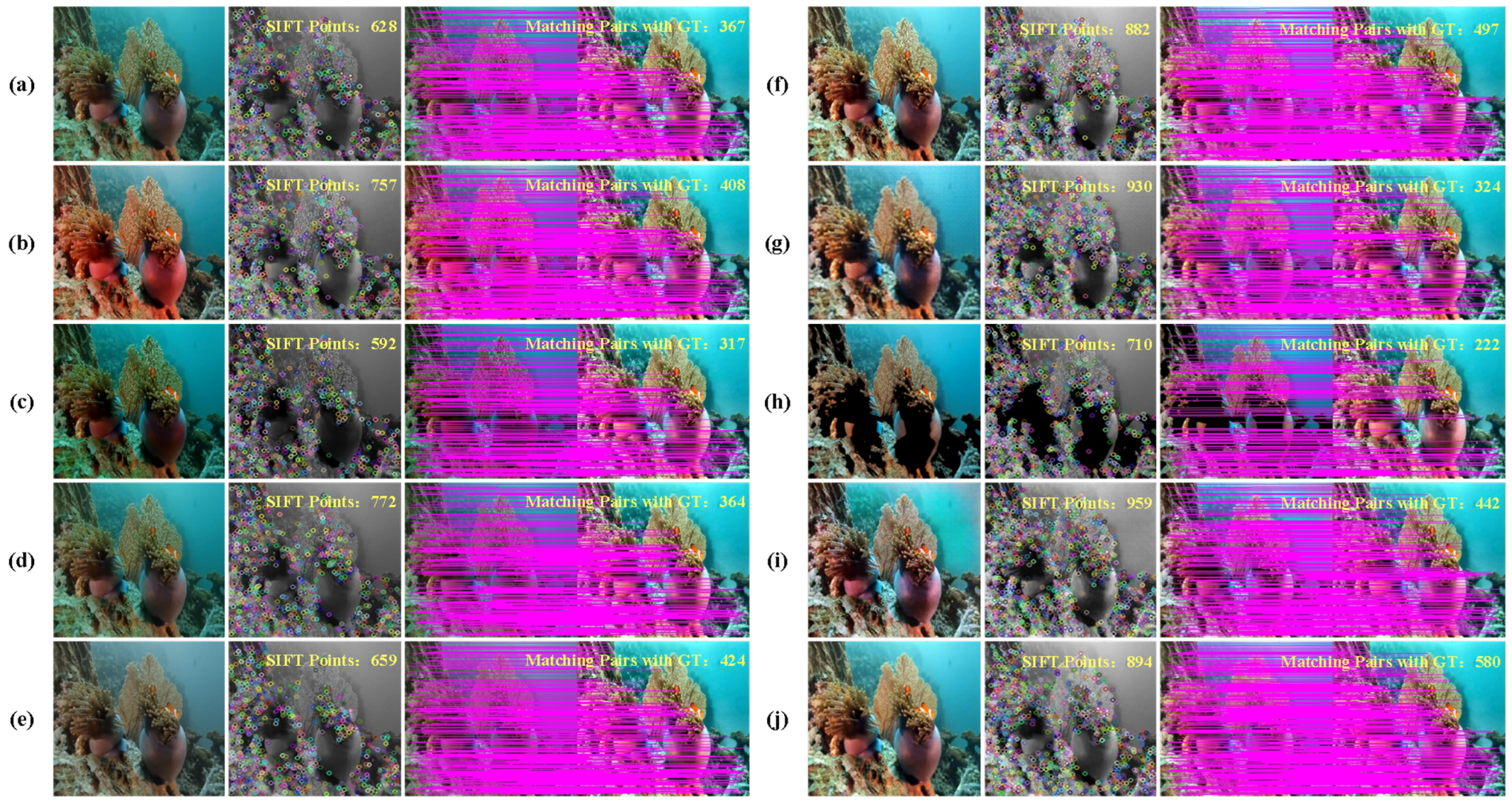

4.2.3. Application Test

4.3. Ablation Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Wang, J.; Ye, X.; Mei, X.; Wei, X. Learning mapping by curve iteration estimation for real-time underwater image enhancement. Opt. Express 2024, 32, 9931–9945.

2. Bertolotti, J.; Van Putten, E.G.; Blum, C.; Lagendijk, A.; Vos, W.L.; Mosk, A.P. Non-invasive imaging through opaque scattering layers. Nature 2012, 491, 232–234.

3. Cecconi, V.; Kumar, V.; Bertolotti, J.; Peters, L.; Cutrona, A.; Olivieri, L.; Peccianti, M. Terahertz spatiotemporal wave synthesis in random systems. ACS Photonics 2024, 11, 362–368.

4. Vellekoop, I.M.; Mosk, A.P. Focusing coherent light through opaque strongly scattering media. Opt. Lett. 2007, 32, 2309–2311.

5. Zhou, J.; Yang, T.; Zhang, W. Underwater vision enhancement technologies: A comprehensive review, challenges, and recent trends. Appl. Intell. 2023, 53, 3594–3621.

6. Hu, K.; Weng, C.; Zhang, Y.; Jin, J.; Xia, Q. An overview of underwater vision enhancement: From traditional methods to recent deep learning. J. Mar. Sci. Eng. 2022, 10, 241.

7. Wei, X.; Ye, X.; Mei, X.; Wang, J.; Ma, H. Enforcing high frequency enhancement in deep networks for simultaneous depth estimation and dehazing. Appl. Soft Comput. 2024, 163, 11873.

8. Iqbal, K.; Odetayo, M.; James, A.; Salam, R.A.; Talib, A.Z.H. Enhancing the low quality images using unsupervised colour correction method. In Proceedings of the 2010 IEEE International Conference on Systems, Man and Cybernetics, Istanbul, Turkey, 10–13 October 2010; pp. 1703–1709.

9. Hitam, M.S.; Awalludin, E.A.; Yussof, W.N.J.H.W.; Bachok, Z. Mixture contrast limited adaptive histogram equalization for underwater image enhancement. In Proceedings of the 2013 International Conference on Computer Applications Technology (ICCAT), Sousse, Tunisia, 20–22 January 2013; pp. 1–5.

10. Fu, X.; Zhuang, P.; Huang, Y.; Liao, Y.; Zhang, X.P.; Ding, X. A retinex-based enhancing approach for single underwater image. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 4572–4576.

11. Zhang, S.; Wang, T.; Dong, J.; Yu, H. Underwater image enhancement via extended multi-scale Retinex. Neurocomputing 2017, 245, 1–9.

12. Li, M.; Liu, J.; Yang, W.; Sun, X.; Guo, Z. Structure-revealing low-light image enhancement via robust retinex model. IEEE Trans. Image Process. 2018, 27, 2828–2841.

13. Ancuti, C.; Ancuti, C.O.; Haber, T.; Bekaert, P. Enhancing underwater images and videos by fusion. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 81–88.

14. Gao, S.B.; Zhang, M.; Zhao, Q.; Zhang, X.S.; Li, Y.J. Underwater image enhancement using adaptive retinal mechanisms. IEEE Trans. Image Process. 2019, 28, 5580–5595.

15. Yuan, J.; Cao, W.; Cai, Z.; Su, B. An underwater image vision enhancement algorithm based on contour bougie morphology. IEEE Trans. Geosci. Remote Sens. 2020, 59, 8117–8128.

16. He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353.

17. Drews, P.L.; Nascimento, E.R.; Botelho, S.S.; Campos, M.F.M. Underwater depth estimation and image restoration based on single images. IEEE Comput. Graph. Appl. 2016, 36, 24–35.

18. Peng, Y.T.; Cao, K.; Cosman, P.C. Generalization of the dark channel prior for single image restoration. IEEE Trans. Image Process. 2018, 27, 2856–2868.

19. Peng, Y.T.; Cosman, P.C. Underwater image restoration based on image blurriness and light absorption. IEEE Trans. Image Process. 2017, 26, 1579–1594.

20. Galdran, A.; Pardo, D.; Picón, A.; Alvarez-Gila, A. Automatic red-channel underwater image restoration. J. Vis. Commun. Image Represent. 2015, 26, 132–145.

21. Berman, D.; Levy, D.; Avidan, S.; Treibitz, T. Underwater single image color restoration using haze-lines and a new quantitative dataset. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 2822–2837.

22. Mei, X.; Ye, X.; Wang, J.; Wang, X.; Huang, H.; Liu, Y.; Jia, Y.; Zhao, S. UIEOGP: An underwater image enhancement method based on optical geometric properties. Opt. Express 2023, 31, 36638–36655.

23. Wang, Y.; Guo, J.; Gao, H.; Yue, H. UIEC^2-Net: CNN-based underwater image enhancement using two color space. Signal Process. Image Commun. 2021, 96, 116250.

24. Li, C.; Guo, C.; Ren, W.; Cong, R.; Hou, J.; Kwong, S.; Tao, D. An underwater image enhancement benchmark dataset and beyond. IEEE Trans. Image Process. 2019, 29, 4376–4389.

25. Li, C.; Anwar, S.; Porikli, F. Underwater scene prior inspired deep underwater image and video enhancement. Pattern Recognit. 2020, 98, 107038.

26. Sharma, P.; Bisht, I.; Sur, A. Wavelength-based attributed deep neural network for underwater image restoration. ACM Trans. Multimed. Comput. Commun. Appl. 2023, 19, 1–23.

27. Li, J.; Skinner, K.A.; Eustice, R.M.; Johnson-Roberson, M. WaterGAN: Unsupervised generative network to enable real-time color correction of monocular underwater images. IEEE Robot. Autom. Lett. 2017, 3, 387–394.

28. Mei, X.; Ye, X.; Zhang, X.; Liu, Y.; Wang, J.; Hou, J.; Wang, X. UIR-Net: A Simple and Effective Baseline for Underwater Image Restoration and Enhancement. Remote Sens. 2022, 15, 39.

29. Wang, J.; Ye, X.; Liu, Y.; Mei, X.; Hou, J. Underwater self-supervised monocular depth estimation and its application in image enhancement. Eng. Appl. Artif. Intell. 2023, 120, 105846.

30. Fabbri, C.; Islam, M.J.; Sattar, J. Enhancing underwater imagery using generative adversarial networks. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 7159–7165.

31. Pramanick, A.; Sarma, S.; Sur, A. X-CAUNET: Cross-Color Channel Attention with Underwater Image-Enhancing Transformer. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 3550–3554.

32. Huang, S.; Wang, K.; Liu, H.; Chen, J.; Li, Y. Contrastive semi-supervised learning for underwater image restoration via reliable bank. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 18145–18155.

33. Naik, A.; Swarnakar, A.; Mittal, K. Shallow-UWnet: Compressed model for underwater image enhancement (student abstract). Proc. AAAI Conf. Artif. Intell. 2021, 35, 15853–15854.

34. Islam, M.J.; Xia, Y.; Sattar, J. Fast underwater image enhancement for improved visual perception. IEEE Robot. Autom. Lett. 2020, 5, 3227–3234.

35. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

36. Yang, M.; Sowmya, A. An underwater color image quality evaluation metric. IEEE Trans. Image Process. 2015, 24, 6062–6071.

37. Panetta, K.; Gao, C.; Agaian, S. Human-visual-system-inspired underwater image quality measures. IEEE J. Ocean. Eng. 2015, 41, 541–551.

38. Bao, P.; Zhang, L.; Wu, X. Canny edge detection enhancement by scale multiplication. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1485–1490.

39. Wang, F.; You, H.; Fu, X. Adapted anisotropic Gaussian SIFT matching strategy for SAR registration. IEEE Geosci. Remote Sens. Lett. 2014, 12, 160–164.

| Method | PSNR ↑ | SSIM ↑ | RMSE ↓ | UIQM ↑ | UCIQE ↑ |
|---|---|---|---|---|---|
| Input | 18.90 | 0.66 | 19.55 | 3.21 | 0.55 |
| IBLA [19] | 16.67 | 0.59 | 24.82 | 5.15 | 0.62 |
| DCP [16] | 14.66 | 0.58 | 24.73 | 3.04 | 0.59 |
| Retinex [10] | 18.08 | 0.62 | 20.59 | 3.22 | 0.54 |
| Shallow [33] | 19.74 | 0.71 | 17.02 | 4.20 | 0.53 |
| WaterNet [24] | 20.15 | 0.79 | 16.24 | 4.31 | 0.54 |
| UGan [30] | 21.15 | 0.72 | 15.85 | 4.63 | 0.62 |
| Deepwave [26] | 16.72 | 0.56 | 24.72 | 4.00 | 0.60 |
| FUnIE-GAN [34] | 19.27 | 0.71 | 17.83 | 4.76 | 0.62 |
| Ours | 25.85 | 0.88 | 9.75 | 4.33 | 0.63 |
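The PSNR and RMSE columns above are full-reference metrics. The following is a minimal sketch of their conventional definitions for 8-bit data, assuming each image is given as a flat list of pixel intensities; the function names `rmse` and `psnr` and the sample values are illustrative and do not come from the paper. The source does not state how per-image scores were aggregated, so the tabulated averages need not satisfy the single-image relation between the two metrics.

```python
import math


def rmse(reference, enhanced):
    """Root-mean-square error between two equally sized pixel sequences."""
    if len(reference) != len(enhanced) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(r - e) ** 2 for r, e in zip(reference, enhanced)]
    return math.sqrt(sum(squared) / len(squared))


def psnr(reference, enhanced, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite when the inputs are identical."""
    error = rmse(reference, enhanced)
    if error == 0:
        return math.inf
    return 20.0 * math.log10(peak / error)


# Hypothetical 8-pixel example (not data from the paper).
reference = [52, 55, 61, 59, 79, 61, 76, 61]
enhanced = [50, 57, 60, 62, 77, 63, 75, 60]
print(round(rmse(reference, enhanced), 3), round(psnr(reference, enhanced), 2))
```

A lower RMSE always gives a higher PSNR for a single image pair, which is why the two columns carry opposite arrows.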

| Method | UIQM ↑ | UCIQE ↑ | Method | UIQM ↑ | UCIQE ↑ |
|---|---|---|---|---|---|
| Input | 3.04 | 0.48 | WaterNet [24] | 4.13 | 0.53 |
| IBLA [19] | 4.10 | 0.57 | UGan [30] | 4.21 | 0.55 |
| DCP [16] | 3.54 | 0.54 | Deepwave [26] | 3.98 | 0.54 |
| Retinex [10] | 4.08 | 0.53 | FUnIE-GAN [34] | 4.24 | 0.53 |
| Shallow [33] | 4.02 | 0.49 | Ours | 4.27 | 0.56 |

| Pic1 | TPR ↑ | FPR ↓ | Pic2 | TPR ↑ | FPR ↓ |
|---|---|---|---|---|---|
| Input | 0.38 | 0.07 | Input | 0.38 | 0.08 |
| IBLA [19] | 0.56 | 0.11 | IBLA [19] | 0.52 | 0.12 |
| DCP [16] | 0.39 | 0.07 | DCP [16] | 0.38 | 0.09 |
| Retinex [10] | 0.24 | 0.05 | Retinex [10] | 0.32 | 0.07 |
| Shallow [33] | 0.39 | 0.08 | Shallow [33] | 0.39 | 0.08 |
| WaterNet [24] | 0.63 | 0.14 | WaterNet [24] | 0.54 | 0.18 |
| UGan [30] | 0.55 | 0.17 | UGan [30] | 0.51 | 0.19 |
| Deepwave [26] | 0.50 | 0.11 | Deepwave [26] | 0.52 | 0.17 |
| FUnIE-GAN [34] | 0.60 | 0.17 | FUnIE-GAN [34] | 0.54 | 0.23 |
| Ours | 0.66 | 0.09 | Ours | 0.58 | 0.10 |
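For readers unfamiliar with the TPR and FPR columns, the sketch below computes both rates from a pair of binary masks using their standard definitions, TPR = TP / (TP + FN) and FPR = FP / (FP + TN). It assumes the masks are flat lists of 0/1 values, for example detected edge pixels compared against a reference edge map; the evaluation protocol is not spelled out in this part of the source, and the function name `tpr_fpr` and the sample masks are hypothetical.

```python
def tpr_fpr(reference_mask, detected_mask):
    """Return (TPR, FPR) for two equally sized binary masks."""
    if len(reference_mask) != len(detected_mask):
        raise ValueError("masks must have the same length")
    tp = fp = fn = tn = 0
    for ref, det in zip(reference_mask, detected_mask):
        if ref and det:
            tp += 1  # positive in the reference, detected
        elif ref and not det:
            fn += 1  # positive in the reference, missed
        elif det:
            fp += 1  # negative in the reference, wrongly detected
        else:
            tn += 1  # negative in the reference, correctly ignored
    tpr = tp / (tp + fn) if (tp + fn) else 0.0
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    return tpr, fpr


# Hypothetical masks (not data from the paper).
reference_mask = [1, 1, 1, 0, 0, 0, 0, 0]
detected_mask = [1, 1, 0, 1, 0, 0, 0, 0]
print(tpr_fpr(reference_mask, detected_mask))
```

A method is preferable when it raises TPR without a matching rise in FPR, which is the trade-off the two arrows in the table header express.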

| Method | Points | Pairs | Accuracy | Method | Points | Pairs | Accuracy |
|---|---|---|---|---|---|---|---|
| Input | 628 | 367 | 0.58 | WaterNet [24] | 882 | 497 | 0.56 |
| IBLA [19] | 757 | 408 | 0.54 | UGan [30] | 930 | 324 | 0.35 |
| DCP [16] | 592 | 317 | 0.54 | Deepwave [26] | 710 | 222 | 0.31 |
| Retinex [10] | 772 | 364 | 0.47 | FUnIE-GAN [34] | 959 | 442 | 0.46 |
| Shallow [33] | 659 | 424 | 0.64 | Ours | 894 | 580 | 0.68 |
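The source does not define the Accuracy column in the feature-matching table. A plausible reading, offered here only as an assumption, is the ratio of matched pairs to detected points; the sketch below applies that ratio to three rows copied from the table, for which it reproduces the tabulated values. The function name `match_accuracy` is illustrative.

```python
def match_accuracy(points, pairs):
    """Fraction of detected feature points that end up in a matched pair.

    Assumed definition (Pairs / Points); the paper may define it differently.
    """
    if points <= 0:
        raise ValueError("points must be positive")
    return pairs / points


# (method, points, pairs) rows copied from the table above.
rows = [("Input", 628, 367), ("Shallow", 659, 424), ("WaterNet", 882, 497)]
for name, points, pairs in rows:
    print(name, round(match_accuracy(points, pairs), 2))
```

Under this reading, a method can detect many points yet score poorly if few of them find a correct match, so Points and Accuracy should be read together.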

| Method | PSNR ↑ | SSIM ↑ | RMSE ↓ | UIQM ↑ | UCIQE ↑ |
|---|---|---|---|---|---|
| without FCM | 23.04 | 0.62 | 21.65 | 4.21 | 0.50 |
| without BCM | 24.46 | 0.73 | 10.71 | 4.30 | 0.62 |
| ALL | 25.85 | 0.88 | 9.75 | 4.33 | 0.63 |

| | = 0.0 | = 0.2 | = 0.4 | = 0.6 | = 0.8 | = 1.0 |
|---|---|---|---|---|---|---|
| = 0.0 | (22.73, 0.62) | (23.02, 0.66) | (22.51, 0.61) | (22.42, 0.59) | (22.31, 0.60) | (22.11, 0.58) |
| = 0.2 | (22.82, 0.63) | (23.09, 0.65) | (23.10, 0.63) | (22.98, 0.61) | (22.87, 0.62) | (22.79, 0.60) |
| = 0.4 | (23.23, 0.74) | (23.42, 0.75) | (23.31, 0.74) | (23.22, 0.72) | (23.20, 0.71) | (23.08, 0.69) |
| = 0.6 | (23.81, 0.78) | (23.89, 0.81) | (23.13, 0.80) | (23.71, 0.77) | (23.67, 0.75) | (23.54, 0.73) |
| = 0.8 | (24.23, 0.82) | (24.96, 0.85) | (24.81, 0.83) | (24.73, 0.81) | (24.42, 0.79) | (24.05, 0.80) |
| = 1.0 | (24.11, 0.80) | (24.67, 0.81) | (24.45, 0.80) | (24.36, 0.75) | (24.13, 0.76) | (24.08, 0.73) |
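To read a grid like the one above, it can help to locate the best cell programmatically. The sketch below copies the tabulated pairs and returns the row and column settings that maximize a chosen entry of each pair. The symbols for the row and column parameters did not survive in the source, so they are called `row_value` and `col_value` here; treating the first entry as PSNR and the second as SSIM is an assumption based on their value ranges. The helper name `best_cell` is illustrative.

```python
# Parameter settings shared by rows and columns of the grid.
values = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]

# grid[i][j] is the pair reported for row setting values[i], column setting values[j].
grid = [
    [(22.73, 0.62), (23.02, 0.66), (22.51, 0.61), (22.42, 0.59), (22.31, 0.60), (22.11, 0.58)],
    [(22.82, 0.63), (23.09, 0.65), (23.10, 0.63), (22.98, 0.61), (22.87, 0.62), (22.79, 0.60)],
    [(23.23, 0.74), (23.42, 0.75), (23.31, 0.74), (23.22, 0.72), (23.20, 0.71), (23.08, 0.69)],
    [(23.81, 0.78), (23.89, 0.81), (23.13, 0.80), (23.71, 0.77), (23.67, 0.75), (23.54, 0.73)],
    [(24.23, 0.82), (24.96, 0.85), (24.81, 0.83), (24.73, 0.81), (24.42, 0.79), (24.05, 0.80)],
    [(24.11, 0.80), (24.67, 0.81), (24.45, 0.80), (24.36, 0.75), (24.13, 0.76), (24.08, 0.73)],
]


def best_cell(grid, values, entry=0):
    """Return (row_value, col_value, pair) maximizing pair[entry] over the grid."""
    cells = [(i, j) for i in range(len(grid)) for j in range(len(grid[i]))]
    i, j = max(cells, key=lambda ij: grid[ij[0]][ij[1]][entry])
    return values[i], values[j], grid[i][j]


print(best_cell(grid, values, entry=0))
print(best_cell(grid, values, entry=1))
```

For the values in this table, both entries peak in the same cell: row setting 0.8 and column setting 0.2.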

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhao, S.; Mei, X.; Ye, X.; Guo, S. MSFE-UIENet: A Multi-Scale Feature Extraction Network for Marine Underwater Image Enhancement. J. Mar. Sci. Eng. 2024, 12, 1472. https://doi.org/10.3390/jmse12091472
