Relative Localization and Dynamic Tracking of Underwater Robots Based on 3D-AprilTag

Abstract

1. Introduction

- A 3D AprilTag marker built on a moving cubic structure is proposed to overcome the viewing-angle limitations of traditional single-plane markers in dynamic localization. Single-plane markers suffer recognition failures or interrupted pose estimates when the robot’s viewpoint changes. The cubic structure instead distributes multiple AprilTags across adjacent faces, which provides continuous visual references from different angles, improves observability, and supports 6-DOF localization of dynamic underwater targets.

- A fusion-based observation-switching Kalman filter model is introduced to keep pose transitions smooth and tracking continuous during rapid tag switching or occlusion. The model dynamically adjusts the fusion weights according to observation distance, viewing angle, and detection confidence, which resolves the pose inconsistencies that arise when switching between tag faces. It improves the continuity and robustness of pose estimation in dynamic environments with viewpoint changes and partial occlusion.

- A reconfigurable relative localization framework is developed and shown to be practical for dynamic targets carrying underwater 3D tags, laying the foundation for future multi-robot collaborative perception and control. The system performs autonomous tracking and navigation without relying on global maps or external base stations, and it maintains stable pose estimation and trajectory tracking under dynamic interactions and tag switching. Its scalability and adaptability to different tasks make it a suitable basis for low-dependency, high-autonomy underwater multi-robot collaborative systems.

2. Three-Dimensional AprilTag-Based Relative Localization Framework

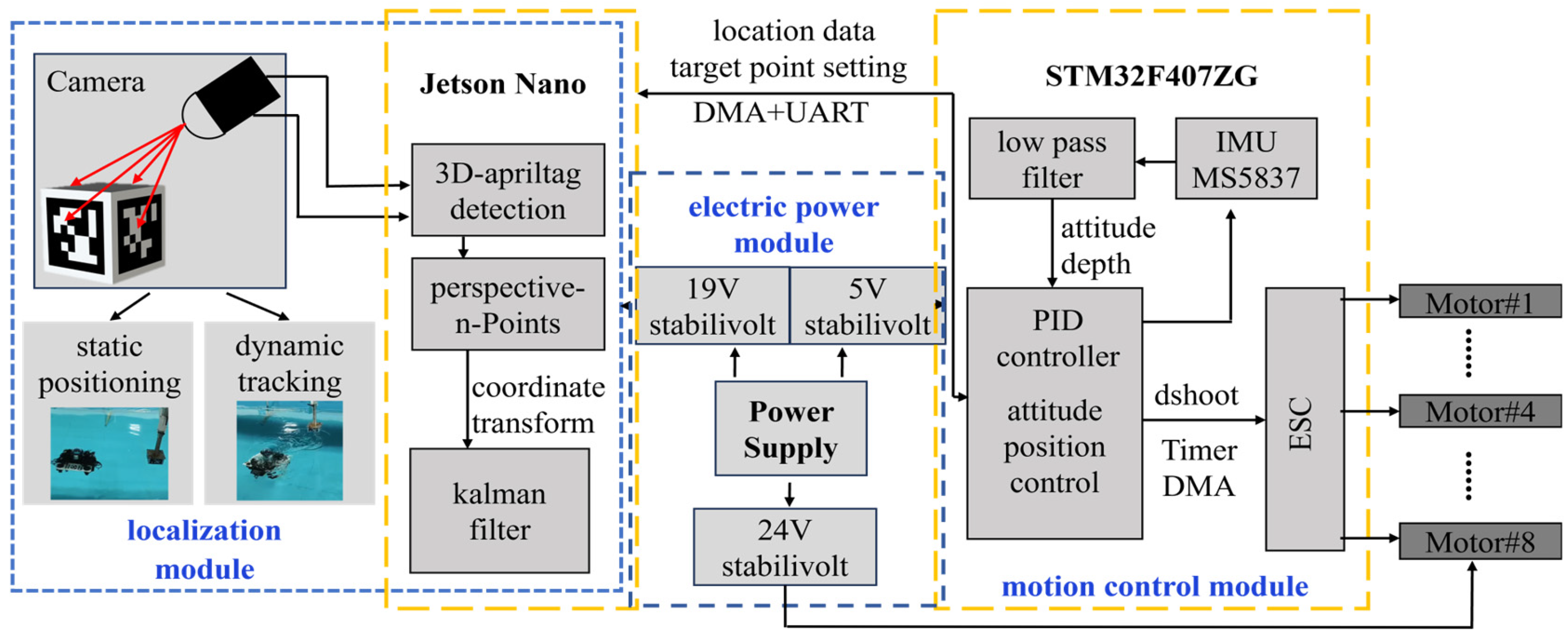

2.1. Underwater Robot Platform

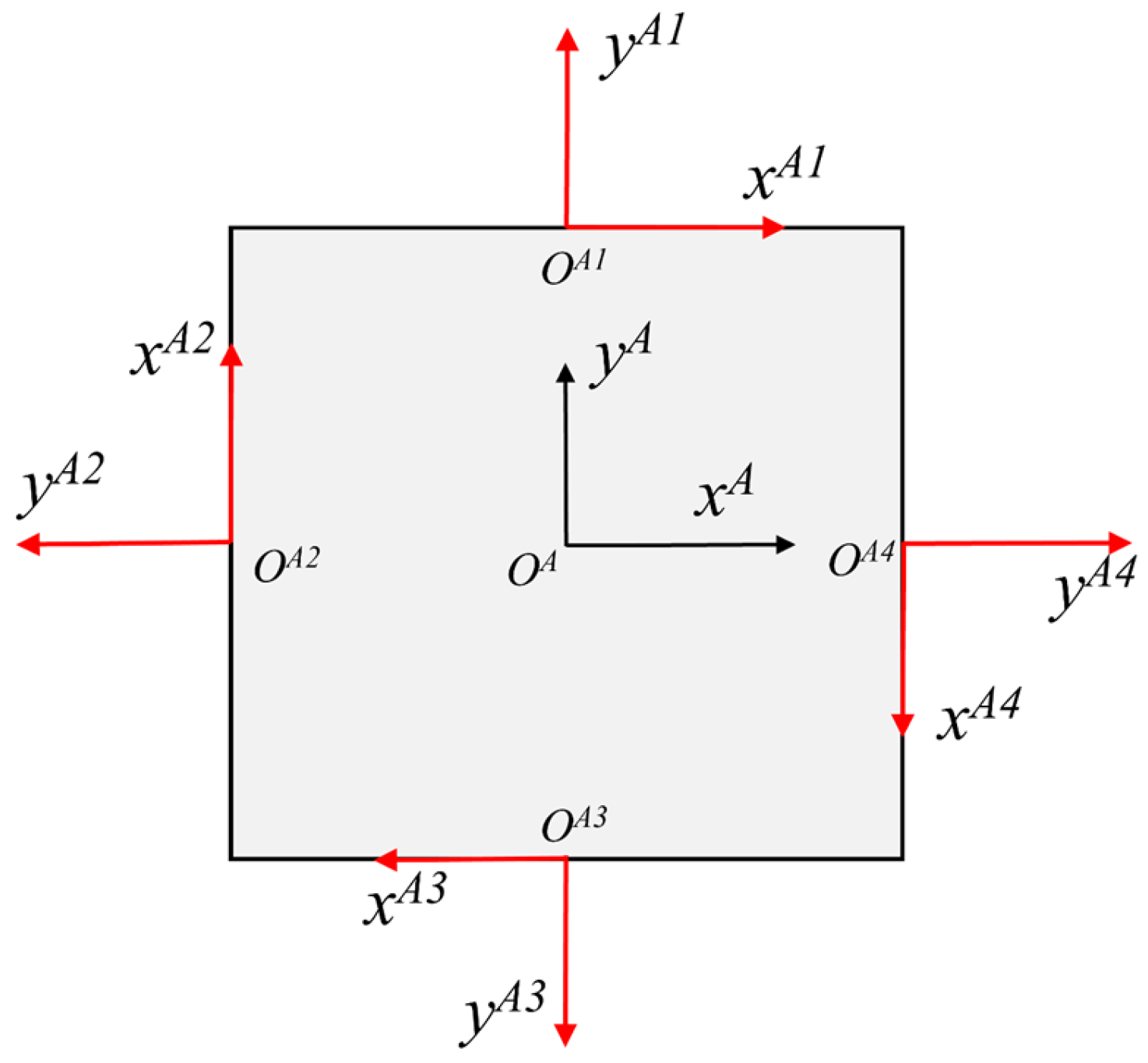

2.2. Target Markers

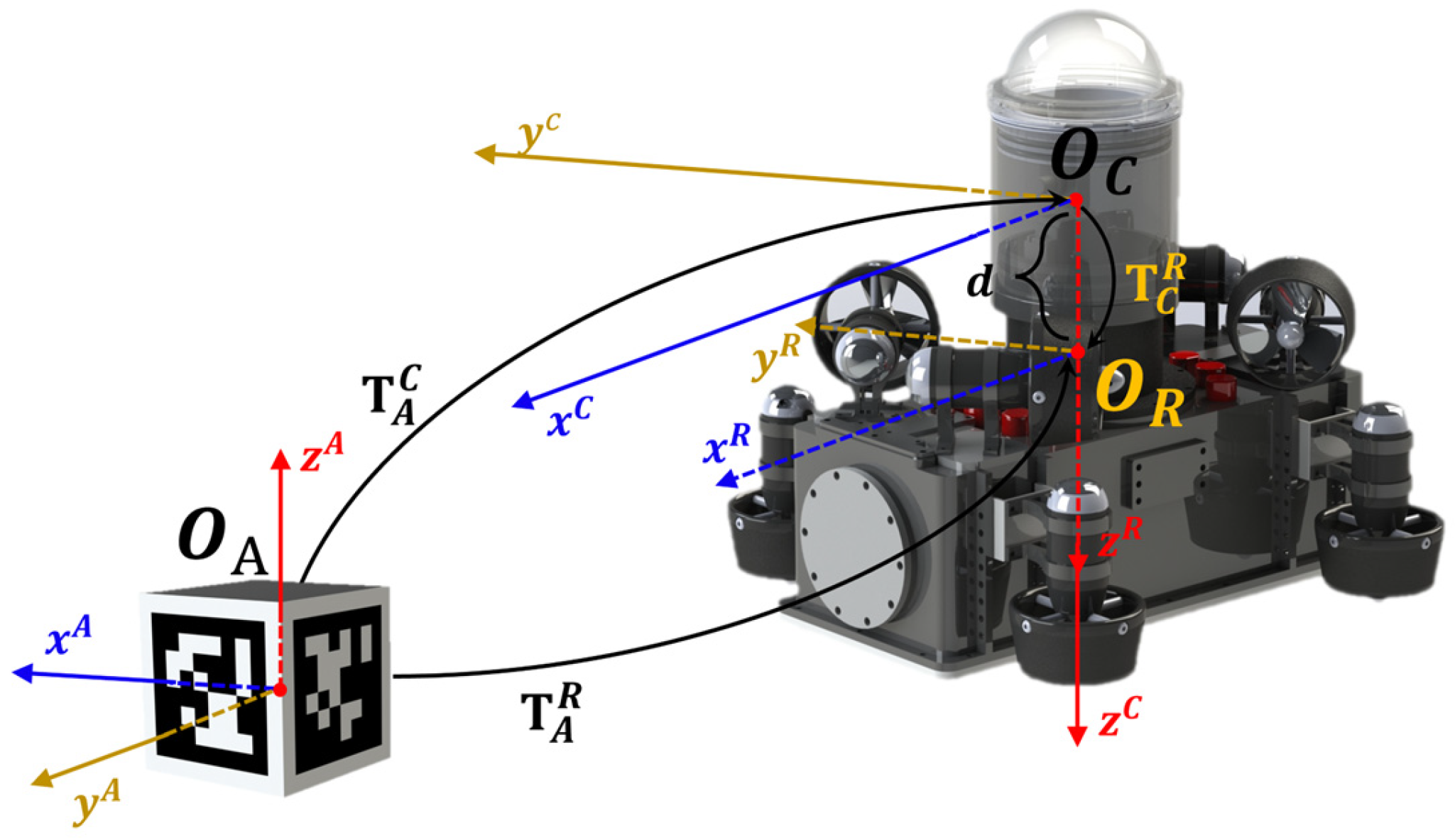

2.3. Coordinate Transformation

2.3.1. Transformation from Camera to Robot Coordinate System

2.3.2. Transformation from 3D-AprilTag to Camera Coordinate System

2.3.3. Transformation from AprilTag to Robot Coordinate System
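The chain named in Sections 2.3.1 to 2.3.3 (tag to camera, camera to robot, and therefore tag to robot) can be illustrated with 4 × 4 homogeneous transforms. The following is a minimal sketch, not the authors' implementation: the helper names, the camera mounting offset, and the tag pose are all hypothetical values chosen for illustration.

```python
import numpy as np


def make_transform(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T


def rot_z(angle_rad):
    """Rotation matrix for a rotation of angle_rad about the z-axis."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


# Hypothetical extrinsics: camera mounted 10 cm ahead of the robot origin,
# with its axes aligned to the robot frame.
T_robot_cam = make_transform(np.eye(3), [0.10, 0.0, 0.0])

# Hypothetical detection: tag 65 cm along the camera z-axis, yawed by 90 degrees.
T_cam_tag = make_transform(rot_z(np.pi / 2), [0.0, 0.0, 0.65])

# Tag pose in the robot frame is the product of the two transforms.
T_robot_tag = T_robot_cam @ T_cam_tag
tag_origin_in_robot = T_robot_tag[:3, 3]

# The inverse gives the robot pose as seen from the tag.
T_tag_robot = np.linalg.inv(T_robot_tag)
```

Multiplying in this order keeps the frame subscripts adjacent (robot←camera, camera←tag), which makes frame mix-ups easy to spot.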

2.4. Relative Pose Estimation

2.5. Integrated Observation-Switching Filter Model

- Distance-based weighting. The estimation reliability inversely correlates with the relative distance between the underwater robot and the AprilTag marker. Closer proximity yields higher confidence in pose observations. This relationship is formulated as follows:

- View-angle-based weighting. The observation reliability positively correlates with the alignment between the tag’s surface normal vector and the camera’s optical axis. A smaller angular deviation indicates higher geometric consistency for pose estimation. This dependency is modeled as follows:

- Detection confidence weighting. This weighting factor is derived from the intrinsic confidence metric of the AprilTag detector, which quantifies how certain the decoding of the fiducial marker is. The confidence combines the number of error-corrected bits with the decoding score: the error-correction reliability is 1/(1 + number of corrected bits), so fewer corrected bits indicate a more stable result, and this value is multiplied by the decoding score expressed as a percentage (higher scores indicate better matching quality). The final value approaches 1 as the confidence increases, and detections with a confidence below 0.3 are typically discarded as low quality. The confidence value for the i-th tag is incorporated as follows:
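The three weighting factors described above can be sketched in a few lines. Only the confidence rule (1/(1 + corrected bits) times the decoding score, with a 0.3 cutoff) follows the text. The distance and view-angle forms below, the reference distance `d_ref`, and the dictionary keys are assumptions made for illustration, since they are not the paper's equations.

```python
import math

CONF_THRESHOLD = 0.3  # from the text: lower-confidence detections are discarded


def detection_confidence(corrected_bits, decode_score_percent):
    """Confidence = 1 / (1 + corrected bits) * decoding score as a fraction."""
    return (1.0 / (1.0 + corrected_bits)) * (decode_score_percent / 100.0)


def fusion_weights(detections, d_ref=1.0):
    """Return normalized weights, one per detection (0.0 for discarded ones).

    Each detection is a dict with hypothetical keys:
    'distance' (m), 'angle' (rad between tag normal and optical axis),
    'corrected_bits', and 'score' (percent).
    """
    raw = []
    for det in detections:
        conf = detection_confidence(det["corrected_bits"], det["score"])
        if conf < CONF_THRESHOLD:
            raw.append(0.0)
            continue
        w_dist = 1.0 / (1.0 + det["distance"] / d_ref)  # assumed: decays with distance
        w_angle = max(0.0, math.cos(det["angle"]))      # assumed: best when face-on
        raw.append(w_dist * w_angle * conf)
    total = sum(raw)
    return [w / total for w in raw] if total > 0 else raw


detections = [
    {"distance": 0.65, "angle": 0.2, "corrected_bits": 0, "score": 90},
    {"distance": 0.65, "angle": 1.2, "corrected_bits": 2, "score": 60},
]
weights = fusion_weights(detections)
```

In this example the second face has a confidence of 0.2, so it is dropped and the first face receives the full weight.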

1. State prediction phase

2. Observation fusion phase

3. State update phase

| Algorithm 1. Weighted fusion Kalman filter with full annotations |
|---|
| Input, constants, output, and initialization |
| Lines 1–23: outer loop |
| Lines 2–4: Predict Step |
| Lines 5–18: Observation Fusion, with a loop over index i (lines 7–12) followed by a second loop (lines 14–18) |
| Lines 19–22: Update Step |
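The predict, fuse, and update structure of Algorithm 1 can be illustrated with a small linear Kalman filter. This is a sketch under stated assumptions rather than the authors' algorithm: it assumes a constant-velocity state (position and velocity), position-only observations, fixed example weights, and hypothetical noise values, and it fuses the per-tag observations by a normalized weighted average before a standard Kalman update.

```python
import numpy as np


def predict(x, P, F, Q):
    """Propagate the state and covariance one step with the motion model."""
    return F @ x, F @ P @ F.T + Q


def fuse(observations, weights):
    """Weighted average of per-tag position observations (weights normalized)."""
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    return w @ np.asarray(observations, dtype=float)


def update(x, P, z, H, R):
    """Standard Kalman update with the fused observation z."""
    y = z - H @ x                      # innovation
    S = H @ P @ H.T + R                # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)     # Kalman gain
    return x + K @ y, (np.eye(len(x)) - K @ H) @ P


dt = 0.1                                       # assumed time step (s)
F = np.eye(6)
F[:3, 3:] = dt * np.eye(3)                     # constant-velocity model (assumed)
H = np.hstack([np.eye(3), np.zeros((3, 3))])   # only position is observed
Q = 1e-4 * np.eye(6)                           # hypothetical process noise
R = 1e-2 * np.eye(3)                           # hypothetical measurement noise

x, P = np.zeros(6), np.eye(6)

# Two tag faces report slightly different positions of the same target (m).
obs = [[0.10, 0.65, 0.00], [0.12, 0.66, -0.01]]
weights = [0.8, 0.2]                           # e.g. from distance/angle/confidence

for _ in range(20):
    x, P = predict(x, P, F, Q)
    z = fuse(obs, weights)
    x, P = update(x, P, z, H, R)
```

Fusing before the update keeps the filter dimension fixed regardless of how many faces are visible, so a face appearing or disappearing changes only the weights and not the filter structure.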

3. Results and Discussion

3.1. Relative Positioning

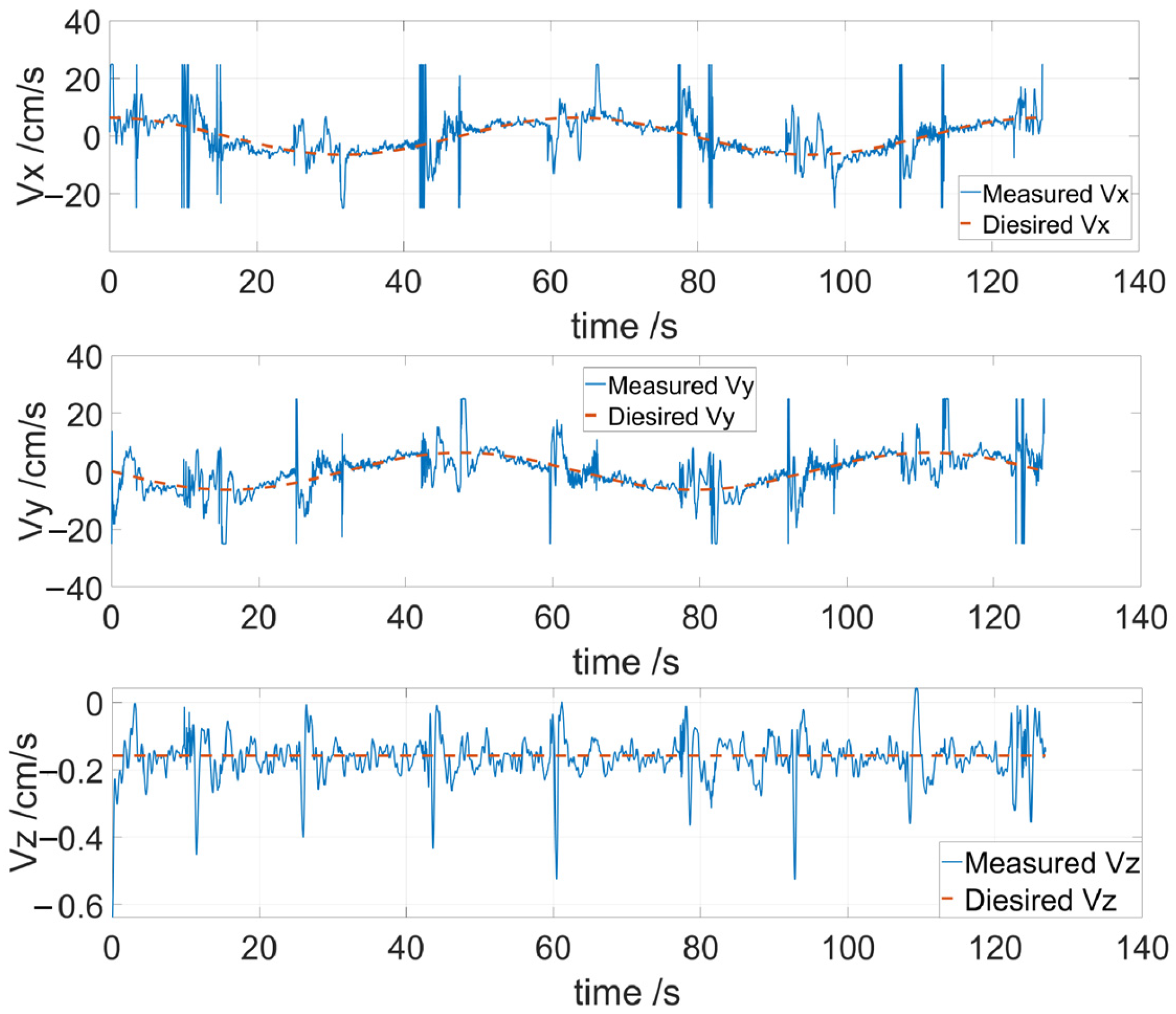

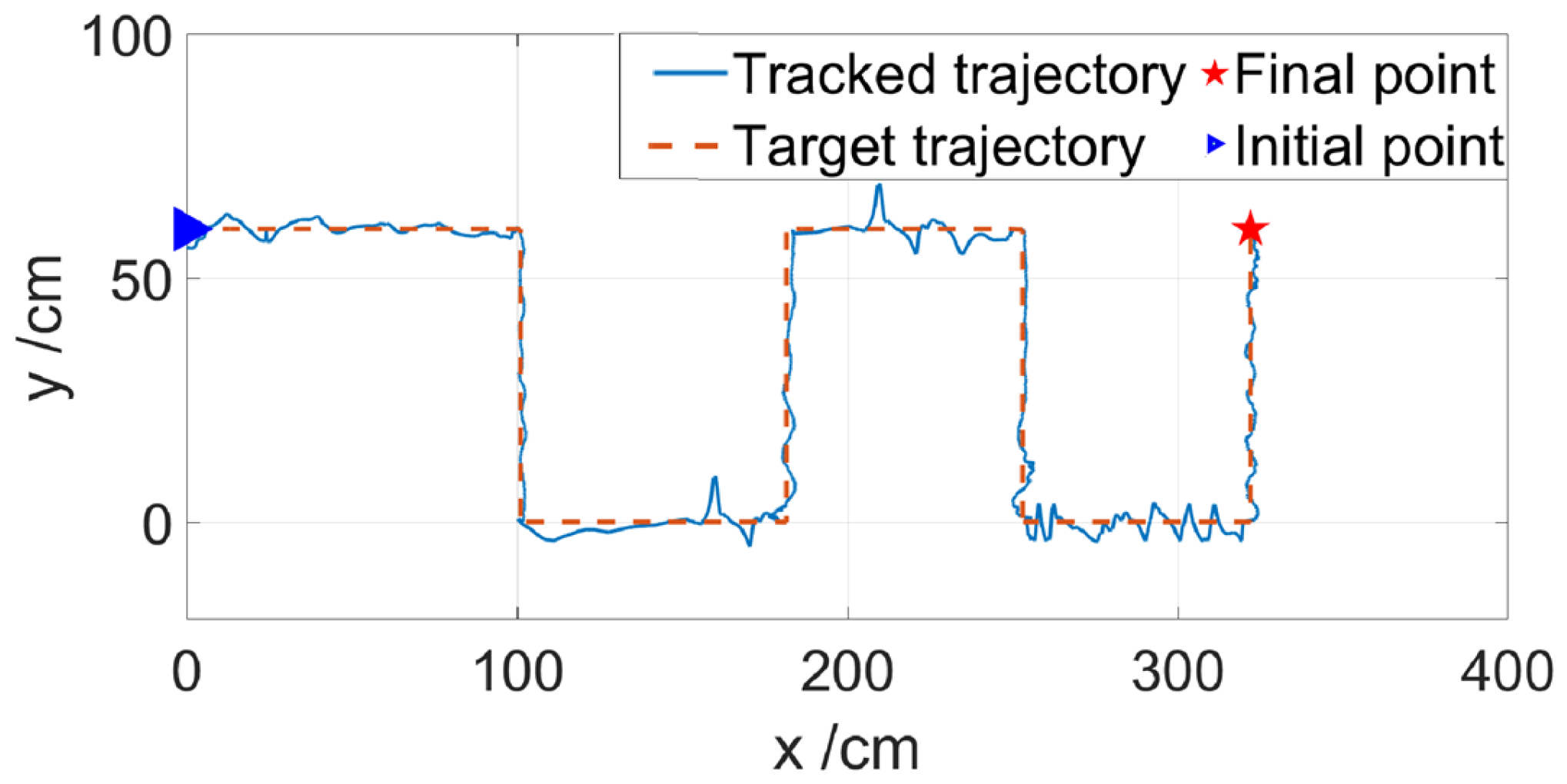

3.2. Dynamic Tracking

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Maximum Distance Along the Axis | AprilTag Size 4 × 4 cm² | 6 × 6 cm² | 8 × 8 cm² | 10 × 10 cm² | 12 × 12 cm² |
|---|---|---|---|---|---|
| xA-axis (cm) | 21 | 35 | 55 | 65 | 66 |
| yA-axis (cm) | 70 | 105 | 140 | 170 | 175 |

| Desired Spatial Point (cm) | Motion Capture Recognition Error: Mean (cm) | Motion Capture Recognition Error: Variance (cm²) |
|---|---|---|
| (0, 65, 0) | (−0.3450, 65.0218, −0.0935) | (0.0209, 0.0207, 0.0720) |
| (10, 65, 0) | (9.7158, 64.9736, −0.1015) | (0.0975, 0.0799, 0.0986) |
| (10, 65, −6) | (10.4109, 65.0583, −6.1303) | (0.1252, 0.1822, 0.2232) |
| (0, 80, 0) | (−0.4439, 80.1758, 0.1892) | (0.1689, 0.1752, 0.2292) |
| (20, 80, 0) | (20.6394, 79.7181, −0.2264) | (0.2205, 0.2251, 0.1183) |
| (20, 80, −10) | (20.8513, 80.7368, −9.9328) | (0.1988, 0.6194, 0.1738) |
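As a reading aid for the table above, the offset between each desired point and the corresponding mean value can be computed directly. This sketch assumes that the three mean columns are the mean measured coordinates in the same axis order as the desired point. The variable names are illustrative and the Euclidean offset is not a metric reported in the table.

```python
import math

# (desired point, mean measured point), both in cm, copied from the table.
rows = [
    ((0, 65, 0), (-0.3450, 65.0218, -0.0935)),
    ((10, 65, 0), (9.7158, 64.9736, -0.1015)),
    ((10, 65, -6), (10.4109, 65.0583, -6.1303)),
    ((0, 80, 0), (-0.4439, 80.1758, 0.1892)),
    ((20, 80, 0), (20.6394, 79.7181, -0.2264)),
    ((20, 80, -10), (20.8513, 80.7368, -9.9328)),
]

# Euclidean distance between the desired and mean measured points, in cm.
offsets = [math.dist(desired, measured) for desired, measured in rows]

for (desired, _), offset in zip(rows, offsets):
    print(f"desired {desired}: mean offset {offset:.3f} cm")
```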

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tang, G.; Yang, T.; Yang, Y.; Zhao, Q.; Xu, M.; Xie, G. Relative Localization and Dynamic Tracking of Underwater Robots Based on 3D-AprilTag. J. Mar. Sci. Eng. 2025, 13, 833. https://doi.org/10.3390/jmse13050833
