Writing a Moral Code: Algorithms for Ethical Reasoning by Humans and Machines

Abstract

1. Introduction

1.1. A Framework for Technical Discussion and Some Basic Definitions

1.2. Key Challenges to Human Ethical Reasoning and Ethical Robot Programming

2. Can We Teach Robots to Be Ethical?

2.1. Asimov’s Three Laws of Robotics

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.
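Read as an algorithm, the Three Laws amount to a strict (lexicographic) priority ordering: a lower law only matters when the higher laws cannot tell two options apart. The Python sketch below illustrates this under deliberately simplified assumptions. Each candidate action is reduced to one yes/no violation flag per law, and the names `Action`, `LAWS`, `violation_key`, and `choose`, as well as the scenario itself, are hypothetical illustrations rather than anything specified by Asimov.

```python
from dataclasses import dataclass
from itertools import permutations


@dataclass(frozen=True)
class Action:
    """Hypothetical candidate action, reduced to one violation flag per law."""
    name: str
    harms_human: bool     # First Law: injures a human, or lets one come to harm by inaction
    disobeys_order: bool  # Second Law: fails to obey an order given by a human
    endangers_self: bool  # Third Law: fails to protect the robot's own existence


# Each law's name mapped to the Action attribute that records a violation of it.
LAWS = {"first": "harms_human", "second": "disobeys_order", "third": "endangers_self"}


def violation_key(action, order):
    """Violation flags listed from highest to lowest priority.

    Python compares tuples lexicographically and False < True, so avoiding a
    higher-priority violation always outweighs anything lower down.
    """
    return tuple(getattr(action, LAWS[law]) for law in order)


def choose(actions, order=("first", "second", "third")):
    """Return the action whose violation tuple is smallest under the given ordering."""
    return min(actions, key=lambda a: violation_key(a, order))


# Hypothetical scenario: a human orders the robot to stay put while another
# human is about to be hurt; intervening disobeys the order and risks the robot.
actions = [
    Action("obey and stand by", harms_human=True, disobeys_order=False, endangers_self=False),
    Action("intervene", harms_human=False, disobeys_order=True, endangers_self=True),
]

if __name__ == "__main__":
    # Try every possible ranking of the three laws and report the resulting choice.
    for order in permutations(LAWS):
        print(" > ".join(order), "->", choose(actions, order).name)
```

In this toy scenario only the two orderings that rank the First Law highest choose to intervene; promoting obedience or self-preservation above harm-avoidance flips the decision. The boolean flags are a simplification, since real harms come in degrees and probabilities that a tuple of yes/no values cannot express.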

2.2. The Need for Prioritization

2.3. Thinking about Harm and Consent

3. Can Robots Teach Us to Be Ethical?

3.1. The Conundrum of No-Win Situations

3.2. Context Matters When Assigning a Value Hierarchy

3.3. Asimov’s Laws and Autonomous Killing Machines

3.4. The Slippery Slope of Robotic Free Will

3.5. Human Limitations Color Our Perception of Acceptable Decisions

- Spooner does not direct his anger towards the truck driver, even though the driver was at fault.

- A self-driving truck would have prevented the accident from occurring in the first place.

- Both Spooner and the girl would have died without the intervention of the robot.

4. Concrete Applications and Future Directions

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Allen, Colin, and Wendell Wallach. 2015. Moral Machines: Teaching Robots Right from Wrong. Oxford: Oxford University Press.

- Anderson, Susan Leigh. 2016. Asimov’s “three laws of robotics” and machine metaethics. In Science Fiction and Philosophy: From Time Travel to Superintelligence. Edited by Susan Schneider. Malden: Wiley-Blackwell, pp. 351–73.

- Asimov, Isaac. 1950. I, Robot. New York: Gnome Press.

- Asimov, Isaac. 1953. Sally. Fantastic 2 (May–June): 34–50, 162.

- Bhaumik, Arkapravo. 2018. From AI to Robotics: Mobile, Social, and Sentient Robots. Boca Raton: CRC Press.

- Bonnefon, Jean-François, Azim Shariff, and Iyad Rahwan. 2016. The social dilemma of autonomous vehicles. Science 352: 1573–76.

- Bringsjord, Selmer, and Joshua Taylor. 2011. The Divine-Command Approach to Robot Ethics. In Robot Ethics: The Ethical and Social Implications of Robotics. Edited by Keith Abney, George A. Bekey, Ronald C. Arkin and Patrick Lin. Cambridge: MIT Press, pp. 85–108.

- Christian, Brian, and Tom Griffiths. 2016. Algorithms to Live By: The Computer Science of Human Decisions. New York: Henry Holt & Co.

- Collins, Nina L. 2014. Jesus, the Sabbath and the Jewish Debate: Healing on the Sabbath in the 1st and 2nd Centuries CE. London: Bloomsbury T & T Clark.

- Contissa, Giuseppe, Francesca Lagioia, and Giovanni Sartor. 2017. The Ethical Knob: ethically-customisable automated vehicles and the law. Artificial Intelligence and Law 25: 365–78.

- Dukes, Hunter B. 2015. The Binding of Abraham: Inverting the Akedah in Fail-Safe and WarGames. Journal of Religion & Film 19: 37.

- Edwards, T. L., K. Xue, H. C. Meenink, M. J. Beelen, G. J. Naus, M. P. Simunovic, M. Latasiewicz, A. D. Farmery, M. D. de Smet, and R. E. MacLaren. 2018. First-in-human study of the safety and viability of intraocular robotic surgery. Nature Biomedical Engineering.

- Eubanks, Virginia. 2015. Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor. New York: St. Martin’s Press.

- Fleetwood, Janet. 2017. Public Health, Ethics, and Autonomous Vehicles. American Journal of Public Health 107: 532–37.

- Gogoll, Jan, and Julian Müller. 2017. Autonomous Cars: In Favor of a Mandatory Ethics Setting. Science & Engineering Ethics 23: 681–700.

- Hampton, Gregory Jerome. 2015. Imagining Slaves and Robots in Literature, Film, and Popular Culture: Reinventing Yesterday’s Slave with Tomorrow’s Robot. Lanham: Lexington Books.

- Himmelreich, Johannes. 2018. Never Mind the Trolley: The Ethics of Autonomous Vehicles in Mundane Situations. Ethical Theory and Moral Practice.

- Holstein, Tobias. 2017. The Misconception of Ethical Dilemmas in Self-Driving Cars. Paper presented at the IS4SI 2017 Summit Digitalisation for a Sustainable Society, Gothenburg, Sweden, June 12–16; vol. 1, no. 3, p. 174.

- Holstein, Tobias, and Gordana Dodig-Crnkovic. 2018. Avoiding the Intrinsic Unfairness of the Trolley Problem. In Proceedings of the International Workshop on Software Fairness. New York: ACM, pp. 32–37.

- Keiper, Adam, and Ari N. Schulman. 2011. The Problem with ‘Friendly’ Artificial Intelligence. The New Atlantis 32 (Summer): 80–89. Available online: https://www.thenewatlantis.com/publications/the-problem-with-friendly-artificial-intelligence (accessed on 8 August 2018).

- Liu, Hin-Yan. 2018. Three Types of Structural Discrimination Introduced by Autonomous Vehicles. UC Davis Law Review Online 51: 149–80.

- Markoff, John. 2015. Machines of Loving Grace: The Quest for Common Ground Between Humans and Robots. New York: HarperCollins.

- McGrath, James F. 2011. Robots, Rights, and Religion. In Religion and Science Fiction. Edited by James F. McGrath. Eugene: Pickwick, pp. 118–53.

- McGrath, James F. 2016. Theology and Science Fiction. Eugene: Cascade.

- Noble, Safiya Umoja. 2018. Algorithms of Oppression: How Search Engines Reinforce Racism. New York: New York University Press.

- Nyholm, Sven, and Jilles Smids. 2016. The Ethics of Accident-Algorithms for Self-Driving Cars. Ethical Theory and Moral Practice 19: 1275–89.

- O’Neil, Cathy. 2016. Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy. New York: Crown.

- Pagallo, Ugo. 2013. The Laws of Robots: Crimes, Contracts, and Torts. Dordrecht: Springer.

- Renda, Andrea. 2018. Ethics, algorithms and self-driving cars—A CSI of the ‘trolley problem’. CEPS Policy Insights 2: 1–17.

- Repschinski, Boris. 2000. The Controversy Stories in the Gospel of Matthew: Their Redaction, Form and Relevance for the Relationship between the Matthean Community and Formative Judaism. Göttingen: Vandenhoeck & Ruprecht.

- Stemwedel, Janet D. 2015. The Philosophy of Star Trek: The Kobayashi Maru, No-Win Scenarios, And Ethical Leadership. Forbes, August 23. Available online: https://www.forbes.com/sites/janetstemwedel/2015/08/23/the-philosophy-of-star-trek-the-kobayashi-maru-no-win-scenarios-and-ethical-leadership/#19aa76675f48 (accessed on 8 August 2018).

- Turing, Alan M. 1950. Computing Machinery and Intelligence. Mind 59: 433–60.

- Vaccaro, Kristen, Dylan Huang, Motahhare Eslami, Christian Sandvig, Kevin Hamilton, and Karrie G. Karahalios. 2018. The Illusion of Control: Placebo Effects of Control Settings. Paper presented at the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, April 21–26.

- Wiseman, Yair, and Ilan Grinberg. 2018. The Trolley Problem Version of Autonomous Vehicles. The Open Transportation Journal 12: 105–13.

- Yong, Huang. 2017. Why an Upright Son Does not Disclose His Father Stealing a Sheep: A Neglected Aspect of the Confucian Conception of Filial Piety. Asian Studies 5: 15–45.

- Zarkadakis, George. 2016. In Our Own Image: Savior or Destroyer? The History and Future of Artificial Intelligence. New York: Pegasus Books.

- Zhu, Rui. 2002. What If the Father Commits a Crime? Journal of the History of Ideas 63: 1–17.

| 1 | Strictly speaking, our definitions prevent a robot from discovering certain types of solutions in a large state space, namely those that necessitate a change to the robot’s underlying values. This limitation is intentional and realistic; furthermore, it defers the question of what rights we should afford a self-aware robot. That question is beyond the scope of this article, although we mention it in several places where it intersects directly with our main focus. |

| 2 | In theory, a driverless car may employ machine learning to improve its results over time, learning through trial and error both “offline,” from a training set of prior events, and “online,” during actual operation. Such a car could use any number of metrics to determine success, whether a survey of human reactions to the outcome or the judgement of some group of human agents (the vehicle’s owner, the executives of the company that developed the machine, or human society at large). |

| 3 | For example, an online search that included the string “Java” might return results about an island, coffee, a programming language, or a color if the context were not clear. |

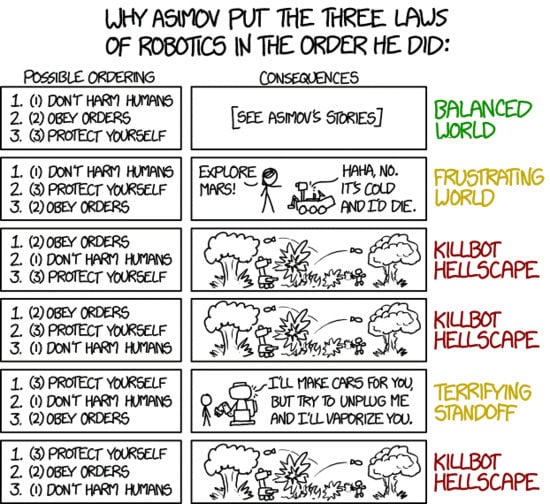

| 4 | The forced surgery problem involves an exception to the requirement to prevent harm, while the military example provides an exception to the prohibition on causing harm, but the point, and the basic challenge for both ethicists and computer programmers, is much the same. There is nevertheless a meaningful difference between these examples: the first resolves a conflict only within the First Law of Robotics, whereas the second involves a resolution between the First and Second Laws, in a way that does not cause a “killbot hellscape” of the sort depicted in Figure 3. |

| 5 | A robot-centered retelling of the Akedah (the binding of Isaac) could explore this topic in interesting ways; see (Bringsjord and Taylor 2011, p. 104), as well as (Dukes 2015), who draws a different sort of connection between the patriarchal narrative in Genesis and an autonomous weapon system. |

| 6 | Forms of this principle are also articulated in later Judaism, in particular in the corpus of rabbinic literature. The principle is often associated with the phrase pikuach nefesh, understood to denote the need to violate laws in order to save a life (Collins 2014, pp. 244–67). |

| 7 | See the study of how placebo options in Facebook and Kickstarter increase the sales of non-optimal purchases by making them seem relatively better, apparently undermining human quantitative and logical reasoning (Vaccaro et al. 2018). |

| 8 | There is, so to speak, a third “challenge”: some tasks are mathematically impossible (provably so), at least in our current model of computation. Hence, no amount of design and implementation could ever produce some of the technology imagined in science fiction. |
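The “Java” example in note 3 can be illustrated with a simplified, Lesk-style overlap count, a standard word-sense disambiguation heuristic swapped in here purely for illustration: each candidate sense gets a set of signature words, and the sense sharing the most words with the query wins. The sense inventory and the `disambiguate` function are hypothetical; a practical search engine would learn such associations from data rather than hard-coding them. When no sense wins outright, the sketch returns `None`, matching the note’s point that the string alone is ambiguous when context is unclear.

```python
import re

# Hypothetical, hand-written signature words for each sense of "Java".
SENSES = {
    "island": {"indonesia", "jakarta", "island", "volcano", "travel"},
    "coffee": {"coffee", "cup", "roast", "espresso", "brew", "beans"},
    "programming language": {"code", "compiler", "jvm", "class", "program", "programming"},
    "color": {"color", "colour", "paint", "brown", "shade"},
}


def disambiguate(query):
    """Return the sense whose signature overlaps most with the query's words,
    or None when no sense wins outright (the context is not clear)."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    scores = {sense: len(words & signature) for sense, signature in SENSES.items()}
    best = max(scores.values())
    winners = [sense for sense, score in scores.items() if score == best]
    if best == 0 or len(winners) > 1:
        return None  # no contextual evidence, or a tie between senses
    return winners[0]
```

A bare query such as `disambiguate("Java")` yields `None`, while adding words like “code” and “JVM” tips the count towards the programming language.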

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


McGrath, J.; Gupta, A. Writing a Moral Code: Algorithms for Ethical Reasoning by Humans and Machines. Religions 2018, 9, 240. https://doi.org/10.3390/rel9080240
