2.2. Sentiment Analysis for Product Design

The formulation of closed-ended survey questions limits the usefulness of the insight in the responses. Primarily, the process assumes the customers care about the features mentioned in the survey [27]. There is more value in collecting customer feedback in an unguided manner. Thus, more open-ended questions are preferred since they encourage more sincere user input quickly and efficiently. This requirement motivates the heightened interest in analyzing data sources, such as social media and online reviews. Other motivating factors are the benefits gained from the faster and less expensive data acquisition processes these online data sources present. Researchers and industry experts need to surmount the challenges of analyzing unstructured and opinionated customer feedback data to access these benefits.

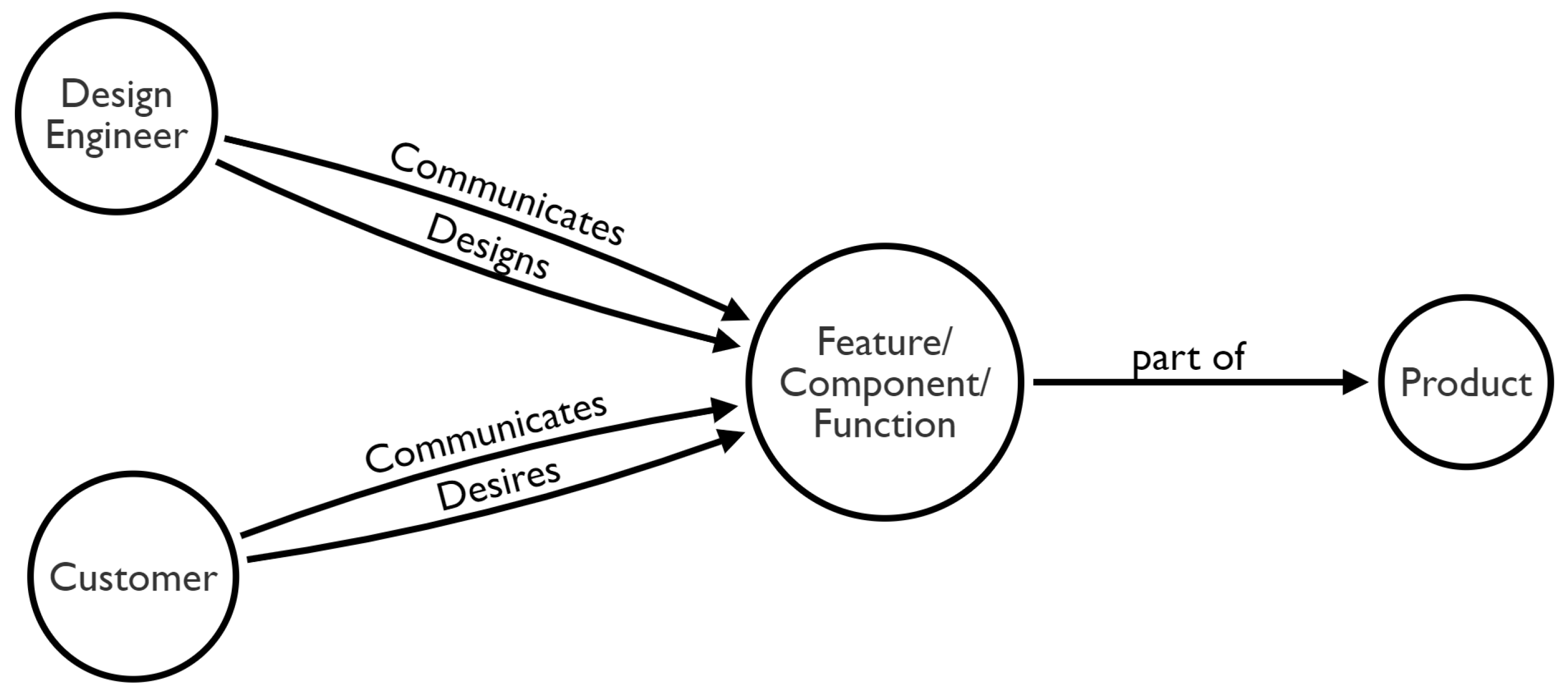

The literature on aspect-based sentiment analysis is quite extensive. This section focuses on studies that have proposed solutions for extracting insights from unstructured textual customer feedback to support the product or service design process. A fundamental task in enhancing product design is to extract user requirements from a corpus of customer statements. The general steps include applying various techniques to perform product aspect extraction, sentiment classification, and opinion summarization. Some research has focused on extracting user requirements to support the early stages of product design [28]. The frequency of features with positive sentiments is used to estimate and weigh the significance of customer requirements.
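The frequency-based weighting mentioned above can be illustrated with a minimal sketch. The feature names, the polarity labels, and the choice to normalize by the total number of positive mentions are assumptions made for illustration; they are not taken from [28].

```python
from collections import Counter

# Hypothetical (feature, polarity) pairs, as an aspect-level
# sentiment step might emit them.
mentions = [
    ("battery", "positive"),
    ("battery", "positive"),
    ("screen", "positive"),
    ("battery", "negative"),
    ("camera", "positive"),
]


def requirement_weights(pairs):
    """Weight each feature by its share of all positive mentions."""
    positive = Counter(feature for feature, polarity in pairs
                       if polarity == "positive")
    total = sum(positive.values())
    if total == 0:
        return {}
    return {feature: count / total for feature, count in positive.items()}


weights = requirement_weights(mentions)
```

Under this assumed normalization, the weights sum to one, so they can be read as the relative significance of each requirement.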

A semi-automated systematic approach to support designers in the decision-making process was proposed in [29]. First, the authors created a dataset by crawling 264 customer reviews from the web. Second, they subjected the product reviews to Document-Level Sentiment Analysis (DLSA). The third processing step involved a round of Aspect-Based Sentiment Analysis (ABSA). A domain-specific lexicon was used with a user-defined dictionary for the ABSA task. The ABSA operator feeds on a generic opinion lexicon to detect sentiment words. Furthermore, the work focused more on finding the cause-effect relationships behind the pros and cons through Correspondence Analysis (CA). It is unclear how the solution will perform over a larger corpus of several thousand reviews. The results of the data-driven CA require further intervention by the design engineer. The proposed scheme does not give product designers a way to query patterns of their choice. Furthermore, they have no way of deciding the level of granularity for performing sentiment analysis.
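The difference between the document-level and aspect-level steps can be sketched as follows. This is a simplified illustration, not the pipeline of [29]: the two lexicons, the token window, and the scoring rule are all hypothetical.

```python
import re

# Hypothetical lexicons; the study used a generic opinion lexicon with a
# domain-specific lexicon and a user-defined dictionary.
OPINION_LEXICON = {"great": 1, "good": 1, "sharp": 1,
                   "poor": -1, "slow": -1, "weak": -1}
ASPECT_TERMS = {"battery": "battery", "screen": "display",
                "display": "display", "camera": "camera"}


def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())


def document_sentiment(review):
    """Document-level score: sum of opinion-word polarities."""
    return sum(OPINION_LEXICON.get(tok, 0) for tok in tokenize(review))


def aspect_sentiments(review, window=3):
    """Aspect-level scores: opinion words near an aspect term in a sentence."""
    scores = {}
    for sentence in re.split(r"[.!?]+", review):
        tokens = tokenize(sentence)
        for i, tok in enumerate(tokens):
            aspect = ASPECT_TERMS.get(tok)
            if aspect is None:
                continue
            nearby = tokens[max(0, i - window): i + window + 1]
            polarity = sum(OPINION_LEXICON.get(t, 0) for t in nearby)
            scores[aspect] = scores.get(aspect, 0) + polarity
    return scores


review = "The screen is sharp. The battery is weak."
doc_score = document_sentiment(review)
aspect_scores = aspect_sentiments(review)
```

In this toy review, the document-level score is neutral because the two opinions cancel out, whereas the aspect-level scores keep the positive opinion on the display separate from the negative opinion on the battery.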

The work in [15] deals with revealing new insights to aid product design through a combination of online product reviews, design theory methods, and data analytics. It uses a statistical approach for analyzing product reviews. The study extracts feature-sentiment pairs after a trained model predicts sentence sentiments. The authors identify correlations for feature-sentiment pairs on the assumption that the small size of sentence arrays gives better accuracy on average. In addition, the framework used the frequency of distinct feature pairs as a sign of their significance. However, a simple frequency count in information retrieval is not a good indicator of relevance or significance in large corpora. Overall, the framework performed the defined task without giving the designer enough control over the direction of data processing. Furthermore, it lacked variability in the analytics possible. A designer using this scheme has no way of probing further by asking questions or querying along multiple dimensions. The flexibility required here is akin to that offered by traditional surveys and focus groups. The authors limited the analysis to feature-sentiment pairs and touched little on the product aspect classes.

Although a product can be enhanced by improving its robust features, other works take different approaches. For instance, studies such as that in [30] use approaches such as topic modeling and sentiment analysis to reveal opportunities (new features) for design improvements. In contrast, other works such as that of [31] focus on finding obsolete functions of products to improve the design. The research in [32] provides information about the weaknesses of a product. It proposed an opinion-aware analytical framework that uses a combination of sentiment analysis and network analysis to detect product weakness. It takes into consideration both comparative and non-comparative opinions. The work significantly leverages the comparative statements discussing two products to create a network where vertices are of the same type (products) and sentiment strength serves as weights for edges. The PageRank algorithm is used to compute the authority of vertices. It relies mainly on the content of the reviews. Although it is related to product design, the results are more suitable for customers than designers. It provides limited options for engineers’ input and intelligent querying. The product improvement strategy in [33] relies on the combination of the conjoint analysis model and the traditional Kano method. It consumes each review as a stimulus perceived by the customer. It takes the sentiment of the text as the consumer’s utility. Three preference models (i.e., vector, ideal-point, and part-worth function models) are used in the conjoint analysis. The proposed attribute or product aspect identification and sentiment analysis methods do not incorporate the product research plan of the design expert. They depend mainly on the term frequencies and their inter-semantic relationships as Latent Dirichlet Allocation and PageRank inputs. Moreover, extra effort from design experts was then used to scale down the discovered terms from 4105 to 1123. For design domain experts, it seems counterintuitive to visit the review corpora first for insight into product aspects or their keywords/synonyms. Their work also relied on the naïve summarization of simple tallies. This approach does not lend itself to expressive querying to glean insight into relationships between subcomponents of configurable electronic devices.
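The product network described for [32], with products as vertices and sentiment strength as edge weights, can be sketched with a plain power-iteration PageRank. This is a generic weighted PageRank and not the authors' implementation. The edge direction (from the less-preferred product to the preferred one), the damping factor, and the example data are assumptions.

```python
def weighted_pagerank(edges, damping=0.85, iterations=100):
    """Power iteration for PageRank on a weighted directed graph.

    edges maps (source, target) to a positive weight.
    """
    nodes = sorted({node for edge in edges for node in edge})
    out_weight = {node: 0.0 for node in nodes}
    for (src, _), weight in edges.items():
        out_weight[src] += weight
    rank = {node: 1.0 / len(nodes) for node in nodes}
    for _ in range(iterations):
        # Rank held by nodes without outgoing edges is spread evenly.
        dangling = sum(rank[n] for n in nodes if out_weight[n] == 0.0)
        base = (1.0 - damping + damping * dangling) / len(nodes)
        new_rank = {node: base for node in nodes}
        for (src, dst), weight in edges.items():
            new_rank[dst] += damping * rank[src] * weight / out_weight[src]
        rank = new_rank
    return rank


# Hypothetical comparative opinions: an edge (b, a) with weight w stands for
# "a is preferred over b" with sentiment strength w (assumed convention).
comparisons = {
    ("phone_b", "phone_a"): 0.9,
    ("phone_c", "phone_a"): 0.6,
    ("phone_c", "phone_b"): 0.4,
}
ranks = weighted_pagerank(comparisons)
```

Under the assumed edge direction, a product that is rarely preferred in comparisons accumulates little authority, so a low rank can be read as a signal of relative weakness.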

A framework was proposed in [34] to track the trends of customer requirements as well as make product comparisons for product design. Their framework employed Part of Speech tagging and WordNet lexicons to extract product features from reviews. The framework used frequent nouns as seed words to identify aspects. The identification of aspects is preceded by stopword removal and stemming. These processing tasks are necessary for the semantic clustering of words that follows. They then used a pre-labeled dataset to train a Naïve Bayes classifier for predicting subjective and objective sentences. They combined the subjective representation of sentences and the WordNet lexicon to build a Naïve Bayes sentiment classifier to predict the sentiment polarities of product features. To analyze the dynamics of customer requirements over time, the authors proposed Kalman filtering [35]. Like the previously discussed literature, this approach relies heavily on pre-labeled datasets. Furthermore, it presents only simple opinion summaries, which are inadequate for complex product design. The study in [36] built a structured preference model centered on sentiment orientation analysis to determine candidate features for improvement. The study constructed a re-design index and a target feature selection model to weigh the feature re-design priority and to identify candidate features in the presence of various factors. The shortlisting of candidate features was implemented while considering the factors of engineering cost, re-design lead time, and technical risk.
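The idea of tracking customer requirements over time with Kalman filtering can be illustrated with a scalar filter. The state model used in [34] is not described here, so the random-walk model, the variance values, and the monthly series below are assumptions chosen only to show the predict and update steps.

```python
def kalman_smooth(observations, process_var=0.01, measurement_var=0.1):
    """Scalar Kalman filter with an assumed random-walk state model."""
    estimate = observations[0]
    error_var = 1.0
    estimates = []
    for z in observations:
        # Predict: the state is assumed to persist, uncertainty grows.
        error_var += process_var
        # Update: blend prediction and observation using the Kalman gain.
        gain = error_var / (error_var + measurement_var)
        estimate += gain * (z - estimate)
        error_var *= 1.0 - gain
        estimates.append(estimate)
    return estimates


# Hypothetical monthly share of positive mentions for one product feature.
monthly_positive_share = [0.62, 0.58, 0.65, 0.40, 0.61, 0.63]
trend = kalman_smooth(monthly_positive_share)
```

With these assumed variances, the one-month dip to 0.40 is damped in the filtered trend rather than followed exactly, which is the behavior that makes such a filter useful for separating a sustained shift in requirements from noise.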

The application of fuzzy logic and theory has been helpful in some research studies for analyzing customer feedback for product design. The work in [37] proposed a method for investigating the preferences of consumers with a focus on product design. The approach combines sentiment analysis and fuzzy set theory, and uses evidential reasoning as an optimization algorithm for analyzing user-generated content. It essentially shortens the time to review the content and summarizes the results according to the domain expert’s prescribed criteria. The criteria are a shortlist of extracted keywords from the user-generated content. Opinion summaries are valuable outputs of methods that process large volumes of opinionated text. However, it is necessary to further analyze the opinion-based interactions between product aspects when working with complex configurable products.

A dynamic evolving neural-fuzzy inference system was proposed and applied to extract customer preferences via opinion mining [38]. Their proposed scheme is an adaptive neuro-fuzzy inference system that processes product attribute values and the mined time series data. Similarly, [39] proposed an approach to dynamically mine user requirements across the categories of user attributes, common attributes, and manufacturer attributes. Attribute weights are assigned by the Term Frequency-Inverse Document Frequency (TF-IDF) method. In [40], the authors presented a fuzzy rough set time series method that combines the results of Google Trends with opinion mining of online reviews to forecast the importance of product attributes.
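The TF-IDF weighting of attributes mentioned for [39] can be sketched as follows. Several TF-IDF variants exist and the one used in that study is not stated here, so the formulas (length-normalized term frequency and an unsmoothed logarithmic inverse document frequency) and the tokenized reviews are assumptions.

```python
import math
from collections import Counter


def tfidf_weights(documents):
    """Return one {term: weight} dict per tokenized document.

    Assumes tf = count / document length and idf = log(N / df).
    """
    n_docs = len(documents)
    doc_freq = Counter(term for doc in documents for term in set(doc))
    weights = []
    for doc in documents:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


# Hypothetical tokenized reviews reduced to attribute terms.
reviews = [
    ["battery", "life", "battery", "charger"],
    ["screen", "battery", "brightness"],
    ["screen", "resolution", "price"],
]
attribute_weights = tfidf_weights(reviews)
```

In the first review, "charger" receives a higher weight than "battery" even though "battery" occurs twice, because "battery" also appears in another review. This is the property that distinguishes TF-IDF from a raw frequency count.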

Information gathered from social media has also helped support New Product Development decision-making in an automotive industry use case [41]. Their suggested design process is assisted by applying a sensemaking approach. The authors used fuzzy logic to mine and measure the variations of customer sentiments in all the stages of new product development to identify sensemaking patterns. The automotive industry currently churns out highly complex and configurable products. The analysis in this proposed approach deals with individual aspects of the products in isolation. Automotive components are interrelated in function, position, or appearance. Therefore, experts gain less insight when they cannot probe user sentiment on specific aspects relative to others. Such an added analytics functionality will be beneficial in cases where the change in one feature may require a justified change in a related feature or component.

Social media platforms have also served as a data source for sentiment analysis for product design improvements. Sentiment analysis has been influential in the analysis of feedback for the design of Product Service Systems (PSS) [42,43]. Ref. [44] focuses on simplifying the information search process for designers by proposing a method to merge and present product design-related insights from social media. The method was applied to the re-design of PSS while considering user feedback from multiple sources. The study proposed an opinion extraction tool with a filtering functionality to enable PSS experts to filter information along several dimensions, such as gender, location, and final product.

The New Product Development (NPD) research domain has also benefited from the analysis of unstructured textual feedback provided by stakeholders. The popularity of product features among social media users was measured through sentiment analysis to aid in decision-making in NPD [45]. However, the proposed approach requires the intervention of domain experts to prune the list of commonly occurring aspects in a manual activity that produces the most common features. These features are then weighted against their frequency rates to measure their association with the proposed features.

Textual customer feedback is also applicable to evaluating user experience in the product design research space. Some researchers adopt metrics such as the rating score, number of stars, or sentiment score of a review to measure a customer’s satisfaction. Adopting such a holistic approach is valid for extracting the overall opinion on a product or comparing the general customer opinions on the design of similar products. However, its effectiveness is limited for supporting design decisions because it does not provide information about particular product aspects. In essence, there is a failure to determine the features customers like and dislike. To resolve these challenges, other researchers take an approach that breaks user experience down into evaluative constructs representing interaction with individual aspects. For instance, the work by [46] offers a way to extract essential elements that enable analysis and understanding of user experience from social media data. Once again, the results are a simple tally of feature sentiments. Their method does not allow related features to be analyzed concurrently and interdependently. A critical task in using opinion mining for product design is summarization and sampling [10]. It is especially in this task that this paper identifies an opportunity for improvement. Both review summarization [47] and hierarchical organization [48,49,50] operate at the document level of granularity. Inherent relationships exist between specific product aspects. These relationships are embedded in the way customers comment about them and how they interface in the product’s design. Therefore, a more granular approach with functionality to analyze this connectivity is needed. A typical pattern is apparent in recent related works where the eventual results of proposed schemes are based on simple opinion summaries. They cannot give the user the flexibility to perform investigations on product reviews along multiple dimensions of inquiry.

The study in [51] investigated and quantified the effects of product attributes on customer satisfaction by applying regression and sentiment analysis. Their regression model was based on customer satisfaction results from the sentiment analysis and product features. However, the regression analysis on customer satisfaction results was carried out at the sentence level. The authors judged the proportion of satisfied customers by the number of positive review sentences. The analysis was not done at the aspect level and hence does not give product designers direct access to what features customers liked. The framework proposed in [52] extracted information from online product reviews and verified its helpfulness to designers. Helpful reviews are classified as positive, while non-helpful reviews are classified as negative. The authors trained a prediction model on helpful/non-helpful reviews from Amazon with a CNN on word2vec embeddings. The framework provides a way to perform sentiment prediction but only at the sentence level. The aspect extraction that followed is disjoint from the sentence sentiments. This makes it challenging to determine customers’ opinions at a fine-grained level.