Deep Learning Pet Identification Using Face and Body

Abstract

1. Introduction

- A full-body animal identification system that matches animals based on both face and body, where the results of each method complement the other;

- Improved species classification using transfer learning;

- The use of facial landmark detection for animal face detection and comparison of different face alignment functions;

- The design of a recommendation system for animal owners who have lost their pets;

- The use of additional information about the lost animal (breed, gender, age, and species) to narrow the search space, speed up the search, and improve accuracy.

2. Related Work

3. Data

- Filtering. After collecting the dataset, irrelevant photos or videos were removed, and only the ones where the whole or partial body of the animals was present were selected for both classification and identification;

- Categorizing. In this stage, cats and dogs were separated, and photos of the same animal were placed together in the same directory. The dataset was then split into training and test sets. For species classification, 17,421 images were used for training and 5,411 for testing;

- Labeling. Each class of animals was labeled. The labels at this stage were just cats and dogs. Each animal was assigned a unique ID, and a directory named after that ID was created for it. The name of the animal, along with extra information including breed, age, and gender, was added to the animal’s profile. We created two CSV files, one for cats and one for dogs. Each CSV file contains information about the animals, including their ID, breed, age, and gender. If a piece of extra information is not provided for an animal, for example, if its age is not available, the respective entry is NaN (not a number), a numeric placeholder for an undefined value;

- Structuring. At this stage, we defined the logic by which the algorithm accesses the dataset. For the identification data, we considered the worst-case scenario, in which only one image of an animal is registered in our database. Although multiple images of an animal can be registered in its profile to increase the chances of finding a match, we registered only one. The image taken of the found animal is called a query image, and there can be multiple query images. In the worst-case scenario, however, each query image is compared with only one registered image per animal, so that we know how the model performs under the least favorable conditions. We used the remaining data for testing;

- Preprocessing. In the data preprocessing stage, normalizing and resizing techniques were applied.
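The profile files described above, and the way their fields can narrow the search, can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the column names, the sample rows, and the helper names `load_profiles` and `filter_candidates` are all hypothetical. In this sketch a missing age is stored as NaN, and a missing text field is stored as `None`, which is a choice of the sketch.

```python
import csv
import io
import math

# Hypothetical profile file; the schema below is an assumption, not the authors' format.
DOGS_CSV = """id,name,breed,age,gender
d001,Rex,Labrador,4,male
d002,Luna,Poodle,,female
d003,Milo,,2,male
"""


def parse_age(raw):
    """Return the age as a float, or NaN when the field is empty."""
    return float(raw) if raw.strip() else math.nan


def load_profiles(text):
    """Read animal profiles; empty text fields become None, empty ages become NaN."""
    profiles = []
    for row in csv.DictReader(io.StringIO(text)):
        profiles.append({
            "id": row["id"],
            "name": row["name"],
            "breed": row["breed"] or None,
            "age": parse_age(row["age"]),
            "gender": row["gender"] or None,
        })
    return profiles


def filter_candidates(profiles, breed=None, gender=None):
    """Keep profiles compatible with what is known about the found animal.

    A missing value in a profile never excludes it, because the attribute
    is simply unknown for that animal.
    """
    def compatible(value, wanted):
        return wanted is None or value is None or value == wanted

    return [p for p in profiles
            if compatible(p["breed"], breed) and compatible(p["gender"], gender)]


profiles = load_profiles(DOGS_CSV)
candidates = filter_candidates(profiles, breed="Labrador", gender="male")
print([p["id"] for p in candidates])
```

Only the profiles that survive this filter would then need to be compared with the query image, which is how the extra information reduces the search space.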

4. Methodology and Implementation

1. Animal Detection and Species Classification. In this layer, the animal is detected and its class is recognized as either cat or dog (Figure 2a). For training, the COCO, BCSPCA, and Stanford Dogs datasets were used. We used Convolutional Neural Networks (CNN) as the classification algorithm [21]. We trained YOLO (You Only Look Once) V4 [22,23] with transfer learning to detect animals and perform classification. YOLO V4 is a pre-trained one-stage object detection network consisting of 53 convolution layers. We applied transfer learning by starting from weights pre-trained on the COCO dataset and then training the model on our new data. The detected animal was cropped from the image using segmentation techniques and passed to the next layers for identification. This reduces the noise in the image, so that only the animal region of the photo is considered for identification. Animal detection is a crucial component that enhances identification efficiency, as it reduces the search space and minimizes the time required for identification. Furthermore, the classification step can be bypassed if the animal finder inputs the species into an API that connects user inputs to the framework. Our research is designed to be integrated into an app, which means that if the user specifies the species, the system will skip the classification step and proceed to step 2;

2. Face Detection and Landmarking. We used a pretrained MobileNetV2 model for face detection for both cats and dogs. The detected faces were cropped and passed to the face alignment layer (Figure 2b). The rest of the animal’s body was then used for body identification. If no face was detected, the image was directed to the body section, which applies only feature extraction. Face and body identification then run concurrently. The number of key points used for landmarking is five for cats (two eyes, two ears, and the nose) and six for dogs (two eyes, two ears, the nose, and the top of the head). Figure 3 illustrates these points. There is no single best number of landmark points for cats and dogs, but since cats’ ears mostly point upwards, a key point at the top of the head does not make a significant difference for them. For dogs, however, breeds vary widely, so one extra key point (key point number 1) is helpful in cases where the ears point downwards;

- 3.

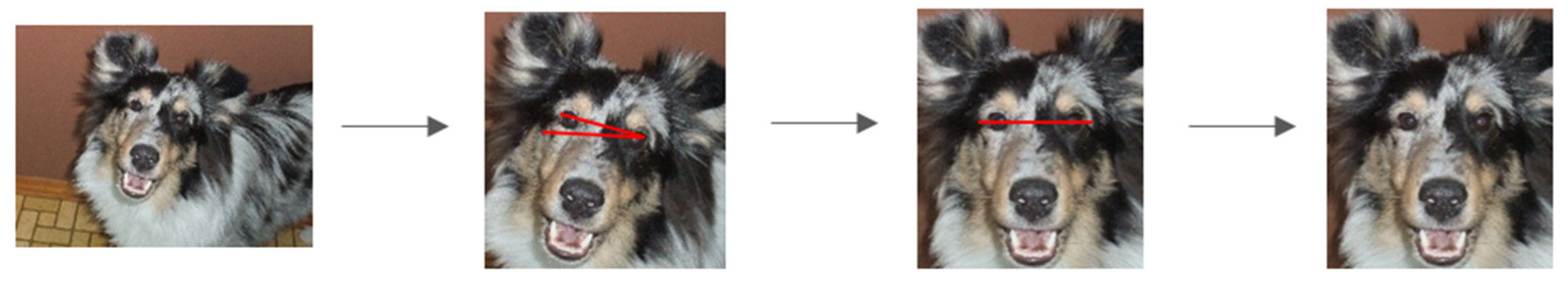

- Face alignment. Based on the labeled dataset, we elaborated on the above; we consider the eyes of the animals to be the baseline for face alignment (Figure 2c). We then calculate the angle (β) between the x-axis and the line connecting the animal’s eyes. Based on this angle, we rotate the image to align the eyes horizontally. Figure 4 illustrates an example of this procedure. We used homography transformation in the OpenCV (https://opencv.org, accessed on 3 May 2023) library. The homography function maps points in one image, in our case, eyes and other landmarks, to the corresponding points in the other image, which are the aligned images in our scenario. The homography function takes into account detected landmarks and applies the appropriate transformation. As a result, the homography function is performed based on the specific features of each animal. We compared homography with getAffineTransform (https://docs.opencv.org/3.4/d4/d61/tutorial_warp_affine.html, accessed on 3 May 2023), getRotationMatrix2D, and getPerspectiveTransformation (https://docs.opencv.org/3.4/da/d54/group__imgproc__transform.html#gafbbc470ce83812914a70abfb604f4326, accessed on 3 May 2023), which are transformation functions in OpenCV. We observed that the homography function maps and aligns images more accurately than the others;

4. Feature Extraction. After obtaining the animals’ faces and bodies, we transform these images into numerical features (Figure 1d);

5. Comparison. Face alignment provides a common baseline for comparing different animal faces. However, because an animal’s body can be captured in numerous poses and from various angles, it is cumbersome to define landmarks for each pose of each animal, and doing so would negatively affect the time and efficiency of the algorithm. The alternative is to encourage animal owners to upload more images of their animals to their profile, which increases the chance that one of them shows a pose similar to the query image of the lost pet. Thus, faces are compared after alignment, and bodies are compared without alignment (Figure 1e). We used cosine similarity, which is defined in Equation (1) as follows:

   $$\text{similarity}(x, y) = \cos(\theta) = \frac{x \cdot y}{\lVert x \rVert \, \lVert y \rVert} \qquad (1)$$

   In this equation, x and y are feature vectors. The dot product of the vectors is divided by the product of their lengths. The higher the similarity (equivalently, the smaller the cosine distance, 1 − similarity), the closer the two animals are, which means that the query image is more likely to match the image suggested by the model;

6. Recommendations. The top N results of the comparison are passed to this layer (Figure 1f). The top match is the one with the highest similarity value from the previous step. We search the database for the names of the animals with the top-ranked IDs and recommend them to the user who submitted the query image. In our case, tolerating some false positives is more useful than returning only a single, stricter result, since we recommend the top candidate matches to an external entity to confirm.
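The alignment angle, the cosine similarity of Equation (1), and the top-N recommendation step can be sketched in a few lines. This is a minimal sketch in plain Python, not the authors' code: the function names, the landmark coordinates, and the three-dimensional feature vectors are hypothetical, and real feature vectors would come from the feature extraction step.

```python
import math


def eye_alignment_angle(left_eye, right_eye):
    """Angle beta, in degrees, between the x-axis and the line joining the eyes.

    Points are (x, y) pixel coordinates. In image coordinates the y-axis
    points down, so the sign convention must match the rotation routine used.
    """
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    return math.degrees(math.atan2(dy, dx))


def cosine_similarity(x, y):
    """Dot product of x and y divided by the product of their lengths."""
    dot = sum(a * b for a, b in zip(x, y))
    norm_x = math.sqrt(sum(a * a for a in x))
    norm_y = math.sqrt(sum(b * b for b in y))
    if norm_x == 0.0 or norm_y == 0.0:
        return 0.0  # similarity is undefined for a zero vector; treat as no match
    return dot / (norm_x * norm_y)


def top_n_matches(query, registered, n=3):
    """Return the n registered animals most similar to the query, best first."""
    scored = [(animal_id, cosine_similarity(query, features))
              for animal_id, features in registered.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:n]


# Hypothetical registered feature vectors, one image per animal (worst case).
registered = {
    "d001": [0.90, 0.10, 0.30],
    "d002": [0.10, 0.80, 0.50],
    "d003": [0.85, 0.20, 0.25],
}
query = [0.88, 0.15, 0.30]

beta = eye_alignment_angle((40, 52), (80, 60))
matches = top_n_matches(query, registered, n=2)
print(round(beta, 2), [animal_id for animal_id, _ in matches])
```

Returning several ranked candidates rather than a single answer mirrors the recommendation layer, where the final confirmation is left to a person.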

5. Experimental Results

5.1. Species Classification

5.2. Identification and Recommendations

6. Conclusions

7. Limitations and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Weiss, E.; Slater, M.; Lord, L. Frequency of Lost Dogs and Cats in the United States and the Methods Used to Locate Them. Animals 2012, 2, 301–315.

2. Zafar, S. The Pros and Cons to Dog GPS Trackers-Blogging Hub. 12 December 2021. Available online: https://www.cleantechloops.com/pros-and-cons-dog-gps-trackers/ (accessed on 19 February 2023).

3. Hayasaki, M.; Hattori, C. Experimental induction of contact allergy in dogs by means of certain sensitization procedures. Veter. Dermatol. 2000, 11, 227–233.

4. Albrecht, K. Microchip-induced tumors in laboratory rodents and dogs: A review of the literature 1990–2006. In Proceedings of the 2010 IEEE International Symposium on Technology and Society, Wollongong, Australia, 7–9 June 2010; pp. 337–349.

5. Kumar, S.; Singh, S.K. Biometric Recognition for Pet Animal. J. Softw. Eng. Appl. 2014, 7, 470–482.

6. Dryden, I.L.; Mardia, K.V. Statistical Shape Analysis: With Applications in R, 2nd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2016.

7. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

8. Cao, Z.; Principe, J.C.; Ouyang, B.; Dalgleish, F.; Vuorenkoski, A. Marine animal classification using combined CNN and hand-designed image features. In Proceedings of the OCEANS 2015-MTS/IEEE Washington, Washington, DC, USA, 19–22 October 2015; pp. 1–6.

9. Komarasamy, G.; Manish, M.; Dheemanth, V.; Dhar, D.; Bhattacharjee, M. Automation of Animal Classification Using Deep Learning. In Smart Innovation, Systems and Technologies, Proceedings of the International Conference on Intelligent Emerging Methods of Artificial Intelligence & Cloud Computing; García Márquez, F.P., Ed.; Springer International Publishing: Cham, Switzerland, 2022; pp. 419–427.

10. Norouzzadeh, M.S.; Nguyen, A.; Kosmala, M.; Swanson, A.; Palmer, M.S.; Packer, C.; Clune, J. Automatically identifying, counting, and describing wild animals in camera-trap images with deep learning. Proc. Natl. Acad. Sci. USA 2018, 115, E5716–E5725.

11. Qiao, Y.; Su, D.; Kong, H.; Sukkarieh, S.; Lomax, S.; Clark, C. Individual Cattle Identification Using a Deep Learning Based Framework. IFAC-PapersOnLine 2019, 52, 318–323.

12. Barbedo, J.G.A.; Koenigkan, L.V.; Santos, T.T.; Santos, P.M. A Study on the Detection of Cattle in UAV Images Using Deep Learning. Sensors 2019, 19, 5436.

13. Parkhi, O.M.; Vedaldi, A.; Zisserman, A.; Jawahar, C.V. Cats and dogs. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3498–3505.

14. Lai, K.; Tu, X.; Yanushkevich, S. Dog Identification using Soft Biometrics and Neural Networks. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019; pp. 1–8.

15. Moreira, T.P.; Perez, M.; Werneck, R.; Valle, E. Where is my puppy? Retrieving lost dogs by facial features. Multimed. Tools Appl. 2017, 76, 15325–15340.

16. Turk, M.; Pentland, A. Eigenfaces for Recognition. J. Cogn. Neurosci. 1991, 3, 71–86.

17. Sirovich, L.; Kirby, M. Low-dimensional procedure for the characterization of human faces. J. Opt. Soc. Am. A JOSAA 1987, 4, 519–524.

18. Belhumeur, P.; Hespanha, J.; Kriegman, D. Eigenfaces vs. Fisherfaces: Recognition using class specific linear projection. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 711–720.

19. Xu, Y.; Zhang, D.; Yang, J.; Yang, J.-Y. A Two-Phase Test Sample Sparse Representation Method for Use with Face Recognition. IEEE Trans. Circuits Syst. Video Technol. 2011, 21, 1255–1262.

20. Liu, J.; Kanazawa, A.; Jacobs, D.; Belhumeur, P. Dog Breed Classification Using Part Localization. In Lecture Notes in Computer Science, Proceedings of the Computer Vision–ECCV 2012, Florence, Italy, 7–13 October 2012; Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 172–185.

21. ImageNet Classification with Deep Convolutional Neural Networks. Communications of the ACM. Available online: https://dl.acm.org/doi/abs/10.1145/3065386 (accessed on 12 April 2023).

22. Bochkovskiy, A.; Wang, C.; Liao, H. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

23. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

24. Bae, H.B.; Pak, D.; Lee, S. Dog Nose-Print Identification Using Deep Neural Networks. IEEE Access 2021, 9, 49141–49153.

25. Yeo, C.Y.; Al-Haddad, S.; Ng, C.K. Animal voice recognition for identification (ID) detection system. In Proceedings of the 2011 IEEE 7th International Colloquium on Signal Processing and Its Applications, Penang, Malaysia, 4–6 March 2011; pp. 198–201.

26. Hinton, G.E.; Krizhevsky, A.; Wang, S.D. Transforming Auto-Encoders. In Lecture Notes in Computer Science, Proceedings of the Artificial Neural Networks and Machine Learning–ICANN 2011, Espoo, Finland, 14–17 June 2011; Honkela, T., Duch, W., Girolami, M., Kaski, S., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 44–51.

| Predicted | Dog | Cat |
|---|---|---|
| Actual | | |
| Dog | 2679 | 58 |
| Cat | 40 | 2634 |

| Metric | Result |
|---|---|
| Training accuracy on COCO dataset | 85.92% |
| Testing accuracy on Kaggle dataset | 98.18% |
| Testing precision on Kaggle dataset | 97.88% |
| Testing recall on Kaggle dataset | 98.52% |
| F1 score on Kaggle dataset | 98.19% |
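The relationship between the confusion matrix counts and the reported classification metrics can be checked with a short calculation. The sketch below rests on two assumptions that the extracted tables do not settle: dog is treated as the positive class, and the 58 off-diagonal cases are counted as false positives and the 40 as false negatives. Under that assignment the results agree with the reported values to within rounding. Exchanging the two off-diagonal counts exchanges precision and recall and leaves accuracy and F1 unchanged. The function name `binary_metrics` is hypothetical.

```python
def binary_metrics(tp, fp, fn, tn):
    """Standard binary classification metrics from confusion matrix counts."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
    }


# Assumed assignment: dog is the positive class, 58 are false positives,
# and 40 are false negatives (see the caveat in the text above).
metrics = binary_metrics(tp=2679, fp=58, fn=40, tn=2634)

for name, value in metrics.items():
    print(f"{name}: {value:.2%}")
```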

| Nth Recommendation | Face Identification Accuracy | Body Identification Accuracy | Face and Body Identification Accuracy |
|---|---|---|---|
| 1 | 80% | 81% | 86.5% |
| 2 | 14% | 15% | 10.5% |
| 3 | 3% | 2% | 2% |
| 4 to 10 | 3% | 2% | 1% |
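Since several candidates are recommended at once, it can help to read this table cumulatively. The sketch below assumes that each row gives the share of queries whose correct match appeared at that recommendation rank, an interpretation consistent with each column summing to 100%. The dictionary keys and variable names are hypothetical.

```python
from itertools import accumulate

# Share of queries (in percent) whose correct match appeared at each rank,
# copied from the table: ranks 1, 2, 3, and 4 to 10.
rank_share = {
    "face": [80.0, 14.0, 3.0, 3.0],
    "body": [81.0, 15.0, 2.0, 2.0],
    "face_and_body": [86.5, 10.5, 2.0, 1.0],
}
cutoffs = ["top 1", "top 2", "top 3", "top 10"]

# Running totals give the chance that the correct animal is among the top N.
cumulative = {method: list(accumulate(shares))
              for method, shares in rank_share.items()}

for method, totals in cumulative.items():
    summary = ", ".join(f"{label}: {total:g}%" for label, total in zip(cutoffs, totals))
    print(f"{method} -> {summary}")
```

Under this reading, the correct animal is among the first three recommendations for 99% of queries when face and body are combined.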

| Study | Identification Accuracy Using Face | Overall Accuracy (Soft Biometrics) |
|---|---|---|
| Method by Lai et al. [14] | 78.09% | 80% |
| Our method | 84.94% | 92% |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Azizi, E.; Zaman, L. Deep Learning Pet Identification Using Face and Body. Information 2023, 14, 278. https://doi.org/10.3390/info14050278
