QYOLO: Contextual Query-Assisted Object Detection in High-Resolution Images

Abstract

1. Introduction

- In the feature-fusion neck, features from different layers are extracted and fused using the rows and columns of each layer's feature map as queries. This strengthens feature flow and cross-layer interaction within the network.

- Because many inspection targets are located on tall structures, the attention mechanism is optimized for efficient long-range feature modeling. An enhanced inter-class cross-attention further strengthens the correlations among different object categories, improving overall recognition capability.
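The row-and-column query idea above can be illustrated with a small NumPy sketch. This is a hypothetical interpretation, not the authors' implementation: each row and each column of one neck level is average-pooled into a query vector, the queries attend (plain scaled dot-product attention, with no learned projections) over all positions of another level, and the resulting row and column contexts are broadcast back and added residually. The function names `row_column_query_fusion` and `cross_attention` are illustrative only.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(q: np.ndarray, kv: np.ndarray) -> np.ndarray:
    """Scaled dot-product attention; q: (Nq, C), kv: (Nk, C) -> (Nq, C).

    Keys and values are the same tokens (no learned projections in this sketch).
    """
    scores = q @ kv.T / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ kv


def row_column_query_fusion(feat_a: np.ndarray, feat_b: np.ndarray) -> np.ndarray:
    """Hypothetical row/column-query fusion of two neck levels.

    feat_a: (C, H, W) features of the level being refined
    feat_b: (C, H2, W2) features of another level (any spatial size)
    """
    c, h, w = feat_a.shape
    tokens_b = feat_b.reshape(c, -1).T          # (H2*W2, C) key/value tokens
    row_q = feat_a.mean(axis=2).T               # (H, C): one query per row
    col_q = feat_a.mean(axis=1).T               # (W, C): one query per column
    row_ctx = cross_attention(row_q, tokens_b)  # (H, C) context for each row
    col_ctx = cross_attention(col_q, tokens_b)  # (W, C) context for each column
    # Broadcast row context along the width and column context along the height.
    return feat_a + row_ctx.T[:, :, None] + col_ctx.T[:, None, :]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    p3 = rng.standard_normal((16, 40, 40))  # hypothetical high-resolution level
    p5 = rng.standard_normal((16, 10, 10))  # hypothetical low-resolution level
    print(row_column_query_fusion(p3, p5).shape)  # (16, 40, 40)
```

Pooling to H + W queries keeps the attention cost proportional to (H + W)·H2·W2 rather than H·W·H2·W2 for full pixel-wise attention, which is one plausible way to obtain the efficient long-range modeling the bullets describe.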

2. Background Materials

2.1. Object Detector

2.2. Query-Based Detector

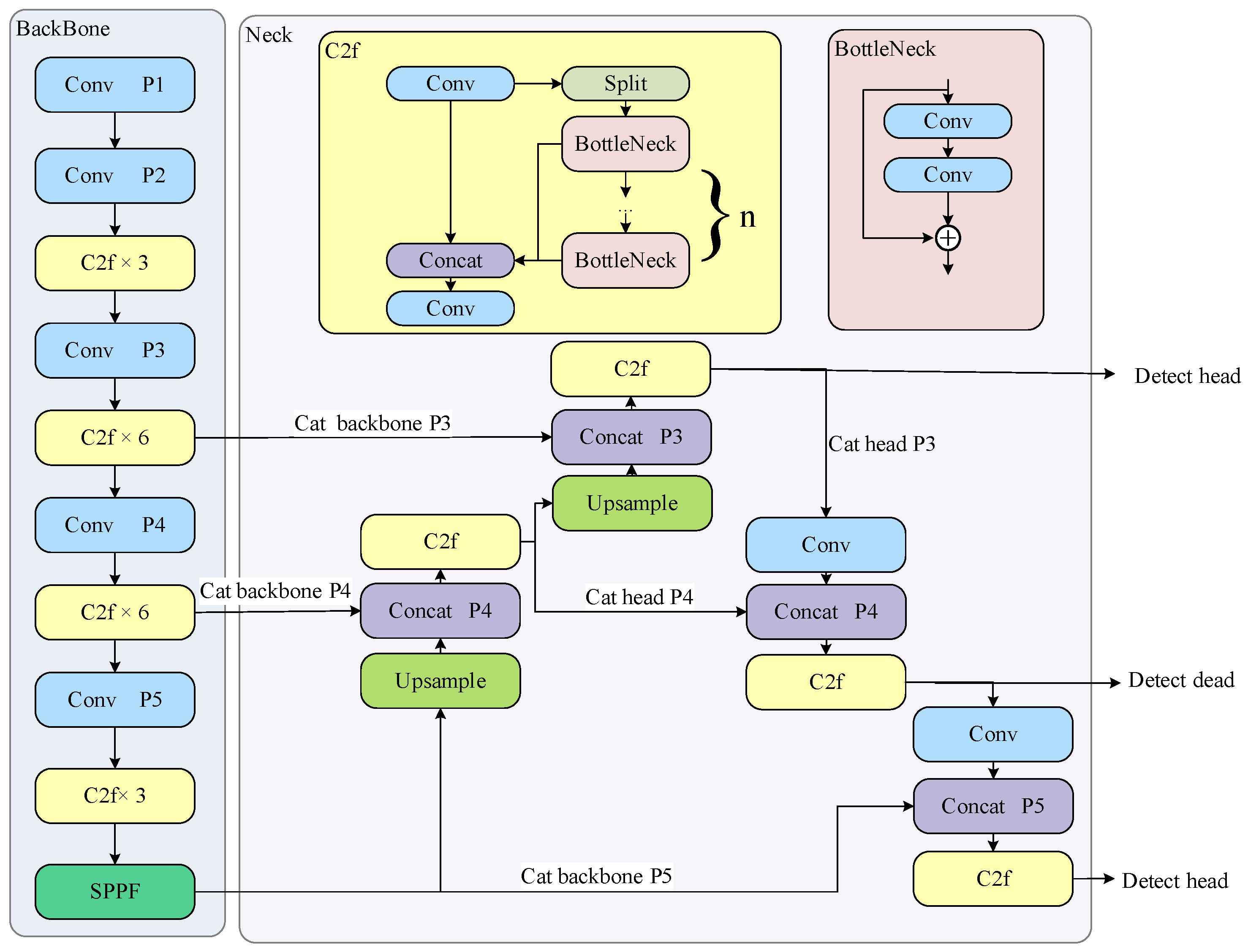

3. QYOLOv8 Algorithm

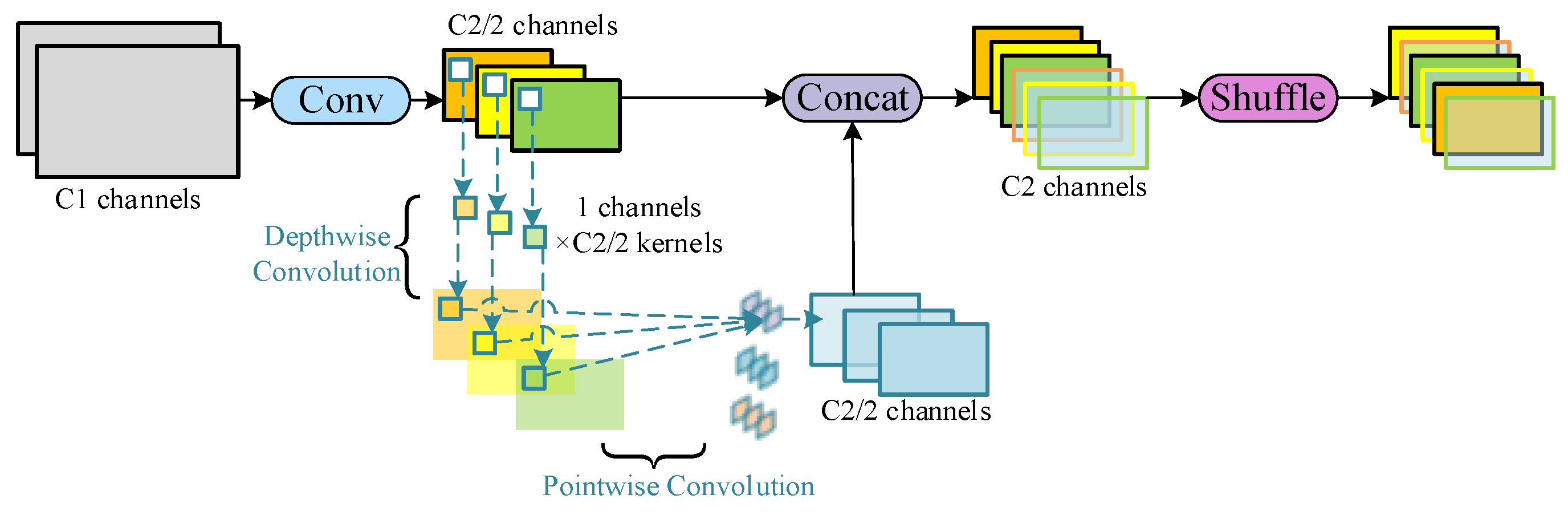

3.1. GSConv

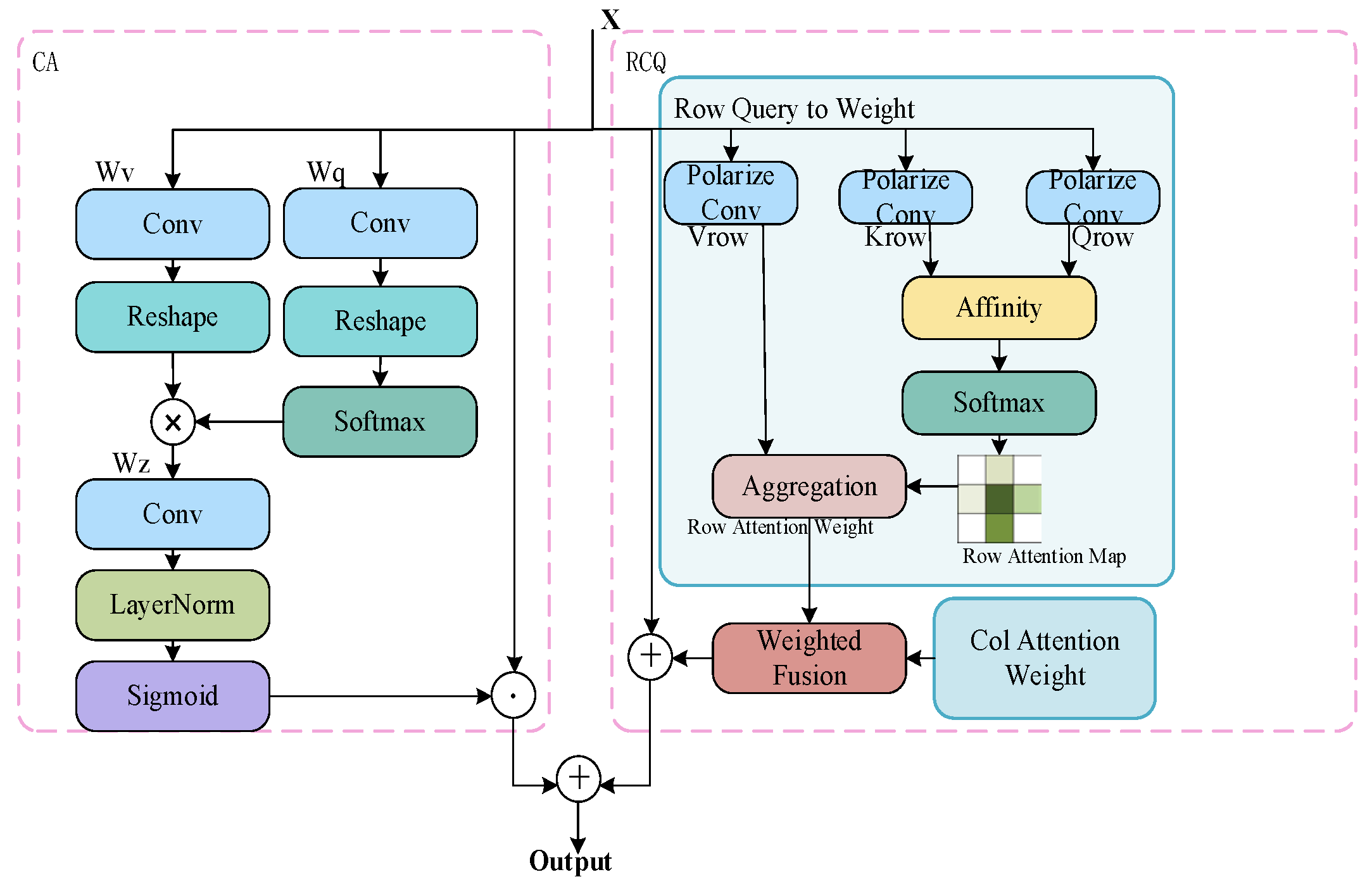

3.2. Introducing Query Methods

3.2.1. BifNet Query

3.2.2. Row–Column Query

4. Experiment

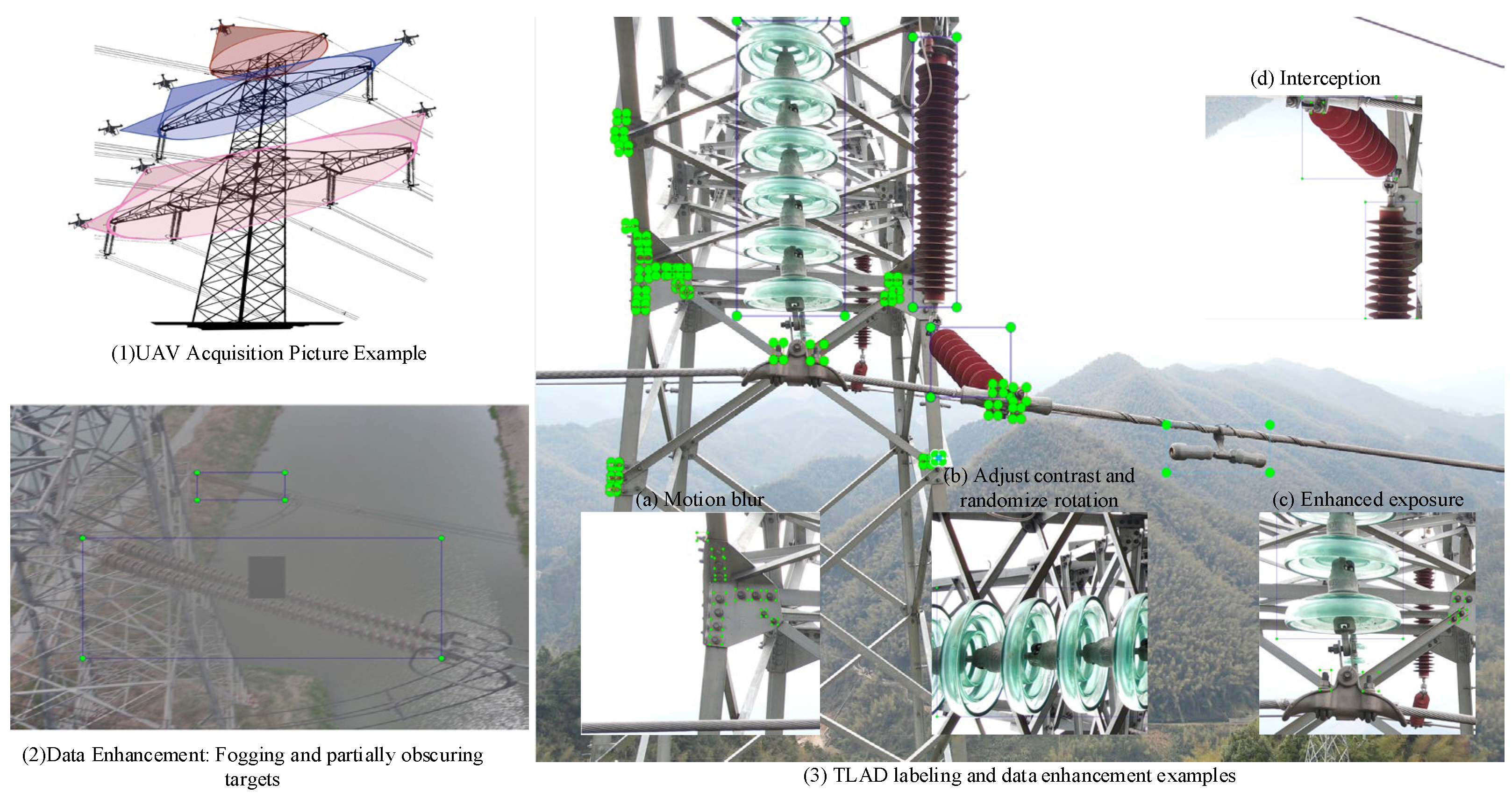

4.1. Datasets

4.2. Training Parameters

4.3. Evaluation Metrics

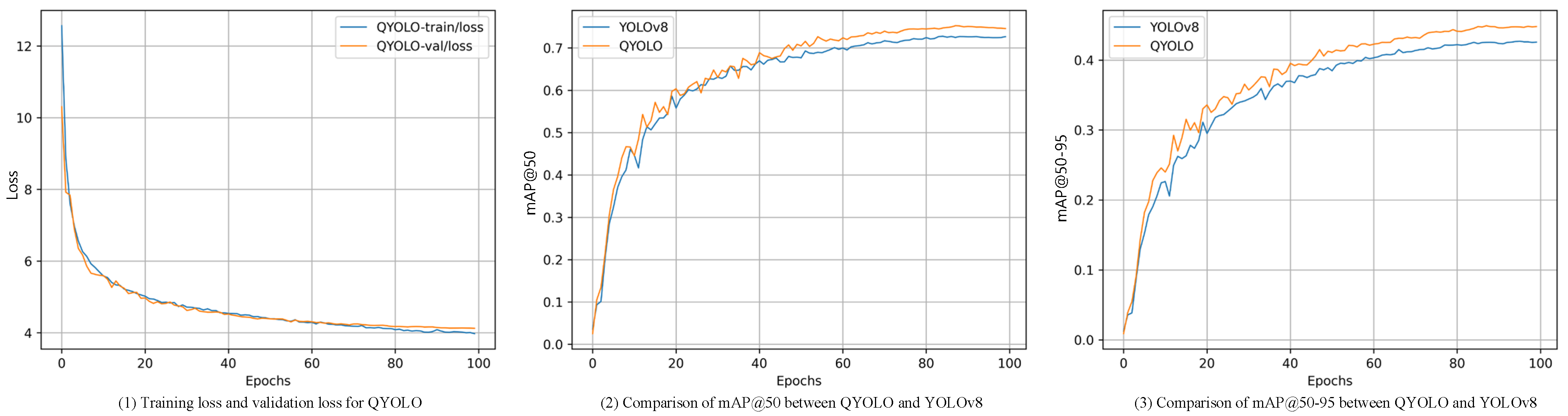

4.4. Results and Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Configuration | Details |
|---|---|
| Software configuration | System: Windows 10 |
| | Framework: PyTorch 1.12.0 |
| | Versions: CUDA 11.7, cuDNN 8.5.0, Python 3.8 |
| Hardware configuration | CPU: Intel Core i7-13700K |
| | GPU: GeForce RTX 4090 |
| | Graphics memory: 24 GB |
| | UAV: DJI Mavic 2 Enterprise Advanced (aperture f/2.8, 24 mm equivalent focal length, 32× digital zoom, single shot) |
| Training hyperparameters | Optimizer: SGD |
| | Learning rate: 0.01 (first 90 epochs), 0.001 (final 10 epochs) |
| | Weight decay: 0.0005 |
| | Momentum: 0.937 |
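
The optimizer settings in the table can be expressed as a minimal pure-Python sketch of a step learning-rate schedule and one SGD-with-momentum update. It assumes 100 training epochs in total (90 + 10, as listed) and PyTorch-style SGD without dampening or Nesterov momentum; the authors' full training loop (for example, any warm-up) is not described here. In PyTorch the same configuration would roughly correspond to `torch.optim.SGD(params, lr=0.01, momentum=0.937, weight_decay=0.0005)` combined with `torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[90], gamma=0.1)`.

```python
# Training schedule and optimizer settings from the configuration table.
BASE_LR = 0.01        # first 90 epochs
FINAL_LR = 0.001      # final 10 epochs
DROP_EPOCH = 90       # assumption: 0-based epoch index at which the rate drops
MOMENTUM = 0.937
WEIGHT_DECAY = 0.0005


def lr_at_epoch(epoch: int) -> float:
    """Step schedule: 0.01 for epochs 0-89, 0.001 from epoch 90 onward."""
    return BASE_LR if epoch < DROP_EPOCH else FINAL_LR


def sgd_step(weights, grads, buffers, lr):
    """One SGD update with momentum and L2 weight decay.

    Mirrors PyTorch's default SGD (no dampening, no Nesterov):
        d = g + weight_decay * w
        buf = momentum * buf + d
        w = w - lr * buf
    """
    new_weights, new_buffers = [], []
    for w, g, buf in zip(weights, grads, buffers):
        d = g + WEIGHT_DECAY * w      # fold weight decay into the gradient
        buf = MOMENTUM * buf + d      # accumulate momentum
        new_buffers.append(buf)
        new_weights.append(w - lr * buf)
    return new_weights, new_buffers


if __name__ == "__main__":
    for epoch in (0, 89, 90, 99):
        print(epoch, lr_at_epoch(epoch))
    w, buf = sgd_step([1.0], [0.5], [0.0], lr_at_epoch(0))
    print(w, buf)
```

Starting the momentum buffer at zero gives the same first step as PyTorch, which initializes the buffer with the first (decayed) gradient.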

| Model | Precision | Recall | F1-Score | mAP50 | mAP50–95 |
|---|---|---|---|---|---|
| YOLOv8 | 77.5 | 65.9 | 71.2 | 73.6 | 43.1 |
| YOLOv8-BifNet | 77.9 | 66.7 | 71.9 | 73.5 | 43.9 |
| YOLOv8-BifNet-RCQ | 78.9 | 66.8 | 72.4 | 73.4 | 43.3 |
| YOLOv8-GSConv-GSCSP | 75.6 | 66.9 | 71.0 | 74.6 | 44.0 |
| QYOLO | 79.3 | 68.5 | 73.5 | 75.2 | 44.9 |
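
The F1-Score column is the harmonic mean of precision and recall, F1 = 2PR / (P + R). The short check below recomputes it for two rows of the table above. Recomputing from the rounded precision and recall values can differ in the last digit for some rows (for example, YOLOv8-BifNet-RCQ gives 72.3 rather than 72.4), presumably because the published F1 was computed from unrounded values.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both in the same units, e.g. %)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision/recall pairs copied from the table above (in %).
rows = {
    "YOLOv8": (77.5, 65.9),
    "QYOLO": (79.3, 68.5),
}
for name, (p, r) in rows.items():
    print(f"{name}: F1 = {f1_score(p, r):.1f}")  # 71.2 and 73.5
```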

| Model | Precision-S | Recall-S | F1-Score-S | AP50-S | AP50–95-S |
|---|---|---|---|---|---|
| YOLOv8 | 70.0 | 46.6 | 56.0 | 58.3 | 30.9 |
| YOLOv8-BifNet | 70.1 | 47.6 | 56.7 | 58.7 | 31.0 |
| YOLOv8-BifNet-RCQ | 70.6 | 48.6 | 57.6 | 59.4 | 31.2 |
| YOLOv8-GSConv-GSCSP | 71.8 | 45.4 | 55.6 | 62.3 | 33.9 |
| QYOLO | 71.2 | 51.1 | 59.5 | 63.8 | 35.2 |

| Model | Param. | mAP50 | mAP50–95 | mAP50-S | mAP50–95-S | Inference Time |
|---|---|---|---|---|---|---|
| YOLOv5 | 1.76M | 71.5 | 35.4 | 46.1 | 17.6 | 5.8 ms |
| YOLOv8 | 3.01M | 73.6 | 43.1 | 58.3 | 30.9 | 3.0 ms |
| YOLOv8-C3tr | 2.72M | 72.5 | 41.4 | 59.4 | 31.4 | 2.6 ms |
| YOLOv8-CANet | 2.81M | 73.1 | 43.1 | 59.0 | 31.0 | 4.0 ms |
| YOLOv8-DWConv | 3.12M | 74.5 | 43.6 | 62.3 | 34.2 | 3.2 ms |
| QYOLO | 2.92M | 75.2 | 44.9 | 63.8 | 35.2 | 3.9 ms |
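
The mAP50 and mAP50–95 columns follow the usual convention: AP is computed with a detection counted as correct when its IoU with an unmatched ground-truth box reaches the threshold, mAP50 uses a threshold of 0.5, and mAP50–95 averages AP over the thresholds 0.50, 0.55, ..., 0.95. The sketch below illustrates this for a single class in a single image, with greedy score-ordered matching and all-point interpolated AP. It is a simplified assumption for illustration only: real evaluators (such as the COCO API or the Ultralytics validator) accumulate detections over the whole dataset, average over classes, and use their own interpolation (COCO samples 101 recall points).

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds: Sequence[Tuple[float, Box]], gts: Sequence[Box], thr: float) -> float:
    """Single-class, single-image AP with all-point interpolation."""
    if not gts:
        return 0.0
    matched = [False] * len(gts)
    tps = []
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        # Greedily match to the best-overlapping ground truth not yet matched.
        best, best_j = 0.0, -1
        for j, gt in enumerate(gts):
            o = iou(box, gt)
            if not matched[j] and o > best:
                best, best_j = o, j
        if best_j >= 0 and best >= thr:
            matched[best_j] = True
            tps.append(1)
        else:
            tps.append(0)
    # Cumulative precision and recall down the ranked list.
    recalls, precisions, tp = [], [], 0
    for k, t in enumerate(tps, start=1):
        tp += t
        recalls.append(tp / len(gts))
        precisions.append(tp / k)
    # Precision envelope (non-increasing from right to left), then area under P-R.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_r = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


def map50_95(preds, gts) -> float:
    """Mean AP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [0.5 + 0.05 * i for i in range(10)]
    return sum(average_precision(preds, gts, t) for t in thresholds) / len(thresholds)
```

Because mAP50–95 also rewards tight localization at high IoU thresholds, it is typically much lower than mAP50, as in every row of the table above.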

| Model | Precision | Recall | F1-Score | mAP50 | mAP50–95 | Inference Time |
|---|---|---|---|---|---|---|
| YOLOv8 | 96.1 | 90.2 | 93.06 | 94.6 | 78.8 | 1.7 ms |
| QYOLO | 97.1 | 92.2 | 94.59 | 94.8 | 79.4 | 2.1 ms |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gao, M.; Wang, W.; Mao, J.; Xiong, J.; Wang, Z.; Wu, B. QYOLO: Contextual Query-Assisted Object Detection in High-Resolution Images. Information 2024, 15, 563. https://doi.org/10.3390/info15090563

Gao M, Wang W, Mao J, Xiong J, Wang Z, Wu B. QYOLO: Contextual Query-Assisted Object Detection in High-Resolution Images. Information. 2024; 15(9):563. https://doi.org/10.3390/info15090563

Chicago/Turabian Style: Gao, Mingyang, Wenrui Wang, Jia Mao, Jun Xiong, Zhenming Wang, and Bo Wu. 2024. "QYOLO: Contextual Query-Assisted Object Detection in High-Resolution Images." Information 15, no. 9: 563. https://doi.org/10.3390/info15090563

APA Style: Gao, M., Wang, W., Mao, J., Xiong, J., Wang, Z., & Wu, B. (2024). QYOLO: Contextual Query-Assisted Object Detection in High-Resolution Images. Information, 15(9), 563. https://doi.org/10.3390/info15090563