Exploring Privacy Leakage in Platform-Based Enterprises: A Tripartite Evolutionary Game Analysis and Multilateral Co-Regulation Framework

Abstract

1. Introduction

2. Literature Review

2.1. Privacy Leakage-Related Research

2.2. Privacy Regulation-Related Research

3. Model Assumptions and Construction

4. Evolutionary Game Model Analysis

4.1. Model Building and Solving

4.2. Analysis of the System Equilibrium Points in the Tripartite Evolutionary Game

4.3. Combination Strategy

4.3.1. High-Loss Scenario

4.3.2. Low-Loss Scenario

4.3.3. Moderate Benefit Scenario

4.3.4. High-Revenue Scenario

5. Simulation

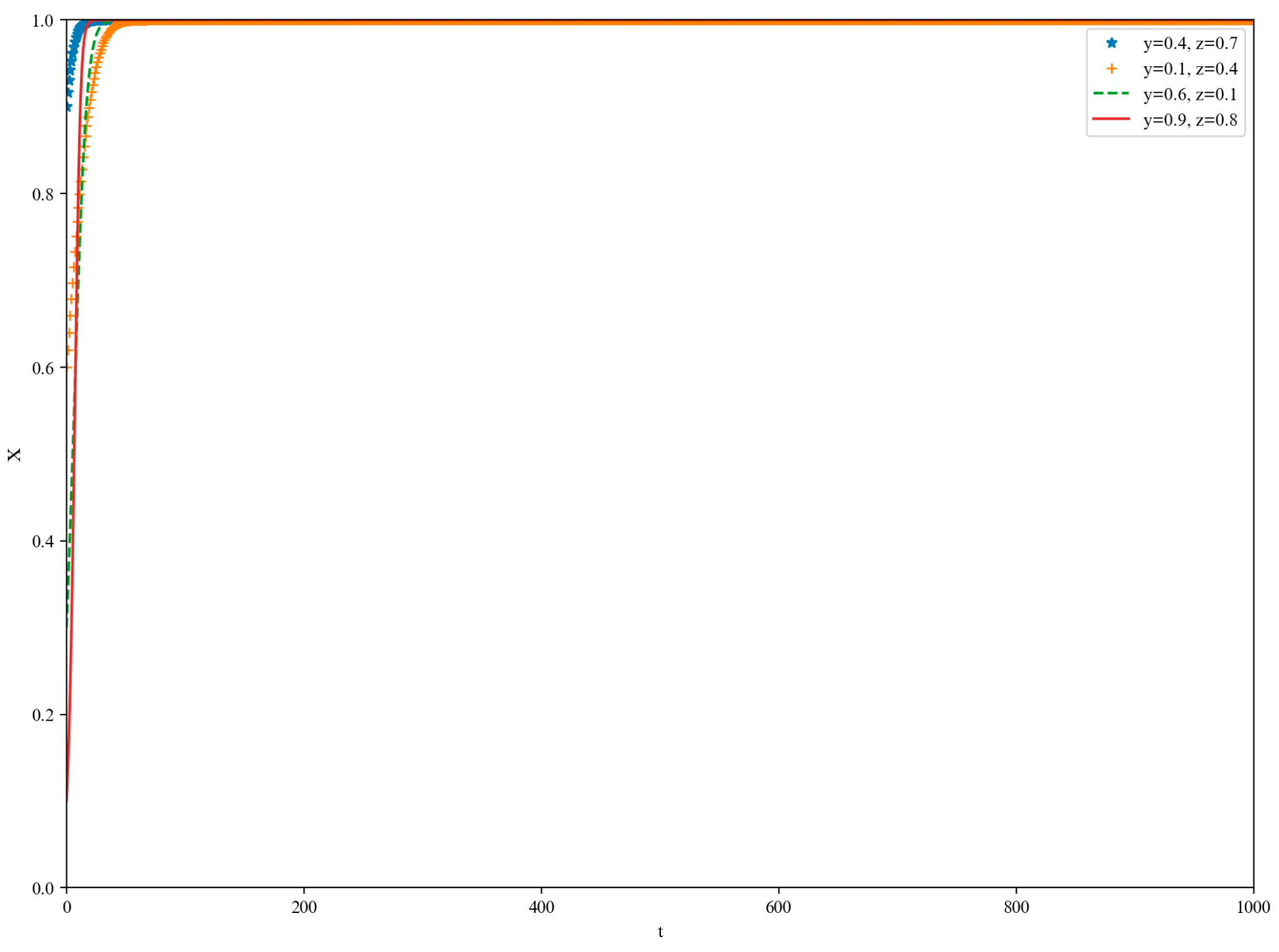

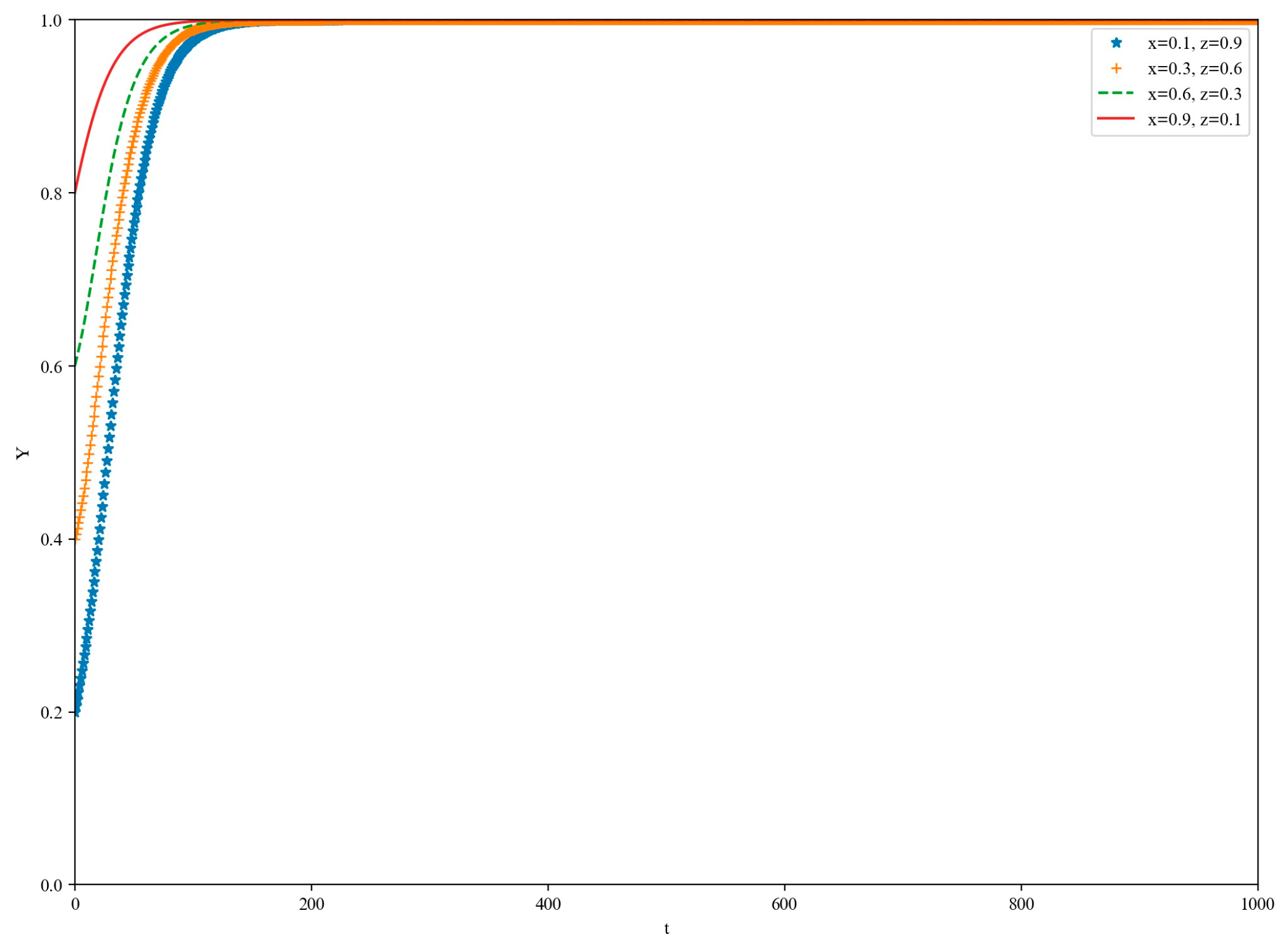

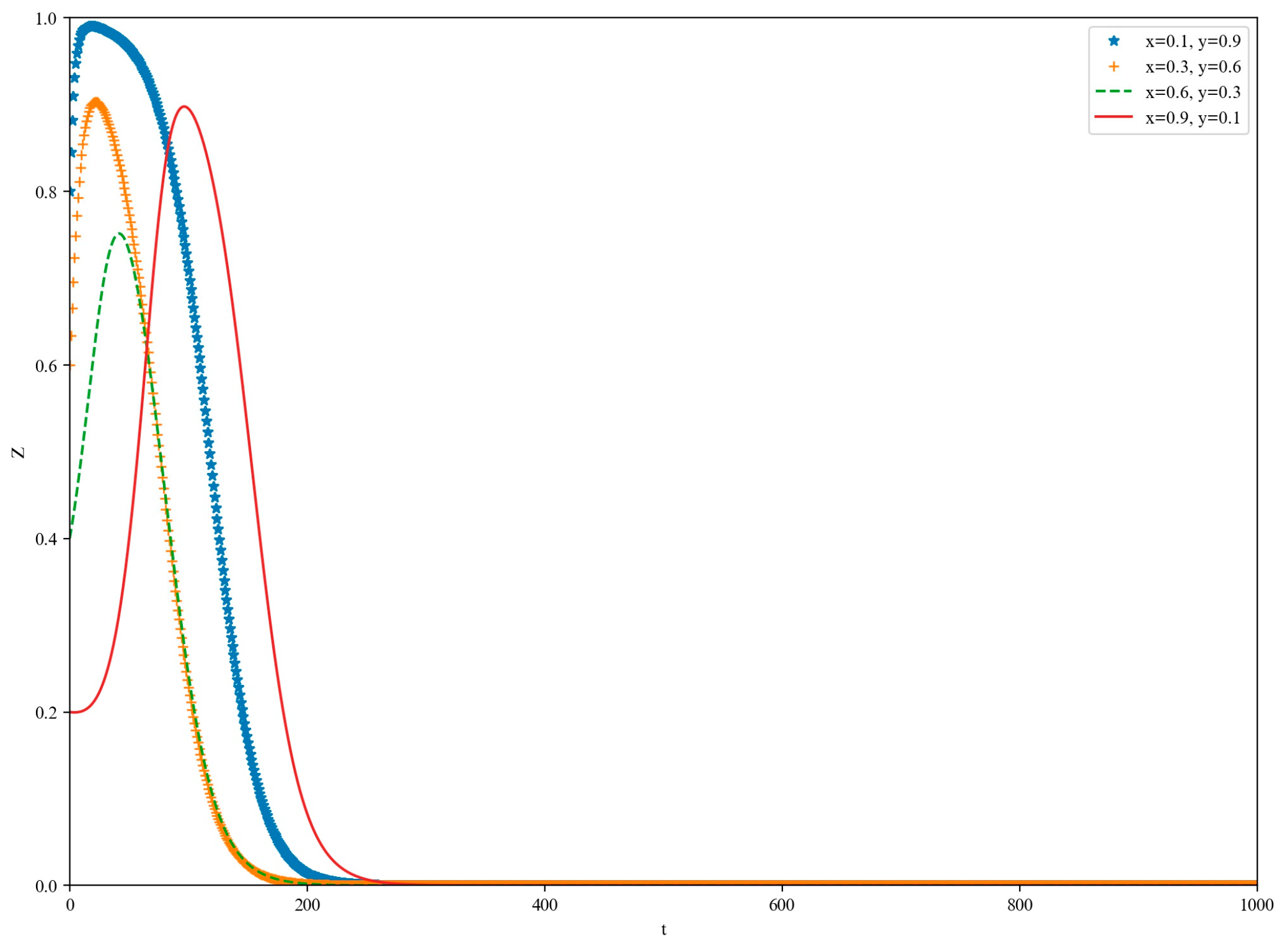

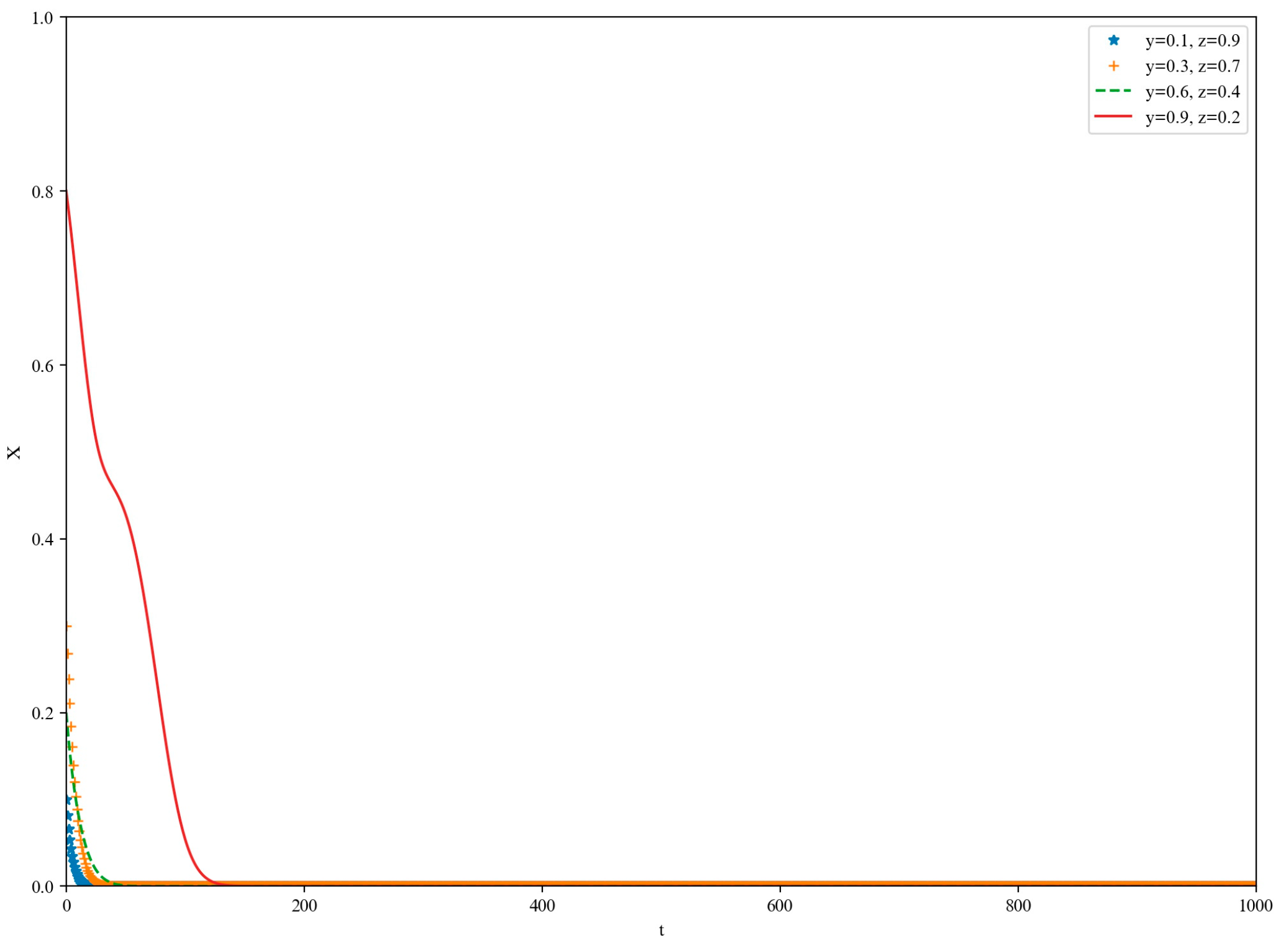

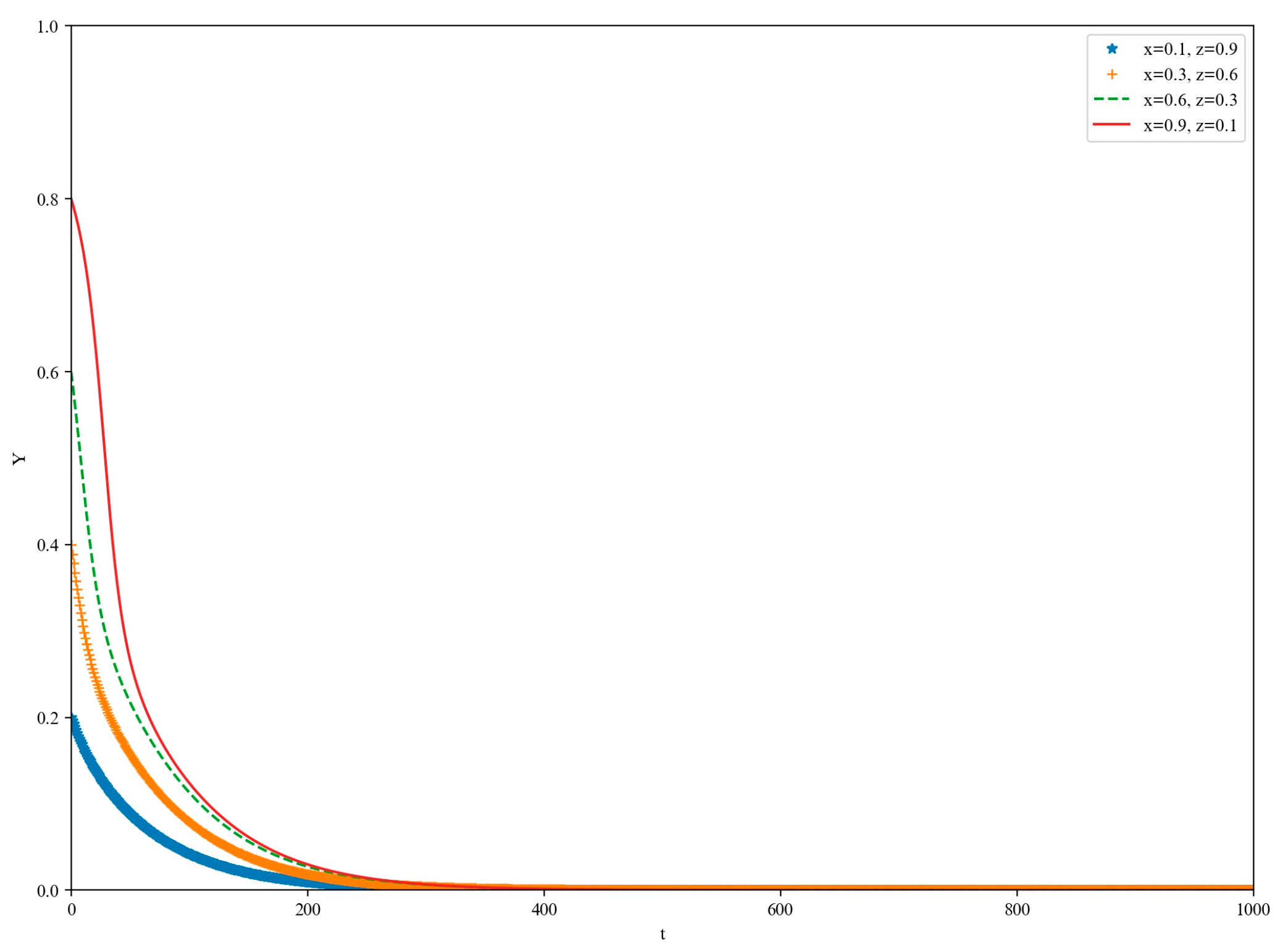

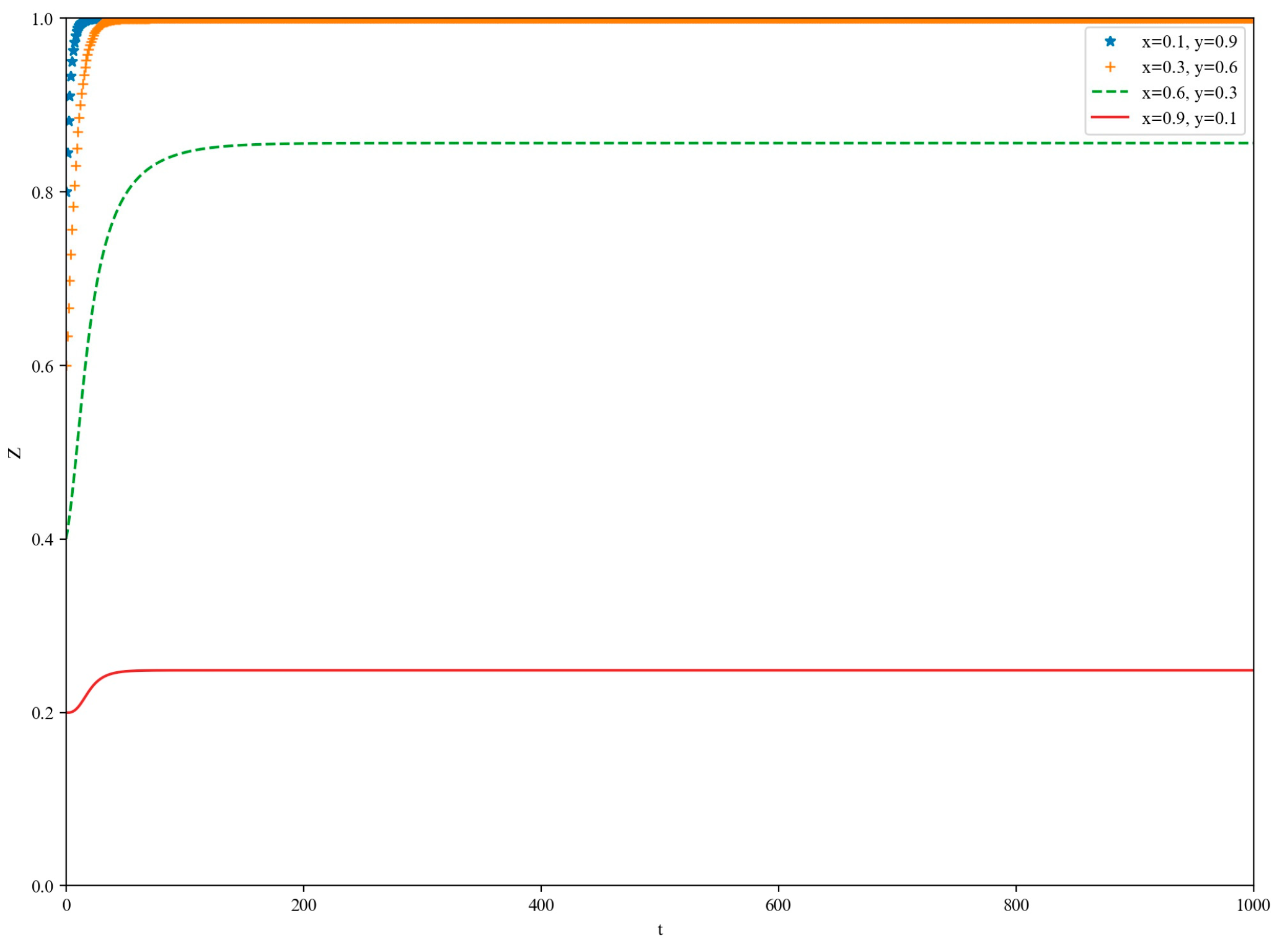

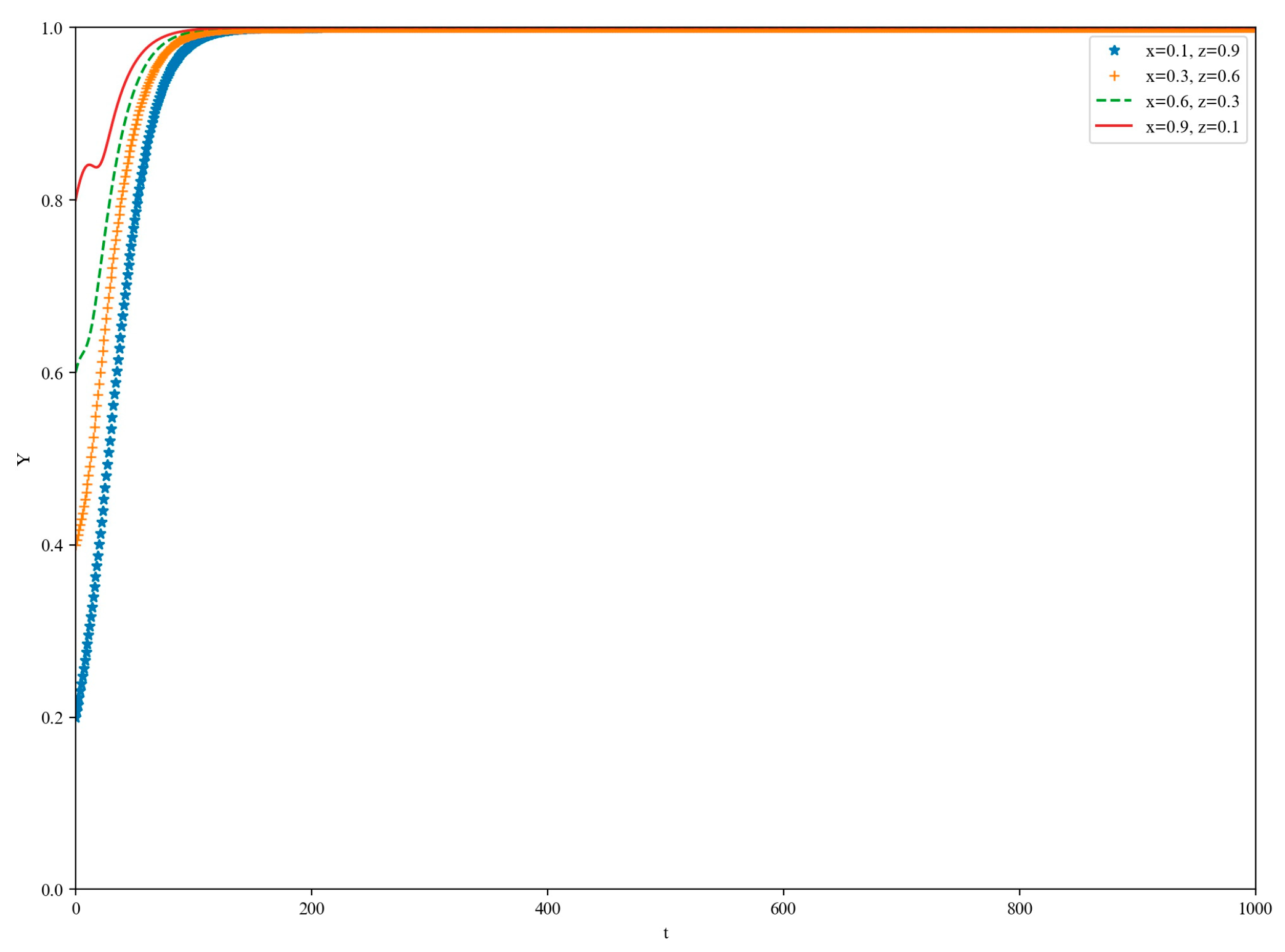

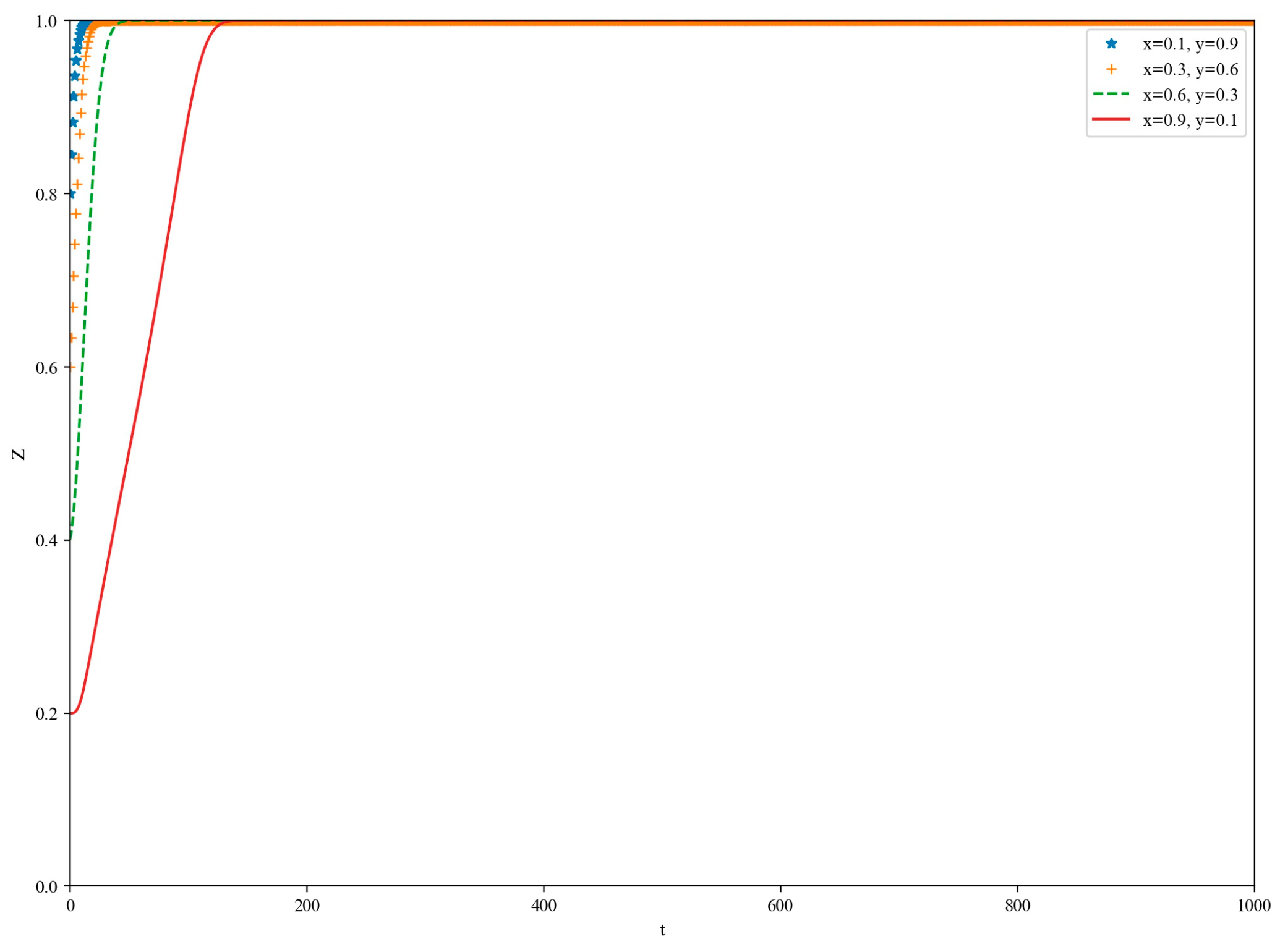

5.1. High-Loss Scenario

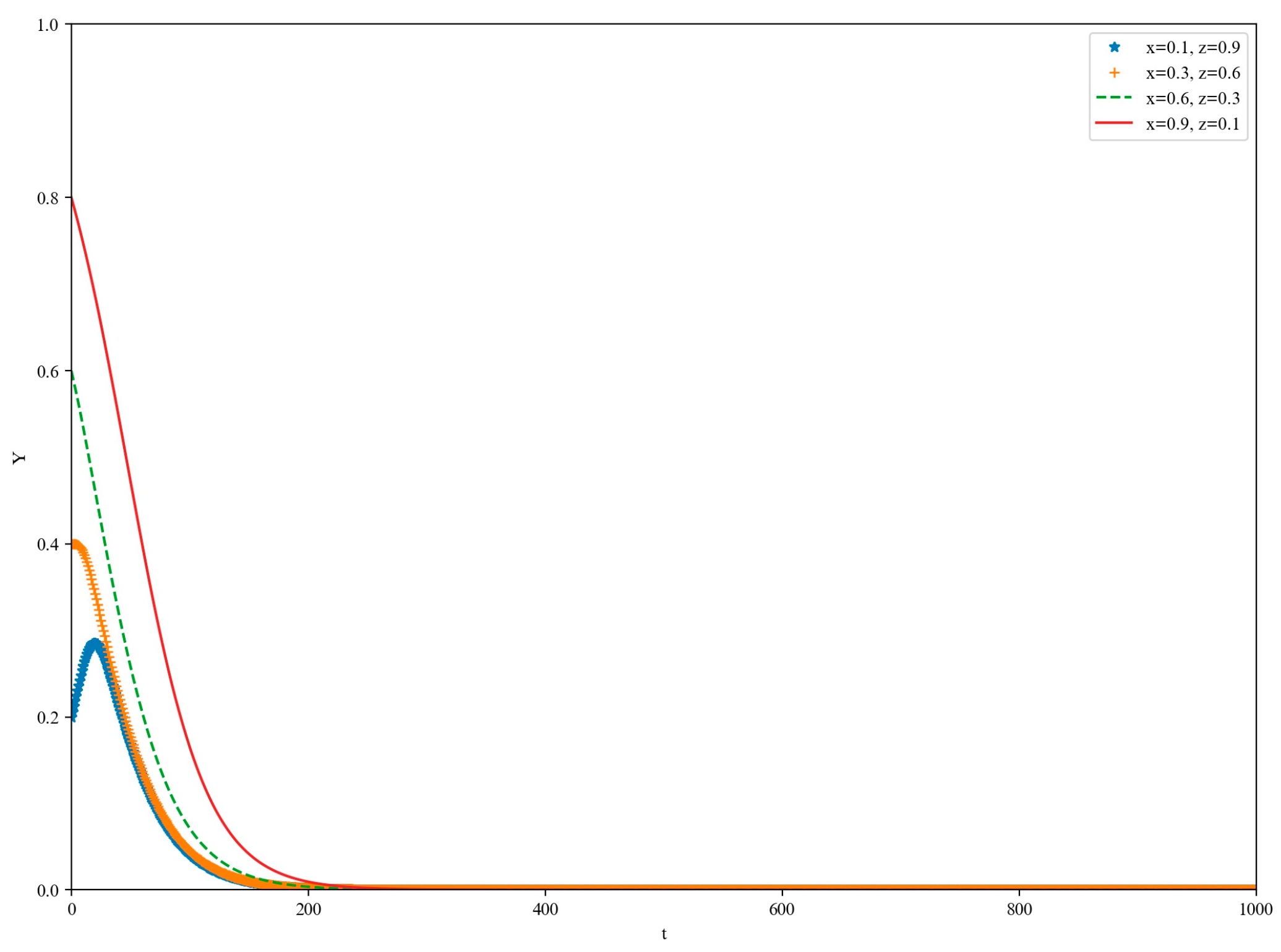

5.2. Low-Yield Scenario

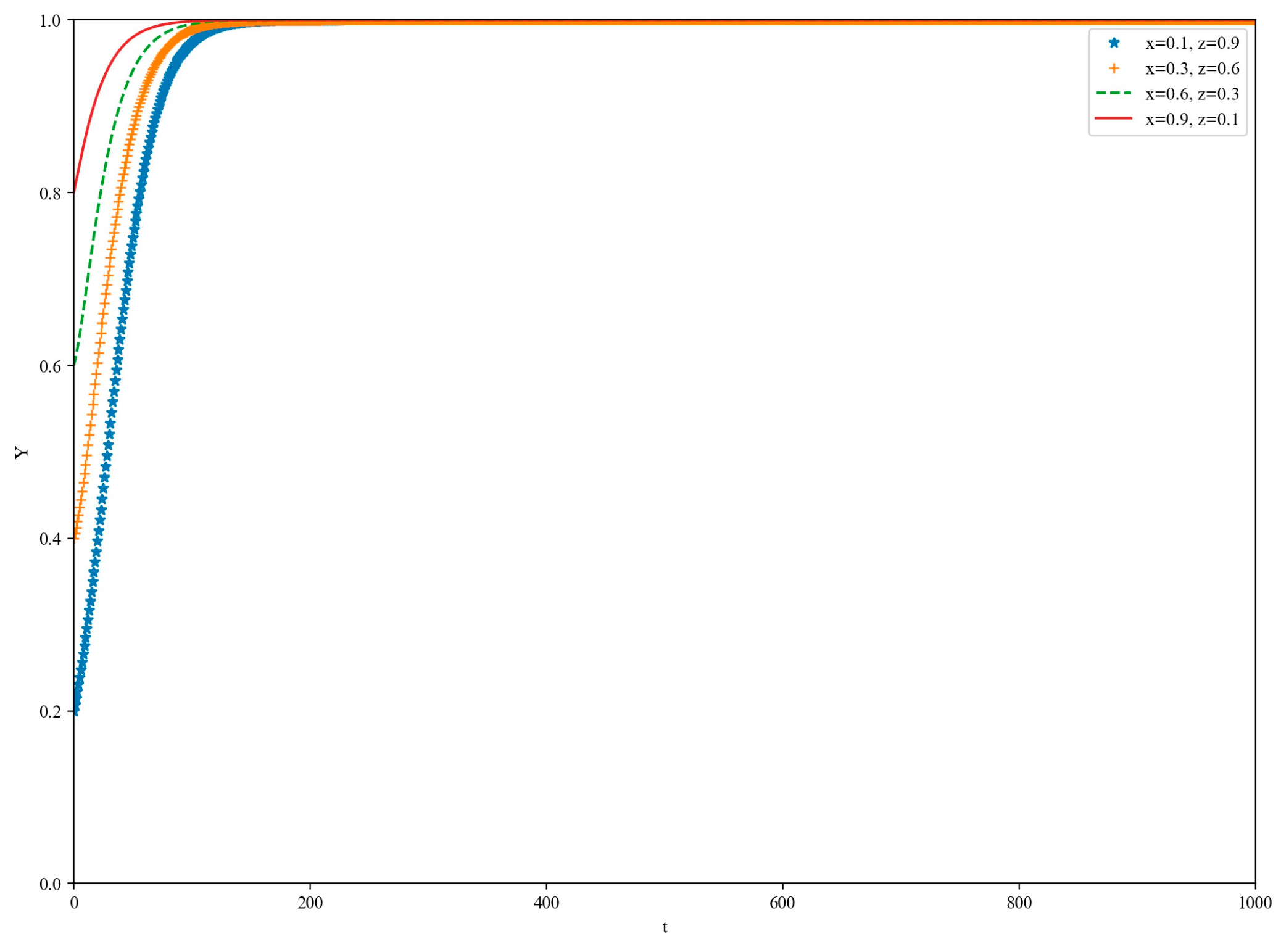

5.3. Medium-Yield Scenario

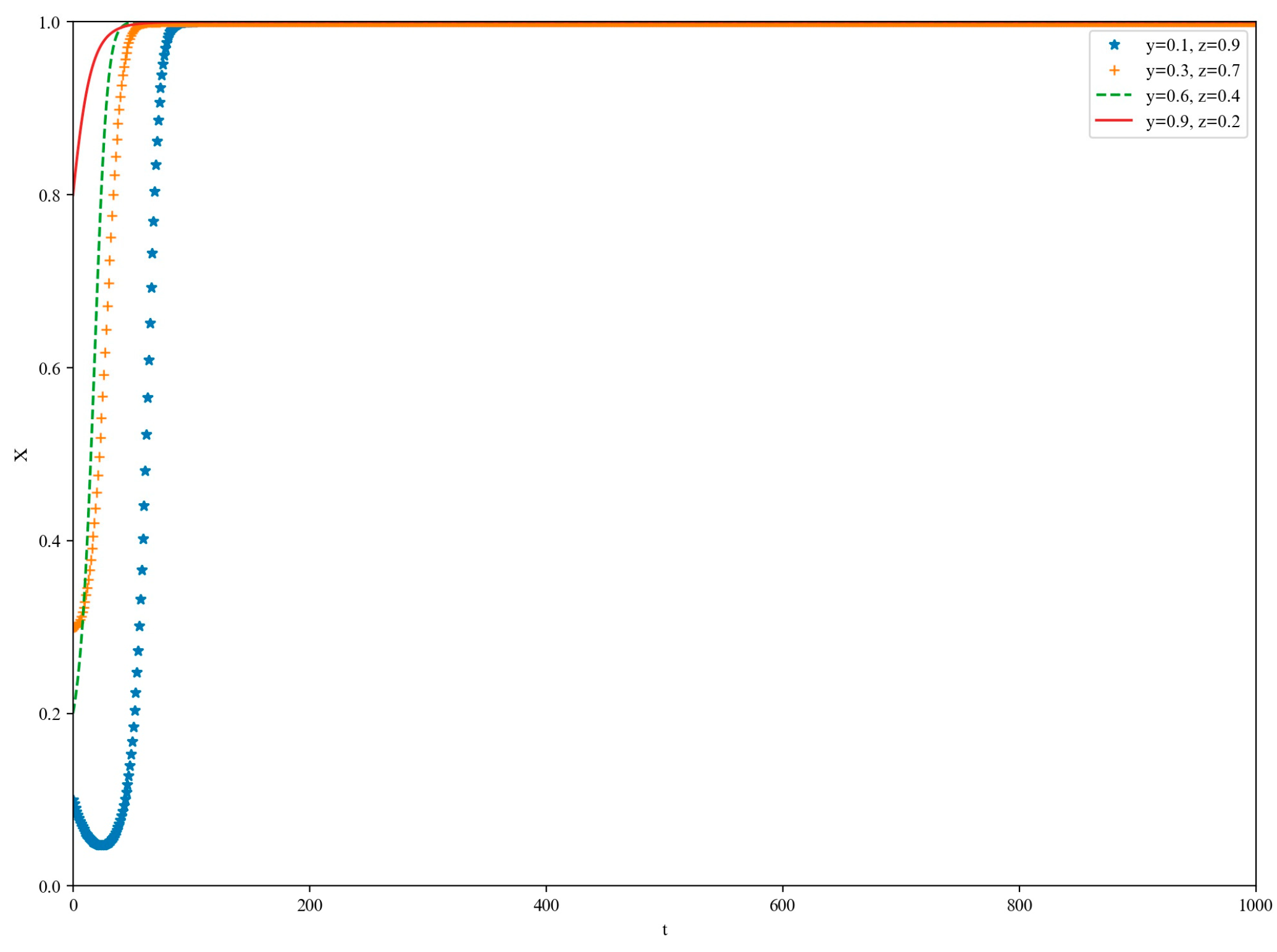

5.4. High-Yield Scenario

6. Discussion and Conclusions

6.1. Discussion

6.2. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Symbol | Description | Symbol | Description |
|---|---|---|---|
| | Platform-based enterprises’ proportion of choosing not to leak user privacy information | | Benefits to users from participating in co-governance |
| | Proportion of platform-based enterprises not leaking user privacy information | | Direct profits of platform-based enterprises from disclosing user privacy |
| | Proportion of co-governance regulation by the regulatory authority | | Losses to non-cooperative users when platform-based enterprises disclose user privacy information |
| | Profit gained by platform-based enterprises from the legal use of user privacy information for commercial activities | | Compensation received by users through co-governance from platform-based enterprises for privacy leakage |
| | Operating cost for platform-based enterprises to avoid leaking user privacy information | | Reputation loss for platform-based enterprises due to user participation in co-governance following privacy leakage |
| | Operating cost for platform-based enterprises to disclose user privacy information | | Identification probability of platform privacy breach under co-governance regulation |
| | Cost of user participation in co-governance | | Identification probability of platform privacy breach under traditional regulation |
| | Regulatory agency’s response cost under co-governance and traditional regulation strategies | | Maximum penalty amount for platforms disclosing user privacy information |
| | Initial investment cost by the regulatory agencies under co-governance and co-management strategies | | Penalty severity for platforms disclosing user privacy information |
| | Reputation benefits for platform-based enterprises that do not disclose user privacy information as a result of user participation in co-governance | | Social benefits of regulatory agencies |

| Users | Regulatory Agencies | | | |
|---|---|---|---|---|
| Platform-based enterprises | | | | |
| 0 | 0 | | | |

| Equilibrium Points | Eigenvalues of the Jacobian Matrix | | |
|---|---|---|---|
| 0 | | | |
| 0 | | | |
| 0 | | | |
| 0 | | | |
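The eigenvalue entries of the equilibrium table did not survive extraction, so the following is only a minimal sketch of how such a table is typically produced for a tripartite replicator system, not the authors' computation. It assumes the standard form dx/dt = x(1 − x)·f(y, z), dy/dt = y(1 − y)·g(x, z), dz/dt = z(1 − z)·h(x, y), where x, y and z stand for the three strategy proportions described in the notation table (the symbol names are assumptions, since the symbol column is missing). The payoff-difference functions `f`, `g`, `h` and all their coefficients are hypothetical placeholders. At any pure-strategy corner the Jacobian is diagonal, so its eigenvalues can be read off directly and a corner is an evolutionarily stable strategy (ESS) when all three are negative.

```python
from itertools import product

# Hypothetical payoff differences (illustrative numbers, NOT taken from the paper).
def f(y, z):
    # Platforms: payoff of "not leak" minus payoff of "leak".
    return -1.0 + 2.0 * y + 3.0 * z

def g(x, z):
    # Users: payoff of "participate in co-governance" minus "do not participate".
    return 1.0 - 0.5 * x + 0.5 * z

def h(x, y):
    # Regulators: payoff of "co-governance" minus "traditional regulation".
    return 0.5 - x + y

def corner_eigenvalues(corner):
    """Eigenvalues of the Jacobian at a pure-strategy corner (x, y, z) in {0, 1}^3.

    Off-diagonal entries carry a factor x(1 - x), y(1 - y) or z(1 - z),
    which vanishes at a corner, so the diagonal entries are the eigenvalues.
    """
    x, y, z = corner
    return ((1 - 2 * x) * f(y, z),
            (1 - 2 * y) * g(x, z),
            (1 - 2 * z) * h(x, y))

def classify(eigs):
    """Classify a corner by the signs of its eigenvalues (Lyapunov's indirect method)."""
    if any(e == 0 for e in eigs):
        return "undetermined"
    if all(e < 0 for e in eigs):
        return "ESS"
    if all(e > 0 for e in eigs):
        return "unstable"
    return "saddle"

# Evaluate all eight pure-strategy corners.
results = {c: classify(corner_eigenvalues(c)) for c in product((0, 1), repeat=3)}

for corner, label in results.items():
    print(corner, corner_eigenvalues(corner), label)
```

With these placeholder coefficients only the corner (1, 1, 1) is classified as an ESS; substituting the model's actual payoff differences would be needed to reproduce the scenario-specific results.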

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xu, P.; Li, J.; Sun, Z. Exploring Privacy Leakage in Platform-Based Enterprises: A Tripartite Evolutionary Game Analysis and Multilateral Co-Regulation Framework. Information 2025, 16, 193. https://doi.org/10.3390/info16030193