The Impact of Code Smells on Software Bugs: A Systematic Literature Review

Abstract

1. Introduction

2. Code Smells and Software Bugs

3. Research Methodology

3.1. Searching for Study Sources

3.2. Selection of Primary Studies

3.3. The Automatic Search

3.4. Data Extraction

3.5. Potentially Relevant Studies

4. Results and Discussion

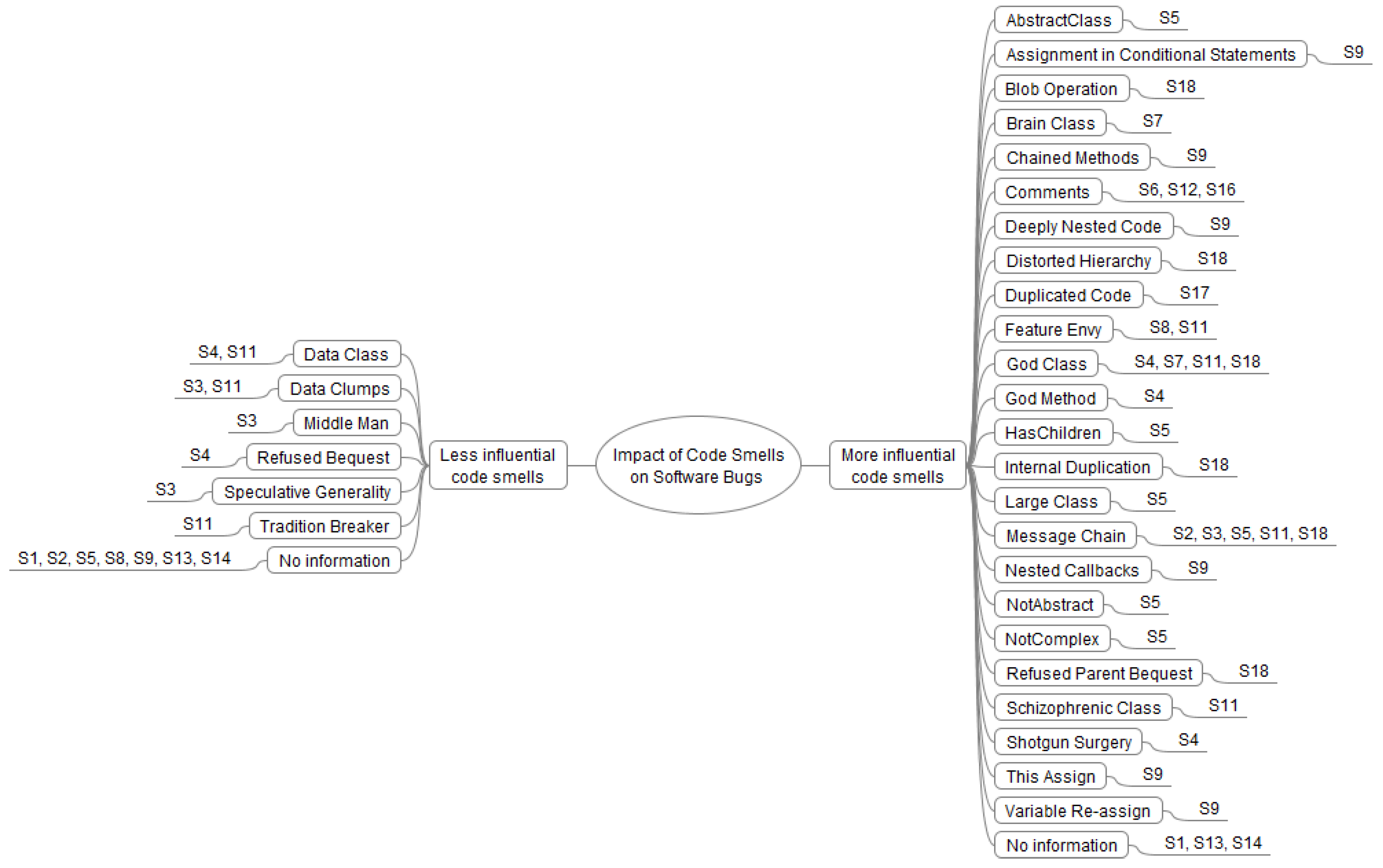

4.1. Influence of Code Smells on Software Bugs

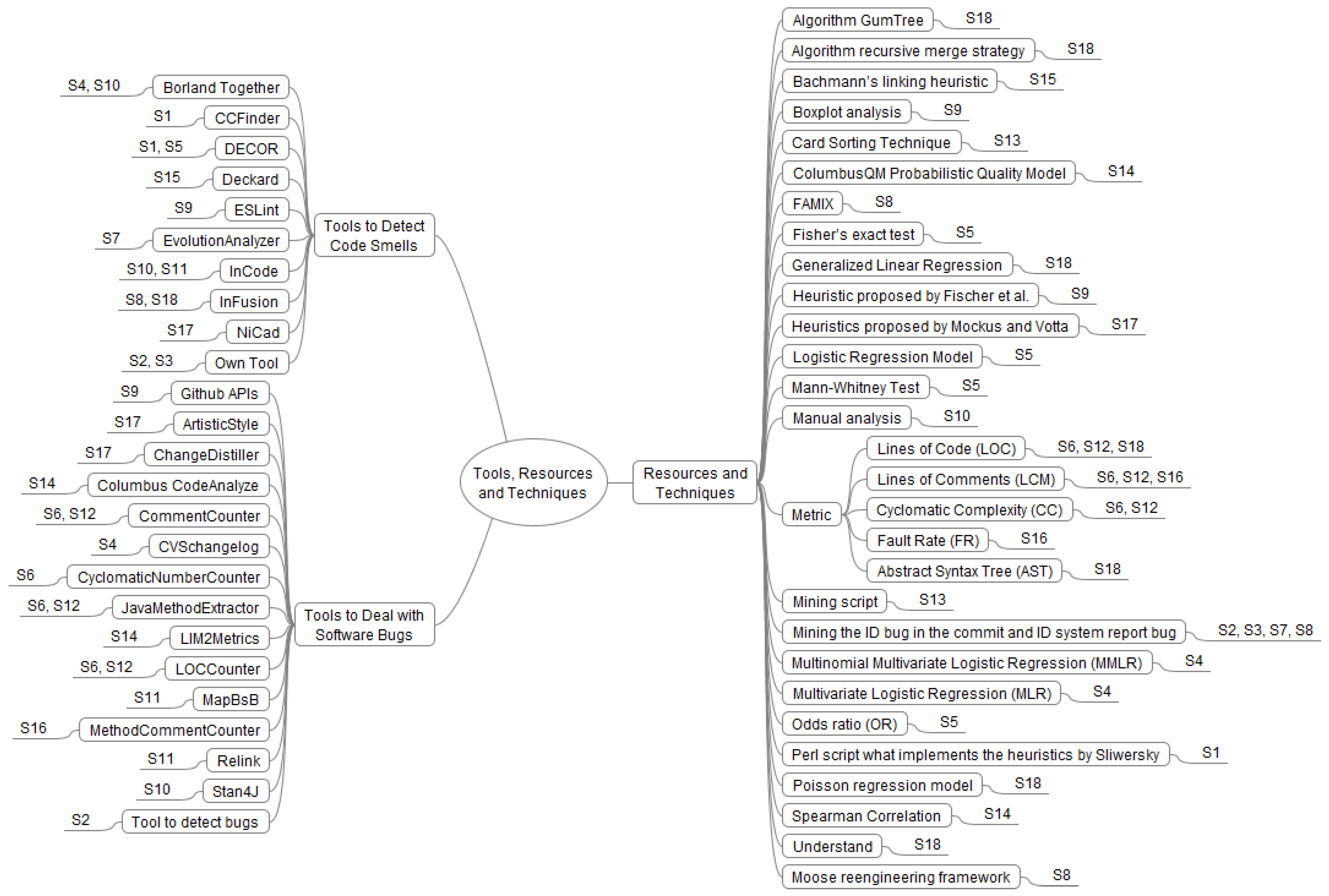

4.2. Tools, Resources, and Techniques to Identify the Influence of Specific Code Smells on Bugs

4.3. Software Projects Analyzed in the Selected Studies

5. Threats to Validity

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A

| ID | Author, Title | Venue | Year |
|---|---|---|---|
| S4 [9] | Li, W.; Shatnawi, R. An empirical study of the bad smells and class error probability in the post-release object-oriented system evolution | JSSOD | 2007 |
| S16 [69] | Aman, H. An Empirical Analysis on Fault-proneness of Well-Commented Modules | IWESEP | 2012 |
| S15 [1] | Rahman, F.; Bird, C.; Devanbu, P. Clones: What is that smell? | MSR | 2012 |
| S14 [53] | Bán, D.; Ferenc, R. Recognizing antipatterns and analyzing their effects on software maintainability | ICCSA | 2014 |
| S3 [8] | Hall, T.; Zhang, M.; Bowes, D.; Sun, Y. Some code smells have a significant but small effect on faults | ATSME | 2014 |
| S12 [63] | Aman, H.; Amasaki, S.; Sasaki, T.; Kawahara, M. Empirical Analysis of Fault-Proneness in Methods by Focusing on their Comment Lines | APSEC | 2014 |
| S13 [52] | Das, T.; Di Penta, M.; Malavolta, I. A Quantitative and Qualitative Investigation of Performance-Related Commits in Android Apps | ICSME | 2016 |
| S1 [5] | Jaafar, F.; Lozano, A.; Guéhéneuc, Y.-G.; Mens, K. On the analysis of co-occurrence of anti-patterns and clones | QRS | 2017 |
| S2 [7] | Palomba, F.; Bavota, G.; Di Penta, M.; Fasano, F.; Oliveto, R.; De Lucia, A. On the diffuseness and the impact on maintainability of code smells: a large scale empirical investigation | ESENF | 2017 |
| S11 [13] | Nascimento, R.; Sant’Anna, C. Investigating the Relationship Between Bad Smells and Bugs in Software Systems | SBCARS | 2017 |
| S17 [4] | Rahman, M.S.; Roy, C.K. On the Relationships between Stability and Bug-Proneness of Code Clones: An Empirical Study | SCAM | 2017 |

| ID | Author, Title | Forward/Backward Snowballing | Venue | Year |
|---|---|---|---|---|
| S5 [37] | Khomh, F.; Di Penta, M.; Guéhéneuc, Y.-G. An Exploratory Study of the Impact of Code Smells on Software Change-proneness | Backward of S2 | WCRE | 2009 |
| S7 [11] | Olbrich, S.M.; Cruzes, D.S.; Sjøberg, D.I. Are all Code Smells Harmful? A Study of God Classes and Brain Classes in the Evolution of three Open Source Systems | Backward of S2 | ICSM | 2010 |
| S8 [3] | D’Ambros, M.; Bacchelli, A.; Lanza, M. On the Impact of Design Flaws on Software Defects | Backward of S2 | QSIC | 2010 |
| S10 [10] | Yamashita, A.; Moonen, L. To what extent can maintenance problems be predicted by code smell detection? –An empirical study | Backward of S3 | Information and Software Technology | 2013 |
| S6 [70] | Aman, H.; Amasaki, S.; Sasaki, T.; Kawahara, M. Lines of Comments as a Noteworthy Metric for Analyzing Fault-Proneness in Methods | Forward of S16 | IEICE | 2015 |
| S9 [39] | Saboury, A.; Musavi, P.; Khomh, F.; Antoniol, G. An Empirical Study of Code Smells in JavaScript projects | Forward of S2 | SANER | 2017 |
| S18 [12] | Ahmed, I.; Brindescu, C.; Mannan, U.A.; Jensen, C.; Sarma, A. An empirical examination of the relationship between code smells and merge conflicts | Forward of S3 | ESEM | 2017 |

References

- Rahman, F.; Bird, C.; Devanbu, P. Clones: What is that smell? Empir. Softw. Eng. 2012, 17, 503–530.

- Fowler, M.; Beck, K. Refactoring: Improving the Design of Existing Code; Addison-Wesley Professional: Boston, MA, USA, 1999.

- D’Ambros, M.; Bacchelli, A.; Lanza, M. On the impact of design flaws on software defects. In Proceedings of the 10th International Conference on Quality Software (QSIC), Los Alamitos, CA, USA, 14–15 July 2010; pp. 23–31.

- Rahman, M.S.; Roy, C.K. On the relationships between stability and bug-proneness of code clones: An empirical study. In Proceedings of the 17th International Working Conference on Source Code Analysis and Manipulation (SCAM), Shanghai, China, 17–18 September 2017; pp. 131–140.

- Jaafar, F.; Lozano, A.; Guéhéneuc, Y.G.; Mens, K. On the Analysis of Co-Occurrence of Anti-Patterns and Clones. In Proceedings of the 2017 IEEE International Conference on Software Quality, Reliability and Security (QRS), Prague, Czech Republic, 25–29 July 2017; pp. 274–284.

- Khomh, F.; Di Penta, M.; Guéhéneuc, Y.G. An exploratory study of the impact of code smells on software change-proneness. In Proceedings of the 16th Working Conference on Reverse Engineering, Lille, France, 13–16 October 2009; pp. 75–84.

- Palomba, F.; Bavota, G.; Di Penta, M.; Fasano, F.; Oliveto, R.; De Lucia, A. On the diffuseness and the impact on maintainability of code smells: A large scale empirical investigation. Empir. Softw. Eng. 2017, 23, 1188–1221.

- Hall, T.; Zhang, M.; Bowes, D.; Sun, Y. Some code smells have a significant but small effect on faults. ACM Trans. Softw. Eng. Methodol. (TOSEM) 2014, 23, 33.

- Li, W.; Shatnawi, R. An empirical study of the bad smells and class error probability in the post-release object-oriented system evolution. J. Syst. Softw. 2007, 80, 1120–1128.

- Yamashita, A.; Moonen, L. To what extent can maintenance problems be predicted by code smell detection?—An empirical study. Inf. Softw. Technol. 2013, 55, 2223–2242.

- Olbrich, S.M.; Cruzes, D.S.; Sjøberg, D.I. Are all code smells harmful? A study of God Classes and Brain Classes in the evolution of three open source systems. In Proceedings of the 2010 IEEE International Conference on Software Maintenance (ICSM), Timisoara, Romania, 12–18 September 2010; pp. 1–10.

- Ahmed, I.; Brindescu, C.; Mannan, U.A.; Jensen, C.; Sarma, A. An empirical examination of the relationship between code smells and merge conflicts. In Proceedings of the 11th ACM/IEEE International Symposium on Empirical Software Engineering and Measurement, Toronto, ON, Canada, 9–10 November 2017; pp. 58–67.

- Nascimento, R.; Sant’Anna, C. Investigating the relationship between bad smells and bugs in software systems. In Proceedings of the 11th Brazilian Symposium on Software Components, Architectures, and Reuse, Fortaleza, Ceará, Brazil, 18–19 September 2017; p. 4.

- Rasool, G.; Arshad, Z. A review of code smell mining techniques. J. Softw. Evol. Process 2015, 27, 867–895.

- Fernandes, E.; Oliveira, J.; Vale, G.; Paiva, T.; Figueiredo, E. A review-based comparative study of bad smell detection tools. In Proceedings of the 20th International Conference on Evaluation and Assessment in Software Engineering, Limerick, Ireland, 1–3 June 2016; p. 18.

- Fontana, F.A.; Braione, P.; Zanoni, M. Automatic detection of bad smells in code: An experimental assessment. J. Object Technol. 2012, 11, 1–38.

- Lehman, M.M. Laws of software evolution revisited. In European Workshop on Software Process Technology; Springer: Berlin, Germany, 1996; pp. 108–124.

- Tufano, M.; Palomba, F.; Bavota, G.; Oliveto, R.; Di Penta, M.; De Lucia, A.; Poshyvanyk, D. When and why your code starts to smell bad. In Proceedings of the 37th International Conference on Software Engineering-Volume 1, Florence, Italy, 16–24 May 2015; pp. 403–414.

- Palomba, F.; Bavota, G.; Di Penta, M.; Oliveto, R.; Poshyvanyk, D.; De Lucia, A. Mining version histories for detecting code smells. IEEE Trans. Softw. Eng. 2015, 41, 462–489.

- Moha, N.; Guéhéneuc, Y.G.; Duchien, L.; Le Meur, A.F. DECOR: A method for the specification and detection of code and design smells. IEEE Trans. Softw. Eng. 2010, 36, 20–36.

- Tsantalis, N.; Chatzigeorgiou, A. Identification of move method refactoring opportunities. IEEE Trans. Softw. Eng. 2009, 35, 347–367.

- Sahin, D.; Kessentini, M.; Bechikh, S.; Deb, K. Code-smell detection as a bilevel problem. ACM Trans. Softw. Eng. Methodol. (TOSEM) 2014, 24, 6.

- Khomh, F.; Vaucher, S.; Guéhéneuc, Y.G.; Sahraoui, H. A Bayesian approach for the detection of code and design smells. In Proceedings of the 9th International Conference on Quality Software, Jeju, South Korea, 24–25 August 2009; pp. 305–314.

- Marinescu, R. Detection strategies: Metrics-based rules for detecting design flaws. In Proceedings of the 20th IEEE International Conference on Software Maintenance, Chicago, IL, USA, 11–14 September 2004; pp. 350–359.

- Kessentini, W.; Kessentini, M.; Sahraoui, H.; Bechikh, S.; Ouni, A. A cooperative parallel search-based software engineering approach for code-smells detection. IEEE Trans. Softw. Eng. 2014, 40, 841–861.

- Danphitsanuphan, P.; Suwantada, T. Code smell detecting tool and code smell-structure bug relationship. In Proceedings of the 2012 Spring Congress on Engineering and Technology (S-CET), Xi’an, China, 27–30 May 2012; pp. 1–5.

- Griswold, W.G. Program Restructuring as an Aid to Software Maintenance; University of Washington: Seattle, WA, USA, 1992.

- Griswold, W.G.; Notkin, D. Automated assistance for program restructuring. ACM Trans. Softw. Eng. Methodol. (TOSEM) 1993, 2, 228–269.

- Opdyke, W.F. Refactoring: An Aid in Designing Application Frameworks and Evolving Object-Oriented Systems. Available online: https://refactory.com/papers/doc_details/29-refactoring-an-aid-in-designing-application-frameworks-and-evolving-object-oriented-systems (accessed on 6 November 2018).

- Opdyke, W.F. Refactoring Object-Oriented Frameworks; University of Illinois at Urbana-Champaign: Champaign, IL, USA, 1992.

- Ambler, S.; Nalbone, J.; Vizdos, M. The Enterprise Unified Process: Extending the Rational Unified Process; Prentice Hall Press: Upper Saddle River, NJ, USA, 2005.

- Van Emden, E.; Moonen, L. Java quality assurance by detecting code smells. In Proceedings of the 2002 Ninth Working Conference on Reverse Engineering, Richmond, VA, USA, 29 October–1 November 2002; pp. 97–106.

- Marinescu, R. Detecting design flaws via metrics in object-oriented systems. In Proceedings of the 39th International Conference and Exhibition on Technology of Object-Oriented Languages and Systems, Santa Barbara, CA, USA, 29 July–3 August 2001; pp. 173–182.

- Marinescu, R. Measurement and quality in object-oriented design. In Proceedings of the 21st IEEE International Conference on Software Maintenance, Budapest, Hungary, 26–29 September 2005; pp. 701–704.

- Lanza, M.; Marinescu, R. Object-Oriented Metrics in Practice: Using Software Metrics to Characterize, Evaluate, and Improve the Design of Object-Oriented Systems; Springer Science & Business Media: Berlin, Germany, 2007.

- Mantyla, M.; Vanhanen, J.; Lassenius, C. A taxonomy and an initial empirical study of bad smells in code. In Proceedings of the 2003 International Conference on Software Maintenance, Amsterdam, The Netherlands, 22–26 September 2003; pp. 381–384.

- Khomh, F.; Di Penta, M.; Guéhéneuc, Y.G.; Antoniol, G. An exploratory study of the impact of antipatterns on class change-and fault-proneness. Empir. Softw. Eng. 2012, 17, 243–275.

- Visser, J.; Rigal, S.; Wijnholds, G.; van Eck, P.; van der Leek, R. Building Maintainable Software, C# Edition: Ten Guidelines for Future-Proof Code; O’Reilly Media, Inc.: Newton, MA, USA, 2016.

- Saboury, A.; Musavi, P.; Khomh, F.; Antoniol, G. An empirical study of code smells in JavaScript projects. In Proceedings of the 24th International Conference on Software Analysis, Evolution and Reengineering (SANER), Klagenfurt, Austria, 21–24 February 2017; pp. 294–305.

- Yamashita, A.; Moonen, L. Do developers care about code smells? An exploratory survey. In Proceedings of the 20th Working Conference on Reverse Engineering (WCRE), Koblenz, Germany, 14–17 October 2013; pp. 242–251.

- Olbrich, S.; Cruzes, D.S.; Basili, V.; Zazworka, N. The evolution and impact of code smells: A case study of two open source systems. In Proceedings of the 3rd International Symposium on Empirical Software Engineering and Measurement, Lake Buena Vista, FL, USA, 15–16 October 2009; pp. 390–400.

- Palomba, F.; Bavota, G.; Di Penta, M.; Oliveto, R.; De Lucia, A.; Poshyvanyk, D. Detecting bad smells in source code using change history information. In Proceedings of the 28th IEEE/ACM International Conference on Automated Software Engineering, Silicon Valley, CA, USA, 11–15 November 2013; pp. 268–278.

- Kitchenham, B.; Charters, S. Guidelines for performing Systematic Literature Reviews in Software Engineering; EBSE: Durham, UK, 2007; Volume 2.

- Zhang, H.; Babar, M.A.; Tell, P. Identifying relevant studies in software engineering. Inf. Softw. Technol. 2011, 53, 625–637.

- Dybå, T.; Dingsøyr, T. Empirical studies of agile software development: A systematic review. Inf. Softw. Technol. 2008, 50, 833–859.

- Skoglund, M.; Runeson, P. Reference-based search strategies in systematic reviews. In Proceedings of the 13th International Conference on Evaluation and Assessment in Software Engineering, Swindon, UK, 20–21 April 2009.

- Wohlin, C.; Runeson, P.; Höst, M.; Ohlsson, M.C.; Regnell, B.; Wesslén, A. Experimentation in Software Engineering; Springer Science & Business Media: Berlin, Germany, 2012.

- Cotroneo, D.; Pietrantuono, R.; Russo, S.; Trivedi, K. How do bugs surface? A comprehensive study on the characteristics of software bugs manifestation. J. Syst. Softw. 2016, 113, 27–43.

- Qin, F.; Zheng, Z.; Li, X.; Qiao, Y.; Trivedi, K.S. An empirical investigation of fault triggers in Android operating system. In Proceedings of the 22nd Pacific Rim International Symposium on Dependable Computing (PRDC), Christchurch, New Zealand, 22–25 January 2017; pp. 135–144.

- González-Barahona, J.M.; Robles, G. On the reproducibility of empirical software engineering studies based on data retrieved from development repositories. Empir. Softw. Eng. 2012, 17, 75–89.

- Panjer, L.D. Predicting Eclipse bug lifetimes. In Proceedings of the Fourth International Workshop on Mining Software Repositories, Minneapolis, MN, USA, 20–26 May 2007; p. 29.

- Das, T.; Di Penta, M.; Malavolta, I. A Quantitative and Qualitative Investigation of Performance-Related Commits in Android Apps. In Proceedings of the 2016 IEEE International Conference on Software Maintenance and Evolution (ICSME), Raleigh, NC, USA, 2–7 October 2016; pp. 443–447.

- Bán, D.; Ferenc, R. Recognizing antipatterns and analyzing their effects on software maintainability. In Computational Science and Its Applications—ICCSA 2014; Springer: Cham, Switzerland, 2014; pp. 337–352.

- Śliwerski, J.; Zimmermann, T.; Zeller, A. When do changes induce fixes? In Proceedings of the 2005 International Workshop on Mining Software Repositories, St. Louis, MO, USA, 17 May 2005; ACM SIGSOFT Software Engineering Notes. ACM: New York, NY, USA, 2005; Volume 30, pp. 1–5.

- Sheskin, D.J. Handbook of Parametric and Nonparametric Statistical Procedures; CRC Press: Boca Raton, FL, USA, 2003.

- Hosmer, D.; Lemeshow, S. Applied Logistic Regression, 2nd ed.; John Wiley & Sons: New York, NY, USA, 2000.

- Vokac, M. Defect frequency and design patterns: An empirical study of industrial code. IEEE Trans. Softw. Eng. 2004, 30, 904–917.

- Jiang, L.; Misherghi, G.; Su, Z.; Glondu, S. Deckard: Scalable and accurate tree-based detection of code clones. In Proceedings of the 29th International Conference on Software Engineering, Minneapolis, MN, USA, 20–26 May 2007; pp. 96–105.

- Demeyer, S.; Tichelaar, S.; Ducasse, S. FAMIX 2.1—The FAMOOS Information Exchange Model; Technical Report; University of Bern: Bern, Switzerland, 2001.

- Nierstrasz, O.; Ducasse, S.; Gîrba, T. The story of Moose: An agile reengineering environment. ACM SIGSOFT Softw. Eng. Notes 2005, 30, 1–10.

- Fischer, M.; Pinzger, M.; Gall, H. Populating a release history database from version control and bug tracking systems. In Proceedings of the International Conference on Software Maintenance, Amsterdam, The Netherlands, 22–26 September 2003; pp. 23–32.

- Čubranić, D.; Murphy, G.C. Hipikat: Recommending pertinent software development artifacts. In Proceedings of the 25th International Conference on Software Engineering, Portland, OR, USA, 3–10 May 2003; pp. 408–418.

- Aman, H. Quantitative analysis of relationships among comment description, comment out and fault-proneness in open source software. IPSJ J. 2012, 53, 612–621.

- Fox, J.; Weisberg, S. An R Companion to Applied Regression; Sage Publications: Thousand Oaks, CA, USA, 2010.

- Spencer, D. Card Sorting: Designing Usable Categories; Rosenfeld Media: New York, NY, USA, 2009.

- Bakota, T.; Hegedus, P.; Körtvélyesi, P.; Ferenc, R.; Gyimóthy, T. A probabilistic software quality model. In Proceedings of the 27th IEEE International Conference on Software Maintenance (ICSM), Williamsburg, VA, USA, 25–30 September 2011; pp. 243–252.

- Ferenc, R.; Beszédes, Á.; Tarkiainen, M.; Gyimóthy, T. Columbus-reverse engineering tool and schema for C++. In Proceedings of the International Conference on Software Maintenance, Washington, DC, USA, 3–6 October 2002; pp. 172–181.

- Mockus, A.; Votta, L.G. Identifying Reasons for Software Changes Using Historic Databases; ICSM: Daejeon, Korea, 2000; pp. 120–130.

- Chen, J.C.; Huang, S.J. An empirical analysis of the impact of software development problem factors on software maintainability. J. Syst. Softw. 2009, 82, 981–992.

- Aman, H.; Amasaki, S.; Sasaki, T.; Kawahara, M. Lines of comments as a noteworthy metric for analyzing fault-proneness in methods. IEICE Trans. Inf. Syst. 2015, 98, 2218–2228.

| Code Smell (Fowler [2]) | Alternative Name(s) in the Selected Studies |
|---|---|
| Duplicated Code | Clones (S15), Code clones (S1, S17) |
| Comments | Comments Line (S12) |
| Long Method | God Method (S4) |
| Long Parameter List | Long Parameter List Class (S5) |
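Because some selected studies report the same smell under a different name, findings can only be aggregated after each reported name is mapped back to Fowler's catalogue. The Python sketch below shows one way to do that normalization, assuming a simple case-insensitive lookup built from the table above; the names `ALTERNATIVE_TO_FOWLER` and `canonical_smell` are hypothetical and do not come from any of the studies.

```python
# Hypothetical mapping built from the table above: alternative smell names
# used in the selected studies -> corresponding smell in Fowler's catalogue.
ALTERNATIVE_TO_FOWLER = {
    "Clones": "Duplicated Code",                         # S15
    "Code clones": "Duplicated Code",                    # S1, S17
    "Comments Line": "Comments",                         # S12
    "God Method": "Long Method",                         # S4
    "Long Parameter List Class": "Long Parameter List",  # S5
}

# Lower-cased keys so the lookup ignores capitalization differences.
_LOOKUP = {alt.lower(): fowler for alt, fowler in ALTERNATIVE_TO_FOWLER.items()}


def canonical_smell(name: str) -> str:
    """Return Fowler's name for a reported smell; unknown names pass through."""
    return _LOOKUP.get(name.strip().lower(), name)


if __name__ == "__main__":
    for reported in ["Code clones", "God Method", "Feature Envy"]:
        print(f"{reported} -> {canonical_smell(reported)}")
```

Names that do not appear in the table are returned unchanged, so a smell already reported under Fowler's own name needs no entry in the mapping.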

| Public Data Source | Search Results | Relevant Studies | Search Effectiveness |
|---|---|---|---|
| ACM | 71 | 4 | 5.63% |
| IEEE | 12 | 2 | 16.67% |
| SCOPUS | 60 | 5 | 8.33% |
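The last column appears to be the number of relevant studies divided by the number of search results, expressed as a percentage. Assuming that definition, the Python sketch below recomputes the column from the table; `search_effectiveness` is a hypothetical helper name, not something defined by the authors.

```python
# Counts copied from the table above: source -> (search results, relevant studies).
SEARCHES = {
    "ACM": (71, 4),
    "IEEE": (12, 2),
    "SCOPUS": (60, 5),
}


def search_effectiveness(results: int, relevant: int) -> float:
    """Percentage of retrieved results judged relevant, rounded to 2 decimals."""
    if results <= 0:
        raise ValueError("results must be positive")
    if not 0 <= relevant <= results:
        raise ValueError("relevant must be between 0 and results")
    return round(100 * relevant / results, 2)


if __name__ == "__main__":
    for source, (results, relevant) in SEARCHES.items():
        print(f"{source}: {search_effectiveness(results, relevant):.2f}%")
    # Pooled figure across all three sources (derived, not reported in the table).
    total_results = sum(r for r, _ in SEARCHES.values())
    total_relevant = sum(v for _, v in SEARCHES.values())
    print(f"All sources: {search_effectiveness(total_results, total_relevant):.2f}%")
```

Pooled across the three sources, the searches return 143 results of which 11 are relevant (about 7.69%); 11 is also the number of studies listed in the first table of Appendix A.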

| Do Code Smells Influence the Occurrence of Software Bugs? | Selected Studies |
|---|---|
| Yes | S1, S2, S3, S4, S5, S6, S7, S8, S9, S11, S12, S13, S14, S16, S17, S18 |
| No | S10, S15 |
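As a quick consistency check on the table, the Python sketch below verifies that each of the selected studies S1 to S18 is classified exactly once and computes the share of studies answering "Yes". The constants and the `summarize` helper are hypothetical names introduced only for this illustration.

```python
# Study IDs copied from the table above.
YES = ["S1", "S2", "S3", "S4", "S5", "S6", "S7", "S8", "S9",
       "S11", "S12", "S13", "S14", "S16", "S17", "S18"]
NO = ["S10", "S15"]
ALL_STUDIES = {f"S{i}" for i in range(1, 19)}  # S1 to S18


def summarize(yes: list[str], no: list[str]) -> tuple[int, int, float]:
    """Return (number of 'Yes' studies, total studies, 'Yes' share in percent)."""
    yes_set, no_set = set(yes), set(no)
    # Each study must appear in exactly one row of the table.
    if yes_set & no_set:
        raise ValueError(f"classified twice: {sorted(yes_set & no_set)}")
    if yes_set | no_set != ALL_STUDIES:
        raise ValueError("some of S1 to S18 are missing or unexpected")
    total = len(yes_set) + len(no_set)
    return len(yes_set), total, round(100 * len(yes_set) / total, 1)


if __name__ == "__main__":
    yes_count, total, share = summarize(YES, NO)
    print(f"{yes_count} of {total} studies ({share}%) answer 'Yes'")
```

With the table as given, the check passes and reports 16 of 18 studies (88.9%).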

| Project | Language | Study |
|---|---|---|
| Eclipse | Java | S1, S3, S4, S5, S16 |
| Apache Xerces | Java | S2, S7, S11 |
| Apache Ant | Java | S2, S11, S16 |
| Apache Lucene | Java | S2, S7, S8 |
| Apache Tomcat | Java | S11, S16 |
| ArgoUML | Java | S2, S3 |
| Azureus | Java | S1, S5 |
| Checkstyle Plug-in; PMD; SQuirreL SQL Client; Hibernate ORM | Java | S6, S12 |
| JHotDraw | Java | S1, S2 |
| Apache Commons | Java | S3 |
| Apache JMeter; Apache Lenya | Java | S11 |
| Apache httpd; Nautilus; Evolution; GIMP | C | S15 |
| Software Project A; Software Project B; Software Project C; Software Project D (undisclosed names) | Java | S10 |
| Request; Less.js; Bower; Express; Grunt | JavaScript | S9 |
| Log4j | Java | S7 |
| Maven; Mina; Eclipse CDT; Eclipse PDE UI; Equinox | Java | S8 |
| aTunes; Cassandra; Eclipse Core; Elasticsearch; FreeMind; Hadoop; HBase; Hibernate; Hive; HSQLDB; Incubating; Ivy; JBoss; jEdit; JFreeChart; jSL; JVlt; Karaf; Nutch; Pig; Qpid; Sax; Struts; Wicket; Derby | Java | S2 |
| DNSJava; JabRef; Carol; Ant-Contrib; OpenYMSG | Java | S17 |
| 2443 apps (undisclosed names) | Not provided by the authors | S13 |
| 34 projects (undisclosed names) | Java | S14 |
| 143 projects (undisclosed names) | Java | S18 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Cairo, A.S.; Carneiro, G.d.F.; Monteiro, M.P. The Impact of Code Smells on Software Bugs: A Systematic Literature Review. Information 2018, 9, 273. https://doi.org/10.3390/info9110273
