Test Experience, Direct Instruction, and Their Combination Promote Accurate Beliefs about the Testing Effect

Abstract

1. Introduction

1.1. Origins of Learners’ Strategy Beliefs

1.2. When Is Direct Instruction Most Effective?

1.3. When Is Test Experience Most Effective?

1.4. Combining Test Experience and Direct Instruction

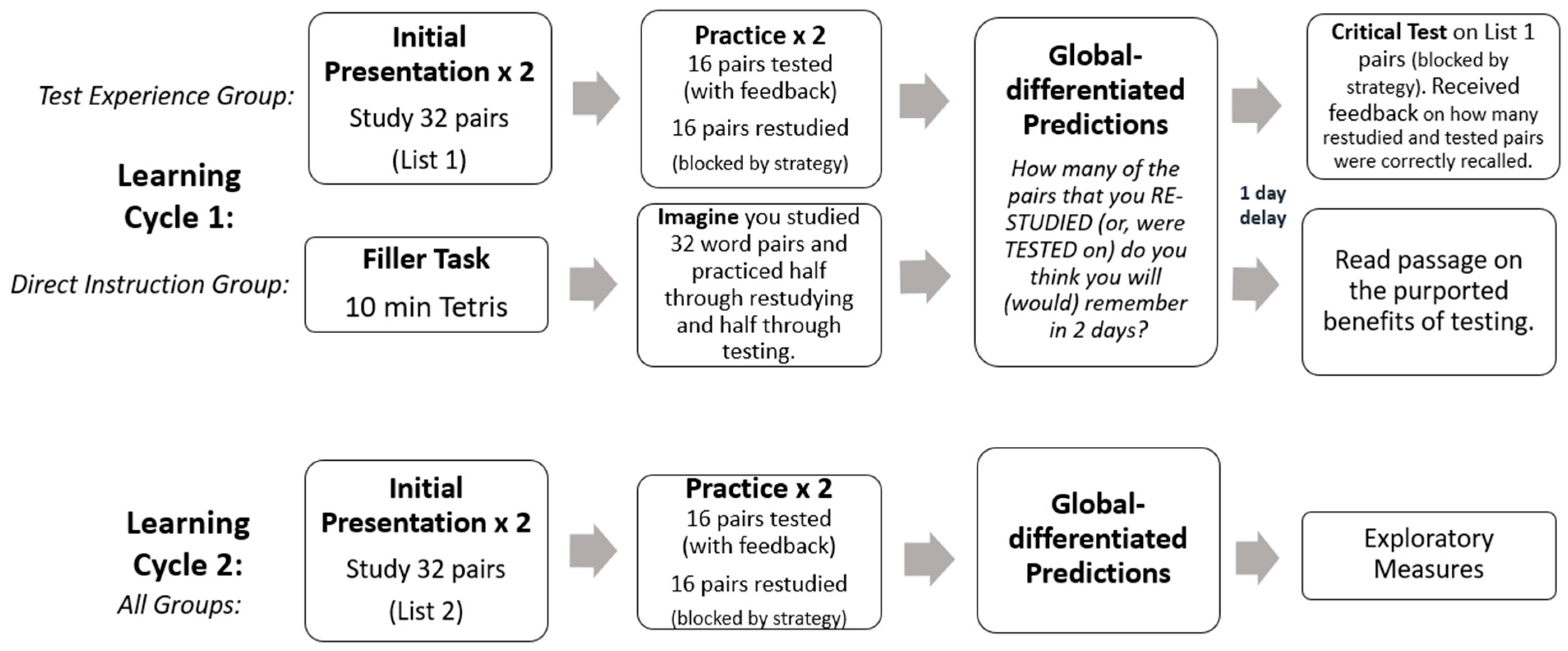

2. Materials and Methods

2.1. Participants

2.2. Design

2.3. Materials

2.4. Procedure

2.4.1. Cycle 1 by Group Assignment

Test Experience Group

Direct Instruction Group

Experience + Instruction Group

2.4.2. Cycle 2 and Exploratory Measures

3. Exclusion Criteria

4. Results

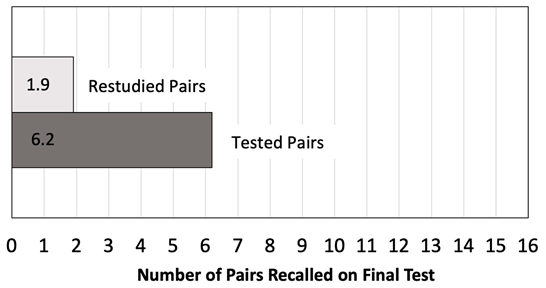

4.1. Memory Performance

4.2. Global-Differentiated Predictions

4.2.1. Test Experience Group

4.2.2. Direct Instruction Group

4.2.3. Experience + Instruction Group

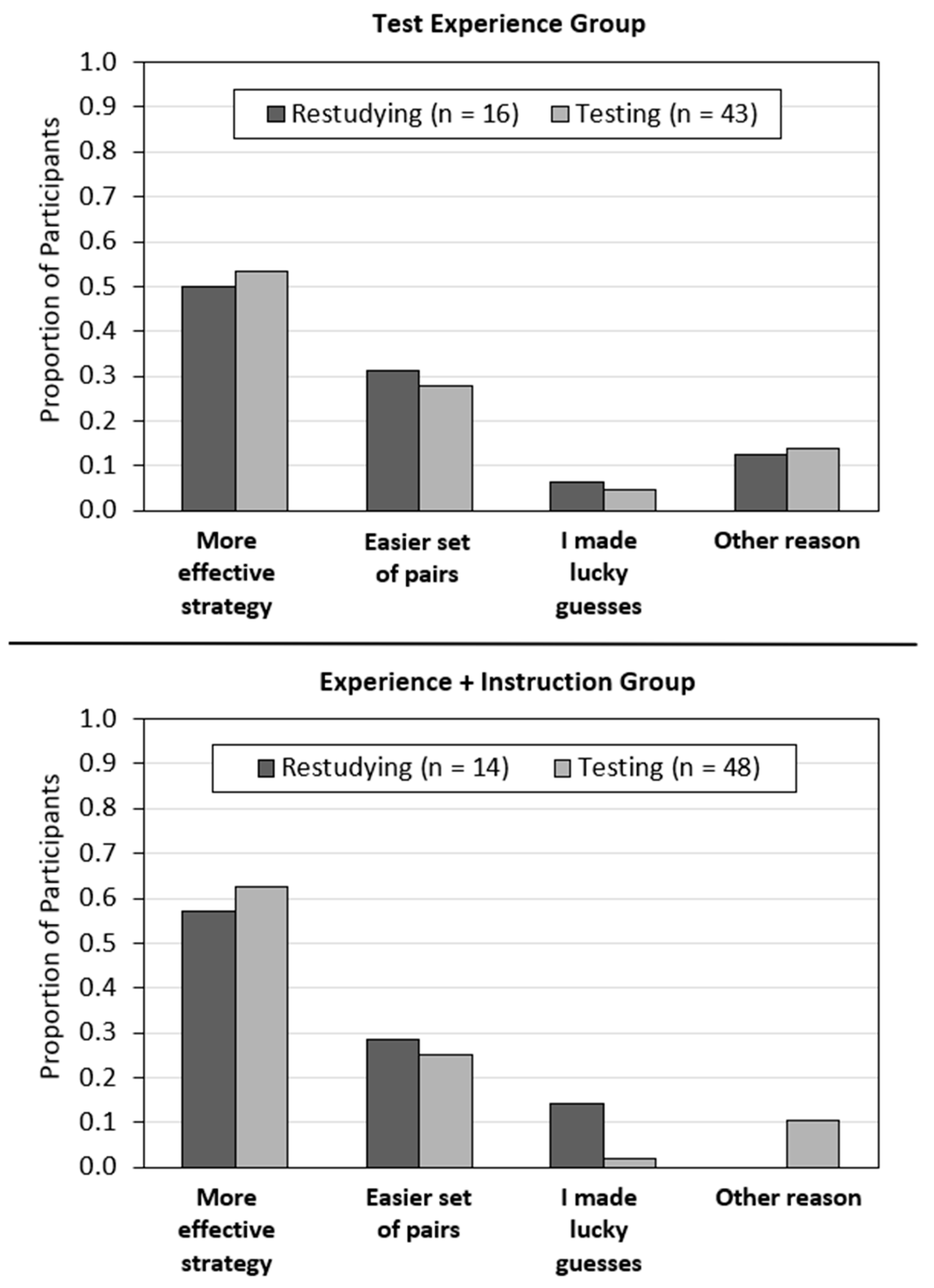

4.3. Exploratory Measures

4.3.1. Ratings of Strategy Effectiveness

4.3.2. Hypothetical Learning Scenario

4.3.3. Ratings of Likelihood of Use

4.3.4. Attributions of Critical Test Performance

5. Discussion

5.1. Conditions That Promote Accurate Strategy Beliefs

5.2. Educational Implications and Future Directions

6. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Instruction about the Benefits of Practice Testing for Memory

Appendix B. Test on Practice Testing Passage

- Based on the research previously described, which strategy is more effective for learning word pairs (for example, dog–spoon)?
  - Self-testing*
  - Restudying

- Select all that apply. Tests can be used to:
  - Help assess knowledge*
  - Help remember information*

- In general, which strategy tends to feel easier during learning?
  - Self-testing
  - Restudying*

| | Restudied Pairs M (SE) | Tested Pairs M (SE) | t | p |
|---|---|---|---|---|
| Test Experience Group | | | | |
| Testing Effect on Performance (n = 43) | | | | |
| Cycle 1 | 6.21 (0.57) | 7.30 (0.60) | 2.77 | .008 |
| Cycle 2 | 5.81 (0.52) | 8.77 (0.65) | 6.41 | <.001 |
| No Testing Effect on Performance (n = 27) | | | | |
| Cycle 1 | 6.59 (0.82) | 7.00 (0.86) | 1.02 | .32 |
| Cycle 2 | 8.96 (0.83) | 9.00 (0.86) | 0.10 | .92 |
| Experience + Instruction Group | | | | |
| Testing Effect on Performance (n = 48) | | | | |
| Cycle 1 | 7.06 (0.54) | 7.69 (0.58) | 1.47 | .15 |
| Cycle 2 | 6.96 (0.61) | 9.73 (0.52) | 7.49 | <.001 |
| No Testing Effect on Performance (n = 23) | | | | |
| Cycle 1 | 6.17 (0.50) | 5.91 (0.86) | 0.39 | .70 |
| Cycle 2 | 6.00 (0.75) | 7.74 (0.86) | 6.48 | <.001 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Rivers, M.L. Test Experience, Direct Instruction, and Their Combination Promote Accurate Beliefs about the Testing Effect. J. Intell. 2023, 11, 147. https://doi.org/10.3390/jintelligence11070147
