EvoMBN: Evolving Multi-Branch Networks on Myocardial Infarction Diagnosis Using 12-Lead Electrocardiograms

Abstract

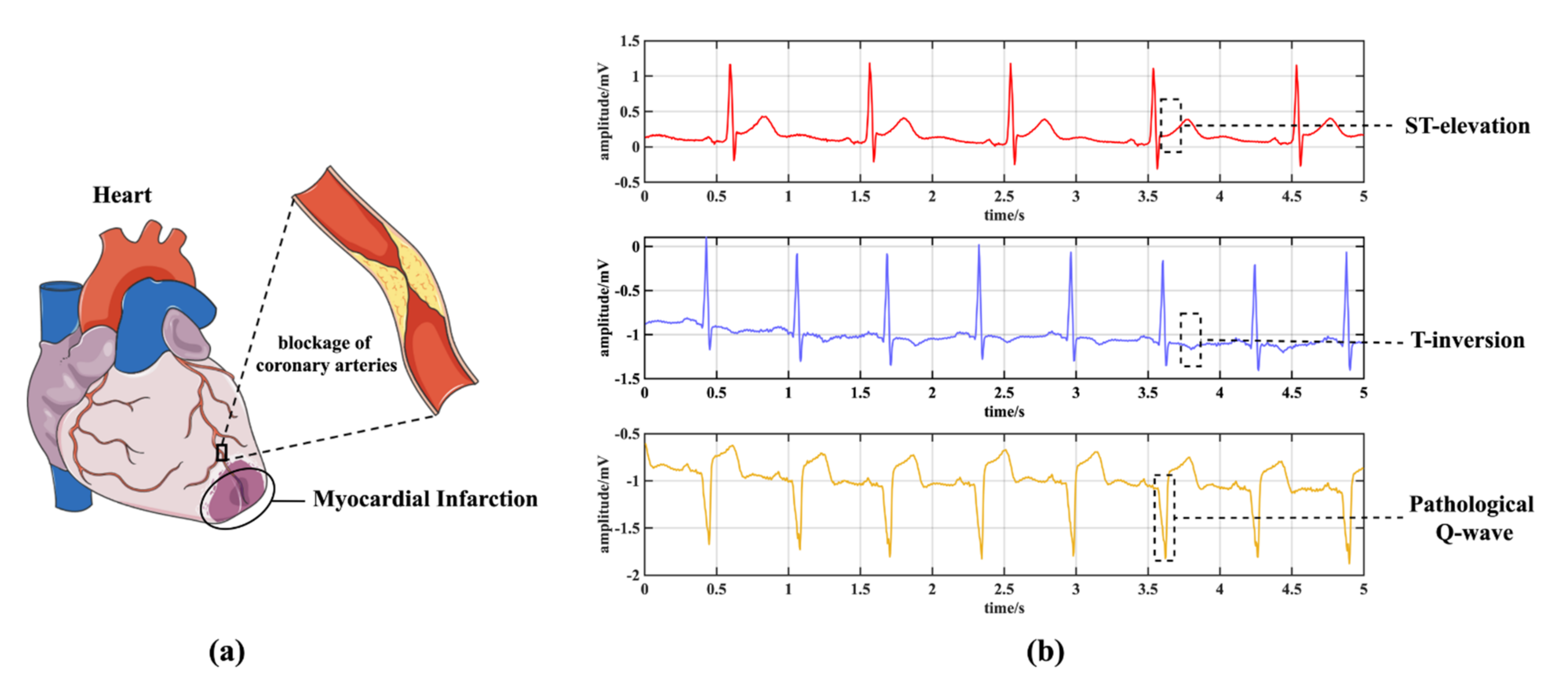

1. Introduction

- (1) To balance computational cost and algorithmic flexibility, EvoMBN uses a genetic algorithm (GA) to perform a constrained architecture optimization based on the multi-branch network (MBN) skeleton. Specifically, a limited number of branch-network layers is fixed in advance, and GA iterations then automatically learn an optimal depth for each branch network. An efficient architecture encoding strategy is proposed to represent the whole model, which makes a global search for the optimal solution possible.

- (2)

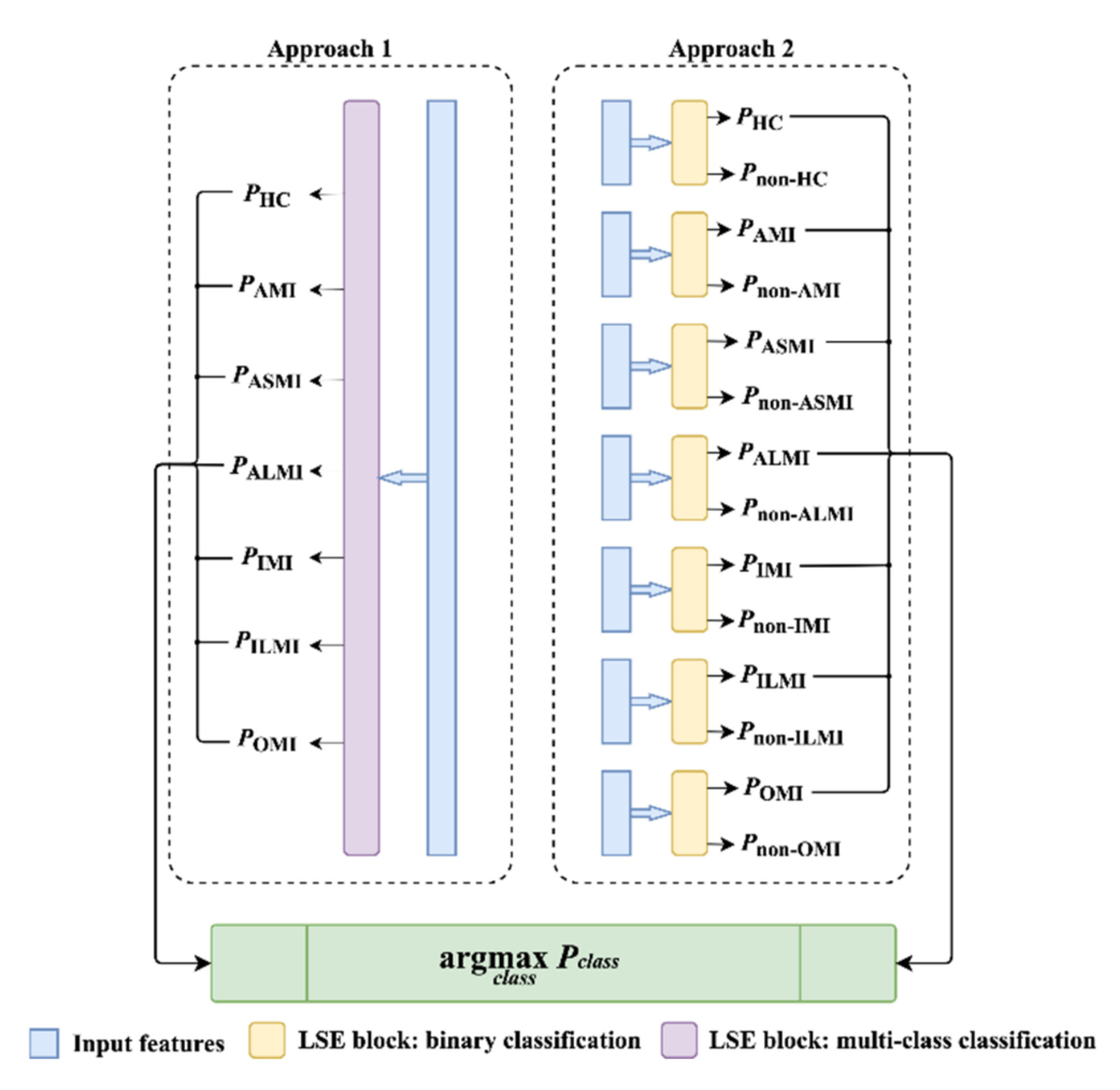

- To efficiently summarize all the leads and produce final results, a novel Lead Squeeze and Excitation (LSE) block that consists of a fully-connected layer and an LSE mechanism is established. The LSE extends the typical SE [39] to weight leads which are more relevant to the target categories. Compared with a simple fully-connected layer for feature summary, the LSE block can achieve a better performance in our experiments.

- (3) To evaluate the generalization of EvoMBN comprehensively, five-fold cross-validation is performed on the Physikalisch-Technische Bundesanstalt (PTB) diagnostic ECG database [40] under the inter-patient paradigm [41]. The inter-patient paradigm is a more practical evaluation setting because it measures generalization to unseen patients: no patient contributes records to both the training and the test sets. Furthermore, the best EvoMBN architecture learned on the PTB database is transferred directly to myocardial infarction (MI) detection and localization on the PTB-XL database [42], a larger ECG database that shares no records with the PTB database. To the best of our knowledge, architecture transfer has not previously been applied to cross-database evaluation in ECG-based MI diagnosis. Finally, the experimental results on both databases demonstrate the robustness of our model.
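Contribution (1) treats the depth of each branch network as the quantity the GA searches over. A minimal Python sketch of such an encoding follows, assuming one integer gene per lead in the standard order I, II, III, aVR, aVL, aVF, V1 to V6, and hypothetical depth bounds of 2 and 17 (chosen only because the individuals listed in the per-class table later in this document fall in that range). The names `random_genome`, `decode`, and `search_space_size` are illustrative, not taken from the authors' code.

```python
import random
from typing import Dict, List

# Assumed lead order; the paper's actual gene ordering may differ.
LEADS = ["I", "II", "III", "aVR", "aVL", "aVF", "V1", "V2", "V3", "V4", "V5", "V6"]
MIN_DEPTH = 2   # hypothetical lower bound on layers kept per branch
MAX_DEPTH = 17  # hypothetical upper bound (layers fixed in advance per branch)

Genome = List[int]  # genome[i] = depth of the branch network for LEADS[i]


def random_genome(rng: random.Random) -> Genome:
    """Sample an individual with an independent, uniformly drawn depth per branch."""
    return [rng.randint(MIN_DEPTH, MAX_DEPTH) for _ in LEADS]


def is_valid(genome: Genome) -> bool:
    """A genome is valid if it has one gene per lead and every depth is in bounds."""
    return len(genome) == len(LEADS) and all(MIN_DEPTH <= d <= MAX_DEPTH for d in genome)


def decode(genome: Genome) -> Dict[str, int]:
    """Map each lead to the number of layers its branch keeps in the assembled model."""
    if not is_valid(genome):
        raise ValueError(f"invalid genome: {genome}")
    return dict(zip(LEADS, genome))


def search_space_size() -> int:
    """Number of distinct architectures this encoding can represent."""
    return (MAX_DEPTH - MIN_DEPTH + 1) ** len(LEADS)


if __name__ == "__main__":
    example = [17, 12, 17, 2, 2, 16, 14, 2, 2, 14, 16, 17]
    print(decode(example)["V1"])  # 14
    print(sum(example))           # 131 layers in total across all branches
    print(search_space_size())    # 281474976710656, i.e. 16 ** 12
```

Even this small encoding admits about 2.8 × 10^14 architectures, far too many to train exhaustively, which is why a population-based search is attractive. Because every gene is bounded independently, gene-wise crossover and bounded mutation always yield valid genomes in this sketch, so no repair step is needed.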

2. Materials and Methods

2.1. Datasets

2.1.1. The PTB Database
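The introduction states that five-fold cross-validation on the PTB database follows the inter-patient paradigm [41], so no patient's records may appear in both the training and the test folds. The sketch below shows one way to build such folds, assuming a hypothetical mapping from record identifiers to patient identifiers. It assigns whole patients to folds and does not stratify by class, which the authors' protocol may do.

```python
import random
from typing import Dict, List, Tuple


def inter_patient_folds(
    record_to_patient: Dict[str, str], k: int = 5, seed: int = 0
) -> List[Tuple[List[str], List[str]]]:
    """Return k (train_records, test_records) pairs with no patient shared within a pair."""
    patients = sorted(set(record_to_patient.values()))
    if len(patients) < k:
        raise ValueError("need at least k patients")
    random.Random(seed).shuffle(patients)
    # Deal shuffled patients round-robin so each fold gets about len(patients) / k of them.
    fold_of = {patient: i % k for i, patient in enumerate(patients)}
    folds = []
    for f in range(k):
        test = [r for r, p in record_to_patient.items() if fold_of[p] == f]
        train = [r for r, p in record_to_patient.items() if fold_of[p] != f]
        folds.append((train, test))
    return folds


if __name__ == "__main__":
    # Hypothetical toy data: 10 patients with 3 records each.
    mapping = {f"rec{p}_{j}": f"patient{p}" for p in range(10) for j in range(3)}
    for train, test in inter_patient_folds(mapping):
        shared = {mapping[r] for r in train} & {mapping[r] for r in test}
        print(len(train), len(test), shared)  # 24 6 set() for every fold
```

scikit-learn's `GroupKFold`, with patient identifiers passed as the `groups` argument, implements the same grouping idea.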

2.1.2. The PTB-XL Database

2.2. Separate Training of the Branch Networks

2.3. LSE Block
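The introduction describes the LSE block as a fully-connected layer combined with an LSE mechanism that extends squeeze-and-excitation (SE) [39] to weight leads. The NumPy sketch below is one plausible reading of that description, not the authors' implementation: it assumes each branch outputs one feature vector per lead, applies SE-style gating across the 12 leads (a mean-pooling squeeze, then a two-layer bottleneck with ReLU and sigmoid), and then applies the fully-connected layer to the reweighted features. The bottleneck width, the order of the two parts, and all parameter names are assumptions.

```python
import numpy as np


def init_lse_params(rng, n_leads=12, n_feats=32, hidden=3, n_classes=2):
    """Randomly initialise hypothetical LSE parameters (bottleneck width `hidden` is assumed)."""
    return {
        "w1": rng.standard_normal((n_leads, hidden)) * 0.1,
        "b1": np.zeros(hidden),
        "w2": rng.standard_normal((hidden, n_leads)) * 0.1,
        "b2": np.zeros(n_leads),
        "w_fc": rng.standard_normal((n_leads * n_feats, n_classes)) * 0.1,
        "b_fc": np.zeros(n_classes),
    }


def lse_block(x, params):
    """Forward pass.

    x: array of shape (batch, n_leads, n_feats), one feature vector per lead.
    Returns (logits of shape (batch, n_classes), lead gates of shape (batch, n_leads)).
    """
    # Squeeze: summarise each lead by the mean of its features.
    z = x.mean(axis=2)                                        # (batch, n_leads)
    # Excitation: bottleneck MLP produces one gate in (0, 1) per lead.
    h = np.maximum(0.0, z @ params["w1"] + params["b1"])      # ReLU
    gates = 1.0 / (1.0 + np.exp(-(h @ params["w2"] + params["b2"])))  # sigmoid
    # Scale: reweight every lead's features by its gate (broadcast over features).
    scaled = x * gates[:, :, None]
    # Summarise all leads with a single fully-connected layer.
    logits = scaled.reshape(x.shape[0], -1) @ params["w_fc"] + params["b_fc"]
    return logits, gates


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((4, 12, 32))
    logits, gates = lse_block(x, init_lse_params(rng))
    print(logits.shape, gates.shape)  # (4, 2) (4, 12)
```

Forcing every gate to 1 reduces this sketch to the plain fully-connected summary that the introduction uses as its baseline, which is a convenient sanity check when comparing the two.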

2.4. Joint GA-Based Architecture Optimization

2.4.1. Encoding Strategy and Problem Formulation

2.4.2. Initialization

2.4.3. Fitness Evaluation

2.4.4. Selection

2.4.5. Crossover and Mutation

2.4.6. Iteration
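Subsections 2.4.2 to 2.4.6 name the stages of the GA search: initialization, fitness evaluation, selection, crossover and mutation, and iteration. The loop below is a generic sketch of those stages over per-branch depth genomes, not the authors' procedure. The operator choices (binary tournament selection, one-point crossover, random-reset mutation, single-individual elitism), all rates, and the toy fitness function are assumptions; a hypothetical toy function stands in for the real fitness evaluation of Section 2.4.3.

```python
import random
from typing import Callable, List, Tuple

Genome = List[int]


def tournament(pop: List[Genome], fits: List[float], rng: random.Random, size: int = 2) -> Genome:
    """Selection: copy the fittest of `size` randomly drawn individuals."""
    contenders = rng.sample(range(len(pop)), size)
    return list(pop[max(contenders, key=lambda i: fits[i])])


def one_point_crossover(a: Genome, b: Genome, rng: random.Random) -> Tuple[Genome, Genome]:
    """Crossover: swap the gene tails of two parents after a random cut point."""
    cut = rng.randint(1, len(a) - 1)
    return a[:cut] + b[cut:], b[:cut] + a[cut:]


def mutate(g: Genome, rng: random.Random, lo: int, hi: int, rate: float) -> Genome:
    """Mutation: reset each gene to a random in-bounds depth with probability `rate`."""
    return [rng.randint(lo, hi) if rng.random() < rate else d for d in g]


def evolve(
    fitness: Callable[[Genome], float],
    n_genes: int = 12, lo: int = 2, hi: int = 17,
    pop_size: int = 20, generations: int = 30,
    p_cx: float = 0.9, p_mut: float = 0.1, seed: int = 0,
) -> Tuple[Genome, List[float]]:
    """Return the best genome found and the best fitness seen in each generation."""
    rng = random.Random(seed)
    # Initialization: random depths within bounds.
    pop = [[rng.randint(lo, hi) for _ in range(n_genes)] for _ in range(pop_size)]
    history: List[float] = []
    for _ in range(generations):                    # Iteration
        fits = [fitness(g) for g in pop]             # Fitness evaluation
        best = max(range(pop_size), key=lambda i: fits[i])
        history.append(fits[best])
        children = [list(pop[best])]                 # Elitism: the best survives unchanged
        while len(children) < pop_size:
            a, b = tournament(pop, fits, rng), tournament(pop, fits, rng)
            if rng.random() < p_cx:
                a, b = one_point_crossover(a, b, rng)
            children.append(mutate(a, rng, lo, hi, p_mut))
            if len(children) < pop_size:
                children.append(mutate(b, rng, lo, hi, p_mut))
        pop = children
    fits = [fitness(g) for g in pop]
    return pop[max(range(pop_size), key=lambda i: fits[i])], history


if __name__ == "__main__":
    target = [17, 12, 17, 2, 2, 16, 14, 2, 2, 14, 16, 17]

    def toy_fitness(g: Genome) -> float:
        """Hypothetical stand-in: closeness to an arbitrary target depth vector."""
        return -sum(abs(x - t) for x, t in zip(g, target))

    best, history = evolve(toy_fitness)
    print(best, history[0], history[-1])
```

Elitism makes the best fitness per generation non-decreasing, which matters when each real fitness evaluation means assembling and validating a full multi-branch network.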

3. Results

3.1. MI Detection

3.2. MI Localization

4. Discussion

4.1. The Efficiency of the LSE and GA Optimization

4.2. Architecture Transferring

4.3. Comparison with the State-of-the-Art Models

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Timmis, A.; Townsend, N.; Gale, C.P.; Torbica, A.; Lettino, M.; Petersen, S.E.; Mossialos, E.A.; Maggioni, A.P.; Kazakiewicz, D.; May, H.T.; et al. European Society of Cardiology: Cardiovascular disease statistics 2019. Eur. Heart J. 2020, 41, 12–85.

- [2] WHO. Cardiovascular Diseases (CVDs). Available online: https://www.who.int/en/news-room/fact-sheets/detail/cardiovascular-diseases-(cvds) (accessed on 15 November 2021).

- [3] Thygesen, K.; Alpert, J.S.; Jaffe, A.S.; Simoons, M.L.; Chaitman, B.R.; White, H.D.; Katus, H.A.; Apple, F.S.; Lindahl, B.; Morrow, D.A.; et al. Third universal definition of myocardial infarction. J. Am. Coll. Cardiol. 2012, 60, 1581–1598.

- [4] Surawicz, B.; Knilans, T.K. Chou’s Electrocardiography in Clinical Practice; Saunders Elsevier: Philadelphia, PA, USA, 2008.

- [5] O’Gara, P.T.; Kushner, F.G.; Ascheim, D.D.; Casey, D.E.; Chung, M.K.; de Lemos, J.A.; Ettinger, S.M.; Fang, J.C.; Fesmire, F.M.; Franklin, B.A.; et al. 2013 ACCF/AHA guideline for the management of ST-elevation myocardial infarction: A report of the American College of Cardiology Foundation/American Heart Association Task Force on Practice Guidelines. J. Am. Coll. Cardiol. 2013, 61, e78–e140.

- [6] Zimetbaum, P.J.; Josephson, M.E. Use of the electrocardiogram in acute myocardial infarction. N. Engl. J. Med. 2003, 348, 933–940.

- [7] Tafreshi, R.; Jaleel, A.; Lim, J.; Tafreshi, L. Automated analysis of ECG waveforms with atypical QRS complex morphologies. Biomed. Signal Process. Control. 2014, 10, 41–49.

- [8] Yang, H.; Bukkapatnam, S.T.; Le, T.; Komanduri, R. Identification of myocardial infarction (MI) using spatio-temporal heart dynamics. Med. Eng. Phys. 2012, 34, 485–497.

- [9] Safdarian, N.; Dabanloo, N.J.; Attarodi, G. A new pattern recognition method for detection and localization of myocardial infarction using T-wave integral and total integral as extracted features from one cycle of ECG signal. J. Biomed. Sci. Eng. 2014, 7, 818–824.

- [10] Jayachandran, E.S.; Paul, J.K.; Acharya, U.R. Analysis of myocardial infarction using discrete wavelet transform. J. Med. Syst. 2010, 34, 985–992.

- [11] Banarjee, S.; Mitra, M. Cross wavelet transform based analysis of electrocardiogram signals. Int. J. Electr. Electron. Comput. Eng. 2012, 1, 88–92.

- [12] Sun, L.; Lu, Y.; Yang, K.; Li, S. ECG analysis using multiple instance learning for myocardial infarction detection. IEEE Trans. Biomed. Eng. 2012, 59, 3348–3356.

- [13] Padhy, S.; Dandapat, S. Third-Order tensor based analysis of multilead ECG for classification of myocardial infarction. Biomed. Signal Process. Control. 2017, 31, 71–78.

- [14] Acharya, U.R.; Fujita, H.; Adam, M.; Lih, O.S.; Sudarshan, V.K.; Hong, T.J.; Koh, J.E.; Hagiwara, Y.; Chua, C.K.; Poo, C.K.; et al. Automated characterization and classification of coronary artery disease and myocardial infarction by decomposition of ECG signals: A comparative study. Inf. Sci. 2017, 377, 17–29.

- [15] Sharma, L.N.; Tripathy, R.; Dandapat, S. Multiscale energy and eigenspace approach to detection and localization of myocardial infarction. IEEE Trans. Biomed. Eng. 2015, 62, 1827–1837.

- [16] Han, C.; Shi, L. Automated interpretable detection of myocardial infarction fusing energy entropy and morphological features. Comput. Methods Programs Biomed. 2019, 175, 9–23.

- [17] LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- [18] Acharya, U.R.; Fujita, H.; Oh, S.L.; Hagiwara, Y.; Tan, J.H.; Adam, M. Application of deep convolutional neural network for automated detection of myocardial infarction using ECG signals. Inf. Sci. 2017, 415, 190–198.

- [19] Martin, H.; Morar, U.; Izquierdo, W.; Cabrerizo, M.; Cabrera, A.; Adjouadi, M. Real-Time frequency-independent single-lead and single-beat myocardial infarction detection. Artif. Intell. Med. 2021, 121, 102179.

- [20] Martin, H.; Izquierdo, W.; Cabrerizo, M.; Cabrera, A.; Adjouadi, M. Near real-time single-beat myocardial infarction detection from single-lead electrocardiogram using Long Short-Term Memory Neural Network. Biomed. Signal Process. Control. 2021, 68, 102683.

- [21] Odema, M.; Rashid, N.; Al Faruque, M.A. Energy-Aware design methodology for myocardial infarction detection on low-power wearable devices. In Proceedings of the 2021 26th Asia and South Pacific Design Automation Conference (ASP-DAC), Tokyo, Japan, 18–21 January 2021; pp. 621–626.

- [22] Reasat, T.; Shahnaz, C. Detection of inferior myocardial infarction using shallow convolutional neural networks. In Proceedings of the IEEE Region 10 Humanitarian Technology Conference (R10-HTC), Dhaka, Bangladesh, 21–23 December 2017; pp. 718–721.

- [23] Liu, W.; Zhang, M.; Zhang, Y.; Liao, Y.; Huang, Q.; Chang, S.; Wang, H.; He, J. Real-Time multilead convolutional neural network for myocardial infarction detection. IEEE J. Biomed. Health Inform. 2018, 22, 1434–1444.

- [24] Cao, Y.; Wei, T.; Zhang, B.; Lin, N.; Rodrigues, J.J.P.C.; Li, J.; Zhang, D. ML-Net: Multi-Channel lightweight network for detecting myocardial infarction. IEEE J. Biomed. Health Inform. 2021, 25, 3721–3731.

- [25] Ravi, D.; Wong, C.; Deligianni, F.; Berthelot, M.; Andreu-Perez, J.; Lo, B.; Yang, G.-Z. Deep learning for health informatics. IEEE J. Biomed. Health Inform. 2016, 21, 4–21.

- [26] Liu, W.; Huang, Q.; Chang, S.; Wang, H.; He, J. Multiple-feature-branch convolutional neural network for myocardial infarction diagnosis using electrocardiogram. Biomed. Signal Process. Control. 2018, 45, 22–32.

- [27] Liu, W.; Wang, F.; Huang, Q.; Chang, S.; Wang, H.; He, J. MFB-CBRNN: A hybrid network for MI detection using 12-lead ECGs. IEEE J. Biomed. Health Inform. 2020, 24, 503–514.

- [28] Han, C.; Shi, L. ML-ResNet: A novel network to detect and locate myocardial infarction using 12 leads ECG. Comput. Methods Programs Biomed. 2020, 185, 105138.

- [29] He, Z.; Yuan, Z.; An, P.; Zhao, J.; Du, B. MFB-LANN: A lightweight and updatable myocardial infarction diagnosis system based on convolutional neural networks and active learning. Comput. Methods Programs Biomed. 2021, 210, 106379.

- [30] Liu, H.; Zhao, Z.; She, Q. Self-Supervised ECG pre-training. Biomed. Signal Process. Control. 2021, 70, 103010.

- [31] Tadesse, G.A.; Javed, H.; Weldemariam, K.; Liu, Y.; Liu, J.; Chen, J.; Zhu, T. DeepMI: Deep multi-lead ECG fusion for identifying myocardial infarction and its occurrence-time. Artif. Intell. Med. 2021, 121, 102192.

- [32] Du, N.; Cao, Q.; Yu, L.; Liu, N.; Zhong, E.; Liu, Z.; Shen, Y.; Chen, K. FM-ECG: A fine-grained multi-label framework for ECG image classification. Inf. Sci. 2021, 549, 164–177.

- [33] Raghu, M.; Zhang, C.; Kleinberg, J.; Bengio, S. Transfusion: Understanding transfer learning for medical imaging. In Proceedings of the 33rd International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Curran Associates Inc.: Red Hook, NY, USA, 2019.

- [34] Ashlock, D. Evolutionary Computation for Modeling and Optimization; Springer: New York, NY, USA, 2006.

- [35] Zhou, X.; Qin, A.K.; Gong, M.; Tan, K.C. A survey on evolutionary construction of deep neural networks. IEEE Trans. Evol. Comput. 2021, 25, 894–912.

- [36] Sun, Y.; Xue, B.; Zhang, M.; Yen, G.G. Evolving deep convolutional neural networks for image classification. IEEE Trans. Evol. Comput. 2020, 24, 394–407.

- [37] Sun, Y.; Xue, B.; Zhang, M.; Yen, G.G.; Lv, J. Automatically designing CNN architectures using the genetic algorithm for image classification. IEEE Trans. Cybern. 2020, 50, 3840–3854.

- [38] Sun, Y.; Xue, B.; Zhang, M.; Yen, G.G. Completely automated CNN architecture design based on blocks. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 1242–1254.

- [39] Hu, J.; Shen, L.; Sun, G.; Albanie, S.; Wu, E. Squeeze-and-Excitation networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2011–2023.

- [40] Goldberger, A.L.; Amaral, L.A.N.; Glass, L.; Hausdorff, J.M.; Ivanov, P.C.; Mark, R.G.; Mietus, J.E.; Moody, G.B.; Peng, C.-K.; Stanley, H.E. PhysioBank, PhysioToolkit, and PhysioNet: Components of a new research resource for complex physiologic signals. Circulation 2000, 101, e215–e220.

- [41] Luz, E.; Schwartz, W.R.; Chavez, G.C.; Menotti, D. ECG-based heartbeat classification for arrhythmia detection: A survey. Comput. Methods Programs Biomed. 2016, 127, 144–164.

- [42] Wagner, P.; Strodthoff, N.; Bousseljot, R.-D.; Kreiseler, D.; Lunze, F.I.; Samek, W.; Schaeffter, T. PTB-XL, a large publicly available electrocardiography dataset. Sci. Data 2020, 7, 154.

- [43] Fu, L.; Lu, B.; Nie, B.; Peng, Z.; Liu, H.; Pi, X. Hybrid network with attention mechanism for detection and location of myocardial infarction based on 12-lead electrocardiogram signals. Sensors 2020, 20, 1020.

- [44] Martis, R.J.; Acharya, U.R.; Min, L.C. ECG beat classification using PCA, LDA, ICA and discrete wavelet transform. Biomed. Signal Process. Control. 2013, 8, 437–448.

- [45] Makowski, D.; Pham, T.; Lau, Z.J.; Brammer, J.C.; Lespinasse, F.; Pham, H.; Schölzel, C.; Chen, A.S.H. NeuroKit2: A Python Toolbox for Neurophysiological Signal Processing. 2020. Available online: https://github.com/neuropsychology/NeuroKit (accessed on 15 November 2021).

- [46] Hannun, A.Y.; Rajpurkar, P.; Haghpanahi, M.; Tison, G.H.; Bourn, C.; Turakhia, M.P.; Ng, A.Y. Cardiologist-Level arrhythmia detection and classification in ambulatory electrocardiograms using a deep neural network. Nat. Med. 2019, 25, 65–69.

- [47] King, G.; Zeng, L. Logistic regression in rare events data. Political Anal. 2001, 9, 137–163.

- [48] Kingma, D.; Ba, J. Adam: A method for stochastic optimization. arXiv 2015, arXiv:1412.6980.

- [49] Zeiler, M.D.; Fergus, R. Visualizing and understanding convolutional networks. In Proceedings of the 13th European Conference on Computer Vision, ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Volume 8689, pp. 818–833.

- [50] Sun, Y.; Xue, B.; Zhang, M.; Yen, G.G. An experimental study on hyper-parameter optimization for stacked auto-encoders. In Proceedings of the 2018 IEEE Congress on Evolutionary Computation (CEC), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–8.

- [51] Banzhaf, W.; Nordin, P.; Keller, R.E.; Francone, F.D. Genetic Programming: An Introduction; Morgan Kaufmann: San Mateo, CA, USA, 1998.

- [52] Chang, P.-C.; Lin, J.-J.; Hsieh, J.-C.; Weng, J. Myocardial infarction classification with multi-lead ECG using hidden Markov models and Gaussian mixture models. Appl. Soft Comput. 2012, 12, 3165–3175.

- [53] Alherbish, A.; Westerhout, C.M.; Fu, Y.; White, H.D.; Granger, C.B.; Wagner, G.; Armstrong, P. The forgotten lead: Does aVR ST-deviation add insight into the outcomes of ST-elevation myocardial infarction patients? Am. Heart J. 2013, 166, 333–339.

- [54] Sharma, L.D.; Sunkaria, R.K. Inferior myocardial infarction detection using stationary wavelet transform and machine learning approach. Signal Image Video Process. 2018, 12, 199–206.

| Class | Acc (%) | Sen (%) | Spe (%) | Ppv (%) | F1 |
|---|---|---|---|---|---|
| HC | 59.21 | 88.84 | 92.41 | 73.07 | 0.802 |
| AMI | | 39.37 | 91.43 | 37.19 | 0.382 |
| ASMI | | 59.97 | 89.37 | 59.26 | 0.596 |
| ALMI | | 42.68 | 91.31 | 40.15 | 0.414 |
| IMI | | 48.85 | 91.34 | 62.36 | 0.548 |
| ILMI | | 65.27 | 95.02 | 69.02 | 0.671 |
| Mean | 59.21 | 57.50 | 91.81 | 56.84 | 0.569 |
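The per-class tables report one-vs-rest sensitivity (Sen), specificity (Spe), positive predictive value (Ppv), and F1 for each class, what appears to be a single overall accuracy (listed in the HC and Mean rows), and a Mean row averaging the per-class values. The sketch below computes these quantities from a confusion matrix; the function names are illustrative. The final checks only confirm that the table above is consistent with F1 = 2·Sen·Ppv/(Sen + Ppv) and with an unweighted (macro) Mean row.

```python
from typing import List


def f1(sen: float, ppv: float) -> float:
    """Harmonic mean of sensitivity and positive predictive value."""
    return 2 * sen * ppv / (sen + ppv) if sen + ppv else 0.0


def per_class_metrics(cm: List[List[int]]) -> dict:
    """cm[i][j] counts samples of true class i predicted as class j.

    Returns overall accuracy and one-vs-rest Sen, Spe, Ppv, F1 for every class.
    """
    n = len(cm)
    total = sum(map(sum, cm))
    out = {"acc": sum(cm[c][c] for c in range(n)) / total,
           "sen": [], "spe": [], "ppv": [], "f1": []}
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                       # class c predicted as another class
        fp = sum(cm[r][c] for r in range(n)) - tp  # other classes predicted as c
        tn = total - tp - fn - fp
        sen = tp / (tp + fn) if tp + fn else 0.0
        spe = tn / (tn + fp) if tn + fp else 0.0
        ppv = tp / (tp + fp) if tp + fp else 0.0
        for key, value in (("sen", sen), ("spe", spe), ("ppv", ppv), ("f1", f1(sen, ppv))):
            out[key].append(value)
    return out


def macro(values: List[float]) -> float:
    """Unweighted mean over classes, as in a Mean row."""
    return sum(values) / len(values)


if __name__ == "__main__":
    m = per_class_metrics([[5, 1], [2, 2]])
    print(m["acc"])  # 0.7
    # Consistency checks against the HC row and the F1 column of the table above.
    print(round(f1(0.8884, 0.7307), 3))                                # 0.802
    print(round(macro([0.802, 0.382, 0.596, 0.414, 0.548, 0.671]), 3))  # 0.569
```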

| Class | Acc (%) | Sen (%) | Spe (%) | Ppv (%) | F1 |
|---|---|---|---|---|---|
| HC | 71.65 | 88.21 | 97.48 | 89.02 | 0.886 |
| AMI | | 42.10 | 95.60 | 55.23 | 0.478 |
| ASMI | | 70.49 | 89.81 | 64.09 | 0.671 |
| ALMI | | 66.09 | 91.71 | 52.32 | 0.584 |
| IMI | | 70.55 | 96.24 | 84.65 | 0.770 |
| ILMI | | 81.38 | 95.13 | 73.98 | 0.775 |
| Mean | 71.65 | 69.80 | 94.34 | 69.88 | 0.694 |

| Aspect | Leads |
|---|---|
| Anterior | V3, V4 |
| Septal | V1, V2 |
| Lateral | I, aVL, V5, V6 |
| Inferior | II, III, aVF |
| Endocardial | aVR |
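The table above assigns each of the 12 leads to one anatomical aspect. A small lookup can combine it with the MI classes used in the localization tables. The split of each class into aspects below is an assumption based on the usual reading of the class names (AMI anterior, ASMI anteroseptal, ALMI anterolateral, IMI inferior, ILMI inferolateral), and `leads_for_class` is a hypothetical helper.

```python
from typing import Dict, List

# Lead-to-aspect assignment, transcribed from the table above.
ASPECT_LEADS: Dict[str, List[str]] = {
    "Anterior": ["V3", "V4"],
    "Septal": ["V1", "V2"],
    "Lateral": ["I", "aVL", "V5", "V6"],
    "Inferior": ["II", "III", "aVF"],
    "Endocardial": ["aVR"],
}

# Assumed decomposition of each MI class into aspects (not stated in the source).
CLASS_ASPECTS: Dict[str, List[str]] = {
    "AMI": ["Anterior"],
    "ASMI": ["Anterior", "Septal"],
    "ALMI": ["Anterior", "Lateral"],
    "IMI": ["Inferior"],
    "ILMI": ["Inferior", "Lateral"],
}


def leads_for_class(mi_class: str) -> List[str]:
    """Return the leads facing the aspects of `mi_class`, without duplicates,
    in the order the aspects and leads are listed above."""
    leads: List[str] = []
    for aspect in CLASS_ASPECTS[mi_class]:
        for lead in ASPECT_LEADS[aspect]:
            if lead not in leads:
                leads.append(lead)
    return leads


if __name__ == "__main__":
    print(leads_for_class("ASMI"))  # ['V3', 'V4', 'V1', 'V2']
    print(leads_for_class("ILMI"))  # ['II', 'III', 'aVF', 'I', 'aVL', 'V5', 'V6']
```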

| Class | Individual | Acc (%) | Sen (%) | Spe (%) | Ppv (%) | F1 |
|---|---|---|---|---|---|---|
| HC | [17, 12, 17, 2, 2, 16, 14, 2, 2, 14, 16, 17] | 79.42 | 93.59 | 98.19 | 96.26 | 0.949 |
| AMI | [10, 2, 6, 6, 2, 12, 2, 6, 16, 2, 17, 2] | | 39.41 | 94.29 | 47.09 | 0.429 |
| ASMI | [8, 2, 6, 10, 6, 12, 12, 2, 16, 4, 6, 4] | | 76.77 | 91.28 | 55.53 | 0.644 |
| ALMI | [14, 14, 8, 8, 12, 8, 17, 17, 17, 16, 14, 10] | | 80.81 | 96.59 | 71.75 | 0.760 |
| IMI | [16, 6, 16, 17, 2, 12, 4, 2, 17, 6, 2, 16] | | 83.94 | 96.98 | 88.59 | 0.862 |
| ILMI | [17, 14, 12, 8, 10, 8, 16, 14, 8, 4, 10, 4] | | 71.22 | 98.59 | 86.71 | 0.782 |
| Mean | -- | 79.42 | 74.29 | 95.99 | 74.32 | 0.738 |

| Model | Acc (%) | Sen (%) | Spe (%) | Ppv (%) | F1 |
|---|---|---|---|---|---|
| MBN | 88.70 | 87.02 | 93.31 | 97.27 | 0.919 |
| EvoMBN | 90.80 | 92.59 | 85.88 | 94.73 | 0.936 |

| Model | Class | Acc (%) | Sen (%) | Spe (%) | Ppv (%) | F1 |
|---|---|---|---|---|---|---|
| MBN | HC | 70.79 | 94.75 | 87.08 | 72.79 | 0.823 |
| | AMI | | 27.95 | 97.72 | 38.35 | 0.323 |
| | ASMI | | 94.43 | 79.21 | 70.94 | 0.810 |
| | ALMI | | 18.18 | 99.80 | 63.41 | 0.283 |
| | IMI | | 30.60 | 99.28 | 94.27 | 0.462 |
| | ILMI | | 59.23 | 96.53 | 40.28 | 0.480 |
| | Mean | 70.79 | 54.19 | 93.27 | 63.34 | 0.530 |
| EvoMBN | HC | 75.18 | 88.21 | 92.34 | 80.77 | 0.843 |
| | AMI | | 35.34 | 95.66 | 29.25 | 0.320 |
| | ASMI | | 83.02 | 92.39 | 85.42 | 0.842 |
| | ALMI | | 22.37 | 97.37 | 14.10 | 0.173 |
| | IMI | | 69.35 | 91.16 | 75.12 | 0.721 |
| | ILMI | | 31.01 | 98.80 | 50.57 | 0.384 |
| | Mean | 75.18 | 54.88 | 94.62 | 55.87 | 0.547 |

| Method | Hand-Designed Features | Results (Detection) | Results (Localization) |
|---|---|---|---|
| [54] (2018) | 10 | Detection (IMI): Sen = 79.01%; Spe = 79.26%; Ppv = 80.25%; Acc = 81.71% | Localization: NA |
| [16] (2019) | 22 | Detection: Sen = 80.96%; Ppv = 86.14%; Acc = 92.69% | Localization: NA |
| [27] (2020) | 0 | Detection: Sen = 94.42%; Spe = 86.29%; Acc = 93.08% | Localization: NA |
| [43] (2020) | 0 | Detection: Sen = 97.10%; Spe = 93.34%; Acc = 96.50% | Localization: Sen = 63.97%; Spe = 63.00%; Acc = 62.94% |
| [24] (2021) | 0 | Detection (GAMI): Sen = 94.30%; Spe = 97.72%; Acc = 96.65% | Localization (GAMI): Sen = 62.64%; Spe = 68.70%; Acc = 66.85% |
| [28] (2020) | 0 | Detection: Sen = 94.85%; Spe = 97.37%; Acc = 95.49%; F1 = 0.969 | Localization: Sen = 47.58%; Spe = 55.37%; Acc = 55.74%; F1 = 0.479 |
| Proposed 1 | 0 | Detection: Sen = 98.53%; Spe = 90.02%; Ppv = 98.01%; Acc = 97.11%; F1 = 0.983 | Localization: Sen = 69.80%; Spe = 94.34%; Ppv = 69.88%; Acc = 71.65%; F1 = 0.694 |
| Proposed 2 | 0 | Detection: Sen = 92.59%; Spe = 85.88%; Ppv = 94.73%; Acc = 90.80%; F1 = 0.936 | Localization: Sen = 54.88%; Spe = 94.62%; Ppv = 55.87%; Acc = 75.18%; F1 = 0.546 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, W.; Ji, J.; Chang, S.; Wang, H.; He, J.; Huang, Q. EvoMBN: Evolving Multi-Branch Networks on Myocardial Infarction Diagnosis Using 12-Lead Electrocardiograms. Biosensors 2022, 12, 15. https://doi.org/10.3390/bios12010015
