Identifying the Posture of Young Adults in Walking Videos by Using a Fusion Artificial Intelligent Method

Abstract

1. Introduction

2. Materials and Methods

2.1. Research Ethics

2.2. Flow of Research

2.3. Participants

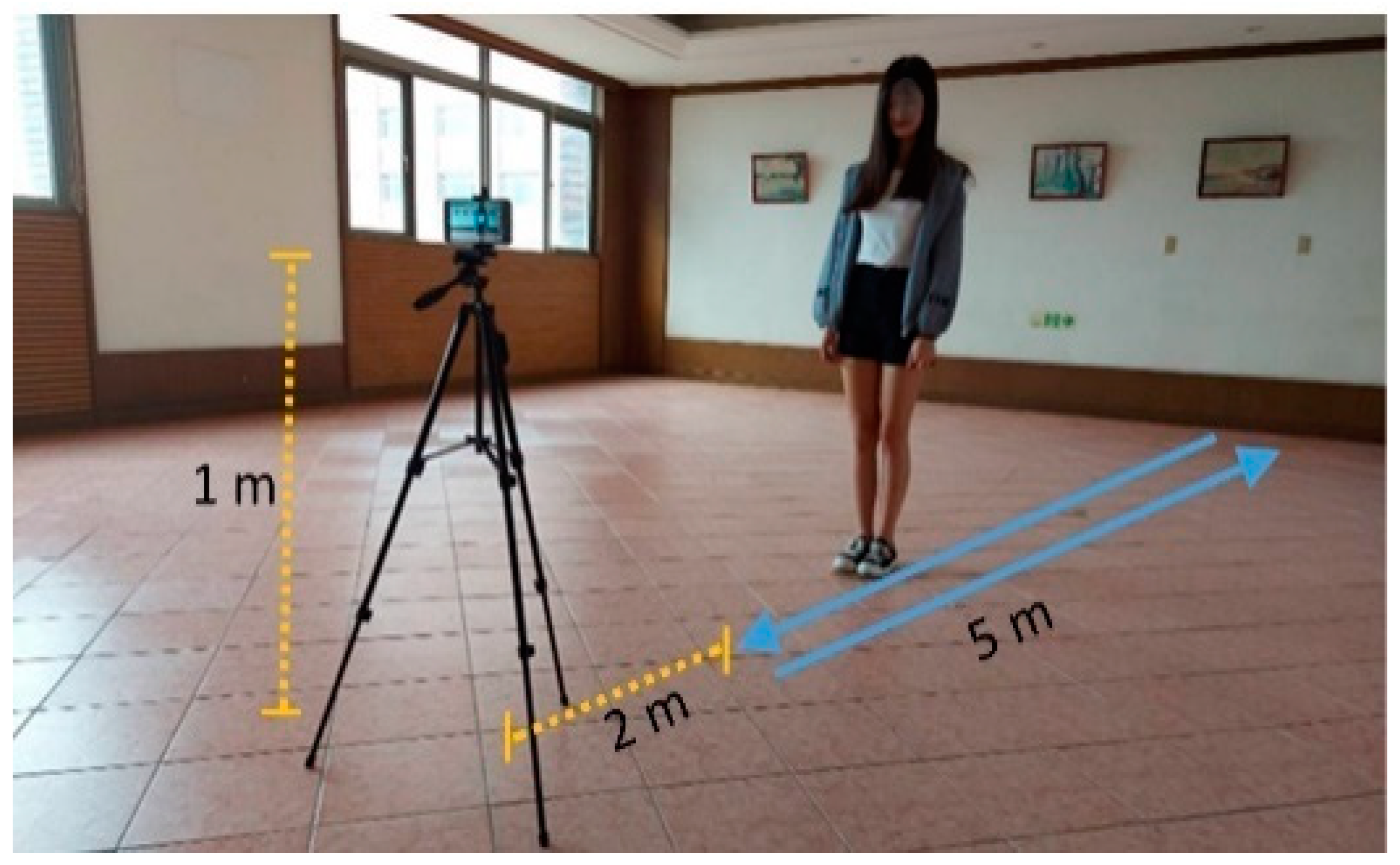

2.4. Experimental Design

2.5. Measurement of Joint Nodes through Openpose-Based Deep Learning

2.6. Definition of the Control and Experimental Groups

2.7. Classification Using Pretrained CNNs and Machine Learning Classifiers

2.8. Validation of Classification Performance

3. Results

4. Discussion

4.1. Measurement of Postural Control

4.2. Literature for Health Issues and Postural Control during Walking

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| CNN | Classifier | Batch Size | Model | CNN | Classifier | Batch Size | Model | CNN | Classifier | Batch Size | Model |
|---|---|---|---|---|---|---|---|---|---|---|---|
| AlexNet | LR | 5 | M1 | GoogleNet | SVM | 5 | M33 | ResNet50 | NB | 5 | M65 |
| AlexNet | LR | 8 | M2 | GoogleNet | SVM | 8 | M34 | ResNet50 | NB | 8 | M66 |
| AlexNet | LR | 11 | M3 | GoogleNet | SVM | 11 | M35 | ResNet50 | NB | 11 | M67 |
| AlexNet | LR | 14 | M4 | GoogleNet | SVM | 14 | M36 | ResNet50 | NB | 14 | M68 |
| AlexNet | NB | 5 | M5 | MobileNetV2 | LR | 5 | M37 | ResNet50 | SVM | 5 | M69 |
| AlexNet | NB | 8 | M6 | MobileNetV2 | LR | 8 | M38 | ResNet50 | SVM | 8 | M70 |
| AlexNet | NB | 11 | M7 | MobileNetV2 | LR | 11 | M39 | ResNet50 | SVM | 11 | M71 |
| AlexNet | NB | 14 | M8 | MobileNetV2 | LR | 14 | M40 | ResNet50 | SVM | 14 | M72 |
| AlexNet | SVM | 5 | M9 | MobileNetV2 | NB | 5 | M41 | VGG16 | LR | 5 | M73 |
| AlexNet | SVM | 8 | M10 | MobileNetV2 | NB | 8 | M42 | VGG16 | LR | 8 | M74 |
| AlexNet | SVM | 11 | M11 | MobileNetV2 | NB | 11 | M43 | VGG16 | LR | 11 | M75 |
| AlexNet | SVM | 14 | M12 | MobileNetV2 | NB | 14 | M44 | VGG16 | LR | 14 | M76 |
| DenseNet201 | LR | 5 | M13 | MobileNetV2 | SVM | 5 | M45 | VGG16 | NB | 5 | M77 |
| DenseNet201 | LR | 8 | M14 | MobileNetV2 | SVM | 8 | M46 | VGG16 | NB | 8 | M78 |
| DenseNet201 | LR | 11 | M15 | MobileNetV2 | SVM | 11 | M47 | VGG16 | NB | 11 | M79 |
| DenseNet201 | LR | 14 | M16 | MobileNetV2 | SVM | 14 | M48 | VGG16 | NB | 14 | M80 |
| DenseNet201 | NB | 5 | M17 | ResNet101 | LR | 5 | M49 | VGG16 | SVM | 5 | M81 |
| DenseNet201 | NB | 8 | M18 | ResNet101 | LR | 8 | M50 | VGG16 | SVM | 8 | M82 |
| DenseNet201 | NB | 11 | M19 | ResNet101 | LR | 11 | M51 | VGG16 | SVM | 11 | M83 |
| DenseNet201 | NB | 14 | M20 | ResNet101 | LR | 14 | M52 | VGG16 | SVM | 14 | M84 |
| DenseNet201 | SVM | 5 | M21 | ResNet101 | NB | 5 | M53 | VGG19 | LR | 5 | M85 |
| DenseNet201 | SVM | 8 | M22 | ResNet101 | NB | 8 | M54 | VGG19 | LR | 8 | M86 |
| DenseNet201 | SVM | 11 | M23 | ResNet101 | NB | 11 | M55 | VGG19 | LR | 11 | M87 |
| DenseNet201 | SVM | 14 | M24 | ResNet101 | NB | 14 | M56 | VGG19 | LR | 14 | M88 |
| GoogleNet | LR | 5 | M25 | ResNet101 | SVM | 5 | M57 | VGG19 | NB | 5 | M89 |
| GoogleNet | LR | 8 | M26 | ResNet101 | SVM | 8 | M58 | VGG19 | NB | 8 | M90 |
| GoogleNet | LR | 11 | M27 | ResNet101 | SVM | 11 | M59 | VGG19 | NB | 11 | M91 |
| GoogleNet | LR | 14 | M28 | ResNet101 | SVM | 14 | M60 | VGG19 | NB | 14 | M92 |
| GoogleNet | NB | 5 | M29 | ResNet50 | LR | 5 | M61 | VGG19 | SVM | 5 | M93 |
| GoogleNet | NB | 8 | M30 | ResNet50 | LR | 8 | M62 | VGG19 | SVM | 8 | M94 |
| GoogleNet | NB | 11 | M31 | ResNet50 | LR | 11 | M63 | VGG19 | SVM | 11 | M95 |
| GoogleNet | NB | 14 | M32 | ResNet50 | LR | 14 | M64 | VGG19 | SVM | 14 | M96 |
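The 96 model labels in the table above follow a regular pattern: the label increments fastest over batch size, then over classifier, then over CNN (listed alphabetically). The sketch below is a minimal Python illustration of that numbering, not the authors' code; the names `CNNS`, `CLASSIFIERS`, `BATCH_SIZES`, `build_model_grid` and `MODEL_GRID` are assumptions introduced here.

```python
from itertools import product

# Values taken from the Appendix A table; the ordering is inferred from the labels.
CNNS = ["AlexNet", "DenseNet201", "GoogleNet", "MobileNetV2",
        "ResNet101", "ResNet50", "VGG16", "VGG19"]
CLASSIFIERS = ["LR", "NB", "SVM"]
BATCH_SIZES = [5, 8, 11, 14]


def build_model_grid():
    """Map each model label (e.g. 'M53') to its (cnn, classifier, batch_size) triple."""
    grid = {}
    # product() varies the last iterable fastest, which matches the table's numbering.
    combos = product(CNNS, CLASSIFIERS, BATCH_SIZES)
    for index, combo in enumerate(combos, start=1):
        grid[f"M{index}"] = combo
    return grid


MODEL_GRID = build_model_grid()
print(len(MODEL_GRID))    # 8 CNNs x 3 classifiers x 4 batch sizes
print(MODEL_GRID["M53"])
```

Looking up a label, for example `MODEL_GRID["M53"]`, returns the combination listed for that model in the table.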


| Group | N | Mean Velocity (m/s) | STD Velocity (m/s) | Mean Time (s) | STD Time (s) |
|---|---|---|---|---|---|
| Skew | 102 | 0.68 | 0.08 | 7.48 | 0.84 |
| Straight | 108 | 0.69 | 0.08 | 7.39 | 0.91 |

| CNN | Input Image Size (pixels) | Layers | Parameter Size (MB) | Feature Layer (Dimensions) |
|---|---|---|---|---|
| AlexNet | 227 × 227 | 25 | 227 | 17th (4096 × 9216) |
| DenseNet201 | 224 × 224 | 709 | 77 | 706th (1000 × 1920) |
| GoogleNet | 224 × 224 | 144 | 27 | 142nd (1000 × 1024) |
| MobileNetV2 | 224 × 224 | 154 | 13 | 152nd (1000 × 1280) |
| ResNet101 | 224 × 224 | 347 | 167 | 345th (1000 × 2048) |
| ResNet50 | 224 × 224 | 177 | 96 | 175th (1000 × 2048) |
| VGG16 | 224 × 224 | 41 | 27 | 33rd (4096 × 25,088) |
| VGG19 | 224 × 224 | 47 | 535 | 39th (4096 × 25,088) |

| CNN | Classifier | Batch Size | Model | Kappa | Accuracy | Sensitivity | Specificity | PPV | NPV |
|---|---|---|---|---|---|---|---|---|---|
| ResNet101 | NB | 5 | M53 | 0.71 | 0.86 | 0.87 | 0.84 | 0.84 | 0.87 |
| AlexNet | NB | 11 | M7 | 0.65 | 0.83 | 0.81 | 0.84 | 0.83 | 0.82 |
| ResNet101 | NB | 14 | M56 | 0.65 | 0.83 | 0.81 | 0.84 | 0.83 | 0.82 |
| AlexNet | NB | 5 | M5 | 0.62 | 0.81 | 0.77 | 0.84 | 0.83 | 0.79 |
| VGG16 | NB | 14 | M80 | 0.62 | 0.81 | 0.77 | 0.84 | 0.83 | 0.79 |
| DenseNet201 | SVM | 11 | M23 | 0.62 | 0.81 | 0.68 | 0.94 | 0.91 | 0.75 |
| ResNet101 | NB | 8 | M54 | 0.59 | 0.79 | 0.90 | 0.69 | 0.74 | 0.88 |
| VGG19 | NB | 11 | M91 | 0.59 | 0.79 | 0.84 | 0.75 | 0.77 | 0.83 |
| AlexNet | NB | 14 | M8 | 0.59 | 0.79 | 0.81 | 0.78 | 0.78 | 0.81 |
| DenseNet201 | SVM | 5 | M21 | 0.59 | 0.79 | 0.74 | 0.84 | 0.82 | 0.77 |
| DenseNet201 | SVM | 14 | M24 | 0.59 | 0.79 | 0.77 | 0.81 | 0.80 | 0.79 |
| VGG16 | NB | 8 | M78 | 0.59 | 0.79 | 0.77 | 0.81 | 0.80 | 0.79 |
| AlexNet | NB | 8 | M6 | 0.59 | 0.79 | 0.71 | 0.88 | 0.85 | 0.76 |
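The six performance indices in the table above (kappa, accuracy, sensitivity, specificity, PPV and NPV) can all be derived from a 2 × 2 confusion matrix. The sketch below is a minimal Python illustration using the standard definitions, not the authors' code; the function name `binary_metrics` is an assumption. The counts in the usage example (27 true positives, 4 false negatives, 27 true negatives, 5 false positives) are hypothetical: the source does not report a confusion matrix, and these values were chosen only because they reproduce the rounded M53 row.

```python
def binary_metrics(tp, fn, tn, fp):
    """Compute common binary classification indices from confusion-matrix counts.

    Assumes every denominator is non-zero (no empty class or empty prediction).
    """
    total = tp + fn + tn + fp
    accuracy = (tp + tn) / total
    sensitivity = tp / (tp + fn)   # true positive rate
    specificity = tn / (tn + fp)   # true negative rate
    ppv = tp / (tp + fp)           # positive predictive value
    npv = tn / (tn + fn)           # negative predictive value
    # Cohen's kappa: observed agreement corrected for chance agreement,
    # where chance agreement comes from the row and column marginals.
    expected = ((tp + fn) * (tp + fp) + (tn + fp) * (tn + fn)) / total ** 2
    kappa = (accuracy - expected) / (1 - expected)
    return {
        "kappa": kappa,
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "ppv": ppv,
        "npv": npv,
    }


# Hypothetical counts (not from the source) that match the rounded M53 row.
metrics = binary_metrics(tp=27, fn=4, tn=27, fp=5)
rounded = {name: round(value, 2) for name, value in metrics.items()}
print(rounded)
```

With these assumed counts the rounded values are kappa 0.71, accuracy 0.86, sensitivity 0.87, specificity 0.84, PPV 0.84 and NPV 0.87. Which group counts as the positive class is also an assumption here.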


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lee, P.; Chen, T.-B.; Liu, C.-H.; Wang, C.-Y.; Huang, G.-H.; Lu, N.-H. Identifying the Posture of Young Adults in Walking Videos by Using a Fusion Artificial Intelligent Method. Biosensors 2022, 12, 295. https://doi.org/10.3390/bios12050295
