Research on the Human Motion Recognition Method Based on Wearable

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Equipment

2.1.1. Design of the Human Movement Data Acquisition Platform

2.1.2. Software Host Computer Design

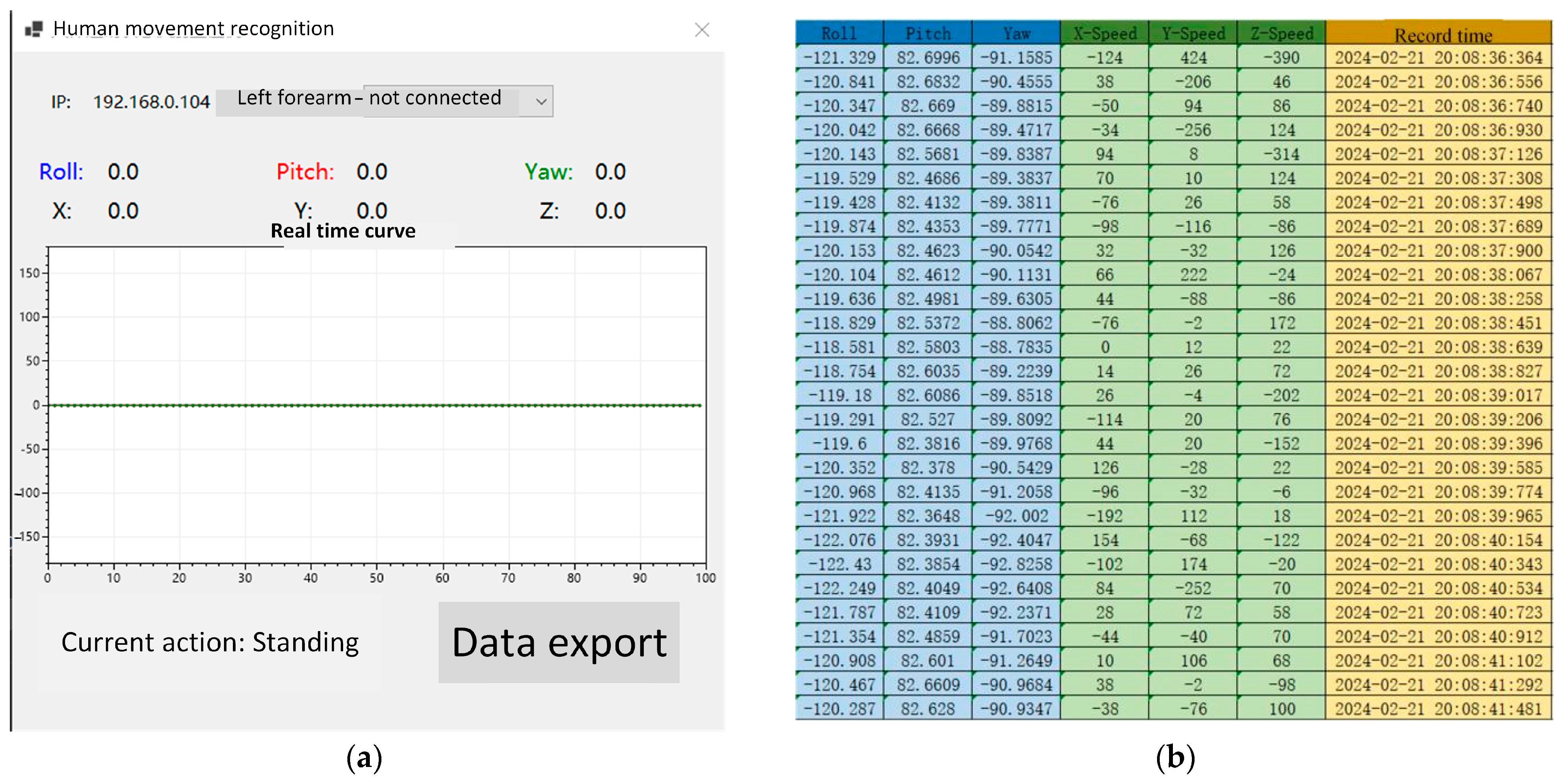

- Data selection area: This area displays the IP address to which the human motion recognition software is currently connected and links the sensor device to the software. A drop-down menu selects the data source from nine different body parts.

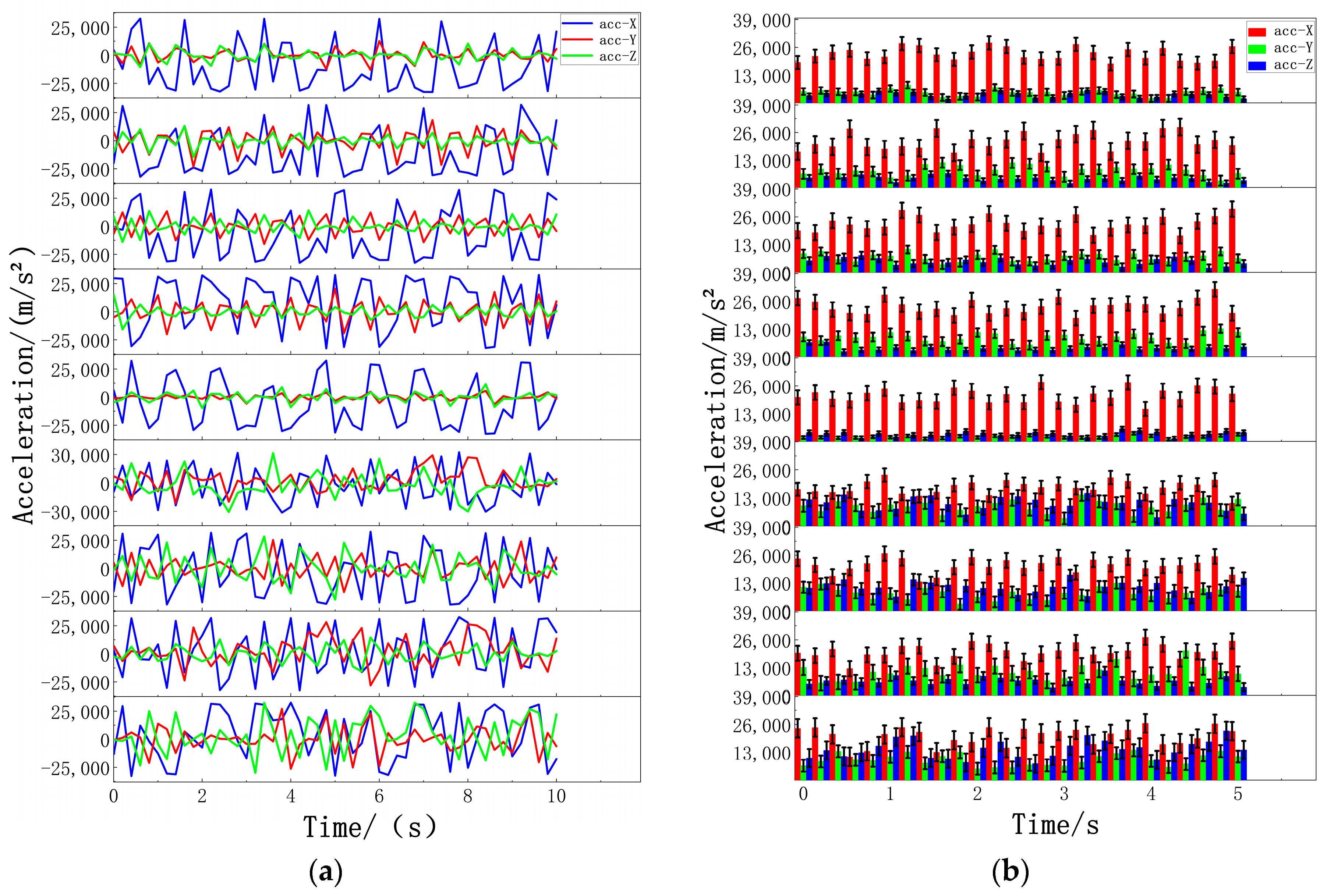

- Data exhibition area: The acceleration and angular velocity data of the current movement are shown in a grid of two rows and three columns and are updated in real time as the user moves.

- Data display area: The acceleration data are plotted as line curves.

- Recognition result display area: When the human body performs different actions, the action recognition result will be displayed in this area.

- Data storage area: Movement data for different actions can be stored. The body part whose data are saved is chosen in the data selection area. Once a storage path has been set, the acceleration and angular velocity data for the current motion state are saved as a table, as shown in Figure 3b.

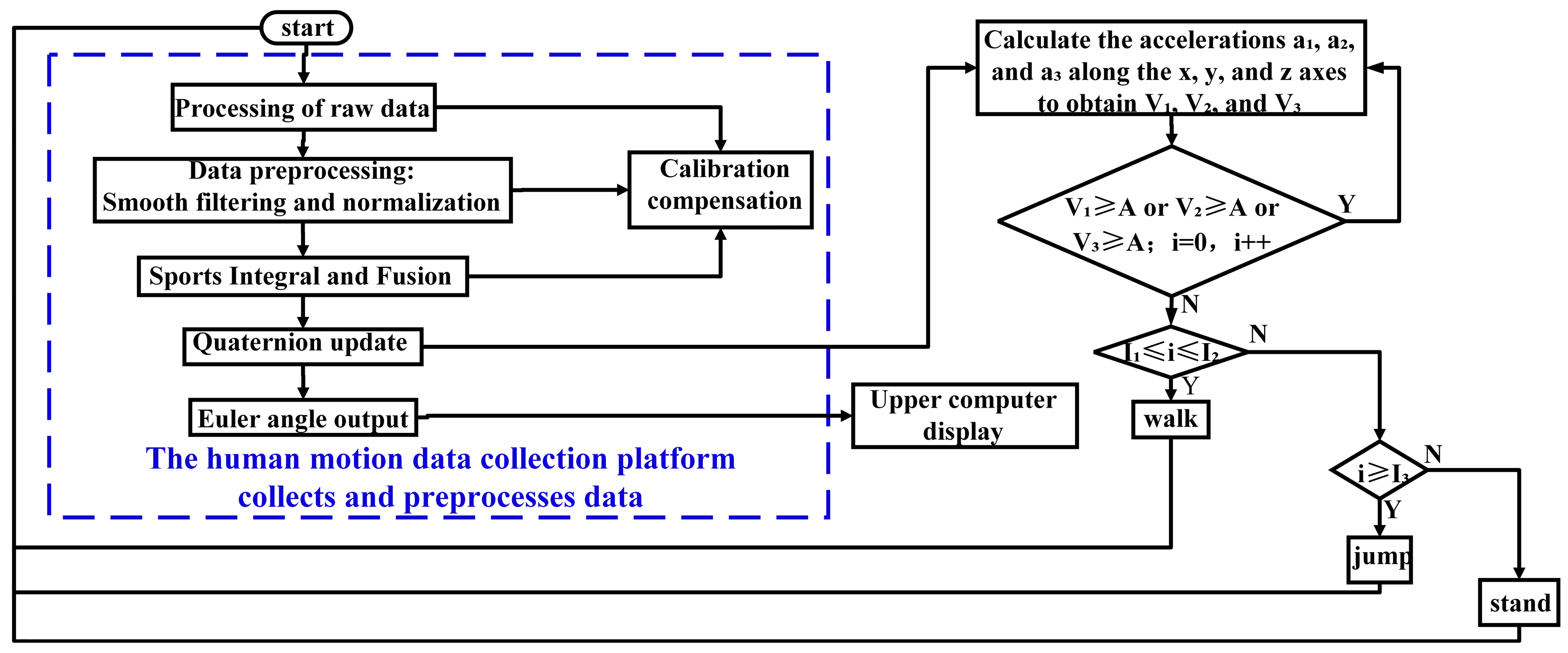
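The storage step described above can be sketched as follows. This is a minimal illustration, assuming a CSV layout with one row per sample; the column names, the `left_wrist` label, and the function name `write_motion_table` are hypothetical and are not taken from the host software.

```python
import csv
import io

# Hypothetical column layout: body part, 3-axis acceleration, 3-axis angular velocity.
FIELDS = ["body_part", "ax", "ay", "az", "gx", "gy", "gz"]


def write_motion_table(stream, body_part, samples):
    """Write (acceleration, angular_velocity) samples to a text stream as CSV rows."""
    writer = csv.writer(stream, lineterminator="\n")
    writer.writerow(FIELDS)
    for accel, gyro in samples:
        writer.writerow([body_part, *accel, *gyro])
    return len(samples)


# Usage: an in-memory buffer stands in for the user-selected storage path.
buffer = io.StringIO()
rows_written = write_motion_table(
    buffer,
    "left_wrist",
    [((0.01, -0.02, 0.98), (0.5, -0.1, 0.0))],
)
csv_text = buffer.getvalue()
```

To write to a real file instead, the stream could be opened with `open(path, "w", newline="")`, where `path` is the storage path chosen by the user.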

2.2. Human Motion Recognition Algorithm

2.2.1. DMP Attitude Solution Algorithm

- (1) Data acquisition phase

- (2) Quaternion integration stage

- (3) Accelerometer calibration phase

- (4) Filtering phase
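The text does not give the internal equations of the DMP, so the following is only a minimal sketch of what a quaternion integration stage can look like. It assumes the standard first-order update q' = q + ½ · q ⊗ (0, ω) · Δt followed by renormalization. The function names, the 1 kHz step, and the test rotation are illustrative assumptions, not the DMP firmware.

```python
import math


def quat_multiply(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def integrate_gyro(q, omega, dt):
    """Advance attitude q by one step using body angular velocity omega (rad/s)."""
    dq = quat_multiply(q, (0.0, *omega))          # q ⊗ (0, ω)
    q_new = tuple(qi + 0.5 * dqi * dt for qi, dqi in zip(q, dq))
    norm = math.sqrt(sum(c * c for c in q_new))   # renormalize to unit length
    return tuple(c / norm for c in q_new)


def yaw_degrees(q):
    """Yaw angle (rotation about z) extracted from a unit quaternion."""
    w, x, y, z = q
    return math.degrees(math.atan2(2 * (w * z + x * y), 1 - 2 * (y * y + z * z)))


# Usage: rotate about z at 90 deg/s for one second, sampled at 1 kHz.
q = (1.0, 0.0, 0.0, 0.0)
for _ in range(1000):
    q = integrate_gyro(q, (0.0, 0.0, math.pi / 2), 0.001)
final_yaw = yaw_degrees(q)
```

In this sketch the accelerometer calibration and filtering stages are left out; they would correct the drift that pure gyroscope integration accumulates over time.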

2.2.2. Determination of the Motion State of the Threshold Algorithm

- (1) Input data

- (2) Threshold setting

  - When the threshold is set above A, it is so high that only jumping actions exceed it and enter the inner loop of the algorithm. Most walking and standing data fall below the threshold, so walking and standing are recognized poorly.

  - When the threshold is set below A, it is so low that large amounts of both walking and jumping data exceed it. Walking and jumping are then confused with each other, and only standing is recognized well.

  - When the threshold is set to A, the method recognizes standing, walking, and jumping well. The threshold is therefore set to A.

- (3) Output identification results

  - When I1 ≤ i ≤ I2, the output is that the human body is currently walking.

  - When i ≥ I3, the output is the jumping action; otherwise, the output is the standing action. The algorithm flow chart is shown in Figure 4 (I1, I2, and I3 are preset count values for the sensor data).
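The decision rule above can be sketched as follows. This is an illustration under stated assumptions: the compared quantity is taken to be the acceleration magnitude of each sample in a window, i is taken to be the number of samples above A, the jumping condition is read as i ≥ I3, and all numeric values are hypothetical because the text does not give A, I1, I2, or I3.

```python
def count_exceedances(magnitudes, threshold_a):
    """Count samples in a window whose value exceeds threshold A (assumed meaning of i)."""
    return sum(1 for m in magnitudes if m > threshold_a)


def classify_window(magnitudes, threshold_a, i1, i2, i3):
    """Map the exceedance count i to an action label using the three-way rule."""
    i = count_exceedances(magnitudes, threshold_a)
    if i1 <= i <= i2:
        return "walking"
    if i >= i3:          # assumed comparison; the operator is not stated explicitly
        return "jumping"
    return "standing"    # every other count falls through to standing


# Hypothetical parameters and windows, for illustration only.
A, I1, I2, I3 = 1.5, 2, 5, 6
standing_window = [1.0] * 10
walking_window = [1.0] * 7 + [2.0] * 3
jumping_window = [2.0] * 8 + [1.0] * 2

labels = [
    classify_window(w, A, I1, I2, I3)
    for w in (standing_window, walking_window, jumping_window)
]
```

With these values a count between I2 and I3 would also be labeled standing, which follows from the "otherwise" branch of the rule as written.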

3. Results

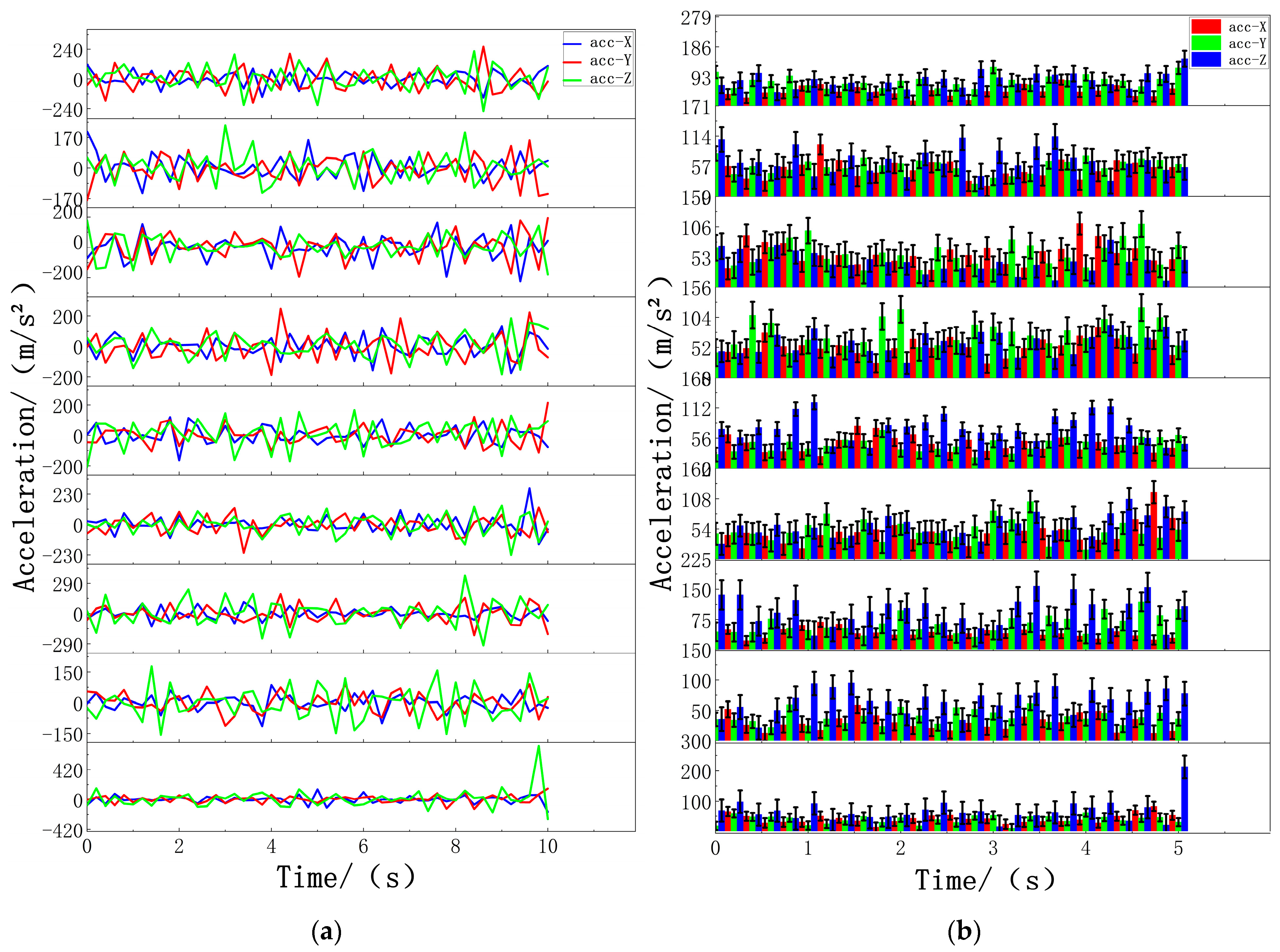

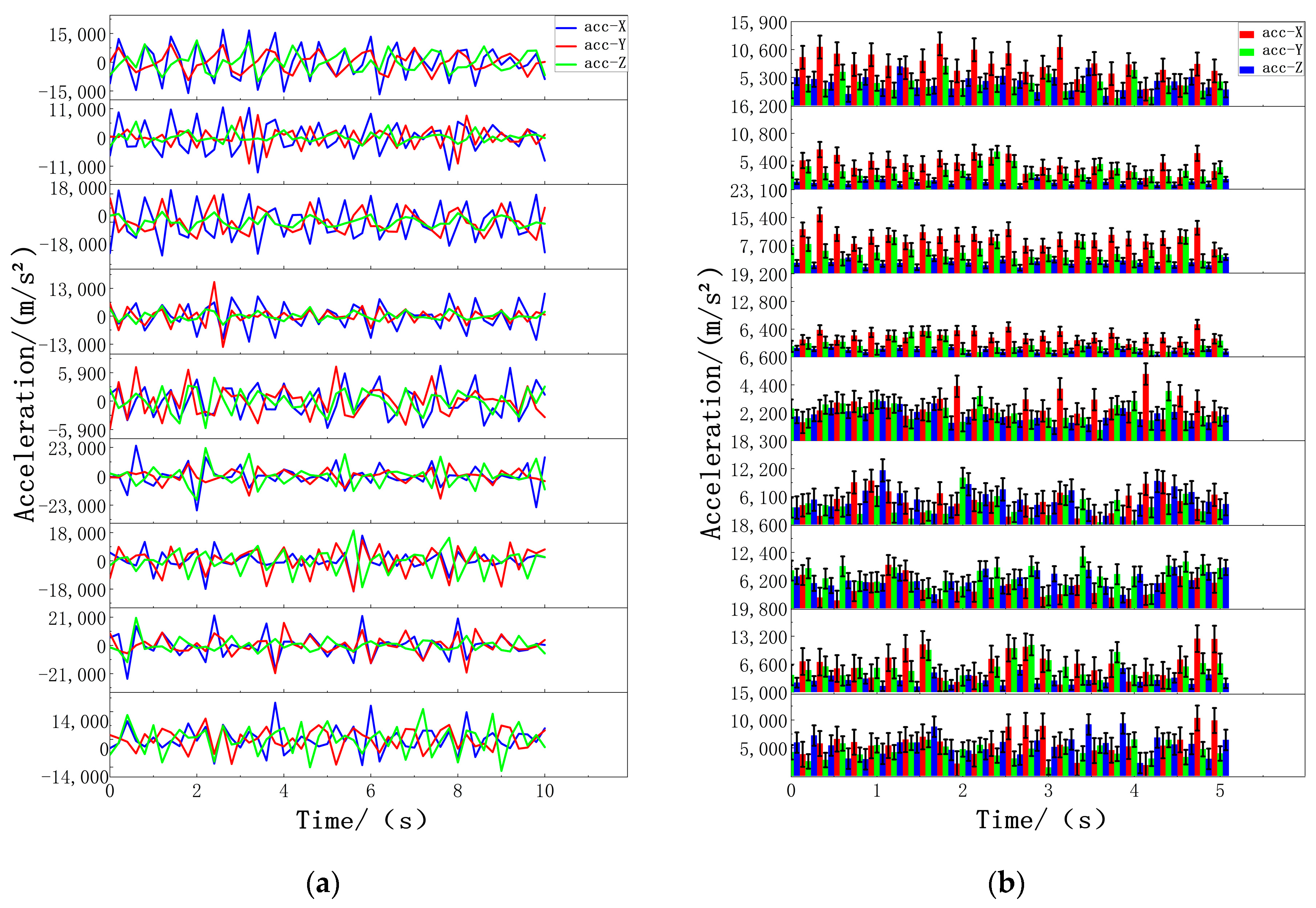

3.1. Experimental Design

3.2. Experimental Results and Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Lu, L.; Zhang, J.; Xie, Y.; Gao, F.; Xu, S.; Wu, X.; Ye, Z. Wearable health devices in health care: Narrative systematic review. JMIR mHealth uHealth 2020, 8, e18907.

- [2] Iqbal, S.M.; Mahgoub, I.; Du, E.; Leavitt, M.A.; Asghar, W. Advances in healthcare wearable devices. NPJ Flex. Electron. 2021, 5, 9.

- [3] Yin, J.; Han, J.; Wang, C.; Zhang, B.; Zeng, X. A skeleton-based action recognition system for medical condition detection. In Proceedings of the 2019 IEEE Biomedical Circuits and Systems Conference (BioCAS), Nara, Japan, 17–19 October 2019; pp. 1–4.

- [4] Jalal, A.; Batool, M.; Kim, K. Stochastic recognition of physical activity and healthcare using tri-axial inertial wearable sensors. Appl. Sci. 2020, 10, 7122.

- [5] Hu, W.; Zhang, J.; Huang, B.; Zhan, W.; Yang, X. Design of remote monitoring system for limb rehabilitation training based on action recognition. J. Phys. Conf. Ser. 2020, 1550, 032067.

- [6] Basuki, D.K.; Fhamy, R.Z.; Awal, M.I.; Iksan, L.H.; Sukaridhoto, S.; Wada, K. Audio based action recognition for monitoring elderly dementia patients. In Proceedings of the 2022 International Electronics Symposium (IES), Surabaya, Indonesia, 9–11 August 2022; pp. 522–529.

- [7] Kohli, M.; Kar, A.K.; Prakash, V.G.; Prathosh, A.P. Deep Learning-Based Human Action Recognition Framework to Assess Children on the Risk of Autism or Developmental Delays. In Proceedings of the International Conference on Neural Information Processing, New Delhi, India, 22–26 November 2022; Springer Nature: Singapore, 2022; pp. 459–470.

- [8] Kibbanahalli Shivalingappa, M.S. Real-Time Human Action and Gesture Recognition Using Skeleton Joints Information towards Medical Applications. Master’s Thesis, Université de Montréal, Montréal, QC, Canada, 2020.

- [9] Alkhalifa, S.; Al-Razgan, M. Enssat: Wearable technology application for the deaf and hard of hearing. Multimed. Tools Appl. 2018, 77, 22007–22031.

- [10] Shi, H.; Zhao, H.; Liu, Y.; Gao, W.; Dou, S.-C. Systematic analysis of a military wearable device based on a multi-level fusion framework: Research directions. Sensors 2019, 19, 2651.

- [11] Mukherjee, A.; Misra, S.; Mangrulkar, P.; Rajarajan, M.; Rahulamathavan, Y. SmartARM: A smartphone-based group activity recognition and monitoring scheme for military applications. In Proceedings of the 2017 IEEE International Conference on Advanced Networks and Telecommunications Systems (ANTS), Bhubaneswar, India, 17–20 December 2017; pp. 1–6.

- [12] Papadakis, N.; Havenetidis, K.; Papadopoulos, D.; Bissas, A. Employing body-fixed sensors and machine learning to predict physical activity in military personnel. BMJ Mil. Health 2023, 169, 152–156.

- [13] Park, S.Y.; Ju, H.; Park, C.G. Stance phase detection of multiple actions for military drill using foot-mounted IMU. Sensors 2016, 14, 16.

- [14] Santos-Gago, J.M.; Ramos-Merino, M.; Vallarades-Rodriguez, S.; Álvarez-Sabucedo, L.M.; Fernández-Iglesias, M.J.; García-Soidán, J.L. Innovative use of wrist-worn wearable devices in the sports domain: A systematic review. Electronics 2019, 8, 1257.

- [15] Zhang, Y.; Hou, X. Application of video image processing in sports action recognition based on particle swarm optimization algorithm. Prev. Med. 2023, 173, 107592.

- [16] Kondo, K.; Mukaigawa, Y.; Yagi, Y. Wearable imaging system for capturing omnidirectional movies from a first-person perspective. In Proceedings of the 16th ACM Symposium on Virtual Reality Software and Technology, Kyoto, Japan, 18–20 November 2009; pp. 11–18.

- [17] Srinivasan, P. Web-of-Things Solution to Enrich TV Viewing Experience Using Wearable and Ambient Sensor Data. 2014. Available online: https://www.w3.org/2014/02/wot/papers/srinivasan.pdf (accessed on 1 May 2024).

- [18] Yin, R.; Wang, D.; Zhao, S.; Lou, Z.; Shen, G. Wearable sensors-enabled human–machine interaction systems: From design to application. Adv. Funct. Mater. 2021, 31, 2008936.

- [19] Kang, J.; Lim, J. Study on augmented context interaction system for virtual reality animation using wearable technology. In Proceedings of the 7th International Conference on Information Technology Convergence and Services, Vienna, Austria, 26–27 May 2018; pp. 47–58.

- [20] Sha, X.; Wei, G.; Zhang, X.; Ren, X.; Wang, S.; He, Z.; Zhao, Y. Accurate recognition of player identity and stroke performance in table tennis using a smart wristband. IEEE Sens. J. 2021, 21, 10923–10932.

- [21] Zhang, H.; Alrifaai, M.; Zhou, K.; Hu, H. A novel fuzzy logic algorithm for accurate fall detection of smart wristband. Trans. Inst. Meas. Control. 2019, 42, 786–794.

- [22] Reeder, B.; David, A. Health at hand: A systematic review of smart watch uses for health and wellness. J. Biomed. Inform. 2016, 63, 269–276.

- [23] Lu, T.C.; Fu, C.M.; Ma, M.H.M.; Fang, C.C.; Turner, A.M. Healthcare applications of smart watches. Appl. Clin. Inform. 2016, 7, 850–869.

- [24] Mauldin, T.R.; Canby, M.E.; Metsis, V.; Ngu, A.H.H.; Rivera, C.C. SmartFall: A smartwatch-based fall detection system using deep learning. Sensors 2018, 18, 3363.

- [25] Mitrasinovic, S.; Camacho, E.; Trivedi, N.; Logan, J.; Campbell, C.; Zilinyi, R.; Lieber, B.; Bruce, E.; Taylor, B.; Martineau, D.; et al. Clinical and surgical applications of smart glasses. Technol. Health Care 2015, 23, 381–401.

- [26] Kumar, N.M.; Krishna, P.R.; Pagadala, P.K.; Kumar, N.S. Use of smart glasses in education-a study. In Proceedings of the 2018 2nd International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC), Palladam, India, 30–31 August 2018; pp. 56–59.

- [27] Qiang, S. Analysis of the Impact of Intelligent Sports Devices on Youth Sports. China Youth Res. 2020, 22–29.

- [28] Yulun, W. Exploration of wearable device information security. China New Commun. 2019, 21, 130.

- [29] Jing, Y.; Jun, G.; Lin, G. Research on Human Action Classification Based on Skeleton Features. Comput. Technol. Dev. 2017, 27, 83–87.

- [30] Long, N.; Lei, Y.; Peng, L.; Xu, P.; Mao, P. A scoping review on monitoring mental health using smart wearable devices. Math. Biosci. Eng. 2022, 19, 7899–7919.

- [31] Yadav, S.K.; Luthra, A.; Pahwa, E.; Tiwari, K.; Rathore, H.; Pandey, H.M.; Corcoran, P. DroneAttention: Sparse weighted temporal attention for drone-camera based activity recognition. Neural Netw. 2023, 159, 57–69.

- [32] He, D.; Zhou, Z.; Gan, C.; Li, F.; Liu, X.; Li, Y.; Wang, L.; Wen, S. Stnet: Local and global spatial-temporal modeling for action recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 8401–8408.

- [33] Gholamiangonabadi, D.; Grolinger, K. Personalized models for human activity recognition with wearable sensors: Deep neural networks and signal processing. Appl. Intell. 2023, 53, 6041–6061.

- [34] Nafea, O.; Abdul, W.; Muhammad, G.; Alsulaiman, M. Sensor-based human activity recognition with spatio-temporal deep learning. Sensors 2021, 21, 2141.

- [35] Qu, J.; Wu, C.; Li, Q.; Wang, T.; Soliman, A.H. Human fall detection algorithm design based on sensor fusion and multi-threshold comprehensive judgment. Sens. Mater. 2020, 32, 1209–1221.

- [36] Wenfeng, L.; Bingmeng, Y. Human activity state recognition based on single three-axis accelerometer. J. Huazhong Univ. Sci. Technol. (Nat. Sci. Ed.) 2016, 44, 58–62.

- [37] Zhuang, W.; Chen, Y.; Su, J.; Wang, B.; Gao, C. Design of human activity recognition algorithms based on a single wearable IMU sensor. Int. J. Sens. Netw. 2019, 30, 193–206.

- [38] Prasad, A.; Tyagi, A.K.; Althobaiti, M.M.; Almulihi, A.; Mansour, R.F.; Mahmoud, A.M. Human activity recognition using cell phone-based accelerometer and convolutional neural network. Appl. Sci. 2021, 11, 12099.

- [39] Khalifa, S.; Lan, G.; Hassan, M.; Seneviratne, A.; Das, S.K. Harke: Human activity recognition from kinetic energy harvesting data in wearable devices. IEEE Trans. Mob. Comput. 2017, 17, 1353–1368.

| Test Action | Number of Tests | Identified as Stand | Identified as Walk | Identified as Jump | Recognition Rate |
|---|---|---|---|---|---|
| Stand | 600 | 590 | 8 | 2 | 98.33% |
| Walk | 600 | 17 | 580 | 3 | 96.67% |
| Jump | 600 | 6 | 26 | 568 | 94.60% |
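The recognition rate in each row can be reproduced from the counts, assuming it is defined as the number of correctly identified trials divided by the number of tests for that action. The sketch below uses the counts from the table; the variable and function names are illustrative.

```python
ACTIONS = ["Stand", "Walk", "Jump"]

# Rows: performed action. Columns: identified as Stand, Walk, Jump (counts from the table).
CONFUSION = {
    "Stand": [590, 8, 2],
    "Walk": [17, 580, 3],
    "Jump": [6, 26, 568],
}


def recognition_rates(confusion, actions):
    """Per-action rate in percent: correct identifications over total tests in the row."""
    rates = {}
    for index, action in enumerate(actions):
        row = confusion[action]
        rates[action] = 100.0 * row[index] / sum(row)
    return rates


rates = recognition_rates(CONFUSION, ACTIONS)
row_totals = {action: sum(row) for action, row in CONFUSION.items()}
```

Under this definition the stand and walk rows round to 98.33% and 96.67%, matching the table. The jump row computes to about 94.67% (568/600), marginally above the 94.60% listed.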

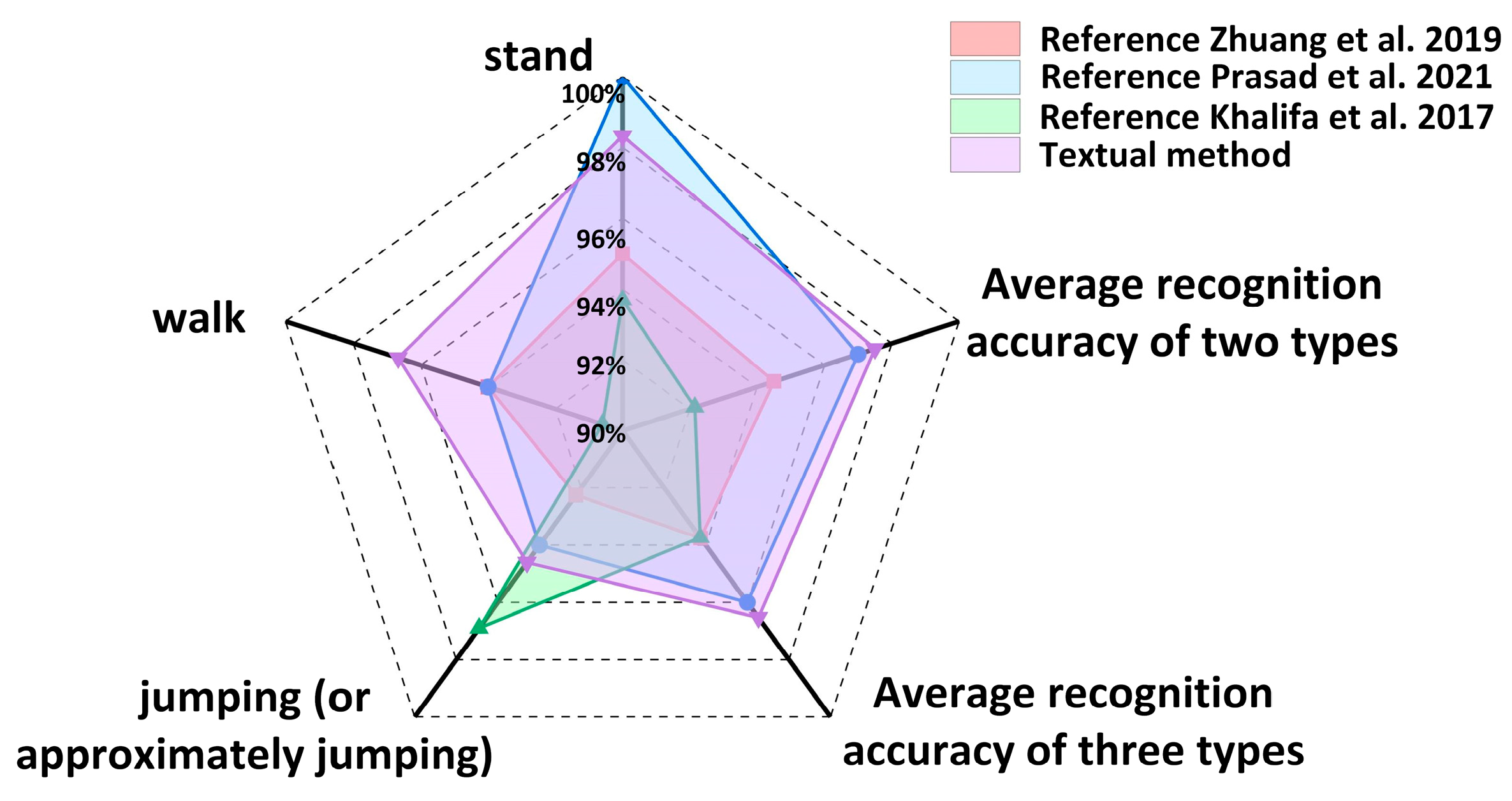

| Action | Reference [37] | Reference [38] | Reference [39] | Proposed Method |
|---|---|---|---|---|
| Stand | 95.00% | 100.00% | 93.70% | 98.33% |
| Walk | 94.00% | 94.00% | 90.60% | 96.67% |
| Jump (or approximately jump) | 92.25% | 94.00% | 96.90% | 94.60% |
| Average recognition accuracy of two types (stand, walk) | 94.50% | 97.00% | 92.15% | 97.50% |
| Average recognition accuracy of three types | 93.75% | 96.00% | 93.73% | 96.53% |
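The two average rows can be checked with a short calculation, assuming the two-type average covers standing and walking and the three-type average adds jumping. The per-action values are copied from the table; the dictionary keys and function name are illustrative.

```python
from statistics import mean

# Per-action recognition rates in percent, as listed in the comparison table.
RATES = {
    "Reference [37]": {"Stand": 95.00, "Walk": 94.00, "Jump": 92.25},
    "Reference [38]": {"Stand": 100.00, "Walk": 94.00, "Jump": 94.00},
    "Reference [39]": {"Stand": 93.70, "Walk": 90.60, "Jump": 96.90},
    "Proposed method": {"Stand": 98.33, "Walk": 96.67, "Jump": 94.60},
}


def average_rate(rates, actions):
    """Unweighted mean of the listed per-action rates, rounded to two decimals."""
    return round(mean(rates[action] for action in actions), 2)


two_type = {name: average_rate(r, ["Stand", "Walk"]) for name, r in RATES.items()}
three_type = {name: average_rate(r, ["Stand", "Walk", "Jump"]) for name, r in RATES.items()}
```

Both sets of averages agree with the table to two decimal places, which supports reading them as unweighted means over the listed actions.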


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Z.; Jin, X.; Huang, Y.; Wang, Y. Research on the Human Motion Recognition Method Based on Wearable. Biosensors 2024, 14, 337. https://doi.org/10.3390/bios14070337
