Abstract

Antiviral peptides (AVPs) represent a promising strategy for addressing the global challenges of viral infections and their growing resistances to traditional drugs. Lab-based AVP discovery methods are resource-intensive, highlighting the need for efficient computational alternatives. In this study, we developed five non-trained but supervised multi-query similarity search models (MQSSMs) integrated into the StarPep toolbox. Rigorous testing and validation across diverse AVP datasets confirmed the models’ robustness and reliability. The top-performing model, M13+, demonstrated impressive results, with an accuracy of 0.969 and a Matthew’s correlation coefficient of 0.71. To assess their competitiveness, the top five models were benchmarked against 14 publicly available machine-learning and deep-learning AVP predictors. The MQSSMs outperformed these predictors, highlighting their efficiency in terms of resource demand and public accessibility. Another significant achievement of this study is the creation of the most comprehensive dataset of antiviral sequences to date. In general, these results suggest that MQSSMs are promissory tools to develop good alignment-based models that can be successfully applied in the screening of large datasets for new AVP discovery.

1. Introduction

Antiviral peptides (AVPs) represent a promising, innovative, and unconventional approach in the ongoing fight against viral infections, a persistent global health concern [1]. The ever-present threat of viral outbreaks underscores the critical need for effective antiviral treatments. AVPs have emerged as a promising alternative because of their unique ability to target various stages of viral infections [2].

Viruses, notorious for their vast genetic diversity and adept replication within host cells, present immense challenges in disease containment [3]. Traditional antiviral drugs, although effective in certain instances, often have limited reach and may become obsolete because of resistance [4]. This treatment void has catalysed research into AVPs as alternative antiviral agents. AVPs have shown efficacy against numerous viruses, including lethal ones, like HIV [5], influenza [6], hepatitis [7], and emerging zoonotic viruses, such as Ebola [8] and Zika [9].

The therapeutic allure of AVPs is rooted in their distinctive mechanisms of action. They hinder viral attachment, fusion, and replication, offering a comprehensive strategy against viral infections [10]. Derived from synthetic libraries or sections of natural proteins, these peptides boast characteristics vital for their antimicrobial actions. Their low toxicity, high specificity, and efficiency mark them as potent contenders in medical applications [11].

Discovering and validating AVPs through lab experiments is resource-intensive, but computational strategies are gaining traction for their potential in identifying peptides with antimicrobial properties. Crafting robust computational models is imperative for effectively pinpointing potential AVPs. Currently, the strategy for processing expansive peptide databanks relies on machine learning (ML), enabling in-depth multidimensional data analysis. Conventional ML algorithms, such as SVM [12,13,14], kNN [15], RF [16,17,18], NN [19], and deep-learning (DL) algorithms [20,21,22], have shown prowess in discerning patterns in peptide sequences and assessing new ones.

However, a point of contention in this domain is the efficacy of DL models in forecasting AVPs. Many DL techniques necessitate vast experimentally validated peptide sequence datasets, which are often lacking. One remedy is “data augmentation”, although it is mostly untapped [21]. As García-Jacas et al. highlighted [23], DL does not significantly outpace traditional ML, and their algorithmic outputs frequently intersect. Additional challenges include the overrepresentation of specific sequences and imbalanced data, which can distort evaluations based purely on accuracy. Moreover, reproducibility challenges persist, as not all scientists disclose their source code or datasets, obstructing these methods’ widespread acceptance. Therefore, although ML-centric methods hold promise in predicting active peptides, their refinement is an ongoing endeavour [24,25].

Recent studies have showcased a non-trained supervised technique, the multi-query similarity search (MQSS), for predicting peptide bioactivities, including haemolysis [26], tumour-homing [27], and antiparasitic [28] activities, with impressive results. This method trumps conventional ML methods in several ways: it is user friendly, does not rely on web server availability, consumes fewer computational resources, and processes sequences with non-standard amino acids or varying lengths. Remarkably, MQSS models (MQSSMs) function without extensive training, relying instead on fine-tuning certain parameters, like the sequence alignment type and the similarity cutoff value. They can be developed without needing a negative dataset, a significant advantage given the scarcity of validated negative sequences, ensuring the learning phase is not skewed by data imbalances.

In prior research, we explored the AVP chemical space using interactive mining and complex networks via the StarPep toolbox (https://github.com/Grupo-Medicina-Molecular-y-Traslacional/StarPep (v0.8.5, accessed on 17 April 2023), [29]). We generated, disclosed, and made public diverse reducts of the AVP chemical space by implementing scaffold extraction in half-space proximal networks formed from the predefined chemical space. These scaffolds now serve as a foundation for designing reference/query datasets for MQSSM creation [30]. As the MQSS technique has not been applied to AVPs, our primary objective is to create MQSSMs for AVPs that match or surpass existing predictors while addressing the shortcomings of ML predictors. The MQSSMs were crafted using the available resources in StarPep toolbox. Our overarching goal is to enhance the methodologies for identifying and advancing AVPs, potentially revolutionising antiviral therapies. Additionally, we have assembled the most extensive dataset of antiviral sequences to date, essential for developing effective AVP predictors and ensuring robust model validation.

2. Data and Methods

2.1. The Multi-Query Similarity Search Model (MQSSM): The Overall Approach

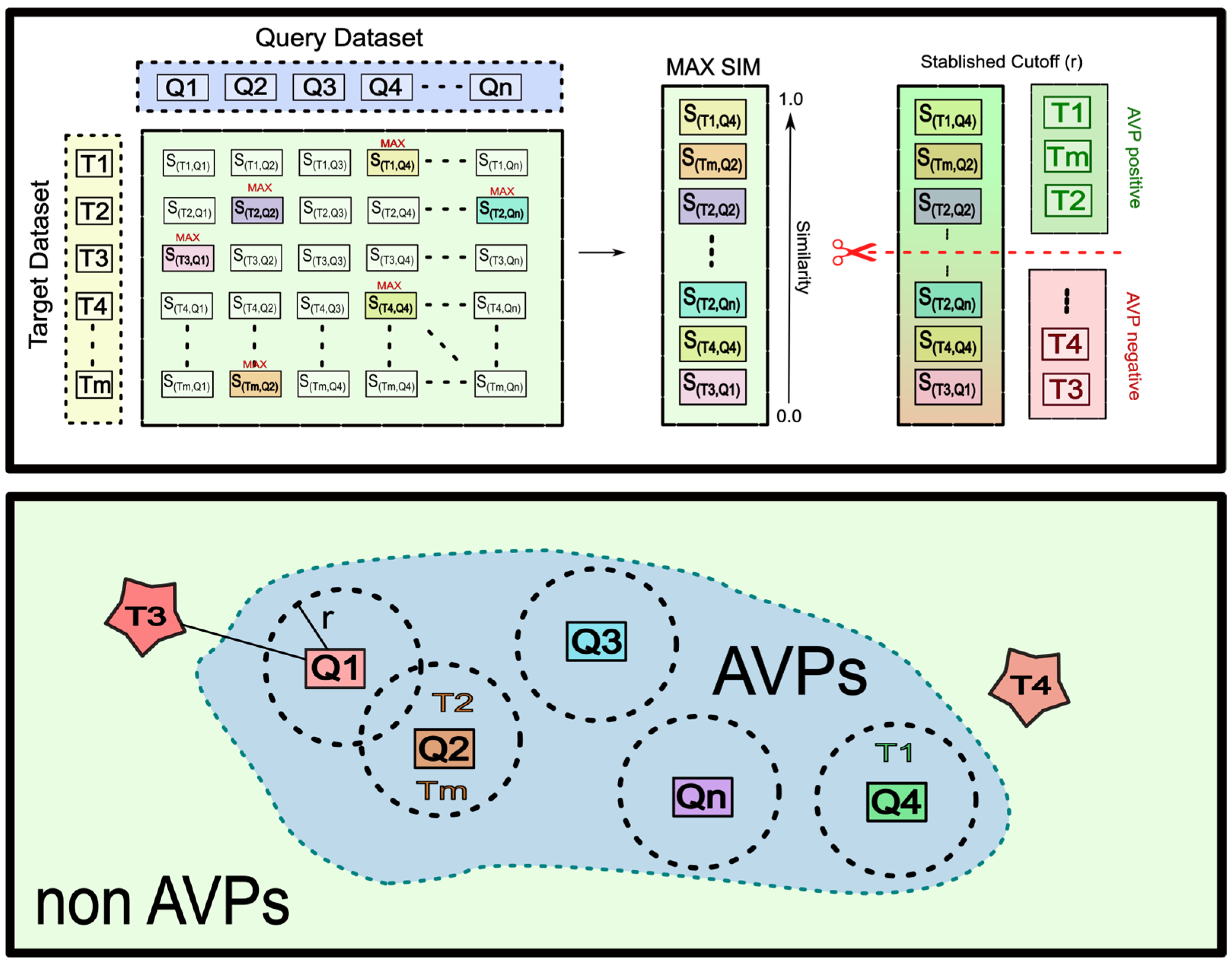

The models proposed herein are based on similarity searching. Multiple positive sequences are employed as queries to predict the antiviral activity of a target dataset. This one-class model identifies potential positive sequences based on their similarity to the provided references. The construction of these models involves tuning three different parameters:

Query Dataset: This is defined as the reference dataset that the model uses for predictions.

Pairwise Sequence Alignment Algorithm: Smith–Waterman (local alignment) and Needleman–Wunsch (global alignment) algorithms are employed, using the Blossum-62 substitution matrix for calculating pairwise similarity scores.

Similarity Threshold: The MQSS is a fusion model, specifically, a group fusion model. In group fusion, a similarity is computed for each reference sequence (q) and target sequence (t), denoted as S (q, t). All the pairwise similarity scores are combined for each sequence (t), and the MAX-SIM rule is applied. The similarity scores are then ranked in decreasing order. A similarity threshold (c) is set to determine which sequences are considered to have a positive activity. For a better understanding of this process, a graphical representation is provided in Scheme 1.

Scheme 1.

Overview of the proposed multi-query similarity search model (MQSSM) for antiviral peptide (AVP) prediction.

2.2. Construction of Query/Reference Datasets

When constructing a query dataset, a critical consideration is its ability to encompass a significant portion of the antiviral active chemical space without disproportionately representing any category of AVPs or neglecting rare sequences. To achieve this, we leveraged the reductions obtained in a previous study [30].

Recalling the Scaffold Extraction Procedure

To ensure the comprehensive coverage of the antiviral active chemical space, we established a half-space proximal network (HSPN) using the 4663 antiviral sequences stored in StarPepDB [31], recognised as one of the most extensive databases of biologically active peptides to date (36). Several scaffold (representative AVP subset) extractions were executed using the resources available in the StarPep toolbox. During this process, we adjusted the alignment algorithm type, the pairwise identity percentage, and the centrality measures (community hub bridge [32] and harmonic [33,34]). These adjustments yielded a total of 20 scaffolds or representative AVP subsets. Subsequently, we thoroughly examined these scaffolds using a Dover Analyser [35] to assess the extent of the similarity overlap between them.

According to these observations, we selected 10 scaffolds for their representativeness and diversity. Furthermore, an additional five scaffolds were crafted by combining some of the 20 previously generated scaffolds, considering their pairwise identity percentages. These 15 scaffolds served as the foundational datasets used as references for the MQSSMs. The particularities of each of the mentioned scaffolds are briefly explained in Table S1.1 in Supporting Information File S1 (File S1).

2.3. Target Datasets for Calibration and Validation of MQSSMs

We conducted a comprehensive literature review to gather a range of positive and negative sequences for testing our models. Ultimately, we selected 15 datasets, containing positive and negative sequences from synthetic and natural origins. These datasets from the literature played a crucial role in various stages of model calibration and validation. All the mentioned datasets are depicted in Table 1.

Table 1.

List of all the datasets used for calibration and validation stages.

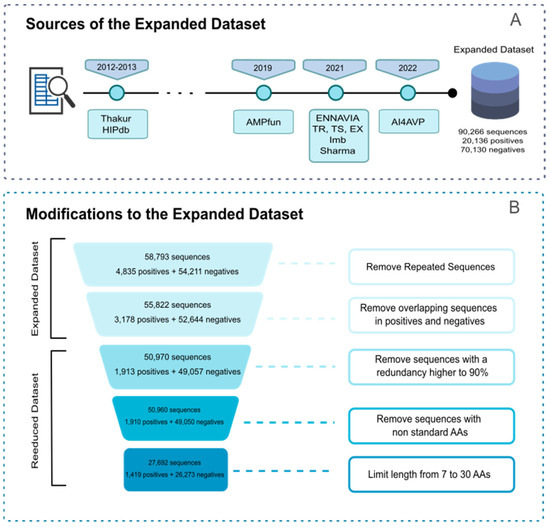

Furthermore, we created two new datasets, amassing a total of 20,136 positive sequences and 70,130 negative sequences. These 90,266 sequences underwent several filters to eliminate redundancy, resulting in an “expanded” dataset comprising 55,822 sequences, including 3178 positive and 52,644 negative sequences. The different sources for the “expanded” dataset are specified in Scheme 2.

Scheme 2.

(A) Sources for the “expanded” dataset; (B) filter applied to obtain the “reduced” dataset.

Additionally, for benchmarking against state-of-the-art methods, we curated a “reduced” dataset with specific criteria, excluding non-standard amino acids and restricting sequence lengths to between 7 and 30 amino acids, as commonly specified by many existing predictors. Refer to Scheme 2 for a better depiction of the distinct filters that this dataset went through. The “reduced” dataset consists of 27,692 sequences, encompassing 1419 positive and 26,273 negative sequences. Both the “expanded and reduced” versions can be found in the Supplementary Materials (File S2).

2.4. Construction, Selection, and Improvement of MQSSMs

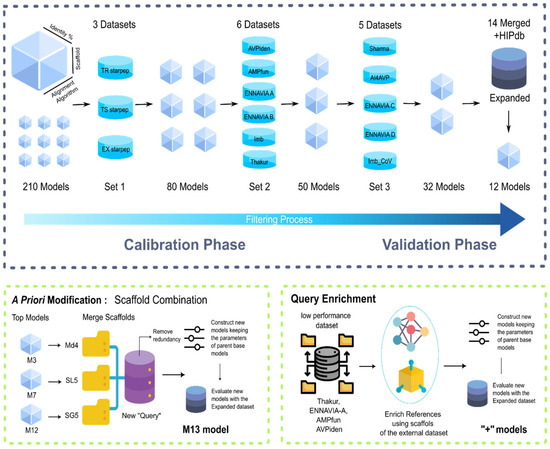

As mentioned earlier, three primary parameters require fine-tuning for the construction of multi-query similarity search models (MQSSMs). The first parameter is the choice of the query dataset, for which 15 different scaffolds were evaluated. The second parameter is the selection of the alignment algorithm, alternating between global and local alignments. Lastly, the similarity threshold was varied from 0.3 to 0.9. These parameters collectively allowed for the potential creation of 210 variations of MQSSMs. Consequently, reducing the number of models was imperative.

To select the best-performing models, a two-phase approach was implemented, as depicted in Scheme 3. The first phase, known as the calibration phase, aimed to significantly reduce the number of models and identify key trends in parameter calibration. The calibration phase was further divided into two rounds. In the first round, 210 models were assessed using the datasets TS_StarPep, TR_StarPep, and Ex_StarPep, resulting in a reduction to 80 models. The second round of the calibration phase involved testing the models against six different datasets: AVPIden, AMPfun, ENNAVIA-A, ENNAVIA-B, Imb, and Thakur. After this evaluation, a set of 50 models remained.

Scheme 3.

Workflow for the selection and validation of the models. The first section of the scheme (top row) summarises the model selection process. The second section (bottom row) focuses on model improvement.

These 50 models subsequently entered the validation stage, where the primary objective was to fine-tune parameter values while also assessing the models against more specific datasets. Once again, the validation phase was subdivided into two rounds. In the first round, the 50 models were tested against five datasets: Sharma, AI4AVP, ENNAVIA-C, ENNAVIA-D, and Imb_CoV, with the last three containing anti-SARS-CoV-2 sequences. This round led to the selection of 32 models, which were further evaluated against the expanded datasets. Following this analysis, a final set of 12 models was chosen, comprising six that utilised global alignment and six that employed local alignment (M1–M12).

As 12 models still represented a considerable number, an examination was conducted to assess the degree of overlap in the sequences recovered using these different models. After a cursory examination, three base models were selected, referred to as M3, M7, and M12.

2.5. Scaffold Fusion

To enhance the models’ performance, scaffold fusion was performed with the aim for expanding the reference dataset. This fusion process involved combining the individual scaffolds from the best-performing base models (M3, M7, and M12) to create a new query dataset. Once this new query dataset was constructed, 14 MQSSMs were generated using it, including variations in the alignment algorithm and the similarity threshold during the creation of these models.

Subsequently, these models underwent the previously described workflow of calibration and validation. Following this process, the best-performing model was identified and is now referred to as M13 (Scheme 3).

2.6. Scaffold Enrichment

To further enhance the base models, a query enrichment strategy was implemented. This involved gathering the positive sequences from the datasets used in the calibration phase, round 2 (Thakur, ENNAVIA-A, AMPfun, and Imb), into a single dataset. This process resulted in 2403 unique sequences. To ensure the absence of these sequences from the currently top-performing model’s scaffolds, a pairwise similarity comparison was conducted using Dover Analyser (https://mobiosd-hub.com/doveranalyzer/, v0.1.2 accessed on 24 November 2023). Subsequently, these sequences were used to construct a half-space proximal network (HSPN), similar to the one constructed for the StarPepDB AVP sequences.

With the newly curated and validated dataset in hand, it was integrated into the StarPep toolbox to construct an HSPN, similar to the HSPNs developed previously for the entire AVP space. Following HSPN construction, scaffold extraction was applied to the network, varying the centrality measure between harmonic and the community hub bridge. The alignment algorithm type was adjusted between local and global, considering only 80% and 90% as the sequence identity percentages. This process yielded 8 new scaffolds, designated as “external scaffolds”. These scaffolds were utilised to develop new MQSSMs employing the same methodology as before, resulting in 112 new models. These newly generated models underwent testing against the calibration and validation workflow of the 15 datasets to identify the most effective external scaffolds.

Finally, two models, named E1 and E2, were selected. The scaffolds from models E1 and E2 were added to those of models M3, M7, M12, and M13, ensuring that there were no repeated sequences in the enriched scaffolds. Once the enriched scaffolds were obtained, the models retained the alignment type and similarity cutoff of the base models, and they were tested against the 14 individual datasets and the expanded dataset (Scheme 3).

2.7. Performance Evaluation

The performances of all the models and state-of-the-art models were measured using the metrics of accuracy (ACC), sensitivity (SN), specificity (SP), Mathew’s correlation coefficient (MCC), false positive rate (FPR), and F1 score as follows [41]:

where TP is the true positives, TN is the true negatives, FP is the false positives, and FN is the false negatives.

Additionally, the Friedman test was employed to analyse the performance rankings of the various MQSS models across all the described metrics. The corresponding significance test was carried out using KEEL software (http://www.keel.es, v3.0, accessed on 1 December 2023 [42]) and the non-parametric statistical analysis module. This non-parametric test is well suited for comparing multiple models across different datasets or conditions, especially when dealing with ranked data, as in the present case.

2.8. Comparison with State-of-the-Art Predictors

To assess the robustness of the MQSSMs, a selection of state-of-the-art predictors was made, primarily by considering their availability through web services and ease of implementation. Table 2 provides a summary of the predictors used in this study. It is important to highlight that the use of the predictors was based on their computational availability, and some of these models show a reduced domain of application because of their training, for instance, the classifier developed to predict the antiviral actions of plant-derived peptides, PTPAMP (http://www.nipgr.ac.in/PTPAMP/, accessed on 24 August 2024). However, most of these classifiers have a general application domain because they were trained with sequences from different origins and with actions against various types of viruses. Ultimately, this experiment aims to evaluate the true merit of the predictors regardless of the learning performed. For comparison with the predictors, the reduced dataset was employed, as previously stated.

Table 2.

Web servers and standalone predictors used for comparison.

3. Results and Discussion

3.1. Performances of MQSSMs in the Calibration Phase

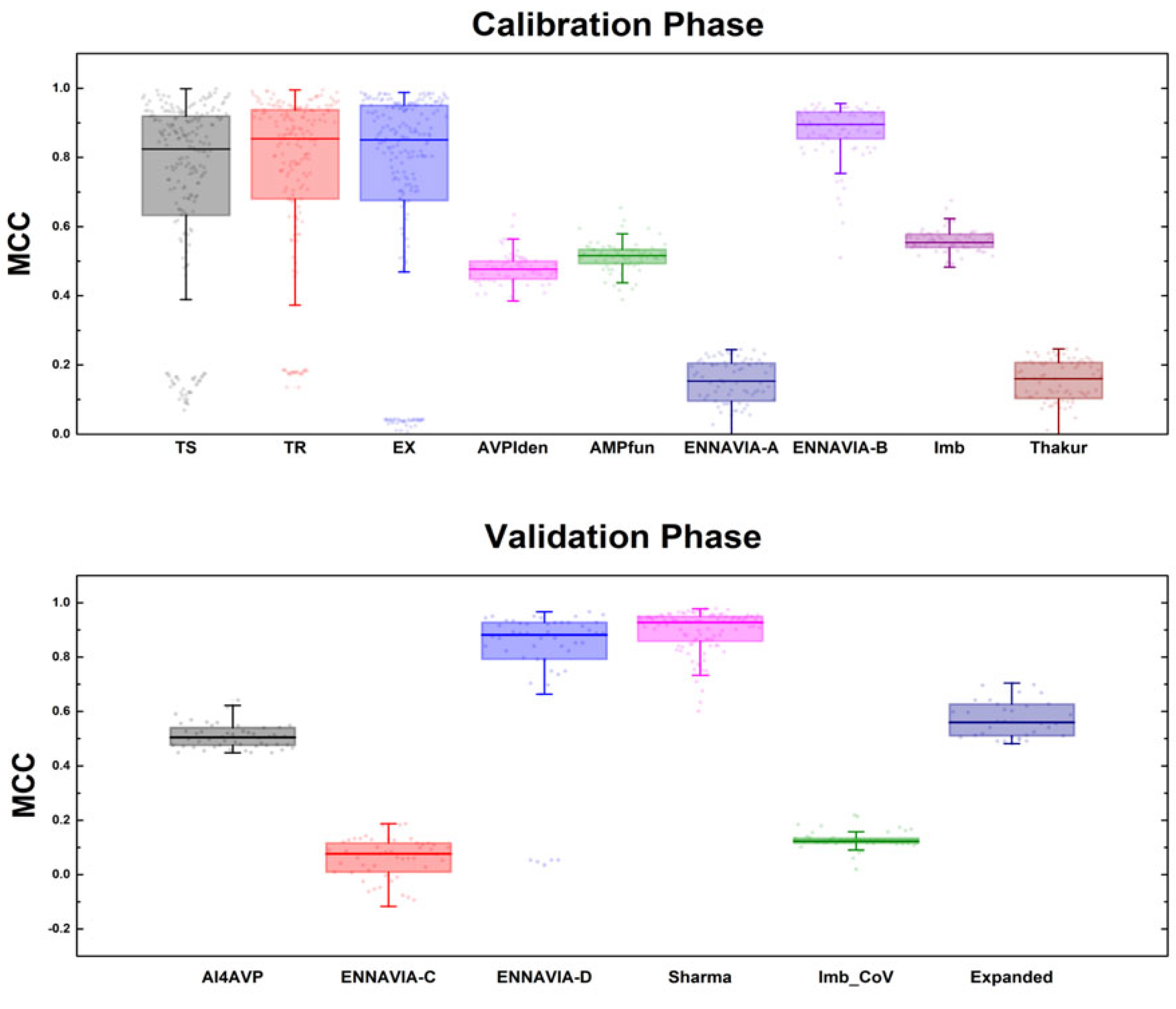

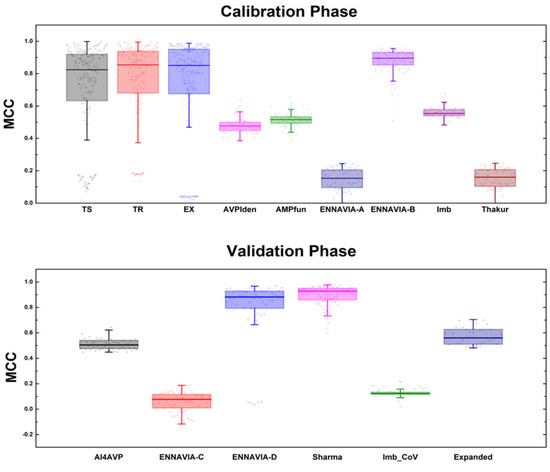

This segment focuses on managing the initial number of 210 models via a reduction process. In the preliminary phase of the calibration, an evaluation of these 210 models was conducted utilising three datasets: TR StarPep, TS StarPep, and EX StarPep. These datasets, predominantly derived from StarPepDB, demonstrated relatively uniform behaviours across the models’ performances, as depicted in Figure 1. Such an outcome is expected, considering the considerable overlap in the sequences shared between these datasets and the employed “query”.

Figure 1.

MQSS models of Mathew’s correlation coefficient (MCC). Distributions in each of the 15 tested datasets at the different stations of the model selection.

During the next phase of the calibration, six datasets not associated with StarPepDB were incorporated (Figure 1). Predictably, in this phase, the models’ effectiveness was reduced compared to their effectiveness in the initial round. A critical revelation from this stage is that the models’ performance was better when dealing with datasets composed of both randomly generated negative sequences and sequences yet to be experimentally tested. In contrast, when the datasets had experimentally validated sequences, the performance metrics dropped. This behaviour might be linked to the fact that a significant number of these negative sequences closely resembled the positive ones. Consequently, the methods based on alignment faced challenges in differentiating the unique attributes of each category.

This problem was especially pronounced in datasets like ENNAVIA-A and Thakur, where experimental sequences were used as negative datasets. Conversely, with ENNAVIA-B, which contained the same positive sequences, the models more effectively identified non-antiviral sequences. It is important to emphasise that experimentally validated negative sequences are scarce in comparison to positive sequences. Hence, the predominant challenge in modelling lay in improving the detection rate (recall) of the positive sequences overall.

From the calibration stage, clear trends in the MQSSMs’ parameters emerged. A notable observation was that models with larger scaffolds performed better. This improvement was attributed to the more detailed characterisation of the AVP chemical space. Scaffolds such as Md4, Md5, SG4, SG5, SL4, and SL5 generated the most model variants (Table S1.1). In terms of the alignment, the global alignment proved to be more effective with lower sequence identity percentages, while local alignments were more successful with higher percentages. For simpler scaffolds, global alignments consistently outperformed local alignments, regardless of the identity percentage.

3.2. Performances of MQSSMs in the Validation Phase

In the validation stage, we assessed the models against datasets with sequences targeting specific viruses, like SARS-CoV-2. Datasets including ENNAVIA-C, ENNAVIA-D, and Imb_CoV were used for this purpose. As shown in Figure 1, the base models had poor performances with ENNAVIA-C and Imb_CoV. This result was expected because the models’ references are from 2019. This highlights the importance of the “query” dataset’s representation in the model performance. However, the models showed better performances with the ENNAVIA-D dataset, which contained random negative sequences. This aligns with previous observations of the model behaviour with similar datasets.

During the model selection process, 32 models chosen in the first round of the validation were tested against the expanded dataset. From this, 12 models were identified as top performers using a multi-variable Friedman ranking method. These models, labelled from M1 to M12, included six based on global alignment and six on local alignment strategies. The parameters for these models are summarised in Table S1.2. Additionally, from these 12 models, the three top-performing ones were further singled out. This selection focused efforts on fine-tuning these models, particularly towards enhancing the recovery of positive (AVP) sequences.

3.3. Improving MQSSM Performances by Fusing Scaffolds

Continuing from the focused analysis of the top models for positive sequence recovery, an initial approach to enhancement involved combining the scaffolds from models M3, M7, and M12 (md4, SL5, and SG5) into a single consolidated scaffold. This step included the removal of duplicate sequences, culminating in a scaffold that contained 3206 unique sequences. Subsequent testing of this modified scaffold indicated slight improvements in the performances of the models using the global alignment with a 90% similarity threshold. Labelled as M13, a new model was crafted using these parameters. The improvements in M13 were primarily because of the expanded representation of the sequence space, enriched by the increased number of sequences.

Although these modifications provided insights into the nuances of the MQSSMs, their overall efficacy remained unsatisfactory. The subsequent strategy aimed to leverage the performance information gathered during the calibration phase. As shown in Figure 1, the base models struggled with datasets such as Thakur, ENNAVIA-A, AMPfun, and AVPiden. This struggle was likely because of the inadequate representation of diverse sequences from these datasets in the MQSS scaffolds. Furthermore, the presence of many experimentally validated negative sequences in some datasets increased the difficulty of making accurate predictions.

3.4. Improving MQSSM Performances by Enriching the Best Scaffolds

In tackling the identified issues, a half-space proximal network (HSPN) was made by pooling together positive sequences from the challenging datasets (Thakur, ENNAVIA-A, AMPfun, and AVPiden). A total of 2403 sequences were employed in constructing the HSPN. This effort resulted in the production of eight scaffolds, out of which the top two were chosen to enhance the existing scaffolds. Consequently, this led to the introduction of new, improved models: M3+, M7+, M12+, and M13+. The “+” in their names signifies their reference enrichment. Post enhancement, these scaffolds contained 3155, 3437, 3472, and 3606 sequences, respectively. To incorporate the unique scaffolds not present in StarPepDB, we crafted E1 and E2 models using external scaffolds comprising 1517 and 1261 sequences, respectively. This increased our analysis pool to 10 models, adding six newly enriched models to the pre-existing M3, M7, M12, and M13 models. The complete details of these models are outlined in Table S1.2.

Following their development, these 10 models were extensively tested across 15 databases, the 14 datasets from our workflow plus the expanded dataset (File S3 in Supplementary Material). We used a Friedman ranking system to halve the number of top-performing models, evaluating them based on their accuracy (ACC), specificity (SP), sensitivity (SN), Matthew’s correlation coefficient (MCC), and F1 score. The ranking results identified M3+, M13+, M7, M12, and E1 as being the most effective, as highlighted in grey in Table 3.

Table 3.

Evaluation metrics for the 10 top-performing models a.

Notably, although the models with a greater number of reference sequences, like M3+ and M13+, ranked high, models, such as E1, M7, and M12, with fewer sequences performed comparably well. This suggests that the effectiveness of the references hinges more on their diversity and representational range than on their quantity.

The scaffold enrichment, in general, improved the performance of the models. There were two exceptions: M12+ and M7+ showed worse accuracies (Qs) than their original models, M12 and M7, respectively. In these two cases, the increased representations of positive sequences resulted in decreases in Q because more negative sequences were identified as false positives, which is reflected in the increase in the false positive rate (FPR). In other words, in these cases, the scaffold enrichment improved the sensitivity (recall and SN) but worsened the specificity of the model.

3.5. Benchmarking the Best MQSSMs against State-of-the-Art Predictors

The top five models were subsequently benchmarked against existing predictors in the literature, providing a comparative assessment of their performances relative to those of other available tools. To ensure unbiased comparisons, any sequences shared between the scaffolds of models M3+, M7, M12, M13+, E1, and the reduced dataset were eliminated. This action reduced the number of positive sequences to 116, while the number of negative sequences remained unchanged. It is important to note that many negative sequences in the reduced dataset are a part of the training sets for the external predictors, but these were not removed. This decision placed our models at a significant disadvantage in terms of the performance evaluation. We tested a total of 14 external predictors (File S4) using evaluation metrics, such as the ACC, SP, SN, MCC, FPR, and F1 score. MCC, which is unaffected by the dataset’s imbalance, was the primary metric for our analysis.

The alteration made to the reduced dataset resulted in marked decreases in the performances of the MQSSMs. These declines were especially pronounced in the SN and MCC values. However, ACC and SP remained relatively stable, a situation due to the substantial imbalance between the positive and negative cases in the class distribution. The notable drop in the sensitivity underscores a significant consistent shortfall in correctly identifying positive sequences. This inability to accurately recall true positives notably affected the MCC, with a stark decrease observed, for example, in the M13+ model, where MCC plummeted from 0.731 to 0.214, as detailed in Table 4. The F1 score, which depends on both the recall and precision, also experienced a corresponding decline.

Table 4.

Evaluation metrics of the five top-performing MQSS models and state-of-the-art predictors a.

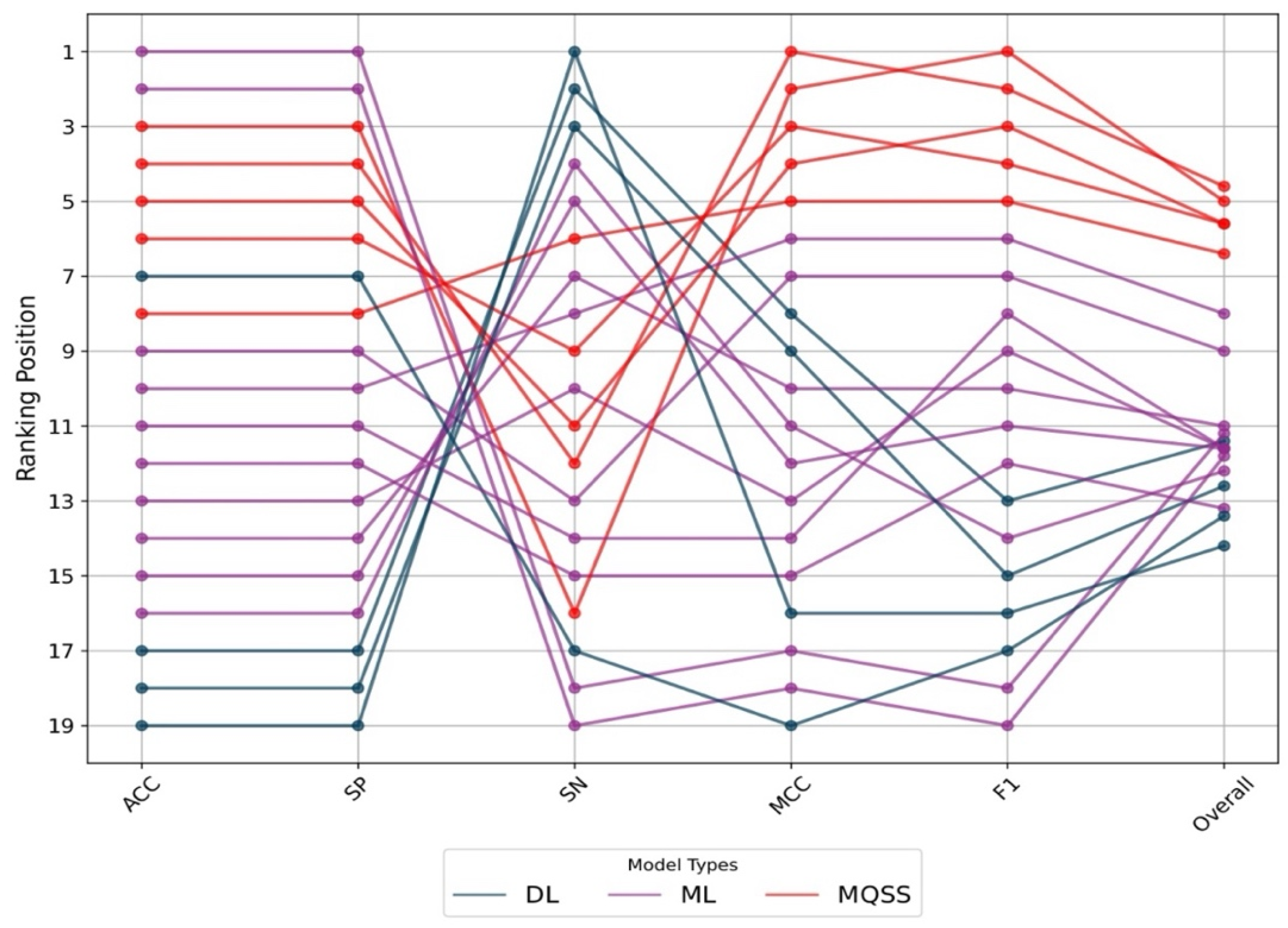

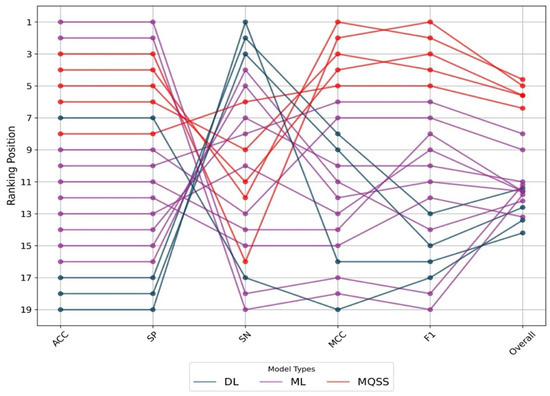

Despite these less-than-ideal results, the MQSSMs still surpassed the external predictors in the overall performance. Analysing the performance data of the external predictors, Figure 2 highlights two distinct trends. Some models are highly effective at identifying most positive sequences, resulting in a high SN but with the trade-off of a higher rate of false positives. Conversely, other models excel at correctly classifying all the negative sequences but tend to misclassify many positive ones, a trait observed in the MQSSMs we developed. Typically, most models based on deep learning fall into the former category, demonstrating high sensitivity, whereas traditional ML models are more likely to be in the latter category, with a stronger emphasis on specificity.

Figure 2.

Ranking fluctuation in each of the performance metrics for deep-learning (DL), machine-learning (ML), and MQSS models. ACC = accuracy; SP = specificity; SN = sensitivity; MCC = Mathew’s correlation coefficient; F1 = F1 score.

The analysis revealed that no single predictor excelled in all the evaluation categories, supporting Garcia-Jacas et al.’s conclusion that DL methods may not be the most effective for AVP prediction [36]. Extending this observation, it is evident that none of the ML models tested demonstrated satisfactory performance, suggesting a need for significant improvements. That is, the “no free lunch” theorem is fulfilled here, in the sense that there is no single (unique) best model for all the scenarios and that the use of several models is needed to improve performance and hit recovery in the general virtual screening of large databases. Therefore, these models will be more useful for general screening processes rather than for optimising lead compounds because of the structurally diverse training series that are not based on potency criteria for antiviral actions. In fact, a central issue identified is the quality and representativeness of the training data. Most positive sequences used in model training exhibit a high degree of similarity, often up to 90%. Moreover, the experimentally validated negative sequences often mirror their positive counterparts. The challenge, therefore, does not lie in the complexity of the models’ architectures but rather in the data’s availability and diversity. This highlights an ongoing challenge in gathering and effectively utilising comprehensive data for such models.

Evaluating state-of-the-art predictors revealed a common issue of accessibility. Many predictors were difficult to assess for various reasons. A significant issue was the poor construction of several web servers, leading to operational failures or frequent malfunctions. This problem affected even relatively new servers, those less than 2 years old. Furthermore, a few research repositories lacked comprehensive instructions, complicating their use. This difficulty in accessing and implementing these tools echoes concerns previously noted by researchers [46] about the availability of source codes. Nonetheless, a comprehensive summary of the prediction tools currently available is provided in the Supplementary Information (Table S1.4).

In contrast to these challenges, the MQSSMs distinguish themselves with their accessibility. They are available through StarPep toolbox standalone software, which boasts a user-friendly interface, making these models more approachable and easier to use for users.

Despite the need for improvements to address their shortcomings, the MQSSMs still have a performance edge over traditional ML models. This is substantiated by the Friedman MCC, ACC, SP, SN, and the F1 Score. An important aspect to note is that the MQSSMs require considerably fewer computational resources and are not constrained by sequence length limitations, presenting significant benefits.

When choosing a prediction model, it is essential to align with the specific needs of the researcher. Some may prioritise identifying a larger number of potential AVPs, while others might prefer a smaller, more accurate set to reduce false positives. Considering the resource-intensive nature of the experimental procedures, the latter approach is often more practical for synthesising potential AVPs, as it optimises the balance between resource use and the likelihood of accurate predictions.

4. Conclusions

This research marks a significant advancement with the development of multi-query similarity search models (MQSSMs). These models, devised from a deep understanding gained in the chemical space exploration and scaffold extraction phases, employ a novel approach that leverages structural similarities for predicting biological activities. This has been instrumental in improving the model selection.

Another major achievement of this study is the assembly of the most extensive datasets of AVPs, referred to as the “expanded” and “reduced” datasets. These datasets were designed to cater to a spectrum of research needs in the fields of AVP modelling and prediction and are available as File S2 in the Supplementary Materials.

Such rich data diversity is essential for the development of effective AVP predictors. Following comprehensive evaluations and filtering processes, five MQSSMs were selected for their superior performances. These models were rigorously tested across various metrics, with the top model demonstrating impressive results: ACC = 0.969, SP = 0.979, SN = 0.802, MCC = 0.71, FPR = 0.021, and F1 = 0.746. In contrast, 14 contemporary ML-based predictors, though extensively promoted, were surpassed by the MQSSMs. This not only emphasises the limitations of existing methods but also highlights the advanced capabilities of MQSSMs in predicting AVP sequences, effectively handling the challenges of variable-length sequences and imbalanced datasets. As these models notably eclipsed existing state-of-the-art predictors, they have set a new standard in the field of AVP discovery.

Supplementary Materials

The following supporting information can be downloaded at https://www.mdpi.com/article/10.3390/antibiotics13080768/s1: File S1: Table S1.1. Brief description of the initial scaffolds used for the MQSSMs; Table S1.2. Parameters used for selected MQSSMs; Table S1.3. The final overall ranking; Table S1.4. Web server and standalone software for the prediction of AVPs. File S2: Datasets used for the calibration and validation stages of the MQSSMs. File S3: Classification results of the 10 top-performing MQSSMs with 14 datasets. File S4: Classification results of 14 web-based AVP predictors with the “reduced dataset”.

Author Contributions

Y.M.-P. and G.A.-C. contributed to the conceptualisation, methodology, supervision, drafting, and reviewing of the manuscript. D.d.L.G. was responsible for data curation, model building, validation, and data/result visualisation. F.M.-R. and F.J.F. participated in programming tasks and data analysis. A.A. and H.R. were responsible for funding acquisition, supervision, and reviewing the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

USFQ MED Grant 2023-4 (Project ID23234) and FCT—UIDB/04423/913/2020 and FCT—UIDP/04423/2020.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The presented MQSSMs can be easily run using the StarPep toolbox, which is freely available at https://github.com/Grupo-Medicina-Molecular-y-Traslacional/StarPep (v0.8.5, accessed on 17 April 2023). The 4663 AVPs stored in StarPepDB can be accessed and downloaded directly through the StarPep toolbox. The half-space proximal networks (HSPNs) are also constructed using the StarPep toolbox. The datasets used in the calibration and validation stages of the MQSSMs, as well as those used for the performance comparison with existing tools, are included as a part of the supplementary files in this work.

Acknowledgments

Y.M.-P. acknowledges support from USFQ MED Grant 2023-4 (Project ID23234). Y.M.-P. also thanks the program ‘Estades Temporals per a Investigadors Convidats’ for a fellowship to work at Valencia University in 2024-2025. G.A.-C. and A.A. acknowledge the support of the FCT—Foundation for Science and Technology (Portugal) within the scope of UIDB/04423/913/2020 and UIDP/04423/2020.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Enquist, L.W. Virology in the 21st Century. J. Virol. 2009, 83, 5296–5308. [Google Scholar] [CrossRef] [PubMed]

- Wang, L.; Wang, N.; Zhang, W.; Cheng, X.; Yan, Z.; Shao, G.; Wang, X.; Wang, R.; Fu, C. Therapeutic Peptides: Current Applications and Future Directions. Signal Transduct. Target. Ther. 2022, 7, 48. [Google Scholar] [CrossRef] [PubMed]

- Sanjuán, R.; Domingo-Calap, P. Mechanisms of Viral Mutation. Cell. Mol. Life Sci. 2016, 73, 4433–4448. [Google Scholar] [CrossRef] [PubMed]

- Mahmoud, A. New Vaccines: Challenges of Discovery. Microb. Biotechnol. 2016, 9, 549–552. [Google Scholar] [CrossRef] [PubMed]

- Carter, E.P.; Ang, C.G.; Chaiken, I.M. Peptide Triazole Inhibitors of HIV-1: Hijackers of Env Metastability. Curr. Protein Pept. Sci. 2023, 24, 59–77. [Google Scholar] [CrossRef] [PubMed]

- Agamennone, M.; Fantacuzzi, M.; Vivenzio, G.; Scala, M.C.; Campiglia, P.; Superti, F.; Sala, M. Antiviral Peptides as Anti-Influenza Agents. Int. J. Mol. Sci. 2022, 23, 11433. [Google Scholar] [CrossRef] [PubMed]

- Divyashree, M.; Mani, M.K.; Reddy, D.; Kumavath, R.; Ghosh, P.; Azevedo, V.; Barh, D. Clinical Applications of Antimicrobial Peptides (AMPs): Where Do We Stand Now? Protein Pept. Lett. 2020, 27, 120–134. [Google Scholar] [CrossRef] [PubMed]

- Yu, Y.; Cooper, C.L.; Wang, G.; Morwitzer, M.J.; Kota, K.; Tran, J.P.; Bradfute, S.B.; Liu, Y.; Shao, J.; Zhang, A.K.; et al. Engineered Human Cathelicidin Antimicrobial Peptides Inhibit Ebola Virus Infection. iScience 2020, 23, 100999. [Google Scholar] [CrossRef] [PubMed]

- Jackman, J.A.; Costa, V.V.; Park, S.; Real, A.L.C.V.; Park, J.H.; Cardozo, P.L.; Ferhan, A.R.; Olmo, I.G.; Moreira, T.P.; Bambirra, J.L.; et al. Therapeutic Treatment of Zika Virus Infection Using a Brain-Penetrating Antiviral Peptide. Nat. Mater. 2018, 17, 971–977. [Google Scholar] [CrossRef]

- Vilas Boas, L.C.P.; Campos, M.L.; Berlanda, R.L.A.; de Carvalho Neves, N.; Franco, O.L. Antiviral Peptides as Promising Therapeutic Drugs. Cell. Mol. Life Sci. 2019, 76, 3525–3542. [Google Scholar] [CrossRef]

- Lau, J.L.; Dunn, M.K. Therapeutic Peptides: Historical Perspectives, Current Development Trends, and Future Directions. Bioorg. Med. Chem. 2018, 26, 2700–2707. [Google Scholar] [CrossRef]

- Jaiswal, M.; Singh, A.; Kumar, S. PTPAMP: Prediction Tool for Plant-Derived Antimicrobial Peptides. Amino Acids 2023, 55, 1–17. [Google Scholar] [CrossRef] [PubMed]

- Joseph, S.; Karnik, S.; Nilawe, P.; Jayaraman, V.K.; Idicula-Thomas, S. ClassAMP: A Prediction Tool for Classification of Antimicrobial Peptides. IEEE/ACM Trans. Comput. Biol. Bioinform. 2012, 9, 1535–1538. [Google Scholar] [CrossRef] [PubMed]

- Thakur, N.; Qureshi, A.; Kumar, M. AVPpred: Collection and Prediction of Highly Effective Antiviral Peptides. Nucleic Acids Res. 2012, 40, W199–W204. [Google Scholar] [CrossRef]

- Xiao, X.; Wang, P.; Lin, W.-Z.; Jia, J.-H.; Chou, K.-C. iAMP-2L: A Two-Level Multi-Label Classifier for Identifying Antimicrobial Peptides and Their Functional Types. Anal. Biochem. 2013, 436, 168–177. [Google Scholar] [CrossRef] [PubMed]

- Kurata, H.; Tsukiyama, S.; Manavalan, B. iACVP: Markedly Enhanced Identification of Anti-Coronavirus Peptides Using a Dataset-Specific Word2vec Model. Brief Bioinform. 2022, 23, bbac265. [Google Scholar] [CrossRef] [PubMed]

- Schaduangrat, N.; Nantasenamat, C.; Prachayasittikul, V.; Shoombuatong, W. Meta-iAVP: A Sequence-Based Meta-Predictor for Improving the Prediction of Antiviral Peptides Using Effective Feature Representation. Int. J. Mol. Sci. 2019, 20, 5743. [Google Scholar] [CrossRef] [PubMed]

- Chung, C.-R.; Kuo, T.-R.; Wu, L.-C.; Lee, T.-Y.; Horng, J.-T. Characterization and Identification of Antimicrobial Peptides with Different Functional Activities. Brief Bioinform. 2020, 21, 1098–1114. [Google Scholar] [CrossRef]

- Timmons, P.B.; Hewage, C.M. ENNAVIA Is a Novel Method Which Employs Neural Networks for Antiviral and Anti-Coronavirus Activity Prediction for Therapeutic Peptides. Brief Bioinform. 2021, 22, bbab258. [Google Scholar] [CrossRef]

- Zhang, S.; Li, X. Pep-CNN: An Improved Convolutional Neural Network for Predicting Therapeutic Peptides. Chemom. Intell. Lab. Syst. 2022, 221, 104490. [Google Scholar] [CrossRef]

- Lin, T.-T.; Sun, Y.-Y.; Wang, C.-T.; Cheng, W.-C.; Lu, I.-H.; Lin, C.-Y.; Chen, S.-H. AI4AVP: An Antiviral Peptides Predictor in Deep Learning Approach with Generative Adversarial Network Data Augmentation. Bioinform. Adv. 2022, 2, vbac080. [Google Scholar] [CrossRef] [PubMed]

- Wei, L.; Zhou, C.; Su, R.; Zou, Q. PEPred-Suite: Improved and Robust Prediction of Therapeutic Peptides Using Adaptive Feature Representation Learning. Bioinformatics 2019, 35, 4272–4280. [Google Scholar] [CrossRef] [PubMed]

- García-Jacas, C.R.; Pinacho-Castellanos, S.A.; García-González, L.A.; Brizuela, C.A. Do Deep Learning Models Make a Difference in the Identification of Antimicrobial Peptides? Brief Bioinform. 2022, 23, bbac094. [Google Scholar] [CrossRef] [PubMed]

- Yan, J.; Cai, J.; Zhang, B.; Wang, Y.; Wong, D.F.; Siu, S.W.I. Recent Progress in the Discovery and Design of Antimicrobial Peptides Using Traditional Machine Learning and Deep Learning. Antibiotics 2022, 11, 1451. [Google Scholar] [CrossRef] [PubMed]

- Basith, S.; Manavalan, B.; Hwan Shin, T.; Lee, G. Machine Intelligence in Peptide Therapeutics: A Next-generation Tool for Rapid Disease Screening. Med. Res. Rev. 2020, 40, 1276–1314. [Google Scholar] [CrossRef] [PubMed]

- Castillo-Mendieta, K.; Agüero-Chapin, G.; Santiago Vispo, N.; Márquez, E.A.; Perez-Castillo, Y.; Barigye, S.J.; Marrero-Ponce, Y. Peptide Hemolytic Activity Analysis using Visual Data Mining of Similarity-based Complex Networks. Preprints 2023, 2023030322. [Google Scholar] [CrossRef]

- Romero, M.; Marrero-Ponce, Y.; Rodríguez, H.; Agüero-Chapin, G.; Antunes, A.; Aguilera-Mendoza, L.; Martinez-Rios, F. A Novel Network Science and Similarity-Searching-Based Approach for Discovering Potential Tumor-Homing Peptides from Antimicrobials. Antibiotics 2022, 11, 401. [Google Scholar] [CrossRef] [PubMed]

- Ayala-Ruano, S.; Marrero-Ponce, Y.; Aguilera-Mendoza, L.; Pérez, N.; Agüero-Chapin, G.; Antunes, A.; Aguilar, A.C. Network Science and Group Fusion Similarity-Based Searching to Explore the Chemical Space of Antiparasitic Peptides. ACS Omega 2022, 7, 46012–46036. [Google Scholar] [CrossRef]

- Aguilera-Mendoza, L.; Ayala-Ruano, S.; Martinez-Rios, F.; Chavez, E.; García-Jacas, C.R.; Brizuela, C.A.; Marrero-Ponce, Y. StarPep Toolbox: An Open-Source Software to Assist Chemical Space Analysis of Bioactive Peptides and Their Functions Using Complex Networks. Bioinformatics 2023, 39, btad506. [Google Scholar] [CrossRef]

- De Llano García, D.; Rodríguez Cabrera, H.M.; Yachay, U.d.I.d.T.E. A Novel Network Science and Similarity-Searching-Based Approach for Discovering Potential Antiviral Peptides, Urcuquí. 2023. Available online: https://repositorio.yachaytech.edu.ec/handle/123456789/698 (accessed on 20 April 2024).

- Aguilera-Mendoza, L.; Marrero-Ponce, Y.; Beltran, J.A.; Tellez Ibarra, R.; Guillen-Ramirez, H.A.; Brizuela, C.A. Graph-Based Data Integration from Bioactive Peptide Databases of Pharmaceutical Interest: Toward an Organized Collection Enabling Visual Network Analysis. Bioinformatics 2019, 35, 4739–4747. [Google Scholar] [CrossRef]

- Aguilera-Mendoza, L.; Marrero-Ponce, Y.; García-Jacas, C.R.; Chavez, E.; Beltran, J.A.; Guillen-Ramirez, H.A.; Brizuela, C.A. Automatic Construction of Molecular Similarity Networks for Visual Graph Mining in Chemical Space of Bioactive Peptides: An Unsupervised Learning Approach. Sci. Rep. 2020, 10, 18074. [Google Scholar] [CrossRef] [PubMed]

- Marchiori, M.; Latora, V. Harmony in the Small-World. Phys. A Stat. Mech. Its Appl. 2000, 285, 539–546. [Google Scholar] [CrossRef]

- Ruan, Y.; Tang, J.; Hu, Y.; Wang, H.; Bai, L. Efficient Algorithm for the Identification of Node Significance in Complex Network. IEEE Access 2020, 8, 28947–28955. [Google Scholar] [CrossRef]

- Aguilera-Mendoza, L.; Marrero-Ponce, Y.; Tellez-Ibarra, R.; Llorente-Quesada, M.T.; Salgado, J.; Barigye, S.J.; Liu, J. Overlap and Diversity in Antimicrobial Peptide Databases: Compiling a Non-Redundant Set of Sequences. Bioinformatics 2015, 31, 2553–2559. [Google Scholar] [CrossRef] [PubMed][Green Version]

- Pinacho-Castellanos, S.A.; García-Jacas, C.R.; Gilson, M.K.; Brizuela, C.A. Alignment-Free Antimicrobial Peptide Predictors: Improving Performance by a Thorough Analysis of the Largest Available Data Set. J. Chem. Inf. Model. 2021, 61, 3141–3157. [Google Scholar] [CrossRef] [PubMed]

- Pang, Y.; Yao, L.; Jhong, J.-H.; Wang, Z.; Lee, T.-Y. AVPIden: A New Scheme for Identification and Functional Prediction of Antiviral Peptides Based on Machine Learning Approaches. Brief Bioinform. 2021, 22, bbab263. [Google Scholar] [CrossRef] [PubMed]

- Pang, Y.; Wang, Z.; Jhong, J.-H.; Lee, T.-Y. Identifying Anti-Coronavirus Peptides by Incorporating Different Negative Datasets and Imbalanced Learning Strategies. Brief Bioinform. 2021, 22, 1085–1095. [Google Scholar] [CrossRef] [PubMed]

- Sharma, R.; Shrivastava, S.; Singh, S.K.; Kumar, A.; Singh, A.K.; Saxena, S. Deep-AVPpred: Artificial Intelligence Driven Discovery of Peptide Drugs for Viral Infections. IEEE J. Biomed. Health Inform. 2022, 26, 5067–5074. [Google Scholar] [CrossRef] [PubMed]

- Qureshi, A.; Thakur, N.; Kumar, M. HIPdb: A Database of Experimentally Validated HIV Inhibiting Peptides. PLoS ONE 2013, 8, e54908. [Google Scholar] [CrossRef]

- Chang, K.Y.; Yang, J.-R. Analysis and Prediction of Highly Effective Antiviral Peptides Based on Random Forests. PLoS ONE 2013, 8, e70166. [Google Scholar] [CrossRef]

- Alcalá-Fdez, J.; Sánchez, L.; García, S.; Del Jesus, M.J.; Ventura, S.; Garrell, J.M.; Otero, J.; Romero, C.; Bacardit, J.; Rivas, V.M.; et al. KEEL: A Software Tool to Assess Evolutionary Algorithms for Data Mining Problems. Soft Comput. 2009, 13, 307–318. [Google Scholar] [CrossRef]

- Otović, E.; Njirjak, M.; Kalafatovic, D.; Mauša, G. Sequential Properties Representation Scheme for Recurrent Neural Network-Based Prediction of Therapeutic Peptides. J. Chem. Inf. Model. 2022, 62, 2961–2972. [Google Scholar] [CrossRef] [PubMed]

- Chowdhury, A.S.; Reehl, S.M.; Kehn-Hall, K.; Bishop, B.; Webb-Robertson, B.-J.M. Better Understanding and Prediction of Antiviral Peptides through Primary and Secondary Structure Feature Importance. Sci. Rep. 2020, 10, 19260. [Google Scholar] [CrossRef] [PubMed]

- Beltrán Lissabet, J.F.; Belén, L.H.; Farias, J.G. AntiVPP 1.0: A Portable Tool for Prediction of Antiviral Peptides. Comput. Biol. Med. 2019, 107, 127–130. [Google Scholar] [CrossRef] [PubMed]

- Diakou, I.; Papakonstantinou, E.; Papageorgiou, L.; Pierouli, K.; Dragoumani, K.; Spandidos, D.; Bacopoulou, F.; Chrousos, G.; Eliopoulos, E.; Vlachakis, D. Novel Computational Pipelines in Antiviral Structure-based Drug Design (Review). Biomed. Rep. 2022, 17, 97. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).