A Novel Anomaly Detection Method for Strip Steel Based on Multi-Scale Knowledge Distillation and Feature Information Banks Network

Abstract

1. Introduction

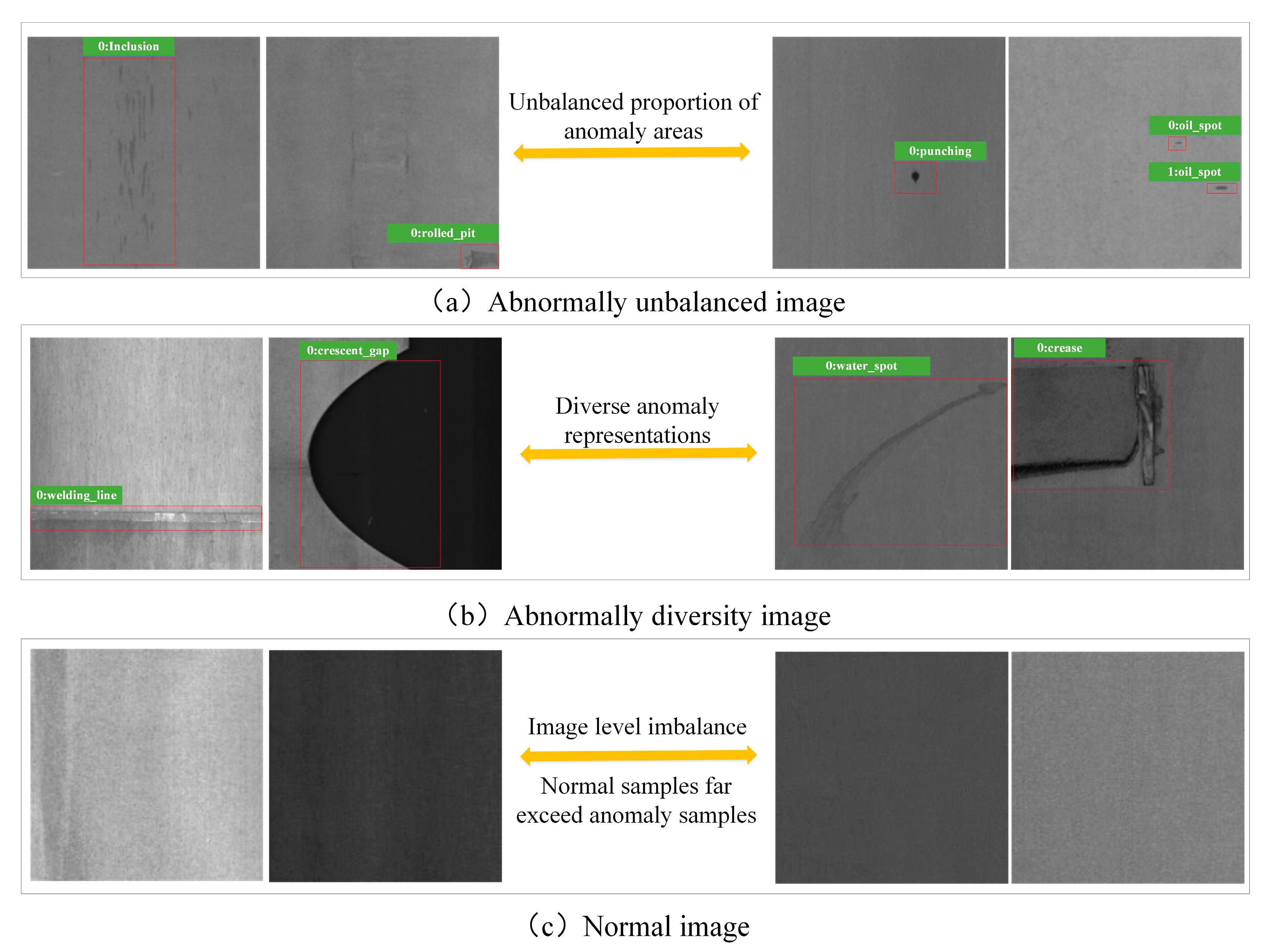

- Scarcity of abnormal data: Accurately labeled abnormal samples are rare in industrial data. Pixel-level annotation requires skilled workers to outline each defect by hand, which is costly, whereas natural images are comparatively cheap to label.

- Imbalance of abnormal data: (a) At the image level, normal industrial images far outnumber abnormal ones, owing to differing production and processing conditions. (b) At the pixel level, the abnormal region occupies only a small part of the whole surface image, so normal and abnormal samples look very similar.

- Diversity of anomaly characterization: Industrial image anomalies vary widely in size, shape, location and texture. From a statistical point of view, the data distributions of these anomalies are inconsistent. Quality control and surface defect localization therefore remain challenging.

- (1) This paper proposes a novel unsupervised anomaly detection method based on distillation learning. The method uses a strong pre-trained network to learn the distribution of normal images and detects anomalies from the difference between how the teacher and student models process an image. It can therefore identify abnormal data of previously unknown types.

- (2) A new dataset, SSAD-FSL (strip steel anomaly detection for few-shot learning), is established. It contains 3000 grayscale images at a resolution of 224 × 224, with image-level and pixel-level labels for surface defects of cold-rolled and hot-rolled strip steel.

- (3) During testing, the anomaly score is computed as the distance between the test sample and its nearest neighbor in the feature banks, which allows defects to be both detected and localized. The experimental results show that the proposed anomaly detection algorithm effectively screens out defect samples and performs better than other similar models.
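The nearest-neighbor scoring described in the last contribution can be illustrated with a minimal sketch. It is not the authors' implementation: the Euclidean metric, the 2-D toy features, the use of the maximum patch score as the image-level score, and the names `nearest_distance` and `anomaly_scores` are all assumptions made for illustration.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def nearest_distance(query: Vector, bank: Sequence[Vector]) -> float:
    """Euclidean distance from `query` to its nearest neighbor in `bank`."""
    if not bank:
        raise ValueError("feature bank must not be empty")
    return min(math.dist(query, item) for item in bank)


def anomaly_scores(
    patches: Sequence[Vector], bank: Sequence[Vector]
) -> Tuple[float, List[float]]:
    """Return (image-level score, per-patch scores).

    Per-patch scores could be arranged into a map for localization.
    Taking the maximum as the image-level score is an assumption here.
    """
    patch_scores = [nearest_distance(p, bank) for p in patches]
    return max(patch_scores), patch_scores


# Hypothetical feature bank built from normal samples (toy 2-D features).
bank = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
# Two patch features from a test image: one inside the bank, one far away.
patches = [(0.0, 0.0), (4.0, 4.0)]

image_score, patch_scores = anomaly_scores(patches, bank)
print(image_score, patch_scores)
```

A patch whose feature lies close to the stored normal features receives a score near zero, while a patch far from every stored feature receives a large score and would be flagged as a candidate defect location.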

2. Related Works

2.1. Anomaly Detection Method Based on Distance Measure

2.2. Anomaly Detection Method Based on Constructing Classification Surface

2.3. Anomaly Detection Method Based on Image Reconstruction

3. Methodology

3.1. Methodology Overview

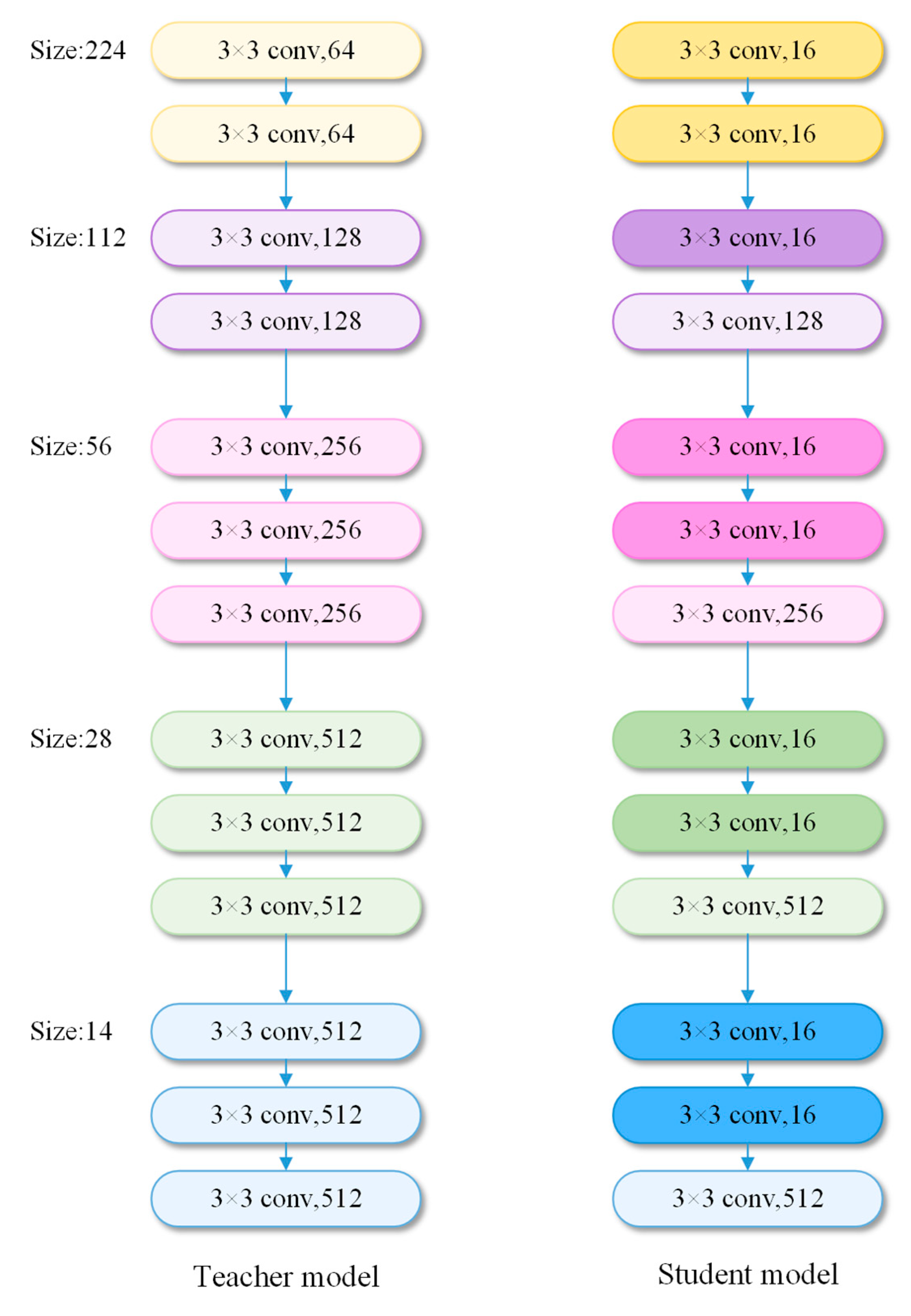

3.2. Ms-KD Module

3.3. BDCI Module

3.3.1. Block Domain Feature Selection

3.3.2. Core Repository

Algorithm 1: The flow of building a block domain core information bank.

3.4. Loss Design

3.5. Training Configurations

3.6. Test Process and Abnormal Scores

4. Experiments

4.1. Dataset Construction

4.2. Hyperparameter Setting

4.3. Evaluation Metrics

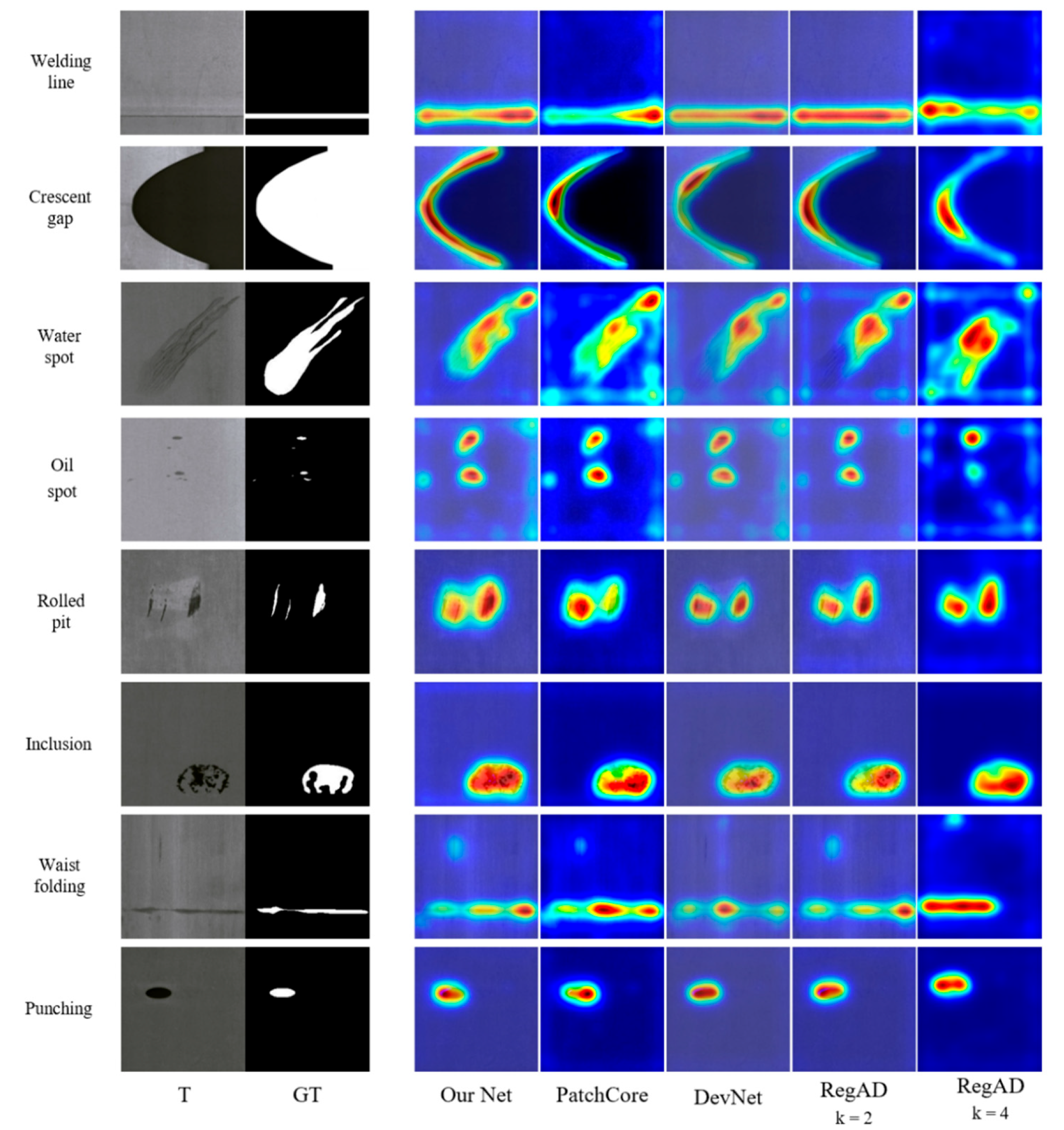

4.4. Comparison with the State-of-the-Art Methods

4.5. Ablation Study

4.6. Other Experiments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- He, Y.; Wen, X.; Xu, J. A Semi-Supervised Inspection Approach of Textured Surface Defects under Limited Labeled Samples. Coatings 2022, 12, 1707.

- Wen, X.; Shan, J.; He, Y.; Song, K. Steel Surface Defect Recognition: A Survey. Coatings 2023, 13, 17.

- Wan, C.; Ma, S.; Song, K. TSSTNet: A Two-Stream Swin Transformer Network for Salient Object Detection of No-Service Rail Surface Defects. Coatings 2022, 12, 1730.

- Wang, Y.; Gao, L.; Gao, Y.; Li, X. A new graph-based semi-supervised method for surface defect classification. Robot. Comput. Integr. Manuf. 2021, 68, 102083.

- Wang, Y.; Song, K.; Liu, J.; Dong, H.; Jiang, P. RENet: Rectangular convolution pyramid and edge enhancement network for salient object detection of pavement cracks. Measurement 2020, 170, 108698.

- Gao, J.; Yuan, D.; Tong, Z.; Yang, J.; Yu, D. Autonomous pavement distress detection using ground penetrating radar and region-based deep learning. Measurement 2020, 164, 108077.

- Li, Y.; Wang, H.; Dang, L.M.; Piran, M.; Moon, H. A robust instance segmentation framework for underground sewer defect detection. Measurement 2022, 190, 110727.

- Xu, Y.; Li, D.; Xie, Q.; Wu, Q.; Wang, J. Automatic defect detection and segmentation of tunnel surface using modified Mask R-CNN. Measurement 2021, 178, 109316.

- Shu, Y.; Li, B.; Li, X.; Xiong, C.; Cao, S.; Wen, X. Deep learning-based fast recognition of commutator surface defects. Measurement 2021, 178, 109324.

- Yang, J.; Yang, G. Modified convolutional neural network based on dropout and the stochastic gradient descent optimizer. Algorithms 2018, 11, 28.

- Park, J.; Yi, D.; Ji, S. Analysis of recurrent neural network and predictions. Symmetry 2020, 12, 615.

- Creswell, A.; White, T.; Dumoulin, V.; Arulkumaran, K.; Sengupta, B.; Bharath, A.A. Generative adversarial networks: An overview. IEEE Signal Process. Mag. 2018, 35, 53–65.

- Saberironaghi, A.; Ren, J.; El-Gindy, M. Defect detection methods for industrial products using deep learning techniques: A review. Algorithms 2023, 16, 95.

- Elhanashi, A.; Lowe, D.; Saponara, S.; Moshfeghi, Y. Deep Learning Techniques to Identify and Classify COVID-19 Abnormalities on Chest X-ray Images. In Real-Time Image Processing and Deep Learning 2022; SPIE The International Society for Optical Engineering: Bellingham, WA, USA, 2022; Volume 12102, pp. 15–24.

- Reed, I.S.; Yu, X. Adaptive multiple-band CFAR detection of an optical pattern with unknown spectral distribution. IEEE Trans. Acoust. Speech Signal Process. 1990, 38, 1760–1770.

- Ester, M.; Kriegel, H.-P.; Sander, J.; Xu, X.W. A Density-Based Algorithm for Discovering Clusters in Large Spatial Databases with Noise. In Proceedings of the Second International Conference on Knowledge Discovery and Data Mining (KDD-96), Portland, OR, USA, 2–4 August 1996; AAAI Press: Washington, DC, USA, 1996; pp. 226–231.

- Ruff, L.; Vandermeulen, R.; Goernitz, N.; Deecke, L.; Siddiqui, S.; Binder, A.; Muller, E.; Kloft, M. Deep One-Class Classification. In Proceedings of the 35th International Conference on Machine Learning PMLR, Stockholm, Sweden, 10–15 July 2018; pp. 4390–4399.

- Gao, Y.; Xu, J.; Zhu, X. A novel anomaly detection method based on improved k-means clustering algorithm. J. Ambient. Intell. Humaniz. Comput. 2020, 11, 4223–4233.

- Oza, P.; Patel, V.M. One-class convolutional neural network. IEEE Signal Process. Lett. 2019, 26, 277–281.

- Hendrycks, D.; Mazeika, M.; Dietterich, T.G. Deep Anomaly Detection with Outlier Exposure. In Proceedings of the 7th International Conference on Learning Representations (ICLR), New Orleans, LA, USA, 6–9 May 2019.

- Goyal, S.; Raghunathan, A.; Jain, M.; Simhadri, H.; Jain, P. DROCC: Deep Robust One-Class Classification. In Proceedings of the 37th International Conference on Machine Learning (PMLR), Virtual, 13–18 July 2020; pp. 3711–3721.

- Wang, J.Z.; Li, Q.Y.; Gan, J.R.; Yu, H.M.; Yang, X. Surface defect detection via entity sparsity pursuit with intrinsic priors. IEEE Trans. Ind. Inform. 2020, 16, 141–150.

- Gong, D.; Liu, L.; Le, V.; Saha, B.; Mansour, M.; Venkatesh, S.; Hengel, A. Memorizing Normality to Detect Anomaly: Memory-Augmented Deep Autoencoder for Unsupervised Anomaly Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; IEEE Publishing: New York, NY, USA, 2019; pp. 1705–1714.

- Akcay, S.; Atapour-Abarghouei, A.; Breckon, T.P. Ganomaly: Semi-Supervised Anomaly Detection via Adversarial Training. In Proceedings of the Asian Conference on Computer Vision, Perth, Australia, 2–6 December 2018; Springer: Cham, Switzerland, 2018; pp. 622–637.

- Schlegl, T.; Seebock, P.; Waldstein, S.M.; Schmidt-Erfurth, U.; Langs, G. Unsupervised Anomaly Detection with Generative Adversarial Networks to Guide Marker Discovery. In Proceedings of the International Conference on Information Processing in Medical Imaging, Boone, NC, USA, 25–30 June 2017; Springer: Cham, Switzerland, 2017; pp. 146–157.

- Perera, P.; Nallapati, R.; Xiang, B. Ocgan: One-Class Novelty Detection Using Gans with Constrained Latent Representations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 2898–2906.

- Feng, H.; Song, K.; Cui, W.; Zhang, Y.; Yan, Y. Cross Position Aggregation Network for Few-shot Strip Steel Surface Defect Segmentation. IEEE Trans. Instrum. Meas. 2023, 72, 5007410.

- Xiong, L.; Póczos, B.; Schneider, J. Group anomaly detection using flexible genre models. Adv. Neural Inf. Process. Syst. 2011, 24, 1071–1079.

- Wolsey, L.; Nemhauser, G. Integer and Combinatorial Optimization; Wiley: Hoboken, NJ, USA, 2014.

- Dasgupta, S.; Gupta, A. An elementary proof of a theorem of Johnson and Lindenstrauss. Random Struct. Algorithms 2003, 22, 60–65.

- Song, K.; Yan, Y. A noise robust method based on completed local binary patterns for hot-rolled steel strip surface defects. Appl. Surf. Sci. 2013, 285, 858–864.

- Feng, X.; Gao, X.; Luo, L. X-SDD: A new benchmark for hot rolled steel strip surface defects detection. Symmetry 2021, 13, 706.

- Lv, X.; Duan, F.; Jiang, J.J.; Fu, X.; Gan, L. Deep metallic surface defect detection: The new benchmark and detection network. Sensors 2020, 20, 1562.

- Roth, K.; Pemula, L.; Zepeda, J.; Scholkopf, B.; Brox, T.; Gehler, P. Towards Total Recall in Industrial Anomaly Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 14318–14328.

- Pang, G.; Shen, C.; van den Hengel, A. Deep Anomaly Detection with Deviation Networks. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 353–362.

- Huang, C.; Guan, H.; Jiang, A.; Zhang, Y.; Spratling, M.; Wang, Y.-F. Registration Based Few-Shot Anomaly Detection. In Computer Vision–ECCV 2022, Proceedings of the 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Springer Nature: Cham, Switzerland, 2022; pp. 303–319.

- Sheynin, S.; Benaim, S.; Wolf, L. A Hierarchical Transformation-Discriminating Generative Model for Few Shot Anomaly Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 8495–8504.

| Category | Model | Ref. | Advantages | Disadvantages |
|---|---|---|---|---|
| Anomaly detection method based on distance measure | One-class convolutional neural network | [17] | Support vector machine is applied to surface defect detection tasks | Methods are not effective for complex datasets and are prone to model degradation |
| Anomaly detection method based on distance measure | Deep anomaly detection with outlier exposure | [18] | Support vector machine is applied to surface defect detection tasks | Methods are not effective for complex datasets and are prone to model degradation |
| Anomaly detection method based on constructing classification surface | Surface defect detection via entity sparsity pursuit with intrinsic priors | [20] | Flexibly handle complex data distribution | Complex |
| Anomaly detection method based on image reconstruction | Memorizing normality to detect anomaly | [21] | Improving the performance of classification and clustering | Poor performance in some real datasets |
| Anomaly detection method based on image reconstruction | Unsupervised anomaly detection with generative adversarial networks to guide marker discovery | [23] | The reconstructed image has integrity | The dimension of the hidden variable relative to the dimension of the generated image will cause its effect to change |
| Anomaly detection method based on image reconstruction | One-class novelty detection using GANs with constrained latent representations | [24] | Uniform vector distribution | The robustness of this model is not good |

Image-level AUROC by defect type:

| Defect Type | PatchCore | DevNet | RegAD (Batch Size 32, k = 2) | RegAD (Batch Size 16, k = 4) | HTDG (Without Norm) | HTDG (With T1 Norm) | OurNet |
|---|---|---|---|---|---|---|---|
| Welding line | 0.9853 | 0.9858 | 0.8126 | 0.8754 | 0.8753 | 0.8847 | 0.9753 |
| Crescent gap | 1 | 1 | 1 | 1 | 0.8930 | 0.8929 | 1 |
| Water spot | 1 | 0.9655 | 0.8514 | 0.8848 | 0.8012 | 0.8012 | 1 |
| Oil spot | 0.9406 | 0.9495 | 0.9267 | 0.9619 | 0.8388 | 0.8400 | 0.9506 |
| Rolled pit | 0.9751 | 0.9460 | 0.9244 | 0.9519 | 0.8376 | 0.8365 | 0.9741 |
| Inclusion | 1 | 1 | 0.9482 | 0.9804 | 0.7812 | 0.7812 | 1 |
| Waist folding | 0.9465 | 0.9893 | 0.9173 | 0.9535 | 0.9505 | 0.9506 | 0.9941 |
| Punching | 1 | 1 | 1 | 1 | 0.9787 | 0.9786 | 1 |
| Average | 0.9809 | 0.9795 | 0.9226 | 0.9510 | 0.8695 | 0.8707 | 0.9868 |

Pixel-level AUROC by defect type:

| Defect Type | PatchCore | DevNet | RegAD (Batch Size 32, k = 2) | RegAD (Batch Size 16, k = 4) | HTDG | OurNet |
|---|---|---|---|---|---|---|
| Welding line | 0.8290 | 0.8030 | 0.6833 | 0.7435 | \ | 0.9195 |
| Crescent gap | 0.9193 | 0.5912 | 0.9930 | 0.9876 | \ | 0.9585 |
| Water spot | 0.7551 | 0.6511 | 0.6664 | 0.6892 | \ | 0.7419 |
| Oil spot | 0.8734 | 0.7656 | 0.7525 | 0.7554 | \ | 0.7791 |
| Rolled pit | 0.9019 | 0.8601 | 0.7267 | 0.7964 | \ | 0.8822 |
| Inclusion | 0.9069 | 0.9295 | 0.7495 | 0.7058 | \ | 0.9478 |
| Waist folding | 0.8483 | 0.6504 | 0.7375 | 0.7661 | \ | 0.9172 |
| Punching | 0.9956 | 0.9210 | 0.7357 | 0.7795 | \ | 0.9932 |
| Average | 0.8787 | 0.7715 | 0.7556 | 0.7779 | \ | 0.8924 |

PRO by defect type:

| Defect Type | PatchCore | DevNet | RegAD (Batch Size 32, k = 2) | RegAD (Batch Size 16, k = 4) | HTDG | OurNet |
|---|---|---|---|---|---|---|
| Welding line | 0.9212 | 0.9746 | 0.9199 | 0.9497 | \ | 0.9764 |
| Crescent gap | 1 | 1 | 1 | 1 | \ | 1 |
| Water spot | 0.9678 | 0.9937 | 0.9107 | 0.9406 | \ | 0.9902 |
| Oil spot | 0.9758 | 0.9683 | 0.9715 | 0.9838 | \ | 0.9673 |
| Rolled pit | 0.9847 | 0.9789 | 0.9632 | 0.9767 | \ | 0.9951 |
| Inclusion | 1 | 1 | 0.9880 | 0.9919 | \ | 1 |
| Waist folding | 0.9798 | 0.9977 | 0.9660 | 0.9812 | \ | 0.9874 |
| Punching | 1 | 1 | 1 | 1 | \ | 1 |
| Average | 0.9787 | 0.9892 | 0.9649 | 0.9780 | \ | 0.9896 |

| Ms-KD | BDCI | Img-AUROC | Pixel-AUROC | PRO |
|---|---|---|---|---|
| ✓ | | 0.9587 | 0.8767 | 0.9654 |
| | ✓ | 0.9665 | 0.8842 | 0.9733 |
| ✓ | ✓ | 0.9868 | 0.8924 | 0.9896 |

| Type | Welding Line | Crescent Gap | Water Spot | Oil Spot | Rolled Pit | Inclusion | Waist Folding | Punching | Average |
|---|---|---|---|---|---|---|---|---|---|
| $L_{\mathrm{MID}}$ (first three layers) | 0.9453 | 0.9600 | 0.9535 | 0.9434 | 0.9412 | 0.9500 | 0.9621 | 0.9574 | 0.9516 |
| $L_{\mathrm{MID}}$ (last three layers) | 0.9465 | 0.9800 | 0.9738 | 0.9258 | 0.9600 | 0.9467 | 0.9870 | 0.9628 | 0.9603 |
| $L_{\mathrm{last\ layer}}$ | 0.9589 | 0.9841 | 0.9924 | 0.9558 | 0.9587 | 0.9600 | 0.9697 | 0.9710 | 0.9688 |
| $L_{\mathrm{ALL}}$ | 0.9753 | 1.0000 | 1.0000 | 0.9506 | 0.9741 | 1.0000 | 0.9941 | 1.0000 | 0.9868 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wen, X.; Zhao, W.; Yu, Z.; Zhao, J.; Song, K. A Novel Anomaly Detection Method for Strip Steel Based on Multi-Scale Knowledge Distillation and Feature Information Banks Network. Coatings 2023, 13, 1171. https://doi.org/10.3390/coatings13071171
