Automated Crack Detection in 2D Hexagonal Boron Nitride Coatings Using Machine Learning

Abstract

1. Introduction

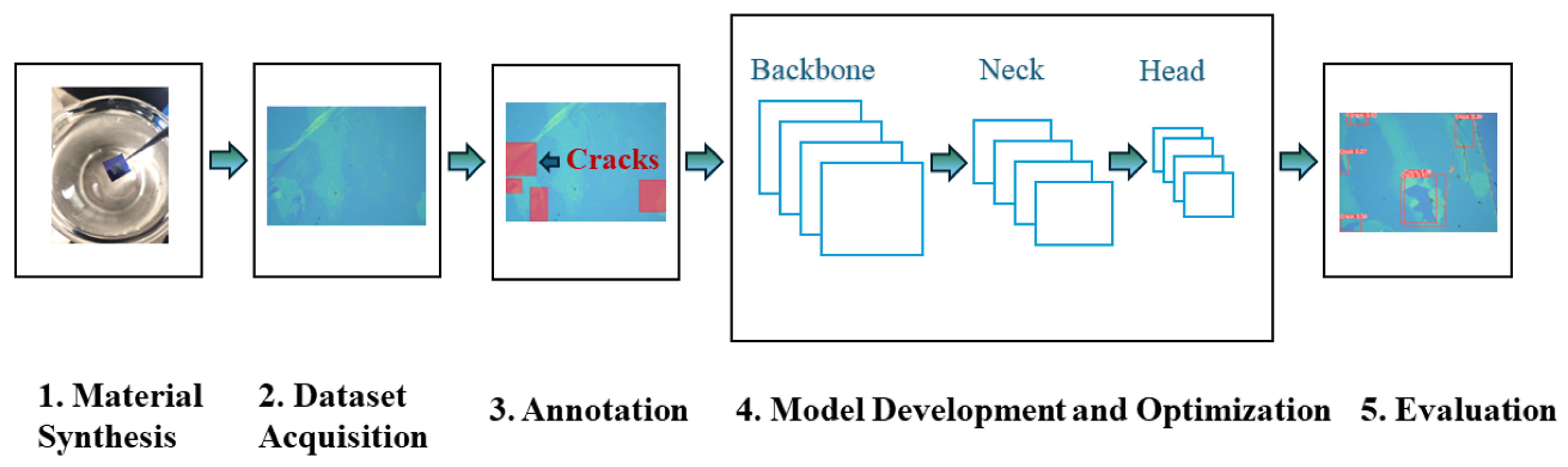

2. Materials and Methods

2.1. Material Synthesis

2.2. Dataset Acquisition

2.3. Annotation

- Training Set (92 images): The core dataset used to train the machine learning model.

- Validation Set (24 images): Used to optimize model hyperparameters.

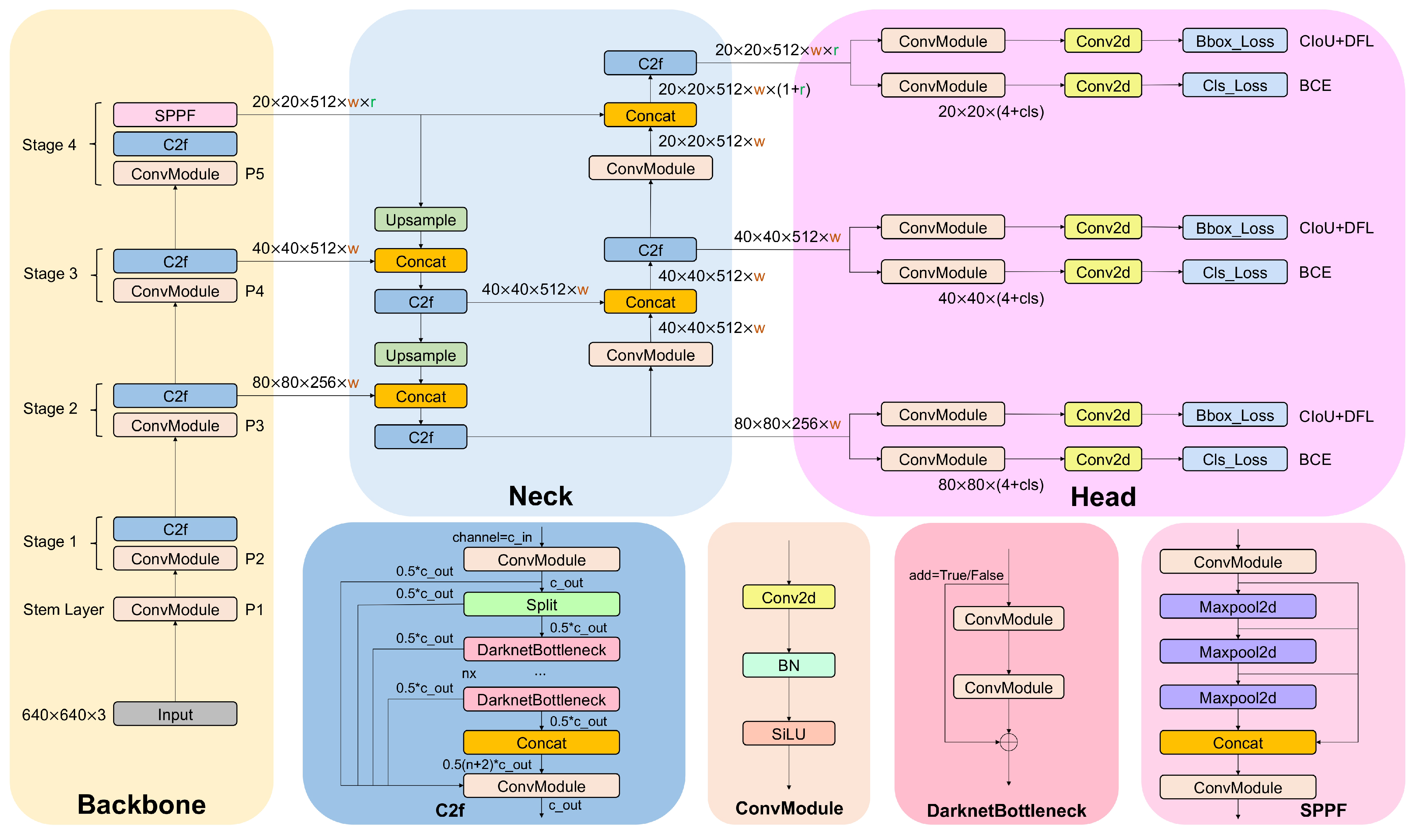

2.4. Model Development and Optimization

2.4.1. Backbone

2.4.2. Neck

2.4.3. Head

2.4.4. Loss

2.4.5. Data Augmentation and Model Optimization

- (a) HSV (Hue, Saturation, Value) Augmentation: This method adjusts the color properties of images to simulate a wider range of lighting conditions and object appearances. The transformations can be represented mathematically as

  $$H' = H + \Delta H, \qquad S' = S + \Delta S, \qquad V' = V + \Delta V$$

  where $H$, $S$, and $V$ are the original hue, saturation, and value components of the image pixels, respectively; $H'$, $S'$, and $V'$ are the augmented components; and $\Delta H$, $\Delta S$, and $\Delta V$ represent small, random perturbations applied to each channel.

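- (b) Translation: This technique shifts the image by a certain number of pixels horizontally and vertically, introducing variability in object positioning within the frame. The translation operation can be described by the transformation matrix

  $$\begin{bmatrix} x' \\ y' \\ 1 \end{bmatrix} = \begin{bmatrix} 1 & 0 & t_x \\ 0 & 1 & t_y \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} x \\ y \\ 1 \end{bmatrix}$$

  where $t_x$ and $t_y$ denote the horizontal and vertical displacements, respectively.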

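- (c) Scaling: Scaling alters the size of the image, simulating objects at different distances from the viewer. This operation can be represented mathematically by the scaling matrix

  $$\begin{bmatrix} x' \\ y' \\ 1 \end{bmatrix} = \begin{bmatrix} s_x & 0 & 0 \\ 0 & s_y & 0 \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} x \\ y \\ 1 \end{bmatrix}$$

  where $s_x$ and $s_y$ are the scaling factors for the width and height of the image, respectively.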

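- (d) Flipping Operations: Flipping operations mirror the image horizontally, vertically, or both to simulate different orientations of objects. The flipping transformation can be represented as a reflection matrix; for horizontal flipping, for example,

  $$\begin{bmatrix} x' \\ y' \\ 1 \end{bmatrix} = \begin{bmatrix} -1 & 0 & W \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} x \\ y \\ 1 \end{bmatrix}$$

  where $W$ is the width of the image. The offset $W$ gives $x' = W - x$, so the flipped image remains within the original dimensions.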
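The augmentations listed above can be sketched with NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' training pipeline. HSV channels are assumed to be floats scaled to [0, 1], the perturbation bounds are hypothetical, and the geometric transforms are written as 3x3 homogeneous matrices applied to the corners of a crack bounding box given as (x1, y1, x2, y2). All function names are hypothetical.

```python
import numpy as np


def hsv_jitter(hsv, rng, max_dh=0.015, max_ds=0.1, max_dv=0.1):
    """Add small random offsets dH, dS, dV to an HSV image with channels in [0, 1].

    The bounds max_dh, max_ds, max_dv are hypothetical, not values from the paper.
    """
    dh, ds, dv = rng.uniform(-1.0, 1.0, size=3) * np.array([max_dh, max_ds, max_dv])
    out = np.asarray(hsv, dtype=float).copy()
    out[..., 0] = (out[..., 0] + dh) % 1.0             # hue is circular: wrap around
    out[..., 1] = np.clip(out[..., 1] + ds, 0.0, 1.0)  # keep saturation in range
    out[..., 2] = np.clip(out[..., 2] + dv, 0.0, 1.0)  # keep value in range
    return out


def translation_matrix(tx, ty):
    """Shift by tx pixels horizontally and ty pixels vertically."""
    return np.array([[1.0, 0.0, tx],
                     [0.0, 1.0, ty],
                     [0.0, 0.0, 1.0]])


def scaling_matrix(sx, sy):
    """Scale width by sx and height by sy (about the origin)."""
    return np.array([[sx, 0.0, 0.0],
                     [0.0, sy, 0.0],
                     [0.0, 0.0, 1.0]])


def hflip_matrix(width):
    """Mirror horizontally: x' = width - x, y' = y."""
    return np.array([[-1.0, 0.0, width],
                     [0.0, 1.0, 0.0],
                     [0.0, 0.0, 1.0]])


def transform_points(matrix, points):
    """Apply a 3x3 homogeneous transform to an (N, 2) array of (x, y) points."""
    homog = np.hstack([points, np.ones((len(points), 1))])
    return (homog @ matrix.T)[:, :2]


def transform_box(matrix, box):
    """Map an axis-aligned box (x1, y1, x2, y2) and re-fit it to the moved corners."""
    x1, y1, x2, y2 = box
    corners = np.array([[x1, y1], [x2, y1], [x2, y2], [x1, y2]], dtype=float)
    mapped = transform_points(matrix, corners)
    return (*mapped.min(axis=0), *mapped.max(axis=0))
```

Because each geometric transform is a matrix, a chain such as scale-then-translate is a single product, `translation_matrix(10, -5) @ scaling_matrix(0.5, 0.5)`, and the box is re-fitted once from its transformed corners. Re-fitting with min/max also handles the horizontal flip, which swaps the left and right edges of the box.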

2.5. Evaluation

3. Results

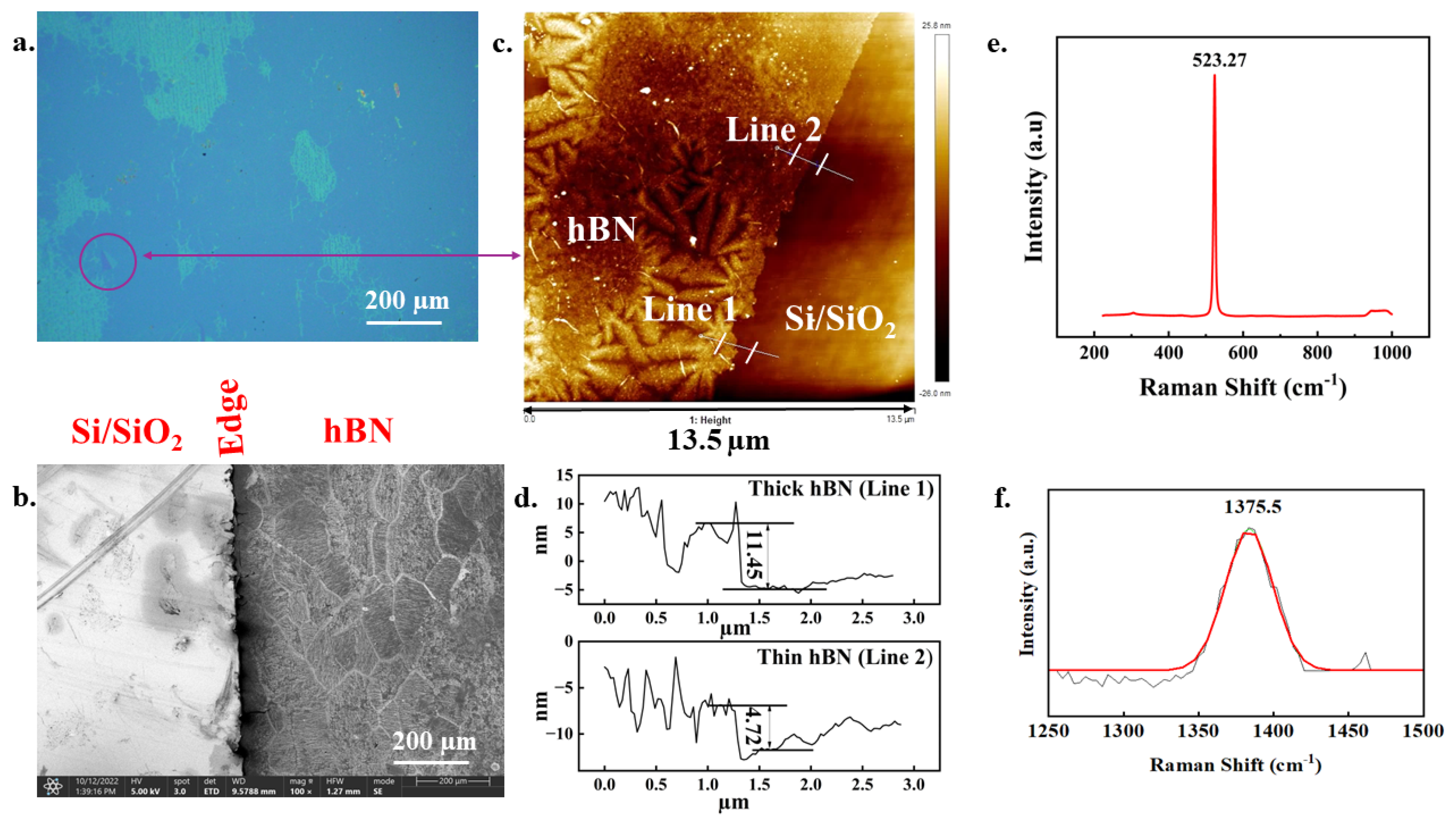

3.1. hBN Film Characterization

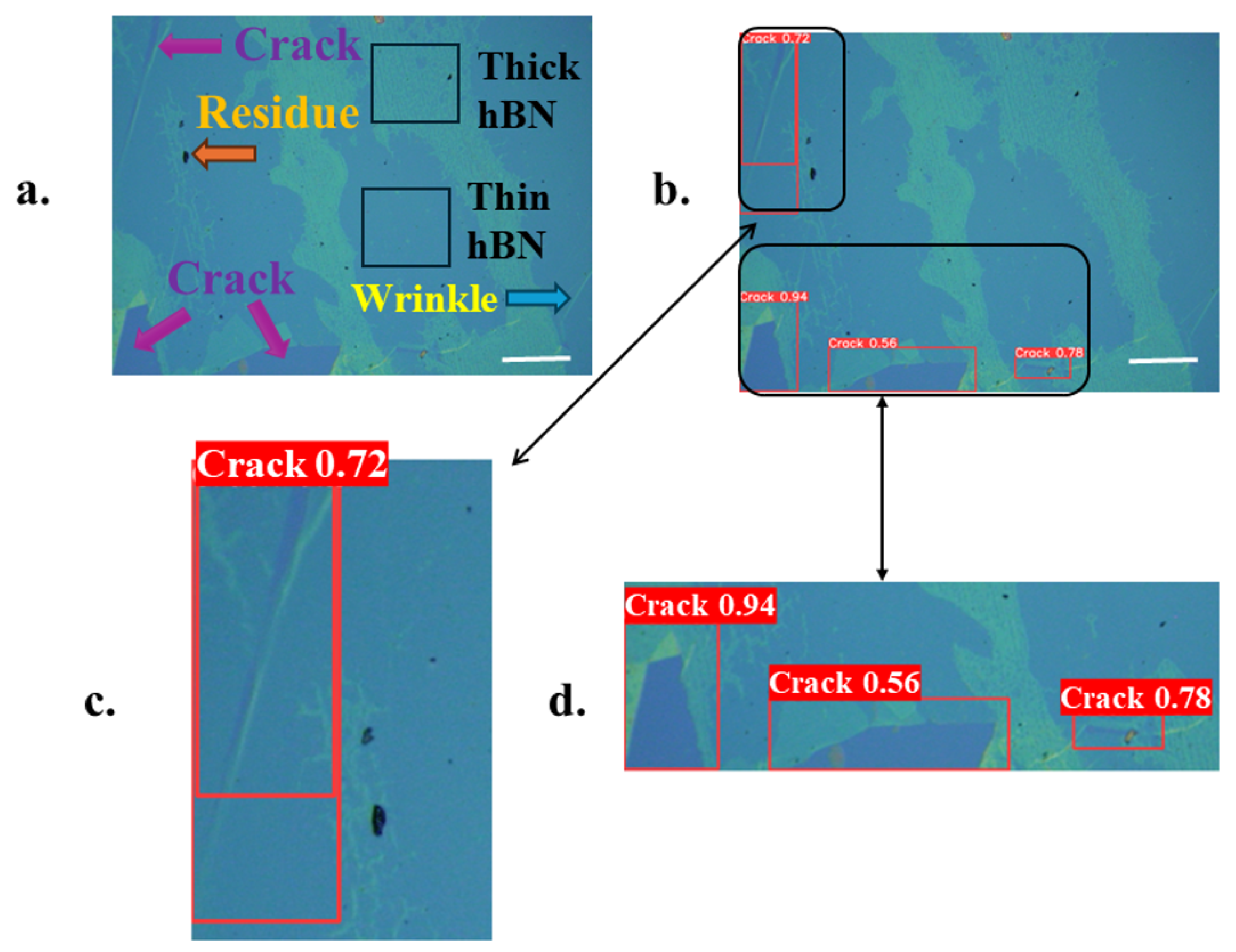

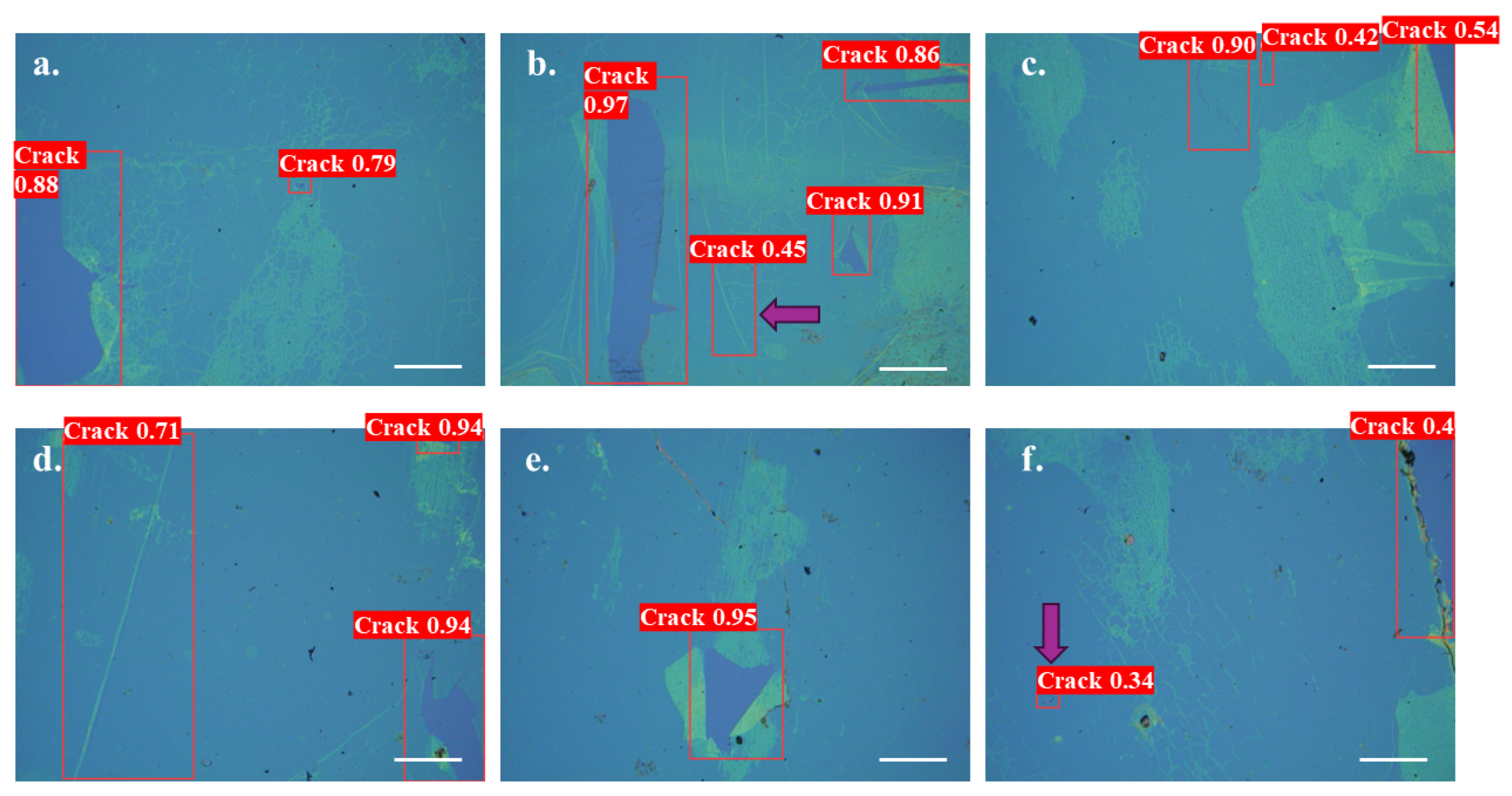

3.2. Visualizing Model Performance: Qualitative Analysis of Crack Detection

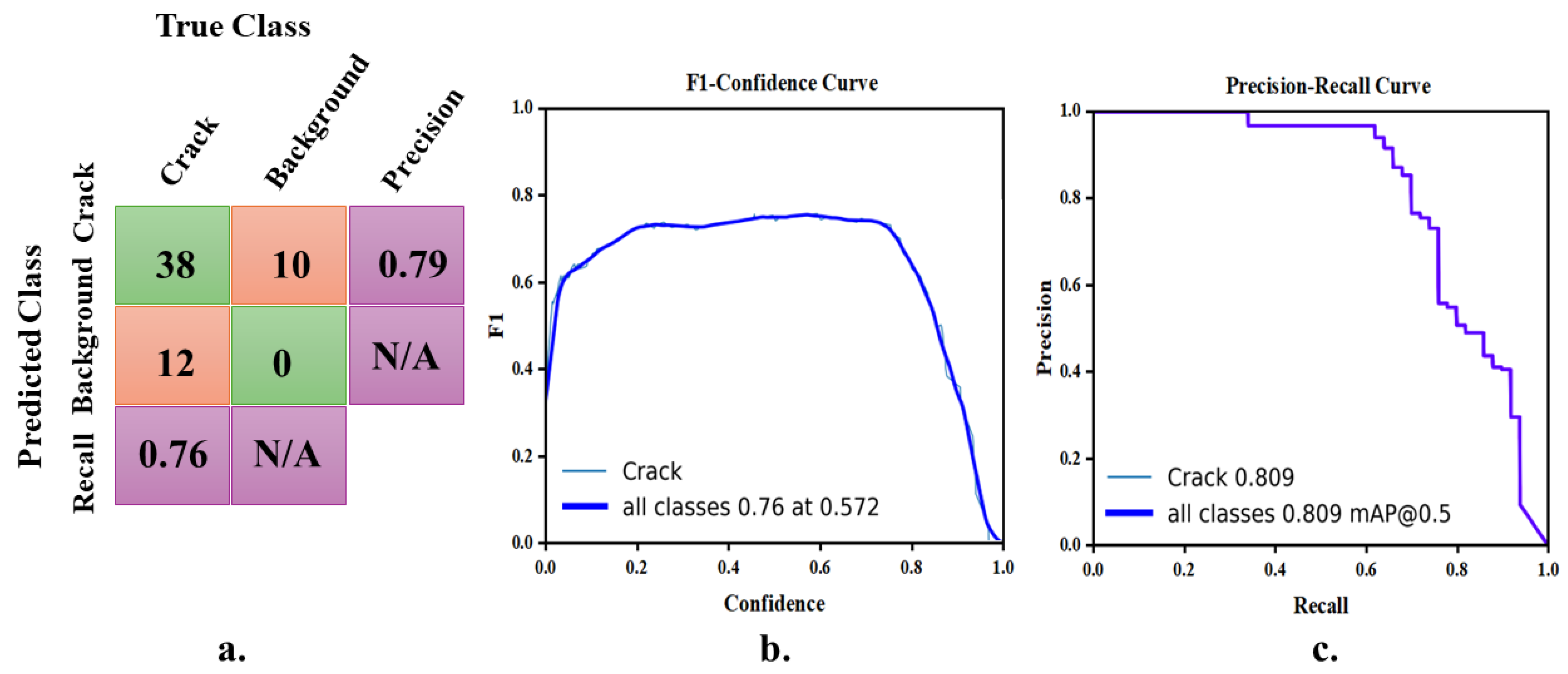

3.3. Quantitative Analysis of Model Performance: Errors and Metrics

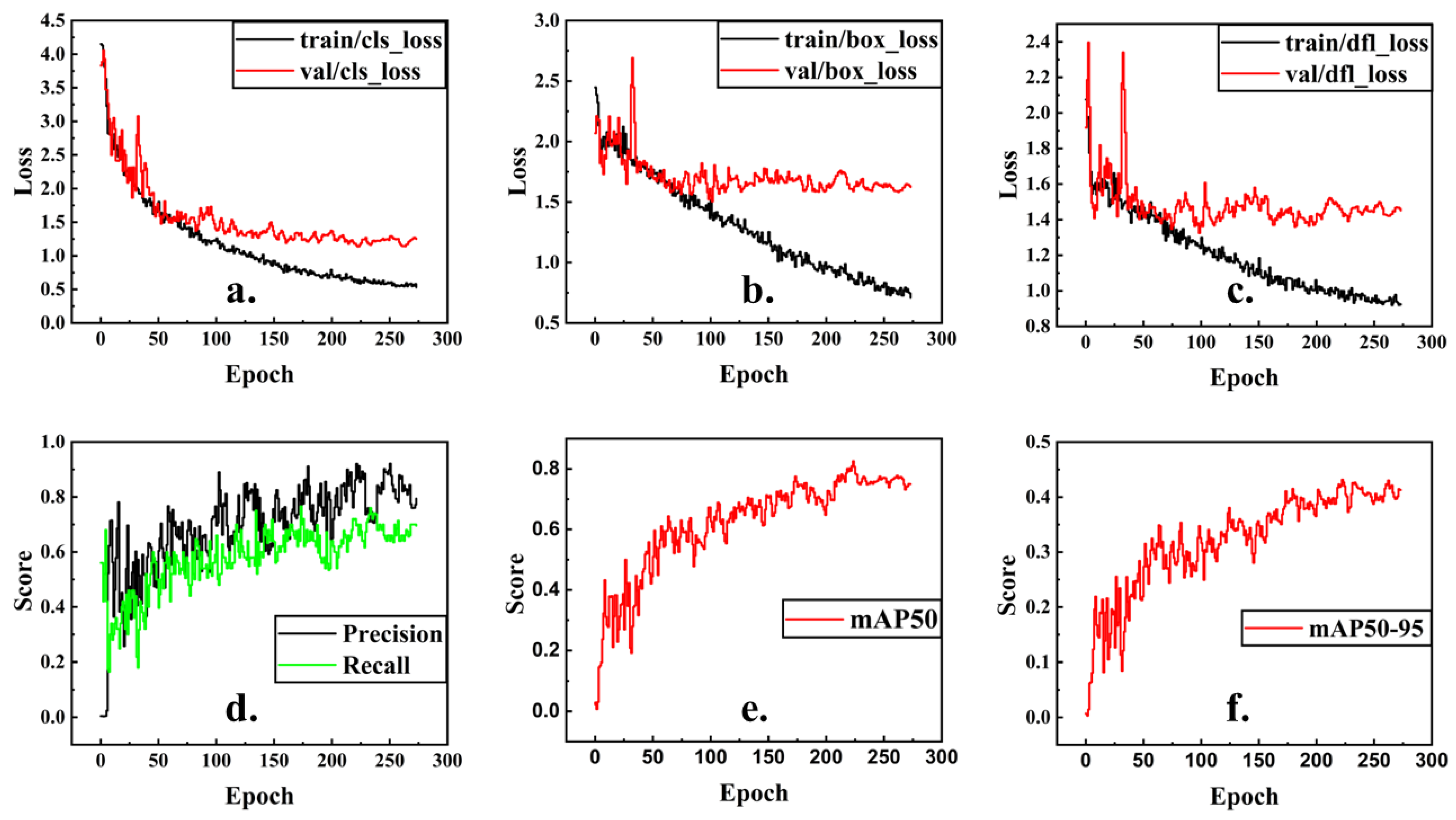

3.4. Understanding Model Training Dynamics: Loss Curve Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameter | Value |
|---|---|
| Initial learning rate (lr0) | 0.01 |
| Final learning rate factor (lrf, fraction of lr0) | 0.01 |
| Adam beta1 (momentum) | 0.937 |
| Optimizer weight decay (weight_decay) | 0.0005 |
| Warmup epochs (warmup_epochs) | 3.0 |
| Warmup initial momentum | 0.8 |
| Warmup initial bias learning rate | 0.1 |
| Automatic Mixed Precision (AMP) training | True |
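To make the learning-rate and warmup entries in the table concrete, the sketch below computes the weight learning rate, bias learning rate, and momentum at a given (fractional) epoch. It assumes a schedule modeled on the Ultralytics YOLO default: during the first warmup_epochs the weight learning rate rises linearly from 0, the bias learning rate falls linearly from the warmup value, and momentum rises from 0.8 to 0.937; afterwards the learning rate decays linearly from lr0 to lr0 × lrf. The total epoch count and the names `Hyp`, `lr_factor`, and `schedule` are hypothetical, since the table does not state them, and Ultralytics itself applies warmup per training iteration rather than per fractional epoch.

```python
from dataclasses import dataclass


@dataclass
class Hyp:
    """Hyperparameters taken from the table (field names follow the table's identifiers)."""
    lr0: float = 0.01
    lrf: float = 0.01
    momentum: float = 0.937
    warmup_epochs: float = 3.0
    warmup_momentum: float = 0.8
    warmup_bias_lr: float = 0.1


def lr_factor(epoch, total_epochs, lrf):
    """Assumed linear decay: 1.0 at epoch 0, falling to lrf at the final epoch."""
    return (1.0 - epoch / total_epochs) * (1.0 - lrf) + lrf


def schedule(progress, total_epochs, hyp):
    """Return (weight_lr, bias_lr, momentum) at fractional epoch `progress`."""
    target = hyp.lr0 * lr_factor(progress, total_epochs, hyp.lrf)
    if progress < hyp.warmup_epochs:
        t = progress / hyp.warmup_epochs  # goes from 0 to 1 over the warmup period
        weight_lr = t * target  # weights start from a learning rate of 0
        bias_lr = hyp.warmup_bias_lr + t * (target - hyp.warmup_bias_lr)  # biases start high
        momentum = hyp.warmup_momentum + t * (hyp.momentum - hyp.warmup_momentum)
        return weight_lr, bias_lr, momentum
    # After warmup, all parameter groups share the decayed rate and full momentum.
    return target, target, hyp.momentum
```

For example, with a hypothetical 100-epoch run, `schedule(1.5, 100, Hyp())` gives a momentum of 0.8685, halfway between the warmup value of 0.8 and the final value of 0.937, and the learning rate at the last epoch is lr0 × lrf = 0.0001.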

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rahman, M.H.-U.; Shrestha Gurung, B.D.; Jasthi, B.K.; Gnimpieba, E.Z.; Gadhamshetty, V. Automated Crack Detection in 2D Hexagonal Boron Nitride Coatings Using Machine Learning. Coatings 2024, 14, 726. https://doi.org/10.3390/coatings14060726
