Simple Summary

Sex estimation based on bones is a technique used to determine the biological sex of an individual from skeletal remains. It relies on the anatomical differences between male and female skeletons. Various bone characteristics have been incorporated into methods for sex estimation. Linear measurements are commonly used features in classification models for sex estimation. On the other hand, angle measurements are rarely included in such models, although they are important characteristics of the geometry of the bones and could provide essential information for the discrimination between the male and female bones. The goal of this research is to examine the potential of cranial angles for sex estimation and to identify the set of the most dimorphic angles by applying machine learning algorithms.

Abstract

The development of current sexing methods largely depends on the use of adequate sources of data and adjustable classification techniques. Most sex estimation methods have been based on linear measurements, while the angles have been largely ignored, potentially leading to the loss of valuable information for sex discrimination. This study aims to evaluate the usefulness of cranial angles for sex estimation and to differentiate the most dimorphic ones by training machine learning algorithms. Computed tomography images of 154 males and 180 females were used to derive data of 36 cranial angles. The classification models were created by support vector machines, naïve Bayes, logistic regression, and the rule-induction algorithm CN2. A series of cranial angle subsets was arranged by an attribute selection scheme. The algorithms achieved the highest accuracy on subsets of cranial angles, most of which correspond to well-known features for sex discrimination. Angles characterizing the lower forehead and upper midface were included in the best-performing models of all algorithms. The accuracy results showed the considerable classification potential of the cranial angles. The study demonstrates the value of the cranial angles as sex indicators and the possibility to enhance the sex estimation accuracy by using them.

1. Introduction

Skeletal remains provide valuable information about various biological characteristics of an individual (including sex, age, ancestry, stature, etc.). The extraction of this information can be achieved by different features and approaches. The use of macroscopic traits is considered a straightforward method for obtaining data about an individual’s biological profile. The methods based on measurable characteristics are also widely used in anthropological studies because of their quantifiable nature and potential for yielding essential information after adequate statistical processing. Linear measurements are established as standard metrics in morphometric studies because they can be easily obtained directly and virtually. Angles are particularly useful for the metric description of complex structures like the facial skeleton, pelvic bones, and long bone epiphyses, but they are frequently overlooked due to the difficulties in measuring them, which requires specialized equipment. Although the use of virtual tools facilitates their measuring, they are often omitted from the studies. However, angles could provide valuable information about bone morphology regardless of size differences between compared objects.

The human skull is the most complex part of the axial skeleton and is the focus of many studies due to its unique assemblage of bones and the variation observed in its components in relation to developmental, evolutionary, genetic, and environmental influences. Moreover, the skull has been examined because of its connection to the brain, the sensory organs, the beginnings of the respiratory and digestive systems, and the facial soft tissues and, in particular, its association with facial appearance. In forensic anthropology, cranial measurements have been extensively used, particularly in sex estimation studies, where they are incorporated into models for sex classification. Cranial angles have been included in several sex estimation models along with linear measurements, making it difficult to distinguish their individual contribution [1,2,3,4,5]. The studies based solely on cranial angles have focused either on a specific bone, such as the frontal bone [6,7], or on a particular segment, such as the nasion-glabella-sella triangle [8]. In the referenced studies, the angular values have been obtained using different types of images: cephalograms [1,2,4,8], three-dimensional (3D) models [3,5], and two-dimensional (2D) lateral screenshots on 3D models [6,7].

Various imaging technologies can be employed to generate images of bones, including medical imaging of living individuals, as well as laser scanning and photogrammetry of dry bone specimens. Working with images facilitates not only the easy calculation of traditionally used measurements but also the derivation of additional metrics that would be difficult to obtain through direct measurement methods. This is particularly useful for angles that fall into three categories: between two vectors, between a vector and a plane, and between two planes. Thus, their calculation requires between three and six points. Some studies use geometric morphometrics to differentiate male and female skulls based on shape, but they examine larger cranial regions and provide a generalized overview of sex differences in landmark configurations [9,10,11,12,13]. Yet, the use of metric data offers a more straightforward approach for incorporating these measurements into sex estimation models.

Sex estimation based on bone features is a standard classification task in forensic anthropology. Machine learning (ML) algorithms are well suited to classifying input data into mutually exclusive categories, and they have been extensively used to develop sex estimation models. Various classification algorithms have been applied for that purpose, most of which are eager learners, such as support vector machines (SVM), artificial neural networks, naïve Bayes (NB), and decision trees [14,15,16,17,18,19,20,21]. The advantage of eager learners is that the intensive computation is performed during the training phase, while building a generalized prediction model, so the subsequent application of the model is very fast. Since classification is a form of supervised learning, the input data used for training ML models should be clearly assigned to specific classes. In studies providing sex estimation models, the classes are “male” and “female”, and these labels need to be derived from trustworthy sources. This would guarantee the development of models allowing fast sex identification in current cases.

The present study aimed to assess the usefulness of cranial angles for sex estimation and to differentiate the subset of most dimorphic angular characteristics. For this purpose, a set of ML algorithms was trained on datasets containing only cranial angles to evaluate the accuracy of the models for sex estimation and to identify the angles involved in the best-performing classifiers, i.e., those providing the most accurate sex identification.

2. Material and Methods

Computed tomography (CT) scans of 334 adult Bulgarians (154 males and 180 females) were used in the study. The mean age of the males was 54 years (age range: 19–89 years) and that of the females was 57 years (age range: 20–89 years). Head scanning was performed with a Toshiba Aquilion 64 medical CT system. The scanning parameters included a detector configuration of 32 × 0.5 mm, a tube voltage of 120 kV, a tube current in the range of 165 to 500 mA, and an exposure time of 0.5 s. The reconstruction parameters were set as follows: a reconstruction matrix of 512 × 512 pixels, a slice thickness of 0.5 mm, a reconstruction interval of 0.3 mm, and the convolution filter FC63. The study was approved by the Human Research Ethics Committee at the IEMPAM-BAS (No. 14/21.02.2022). All DICOM series were anonymized, keeping only the information about the sex and age of the individuals. The CT images included in the study did not show any visible pathological changes on the skull bones. The DICOM series were used to generate polygonal models of the skulls in InVesalius 3.1 (CTI, São Paulo, Brazil). This procedure included segmentation of the bone tissue at a predefined threshold of 227–3071 HU and saving the resulting surface models in STL format. Afterwards, the 3D coordinates of 37 landmarks were collected from the skull models using MeshLab 2016.12 [22] (Table 1).

Table 1.

Cranial landmarks.

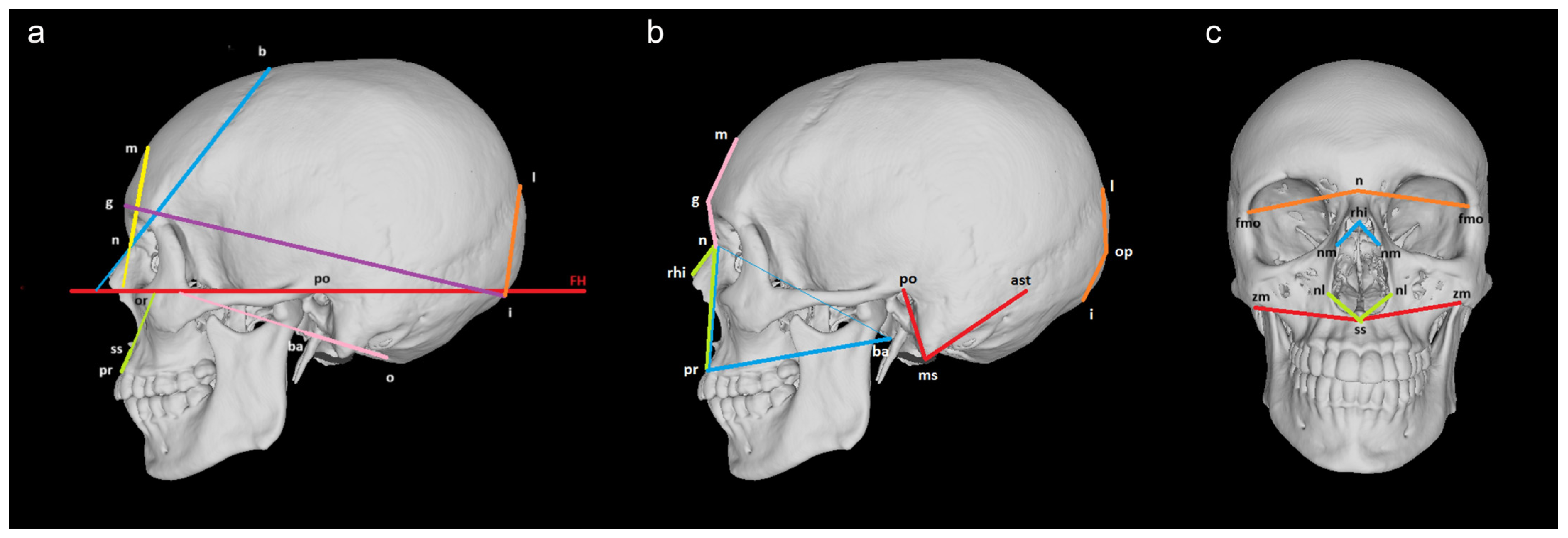

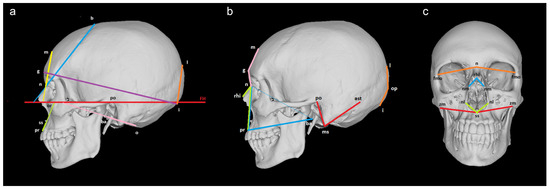

Thirty-six angles were calculated based on the 3D coordinates of the cranial landmarks (Table 2). Two types of angles were computed: between a line and a plane and between two lines (Figure 1, Supplementary File S1). Twenty angles (14 midsagittal and 3 bilateral) belonged to the first type, which was calculated from the direction vector of the line and the normal vector of the plane, i.e., as the arccosine of the ratio of the dot product of the two vectors to the product of their magnitudes. The plane in all these cases was the Frankfurt horizontal plane (FH), defined by the landmarks left orbitale, left porion, and right porion. The other sixteen angles (9 midsagittal, 5 transversal, and 1 bilateral) belonged to the second type. These angles were calculated using the Law of Cosines from the side lengths of the triangle constructed for each set of three landmarks. The triangle’s sides were calculated as interlandmark distances in PAST, version 2.17c [23]. The computation of the angles was performed in MATLAB 2018a and MS Excel 2010.
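The two angle types can be illustrated with a short Python sketch. It is not the MATLAB/Excel workflow used in the study: the function names and the test coordinates are hypothetical, and only the standard library is used. The arccosine of the normalized dot product gives the angle between the line and the plane's normal. The text does not state whether the tabulated value is this angle or its complement (the inclination to the plane itself), so the sketch returns both.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def angle_between_vectors(u, v):
    """Arccosine of dot(u, v) / (|u| |v|), in degrees."""
    cos_t = dot(u, v) / (math.sqrt(dot(u, u)) * math.sqrt(dot(v, v)))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_t))))

def line_plane_angles(p1, p2, plane_points):
    """Return (angle to the plane normal, angle to the plane) in degrees.

    The plane is defined by three landmarks (for FH: left orbitale,
    left porion, right porion). The normal's orientation depends on the
    order of the three points, so the second value is a signed inclination.
    """
    a, b, c = plane_points
    normal = cross(sub(b, a), sub(c, a))
    to_normal = angle_between_vectors(sub(p2, p1), normal)
    return to_normal, 90.0 - to_normal

def three_point_angle(a, vertex, c):
    """Angle at `vertex` from the Law of Cosines on interlandmark distances."""
    ab = math.dist(a, vertex)
    cb = math.dist(c, vertex)
    ac = math.dist(a, c)
    cos_v = (ab ** 2 + cb ** 2 - ac ** 2) / (2 * ab * cb)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_v))))

# Hypothetical coordinates: the plane z = 0 and a line rising at 45 degrees
plane = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
to_normal, to_plane = line_plane_angles((0.0, 0.0, 0.0), (1.0, 0.0, 1.0), plane)
right_angle = three_point_angle((1.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 1.0, 0.0))
```

A line-plane angle needs five landmarks here (two for the line, three for the plane), whereas an angle between two lines sharing a vertex needs only three.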

Table 2.

Cranial angles.

Figure 1.

Cranial angles measured between (a) a line and a plane: n-b-FH (blue), n-m-FH (yellow), g-i-FH (purple), l-i-FH (orange), ss-pr-FH (green), ba-o-FH (pink); (b) two lines (lateral view): n-g-m (pink), n-pr-ba (blue), rhi-n-pr (green), l-op-i (orange), po-ms-ast (red); (c) two lines (front view): fmo-n-fmo (orange), nm-rhi-nm (blue), nl-ss-nl (green), zm-ss-zm (red).

The normality of the data distribution of each angle was assessed by the Shapiro–Wilk test, and the equality of variances of the two samples was assessed by Levene’s test. Depending on the results of these two tests, the significance of the sex differences was evaluated using either the t-test or the Mann–Whitney U-test. The significance level was set at p < 0.05.

Intraobserver errors were assessed by calculating the standard deviation of acquisition trials for each landmark [24]. The landmarks were digitized three times on 40 crania. There was a gap of 3 to 5 days between the successive trials. The error results of the neurocranial landmarks were reported in Toneva et al. [12] and those of the viscerocranial landmarks were described in Toneva et al. [13]. A summary of the errors of the landmarks included in the present study is provided in Table S1. All of them showed small intraobserver errors within 1 mm.
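One plausible reading of this error measure is sketched below in Python. The exact formula of the cited method [24] is not reproduced in the text, so the definition used here is an assumption: the sample standard deviation of the repeated digitizations of a landmark around their centroid. The function names and coordinates are made up for illustration.

```python
import math

def landmark_error(trials):
    """Spread (mm) of repeated digitizations of one landmark on one cranium.

    `trials` is a list of (x, y, z) coordinates, one per acquisition trial.
    Assumed definition: sqrt(sum of squared distances to the centroid / (n - 1)).
    """
    n = len(trials)
    centroid = tuple(sum(axis) / n for axis in zip(*trials))
    squared = sum(math.dist(p, centroid) ** 2 for p in trials)
    return math.sqrt(squared / (n - 1))

def mean_landmark_error(per_cranium_trials):
    """Average the per-cranium errors of one landmark across all crania."""
    errors = [landmark_error(t) for t in per_cranium_trials]
    return sum(errors) / len(errors)

# Hypothetical coordinates of one landmark: three trials on each of two crania
example = [
    [(10.0, 20.0, 30.0), (10.2, 20.0, 30.0), (9.8, 20.0, 30.0)],
    [(11.0, 21.0, 29.0), (11.0, 21.0, 29.0), (11.0, 21.0, 29.0)],
]
error_mm = mean_landmark_error(example)
within_tolerance = error_mm < 1.0  # the study reports errors within 1 mm
```

With three trials on 40 crania, as in the study, `per_cranium_trials` would hold 40 lists of three coordinates for each landmark.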

Several ML algorithms were selected for learning classification models (classifiers) for sex estimation. The algorithms are as follows: the rule induction algorithm CN2 [25], the optimization algorithm Support Vector Machines (SVM) [26], the probabilistic naïve Bayes (NB) [27] and logistic regression (LR) [28]. All these algorithms were implemented in the machine learning and data visualization environment Orange 2.7.8. The default values provided by the Orange environment were used for all parameters of the algorithms. SVM was applied with the RBF kernel, and the other parameters of the algorithm were set only once before the first run of cross-validation using the option “Automatic parameter search” in the Orange environment.

The training datasets of this study included examples belonging to two classes: males (class 1) and females (class 2). The classification accuracy of the default (majority-based, MB) classifier was 0.539, i.e., the proportion of the larger class (180 females out of 334 individuals). The ML classification models were evaluated by applying a repeated stratified k-fold cross-validation scheme [29]. A 5-fold cross-validation, repeated 10 times at different randomly selected initial conditions, was used. Three measures were used to evaluate the quality of the models: average classification accuracy (the proportion of all correctly classified examples averaged across the 10 runs of the 5-fold cross-validation), and class-dependent classification accuracy for each class (the proportion of correctly classified examples belonging to class 1 or class 2 averaged across all runs of the 5-fold cross-validation). All classifiers were trained and tested on the same data subsets constructed by the repeated 5-fold cross-validation.
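The evaluation scheme can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the Orange implementation: the fold construction, the function names, and the use of a majority classifier as a stand-in for the trained models are all hypothetical. The sketch reproduces the three reported measures (overall accuracy and the two class-dependent accuracies) for the baseline classifier.

```python
import random
from collections import Counter

def stratified_folds(labels, k, rng):
    """Split example indices into k folds with similar class proportions."""
    folds = [[] for _ in range(k)]
    by_class = {}
    for idx, y in enumerate(labels):
        by_class.setdefault(y, []).append(idx)
    for idxs in by_class.values():
        rng.shuffle(idxs)
        for pos, idx in enumerate(idxs):
            folds[pos % k].append(idx)
    return folds

def majority_predict(train_labels, n_test):
    """Stand-in classifier: always predict the most frequent training class."""
    majority = Counter(train_labels).most_common(1)[0][0]
    return [majority] * n_test

def mean(values):
    return sum(values) / len(values)

def repeated_cv(labels, k=5, repeats=10, seed=0):
    """Overall and per-class accuracy, averaged over the repeated runs."""
    rng = random.Random(seed)
    totals = Counter(labels)
    overall, per_class = [], {c: [] for c in totals}
    for _ in range(repeats):
        correct = Counter()
        for test_idx in stratified_folds(labels, k, rng):
            held_out = set(test_idx)
            train = [y for i, y in enumerate(labels) if i not in held_out]
            preds = majority_predict(train, len(test_idx))
            for i, p in zip(test_idx, preds):
                if p == labels[i]:
                    correct[labels[i]] += 1
        overall.append(sum(correct.values()) / len(labels))
        for c in per_class:
            per_class[c].append(correct[c] / totals[c])
    return mean(overall), {c: mean(v) for c, v in per_class.items()}

labels = [1] * 154 + [2] * 180  # class 1 = males, class 2 = females
accuracy, class_accuracy = repeated_cv(labels)
```

Because the baseline always predicts the larger class, its overall accuracy equals 180/334, which a useful classifier has to exceed on both classes, not just on average.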

An attribute selection scheme was applied to select the most important attributes for training the ML algorithms and to enhance their classification ability. The Correlation-based Feature Selection method [30], implemented as the CfsSubsetEval algorithm in the Weka environment (https://ml.cms.waikato.ac.nz/weka/index.html, accessed on 8 September 2024), was used as the attribute-selection method (ASM). This filter approach evaluates the quality of a subset of attributes by considering the individual predictive ability of each attribute along with the degree of redundancy between them. To search for the best subset of attributes, the Weka BestFirst algorithm [31] was applied. It searches the space of attribute subsets by greedy hill climbing augmented with a backtracking facility. Firstly, the selected ASM was applied in 5-fold cross-validation mode, and thus, the attribute selection was done based on five different subsets of the training set, each containing only 80% of the training examples. This step was repeated 10 times with randomly selected initial conditions so that the results would not depend on the initial selection of examples included in the data subsets. Hence, fifty different subsets of attributes were constructed for each training dataset. Secondly, the frequency with which each attribute was selected by the ASM was calculated. The more frequently an attribute was selected, the more important it was considered for the sex classification. Finally, the attributes were grouped into several subsets, which corresponded to specific attribute importance (AI) intervals (e.g., >0, >0.1, >0.2, >0.3, etc.). Each ML algorithm was applied to all attribute subsets to identify the one that achieved the highest classification accuracy.
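The frequency-based importance and the nested attribute subsets can be sketched as follows. The per-run selections would come from CfsSubsetEval with BestFirst in Weka; here they are replaced by a made-up toy list of four runs, and the function names are hypothetical. The angle labels are borrowed from the text purely as example strings.

```python
from collections import Counter

def attribute_importance(selected_subsets):
    """Fraction of selection runs in which each attribute was chosen.

    In the scheme described above there would be 10 x 5 = 50 runs.
    Attributes that were never selected are absent, i.e. have AI = 0.
    """
    counts = Counter(a for subset in selected_subsets for a in set(subset))
    n_runs = len(selected_subsets)
    return {a: c / n_runs for a, c in counts.items()}

def subsets_by_threshold(importance, thresholds):
    """Map each threshold t to the sorted attributes with AI > t."""
    return {t: sorted(a for a, ai in importance.items() if ai > t)
            for t in thresholds}

# Toy input: four selection runs instead of fifty
runs = [
    ["n-g-m", "fmo-n-fmo", "op-i-FH"],
    ["n-g-m", "fmo-n-fmo"],
    ["n-g-m", "nm-rhi-nm"],
    ["n-g-m", "fmo-n-fmo", "nm-rhi-nm"],
]
ai = attribute_importance(runs)
nested = subsets_by_threshold(ai, [0.0, 0.3, 0.6, 0.9])
```

Each higher threshold yields a subset of the previous one, so the classifiers are compared on a nested series of progressively smaller attribute sets.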

3. Results

3.1. Sex Differences

Significant sex differences were established in 27 of the 36 cranial angles, i.e., in 75% of all angles (Table 3). They described different parts of the skull, such as the frontal bone, occipital bone, mastoid process, nasal bones, and nasal aperture, as well as facial convexity. However, non-significant sex differences were observed in some standard angles, such as the frontal slope angle, facial profile angle, nasal profile angle, nasal bone slope angle, alveolar profile angle, etc.

Table 3.

Descriptive and test statistics of the cranial angles.

3.2. ML Classifiers

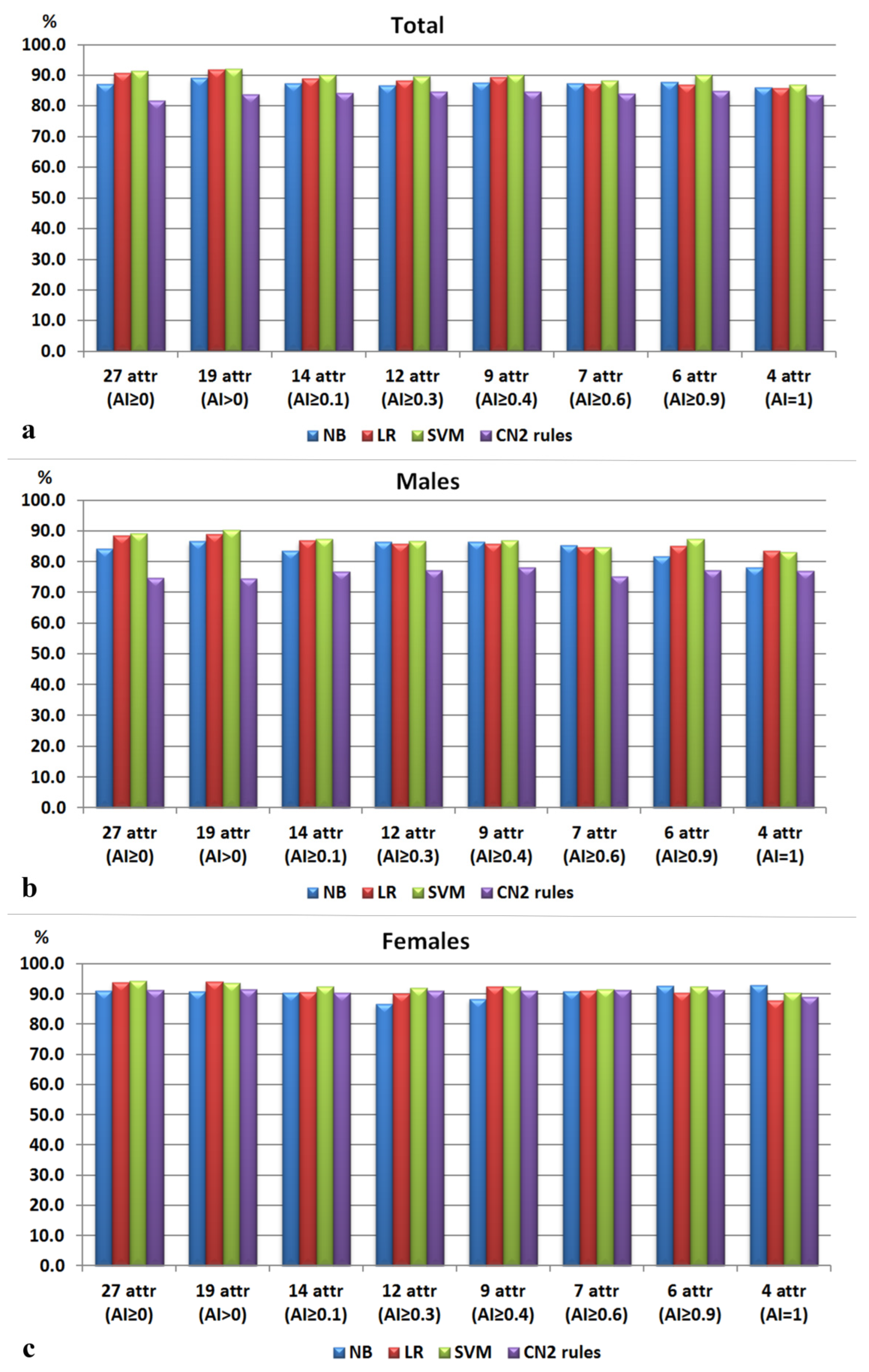

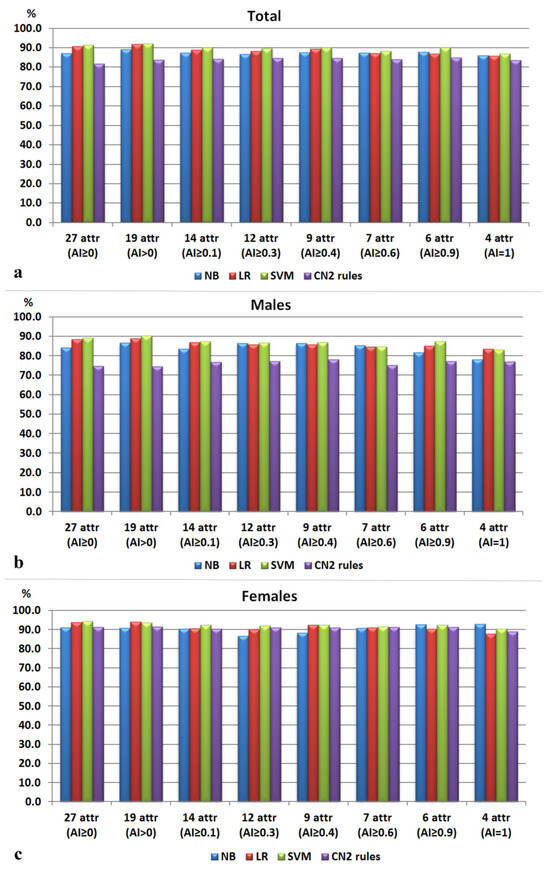

The initial dataset used to train the ML algorithms comprised the cranial angles that exhibited significant sex differences. All ML algorithms trained on this dataset of 27 cranial angles produced classification accuracy of more than 80%. The best result was achieved by SVM (91.3%), while the lowest result was obtained by the CN2 rules (81.7%).

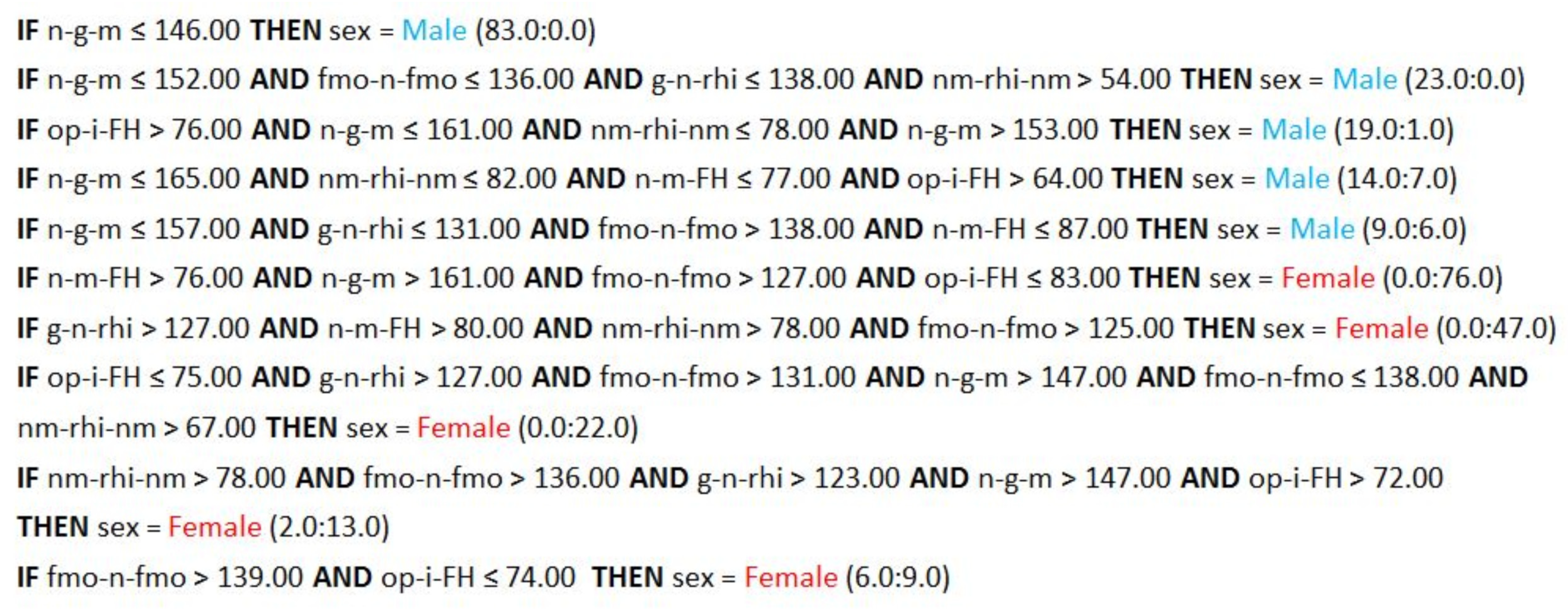

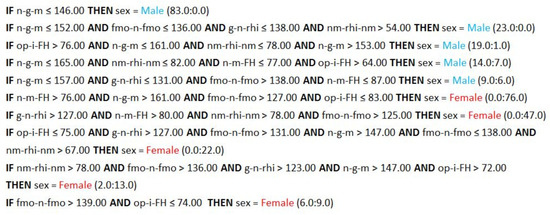

Implementing the attribute selection scheme led to improved accuracy rates across all ML algorithms. The attributes included in each selection dataset are given in Table 4. The dataset including the attributes with AI > 0 provided the best accuracy for SVM, LR, and NB of all experiments (Figure 2, Supplementary File S2). This subset included nineteen features and represented a mixture of neurocranial and viscerocranial angles that described the upper and lower midface, the frontal, mastoid, and occipital regions, as well as their interrelations. The highest accuracy rate was attained by SVM (92.1%), closely followed by LR (91.8%), and finally by the NB model whose accuracy did not surpass 90%. The performance of the CN2 algorithm also improved when it was trained on the aforementioned dataset (AI > 0), but the highest accuracy for this algorithm was achieved with the dataset containing attributes with AI ≥ 0.9 (85%). Figure 3 presents a set of 10 rules generated by the CN2 algorithm, each covering at least 15 examples. Five of these rules described the class of males, and the other five described the class of females. The six attributes included in this subset (AI ≥ 0.9) and, respectively, in the CN2 rules, characterized the lower forehead region (n-g-m, n-m-FH, g-n-rhi), the upper midface (fmo-n-fmo, nm-rhi-nm), and the occipital protrusion at the inion (op-i-FH).

Table 4.

Attribute importance (AI) of the cranial angles.

Figure 2.

Accuracy of the models for sex estimation: (a) for the whole sample; (b) for class 1 (males); (c) for class 2 (females).

Figure 3.

CN2 rules based on the attribute subset with AI ≥ 0.9. The numbers in brackets following each rule indicate its coverage. The first number indicates how many examples from the training set are classified correctly to class 1 by this rule, while the second number represents the number of examples from the training set that are misclassified by the rule.

4. Discussion

Most previous morphometric studies on cranial sexual dimorphism have focused on linear measurements and rarely made use of angular characteristics. Therefore, the present study examined whether angles are useful for sex estimation or whether their neglect in earlier studies was justified.

In the studies conducted so far, the number and selection of investigated cranial angles have varied considerably, leading to inconsistent findings regarding significant sex differences [1,2,3,4,5,6,7,8,32]. In the present study, three-quarters of the full set of cranial angles differed significantly between the male and female skulls. When cranial linear measurements are compared, male crania almost invariably show larger values because of their bigger size compared to female crania [1,2,3,19,33,34,35,36,37,38,39]. However, angles are size-independent: an angle can have the same value in a smaller and in a bigger skull. Thus, since the angles describe the shape of a geometric object independent of its size, the presence of significant sex differences in cranial angles indicates significant differences in the geometry of the male and female skulls. The angles included in the models with the highest accuracy in the present study describe mainly cranial structures that are well known to differ in shape between males and females (glabella, occipital protuberance, mastoids, nasal aperture, etc.). The lack of significant sex differences in some angles suggests that the corresponding spatial relationships between the defined lines and surfaces are consistent across skulls, regardless of sex.
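The size independence of angles can be checked numerically: uniformly scaling a landmark triplet changes the interlandmark distances but leaves the angle unchanged. The coordinates and the 15% scale factor below are arbitrary illustrative values, not data from the study.

```python
import math

def angle_at(a, vertex, c):
    """Angle (degrees) at `vertex` between the lines vertex-a and vertex-c."""
    u = [p - q for p, q in zip(a, vertex)]
    v = [p - q for p, q in zip(c, vertex)]
    cos_t = sum(x * y for x, y in zip(u, v)) / (math.hypot(*u) * math.hypot(*v))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_t))))

def scale(points, factor):
    """Uniformly enlarge a landmark configuration about the origin."""
    return [tuple(factor * x for x in p) for p in points]

small = [(0.0, 0.0, 0.0), (3.0, 1.0, 0.5), (1.0, 4.0, 2.0)]
large = scale(small, 1.15)  # same shape, 15% larger in every dimension

distance_ratio = math.dist(large[0], large[1]) / math.dist(small[0], small[1])
angle_small = angle_at(*small)
angle_large = angle_at(*large)
```

Here `distance_ratio` follows the scale factor, while `angle_small` and `angle_large` agree up to floating-point error, which is why a sex difference in an angle reflects shape and not overall size.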

The ML models developed in this study have shown that cranial angles are useful features for sex estimation, identifying sex correctly in over 90% of the skulls. Such high accuracy has also been reached by ML models using linear cranial measurements [19]. The standard discriminant and regression models based on linear measurements derived from different population groups have provided accuracy rates mostly in the range between 80% and 90% [3,33,34,35,36,37,38,39,40,41,42]. Training ML models on a dataset of mixed types of measurements (linear measurements, angles, areas, and indices) has provided correct sex estimation of 95% [5]. In the present study, the highest accuracy of sex estimation (92%) was achieved by SVM on a subset of 19 cranial angles. Most SVM models trained on cranial features have also achieved accuracy of more than 90% [14,15,20]. Indeed, among the different ML algorithms, the SVM models have been the best-performing on datasets of cranial and mandibular measurements [5,43]. They are considered less likely to misclassify a skull because SVM is among the algorithms most resistant to overfitting [14]. In the present study, LR achieved high accuracy, comparable to that of SVM, again on the dataset of cranial angles with AI > 0. LR has performed very well in previous studies on cranial and mandibular measurements, also demonstrating accuracy rates exceeding 90% [5,43]. The NB algorithm delivered an accuracy of 89% on the same subset, a result similar to that of Nikita and Nikitas [18] and notably higher than that obtained by Mota et al. [21]. The highest accuracy of the CN2 rule-induction algorithm (85%) was achieved on a smaller subset including only six attributes. The results of the CN2 rules correspond to those of other symbolic algorithms, such as decision trees, that have demonstrated accuracy rates in the range of 80–90% [15,16,19]. It could be inferred that all algorithms, except for CN2, have performed better with a larger number of attributes. This suggests that tracing sex differences across more cranial regions enhances the accuracy of sex identification.

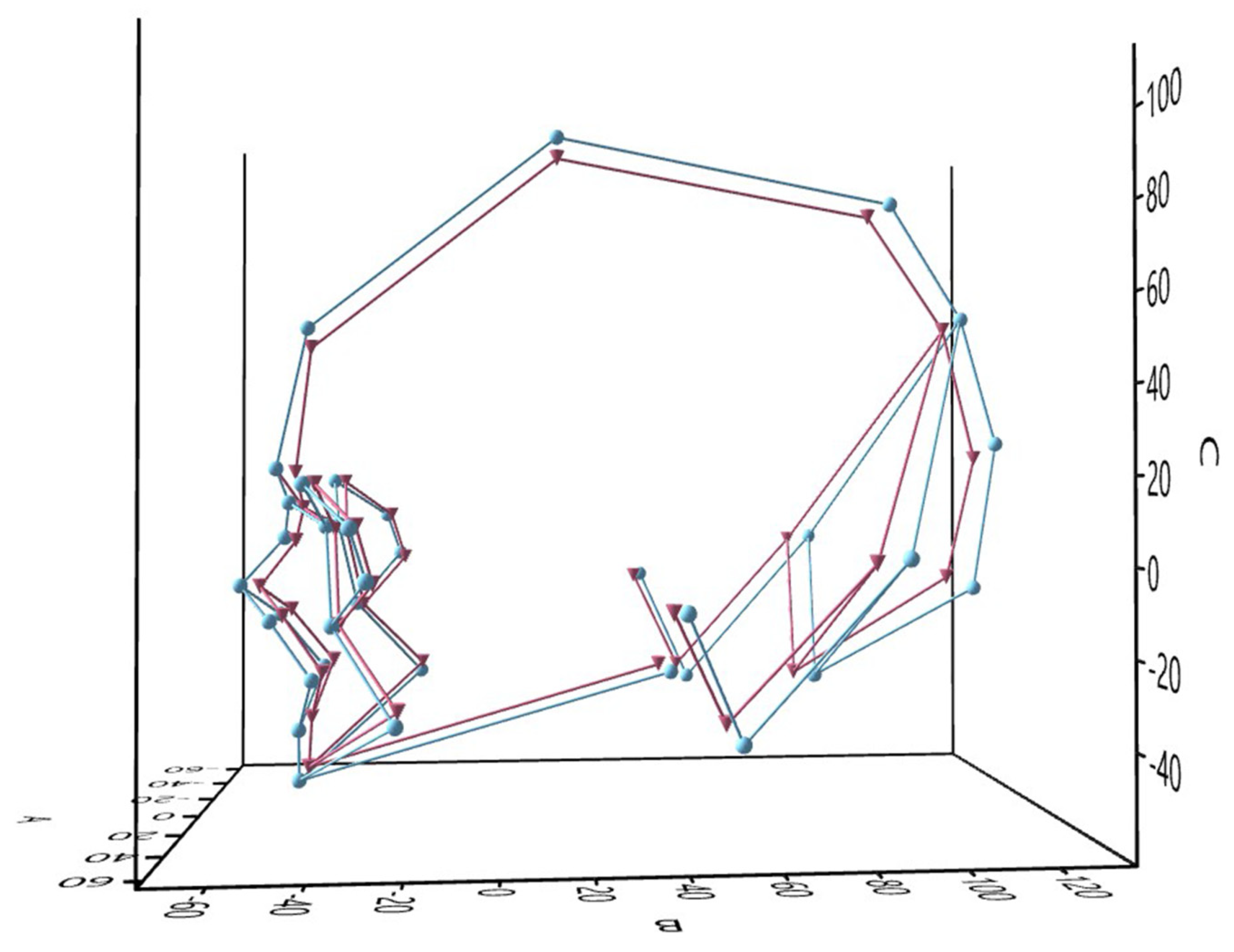

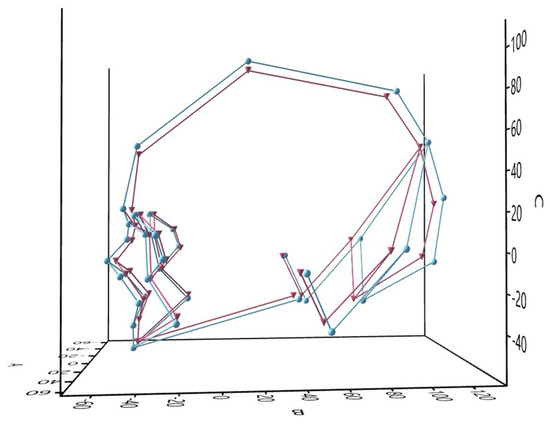

The best classification models in this study included an almost equal number of neurocranial and viscerocranial characteristics. Previous studies disagree on whether the neurocranium [30] or the viscerocranium [14,42] exhibits more pronounced dimorphic features. Four of the angles were present in all datasets: n-g-m, n-m-FH, fmo-n-fmo, and nm-rhi-nm. All of them are located in the frontonasoorbital area, including the lower part of the frontal bone (nasion to metopion) and the upper midface. It is well known that the male frontal bone is more inclined and has a more prominent glabellar region [6,9,10,11,14,44,45,46,47]. The latter results in a more angled nasofrontal configuration of the male skull compared to the more flattened nasofrontal region of the female one [48]. In fact, the frontal bone contains many dimorphic features, and their metric description has significantly contributed to accurate sex classification [12,45]. The present study also demonstrated that accurate sex identification is closely linked to the involvement of the frontal bone (Figure 4). Aside from the frontal bone, the posterior part of the skull also provides useful sex indicators, such as the nuchal region of the occipital bone and the mastoid processes of the temporal bone. Previous observations have clearly shown that male skulls are characterized by a more prominent and robust occiput [11,14], longer and more projecting mastoids [10,46,49], and a flattening of the lower parietal and lambda region [11]. The sex differences related to these neurocranial features are also evident in the results of the angles included in the present study.

Figure 4.

Mean landmark configurations of the male (blue) and female (red) skulls.

The complex configuration of the facial skeleton undergoes dynamic shape changes during growth and development, especially during the period when sexual dimorphism becomes more pronounced [50,51]. It has been commonly reported that the male midface is more profiled, whereas the female midface is characterized by more subtle angles [14,40,52,53], which corresponds to the more obtuse transversal facial angles (e.g., fmo-n-fmo, zm-ss-zm) observed in the female crania of the studied sample. Concerning the orbital region, female orbits are typically described as relatively larger and more rounded, while male orbits are generally more rectangular in shape [11,40,53,54,55]. In the present study, significant differences were found in the inclination of the orbital height relative to the FH, whereas no such differences were observed in the slope of the orbital breadth axis relative to the FH. The more acute angle of the orbital height in males could be explained by the more protruding supraorbital rim, whereas the superior and inferior orbital borders in females tend to align one above the other. This result is consistent with previous observations that the male supraorbital rim projects forward, in contrast to the subtle supraorbital rim in females [48,52]. Additionally, males are often described as having a higher and narrower nasal aperture with more prominent nasal bones, whereas females typically have a wider nasal aperture and flatter nasal bones [11,44,53]. The sex differences described in the nasal region correspond to the results of the present study, which show that the male crania exhibit more acute angles in the nasal ridge (n-mn-rhi) and nasal aperture (nm-rhi-nm, nl-ss-nl) compared to the female crania. Hence, most of the observations described in earlier studies are also reflected in the angular characteristics of the best discriminating subset selected in this study.

The dataset providing the highest accuracy in this study included nine angles measured between a line and a plane and ten angles between two lines. This means that nearly half of these angles were based on the FH. The FH is a reference plane widely used in anthropological and medical practice. It has proved to be a stable plane showing small deviations across different measurement trials [56,57]. This makes the FH angles reliable for inclusion in sex estimation models, despite the larger number of landmarks they involve. The advantage of using 3D models is the easy collection of landmark coordinates. This method of collecting metric data facilitates the calculation of various measurements between landmarks. Consequently, landmark coordinates offer greater flexibility in studies, enabling the exploration of a broader and more diverse set of metrics. In the present study, the intraobserver error was assessed by analyzing the acquisition trials of the landmark coordinates. By using standard cranial landmarks with well-defined locations, small deviations were obtained across different trials (less than 1 mm), suggesting high reliability of the derived measurements as well.

Various factors can impact dimensions and spatial relationships between and within bones, with age being one that influences bone morphology. Bones undergo remodeling with age, but the balance between bone resorption and bone formation changes throughout different life stages. With aging, bone loss occurs in specific areas of the facial skeleton, which can significantly contribute to the appearance of the so-called aging face [58,59]. However, not all cranial bones endure resorption. While the midface recedes, the forehead expands, due to the bone deposition in the external wall of the frontal bone (especially in the supraorbital rim) [52]. Age-related changes in the facial skeleton involve an increase in the size of the orbit and pyriform aperture at the expense of the maxillary bone. The latter together with the zygoma undergo anterior and inferior resorption [52,59]. These changes have mainly been studied in relation to cosmetic surgery and their effects on soft tissues (soft-tissue descent and sagging, deepening of folds, lengthening of the lid–cheek junction, drooping of the nose tip, etc. [52]), but they could also reflect on the angular characteristics of the skull and their potential for sex discrimination, which necessitates further examination.

Cranial morphology varies among different population groups, and population specificity is an essential consideration in assessing cranial sexual dimorphism. The present study explored sex differences in cranial angles within a sample of the Bulgarian population. Because of the differences in the strength and expression of sexual dimorphism across various population groups, it is hard to suggest which angles would be the most sexually dimorphic and what classification accuracy they might achieve in other populations. Due to the limited number of studies examining cranial angles as sex indicators, their behavior with respect to population specificity remains unclear. However, the usefulness of cranial angles for sex estimation, as demonstrated by the ML models in this study, could encourage their testing on other population groups, as well as an evaluation of their classification potential with different algorithms and subsets of angles.

5. Conclusions

In conclusion, cranial angles are valuable features for sex estimation, and a combination of certain angles can achieve correct sex identification in over 90% of skulls. The most effective models for sex estimation were developed using SVM and LR, based on a dataset of nineteen cranial angles characterizing both neurocranium and viscerocranium. Most of the angles described well-known features for sex discrimination such as glabellar projection, lower forehead inclination, occipital protuberance protrusion, facial profile convexity, etc. Accordingly, the cranial angles demonstrate their usefulness as sex indicators, providing accuracy comparable to that of standard linear measurements. Certainly, combining linear and angular measurements would guarantee greater confidence in the outcome of sex identification in practice.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/biology13100780/s1, Supplementary File S1: Visualization of the cranial angles; Supplementary File S2: Accuracy of the ML models on the separate attribute datasets; Table S1: Intraobserver measurement error of the landmarks (in mm).

Author Contributions

Conceptualization, D.T. and S.N.; methodology, D.T., S.N., G.A. and S.H.; validation, D.T.; formal analysis, G.A. and D.T.; investigation, D.T.; resources, D.Z., N.F. and G.M.; data curation, D.T.; writing—original draft preparation, D.T.; writing—review and editing, S.N., G.A., S.H., D.Z., N.F. and G.M.; visualization, D.T.; supervision, D.T. and S.N.; project administration, S.N.; funding acquisition, S.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Bulgarian National Science Fund, Grant number КП-06-Н51/4–11.11.2021.

Institutional Review Board Statement

The study was approved by the Human Research Ethics Committee at the Institute of Experimental Morphology, Pathology and Anthropology with Museum, Bulgarian Academy of Sciences (No. 14/21.02.2022).

Informed Consent Statement

Informed consent was waived due to advanced anonymization of the personal information from the images.

Data Availability Statement

The data presented in the study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).