NEMAS: Norm Entrepreneurship in Multi-Agent Systems †

Abstract

1. Introduction

“The folkways are habits of the individual and customs of the society which arise from efforts to satisfy needs. The step an individual takes for an immediate motive is repeated when similar situations arise. This repetition of certain acts produces a habit in the individual. As this habit becomes widely acceptable in a society, people tend to comply with them even though they are not enforced by any authority and it thus evolves as a norm in the group.”

1.1. Background and Motivation

1.2. Research Gap

“… a multi agent system together with normative systems in which agents on the one hand can decide whether to follow the explicitly represented norms, and on the other the normative systems specify how and in which extent the agents can modify the norms.”

- Green cells: lawn;

- Brown cells: pathway;

- Grey cells: open space.

2. Materials and Methods

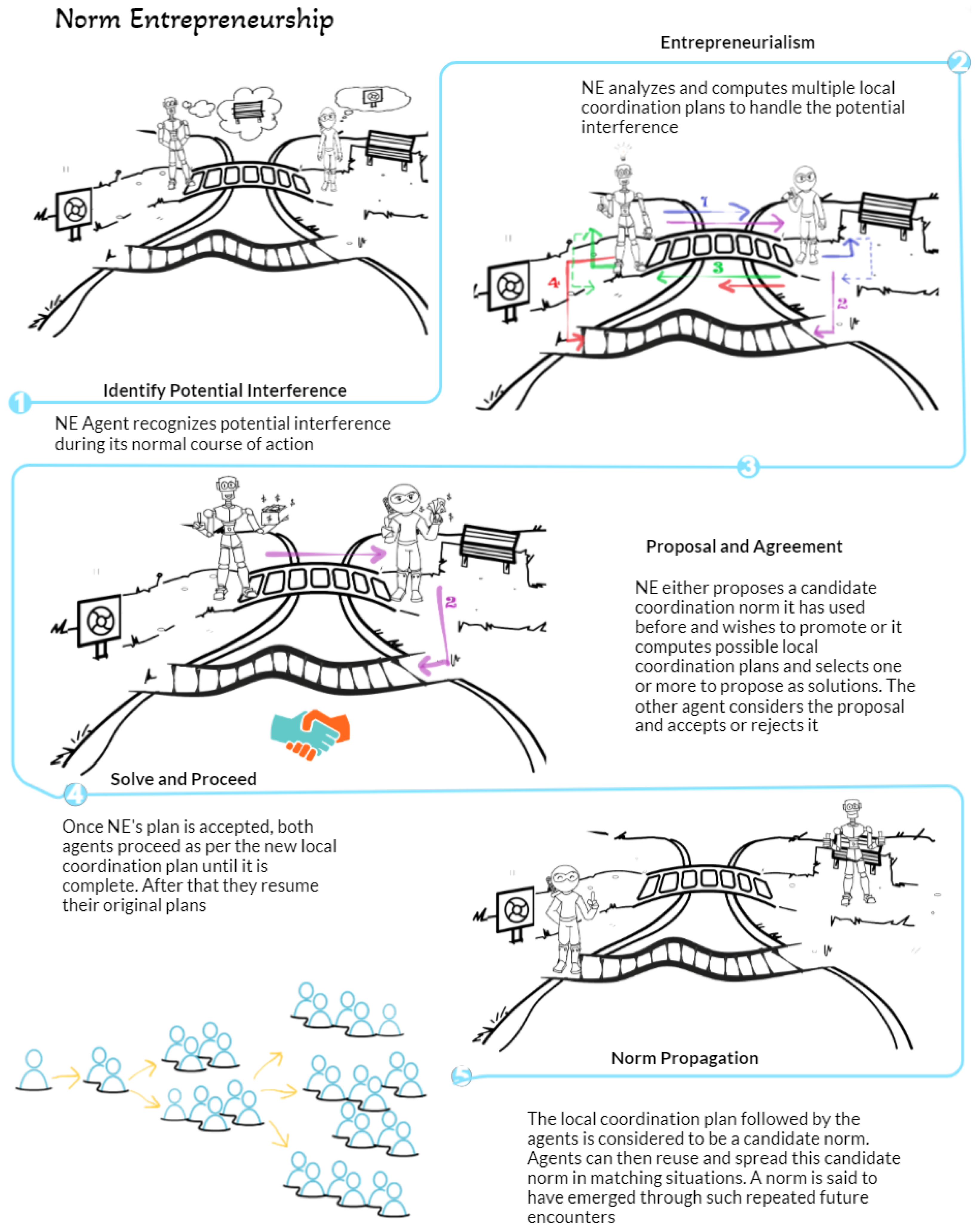

2.1. The Five Stages of Our Norm Entrepreneurship Framework

2.1.1. Identifying Potential Interference

2.1.2. Local Coordination Planning by Entrepreneurs

2.1.3. Proposal and Agreement

2.1.4. Plan Execution

2.1.5. Norm Propagation

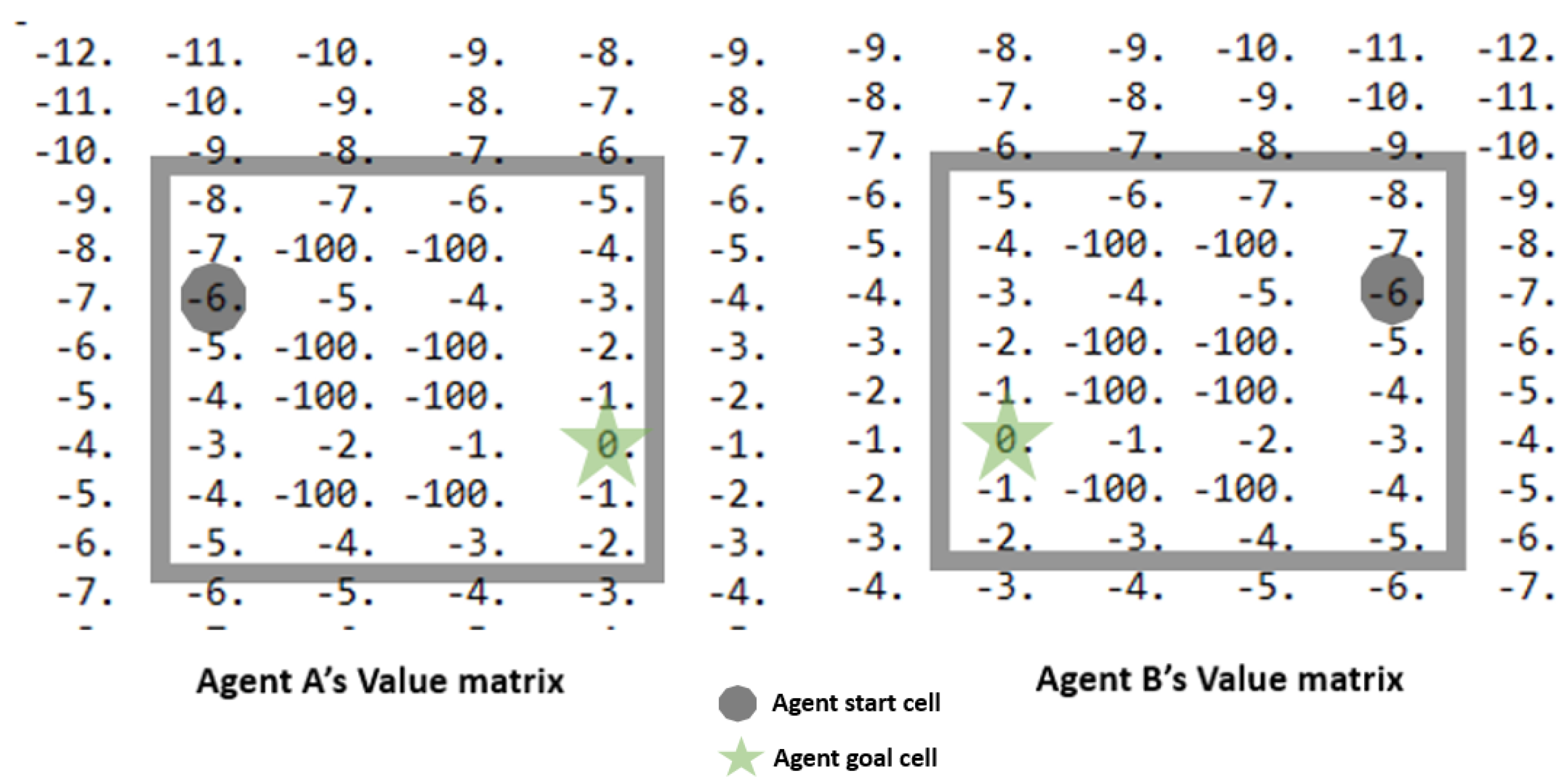

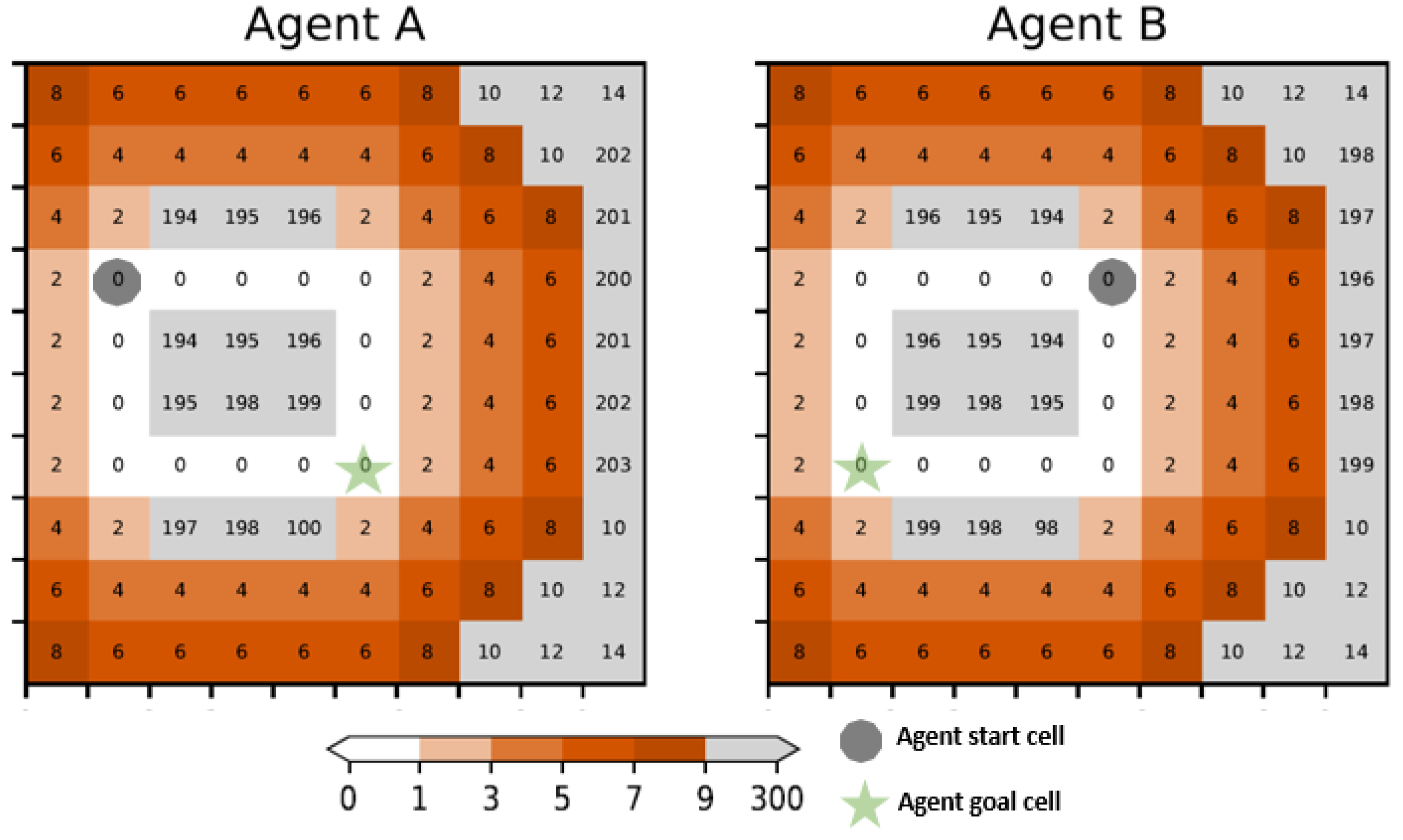

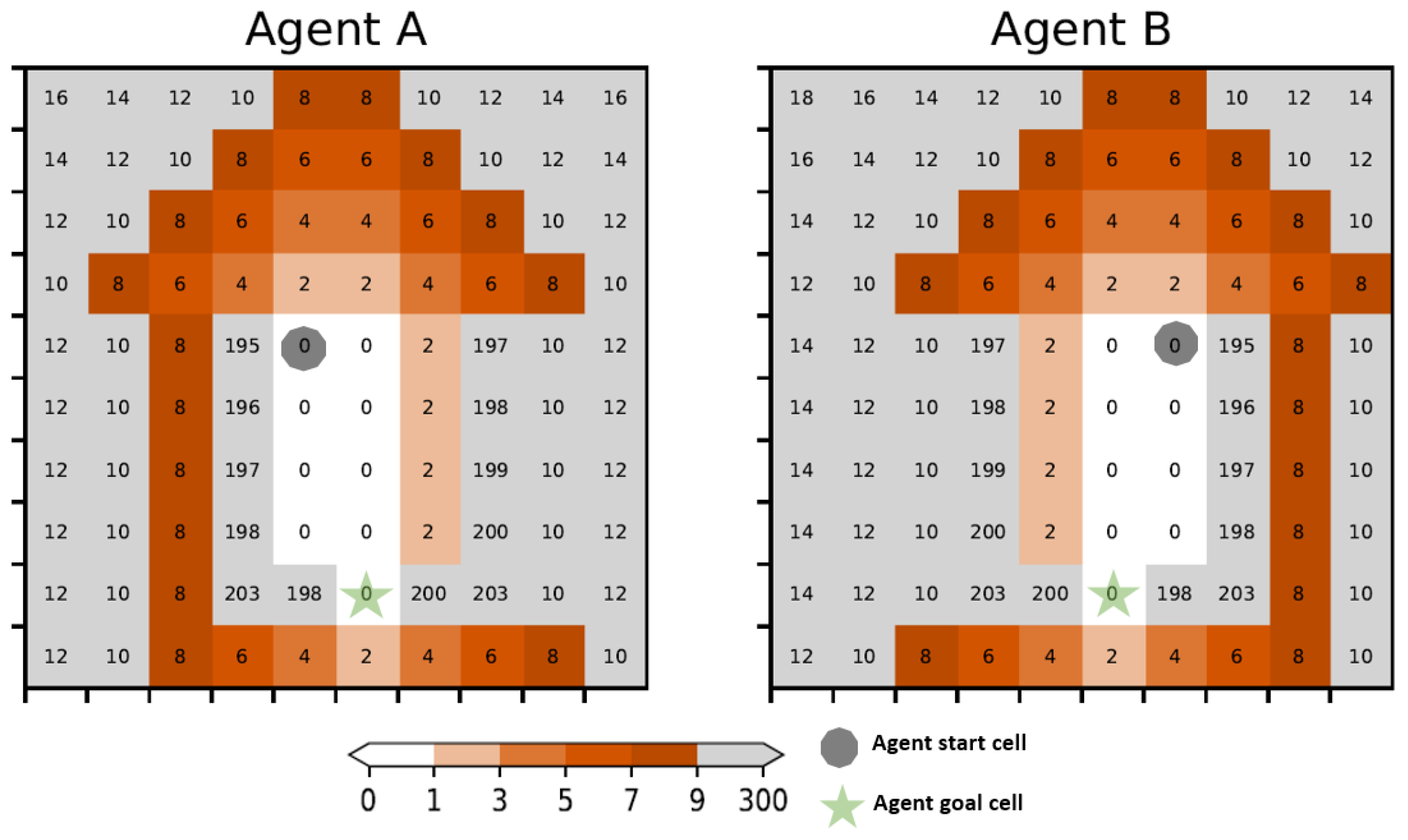

2.2. Individual Agent Planning

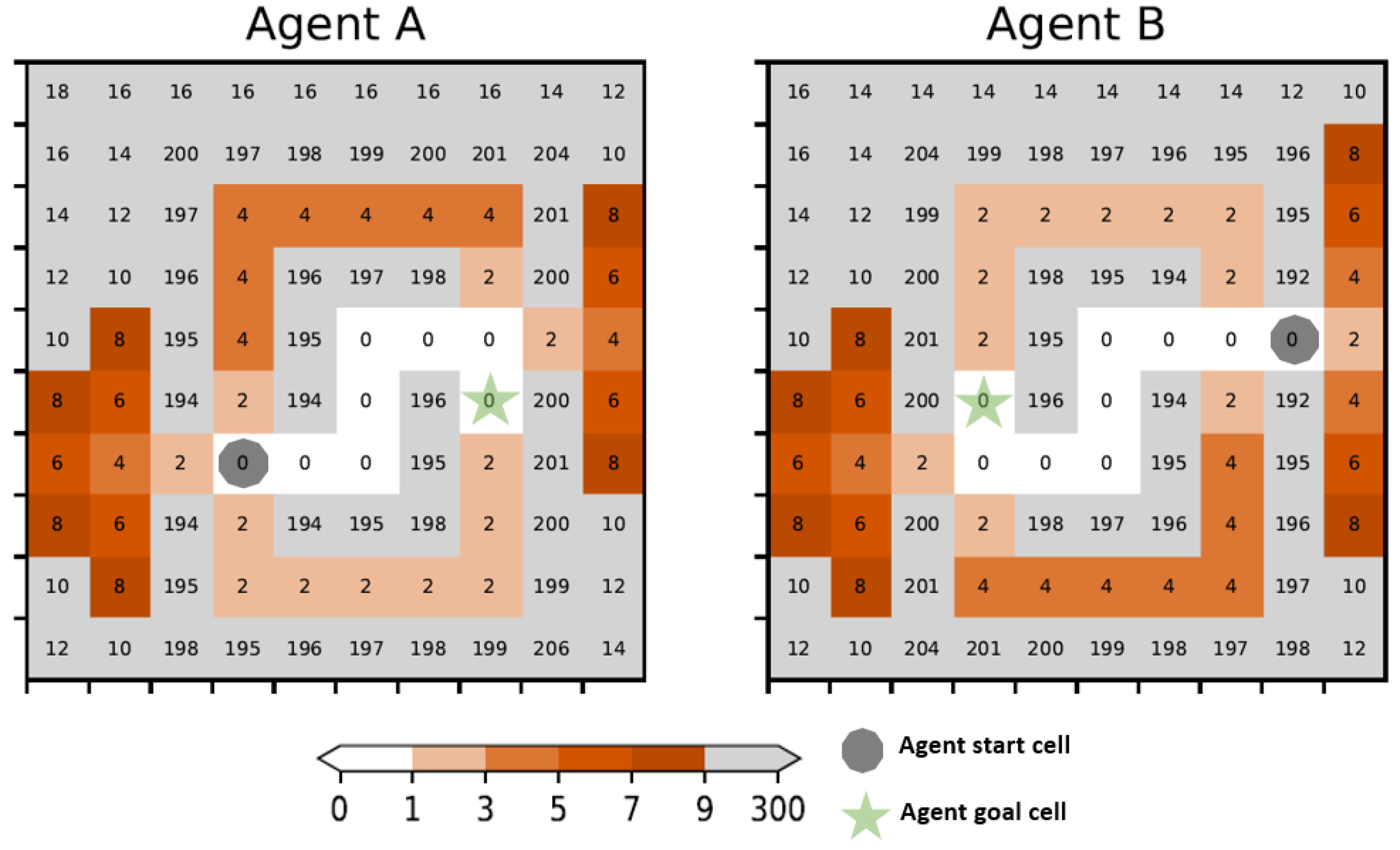

- S is the finite set of states. In this paper, the states are cells in a grid environment such as those shown in Figure 2;

- A is the finite set of possible actions that can be taken at each state;

- represents the probability of transitioning from one state to another given a particular action;

- is a function that returns the reward that an agent receives after taking action in state s resulting in state ;

- is the discount factor, a value between 0 and 1 that represents the preference for immediate rewards over future rewards. We set to 1 as we consider finite horizon planning problems;

- is the goal state.

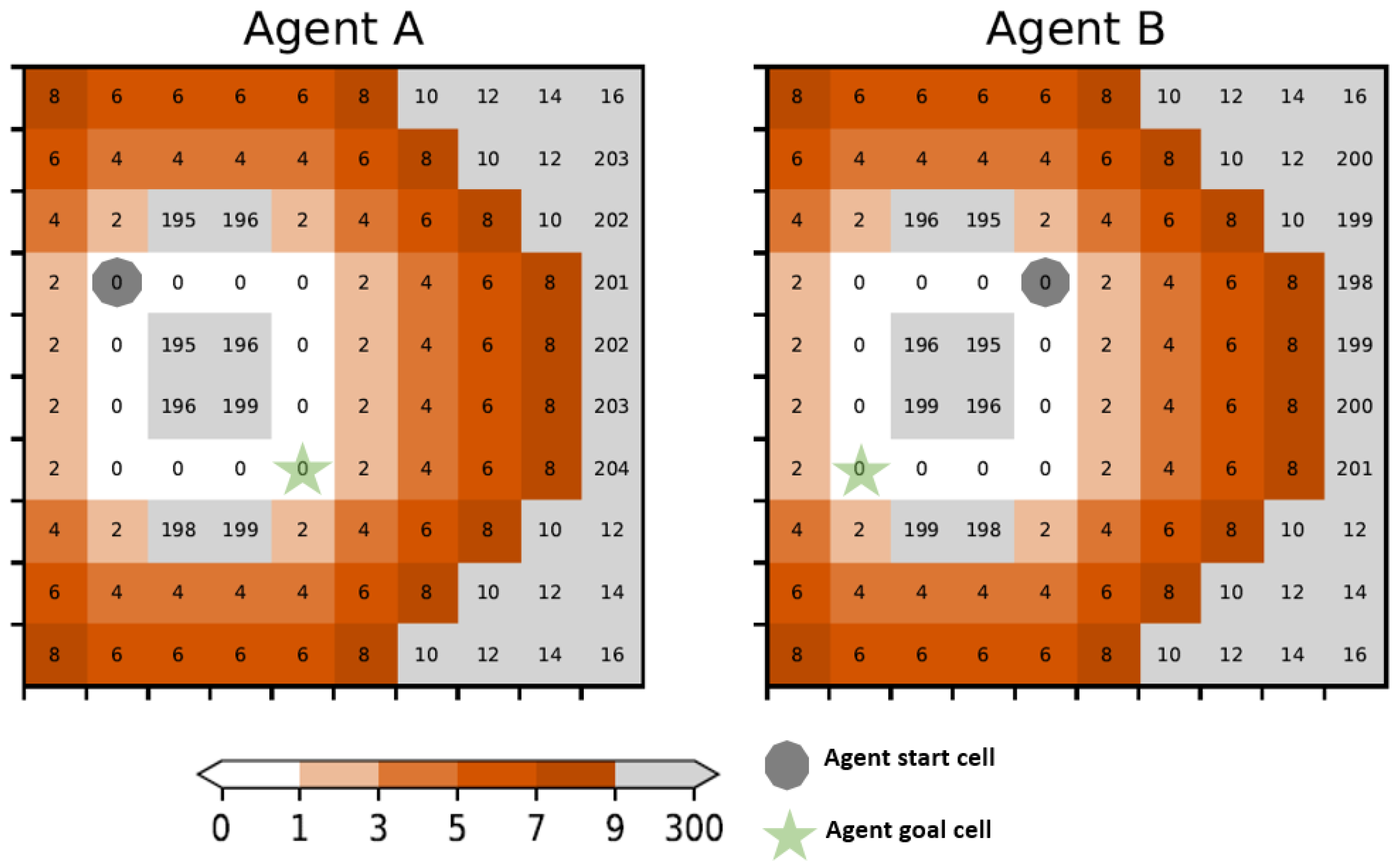

2.3. Coordination Planning by Entrepreneurs

2.4. Regret Landscapes

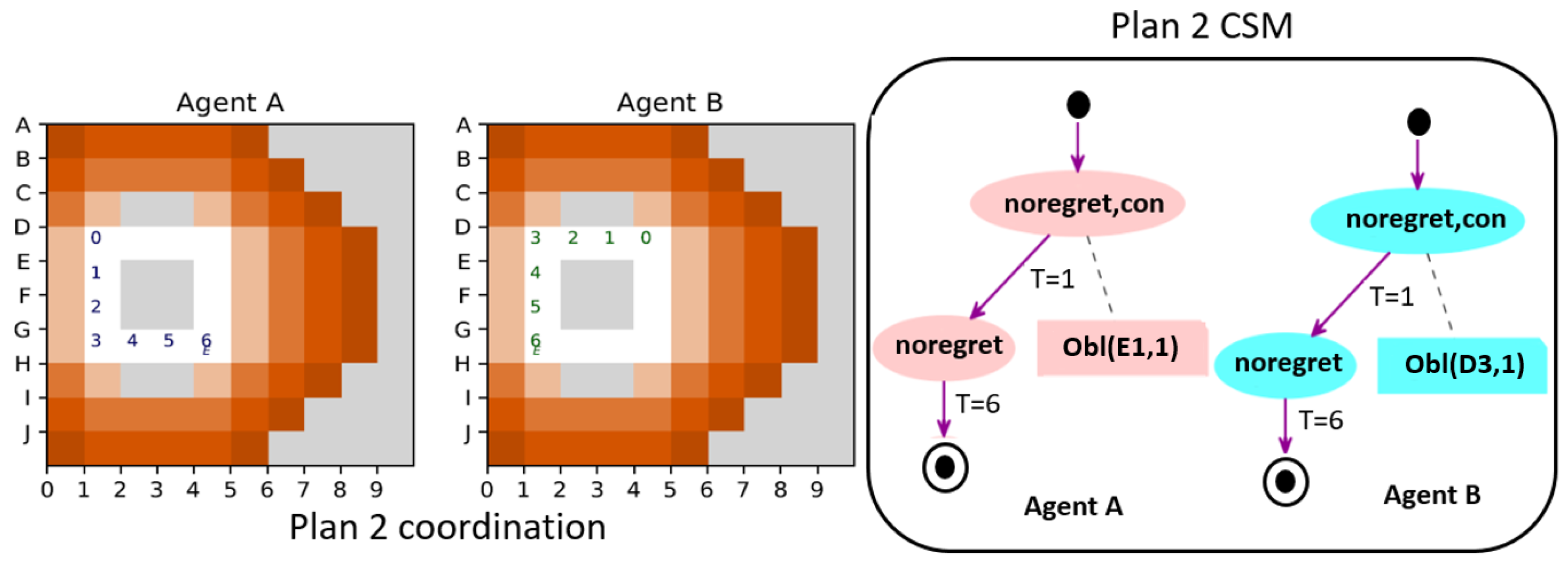

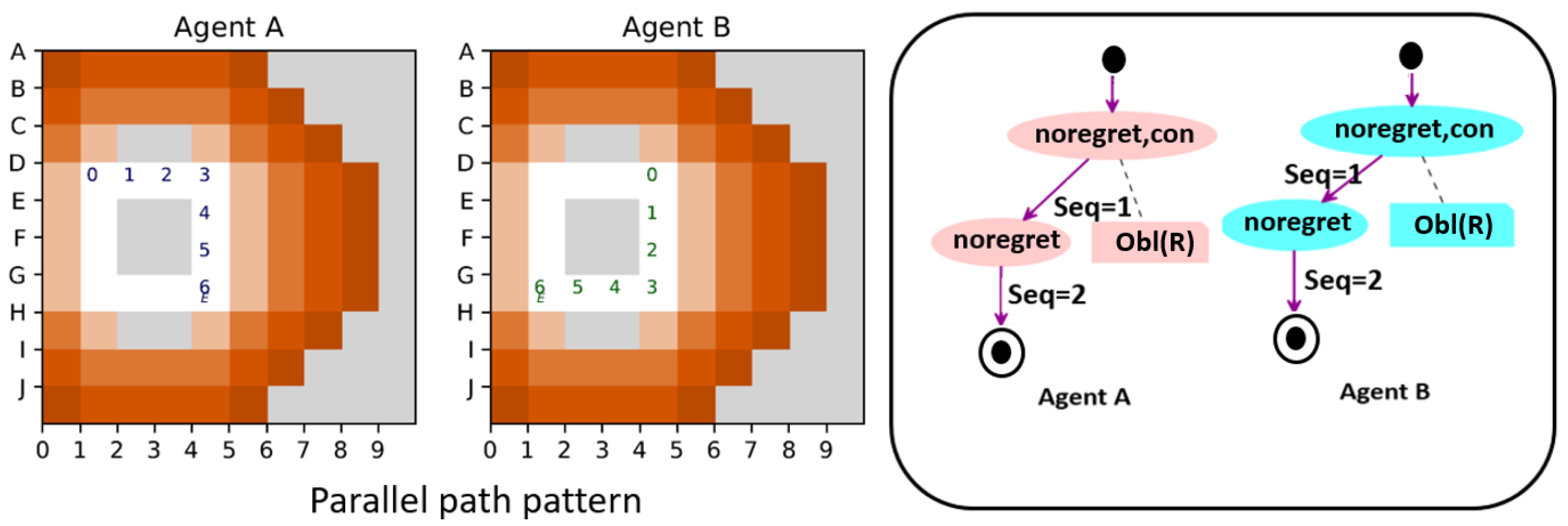

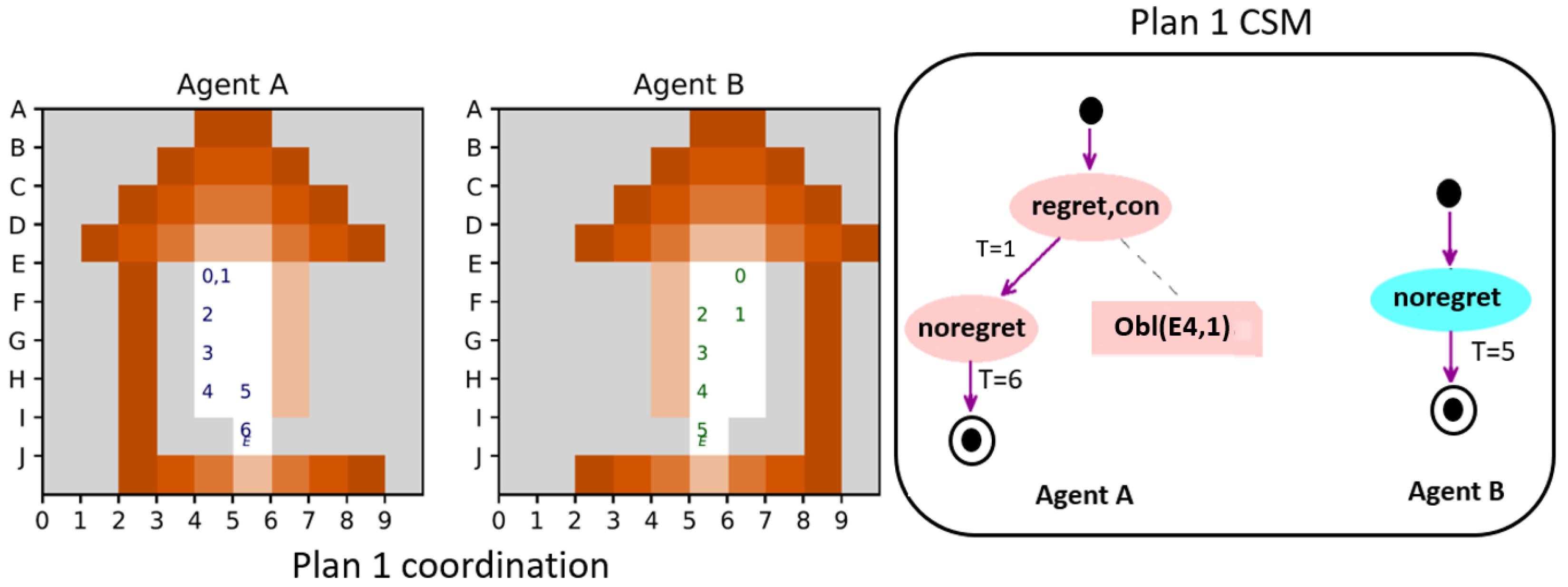

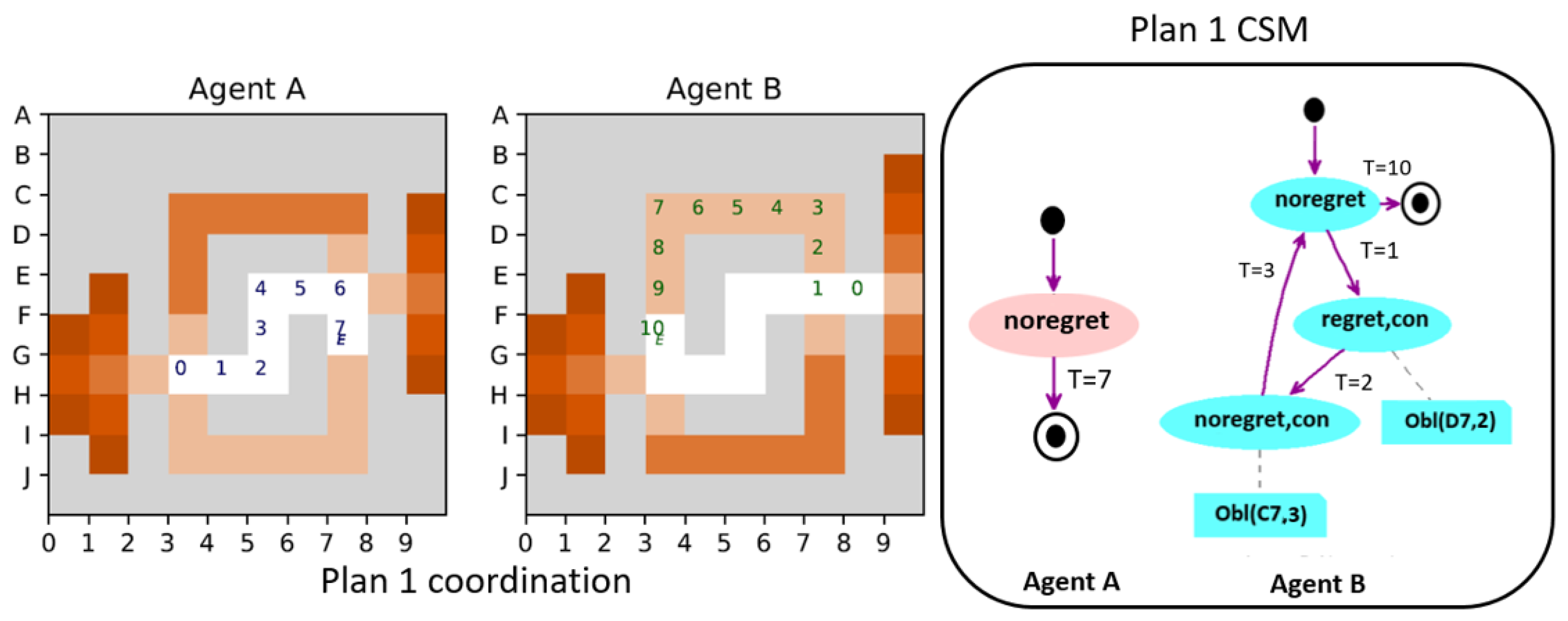

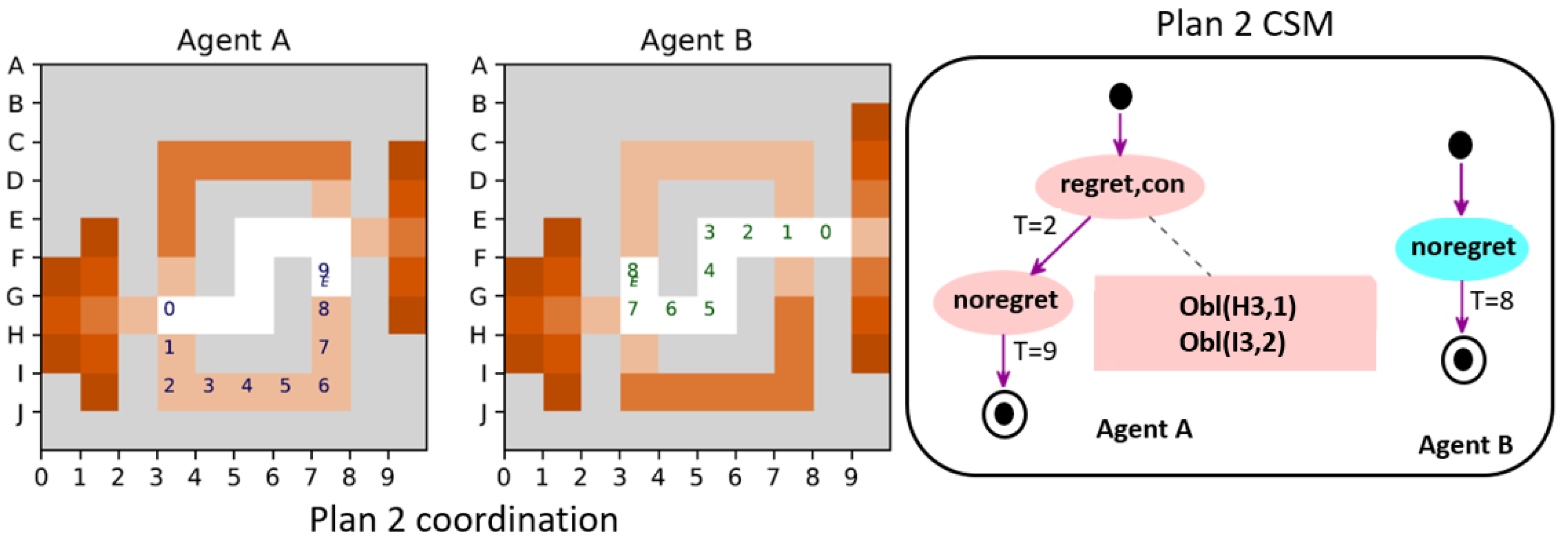

2.5. Coordination State Machines (CSMs)

- 1.

- Whether or not they must incur regret when they move, compared to the optimal action from the current location;

- 2.

- Whether their movements are constrained or unconstrained.

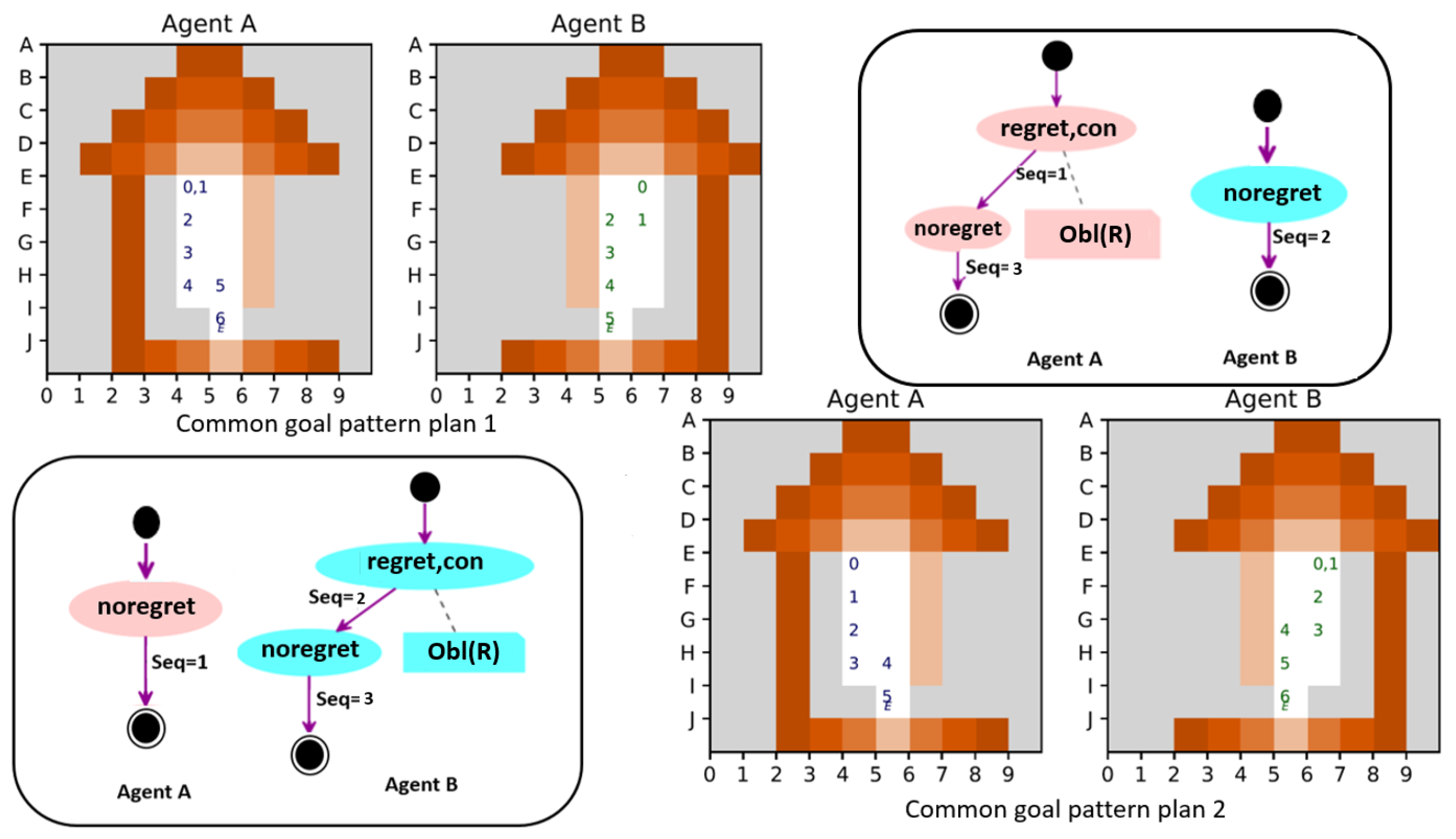

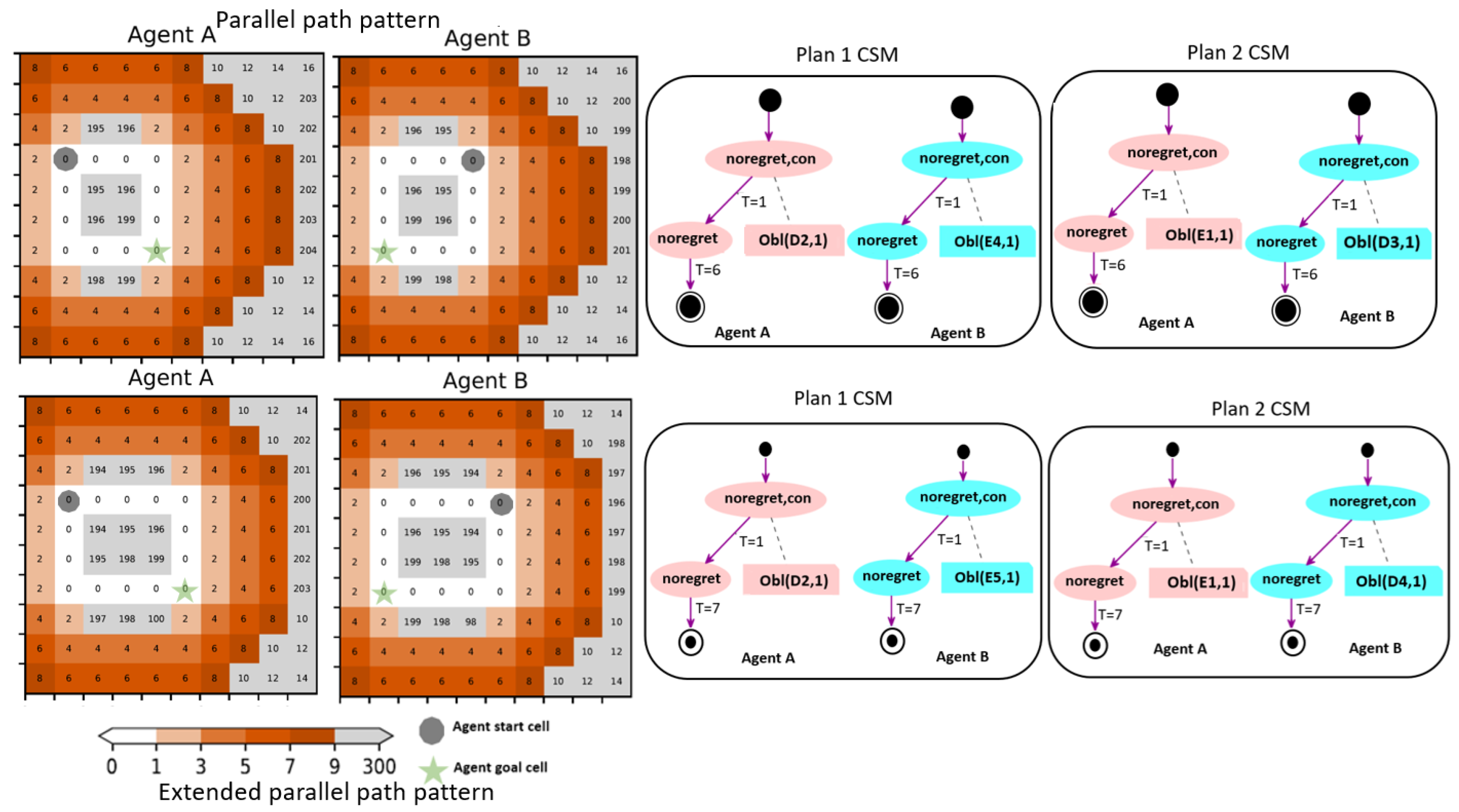

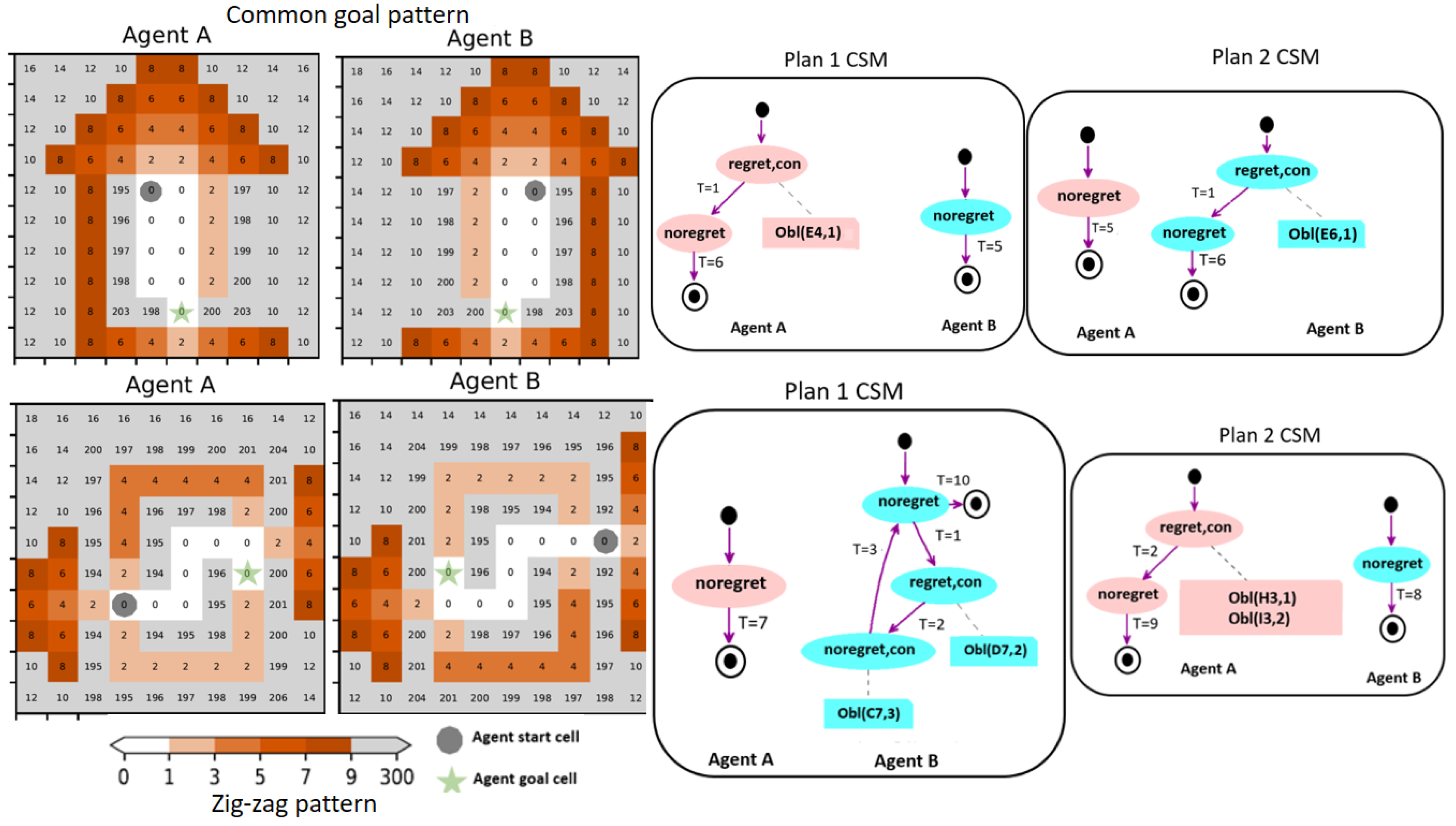

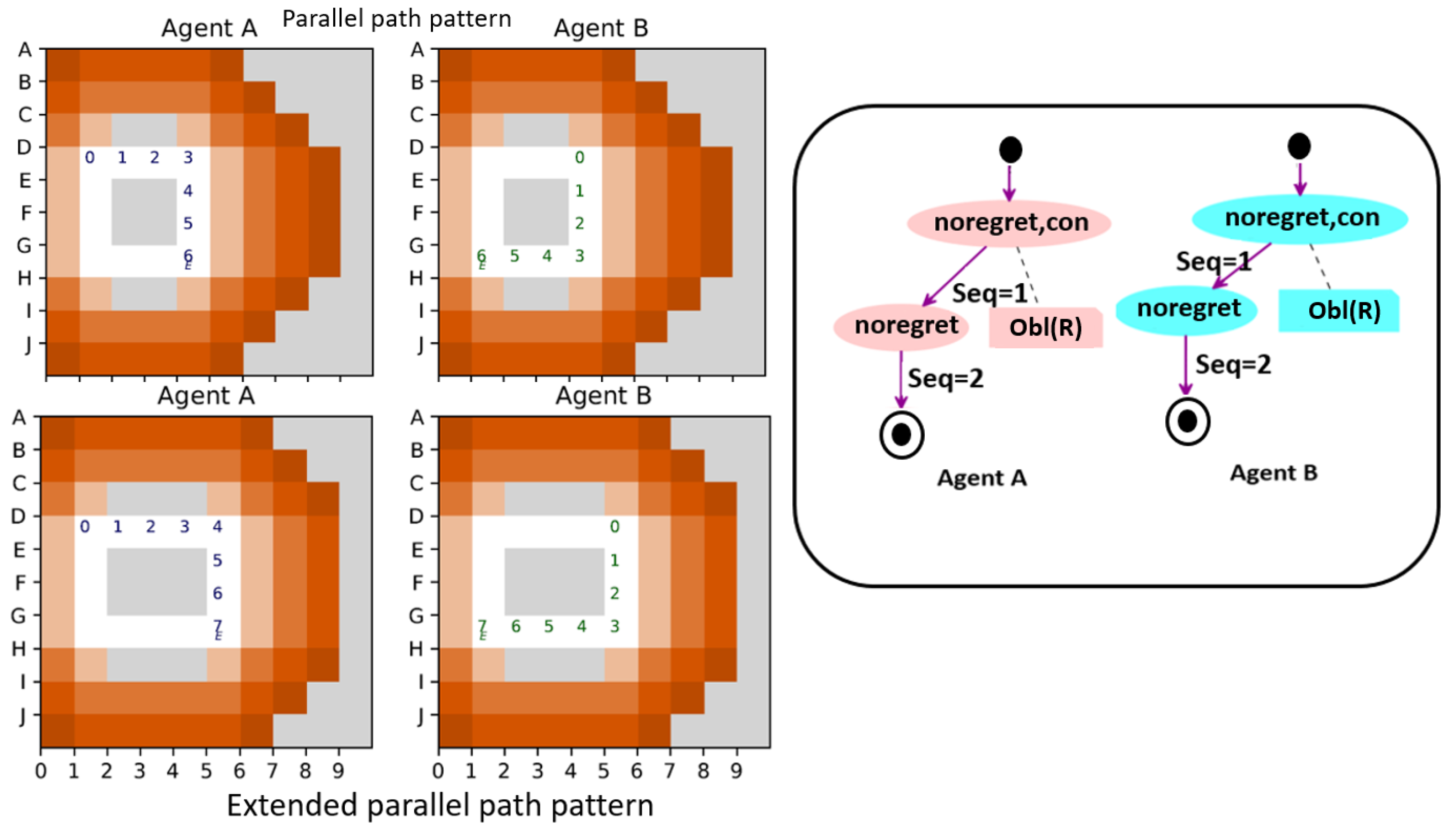

- A set of states: (“noregret ”, “noregret,con”, “regret,con”)

- ⁻

- noregret: when an agent is in this state, it is free to choose an optimal path, i.e., it does not need to incur regret;

- ⁻

- noregret,con”: When an agent is in this state, it does not need to incur regret. However, the coordination requires the agent to constrain its movements;

- ⁻

- “regret,con”: When an agent is in this state, the coordination requires the agent to incur regret by choosing a suboptimal action. The agent’s movement choices are also constrained.

- Movement constraints are associated with the states “noregret,con” and “regret,con”. These states are associated with sets of obligation and/or prohibition constraints. The syntax for these two types of constraints are shown in Table 1;

- Transitions are identified by the timesteps: .

| Algorithm 1 Function to construct a coordination state machine. |

| 1: Input: Value matrices: and , a list of actions A and LCP paths: and |

| 2: |

| 3: function ConstructCSM() |

| 4: movement_map_A←ComputeMoveOptions ▹ Compute move options for agent A |

| 5: movement_map_B←ComputeMoveOptions ▹ Compute move options for agent B |

| 6: sm_A←ConstructSM(movement_map_A) ▹ Construct an SM for agent A |

| 7: sm_B←ConstructSM(movement_map_B) ▹ Construct an SM for agent B |

| 8: (sm_A,sm_B) |

| 9: return csm |

| 10: |

| 11: function ComputeMoveOptions() |

| 12: movement_map |

| 13: for each cell C in lcp do |

| 14: ▹ Find optimal moves from C |

| 15: the next cell in lcp after C, or None if C is the last cell |

| 16: movement_map[C] |

| 17: return movement_map |

| 18: |

| 19: function ConstructSM(movement_map) |

| 20: sm←InitializeStateMachine ▹ Create a state machine |

| 21: path_states |

| 22: for tstep, (_, desc) in enumerate(movement_map.items()) do |

| 23: |

| 24: |

| 25: if then ▹ Compute state for each move |

| 26: |

| 27: else if then |

| 28: |

| 29: else if then |

| 30: |

| 31: else |

| 32: |

| 33: Add(state, path_states) |

| 34: |

| 35: if length(options) >1 then ▹ Compute constraints |

| 36: constraint ← (“obl”,desc[1],tstep + 1) |

| 37: if state ∈ keys(constraints) then |

| 38: constraints[state].add(constraint) |

| 39: else |

| 40: |

| 41: SetInitialState(sm, path_states[0]) ▹ Set the SM’s initial state |

| 42: |

| 43: for i←0 to len(path_states) −1 do ▹ Compute transitions |

| 44: current←path_states[i] |

| 45: next_state←current_states[i+1] |

| 46: if current≠next_state then |

| 47: ▹ This represents a step of the LCP |

| 48: transitions.append({‘source’:current,‘target’:next_state,‘events’:[event]}) |

| 49: SetTransitions() |

| 50: return sm |

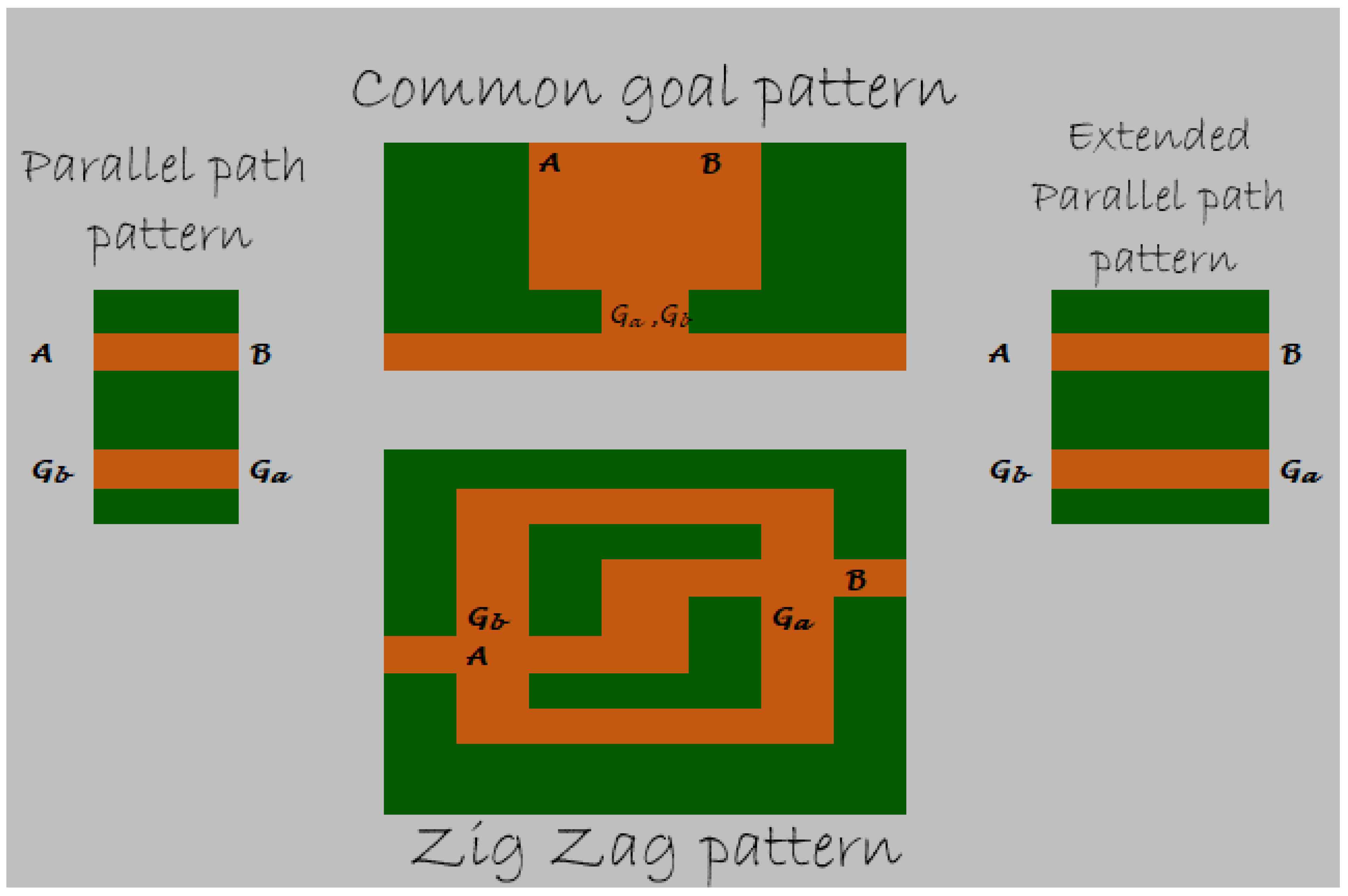

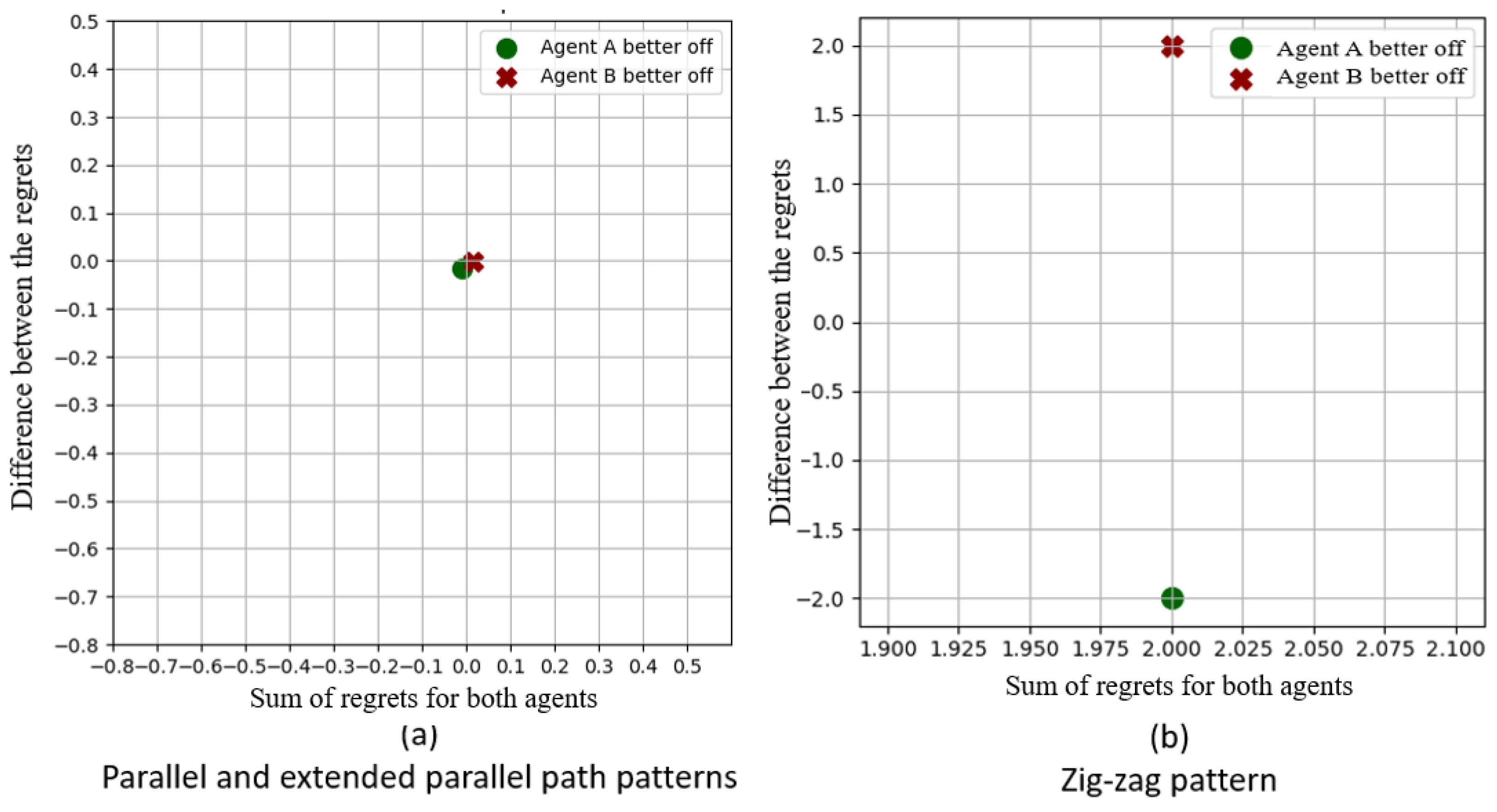

2.5.1. Parallel Path Pattern Plan

2.5.2. Cross-Scenario CSMs

2.6. Examples

2.6.1. Extended Parallel Path Pattern

2.6.2. Common Goal Pattern

2.6.3. Zigzag Pattern

2.7. Executing a CSM

- Timestep 1: The agent is in the state ’noregret,con’ at position (3, 1). A constraint is present requiring the agent to reach position (4, 1). The agent chooses to move to position (4, 1);

- Timestep 2: The agent is now in the state ’noregret’ at position (4, 1). No constraints are present. The agent is free to move optimally and chooses to move to position (5, 1);

- Timestep 3: The agent is still in the state ’noregret’ at position (5, 1). No constraints are present. The agent is free to move optimally and chooses to move to position (6, 1);

- Timestep 4: The agent is still in the state ’noregret’ at position (6, 1). No constraints are present. The agent is free to move optimally and chooses to move to position (6, 2);

- Timestep 5: The agent is still in the state ’noregret’ at position (6, 2). No constraints are present. The agent is free to move optimally and chooses to move to position (6, 3);

- Timestep 6: The agent is still in the state ’noregret’ at position (6, 3). No constraints are present. The agent chooses to move to position (6, 4), successfully reaching its goal.

3. Results

4. Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| 1 | |

| 2 | This paper does not propose a specific mechanism for goal inference, but this is an active area of research [21]. |

| 3 | We currently consider only two-agent scenarios. |

| 4 | We leave consideration of an agent reneging on an agreed plan as a topic for future work. |

References

- Sumner, W. Folkways: A Study of the Sociological Importance of Usages, Manners, Customs, Mores, and Morals; Good Press: Addison, TX, USA, 1906. [Google Scholar]

- Kubler, D. On the Regulation of Social Norms. J. Law Econ. Organ. 2001, 17, 449–476. [Google Scholar] [CrossRef]

- Sunstein, C.R. Social Norms and Social Roles. Columbia Law Rev. 1996, 96, 903–968. [Google Scholar] [CrossRef]

- Finnemore, M.; Sikkink, K. International Norm Dynamics and Political Change. Int. Organ. 1998, 52, 887–917. [Google Scholar] [CrossRef]

- Anavankot, A.M.; Cranefield, S.; Savarimuthu, B.T.R. Towards Norm Entrepreneurship in Agent Societies. In International Conference on Practical Applications of Agents and Multi-Agent Systems; Springer: Berlin/Heidelberg, Germany, 2023. [Google Scholar] [CrossRef]

- Opp, K.D. When Do Norms Emerge by Human Design and When by the Unintended Consequences of Human Action?: The Example of the No-smoking Norm. Ration. Soc. 2002, 14, 131–158. [Google Scholar] [CrossRef]

- Centola, D.; Baronchelli, A. The Spontaneous Emergence of Conventions: An Experimental Study of Cultural Evolution. Proc. Natl. Acad. Sci. USA 2015, 112, 1989–1994. [Google Scholar] [CrossRef] [PubMed]

- Boella, G.; Torre, L.; Verhagen, H. Introduction to the Special Issue on Normative Multiagent Systems. Auton. Agents -Multi-Agent Syst. 2008, 17, 1–10. [Google Scholar] [CrossRef]

- Shoham, Y.; Tennenholtz, M. On the Synthesis of Useful Social Laws for Artificial Agent Societies. In Proceedings of the Tenth National Conference on Artificial intelligence (AAAI’92), San Jose, CA, USA, 12–16 July 1992; pp. 276–281. [Google Scholar]

- Morales, J.; López-Sánchez, M.; Rodríguez-Aguilar, J.; Wooldridge, M.; Vasconcelos, W. Automated Synthesis of Normative Systems. In Proceedings of the 12th International Conference on Autonomous Agents and Multiagent Systems, Saint Paul, MN, USA, 6–10 May 2013. [Google Scholar]

- Morales, J.; López-Sánchez, M.; Rodríguez-Aguilar, J.; Wooldridge, M.; Vasconcelos, W. Minimality and simplicity in the on-line automated synthesis of normative systems. In Proceedings of the 13th International Conference on Autonomous Agents and Multiagent Systems, Paris, France, 5–9 May 2014; pp. 109–116. [Google Scholar]

- Morales, J.; López-Sánchez, M.; Rodríguez-Aguilar, J.A.; Wooldridge, M.J.; Vasconcelos, W.W. Synthesising Liberal Normative Systems. In Proceedings of the Proceedings of the 2015 International Conference on Autonomous Agents and Multiagent Systems, Istanbul, Turkey, 4–8 May 2015; ACM: New York, NY, USA, 2015; pp. 433–441. [Google Scholar]

- Morales, J.; Wooldridge, M.; Rodríguez-Aguilar, J.; López-Sánchez, M. Synthesising Evolutionarily Stable Normative Systems. Auton. Agents -Multi-Agent Syst. 2018, 32, 635–671. [Google Scholar] [CrossRef] [PubMed]

- Alechina, N.; De Giacomo, G.; Logan, B.; Perelli, G. Automatic Synthesis of Dynamic Norms for Multi-Agent Systems. In Proceedings of the 19th International Conference on Principles of Knowledge Representation and Reasoning, Haifa, Israel, 31 July–5 August 2022; Volume 8, pp. 12–21. [Google Scholar] [CrossRef]

- Riad, M.; Golpayegani, F. Run-Time Norms Synthesis in Multi-objective Multi-agent Systems. In Coordination, Organizations, Institutions, Norms, and Ethics for Governance of Multi-Agent Systems XIV; Theodorou, A., Nieves, J.C., Vos, M.D., Eds.; Springer: Berlin/Heidelberg, Germany, 2021; Volume 13239, Lecture Notes in Computer Science; pp. 78–93. [Google Scholar] [CrossRef]

- Riad, M.; Golpayegani, F. A Normative Multi-objective Based Intersection Collision Avoidance System. In Smart Innovation, Systems and Technologies; Springer: Singapore, 2022; pp. 289–300. [Google Scholar] [CrossRef]

- Riad, M.; Ghanadbashi, S.; Golpayegani, F. Run-Time Norms Synthesis in Dynamic Environments with Changing Objectives. In Proceedings of the Artificial Intelligence and Cognitive Science—30th Irish Conference, Munster, Ireland, 8–9 December 2022; Revised Selected Papers. Longo, L., O’Reilly, R., Eds.; Springer: Berlin/Heidelberg, Germany, 2022; Volume 1662, Communications in Computer and Information Science. pp. 462–474. [Google Scholar] [CrossRef]

- Morris-Martin, A.; Vos, M.D.; Padget, J.A.; Ray, O. Agent-directed Runtime Norm Synthesis. In Proceedings of the Proceedings of the 2023 International Conference on Autonomous Agents and Multiagent Systems, London, UK, 29 May–2 June 2023. [Google Scholar] [CrossRef]

- Morris-Martin, A.; De Vos, M.; Padget, J. A Norm Emergence Framework for Normative MAS–Position Paper. In Proceedings of the Coordination, Organizations, Institutions, Norms, and Ethics for Governance of Multi-Agent Systems XIII: International Workshops COIN 2017 and COINE 2020, Sao Paulo, Brazil, 8–9 May 2017; Virtual Event, 9 May 2020; Revised Selected Papers; Springer: Berlin/Heidelberg, Germany, 2021; pp. 156–174. [Google Scholar]

- Morris-Martin, A.; Vos, M.D.; Padget, J.A. Norm Emergence in Multiagent Systems: A Viewpoint Paper. In Proceedings of the 19th International Conference on Autonomous Agents and Multiagent Systems, AAMAS’20, Auckland, New Zealand, 9–13 May 2020. [Google Scholar] [CrossRef]

- Aldewereld, H.; Grossi, D.; Vázquez-Salceda, J.; Dignum, F. Designing Normative Behaviour Via Landmarks. In International Conference on Autonomous Agents and Multiagent Systems; Springer: Berlin/Heidelberg, Germany, 2005; number 3913 in Lecture Notes in Computer Science; pp. 157–169. [Google Scholar] [CrossRef]

- Ben-Or, M.; Linial, N. Collective coin flipping, robust voting schemes and minima of Banzhaf values. In Proceedings of the 26th Annual Symposium on Foundations of Computer Science (sfcs 1985), Portland, OR, USA, 21–23 October 1985; pp. 408–416. [Google Scholar] [CrossRef]

- Savarimuthu, B.T.R.; Cranefield, S. Norm creation, spreading and emergence: A survey of simulation models of norms in multi-agent systems. Multiagent Grid Syst. 2011, 7, 21–54. [Google Scholar] [CrossRef]

- Balke, T.; Cranefield, S.; di Tosto, G.; Mahmoud, S.; Paolucci, M.; Savarimuthu, B.T.R.; Verhagen, H. Simulation and NorMAS. In Normative Multi-Agent Systems; Andrighetto, G., Governatori, G., Noriega, P., van der Torre, L.W.N., Eds.; Dagstuhl Follow-Ups, Schloss Dagstuhl—Leibniz-Zentrum für Informatik: Hatfield, UK, 2013; pp. 171–189. [Google Scholar] [CrossRef]

- Kallenberg, L. Finite State and Action MDPS. In Handbook of Markov Decision Processes: Methods and Applications; Feinberg, E.A., Shwartz, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2002; pp. 21–87. [Google Scholar] [CrossRef]

- Mandow, L.; Pérez de la Cruz, J.L. Multiobjective A* Search with Consistent Heuristics. J. ACM 2010, 57, 1–25. [Google Scholar] [CrossRef]

| Notation | Description | Example |

|---|---|---|

| ||

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Anavankot, A.M.; Cranefield, S.; Savarimuthu, B.T.R. NEMAS: Norm Entrepreneurship in Multi-Agent Systems. Systems 2024, 12, 187. https://doi.org/10.3390/systems12060187

Anavankot AM, Cranefield S, Savarimuthu BTR. NEMAS: Norm Entrepreneurship in Multi-Agent Systems. Systems. 2024; 12(6):187. https://doi.org/10.3390/systems12060187

Chicago/Turabian StyleAnavankot, Amritha Menon, Stephen Cranefield, and Bastin Tony Roy Savarimuthu. 2024. "NEMAS: Norm Entrepreneurship in Multi-Agent Systems" Systems 12, no. 6: 187. https://doi.org/10.3390/systems12060187

APA StyleAnavankot, A. M., Cranefield, S., & Savarimuthu, B. T. R. (2024). NEMAS: Norm Entrepreneurship in Multi-Agent Systems. Systems, 12(6), 187. https://doi.org/10.3390/systems12060187