InSight: An FPGA-Based Neuromorphic Computing System for Deep Neural Networks

Abstract

1. Introduction

- We implement a complete, fully functional, non-von Neumann system that can execute modern deep CNNs and compare it with existing computing systems, including existing neuromorphic systems, FPGAs, GPUs, and CPUs. The comparison shows that the neuromorphic approach is worth exploring despite the progress of conventional specialized systems.

- The dataflow architecture enabled by the one-to-one mapping between operations and compute units has a fundamental scalability issue, although it does not require any array-type memory access. This work increases the capacity by adopting model compression and word-serial structures for 2D convolution.

- We demonstrate that neural networks can be implemented efficiently in a neuromorphic fashion without crossbars for synapses. This is possible because dense neural networks can be converted into sparse neural networks.

2. Background

2.1. A Neuron Model

2.2. Neural Networks

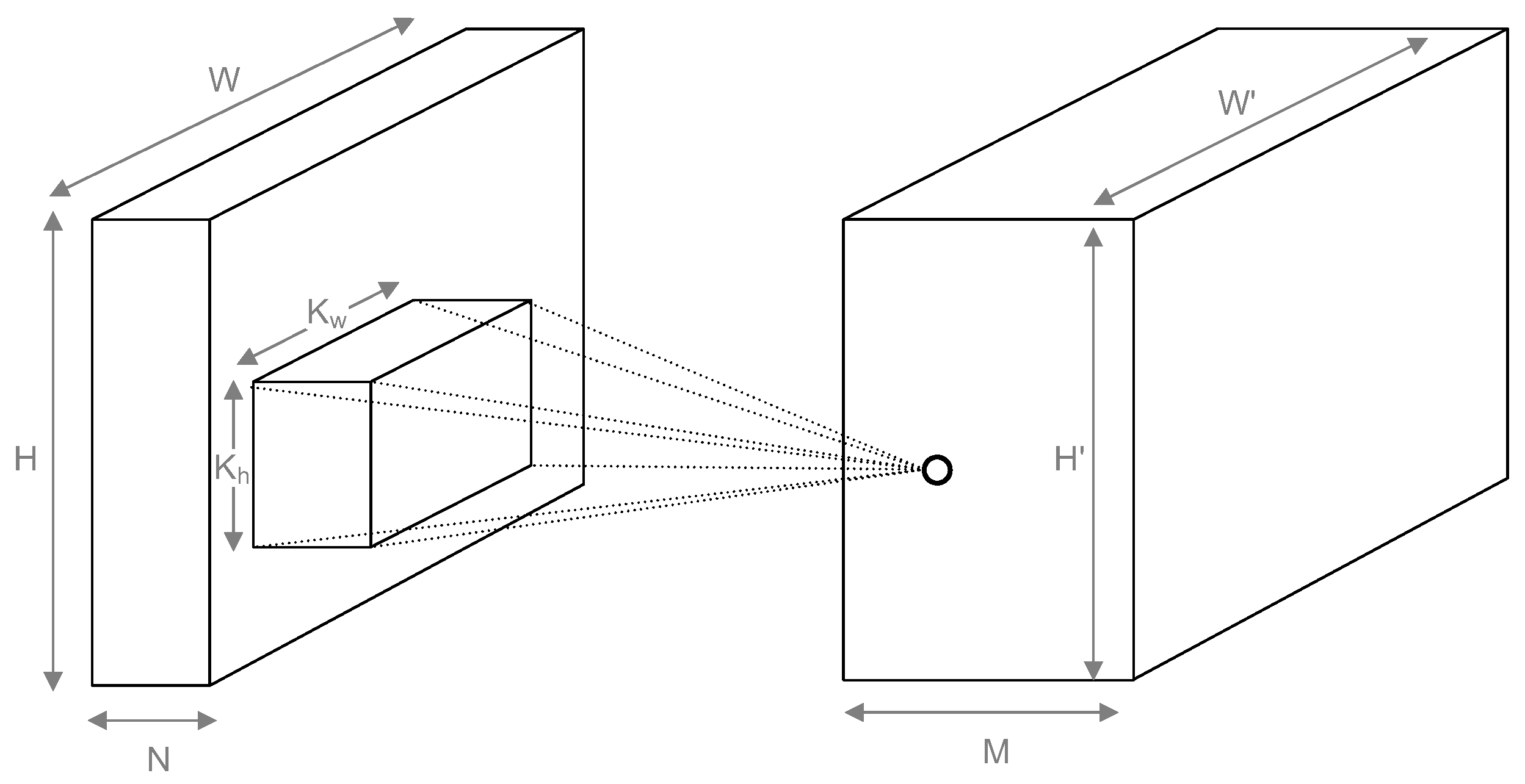

2.3. Convolutional Neural Networks

2.4. Neuromorphic Architectures

3. Neural Network Compiler

3.1. Model Compression (Simplification)

3.2. Time Delay Neural Net Conversion

3.3. Overall Procedure

4. Neuromorphic Hardware

- Synapse: consists of a -bit register to store the weight and a bit-serial multiplier, which mainly comprises full adders and a register to store intermediate results. For minimum area, we employ the semi-systolic multiplier [25], which approximately requires AND gates, full adders, and flip-flops.

- Neuron: a neuron with k inputs comprises a k-input bit-serial adder that sums the outputs of the k synapses connected to it. We prepare neurons with various k. Adding a bias and evaluating the activation function correspond to functions of biological neurons, so it would be natural for the neuron to include units for them. However, many neurons in our system do not require those functions, so we implement them in separate modules.

- Delay element: is realized by a 1-bit stage shift register.

- Bias: consists of an -bit register to store the bias value and a full adder, whose inputs are the module input, a selected bit of the register, and the carry-out of the previous cycle.

- ReLU: zeros out negative activations. Since the sign bit comes last, this module should have cycle latency at minimum.

- Max: compares k input activations by using bit-serial subtractors. If k = 2, one bit-serial subtractor is used. The comparison is done after the MSB of the activations is received, so it should also have cycle latency at minimum. If k > 2, we can create a tree of two-input max modules, but this increases the latency. For minimum latency, we can perform the comparisons in parallel.

- Pool: performs subsampling, handles borders, and pads zeros. It keeps track of the spatial coordinates of the current input activation in the activation map and invalidates the output activations depending on the border handling and the stride. It can also replace invalidated activations by valid zeros for zero-padding.
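
The bit-serial behaviour of the Bias, ReLU, and Max modules described above can be modelled in software. The following is a minimal sketch, not the authors' circuit: it assumes words are streamed least-significant bit first in two's complement (consistent with the sign bit arriving last), uses a hypothetical word length `WIDTH = 8`, and all function names are illustrative. The sign-extension cycle in `bit_serial_max2` is likewise an assumption made here to keep the subtraction from overflowing.

```python
WIDTH = 8  # hypothetical word length; the source leaves the bit width symbolic


def to_bits(value, width=WIDTH):
    """Encode an integer as two's complement, least-significant bit first."""
    return [(value >> i) & 1 for i in range(width)]


def from_bits(bits):
    """Decode an LSB-first two's-complement bit list back to an integer."""
    unsigned = sum(bit << i for i, bit in enumerate(bits))
    return unsigned - (1 << len(bits)) if bits[-1] else unsigned


def bit_serial_add(a_bits, b_bits):
    """One full adder plus a carry flip-flop, clocked once per bit."""
    carry = 0
    out = []
    for a, b in zip(a_bits, b_bits):
        out.append(a ^ b ^ carry)
        carry = (a & b) | (carry & (a ^ b))
    return out  # the final carry is dropped, so the sum wraps modulo 2**width


def bit_serial_relu(bits):
    """Hold the word until its sign bit (the last bit) arrives, then gate it."""
    return [0] * len(bits) if bits[-1] else list(bits)


def bit_serial_max2(a_bits, b_bits):
    """Two-input max: compute a - b serially and select on the result's sign."""
    carry = 1  # a - b = a + ~b + 1
    diff_sign = 0
    # Assumed extra cycle with sign-extended inputs so a - b cannot overflow.
    for a, b in zip(a_bits + a_bits[-1:], b_bits + b_bits[-1:]):
        nb = b ^ 1
        diff_sign = a ^ nb ^ carry
        carry = (a & nb) | (carry & (a ^ nb))
    return list(b_bits) if diff_sign else list(a_bits)


biased = from_bits(bit_serial_add(to_bits(5), to_bits(-3)))
rectified = from_bits(bit_serial_relu(to_bits(-7)))
larger = from_bits(bit_serial_max2(to_bits(3), to_bits(5)))
print(biased, rectified, larger)
```

Neither `bit_serial_relu` nor `bit_serial_max2` can emit its first output bit until the last input bit has been seen, which is why both modules add latency in a bit-serial pipeline.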

5. Experimental Results

5.1. Benchmarks

5.2. Network Simplification

5.3. Bit-Width Determination

5.4. Comparison Baseline

5.5. Results

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Ciresan, D.; Meier, U.; Schmidhuber, J. Multi-column deep neural networks for image classification. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012; pp. 3642–3649.

2. Sermanet, P.; Eigen, D.; Zhang, X.; Mathieu, M.; Fergus, R.; LeCun, Y. OverFeat: Integrated recognition, localization and detection using convolutional networks. arXiv 2013, arXiv:1312.6229.

3. Chetlur, S.; Woolley, C.; Vandermersch, P.; Cohen, J.; Tran, J.; Catanzaro, B.; Shelhamer, E. cuDNN: Efficient primitives for deep learning. arXiv 2014, arXiv:1410.0759.

4. Zhang, C.; Li, P.; Sun, G.; Guan, Y.; Xiao, B.; Cong, J. Optimizing FPGA-based Accelerator Design for Deep Convolutional Neural Networks. In Proceedings of the 2015 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 22–24 February 2015.

5. Alwani, M.; Chen, H.; Ferdman, M.; Milder, P. Fused-layer CNN accelerators. In Proceedings of the 2016 49th Annual IEEE/ACM International Symposium on Microarchitecture (MICRO), Taipei, Taiwan, 15–19 October 2016; pp. 1–12.

6. Han, S.; Liu, X.; Mao, H.; Pu, J.; Pedram, A.; Horowitz, M.A.; Dally, W.J. EIE: Efficient inference engine on compressed deep neural network. In Proceedings of the 43rd International Symposium on Computer Architecture, Seoul, Korea, 18–22 June 2016; pp. 243–254.

7. Shin, D.; Lee, J.; Lee, J.; Yoo, H.J. 14.2 DNPU: An 8.1 TOPS/W reconfigurable CNN-RNN processor for general-purpose deep neural networks. In Proceedings of the 2017 IEEE International Solid-State Circuits Conference (ISSCC), San Francisco, CA, USA, 5–9 February 2017; pp. 240–241.

8. Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: Large-scale machine learning on heterogeneous distributed systems. arXiv 2016, arXiv:1603.04467.

9. Miyashita, D.; Lee, E.H.; Murmann, B. Convolutional neural networks using logarithmic data representation. arXiv 2016, arXiv:1603.01025.

10. Jo, S.H.; Chang, T.; Ebong, I.; Bhadviya, B.B.; Mazumder, P.; Lu, W. Nanoscale memristor device as synapse in neuromorphic systems. Nano Lett. 2010, 10, 1297–1301.

11. Schemmel, J.; Bruderle, D.; Grubl, A.; Hock, M.; Meier, K.; Millner, S. A wafer-scale neuromorphic hardware system for large-scale neural modeling. In Proceedings of the 2010 IEEE International Symposium on Circuits and Systems (ISCAS), Paris, France, 30 May–2 June 2010; pp. 1947–1950.

12. Benjamin, B.V.; Gao, P.; McQuinn, E.; Choudhary, S.; Chandrasekaran, A.R.; Bussat, J.M.; Alvarez-Icaza, R.; Arthur, J.V.; Merolla, P.A.; Boahen, K. Neurogrid: A mixed-analog-digital multichip system for large-scale neural simulations. Proc. IEEE 2014, 102, 699–716.

13. Merolla, P.A.; Arthur, J.V.; Alvarez-Icaza, R.; Cassidy, A.S.; Sawada, J.; Akopyan, F.; Jackson, B.L.; Imam, N.; Guo, C.; Nakamura, Y.; et al. A million spiking-neuron integrated circuit with a scalable communication network and interface. Science 2014, 345, 668–673.

14. Cassidy, A.S.; Alvarez-Icaza, R.; Akopyan, F.; Sawada, J.; Arthur, J.V.; Merolla, P.A.; Datta, P.; Tallada, M.G.; Taba, B.; Andreopoulos, A.; et al. Real-time scalable cortical computing at 46 giga-synaptic OPS/watt with ∼100× Speed Up in Time-to-Solution and ∼100,000× Reduction in Energy-to-Solution. In Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis, New Orleans, LA, USA, 16–21 November 2014; pp. 27–38.

15. Cassidy, A.S.; Merolla, P.; Arthur, J.V.; Esser, S.K.; Jackson, B.; Alvarez-Icaza, R.; Datta, P.; Sawada, J.; Wong, T.M.; Feldman, V.; et al. Cognitive Computing Building Block: A Versatile and Efficient Digital Neuron Model for Neurosynaptic Cores. In Proceedings of the 2013 International Joint Conference on Neural Networks (IJCNN), Dallas, TX, USA, 4–9 August 2013; pp. 1–10.

16. Arthur, J.V.; Merolla, P.A.; Akopyan, F.; Alvarez, R.; Cassidy, A.; Chandra, S.; Esser, S.K.; Imam, N.; Risk, W.; Rubin, D.B.; et al. Building block of a programmable neuromorphic substrate: A digital neurosynaptic core. In Proceedings of the 2012 International Joint Conference on Neural Networks (IJCNN), Brisbane, Australia, 10–15 June 2012; pp. 1–8.

17. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

18. Han, S.; Mao, H.; Dally, W.J. Deep compression: Compressing deep neural networks with pruning, trained quantization and Huffman coding. arXiv 2015, arXiv:1510.00149.

19. Gong, Y.; Liu, L.; Yang, M.; Bourdev, L. Compressing deep convolutional networks using vector quantization. arXiv 2014, arXiv:1412.6115.

20. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

21. Chung, J.; Shin, T. Simplifying Deep Neural Networks for Neuromorphic Architectures. In Proceedings of the 2016 53rd ACM/EDAC/IEEE Design Automation Conference (DAC), Austin, TX, USA, 5–9 June 2016.

22. Bosi, B.; Bois, G.; Savaria, Y. Reconfigurable pipelined 2-D convolvers for fast digital signal processing. IEEE Trans. Very Large Scale Integr. 1999, 7, 299–308.

23. Cmar, R.; Rijnders, L.; Schaumont, P.; Vernalde, S.; Bolsens, I. A methodology and design environment for DSP ASIC fixed point refinement. In Proceedings of the Conference on Design, Automation and Test in Europe, Munich, Germany, 9–12 March 1999; ACM: New York, NY, USA, 1999; p. 56.

24. Wen, W.; Wu, C.R.; Hu, X.; Liu, B.; Ho, T.Y.; Li, X.; Chen, Y. An EDA framework for large scale hybrid neuromorphic computing systems. In Proceedings of the 52nd Annual Design Automation Conference, San Francisco, CA, USA, 8–12 June 2015; ACM: New York, NY, USA, 2015; p. 12.

25. Agrawal, E. Systolic and Semi-Systolic Multiplier. MIT Int. J. Electron. Commun. Eng. 2013, 3, 90–93.

26. Esser, S.K.; Appuswamy, R.; Merolla, P.; Arthur, J.V.; Modha, D.S. Backpropagation for energy-efficient neuromorphic computing. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, Canada, 7–12 December 2015; pp. 1117–1125.

27. Neil, D.; Liu, S.C. Minitaur, an event-driven FPGA-based spiking network accelerator. IEEE Trans. Very Large Scale Integr. Syst. 2014, 22, 2621–2628.

28. Esser, S.K.; Merolla, P.A.; Arthur, J.V.; Cassidy, A.S.; Appuswamy, R.; Andreopoulos, A.; Berg, D.J.; McKinstry, J.L.; Melano, T.; Barch, D.R.; et al. Convolutional Networks for Fast, Energy-Efficient Neuromorphic Computing. arXiv 2016, arXiv:1603.08270.

| Original Config. | Original Params | Original SOPs | Simplified Config. | Simplified Params | Simplified SOPs |
|---|---|---|---|---|---|
| conv3-64 | 1.73 K | 1.56 M | conv1-3 | 9 | 9.22 K |
| | | | conv3-27 | 186 | 167 K |
| | | | conv1-64 | 351 | 316 K |
| Subtotal | 1.73 K | 1.56 M | Subtotal | 0.55 K | 493 K |
| conv3-64 | 36.9 K | 28.9 M | conv1-64 | 719 | 647 K |
| | | | conv3-64 | 705 | 553 K |
| | | | conv1-64 | 847 | 664 K |
| Subtotal | 36.9 K | 28.9 M | Subtotal | 2.27 K | 1.86 M |
| maxpool | | | | | |
| conv3-128 | 73.7 K | 8.92 M | conv1-64 | 1263 | 213 K |
| | | | conv3-128 | 899 | 109 K |
| | | | conv1-128 | 1586 | 192 K |
| Subtotal | 73.7 K | 8.92 M | Subtotal | 3.75 K | 514 K |
| conv3-128 | 147 K | 17.8 M | conv1-128 | 2689 | 325 K |
| | | | conv3-128 | 964 | 117 K |
| | | | conv1-128 | 2717 | 329 K |
| Subtotal | 147 K | 17.8 M | Subtotal | 6.37 K | 771 K |
| maxpool | | | | | |
| FC-256 | 524 K | 524 K | conv1-128 | 1617 | 26 K |
| | | | conv4-256 | 701 | 701 |
| | | | conv1-256 | 1497 | 1497 |
| Subtotal | 524 K | 524 K | Subtotal | 4 K | 28 K |
| FC-10 | 2.56 K | 2.56 K | FC-10 | 1166 | 1166 |
| | | | FC-10 | 84 | 84 |
| Subtotal | 2.56 K | 2.56 K | Subtotal | 1.25 K | 1.25 K |
| Total | | | | | |
| Conv total | 260 K | 57.2 M | Conv total | 13 K | 3.64 M |
| FC total | 527 K | 527 K | FC total | 5 K | 29.3 K |
| Net total | 787 K | 58 M | Net total | 18 K | 3.7 M |
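
The subtotals and totals in the simplified columns follow from summing the per-layer parameter counts. The short check below is only a sketch of that arithmetic: the numbers are copied from the table, while the block labels and variable names are illustrative and not taken from the source.

```python
# Parameter counts of the simplified layers, copied from the table.
# The block labels are illustrative names for the table's row groups.
simplified_params = {
    "conv block 1": [9, 186, 351],
    "conv block 2": [719, 705, 847],
    "conv block 3": [1263, 899, 1586],
    "conv block 4": [2689, 964, 2717],
    "fc block 1": [1617, 701, 1497],   # replaces the original FC-256 layer
    "fc block 2": [1166, 84],          # replaces the original FC-10 layer
}

subtotals = {name: sum(counts) for name, counts in simplified_params.items()}
conv_total = sum(v for name, v in subtotals.items() if name.startswith("conv"))
fc_total = sum(v for name, v in subtotals.items() if name.startswith("fc"))
net_total = conv_total + fc_total

# Reduction relative to the original network (787 K parameters in the table).
param_reduction = 787_000 / net_total

for name, value in subtotals.items():
    print(f"{name}: {value}")
print(f"conv total: {conv_total}, FC total: {fc_total}, net total: {net_total}")
print(f"parameter reduction: {param_reduction:.1f}x")
```

The computed subtotals agree with the rounded table entries (for example, 546 for the first block against the listed 0.55 K), and the network total corresponds to the listed 18 K.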

| Net | Task | Accu | Param | SOPs | Conn | Unit | Delay |
|---|---|---|---|---|---|---|---|
| Original Model | | | | | | | |
| 1F | MNIST | 0.9234 | 7840 | 7840 | 7840 | - | - |
| 2C1F | MNIST | 0.9957 | 0.1 M | 6 M | 6 M | - | - |
| 4C2F | CIFAR | 0.8910 | 0.8 M | 58 M | 58 M | - | - |
| Simplified TDNN Model | | | | | | | |
| 1F | MNIST | 0.9265 | 3000 | 17 K | 3161 | 243 | 7047 |
| 2C1F | MNIST | 0.9938 | 4000 | 0.4 M | 4567 | 1173 | 5020 |
| 4C2F | CIFAR | 0.8358 | 18 K | 3.7 M | 17338 | 3125 | 4274 |

| Net | Task | Accuracy | Area | Power |
|---|---|---|---|---|
| FPGA Implementation | | | | |
| 1F | MNIST | 0.9209 | 29 K | 0.72 W |
| 2C1F | MNIST | 0.9937 | 49 K | 0.54 W |
| 4C2F | CIFAR | 0.8343 | 177 K | 2.14 W |

| | TrueNorth [26] | SpiNNaker [4] | Minitaur [27] | This Work |
|---|---|---|---|---|
| Neural Model | Spiking | Spiking | Spiking | Non-spiking |
| Architecture | Non-von Neumann | Von Neumann | Von Neumann | Non-von Neumann |
| Technology Node | 28 nm | 130 nm | 45 nm | 28 nm |
| Off-chip IO | No | Yes | Yes | No |
| Device | Custom | Custom | Spartan 6 | Artix 7 |
| Accuracy | 99.42% | 95.01% | 92% | 99.37% |
| Power | 0.12 W | 0.3 W | 1.5 W a | 0.59 W |
| Throughput | 1000 FPS | 50 FPS | 7.54 FPS | 6337 FPS |
| Energy | 0.121 mJ | 6 mJ | 200 mJ | 0.093 mJ |
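
The Energy row is consistent with energy per frame being power divided by throughput. The sketch below recomputes it from the Power and Throughput rows; the dictionary layout and function name are illustrative, and small differences from the table (such as 0.120 mJ against the listed 0.121 mJ) presumably come from rounding in the reported power.

```python
# (power in watts, throughput in frames per second), copied from the table.
systems = {
    "TrueNorth": (0.12, 1000.0),
    "SpiNNaker": (0.3, 50.0),
    "Minitaur": (1.5, 7.54),
    "This work": (0.59, 6337.0),
}


def energy_per_frame_mj(power_w, throughput_fps):
    """Energy per classified frame in millijoules: E = P / f."""
    return power_w / throughput_fps * 1e3


energy_mj = {
    name: energy_per_frame_mj(power, fps) for name, (power, fps) in systems.items()
}

for name, value in energy_mj.items():
    print(f"{name}: {value:.3f} mJ per frame")
```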

| | CPU | GPU | GPU | GPU | FPGA Accelerator [4] | FPGA Accelerator [4] | TrueNorth [28] | This Work |
|---|---|---|---|---|---|---|---|---|
| Device Type | E5-2650 | GeForce Titan X | GeForce Titan X | GeForce Titan X | Virtex 7 485T | Virtex 7 485T | Custom | Kintex 7 325T |
| Memory Array | DRAM | DRAM | DRAM | DRAM | DRAM | DRAM | SRAM | None |
| Technology Node | 32 nm | 28 nm | 28 nm | 28 nm | 28 nm | 28 nm | 28 nm | 28 nm |
| Net Model | Original | Original | Original | Simplified | Original | Simplified | - | Simplified |
| Tensor Type | Dense | Dense | Dense | Sparse | Dense | Sparse | - | - |
| SOPs | 58 M | 58 M | 58 M | 3.7 M | 58 M | 3.7 M | - | 3.7 M |
| Batch Size | 1 | 1 | 64 | 1 | 1 | 1 | - | 1 |
| Accuracy | 0.8910 | 0.8910 | 0.8910 | 0.8358 | 0.8910 | 0.8358 | 0.8341 | 0.8343 |
| Power (B) | - | 109 W | 190 W | 109 W | 18.61 W | 18.61 W | - | 4.92 W |
| Power (P + M) | 37.25 W | - | - | - | 14.61 W | 14.61 W | 0.204 W | 2.14 W |
| Throughput | 39 FPS | 1040 FPS | 13,502 FPS | 5824 FPS | 531 FPS | 2974 FPS | 1249 FPS | 4882 FPS |
| Energy (B) | - | 105 mJ | 14 mJ | 18.72 mJ | 35.05 mJ | 6.26 mJ | - | 1 mJ |
| Energy (P + M) | 954 mJ | - | - | - | 27.51 mJ (a) | 4.91 mJ (b) | 0.16 mJ | 0.44 mJ (c) |
| Improvement by simplification and hardware (a/c) | 62.5× | | | | | | | |
| Improvement by hardware (b/c) | 11.2× | | | | | | | |
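
The two improvement factors are ratios of the Energy (P + M) entries labelled (a), (b), and (c). The sketch below reproduces them from the table values. The third ratio, a/b, is not reported in the table; it is derived here only to show how much of the gain the FPGA accelerator obtains from simplification alone, and the variable names are illustrative.

```python
# Energy (P + M) per frame in millijoules, copied from the table.
energy_a_mj = 27.51  # (a) FPGA accelerator, original dense model
energy_b_mj = 4.91   # (b) FPGA accelerator, simplified sparse model
energy_c_mj = 0.44   # (c) this work, simplified model

gain_simplification_and_hardware = energy_a_mj / energy_c_mj  # a/c
gain_hardware = energy_b_mj / energy_c_mj                     # b/c
gain_simplification_only = energy_a_mj / energy_b_mj          # a/b, derived here

print(f"a/c = {gain_simplification_and_hardware:.1f}x")
print(f"b/c = {gain_hardware:.1f}x")
print(f"a/b = {gain_simplification_only:.1f}x")
```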


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Hong, T.; Kang, Y.; Chung, J. InSight: An FPGA-Based Neuromorphic Computing System for Deep Neural Networks. J. Low Power Electron. Appl. 2020, 10, 36. https://doi.org/10.3390/jlpea10040036
