Aggressive Exclusion of Scan Flip-Flops from Compression Architecture for Better Coverage and Reduced TDV: A Hybrid Approach

Abstract

1. Introduction

2. Background of Scan Compression Technology

2.1. Partial Scan

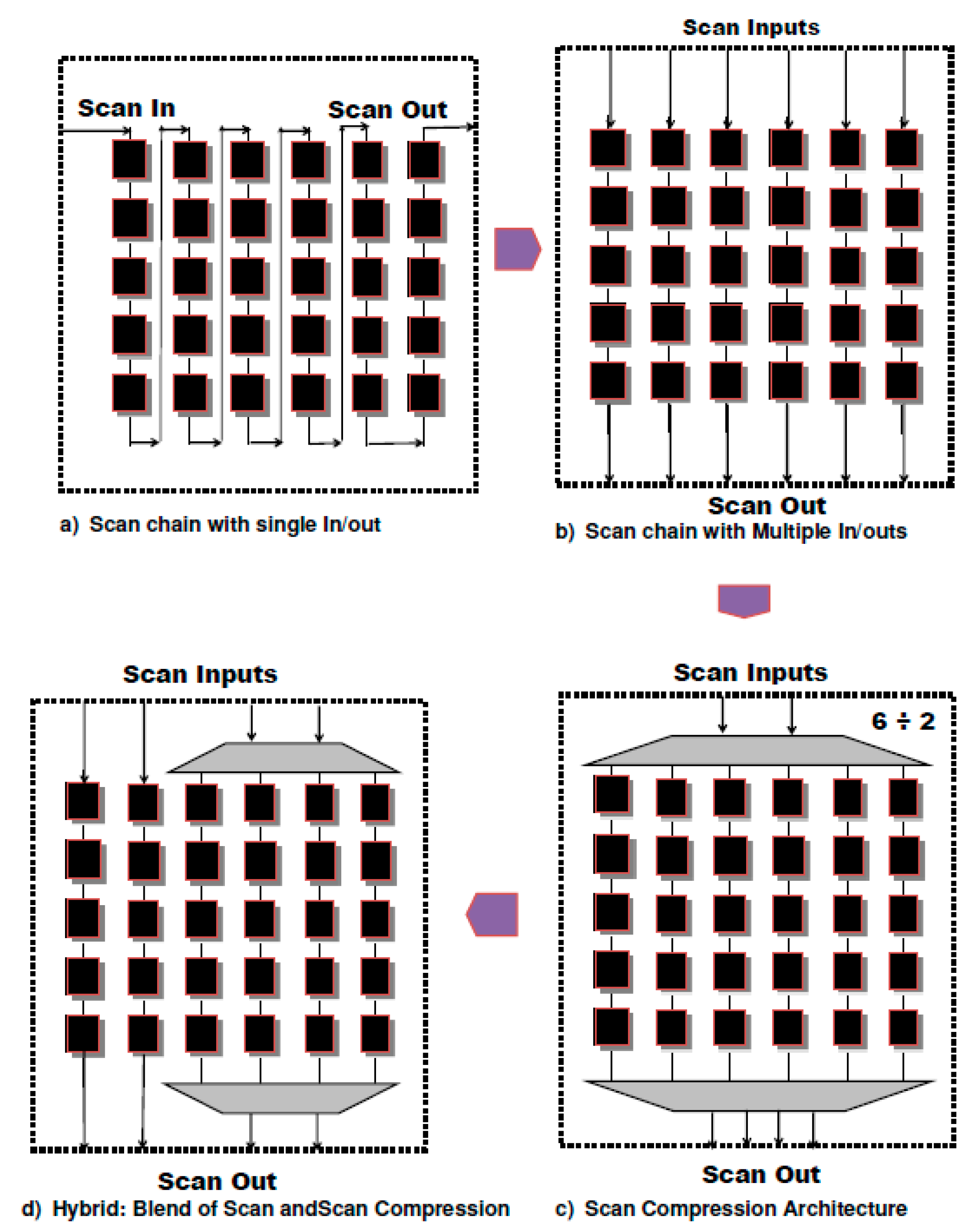

2.2. Fully Scan-Based Techniques

2.2.1. Random Access

2.2.2. Code-Based Techniques

2.2.3. Linear Decompressor Based Techniques

- (a)

- Linear combinational decompressor: The linear combinational decompressor is made up of combinational logic that spreads the input test data received from the automatic test equipment (ATE) into the output space of the decompressor. The output space of the decompressor is connected to many internal scan chains. An XOR network [2] is a commonly used circuit in linear decompressors [2,3,12].

- (b)

- Linear sequential decompressor: These decompressors are constructed using linear feedback shift registers (LFSRs), ring generators, or cellular automata [2,3]. There are two types of sequential decompressors.

- (1)

- Fixed-length sequential decompressors: Fixed-length sequential decompressor techniques use the state carried over from the previous scan slice to compute the values of the current clock cycle. Linear combinational decompressors struggle to encode scan load test patterns with many specified values in worst-case scenarios; sequential decompressors handle this better and achieve higher compression. The performance of a sequential decompressor increases with the number of sequential cells. In this technique, scan load test patterns are fed from the ATE into the sequential logic (an LFSR, cellular automaton, or ring generator) and then distributed to the internal scan chains through combinational logic such as an XOR network. This compression technique suffers from reduced encoding efficiency when many care bits are specified in the scan load test set [2,3].

- (2)

- Variable-length sequential decompressor: This scan compression technique allows the number of free variables used for each test cube to vary. The method improves encoding efficiency but at increased cost; in particular, it requires a gating channel. Various variable-length linear sequential decompressors have been proposed [2,3].

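For the combinational case (a), deciding whether a scan slice can be produced reduces to solving a linear system over GF(2): each internal chain receives the XOR of a subset of the ATE inputs, and every care bit in the slice contributes one equation. The sketch below assumes a purely combinational XOR network described by one bitmask per chain (bit j set if ATE input j feeds that chain). The names `solve_gf2`, `encode_slice`, and `expand` are hypothetical, and a sequential decompressor (b) would add state variables that this sketch omits.

```python
def solve_gf2(equations, n_vars):
    """Solve XOR equations over GF(2) by Gauss-Jordan elimination.

    equations: list of (mask, value); bit j of mask set means input j is in
    the XOR, and value is the required output bit (0 or 1).
    Returns one solution (free inputs set to 0) or None if inconsistent.
    """
    pivots = {}  # pivot bit -> (mask, value), kept fully reduced
    for mask, value in equations:
        # Eliminate every existing pivot bit from the incoming equation.
        for bit, (pmask, pval) in pivots.items():
            if (mask >> bit) & 1:
                mask ^= pmask
                value ^= pval
        if mask == 0:
            if value:
                return None  # reduced to 0 = 1: no input assignment works
            continue  # redundant equation
        new_bit = mask.bit_length() - 1
        # Remove the new pivot bit from older rows to keep them reduced.
        for bit, (pmask, pval) in list(pivots.items()):
            if (pmask >> new_bit) & 1:
                pivots[bit] = (pmask ^ mask, pval ^ value)
        pivots[new_bit] = (mask, value)
    solution = [0] * n_vars
    for bit, (_, value) in pivots.items():
        solution[bit] = value  # non-pivot (free) inputs stay 0
    return solution


def encode_slice(xor_masks, slice_bits, n_inputs):
    """Find ATE input bits that reproduce every care bit of one scan slice.

    slice_bits has one character per internal chain; 'X' is a don't-care.
    """
    equations = [(mask, int(bit))
                 for mask, bit in zip(xor_masks, slice_bits) if bit in "01"]
    return solve_gf2(equations, n_inputs)


def expand(xor_masks, inputs):
    """Compute the decompressor output (one bit per chain) for given inputs."""
    word = sum(bit << j for j, bit in enumerate(inputs))
    return "".join(str(bin(mask & word).count("1") % 2) for mask in xor_masks)


# Hypothetical 3-input, 4-chain network: chains see x0, x1, x0^x1, x2.
masks = [0b001, 0b010, 0b011, 0b100]
print(encode_slice(masks, "1X01", 3))  # [1, 1, 1]
print(encode_slice(masks, "111X", 3))  # None: x0 = x1 = 1 forces chain 2 to 0
```

A slice that returns `None` cannot be loaded through this network in a single cycle, which is the "more specified values" limitation that sequential decompressors address.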

2.2.4. Broadcasting Based Techniques

- (c)

- General (without reconfiguration) broadcasting-based techniques: The scan compression technique proposed in [13] supplies scan load test patterns through a single dedicated scan-data-in port to multiple scan chains connected to it. Compared to scan mode, this technique reduces test data volume (TDV) and test application time (TAT), but suffers from the correlation of specified values in scan cells. The technique proposed in [14] shares scan-data-in among multiple circuits to supply scan load test patterns. Various scan compression techniques in this category are proposed in [2,3,4,15,16,17].

- (d)

- Broadcasting techniques with static reconfiguration: Static reconfiguration-based broadcasting scan decompressors use sequential and combinational logic with a reconfiguration capability to handle correlation among scan cells in the scan chains. These techniques have been adopted by industry and are currently in use. In this technique, the scan chains are reconfigured when a new scan load test pattern is applied. The Illinois scan dual-mode architecture reduces the scan chain length in shared scan-data-in mode and thereby reduces TAT [18,19].

- (e)

- Broadcasting techniques with dynamic reconfiguration: In this scan compression technique, different scan chain configurations can be selected while a scan load test pattern is being loaded. This feature leads to better compression and reduced TAT and TDV. The disadvantage of these techniques is that they require more control information.

- (1)

- Broadcasting techniques with streaming dynamic reconfiguration: The streaming decompressor-based broadcasting scan compression technique [20] supports reconfiguration for a better compression ratio and reduced TAT and TDV. In this technique, in each clock cycle data is fed from the ATE to the decompressor and streamed into the internal scan chains in a diagonal fashion; Figure 2a shows the architecture. Figure 3a shows the diagonal correlation introduced by the streaming compression technique. Our proposed aggressive exclusion (AE) method is validated using a scan synthesis technique; details are provided in subsequent sections.

- (2)

- Broadcasting techniques with non-streaming dynamic reconfiguration: In these scan compression techniques, scan load test data is loaded from the ATE into the shift register of the decompressor and then shifted into the scan chains one scan slice per clock cycle. These compression techniques have horizontal dependency, as shown in Figure 3b. They have been adopted by industry and provide better compression and reduced TAT and TDV, but suffer from compression-induced correlation of scan cells. See [21,22,23,24,25] for more details.

- (f)

- Broadcasting techniques with patterns overlapping: The idea behind pattern overlapping is to identify overlap between consecutive scan load test patterns that have already been generated by ATPG. The overlap is found by matching bits at the end of the current scan load test pattern with bits at the beginning of the next one, so that only the non-overlapping bits need to be shifted in; in this way, a new pattern is created. This technique achieves high compression ratios and TDV reduction. A statistical analysis-based pattern overlapping method for scan architectures was proposed in [26]. A pattern overlapping-based technique to reduce TDV and TAT was proposed in [27]. Dynamic structures are used to store the test data by encoding sparse test vectors. A pattern overlapping technique that exploits the unknown values in scan load test patterns is proposed in [28]. A deterministic scan slice overlapping technique based on LFSR reseeding is proposed in [29] to reduce TDV.

- (g)

- Broadcasting techniques with a hybrid approach

- (1)

- Broadcasting and patterns overlapping: This hybrid technique takes advantage of both broadcasting and pattern overlapping scan compression. These techniques achieve better compression ratios and lower TDV. However, their TAT is high, because the next pattern must be determined from the current one to identify the overlap. A hybrid approach combining a broadcast-based scan architecture with pattern overlapping is proposed in [30]. A broadcast-based pattern overlapping technique was proposed in [31]; it is claimed to reduce TAT at the cost of increased TDV.

- (2)

- Broadcasting techniques with a mixture of compression and scan mode: A hybrid approach that combines scan and compression to improve pattern count in the presence of unknowns in scan unload patterns is proposed in [32]; it significantly reduces the pattern count. Based on an analysis of scan load patterns, the proposed AE method improves test coverage (TC) and reduces test pattern count (TPC).

- (h)

- Broadcasting techniques with circular scan architecture: In this architecture [33,34,35], the first scan load test pattern is loaded into all internal scan chains, and the output of each scan channel is connected back to the input of the same channel. For the next scan load test pattern, each chain can take its data either from the ATE or from the captured response of the current pattern, which is shifted back in. The disadvantage of these techniques is that the circulated pattern is non-deterministic.

- (i)

- Broadcasting techniques with tree-based architecture: Scan tree-based compression techniques group scan cells that are compatible across the ATPG-generated test patterns. Their success depends on the presence of compatible sets of scan cells, so they are not feasible for highly compacted scan test patterns. A scan tree-based compression algorithm that can handle scan cells with little or no compatibility in the given scan test patterns is proposed in [36]. A dynamically configurable dual-mode scan tree-based compression architecture, which works in both scan mode and scan tree mode, is proposed in [37]. A tree-based LFSR that exploits the merits of both scan-data-in sharing and reuse is presented in [38] to test both sequential and combinational circuits.
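The pattern-overlapping idea in item (f) can be illustrated with a greedy sketch. It assumes that test cubes of equal length (over '0', '1', 'X') are applied as successive windows of one serial stream, and places each new cube at the largest non-conflicting overlap with the tail of the stream. This is a simplified illustration, not the specific methods of [26,27,28,29]; the function names are hypothetical.

```python
def compatible(a, b):
    """Two bits are compatible unless they are opposite care values."""
    return a == "X" or b == "X" or a == b


def merge(a, b):
    """Combine two compatible bits, keeping any care value."""
    return b if a == "X" else a


def overlap_stream(cubes):
    """Greedily pack equal-length test cubes into one serial bit stream.

    Returns the stream and the start offset of each cube's window.
    """
    stream = list(cubes[0])
    starts = [0]
    for cube in cubes[1:]:
        # Try the largest overlap first; an overlap of 0 always succeeds.
        for ov in range(min(len(cube), len(stream)), -1, -1):
            tail = stream[len(stream) - ov:]
            if all(compatible(s, c) for s, c in zip(tail, cube)):
                break
        start = len(stream) - ov
        for i in range(ov):
            stream[start + i] = merge(stream[start + i], cube[i])
        stream.extend(cube[ov:])  # only these bits are shifted in afresh
        starts.append(start)
    return "".join(stream), starts


stream, starts = overlap_stream(["1101", "01XX", "1X00"])
print(stream, starts)  # 1101X00 [0, 2, 3]
```

Here three 4-bit cubes fit in a 7-bit stream instead of 12 bits; any X left in the stream can be filled with an arbitrary value before it is applied.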

3. Coverage Reduction Cases Because of Scan Compression

- (1)

- The fault being detected must have structural correlation on two or more scan cells.

- (2)

- These two or more scan cells must reside in different scan chains that fan out from the same data bit (ATE channel).
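The two conditions above can be checked mechanically for a simple broadcast model. The sketch assumes that every internal chain is driven by exactly one ATE channel, so in each shift cycle all chains mapped to the same channel receive the same bit and cells at the same shift position in those chains are forced to equal values. Real decompressors (for example, the streaming scheme with diagonal correlation) use more complex mappings; `chain_to_channel` and `find_broadcast_conflicts` are hypothetical names.

```python
def find_broadcast_conflicts(cube, chain_to_channel):
    """Return pairs of care bits that a pure broadcast mapping cannot satisfy.

    cube: dict mapping (chain, shift_position) -> '0' or '1' (care bits only).
    chain_to_channel: dict mapping each internal chain to its ATE channel.
    """
    first_seen = {}  # (channel, position) -> ((chain, position), bit)
    conflicts = []
    for (chain, pos), bit in sorted(cube.items()):
        key = (chain_to_channel[chain], pos)
        if key not in first_seen:
            first_seen[key] = ((chain, pos), bit)
        elif first_seen[key][1] != bit:
            # Same channel, same shift cycle, opposite required values.
            conflicts.append((first_seen[key][0], (chain, pos)))
    return conflicts


# Chains 0 and 1 share channel 0; chain 2 is driven by channel 1.
mapping = {0: 0, 1: 0, 2: 1}
cube = {(0, 3): "1", (1, 3): "0", (2, 3): "0"}
print(find_broadcast_conflicts(cube, mapping))  # [((0, 3), (1, 3))]
```

In this simplified model, a test cube with such a conflict cannot be loaded as is, which is one way compression may lose coverage; moving one of the two cells out of the compressed chains removes this particular conflict.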

Sparseness in Output Space of the Decompressor

4. Proposed AE Method

4.1. Scan Cells Exclusion and Care Bits Density

4.2. AE Method

4.3. Flow Chart Showing Execution Flow of the AE Method

5. Algorithm for Aggressive Exclusion of Scan Cells from the Compression Architecture

| Algorithm 1: GetExtScanChains() |
|---|
| Inputs: Ts: set of scan load test stimuli; Si: number of scan-data-in ports assigned to the scan compression technique |
| Output: Chains[]: array of external chains holding the relevant scan cells excluded from the compression technique |
| Let Np = SizeOf(Ts) // number of scan load test stimuli |
| Let Skip5Per = Np × (5/100) // skip the first 5% of patterns |
| Initialize all SFF[] = 0 and CB = 0 // per-scan-cell and total care bit counters |
| Let C = 1 |
| While (C ≤ Skip5Per) |
| Let C = C + 1 |
| EndWhile |
| While (C ≤ Np) |
| Let Tp = Ts[C] |
| Let Len = Length[Tp] |
| Let N = Len |
| While (Len > 0) |
| If (Tp[Len] == ‘0’ OR Tp[Len] == ‘1’) // considering care bit 0 or 1 |
| SFF[Len] = SFF[Len] + 1 |
| CB = CB + 1 |
| EndIf |
| Let Len = Len − 1 |
| EndWhile |
| Let C = C + 1 |
| EndWhile |
| O_SFF[] = ORDER_IN_DESCENDING(SFF, N) // sort scan cells in descending order of their specified-value ranking |
| Let LenExt = FindLenCh() // length of the external scan chains |
| Let N_Ext = Si / 2 // number of external scan chains: 50% of the scan-data-in ports |
| Let Max = CB × (43/100) // at most 43% of the total care bits |
| Let Min = CB × (12/100) // at least 12% of the total care bits |
| Let Chains[] = CreateExtChains(N_Ext, O_SFF, LenExt, Max, Min) // set of external scan chains |
| Return Chains[] // set of external scan chains |
| End |
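The care-bit counting and ranking steps of Algorithm 1 can be sketched in Python as follows. The sketch assumes each scan load stimulus is a string over '0', '1', and 'X' in which character i corresponds to scan cell i; this encoding and the name `rank_scan_cells` are hypothetical, and chain construction is left to `CreateExtChains()` (Algorithm 3).

```python
from collections import Counter


def rank_scan_cells(stimuli, skip_fraction=0.05):
    """Rank scan cells by how often they hold a care bit (0 or 1).

    The first skip_fraction of the patterns (5% in Algorithm 1) is ignored.
    Returns (ranked, total): ranked is a list of (cell, care_bit_count) in
    descending order of count, and total is the overall care-bit count CB.
    """
    skip = int(len(stimuli) * skip_fraction)  # number of patterns to skip
    counts = Counter()  # plays the role of SFF[]
    for pattern in stimuli[skip:]:
        for cell, bit in enumerate(pattern):
            if bit in "01":
                counts[cell] += 1
    total = sum(counts.values())  # CB
    # Descending count; ties broken by cell index so the order is stable.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return ranked, total


# 20 hypothetical 4-cell stimuli; the first one falls in the skipped 5%.
stimuli = ["1111"] + ["10XX"] * 10 + ["X1X0"] * 9
print(rank_scan_cells(stimuli))  # ([(1, 19), (0, 10), (3, 9)], 38)
```

Cell 2 does not appear in the ranking because its only care bit lies in the skipped pattern. With Si = 8 scan-data-in ports, Algorithm 1 would then form N_Ext = 4 external chains within a budget between 12% and 43% of CB.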

| Algorithm 2: FindLenCh() |
|---|
| Input: Test protocol file of the compression technique |
| Output: eChLen: length of an external chain |
| Read the test protocol file of the compression technique |
| Find the length of the longest internal scan chain as ‘L’ |
| Find the serial register length as ‘Srl’ |
| eChLen = L + Srl // length of an external scan chain |
| Return eChLen |
| End |

| Algorithm 3: Function: CreateExtChains() |
|---|
| Inputs: N_Ext: number of external chains to be formed; O_SFF: scan cells ranked by the number of scan load test stimuli in which they hold a specified value; LenExt: length of each external chain being formed; Max: 43% of the care bits density; Min: 12% of the care bits density |
| Output: Chains[]: scan cells of the external chains |
| Let Cnt = 1 |
| Let CB = 0 |
| Let N = SizeOf(O_SFF) |
| Let X = 1 |
| While (Cnt ≤ N AND N_Ext > 0) |
| Let CB = CB + O_SFF[Cnt] // care bits density |
| If (CB ≤ Max) // check whether the care bits density of the scan chain is within the maximum limit |
| Let Chains[N_Ext][X] = O_SFF[Cnt] |
| If (X < LenExt) |
| Let X = X + 1 |
| Else |
| Let X = 1 |
| Let N_Ext = N_Ext − 1 |
| EndIf |
| Else |
| Break |
| EndIf |
| Let Cnt = Cnt + 1 |
| EndWhile |
| If (CB < Min) // if the care bits density is below the minimum threshold, ignore such chains |
| Delete Chains[] |
| EndIf |
| Return Chains[] |
| End |
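A Python sketch of `CreateExtChains()` follows. It assumes the ranked `(cell, care_bit_count)` list from the counting step of Algorithm 1, fills the external chains one after another, and tracks the care bits of the cells actually placed. The chain fill order and the handling of a cell that would exceed `Max` are interpretations of the pseudocode, not confirmed details of the AE implementation; the name `create_ext_chains` is hypothetical.

```python
def create_ext_chains(ranked, total_care_bits, n_ext, len_ext,
                      max_frac=0.43, min_frac=0.12):
    """Fill up to n_ext external chains of length len_ext with top-ranked cells.

    ranked: list of (cell, care_bit_count), highest count first.
    Stops when the next cell would push the placed care bits above
    max_frac * total_care_bits or when every chain slot is used.
    Returns [] if the placed care bits stay below min_frac * total_care_bits.
    """
    max_cb = total_care_bits * max_frac
    min_cb = total_care_bits * min_frac
    chains = [[] for _ in range(n_ext)]
    placed_cb = 0
    slot = 0  # running index over all n_ext * len_ext positions
    for cell, count in ranked:
        if slot >= n_ext * len_ext or placed_cb + count > max_cb:
            break
        chains[slot // len_ext].append(cell)
        placed_cb += count
        slot += 1
    if placed_cb < min_cb:
        return []  # too few care bits to justify external chains
    return [chain for chain in chains if chain]


ranked = [(3, 20), (8, 15), (1, 5), (4, 5), (6, 3)]
print(create_ext_chains(ranked, total_care_bits=100, n_ext=2, len_ext=2))
# [[3, 8], [1]]: adding cell 4 would exceed 43 of the 100 care bits
```

Because the ranking is descending, the 43% cap admits the densest cells first, and the 12% floor discards configurations whose external chains would carry too few care bits to matter.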

6. Procedure of Aggressive Exclusion Method

| Algorithm 4: PhaseI_FlowOfExecution() |
|---|
| Inputs: Verilog_netlist: Verilog netlist of the DUT; Verilog_libraries: Verilog libraries for the library cells; DFT configuration: ATE channels, internal chains, etc. |
| Outputs: TC: test coverage; TPC: test pattern count |
| (1) Read verilog_netlist |
| (2) Read verilog_libraries |
| (3) Input the scan synthesis configuration, including chain count, ATE channels, etc. |
| (4) Invoke the scan synthesis and insertion engine |
| (5) Write out the scan-synthesized output netlist |
| (6) Write out the scan protocol file |
| (7) Invoke the ATPG engine to generate test patterns for all faults, including stuck-at, transition, etc. |
| (8) Measure the percentage of TC and the TPC |
| End |

| Algorithm 5: PhaseII_FlowOfExecution() |
|---|
| Inputs: Verilog_netlist: Verilog netlist of the DUT; Verilog_libraries: Verilog libraries for the library cells; DFT configuration: ATE channels, internal scan chains, and external scan chain specification |
| Outputs: TC: test coverage; TPC: test pattern count; TCF: test coverage at the full run of the AE method; TPCF: test pattern count at the full run of the AE method |
| (1) Read verilog_netlist |
| (2) Read verilog_libraries |
| (3) Input the scan synthesis configuration, including internal scan chains, number of ATE channels, etc. |
| (4) Allot 50% of the scan-data-in ports to the external chains |
| (5) Allot 50% of the scan-data-in ports to the compression technique |
| (6) Provide the specification for creating the external scan chains |
| (7) Invoke the scan synthesis and insertion engine |
| (8) Write out the scan-synthesized output netlist |
| (9) Write out the scan protocol file |
| (10) Invoke the ATPG engine to generate test patterns for all faults, including stuck-at, transition, etc. |
| (11) Measure the percentage of TC and the TPC at the same coverage as produced at Step (8) of Phase I |
| (12) Measure the percentage of TCF and the TPCF at the end of the full run and compare them with Step (8) of Phase I |
| End |

7. Experimental Results

8. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Kobayashi, S.; Edahiro, M.; Kubo, M. Scan-chain optimization algorithms for multiple scan-paths. In Proceedings of the 1998 Asia and South Pacific Design Automation Conference, Yokohama, Japan, 13 February 1998; IEEE: Piscataway, NJ, USA, 1998; pp. 301–306.
2. Wang, L.T.; Wu, C.W.; Wen, X. VLSI Test Principles and Architectures: Design for Testability; Elsevier: Amsterdam, The Netherlands, 2006.
3. Touba, N.A. Survey of test vector compression techniques. IEEE Des. Test Comput. 2006, 23, 294–303.
4. Kapur, R.; Mitra, S.; Williams, T.W. Historical perspective on scan compression. IEEE Des. Test Comput. 2008, 25, 114–120.
5. Agrawal, V.D.; Cheng, T.K.T.; Johnson, D.D.; Lin, T. A complete solution to the partial scan problem. In Proceedings of the International Test Conference 1987, Washington, DC, USA, 30 August–3 September 1987; pp. 44–51.
6. Park, S. A partial scan design unifying structural analysis and testabilities. Int. J. Electron. 2001, 88, 1237–1245.
7. Sharma, S.; Hsiao, M.S. Combination of structural and state analysis for partial scan. In Proceedings of the VLSI Design 2001. Fourteenth International Conference on VLSI Design, Bangalore, India, 7 January 2001; IEEE: Piscataway, NJ, USA, 2001; pp. 134–139.
8. Chickermane, V.; Patel, J.H. An optimization based approach to the partial scan design problem. In Proceedings of the International Test Conference 1990, Washington, DC, USA, 10–14 September 1990; IEEE: Piscataway, NJ, USA, 1990; pp. 377–386.
9. Jou, J.Y.; Cheng, K.T. Timing-driven partial scan. IEEE Des. Test Comput. 1995, 12, 52–59.
10. Kagaris, D.; Tragoudas, S. Retiming-based partial scan. IEEE Trans. Comput. 1996, 45, 74–87.
11. Narayanan, S.; Gupta, R.; Breuer, M.A. Optimal configuring of multiple scan chains. IEEE Trans. Comput. 1993, 42, 1121–1131.
12. Balakrishnan, K.J.; Touba, N.A. Improving linear test data compression. IEEE Trans. Very Larg. Scale Integr. (VLSI) Syst. 2006, 14, 1227–1237.
13. Lee, K.J.; Chen, J.J.; Huang, C.H. Using a single input to support multiple scan chains. In Proceedings of the 1998 IEEE/ACM International Conference on Computer-Aided Design. Digest of Technical Papers, San Jose, CA, USA, 8–12 November 1998; IEEE: Piscataway, NJ, USA, 1998; pp. 74–78.
14. Hsu, F.F.; Butler, K.M.; Patel, J.H. A Case Study on the Implementation of the Illinois Scan Architecture. In Proceedings of the International Test Conference 2001, Baltimore, MD, USA, 1 November 2001; IEEE: Piscataway, NJ, USA, 2001; p. 538.
15. Hamzaoglu, I.; Patel, J.H. Reducing test application time for full scan embedded cores. In Proceedings of the Digest of Papers. Twenty-Ninth Annual International Symposium on Fault-Tolerant Computing, Madison, WI, USA, 15–18 June 1999; IEEE: Piscataway, NJ, USA, 1999; pp. 260–267.
16. Shah, M.A.; Patel, J.H. Enhancement of the Illinois scan architecture for use with multiple scan inputs. In Proceedings of the IEEE Computer Society Annual Symposium on VLSI, Lafayette, LA, USA, 19–20 February 2004; IEEE: Piscataway, NJ, USA, 2004; pp. 167–172.
17. Lee, K.J.; Chen, J.J.; Huang, C.H. Broadcasting test patterns to multiple circuits. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 1999, 18, 1793–1802.
18. Pandey, A.R.; Patel, J.H. Reconfiguration technique for reducing test time and test data volume in Illinois scan architecture based designs. In Proceedings of the 20th IEEE VLSI Test Symposium (VTS 2002), Monterey, CA, USA, 28 April–2 May 2002; IEEE: Piscataway, NJ, USA, 2002; pp. 9–15.
19. Jas, A.; Pouya, B.; Touba, N.A. Virtual scan chains: A means for reducing scan length in cores. In Proceedings of the 18th IEEE VLSI Test Symposium, Montreal, QC, Canada, 30 April–4 May 2000; IEEE: Piscataway, NJ, USA, 2000; pp. 73–78.
20. Chandra, A.; Kapur, R.; Kanzawa, Y. Scalable adaptive scan (SAS). In Proceedings of the Conference on Design, Automation and Test in Europe, Nice, France, 20–24 April 2009; European Design and Automation Association: Leuven, Belgium, 2009; pp. 1476–1481.
21. Samaranayake, S.; Sitchinava, N.; Kapur, R.; Amin, M.B.; Williams, T.W. Dynamic scan: Driving down the cost of test. Computer 2002, 10, 63–68.
22. Li, L.; Chakrabarty, K. Test set embedding for deterministic BIST using a reconfigurable interconnection network. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2004, 23, 1289–1305.
23. Sitchinava, N.; Gizdarski, E.; Samaranayake, S.; Neuveux, F.; Kapur, R.; Williams, T.W. Changing the scan enable during shift. In Proceedings of the 22nd IEEE VLSI Test Symposium, Napa Valley, CA, USA, 25–29 April 2004; IEEE: Piscataway, NJ, USA, 2004; pp. 73–78.
24. Wang, L.T.; Wen, X.; Furukawa, H.; Hsu, F.S.; Lin, S.H.; Tsai, S.W.; Abdel-Hafez, K.S.; Wu, S. VirtualScan: A new compressed scan technology for test cost reduction. In Proceedings of the 2004 International Conference on Test, Charlotte, NC, USA, 26–28 October 2004; IEEE: Piscataway, NJ, USA, 2004; pp. 916–925.
25. Han, Y.; Li, X.; Swaminathan, S.; Hu, Y.; Chandra, A. Scan data volume reduction using periodically alterable MUXs decompressor. In Proceedings of the 14th Asian Test Symposium (ATS’05), Calcutta, India, 18–21 December 2005; IEEE: Piscataway, NJ, USA, 2005; pp. 372–377.
26. Su, C.; Hwang, K. A serial scan test vector compression methodology. In Proceedings of the IEEE International Test Conference (ITC), Baltimore, MD, USA, 17–21 October 1993; IEEE: Piscataway, NJ, USA, 1993; pp. 981–988.
27. Jenicek, J.; Novak, O. Test pattern compression based on pattern overlapping. In Proceedings of the 2007 IEEE Design and Diagnostics of Electronic Circuits and Systems (DDECS’07), Krakow, Poland, 11–13 April 2007; IEEE: Piscataway, NJ, USA, 2007; pp. 1–6.
28. Rao, W.; Bayraktaroglu, I.; Orailoglu, A. Test application time and volume compression through seed overlapping. In Proceedings of the 40th Annual Design Automation Conference, Anaheim, CA, USA, 2–3 June 2003; ACM: New York, NY, USA, 2003; pp. 732–737.
29. Li, J.; Han, Y.; Li, X. Deterministic and low power BIST based on scan slice overlapping. In Proceedings of the 2005 IEEE International Symposium on Circuits and Systems (ISCAS 2005), Kobe, Japan, 23–26 May 2005; IEEE: Piscataway, NJ, USA, 2005; pp. 5670–5673.
30. Chloupek, M.; Novak, O. Test pattern compression based on pattern overlapping and broadcasting. In Proceedings of the 2011 10th International Workshop on Electronics, Control, Measurement and Signals (ECMS), Liberec, Czech Republic, 1–3 June 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 1–5.
31. Chloupek, M.; Novak, O.; Jenicek, J. On test time reduction using pattern overlapping, broadcasting and on-chip decompression. In Proceedings of the 2012 IEEE 15th International Symposium on Design and Diagnostics of Electronic Circuits & Systems (DDECS), Tallinn, Estonia, 18–20 April 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 300–305.
32. Shantagiri, P.V.; Kapur, R. Handling Unknown with Blend of Scan and Scan Compression. J. Electron. Test. 2018, 34, 135–146.
33. Arslan, B.; Orailoglu, A. CircularScan: A scan architecture for test cost reduction. In Proceedings of the Design, Automation and Test in Europe Conference and Exhibition, Paris, France, 16–20 February 2004; IEEE: Piscataway, NJ, USA, 2004; pp. 1290–1295.
34. Azimipour, M.; Eshghi, M.; Khademzahed, A. A Modification to Circular-Scan Architecture to improve test data compression. In Proceedings of the 15th International Conference on Advanced Computing and Communications (ADCOM 2007), Guwahati, India, 18–21 December 2007; IEEE: Piscataway, NJ, USA, 2007; pp. 27–33.
35. Azimipour, M.; Fathiyan, A.; Eshghi, M. A parallel Circular-Scan architecture using multiple-hot decoder. In Proceedings of the 2008 15th International Conference on Mixed Design of Integrated Circuits and Systems, Poznan, Poland, 19–21 June 2008; IEEE: Piscataway, NJ, USA, 2008; pp. 475–480.
36. Banerjee, S.; Chowdhury, D.R.; Bhattacharya, B.B. An efficient scan tree design for compact test pattern set. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2007, 26, 1331–1339.
37. Bonhomme, Y.; Yoneda, T.; Fujiwara, H.; Girard, P. An efficient scan tree design for test time reduction. In Proceedings of the 9th IEEE European Test Symposium (ETS’04), Corsica, France, 23–26 May 2004; IEEE: Piscataway, NJ, USA, 2004; pp. 174–179.
38. Rau, J.C.; Jone, W.B.; Chang, S.C.; Wu, Y.L. Tree-structured LFSR synthesis scheme for pseudo-exhaustive testing of VLSI circuits. IEE Proc.-Comput. Digit. Technol. 2000, 147, 343–348.
39. DFTMAX Ultra New Technology to Address Key Test Challenges. Available online: https://www.synopsys.com/content/dam/synopsys/implementation&signoff/white-papers/dftmax-ultra-wp.pdf (accessed on 5 May 2019).
40. TetraMAX. Synopsys ATPG Solution. Available online: http://www.synopsys.com/products/test/tetramax_ds.pdf (accessed on 1 June 2018).

| Test Patterns | Percentage of TC Achieved | Type of Faults |
|---|---|---|
| First 5% of TPC | 56% to 86% | Easy-to-detect faults, including random and deterministic. |
| Last 95% of TPC | 18% to 39.17% | Hard-to-detect faults. |

| Name of the Circuit | Overall TPC Reduction |
|---|---|
| C1 | Up to 77.13% |
| C2 | Up to 76.13% |
| C3 | Up to 24.91% |
| C5 | Up to 22.68% |
| C6 | Up to 17.55% |

| Name of Circuit | Percentage of TC Improvement |
|---|---|
| C1 | Up to 1.33% |
| C2 | Up to 1.22% |
| C3 | Up to 0.09% |
| C4 | Up to 0.08% |
| C5 | Up to 0.16% |
| C6 | Up to 0.16% |

| Circuit | #Scan Cells | #SI/SO | #Chains | Scan Compression #TPC | Scan Compression %TC | AE Method at Same Coverage #TPC | AE Method at Same Coverage %TC | Full Run of AE Method #TPC | Full Run of AE Method %TC | Improvements %TC | Improvements %TPC | External Chains Contributing %Care Bits |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 |
| C1 | 28 K | 6 | 200 | 2207 | 74.71 | 1058 | 74.74 | 2791 | 75.04 | 0.33 | 52.06 | 30% |
| C1 | 28 K | 8 | 200 | 2339 | 74.66 | 1577 | 74.68 | 2797 | 74.89 | 0.23 | 32.58 | 29% |
| C1 | 28 K | 8 | 400 | 2765 | 75.61 | 888 | 76.20 | 3111 | 76.83 | 1.22 | 67.88 | 26% |
| C1 | 28 K | 10 | 500 | 2851 | 75.95 | 652 | 76.43 | 3494 | 77.45 | 0.50 | 77.13 | 26% |
| C1 | 28 K | 16 | 400 | 1782 | 75.42 | 2306 | 75.42 | 2820 | 75.50 | 0.08 | -- | 34% |
| C1 | 28 K | 16 | 800 | 2139 | 76.95 | 568 | 77.76 | 2650 | 79.09 | 1.33 | 73.45 | 26% |
| C2 | 147 K | 8 | 800 | 6889 | 94.42 | 4117 | 94.42 | 7337 | 94.66 | 0.24 | 40.24 | 24% |
| C2 | 147 K | 8 | 1000 | 6151 | 94.74 | 4925 | 94.74 | 7427 | 94.90 | 0.16 | 19.93 | 20% |
| C2 | 147 K | 8 | 2000 | 8266 | 92.43 | 4150 | 92.80 | 9253 | 93.76 | 0.33 | 49.79 | 12% |
| C2 | 147 K | 10 | 500 | 9658 | 84.56 | 13,644 | 84.56 | 16,539 | 84.60 | 0.04 | -- | 36% |
| C2 | 147 K | 10 | 1000 | 5657 | 94.37 | 5481 | 94.37 | 7317 | 94.48 | 0.11 | 3 | 28% |
| C2 | 147 K | 12 | 600 | 9784 | 95.17 | -- | -- | 6478 | 95.00 | 0.17 | 33.79 | 43% |
| C2 | 147 K | 12 | 1200 | 13,326 | 93.50 | 3181 | 93.53 | 8040 | 94.61 | 1.11 | 76.13 | 24% |
| C2 | 147 K | 16 | 1000 | 5380 | 94.51 | 3272 | 94.5 | 6411 | 94.78 | 0.27 | 39.18 | 36% |
| C2 | 147 K | 16 | 1500 | 12,310 | 92.94 | 3586 | 92.94 | 7563 | 93.31 | 0.37 | 70.87 | 31% |
| C2 | 147 K | 16 | 1600 | 6950 | 93.02 | 2333 | 93.03 | 8408 | 94.24 | 1.22 | 66.43 | 25% |
| C2 | 147 K | 16 | 2000 | 7550 | 92.28 | 5922 | 92.28 | 8484 | 92.43 | 0.15 | 21.56 | 21% |
| C2 | 147 K | 24 | 2000 | 6378 | 93.76 | 5365 | 93.76 | 7735 | 93.90 | 0.14 | 15.88 | 29% |
| C3 | | 8 | 400 | 12,080 | 84.57 | 15,787 | 84.57 | 17,528 | 84.59 | 0.02 | -- | 37% |
| C3 | | 36 | 1800 | 11,979 | 84.63 | 8995 | 84.63 | 12,683 | 84.72 | 0.09 | 24.91 | 38% |
| C3 | | 40 | 2000 | 9615 | 84.64 | 10,557 | 84.64 | 13,499 | 84.70 | 0.06 | -- | 37% |
| C4 | | 8 | 1000 | 8198 | 95.90 | 9052 | 95.90 | 9651 | 95.95 | 0.05 | -- | |
| C4 | | 20 (9+11) | 2000 | 8596 | 95.90 | 10,396 | 95.90 | 11,137 | 95.93 | 0.03 | -- | 27% |
| C4 | | 8 | 1600 | 8654 | 95.88 | 12,445 | 95.88 | 13,245 | 95.94 | 0.06 | -- | 22% |
| C4 | | 24 | 1200 | 6906 | 95.90 | 7676 | 95.90 | 8252 | 95.93 | 0.03 | -- | 37% |
| C4 | | 12 | 2000 | 9770 | 95.89 | 10,374 | 95.89 | 11,951 | 95.97 | 0.08 | -- | 25% |
| C4 | | 36 | 1800 | 6444 | 95.88 | 7020 | 95.88 | 7402 | 95.93 | 0.05 | -- | 37% |
| C5 | 530 K | 20 (9+11) | 3000 | 13,024 | 92.90 | 11,925 | 92.90 | 13,746 | 93.06 | 0.16 | 8.84 | 36% |
| C5 | 530 K | 12 | 600 | 6625 | 92.91 | 7182 | 92.92 | 7361 | 92.94 | 0.03 | -- | 40% |
| C5 | 530 K | 12 | 1200 | 6773 | 92.91 | 7786 | 92.91 | 8233 | 92.97 | 0.06 | -- | 38% |
| C5 | 530 K | 20 | 1000 | 6461 | 92.88 | 8658 | 92.88 | 9226 | 92.91 | 0.03 | -- | 40% |
| C5 | 530 K | 16 | 800 | 8351 | 92.87 | 7491 | 92.87 | 8197 | 92.93 | 0.06 | 10.30 | 40% |
| C5 | 530 K | 16 | 1600 | 7352 | 92.92 | 9696 | 92.93 | 9947 | 92.95 | 0.03 | -- | 38% |
| C5 | 530 K | 10 | 800 | 9650 | 92.88 | 7461 | 92.88 | 7674 | 92.90 | 0.02 | 22.68 | 40% |
| C5 | 530 K | 10 | 1600 | 11,054 | 92.89 | 15,392 | 92.89 | 16,452 | 92.96 | 0.07 | -- | 36% |
| C5 | 530 K | 20 (9+11) | 2000 | 8309 | 92.93 | 10,182 | 92.95 | 10,329 | 92.96 | 0.03 | -- | 38% |
| C5 | 530 K | 10 | 3000 | 14,389 | 92.95 | 15,945 | 92.95 | 17,594 | 93.03 | 0.08 | -- | 32% |
| C6 | | 16 | 800 | 6027 | 93.29 | 6653 | 93.29 | 7812 | 93.32 | 0.03 | -- | 29% |
| C6 | | 20 | 1000 | 6033 | 93.29 | 6453 | 93.29 | 7474 | 93.31 | 0.02 | -- | 29% |
| C6 | | 8 | 400 | 6199 | 93.29 | 6871 | 93.29 | 7803 | 93.31 | 0.02 | -- | 28% |
| C6 | | 36 | 1800 | 6305 | 93.27 | 5169 | 93.27 | 7822 | 93.36 | 0.09 | 18 | 29% |
| C6 | | 36 | 3600 | 7270 | 93.29 | 5994 | 93.29 | 10,100 | 93.45 | 0.16 | 17.55 | 22% |
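The improvement columns can be reproduced from the other columns for rows such as C1 with 6 SI/SO and 200 chains: %TC appears to be the full-run AE coverage minus the scan compression coverage, and %TPC the relative reduction in pattern count achieved by the AE method at the same coverage. This reading is inferred from the table values rather than stated in the text, and the helper name `improvements` is hypothetical.

```python
def improvements(base_tpc, base_tc, same_cov_tpc, full_run_tc):
    """Return (%TC gain, %TPC reduction) as read from the results table.

    %TC gain: full-run AE coverage minus scan-compression coverage.
    %TPC reduction: pattern-count saving of the AE run at the same coverage,
    relative to the scan-compression pattern count.
    """
    tc_gain = full_run_tc - base_tc
    tpc_reduction = (base_tpc - same_cov_tpc) / base_tpc * 100
    return round(tc_gain, 2), round(tpc_reduction, 2)


print(improvements(2207, 74.71, 1058, 75.04))   # (0.33, 52.06): C1, 6 SI/SO, 200 chains
print(improvements(13326, 93.50, 3181, 94.61))  # (1.11, 76.13): C2, 12 SI/SO, 1200 chains
```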

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Shantagiri, P.V.; Kapur, R. Aggressive Exclusion of Scan Flip-Flops from Compression Architecture for Better Coverage and Reduced TDV: A Hybrid Approach. J. Low Power Electron. Appl. 2019, 9, 18. https://doi.org/10.3390/jlpea9020018
