Land Use/Cover Classification of Large Conservation Areas Using a Ground-Linked High-Resolution Unmanned Aerial Vehicle

Abstract

1. Introduction

| UAV Type | Satellite System | Research and Major Findings | Location, Size, and Habitats | Scope | Reference |
|---|---|---|---|---|---|
| mX-SIGHT, Germany | - | Analyzed and revealed the potential use of UAV-derived imagery to measure the areas of land plots for monitoring land policies | Spain: experimental sites (0.3–29 ha of crops) | Limited to land plots | [35] |
| DJI Phantom 2 with a spatial resolution of 2.8 cm | Pleiades-1B | Compared the UAV with the satellite for mapping mangroves and established the UAV's higher capability in terms of image quality, accuracy, area coverage, and cost (time and user). Reported that its better spectral resolution gives Pleiades-1B an advantage over UAV-derived RGB orthoimages for assessing health and biomass. | Setiu Wetland, Malaysia: mangroves (4.18 km²) | Small areas of mangroves | [55] |
| Bormatec MAJA (Bormatec, Mooswiesen, Ravensburg, Germany) | Satellite tracking tool | Assessed and demonstrated the usefulness of combining UAV imagery with satellite tracking of individual animals (e.g., proboscis monkeys) for detecting key conservation issues such as deforestation and for influencing policy reviews | Sabah, Malaysian Borneo: riparian habitats (273.51 ha) | Riparian habitats of the proboscis monkey | [56] |
| Octocopter (OktoXL, HiSystems GmbH) | Sentinel-2 | Developed a methodological framework for estimating the fractional coverage (FC%) of an invasive shrub species, Ulex europaeus (common gorse) | Chiloé Island (south-central Chile): ten flown sites, each 50 ha | Selected areas invaded by shrubs | [57] |
| Parrot Bluegrass quadcopter and DJI Phantom 4 Pro | Sentinel-2 | Assessed and quantitatively demonstrated the improvements offered by a multispectral UAV mapping technique that yields higher-resolution images for advanced mapping and assessment of coastal land cover; also compared UAV and satellite capabilities in the same area | Indian River Lagoon, central Atlantic coast of Florida, USA | Coastal habitats | [58] |
| Fixed-wing senseFly eBee with S.O.D.A. and Parrot Sequoia cameras | - | Evaluated the potential of UAVs to collect ultra-high-spatial-resolution imagery for mapping treeline ecotone land cover, showing higher efficiency | Norway: 32 treeline ecotone sites | Alpine treeline ecotone | [59] |
| DJI Phantom 4 Pro with MicaSense RedEdge-M multispectral camera system | WorldView-4 | Used high-spatial-resolution drone and WorldView-4 satellite data to map and monitor grazing land cover change and pasture quality before and after flooding; both platforms were useful for detecting grazing land cover change at a finer scale | Cheatham County, Middle Tennessee, USA | Cattle grazing land | [60] |
| DJI Inspire 1 quadrotor with Zenmuse X5 onboard camera | - | Quantified the spatial distribution patterns of dominant vegetation along an elevation gradient | Luntai County, China: 22 sample plots | Field experimental plots | [34] |
| DJI Inspire 1 v2 (Shenzhen, China) with MicaSense RedEdge camera | WorldView-3, Sentinel-2 | Investigated the use of UAV and satellite platforms to monitor and classify aquatic vegetation in irrigation channels; the UAV was effective for intensive monitoring of weeds in small areas of irrigation channels | Murrumbidgee Irrigation Area (MIA), Australia: 38.5 km² | Irrigation channels | [61] |
| senseFly eBee with multispectral Parrot Sequoia and RGB sensors | - | Examined object-based classification accuracies for different cover types and vegetation species using data from UAV-based multispectral cameras | Trent University campus, central Ontario, Canada: 10 ha | Small mixed forest and agricultural area | [62] |
| Octocopter (University of Tehran) with a MAPIR Survey1 visible-light camera (San Diego, CA, USA) | Sentinel-2 | Assessed and demonstrated the suitability of integrating UAV RGB images, Sentinel-2 data, and machine learning (ML) models for estimating forest canopy cover (FCC), aimed at precise and fast landscape-scale mapping | Kheyrud Experimental Forest, northern Iran: four flown plots (20, 15, 17, and 19 ha) | Forest canopy cover | [63] |
| DJI Phantom 4 Pro (DJI, Shenzhen, China) | Sentinel-2 | Showed that UAV-based RGB orthophotos and canopy height model (CHM) data detect and classify scattered trees and different land covers along a narrow river very well | Chaharmahal-va-Bakhtiari Province, Iran: five plots | Riparian landscape adjoining a narrow river | [47] |
| DJI Inspire, eBee (senseFly SA, Cheseaux-sur-Lausanne, Switzerland), and Parrot Disco (Parrot, Paris, France) | Sentinel-1 SAR and Sentinel-2 | Used UAV imagery to create a ground-truth dataset for mapping cropped areas, establishing a higher potential for UAVs than for satellite platforms | Rwanda: small monocropped fields, intercropped fields, and natural vegetation (80 ha per location) | Crops, mixed crops and grassland, small tree stands, woodlands, and small forests | [64] |
| senseFly eBee X with a Parrot Sequoia+ multispectral camera | Synthetic aperture radar (SAR) | Assessed the synergy of a multispectral UAV dataset and SAR for understanding the spectral features of target objects, using support vector machine (SVM) and random forest (RFC) classifiers | International Institute of Tropical Agriculture (IITA) agricultural fields, Nigeria | Experimental plots | [48] |
| DJI Mavic Pro micro-quadcopter and Parrot Sequoia multispectral sensor | - | Explored whether fractional vegetation component (FVC) estimates vary with the classification approach (pixel- and segment-based random forest classifiers) applied to very-high-resolution small-UAV imagery | Chobe Enclave, Botswana (southern African dryland savanna): nine sites | Savanna cover: grass-, shrub-, and tree-dominated sites | [65] |
| Micro-quadcopter with a MicaSense multispectral sensor | - | Assessed the efficacy of unmanned aerial system (UAS) imagery for monitoring vegetation structural characteristics in a mixed savanna woodland | Chobe Enclave, Botswana: nine grass, shrub, and tree sites | Savanna cover and woody vegetation structure | [38] |
| eBee X fixed-wing with Airinov multiSPEC 4C sensor | - | Mapped the spatial extent of banana farmland mixed with buildings, bare land, and other vegetation in four villages in Rwanda | Rwanda: smallholder farmland | Small plots of banana farmland | [66] |
| DJI Phantom 4 Pro | Sentinel-2 | Assessed coastal shoreline change using multi-platform data (drones, a shore-based camera, Sentinel-2 images, and a dumpy level) for effective monitoring; the UAV and local video cameras were more effective than Sentinel-2 | Elmina Bay, Ghana: 1.5 m beach | Beach area | [67] |

2. Materials and Methods

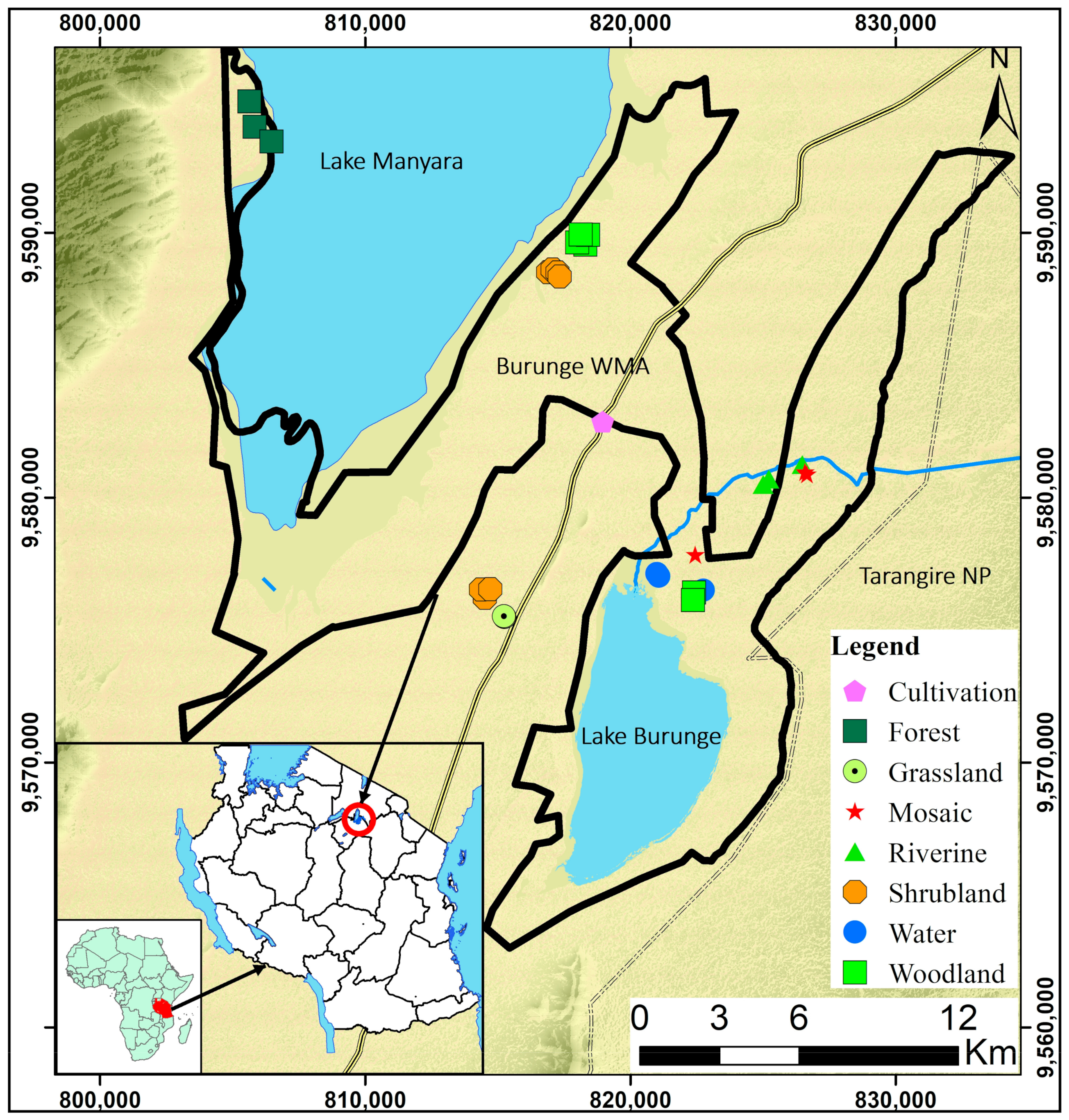

2.1. Study Area

2.2. Data Collection

2.2.1. Ground Survey for the Determination of Land Cover Types

2.2.2. UAV-Based Survey

UAV Used and Flight Mission Planning
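Flight mission planning for photogrammetric mapping usually starts from the ground sampling distance (GSD) and the forward (front) and side overlaps between images. The following sketch applies the standard pinhole-camera relationships to derive the GSD, the ground footprint of a single image, and the spacing between exposures and between flight lines. The camera parameters used in the example (a 13.2 × 8.8 mm sensor, an 8.8 mm focal length, and 5472 px image width) and the 80%/70% overlaps are illustrative assumptions, not values reported for this study. The function names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Camera:
    """Hypothetical camera description; values are illustrative, not from the study."""
    sensor_width_mm: float
    sensor_height_mm: float
    focal_length_mm: float
    image_width_px: int


def gsd_cm_per_px(cam: Camera, altitude_m: float) -> float:
    """Ground sampling distance for a nadir image over flat terrain (cm per pixel)."""
    return (cam.sensor_width_mm * altitude_m * 100.0) / (cam.focal_length_mm * cam.image_width_px)


def footprint_m(cam: Camera, altitude_m: float) -> tuple[float, float]:
    """Ground footprint of one image (across-track width, along-track length) in metres.

    Assumes the long side of the sensor is oriented across the flight line.
    """
    scale = altitude_m / cam.focal_length_mm  # similar triangles: ground size / sensor size
    return cam.sensor_width_mm * scale, cam.sensor_height_mm * scale


def spacing_m(cam: Camera, altitude_m: float, front_overlap: float, side_overlap: float) -> tuple[float, float]:
    """Distance between consecutive exposures and between adjacent flight lines (metres)."""
    if not (0.0 <= front_overlap < 1.0 and 0.0 <= side_overlap < 1.0):
        raise ValueError("overlaps must be fractions in [0, 1)")
    width, length = footprint_m(cam, altitude_m)
    return length * (1.0 - front_overlap), width * (1.0 - side_overlap)


if __name__ == "__main__":
    cam = Camera(sensor_width_mm=13.2, sensor_height_mm=8.8, focal_length_mm=8.8, image_width_px=5472)
    print(f"GSD at 100 m: {gsd_cm_per_px(cam, 100.0):.2f} cm/px")
    photo, line = spacing_m(cam, 100.0, front_overlap=0.8, side_overlap=0.7)
    print(f"photo spacing {photo:.1f} m, line spacing {line:.1f} m")
```

With these illustrative values at 100 m altitude, the sketch gives a GSD of about 2.74 cm/px. It also gives exposures every 20 m and flight lines 45 m apart. Raising the overlap shortens both spacings and increases the number of images to process.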

Image Processing to Create RGB Orthoimages

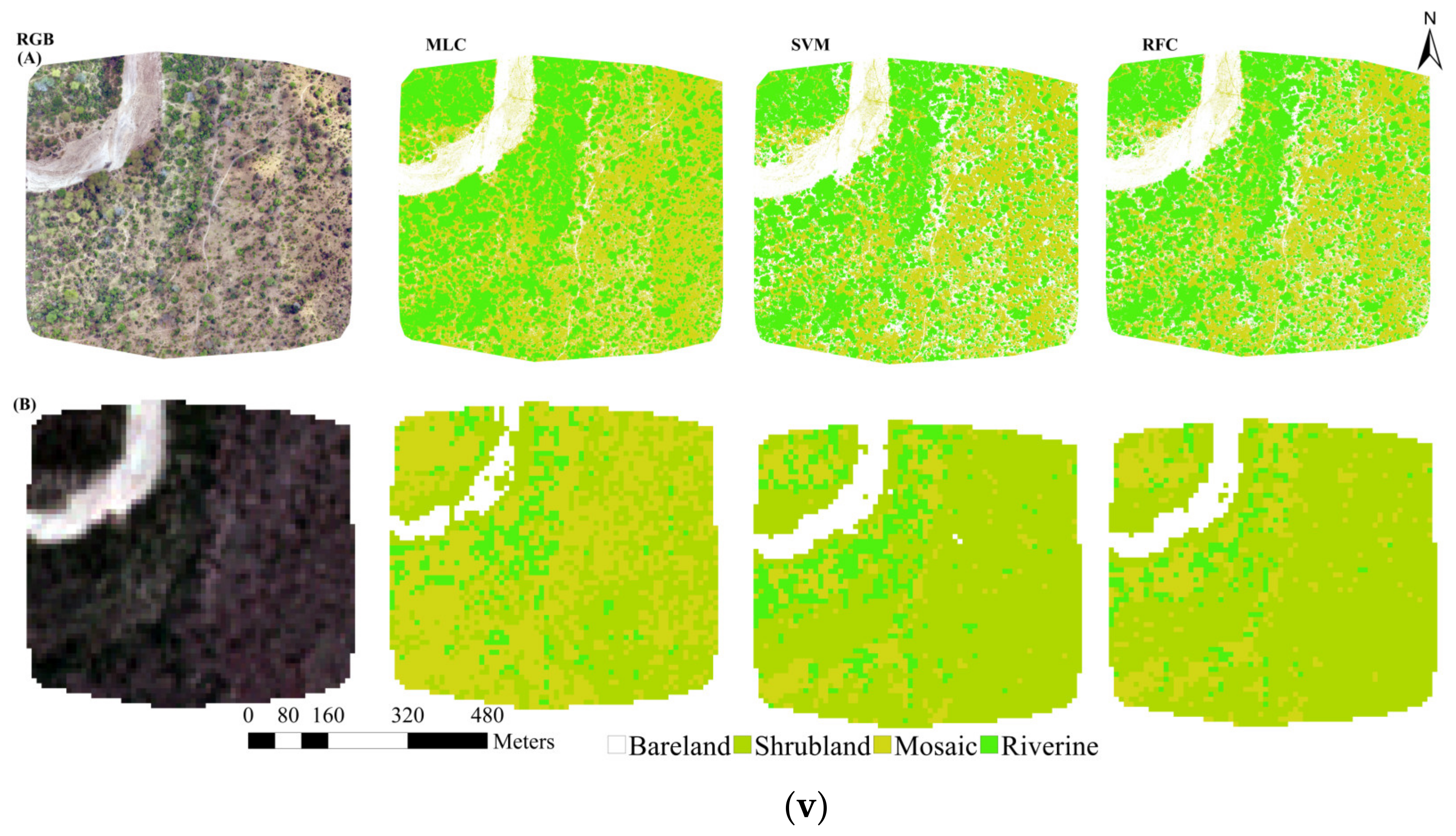

Upscaling Orthoimages to Sentinel-2 Grid Cell Resolutions
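One common way to bring a very-high-resolution UAV product onto the 10 m Sentinel-2 grid is block aggregation, in which each 10 m cell summarises the UAV pixels that fall inside it. The sketch below rests on three assumptions:

- The orthoimage has already been labelled into land-cover classes.
- The UAV grid is aligned with the Sentinel-2 grid.
- The cell size is an exact multiple of the UAV pixel size (e.g., 200 UAV pixels per side at a hypothetical 5 cm GSD).

For each coarse cell, the sketch reports the majority class and the class fractions. The function name and class labels are hypothetical, and this is not necessarily the resampling used by the authors.

```python
from collections import Counter


def upscale_labels(fine, factor):
    """Aggregate a fine-resolution label grid into coarse cells of factor x factor pixels.

    Returns a grid in which each coarse cell is (majority_label, {label: fraction}).
    Ties for the majority go to the label first encountered when the block is
    scanned row by row.
    """
    rows, cols = len(fine), len(fine[0])
    if rows % factor or cols % factor:
        raise ValueError("grid dimensions must be exact multiples of factor")
    cell_size = factor * factor
    coarse = []
    for r0 in range(0, rows, factor):
        coarse_row = []
        for c0 in range(0, cols, factor):
            counts = Counter(
                fine[r][c]
                for r in range(r0, r0 + factor)
                for c in range(c0, c0 + factor)
            )
            majority = counts.most_common(1)[0][0]
            fractions = {label: n / cell_size for label, n in counts.items()}
            coarse_row.append((majority, fractions))
        coarse.append(coarse_row)
    return coarse


# Toy 4 x 4 label grid (G = grassland, W = woodland, B = bare land), aggregated 2 x 2.
fine = [
    ["G", "G", "W", "W"],
    ["G", "B", "W", "W"],
    ["B", "B", "G", "W"],
    ["B", "G", "G", "G"],
]
for row in upscale_labels(fine, 2):
    print(row)
```

Keeping the class fractions alongside the majority label makes it possible to flag mixed 10 m cells, which are the cells most likely to be labelled inconsistently at Sentinel-2 resolution.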

2.3. Collection of Training and Validation Sample Points

2.4. Satellite Image Acquisition and Pre-Processing

2.5. Image Classification

2.6. Accuracy Assessment
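Accuracy assessment of a classified map is conventionally summarised from an error (confusion) matrix. The sketch below computes four statistics from it: overall accuracy, user's accuracy, producer's accuracy, and Cohen's kappa. It assumes that rows hold the classified (map) labels and columns hold the reference labels. The matrix in the example is made up for illustration and is not a result from this study. Kappa is included because it is still widely reported, although some good-practice guidance on accuracy assessment discourages relying on it.

```python
from __future__ import annotations


def accuracy_metrics(matrix: list[list[int]]) -> dict[str, object]:
    """Summarise a square confusion matrix (rows = map classes, columns = reference classes)."""
    k = len(matrix)
    if any(len(row) != k for row in matrix):
        raise ValueError("confusion matrix must be square")
    total = sum(sum(row) for row in matrix)
    diag = [matrix[i][i] for i in range(k)]
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(k)) for j in range(k)]

    overall = sum(diag) / total
    # User's accuracy: share of pixels mapped as class i that are class i in the reference data.
    users = [d / r if r else float("nan") for d, r in zip(diag, row_totals)]
    # Producer's accuracy: share of reference class j pixels that were mapped correctly.
    producers = [d / c if c else float("nan") for d, c in zip(diag, col_totals)]
    # Chance agreement expected from the row and column marginal totals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total**2
    kappa = (overall - expected) / (1 - expected)
    return {"overall": overall, "users": users, "producers": producers, "kappa": kappa}


# Illustrative three-class matrix (not data from this study).
cm = [
    [50, 5, 0],
    [3, 40, 2],
    [2, 5, 43],
]
print(accuracy_metrics(cm))
```

For this illustrative matrix, overall accuracy is 133/150 (about 0.887) and kappa is about 0.83.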

2.7. Combining UAV-Guided and Unguided Sentinel-2 LULC Classification Maps
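At its core, combining two classification maps of the same area is a pixel-by-pixel cross-tabulation. The sketch below assumes both maps are co-registered label grids on the same 10 m Sentinel-2 grid and computes three things:

- the count of every (UAV-guided, unguided) class pair;
- the overall proportion of pixels on which the two maps agree;
- for each class in the UAV-guided map, the share of its pixels that the unguided map assigns to the same class.

The function and the toy labels are hypothetical illustrations, not the authors' implementation.

```python
from collections import Counter


def compare_maps(guided, unguided):
    """Cross-tabulate two co-registered label grids pixel by pixel.

    Returns (pair_counts, overall_agreement, per_class_agreement), where
    per-class agreement is measured relative to the classes of `guided`.
    """
    if len(guided) != len(unguided) or any(
        len(a) != len(b) for a, b in zip(guided, unguided)
    ):
        raise ValueError("maps must have identical dimensions")
    pairs = Counter(
        (g, u)
        for row_g, row_u in zip(guided, unguided)
        for g, u in zip(row_g, row_u)
    )
    total = sum(pairs.values())
    overall = sum(n for (g, u), n in pairs.items() if g == u) / total

    guided_totals = Counter()
    for (g, _), n in pairs.items():
        guided_totals[g] += n
    # Counter returns 0 for a missing (c, c) pair, i.e. no agreement for that class.
    per_class = {c: pairs[(c, c)] / n for c, n in guided_totals.items()}
    return pairs, overall, per_class


# Toy 2 x 3 maps (G = grassland, W = woodland, B = bare land).
guided = [["G", "G", "W"], ["B", "G", "W"]]
unguided = [["G", "B", "W"], ["B", "G", "G"]]
pairs, overall, per_class = compare_maps(guided, unguided)
print(f"overall agreement: {overall:.1%}")
print(per_class)
```

The pair counts double as the legend of a combination map. Each distinct (guided, unguided) pair can be given its own code to show where the two approaches agree and where they diverge.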

3. Results

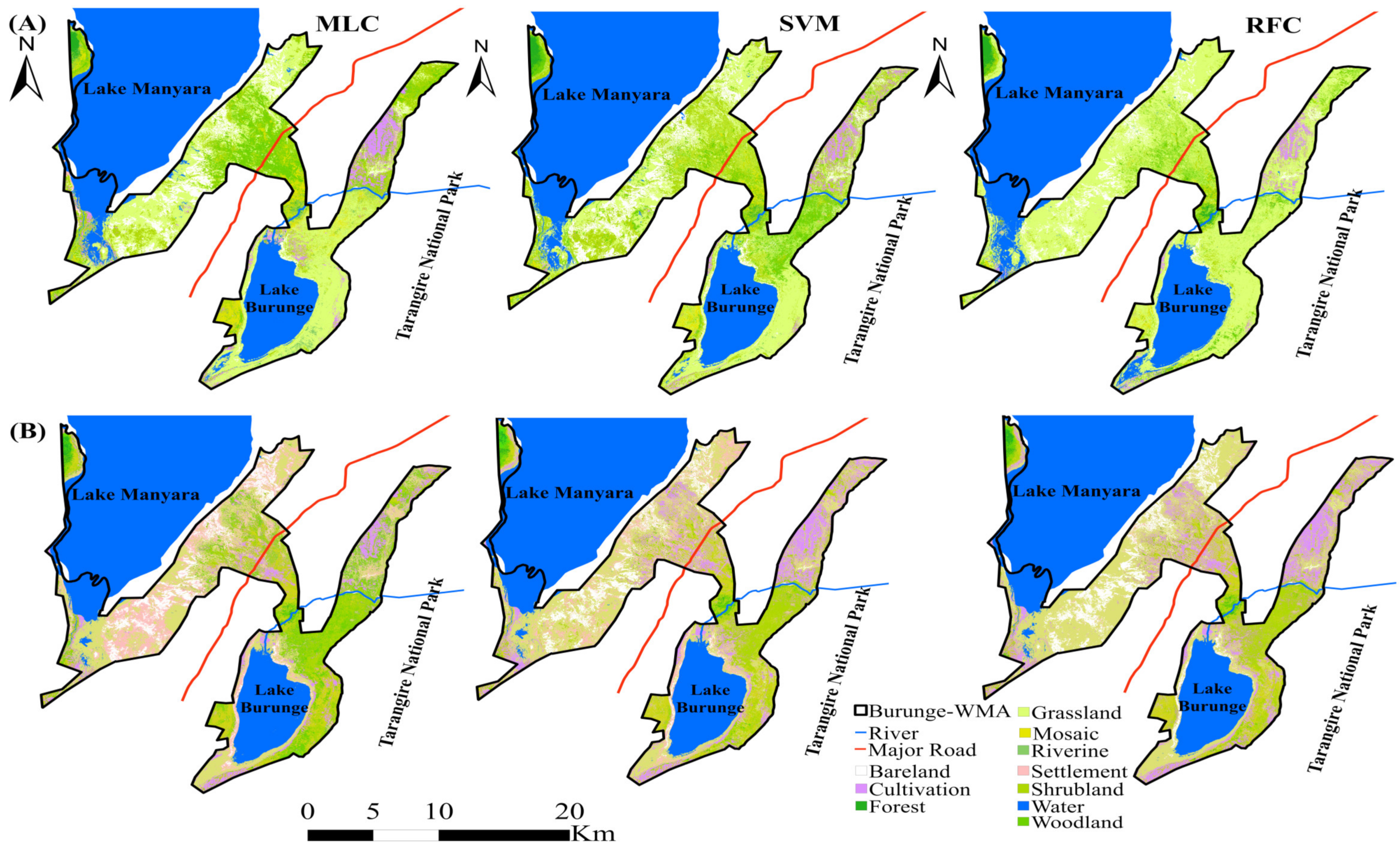

3.1. Accuracy Assessment for Ground-Linked UAV-Guided Sentinel-2 LULC Classification Approach

3.2. Accuracy Assessment for Unguided Sentinel-2 LULC Classification Approach

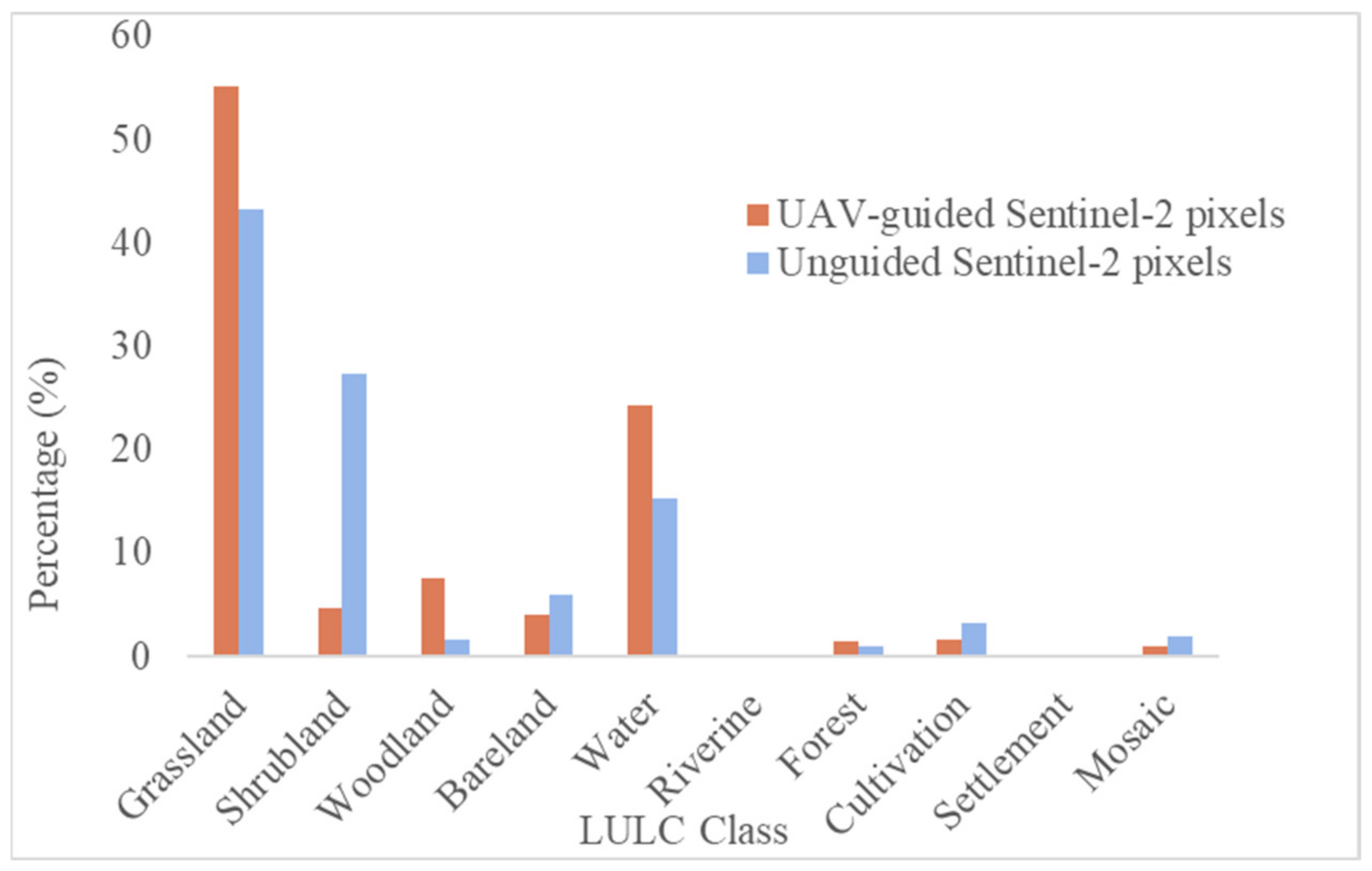

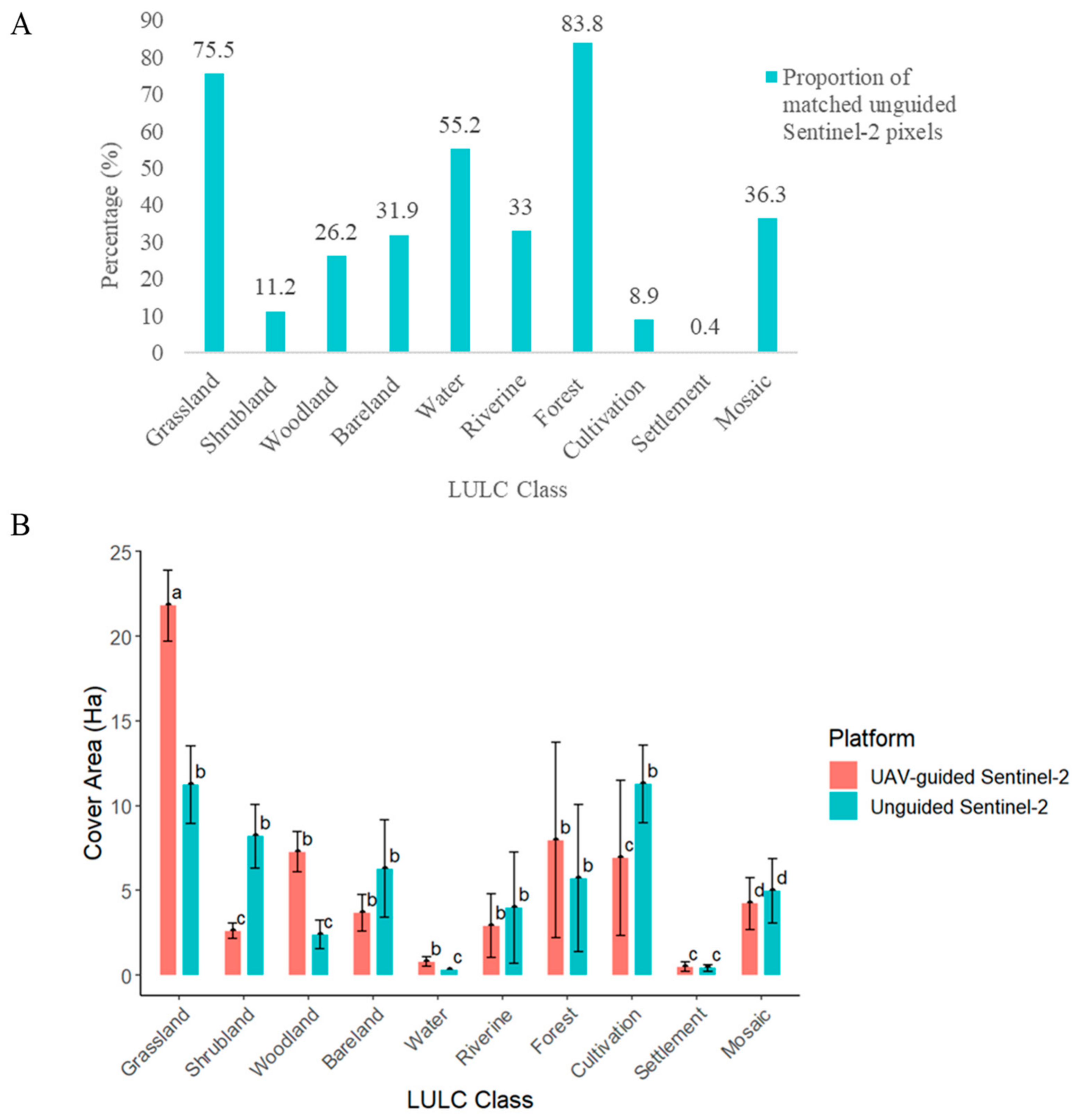

3.3. Comparative Extent and Spatial Distribution Patterns of LULC Classes Derived from UAV-Guided and Unguided Sentinel-2 Classification Approaches
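Class extents for comparing two maps follow directly from pixel counts: at 10 m resolution each pixel covers 100 m², or 0.01 ha. The sketch below assumes label grids on the 10 m Sentinel-2 grid. It converts class counts to hectares and to percentages of the mapped area, then reports the per-class area difference between two maps. The toy grids and function names are hypothetical.

```python
from collections import Counter

PIXEL_SIZE_M = 10.0  # assumed Sentinel-2 10 m pixel size
M2_PER_HA = 10_000.0


def class_extent(label_grid, pixel_size_m=PIXEL_SIZE_M):
    """Return {label: (area_ha, percent_of_mapped_area)} for a label grid."""
    counts = Counter(label for row in label_grid for label in row)
    total = sum(counts.values())
    pixel_ha = pixel_size_m**2 / M2_PER_HA
    return {label: (n * pixel_ha, 100.0 * n / total) for label, n in counts.items()}


def extent_difference_ha(map_a, map_b, pixel_size_m=PIXEL_SIZE_M):
    """Per-class area difference (map_a minus map_b) in hectares."""
    a = class_extent(map_a, pixel_size_m)
    b = class_extent(map_b, pixel_size_m)
    labels = sorted(set(a) | set(b))
    return {c: a.get(c, (0.0, 0.0))[0] - b.get(c, (0.0, 0.0))[0] for c in labels}


# Toy 2 x 3 maps (G = grassland, W = woodland, B = bare land).
guided = [["G", "G", "W"], ["B", "G", "W"]]
unguided = [["G", "B", "W"], ["B", "G", "G"]]
print(class_extent(guided))
print(extent_difference_ha(guided, unguided))
```

Equal class totals can still hide large spatial disagreement, so extent comparisons are best read together with a pixel-wise agreement analysis.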

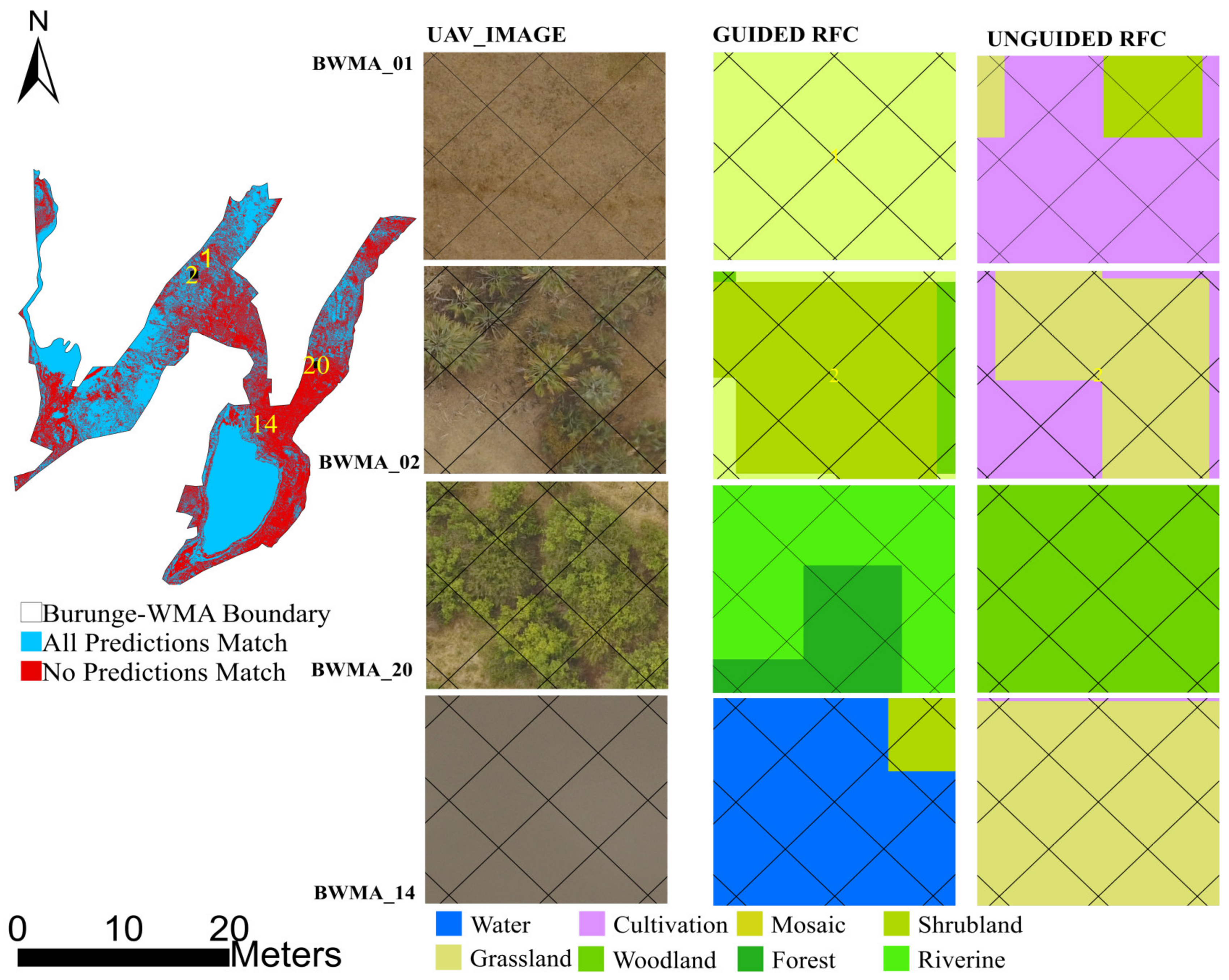

3.4. Agreement of UAV-Guided and Unguided Sentinel-2 LULC Classification Maps—RFC

4. Discussion

5. Conclusions and Recommendations

5.1. Conclusions

5.2. Recommendations

- Scale up this approach to the entire Kwakuchinja Wildlife Corridor (1280 km²), which is less protected than the Burunge WMA (~300 km²) that forms part of this important corridor. Two previous studies covering the entire corridor used medium-resolution Landsat imagery. Because land within the corridor falls under different levels of protection according to its legal status, the approach deployed in this study should be applied to the corridor as a whole.

- Scale up this approach to the other community wildlife management areas in the country, which range in size from 61 to 5372 km², to update their LULC maps. Applying the same approach would generate high-resolution baseline information for future assessments of LULC change. For much larger core protected areas, such as national parks, further studies are needed to address key challenges: costs (time and resources), the magnitude of heterogeneity, and the levels of LULC classes (e.g., intact forests, disturbed forests with canopy gaps, and regenerating ecosystems recovering from disturbance).

- Conduct a follow-up study in the study area to assess the expansion of woody plants into other vegetation types, mainly grasslands, to inform management, government, and other key stakeholders about appropriate interventions.

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Mmbaga, N.E.; Munishi, L.K.; Treydte, A.C. How dynamics and drivers of land use/land cover change impact elephant conservation and agricultural livelihood development in Rombo, Tanzania. J. Land Use Sci. 2017, 12, 168–181.

2. Kidane, Y.; Stahlmann, R.; Beierkuhnlein, C. Vegetation dynamics, and land use and land cover change in the Bale Mountains, Ethiopia. Environ. Monit. Assess. 2012, 184, 7473–7489.

3. Hamilton, C.M.; Martinuzzi, S.; Plantinga, A.J.; Radeloff, V.C.; Lewis, D.J.; Thogmartin, W.E.; Heglund, P.J.; Pidgeon, A.M. Current and future land use around a nationwide protected area network. PLoS ONE 2013, 8, e55737.

4. Ndegwa Mundia, C.; Murayama, Y. Analysis of land use/cover changes and animal population dynamics in a wildlife sanctuary in East Africa. Remote Sens. 2009, 1, 952–970.

5. Jewitt, D.; Goodman, P.S.; Erasmus, B.F.; O’Connor, T.G.; Witkowski, E.T. Systematic land-cover change in KwaZulu-Natal, South Africa: Implications for biodiversity. S. Afr. J. Sci. 2015, 111, 1–9.

6. Mashapa, C.; Gandiwa, E.; Muboko, N.; Mhuriro-Mashapa, P. Land use and land cover changes in a human-wildlife mediated landscape of Save Valley Conservancy, south-eastern lowveld of Zimbabwe. J. Anim. Plant Sci. 2021, 31, 583–595.

7. Kiffner, C.; Wenner, C.; LaViolet, A.; Yeh, K.; Kioko, J. From savannah to farmland: Effects of land-use on mammal communities in the Tarangire–Manyara ecosystem, Tanzania. Afr. J. Ecol. 2015, 53, 156–166.

8. Hadfield, L.A.; Durrant, J.O.; Jensen, R.R.; Melubo, K.; Weisler, L.; Martin, E.H.; Hardin, P.J. Protected Areas in Northern Tanzania: Local Communities, Land Use Change, and Management Challenges; Springer: Berlin/Heidelberg, Germany, 2020.

9. Rahmonov, O.; Szypuła, B.; Sobala, M.; Islamova, Z.B. Environmental and Land-Use Changes as a Consequence of Land Reform in the Urej River Catchment (Western Tajikistan). Resources 2024, 13, 59.

10. Twisa, S.; Mwabumba, M.; Kurian, M.; Buchroithner, M.F. Impact of land-use/land-cover change on drinking water ecosystem services in Wami River Basin, Tanzania. Resources 2020, 9, 37.

11. Sharma, R.; Rimal, B.; Baral, H.; Nehren, U.; Paudyal, K.; Sharma, S.; Rijal, S.; Ranpal, S.; Acharya, R.P.; Alenazy, A.A. Impact of land cover change on ecosystem services in a tropical forested landscape. Resources 2019, 8, 18.

12. Kideghesho, J.R.; Nyahongo, J.W.; Hassan, S.N.; Tarimo, T.C.; Mbije, N.E. Factors and ecological impacts of wildlife habitat destruction in the Serengeti ecosystem in northern Tanzania. Afr. J. Environ. Assess. Manag. 2006, 11, 17–32.

13. Martin, E.H.; Jensen, R.R.; Hardin, P.J.; Kisingo, A.W.; Shoo, R.A.; Eustace, A. Assessing changes in Tanzania’s Kwakuchinja Wildlife Corridor using multitemporal satellite imagery and open source tools. Appl. Geogr. 2019, 110, 102051.

14. Jones, T.; Bamford, A.J.; Ferrol-Schulte, D.; Hieronimo, P.; McWilliam, N.; Rovero, F. Vanishing wildlife corridors and options for restoration: A case study from Tanzania. Trop. Conserv. Sci. 2012, 5, 463–474.

15. Riggio, J.; Caro, T. Structural connectivity at a national scale: Wildlife corridors in Tanzania. PLoS ONE 2017, 12, e0187407.

16. Mangewa, L.J.; Kikula, I.S.; Lyimo, J.G. Ecological Viability of the Upper Kitete-Selela Wildlife Corridor in the Tarangire-Manyara Ecosystem: Implications to African Elephants and Buffalo Movements. ICFAI J. Environ. Econ. 2009, 7, 62–73.

17. Debonnet, G.; Nindi, S. Technical Study on Land Use and Tenure Options and Status of Wildlife Corridors in Tanzania: An Input to the Preparation of Corridor; USAID: Washington, DC, USA, 2017.

18. Mtui, D.T. Evaluating Landscape and Wildlife Changes over Time in Tanzania’s Protected Areas. Ph.D. Thesis, University of Hawai’i at Manoa, Honolulu, HI, USA, 2014.

19. Yadav, P.; Kapoor, M.; Sarma, K. Land use land cover mapping, change detection and conflict analysis of Nagzira-Navegaon Corridor, Central India using geospatial technology. Int. J. Remote Sens. 2012, 1, 90–98.

20. Kiffner, C.; Kioko, J.; Baylis, J.; Beckwith, C.; Brunner, C.; Burns, C.; Chavez-Molina, V.; Cotton, S.; Glazik, L.; Loftis, E. Long-term persistence of wildlife populations in a pastoral area. Ecol. Evol. 2020, 10, 10000–10016.

21. Zhi, X.; Du, H.; Zhang, M.; Long, Z.; Zhong, L.; Sun, X. Mapping the habitat for the moose population in Northeast China by combining remote sensing products and random forests. Glob. Ecol. Conserv. 2022, 40, e02347.

22. Ishida, T.; Kurihara, J.; Viray, F.A.; Namuco, S.B.; Paringit, E.C.; Perez, G.J.; Takahashi, Y.; Marciano, J.J., Jr. A novel approach for vegetation classification using UAV-based hyperspectral imaging. Comput. Electron. Agric. 2018, 144, 80–85.

23. Ivošević, B.; Lugonja, P.; Brdar, S.; Radulović, M.; Vujić, A.; Valente, J. UAV-based land cover classification for hoverfly (Diptera: Syrphidae) habitat condition assessment: A case study on Mt. Stara Planina (Serbia). Remote Sens. 2021, 13, 3272.

24. Kija, H.K.; Ogutu, J.O.; Mangewa, L.J.; Bukombe, J.; Verones, F.; Graae, B.J.; Kideghesho, J.R.; Said, M.Y.; Nzunda, E.F. Land use and land cover change within and around the greater Serengeti ecosystem, Tanzania. Am. J. Remote Sens. 2020, 8, 1–19.

25. Seefeldt, S.S.; Booth, D.T. Measuring plant cover in sagebrush steppe rangelands: A comparison of methods. Environ. Manag. 2006, 37, 703–711.

26. Sumari, N.; Shao, Z.; Huang, M.; Sanga, C.; Van Genderen, J. Urban expansion: A geo-spatial approach for temporal monitoring of loss of agricultural land. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 1349–1355.

27. Al-Ali, Z.; Abdullah, M.; Asadalla, N.; Gholoum, M. A comparative study of remote sensing classification methods for monitoring and assessing desert vegetation using a UAV-based multispectral sensor. Environ. Monit. Assess. 2020, 192, 389.

28. Ge, G.; Shi, Z.; Zhu, Y.; Yang, X.; Hao, Y. Land use/cover classification in an arid desert-oasis mosaic landscape of China using remote sensed imagery: Performance assessment of four machine learning algorithms. Glob. Ecol. Conserv. 2020, 22, e00971.

29. Tsuyuki, S. Completing yearly land cover maps for accurately describing annual changes of tropical landscapes. Glob. Ecol. Conserv. 2018, 13, e00384.

30. Singh, R.K.; Singh, P.; Drews, M.; Kumar, P.; Singh, H.; Gupta, A.K.; Govil, H.; Kaur, A.; Kumar, M. A machine learning-based classification of LANDSAT images to map land use and land cover of India. Remote Sens. Appl. Soc. Environ. 2021, 24, 100624.

31. Mwabumba, M.; Yadav, B.K.; Rwiza, M.J.; Larbi, I.; Twisa, S. Analysis of land use and land-cover pattern to monitor dynamics of Ngorongoro world heritage site (Tanzania) using hybrid cellular automata-Markov model. Curr. Res. Environ. Sustain. 2022, 4, 100126.

32. Sekertekin, A.; Marangoz, A.; Akcin, H. Pixel-based classification analysis of land use land cover using Sentinel-2 and Landsat-8 data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 91–93.

33. Schirrmann, M.; Giebel, A.; Gleiniger, F.; Pflanz, M.; Lentschke, J.; Dammer, K.-H. Monitoring agronomic parameters of winter wheat crops with low-cost UAV imagery. Remote Sens. 2016, 8, 706.

34. Zhang, H.; Feng, Y.; Guan, W.; Cao, X.; Li, Z.; Ding, J. Using unmanned aerial vehicles to quantify spatial patterns of dominant vegetation along an elevation gradient in the typical Gobi region in Xinjiang, Northwest China. Glob. Ecol. Conserv. 2021, 27, e01571.

35. Mesas-Carrascosa, F.J.; Notario-García, M.D.; de Larriva, J.E.M.; de la Orden, M.S.; Porras, A.G.-F. Validation of measurements of land plot area using UAV imagery. Int. J. Appl. Earth Obs. Geoinf. 2014, 33, 270–279.

36. Yu, T.; Ni, W.; Zhang, Z.; Liu, Q.; Sun, G. Regional sampling of forest canopy covers using UAV visible stereoscopic imagery for assessment of satellite-based products in Northeast China. J. Remote Sens. 2022, 2022, 9806802.

37. Mishra, V.N.; Rai, P.K.; Kumar, P.; Prasad, R. Evaluation of land use/land cover classification accuracy using multi-resolution remote sensing images. Forum Geogr. 2016, 15, 45–53.

38. Kolarik, N.E.; Gaughan, A.E.; Stevens, F.R.; Pricope, N.G.; Woodward, K.; Cassidy, L.; Salerno, J.; Hartter, J. A multi-plot assessment of vegetation structure using a micro-unmanned aerial system (UAS) in a semi-arid savanna environment. ISPRS J. Photogramm. Remote Sens. 2020, 164, 84–96.

39. Ouattara, T.A.; Sokeng, V.-C.J.; Zo-Bi, I.C.; Kouamé, K.F.; Grinand, C.; Vaudry, R. Detection of forest tree losses in Côte d’Ivoire using drone aerial images. Drones 2022, 6, 83.

40. Campos, J.; García-Ruíz, F.; Gil, E. Assessment of vineyard canopy characteristics from vigour maps obtained using UAV and satellite imagery. Sensors 2021, 21, 2363.

41. Gbiri, I.A.; Idoko, I.A.; Okegbola, M.O.; Oyelakin, L.O. Analysis of Forest Vegetal Characteristics of Akure Forest Reserve from Optical Imageries and Unmanned Aerial Vehicle Data. Eur. J. Eng. Technol. Res. 2019, 4, 57–61.

42. Gonzalez Musso, R.F.; Oddi, F.J.; Goldenberg, M.G.; Garibaldi, L.A. Applying unmanned aerial vehicles (UAVs) to map shrubland structural attributes in northern Patagonia, Argentina. Can. J. For. Res. 2020, 50, 615–623.

43. Vinci, A.; Brigante, R.; Traini, C.; Farinelli, D. Geometrical characterization of hazelnut trees in an intensive orchard by an unmanned aerial vehicle (UAV) for precision agriculture applications. Remote Sens. 2023, 15, 541.

44. Dash, J.P. On the Detection and Monitoring of Invasive Exotic Conifers in New Zealand Using Remote Sensing. Ph.D. Thesis, University of Canterbury, Christchurch, New Zealand, 2020.

45. Michez, A.; Philippe, L.; David, K.; Sébastien, C.; Christian, D.; Bindelle, J. Can low-cost unmanned aerial systems describe the forage quality heterogeneity? Insight from a timothy pasture case study in southern Belgium. Remote Sens. 2020, 12, 1650.

46. Mollick, T.; Azam, M.G.; Karim, S. Geospatial-based machine learning techniques for land use and land cover mapping using a high-resolution unmanned aerial vehicle image. Remote Sens. Appl. Soc. Environ. 2023, 29, 100859.

47. Daryaei, A.; Sohrabi, H.; Atzberger, C.; Immitzer, M. Fine-scale detection of vegetation in semi-arid mountainous areas with focus on riparian landscapes using Sentinel-2 and UAV data. Comput. Electron. Agric. 2020, 177, 105686.

48. Duke, O.P.; Alabi, T.; Neeti, N.; Adewopo, J. Comparison of UAV and SAR performance for Crop type classification using machine learning algorithms: A case study of humid forest ecology experimental research site of West Africa. Int. J. Remote Sens. 2022, 43, 4259–4286.

49. Bhatt, P.; Maclean, A.L. Comparison of high-resolution NAIP and unmanned aerial vehicle (UAV) imagery for natural vegetation communities classification using machine learning approaches. GIScience Remote Sens. 2023, 60, 2177448.

50. Rodriguez-Galiano, V.F.; Ghimire, B.; Rogan, J.; Chica-Olmo, M.; Rigol-Sanchez, J.P. An assessment of the effectiveness of a random forest classifier for land-cover classification. ISPRS J. Photogramm. Remote Sens. 2012, 67, 93–104.

51. Bui, D.H.; Mucsi, L. From land cover map to land use map: A combined pixel-based and object-based approach using multi-temporal Landsat data, a random forest classifier, and decision rules. Remote Sens. 2021, 13, 1700.

52. Ahmad, A.; Quegan, S. Analysis of maximum likelihood classification technique on Landsat 5 TM satellite data of tropical land covers. In Proceedings of the 2012 IEEE International Conference on Control System, Computing and Engineering, Penang, Malaysia, 23–25 November 2012; pp. 280–285.

53. Varotsos, C.A.; Cracknell, A.P. Remote Sensing Letters contribution to the success of the Sustainable Development Goals-UN 2030 agenda. Remote Sens. Lett. 2020, 11, 715–719.

54. Christensen, M.; Jokar Arsanjani, J. Stimulating implementation of sustainable development goals and conservation action: Predicting future land use/cover change in Virunga National Park, Congo. Sustainability 2020, 12, 1570.

55. Ruwaimana, M.; Satyanarayana, B.; Otero, V.; Muslim, A.M.; Syafiq, A.; Ibrahim, S.M.; Raymaekers, D.; Koedam, N.; Dahdouh-Guebas, F. The advantages of using drones over space-borne imagery in the mapping of mangrove forests. PLoS ONE 2018, 13, e0200288.

56. Stark, D.J.; Vaughan, I.P.; Evans, L.J.; Kler, H.; Goossens, B. Combining drones and satellite tracking as an effective tool for informing policy change in riparian habitats: A proboscis monkey case study. Remote Sens. Ecol. Conserv. 2018, 4, 44–52.

57. Graenzig, T.; Fassnacht, F.E.; Kleinschmit, B.; Foerster, M. Mapping the fractional coverage of the invasive shrub Ulex europaeus with multi-temporal Sentinel-2 imagery utilizing UAV orthoimages and a new spatial optimization approach. Int. J. Appl. Earth Obs. Geoinf. 2021, 96, 102281.

58. Yang, B.; Hawthorne, T.L.; Torres, H.; Feinman, M. Using object-oriented classification for coastal management in the east central coast of Florida: A quantitative comparison between UAV, satellite, and aerial data. Drones 2019, 3, 60.

59. Mienna, I.M.; Klanderud, K.; Ørka, H.O.; Bryn, A.; Bollandsås, O.M. Land cover classification of treeline ecotones along a 1100 km latitudinal transect using spectral- and three-dimensional information from UAV-based aerial imagery. Remote Sens. Ecol. Conserv. 2022, 8, 536–550.

60. Akumu, C.E.; Amadi, E.O.; Dennis, S. Application of drone and WorldView-4 satellite data in mapping and monitoring grazing land cover and pasture quality: Pre- and post-flooding. Land 2021, 10, 321.

61. Brinkhoff, J.; Hornbuckle, J.; Barton, J.L. Assessment of aquatic weed in irrigation channels using UAV and satellite imagery. Water 2018, 10, 1497.

62. Ahmed, O.S.; Shemrock, A.; Chabot, D.; Dillon, C.; Williams, G.; Wasson, R.; Franklin, S.E. Hierarchical land cover and vegetation classification using multispectral data acquired from an unmanned aerial vehicle. Int. J. Remote Sens. 2017, 38, 2037–2052.

63. Nasiri, V.; Darvishsefat, A.A.; Arefi, H.; Griess, V.C.; Sadeghi, S.M.M.; Borz, S.A. Modeling forest canopy cover: A synergistic use of Sentinel-2, aerial photogrammetry data, and machine learning. Remote Sens. 2022, 14, 1453.

64. Hegarty-Craver, M.; Polly, J.; O’Neil, M.; Ujeneza, N.; Rineer, J.; Beach, R.H.; Lapidus, D.; Temple, D.S. Remote crop mapping at scale: Using satellite imagery and UAV-acquired data as ground truth. Remote Sens. 2020, 12, 1984.

65. Gaughan, A.E.; Kolarik, N.E.; Stevens, F.R.; Pricope, N.G.; Cassidy, L.; Salerno, J.; Bailey, K.M.; Drake, M.; Woodward, K.; Hartter, J. Using Very-High-Resolution Multispectral Classification to Estimate Savanna Fractional Vegetation Components. Remote Sens. 2022, 14, 551.

66. Kilwenge, R.; Adewopo, J.; Sun, Z.; Schut, M. UAV-based mapping of banana land area for village-level decision-support in Rwanda. Remote Sens. 2021, 13, 4985.

67. Angnuureng, D.B.; Brempong, K.; Jayson-Quashigah, P.; Dada, O.; Akuoko, S.; Frimpomaa, J.; Mattah, P.; Almar, R. Satellite, drone and video camera multi-platform monitoring of coastal erosion at an engineered pocket beach: A showcase for coastal management at Elmina Bay, Ghana (West Africa). Reg. Stud. Mar. Sci. 2022, 53, 102437.

68. Lee, D.E. Evaluating conservation effectiveness in a Tanzanian community wildlife management area. J. Wildl. Manag. 2018, 82, 1767–1774.

69. Kicheleri, R.P.; Treue, T.; Kajembe, G.C.; Mombo, F.M.; Nielsen, M.R. Power struggles in the management of wildlife resources: The case of Burunge wildlife management area, Tanzania. In Wildlife Management-Failures, Successes and Prospects; IntechOpen: London, UK, 2018.

70. Prins, H.H.; Loth, P.E. Rainfall patterns as background to plant phenology in northern Tanzania. J. Biogeogr. 1988, 15, 451–463.

71. Mangewa, L.J.; Ndakidemi, P.A.; Alward, R.D.; Kija, H.K.; Bukombe, J.K.; Nasolwa, E.R.; Munishi, L.K. Comparative assessment of UAV and Sentinel-2 NDVI and GNDVI for preliminary diagnosis of habitat conditions in Burunge wildlife management area, Tanzania. Earth 2022, 3, 769–787.

72. Braun-Blanquet, J. Plant sociology. In The Study of Plant Communities, 1st ed.; W.H. Freeman & Co. Ltd.: New York, NY, USA, 1932.

73. Jennings, M.; Loucks, O.; Glenn-Lewin, D.; Peet, R.; Faber-Langendoen, D.; Grossman, D.; Damman, A.; Barbour, M.; Pfister, R.; Walker, M. Guidelines for Describing Associations and Alliances of the US National Vegetation Classification; The Ecological Society of America Vegetation Classification Panel: Washington, DC, USA, 2004; Volume 46.

74. Friedl, M.A.; McIver, D.K.; Hodges, J.C.; Zhang, X.Y.; Muchoney, D.; Strahler, A.H.; Woodcock, C.E.; Gopal, S.; Schneider, A.; Cooper, A. Global land cover mapping from MODIS: Algorithms and early results. Remote Sens. Environ. 2002, 83, 287–302.

75. Mtui, D.T.; Lepczyk, C.A.; Chen, Q.; Miura, T.; Cox, L.J. Assessing multi-decadal land-cover–land-use change in two wildlife protected areas in Tanzania using Landsat imagery. PLoS ONE 2017, 12, e0185468.

76. Bukombe, J.; Senzota, R.B.; Fryxell, J.M.; Kittle, A.; Kija, H.; Hopcraft, J.G.C.; Mduma, S.; Sinclair, A.R. Do animal size, seasons and vegetation type influence detection probability and density estimates of Serengeti ungulates? Afr. J. Ecol. 2016, 54, 29–38.

77. Tekle, K.; Hedlund, L. Land cover changes between 1958 and 1986 in Kalu District, southern Wello, Ethiopia. Mt. Res. Dev. 2000, 20, 42–51.

78. Bennett, A.F.; Radford, J.Q.; Haslem, A. Properties of land mosaics: Implications for nature conservation in agricultural environments. Biol. Conserv. 2006, 133, 250–264.

79. Bhatt, P.; Edson, C.; Maclean, A. Image Processing in Dense Forest Areas using Unmanned Aerial System (UAS); Michigan Tech Publications: Houghton, MI, USA, 2022.

80. Eskandari, R.; Mahdianpari, M.; Mohammadimanesh, F.; Salehi, B.; Brisco, B.; Homayouni, S. Meta-analysis of unmanned aerial vehicle (UAV) imagery for agro-environmental monitoring using machine learning and statistical models. Remote Sens. 2020, 12, 3511.

81. Seifert, E.; Seifert, S.; Vogt, H.; Drew, D.; Van Aardt, J.; Kunneke, A.; Seifert, T. Influence of drone altitude, image overlap, and optical sensor resolution on multi-view reconstruction of forest images. Remote Sens. 2019, 11, 1252.

82. Torres-Sánchez, J.; López-Granados, F.; Borra-Serrano, I.; Peña, J.M. Assessing UAV-collected image overlap influence on computation time and digital surface model accuracy in olive orchards. Precis. Agric. 2018, 19, 115–133.

83. Flores-de-Santiago, F.; Valderrama-Landeros, L.; Rodríguez-Sobreyra, R.; Flores-Verdugo, F. Assessing the effect of flight altitude and overlap on orthoimage generation for UAV estimates of coastal wetlands. J. Coast. Conserv. 2020, 24, 35.

84. Ahmad, L.; Habib Kanth, R.; Parvaze, S.; Sheraz Mahdi, S. Measurement of Cloud Cover. In Experimental Agrometeorology: A Practical Manual; Springer: Berlin/Heidelberg, Germany, 2017; pp. 51–54.

85. Lim, S. Geospatial Information Data Generation Using Unmanned Aerial Photogrammetry and Accuracy Assessment; Department of Civil Engineering, Graduate School Chungnam National University: Daejeon, Republic of Korea, 2016.

86. Yun, B.-Y.; Sung, S.-M. Location accuracy of unmanned aerial photogrammetry results according to change of number of ground control points. J. Korean Assoc. Geogr. Inf. Stud. 2018, 21, 24–33.

87. James, M.R.; Robson, S.; d’Oleire-Oltmanns, S.; Niethammer, U. Optimising UAV topographic surveys processed with structure-from-motion: Ground control quality, quantity and bundle adjustment. Geomorphology 2017, 280, 51–66.

88. Dash, J.P.; Pearse, G.D.; Watt, M.S. UAV multispectral imagery can complement satellite data for monitoring forest health. Remote Sens. 2018, 10, 1216.

89. Congalton, R.G. A review of assessing the accuracy of classifications of remotely sensed data. Remote Sens. Environ. 1991, 37, 35–46.

90. Shiraishi, T.; Motohka, T.; Thapa, R.B.; Watanabe, M.; Shimada, M. Comparative assessment of supervised classifiers for land use–land cover classification in a tropical region using time-series PALSAR mosaic data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 1186–1199.

91. Avcı, C.; Budak, M.; Yağmur, N.; Balçık, F. Comparison between random forest and support vector machine algorithms for LULC classification. Int. J. Eng. Geosci. 2023, 8, 1–10.

92. Zhang, F.; Yang, X. Improving land cover classification in an urbanized coastal area by random forests: The role of variable selection. Remote Sens. Environ. 2020, 251, 112105.

93. Hansen, M.; Dubayah, R.; Defries, R. Classification trees: An alternative to traditional land cover classifiers. Int. J. Remote Sens. 1996, 17, 1075–1081.

94. Taati, A.; Sarmadian, F.; Mousavi, A.; Pour, C.T.H.; Shahir, A.H.E. Land use classification using support vector machine and maximum likelihood algorithms by Landsat 5 TM images. Walailak J. Sci. Technol. 2015, 12, 681–687.

95. Frakes, R.A.; Belden, R.C.; Wood, B.E.; James, F.E. Landscape Analysis of Adult Florida Panther Habitat. PLoS ONE 2015, 10, e0133044.

96. Lu, D.; Weng, Q.; Moran, E.; Li, G.; Hetrick, S. Remote Sensing Image Classification; CRC Press/Taylor and Francis: Boca Raton, FL, USA, 2011.

97. Lillesand, T.; Kiefer, R.; Chipman, J. Digital image interpretation and analysis. Remote Sens. Image Interpret. 2008, 6, 545–581.

98. Olofsson, P.; Foody, G.M.; Herold, M.; Stehman, S.V.; Woodcock, C.E.; Wulder, M.A. Good practices for estimating area and assessing accuracy of land change. Remote Sens. Environ. 2014, 148, 42–57.

99. Foody, G.M. Harshness in image classification accuracy assessment. Int. J. Remote Sens. 2008, 29, 3137–3158.

100. Wulder, M.A.; Franklin, S.E.; White, J.C.; Linke, J.; Magnussen, S. An accuracy assessment framework for large-area land cover classification products derived from medium-resolution satellite data. Int. J. Remote Sens. 2006, 27, 663–683.

101. Nguyen, U.; Glenn, E.; Dang, T.; Pham, L. Mapping vegetation types in semi-arid riparian regions using random forest and object-based image approach: A case study of the Colorado River Ecosystem, Grand Canyon, Arizona. Ecol. Inform. 2019, 50, 43–50.

- Van Iersel, W.; Straatsma, M.; Middelkoop, H.; Addink, E. Multitemporal classification of river floodplain vegetation using time series of UAV images. Remote Sens. 2018, 10, 1144. [Google Scholar] [CrossRef]

- Phiri, D.; Simwanda, M.; Salekin, S.; Nyirenda, V.R.; Murayama, Y.; Ranagalage, M. Sentinel-2 data for land cover/use mapping: A review. Remote Sens. 2020, 12, 2291. [Google Scholar] [CrossRef]

- Otunga, C.; Odindi, J.; Mutanga, O.; Adjorlolo, C. Evaluating the potential of the red edge channel for C3 (Festuca spp.) grass discrimination using Sentinel-2 and Rapid Eye satellite image data. Geocarto Int. 2019, 34, 1123–1143. [Google Scholar] [CrossRef]

- Van Leeuwen, B.; Tobak, Z.; Kovács, F. Machine learning techniques for land use/land cover classification of medium resolution optical satellite imagery focusing on temporary inundated areas. J. Environ. Geogr. 2020, 13, 43–52. [Google Scholar] [CrossRef]

- Komarkova, J.; Sedlak, P.; Pešek, R.; Čermáková, I. Small water bodies identification by means of remote sensing. In Proceedings of the 7th International Conference on Cartography and GIS, Sozopol, Bulgaria, 18–23 June 2018; Volume 1, p. 2. [Google Scholar]

- Psychalas, C.; Vlachos, K.; Moumtzidou, A.; Gialampoukidis, I.; Vrochidis, S.; Kompatsiaris, I. Towards a Paradigm Shift on Mapping Muddy Waters with Sentinel-2 Using Machine Learning. Sustainability 2023, 15, 13441. [Google Scholar] [CrossRef]

- Waśniewski, A.; Hościło, A.; Zagajewski, B.; Moukétou-Tarazewicz, D. Assessment of Sentinel-2 satellite images and random forest classifier for rainforest mapping in Gabon. Forests 2020, 11, 941. [Google Scholar] [CrossRef]

- TAWIRI; United States Agency for International Development (USAID). Ecological viability assessment to support piloting implementation of wildlife corridor regulations in the proposed kwakuchinja wildlife corridor. In Kwakuchinja Wildlife Corridor Ecological Viability Assessment; USAID: Washington, DC, USA, 2019. [Google Scholar]

- Van Auken, O. Causes and consequences of woody plant encroachment into western North American grasslands. J. Environ. Manag. 2009, 90, 2931–2942. [Google Scholar] [CrossRef]

- Skowno, A.L.; Thompson, M.W.; Hiestermann, J.; Ripley, B.; West, A.G.; Bond, W.J. Woodland expansion in South African grassy biomes based on satellite observations (1990–2013): General patterns and potential drivers. Glob. Change Biol. 2017, 23, 2358–2369. [Google Scholar] [CrossRef]

- Sinclair, A.R.; Mduma, S.A.; Hopcraft, J.G.C.; Fryxell, J.M.; Hilborn, R.; Thirgood, S. Long-term ecosystem dynamics in the Serengeti: Lessons for conservation. Conserv. Biol. 2007, 21, 580–590. [Google Scholar] [CrossRef]

- Kimaro, H.; Asenga, A.; Munishi, L.; Treydte, A. Woody encroachment extent and its associated impacts on plant and herbivore species occurrence in Maswa Game Reserve, Tanzania. Environ. Nat. Resour. Res. 2019, 9. [Google Scholar] [CrossRef]

- Kitonsa, H.; Kruglikov, S.V. Significance of drone technology for achievement of the United Nations sustainable development goals. R-Econ. 2018, 4, 115–120. [Google Scholar] [CrossRef]

- Laliberte, A.S.; Rango, A.; Herrick, J. Unmanned aerial vehicles for rangeland mapping and monitoring: A comparison of two systems. In Proceedings of the ASPRS Annual Conference Proceedings, Tampa, FL, USA, 7–11 May 2007; pp. 1–10. [Google Scholar]

- Gambo, J.; Shafri, H.M.; Shaharum, N.S.N.; Abidin, F.A.Z.; Rahman, M.T.A. Monitoring and predicting land use-land cover (LULC) changes within and around krau wildlife reserve (KWR) protected area in Malaysia using multi-temporal landsat data. Geoplanning J. Geomat. Plan. 2018, 5, 17–34. [Google Scholar] [CrossRef]

- Brink, A.B.; Martínez-López, J.; Szantoi, Z.; Moreno-Atencia, P.; Lupi, A.; Bastin, L.; Dubois, G. Indicators for assessing habitat values and pressures for protected areas—An integrated habitat and land cover change approach for the Udzungwa Mountains National Park in Tanzania. Remote Sens. 2016, 8, 862. [Google Scholar] [CrossRef]

- Lim, T.Y.; Kim, J.; Kim, W.; Song, W. A Study on Wetland Cover Map Formulation and Evaluation Using Unmanned Aerial Vehicle High-Resolution Images. Drones 2023, 7, 536. [Google Scholar] [CrossRef]

| LULC Class | Description | Reference |
|---|---|---|
| Bare land | Exposed soil, sand, or rocks; vegetation cover < 2% | [18,74,75,76] |
| Cultivation/Agriculture | Characterized by a clear farm pattern, whether covered by crops, harvested, or left as bare soil. Includes perennial woody crops cultivated inside or adjacent to protected areas. | [18,24,74,75,77] |
| Settlement/Built-up areas | Houses (scattered or clustered) inside and adjacent to the protected area. May include trees, shrubs, grasses, and roads in varying proportions. | [18,74,75,76,77] |
| Water bodies | Rivers, streams, lakes, ponds, and impoundments, composed of water, grasses, forbs, sedges, and reeds. | [18,24,74,75] |
| Grasslands | Dominated by grasses and herbs. Includes savanna grassland (widely scattered trees and shrubs; tree and shrub cover ≤ 2%) and wooded grassland (scattered trees and shrubs; tree and shrub cover < 10%). | [18,74,75,76] |
| Shrublands | Woody vegetation (evergreen or deciduous) composed of shrubs (multi-stemmed woody plants ≤ 5 m tall) and trees ≤ 2 m tall, with a combined canopy cover of 10–60%. | [18,24,74,75,77] |
| Woodlands | Woody vegetation (evergreen or deciduous) composed of trees > 2 m tall. Includes open woodland/woody savanna (canopy cover 20–60%) and closed woodland (60–100% cover, with canopies not thickly interlaced). The understory consists of small proportions of grasses, shrubs, and forbs. | [74,76,77] |
| Mosaic | Plant community characterized by relatively similar proportions of two or more LULC classes. | [78] |
| Riverine vegetation | Tree-dominated vegetation along rivers. Includes mixtures of riverine forests, riverine woodlands, and dense shrubs. | [77] |
| Forests | Trees forming closed or nearly closed canopies. May comprise an upper story of trees 40–50 m tall, a lower story (8–15 m), an understory (2–8 m), and vines. Degraded open or patchy forests may resemble intact open woodland. | [23] |
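Several of the class definitions above rest on numeric cover and height thresholds. The minimal Python sketch below encodes only those thresholds as ordered rules, to show how the definitions interlock. It assumes plot-level inputs that the table does not itself name: total vegetation cover (%), combined tree and shrub canopy cover (%), and the height (m) of the tallest single-stemmed tree. The function name `classify_by_thresholds` is hypothetical, the rules are a simplification rather than the classification procedure used in this study, and the function returns `None` where the definitions leave a gap.

```python
from typing import Optional


def classify_by_thresholds(vegetation_cover: float,
                           woody_cover: float,
                           tallest_tree_m: float) -> Optional[str]:
    """Map plot structure to a class label using the tabulated thresholds.

    Hypothetical helper: vegetation_cover and woody_cover are percentages,
    woody_cover is combined tree + shrub canopy cover, and tallest_tree_m is
    the height of the tallest single-stemmed tree on the plot.
    """
    # Bare land: vegetation cover below 2%.
    if vegetation_cover < 2:
        return "Bare land"
    # Grassland subtypes, distinguished by tree and shrub cover.
    if woody_cover <= 2:
        return "Savanna grassland"
    if woody_cover < 10:
        return "Wooded grassland"
    if tallest_tree_m > 2:
        # Woodland: trees taller than 2 m; open (20-60%) or closed (>60%).
        if woody_cover > 60:
            return "Closed woodland"
        if woody_cover >= 20:
            return "Open woodland"
        return None  # 10-20% cover with tall trees is not defined above
    # Shrubland: trees no taller than 2 m, 10-60% combined canopy cover.
    if woody_cover <= 60:
        return "Shrubland"
    return None  # dense (>60%) low woody cover is not defined above


print(classify_by_thresholds(70, 40, 8))    # Open woodland
print(classify_by_thresholds(70, 40, 1.5))  # Shrubland
```

The same woody cover can fall in different classes depending on tree height, which is why the height check precedes the shrubland rule.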

| Land Cover Type | Tree Height (m) | Tree Cover (%) | Shrub Height (m) | Shrub Cover (%) | Herbaceous Cover (%) | Bare Cover (%) |
|---|---|---|---|---|---|---|
| Plain grassland | 0 | 0 | 0 | 0 | 93.7 | 6.3 |
| Wooded grassland | 7.9 | 8.5 | 1.8 | 21 | 40.4 | 30.1 |
| Shrubland | 6.7 | 2.8 | 2.1 | 72.9 | 18.4 | 5.9 |
| Palm woodland | 7.1 | 26.6 | 2.9 | 25.8 | 37.5 | 10.1 |
| Acacia woodland | 6.8 | 15.5 | 1.5 | 13.1 | 50.8 | 20.6 |
| Riverine vegetation | 8.5 | 9.8 | 2.8 | 39.0 | 43.2 | 8.0 |
| Mosaic | 7 | 12.9 | 1.8 | 13.8 | 45.2 | 28.1 |
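In every row of the vegetation structure table above, the tree, shrub, herbaceous, and bare cover percentages add up to 100%, so the four layers partition the sampled ground area, and tree plus shrub cover gives total woody cover. The short Python sketch below recomputes both quantities from the tabulated values; the names `LAYER_COVER`, `totals`, and `woody` are illustrative only.

```python
# Cover (%) per layer, copied from the table: (tree, shrub, herbaceous, bare).
LAYER_COVER = {
    "Plain grassland": (0.0, 0.0, 93.7, 6.3),
    "Wooded grassland": (8.5, 21.0, 40.4, 30.1),
    "Shrubland": (2.8, 72.9, 18.4, 5.9),
    "Palm woodland": (26.6, 25.8, 37.5, 10.1),
    "Acacia woodland": (15.5, 13.1, 50.8, 20.6),
    "Riverine vegetation": (9.8, 39.0, 43.2, 8.0),
    "Mosaic": (12.9, 13.8, 45.2, 28.1),
}

# Total cover per class, rounded to the table's precision.
totals = {name: round(sum(layers), 1) for name, layers in LAYER_COVER.items()}
# Woody cover = tree cover + shrub cover.
woody = {name: round(tree + shrub, 1)
         for name, (tree, shrub, _herb, _bare) in LAYER_COVER.items()}

for name in LAYER_COVER:
    print(f"{name:20s} total={totals[name]:5.1f}%  woody={woody[name]:4.1f}%")
```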

| LULC Class | MLC Training (i) | MLC Training (ii) | MLC Testing (i) | MLC Testing (ii) | SVM Training (i) | SVM Training (ii) | SVM Testing (i) | SVM Testing (ii) | RFC Training (i) | RFC Training (ii) | RFC Testing (i) | RFC Testing (ii) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Grassland | 234 | 132 | 101 | 81 | 244 | 115 | 105 | 87 | 265 | 363 | 114 | 156 |
| Shrubland | 74 | 89 | 32 | 38 | 50 | 101 | 22 | 31 | 143 | 67 | 61 | 29 |
| Woodland | 139 | 99 | 59 | 65 | 92 | 113 | 40 | 22 | 111 | 57 | 47 | 24 |
| Bareland | 91 | 102 | 39 | 25 | 63 | 98 | 27 | 12 | 39 | 46 | 17 | 22 |
| Water | 39 | 58 | 17 | 20 | 39 | 32 | 17 | 23 | 27 | 30 | 12 | 11 |
| Riverine | 32 | 76 | 14 | 15 | 53 | 26 | 23 | 35 | 25 | 31 | 10 | 13 |
| Forest | 22 | 56 | 9 | 20 | 34 | 36 | 15 | 18 | 23 | 34 | 10 | 14 |
| Cultivation | 19 | 25 | 12 | 3 | 49 | 47 | 21 | 23 | 31 | 35 | 9 | 11 |
| Settlement | 25 | 31 | 8 | 8 | 38 | 59 | 16 | 28 | 13 | 15 | 10 | 10 |
| Mosaic | 25 | 32 | 10 | 25 | 37 | 73 | 16 | 21 | 23 | 22 | 10 | 10 |
| Total | 700 | 700 | 300 | 300 | 700 | 700 | 300 | 300 | 700 | 700 | 300 | 300 |
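For every algorithm and dataset in the sample table above, the totals are 700 training and 300 testing samples, a 70:30 division of 1000 reference samples. A stratified random split, which draws the same fraction from each class, is one common way to produce such a division, and the Python sketch below illustrates it. This is an assumption for illustration only: the per-class counts in the table do not all follow an exact 70:30 ratio, so the study's sampling may have differed, and `stratified_split` is a hypothetical helper.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_fraction=0.7, seed=42):
    """Split sample indices into training and testing sets class by class.

    Hypothetical helper: each class contributes round(n * train_fraction)
    samples to the training set and the remainder to the testing set.
    """
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = round(len(indices) * train_fraction)
        train.extend(indices[:n_train])
        test.extend(indices[n_train:])
    return sorted(train), sorted(test)


labels = ["Grassland"] * 10 + ["Water"] * 20
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))  # 21 9
```

Stratifying keeps rare classes such as Settlement or Water represented in both sets, which a purely random split of 1000 samples does not guarantee.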

| LULC Class | Ao (pixels) | Ao (%) | Bo (pixels) | Bo (%) | Matched Pixels, AoBo | Unmatched Pixels, A1 | Unmatched Pixels, B1 | Proportion of Matched Pixels of Unguided Sentinel-2, AR1 (%) | Agreement Ratio for Total Pixels, AR2 (%) |
|---|---|---|---|---|---|---|---|---|---|
| Grassland | 60,221 | 73 | 33,403 | 57.4 | 25,216 | 35,005 | 8187 | 75.5 | 30.4 |
| Shrubland | 5820 | 7 | 18,980 | 32.6 | 2121 | 3699 | 16,859 | 11.2 | 2.6 |
| Woodland | 16,782 | 20 | 5786 | 9.9 | 1516 | 15,266 | 4270 | 26.2 | 1.8 |
| Total | 82,823 | | 58,169 | | 28,853 | 53,970 | 29,316 | | 34.8 |
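The derived columns of the pixel-agreement table above are consistent with the following relations, which are inferred here from the tabulated values rather than quoted from the text: A1 = Ao − AoBo, B1 = Bo − AoBo, AR1 = AoBo / Bo, and AR2 = AoBo / ΣAo, where ΣAo = 82,823 is the total of the Ao column. Because AR1 is labelled as the proportion of matched pixels of unguided Sentinel-2, Bo appears to count the unguided Sentinel-2 pixels. The Python sketch below recomputes the columns under these assumptions; `PIXELS` and `agreement_columns` are hypothetical names.

```python
# (Ao, Bo, AoBo) pixel counts per class, copied from the table.
PIXELS = {
    "Grassland": (60_221, 33_403, 25_216),
    "Shrubland": (5_820, 18_980, 2_121),
    "Woodland": (16_782, 5_786, 1_516),
}


def agreement_columns(pixels):
    """Recompute unmatched counts and agreement ratios (inferred formulas)."""
    total_ao = sum(ao for ao, _bo, _ab in pixels.values())
    rows = {}
    for name, (ao, bo, matched) in pixels.items():
        rows[name] = {
            "A1": ao - matched,                         # Ao pixels not matched
            "B1": bo - matched,                         # Bo pixels not matched
            "AR1": round(100 * matched / bo, 1),        # % of Bo that matched
            "AR2": round(100 * matched / total_ao, 1),  # % of all Ao pixels
        }
    total_matched = sum(ab for _ao, _bo, ab in pixels.values())
    rows["Total"] = {"AR2": round(100 * total_matched / total_ao, 1)}
    return rows


for name, cols in agreement_columns(PIXELS).items():
    print(name, cols)
```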

| LULC Class | MLC UA | MLC PA | SVM UA | SVM PA | RFC UA | RFC PA |
|---|---|---|---|---|---|---|
| Grassland | 0.98 | 0.97 | 0.94 | 0.98 | 0.94 | 0.98 |
| Woodland | 0.83 | 0.88 | 0.80 | 0.87 | 0.95 | 0.94 |
| Shrubland | 0.88 | 0.90 | 0.91 | 0.91 | 0.96 | 0.94 |
| Bareland | 0.92 | 0.87 | 0.92 | 0.86 | 0.89 | 0.86 |
| Water | 0.93 | 0.82 | 1.00 | 0.93 | 1.00 | 0.87 |
| Riverine | 0.79 | 0.82 | 0.78 | 0.81 | 0.89 | 0.89 |
| Forest | 0.90 | 0.86 | 0.90 | 0.88 | 0.85 | 0.85 |
| Cultivation | 0.81 | 0.86 | 0.96 | 0.87 | 0.90 | 0.87 |
| Settlement | 0.89 | 0.91 | 1.00 | 0.80 | 0.91 | 0.86 |
| Mosaic | 0.79 | 0.81 | 0.75 | 0.84 | 0.82 | 0.90 |
| OA (%) | 90 | | 91 | | 94 | |
| Kappa (κ) | 0.88 | | 0.89 | | 0.92 | |
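The user's accuracy (UA), producer's accuracy (PA), overall accuracy (OA), and kappa (κ) values in the accuracy tables are conventionally derived from an error (confusion) matrix. The minimal Python sketch below applies those standard formulas to a small hypothetical three-class matrix, assuming rows hold the classified (map) labels and columns the reference labels; it illustrates the metrics and is not the authors' software or data.

```python
def accuracy_metrics(cm):
    """Return per-class UA and PA, overall accuracy, and Cohen's kappa.

    cm[i][j] counts samples mapped as class i whose reference class is j
    (rows = classified map, columns = reference data).
    """
    k = len(cm)
    n = sum(sum(row) for row in cm)
    row_totals = [sum(row) for row in cm]
    col_totals = [sum(cm[i][j] for i in range(k)) for j in range(k)]
    diag = [cm[i][i] for i in range(k)]

    ua = [diag[i] / row_totals[i] for i in range(k)]  # 1 - commission error
    pa = [diag[j] / col_totals[j] for j in range(k)]  # 1 - omission error
    oa = sum(diag) / n
    # Chance agreement from the products of row and column marginals.
    pe = sum(row_totals[i] * col_totals[i] for i in range(k)) / n ** 2
    kappa = (oa - pe) / (1 - pe)
    return ua, pa, oa, kappa


# Hypothetical 3-class error matrix, not data from this study.
cm = [
    [50, 5, 0],
    [3, 40, 2],
    [2, 5, 43],
]
ua, pa, oa, kappa = accuracy_metrics(cm)
print([round(x, 2) for x in ua], [round(x, 2) for x in pa])
print(f"OA = {100 * oa:.1f}%, kappa = {kappa:.2f}")
```

Because κ removes the agreement expected by chance, it falls below OA whenever OA < 1 and chance agreement is nonzero, which matches the pattern in both accuracy tables.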

| LULC Class | MLC UA | MLC PA | SVM UA | SVM PA | RFC UA | RFC PA |
|---|---|---|---|---|---|---|
| Grassland | 0.85 | 0.92 | 0.97 | 0.96 | 0.93 | 0.99 |
| Woodland | 0.77 | 0.75 | 0.78 | 0.86 | 0.84 | 0.83 |
| Shrubland | 0.75 | 0.80 | 0.82 | 0.82 | 0.86 | 0.76 |
| Bareland | 0.80 | 0.76 | 0.90 | 0.78 | 0.92 | 0.84 |
| Water | 0.87 | 0.76 | 0.86 | 0.91 | 0.98 | 0.84 |
| Riverine | 0.75 | 0.74 | 0.84 | 0.84 | 0.75 | 0.88 |
| Forest | 0.78 | 0.85 | 0.90 | 0.83 | 0.96 | 0.92 |
| Cultivation | 0.84 | 0.76 | 0.79 | 0.90 | 0.91 | 0.80 |
| Settlement | 0.79 | 0.75 | 0.76 | 0.78 | 0.78 | 0.76 |
| Mosaic | 0.77 | 0.75 | 0.75 | 0.77 | 0.82 | 0.75 |
| OA (%) | 80 | | 87 | | 90 | |
| Kappa (κ) | 0.77 | | 0.85 | | 0.87 | |

| LULC Class | t | df | p-Value |
|---|---|---|---|
| Grassland | 2.0938 | 66.5710 | 0.0426 * |
| Shrubland | 2.5890 | 28.7600 | 0.0149 * |
| Woodland | 2.4134 | 41.8260 | 0.0128 * |
| Bareland | 0.0281 | 59.1220 | 0.9777 |
| Water | 2.8099 | 8.2062 | 0.0171 * |
| Riverine | 1.1118 | 12.7630 | 0.1084 |
| Forest | 1.6851 | 17.2380 | 0.1100 |
| Cultivation | 2.2809 | 51.4020 | 0.0267 * |
| Settlement | 1.9667 | 7.3144 | 0.1226 |
| Mosaic | 1.2129 | 25.2310 | 0.2364 |

Note: values marked with an asterisk (*) are significant at p < 0.05.
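The fractional degrees of freedom in the t-test table above are characteristic of Welch's unequal-variance t-test, in which df comes from the Welch–Satterthwaite approximation. The Python sketch below assumes that test and computes t and df with the standard library only; `welch_t` is a hypothetical name and the sample values are invented for illustration. For the two-sided p-value, `scipy.stats.ttest_ind(a, b, equal_var=False)` performs the same Welch test.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    se2_a = variance(a) / len(a)  # squared standard error of mean(a)
    se2_b = variance(b) / len(b)  # squared standard error of mean(b)
    t = (mean(a) - mean(b)) / sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1)
    )
    return t, df


# Hypothetical samples, not values from this study.
t, df = welch_t([1, 2, 3, 4, 5], [2, 4, 6, 8, 10])
print(f"t = {t:.4f}, df = {df:.4f}")  # t = -1.8974, df = 5.8824
```

Unlike Student's pooled-variance test, the Welch form does not assume equal variances in the two groups, which is why df is generally not an integer.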

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mangewa, L.J.; Ndakidemi, P.A.; Alward, R.D.; Kija, H.K.; Nasolwa, E.R.; Munishi, L.K. Land Use/Cover Classification of Large Conservation Areas Using a Ground-Linked High-Resolution Unmanned Aerial Vehicle. Resources 2024, 13, 113. https://doi.org/10.3390/resources13080113
