An Open-Source Many-Scenario Approach for Power System Dynamic Simulation on HPC Clusters

Abstract

1. Introduction

1.1. Motivation

1.2. Related Works

1.3. Contribution of This Work

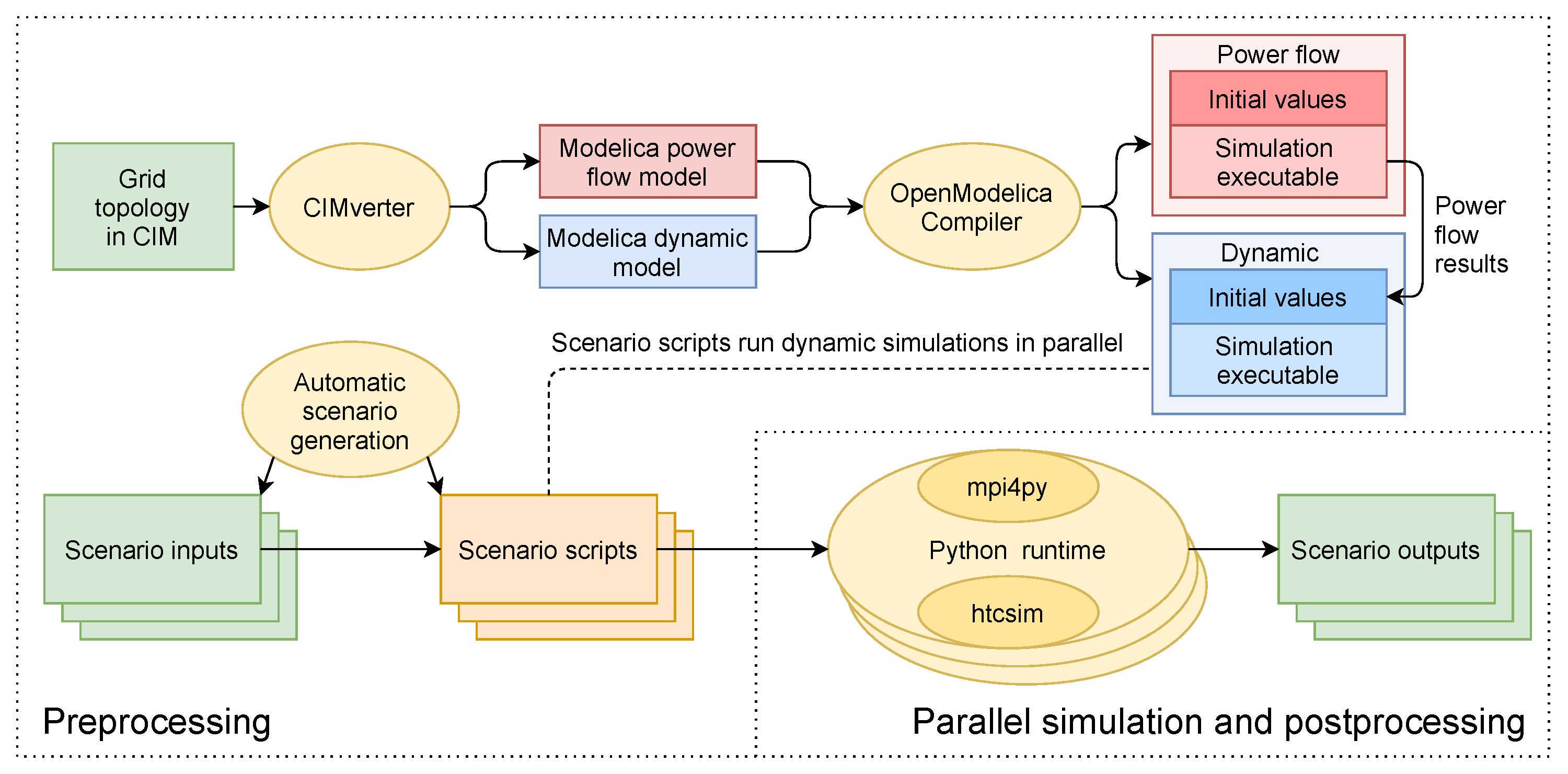

2. The Many-Scenario Approach for Parallel Dynamic Simulations

2.1. Fundamentals

2.1.1. Modelica

2.1.2. Message-Passing Interface (MPI)

2.1.3. Power System Simulation

2.2. Concept

2.2.1. Preprocessing

2.2.2. Parallel Simulation

2.2.3. Postprocessing

2.3. Implementation

2.3.1. Scenario Script and Input

2.3.2. Executable Generation

2.3.3. Computational Workload Balancing

3. Experiment and Discussion

3.1. Test Case 1

3.2. Test Case 2

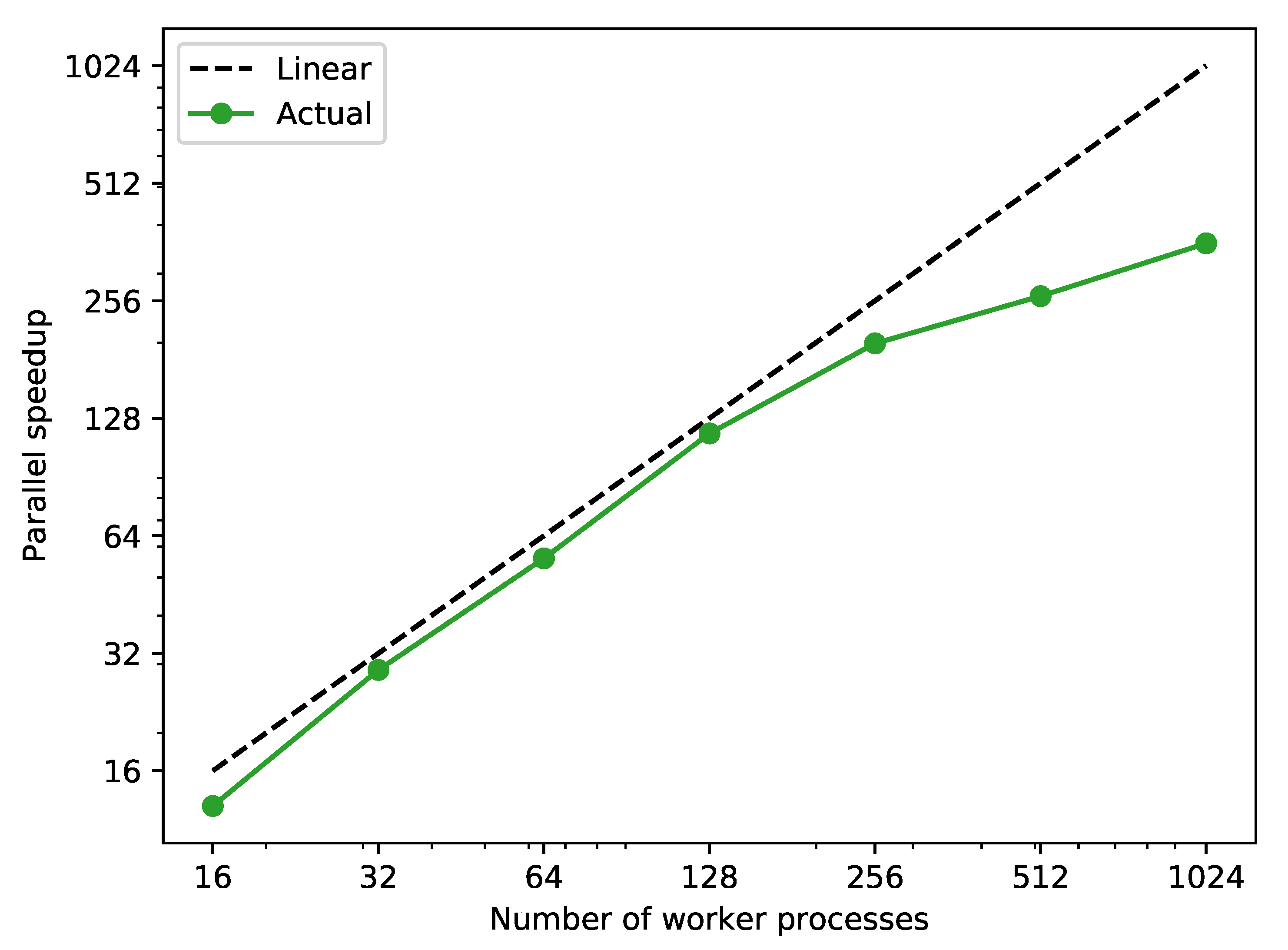

3.3. Test Case 3

3.4. Test Case 4

4. Conclusions and Outlook

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| AI | artificial intelligence |
| AST | abstract syntax tree |
| CIM | Common Information Model |
| CGMES | Common Grid Model Exchange Standard |
| DSA | dynamic security assessment |
| DSL | domain-specific language |
| DAE | differential-algebraic system of equations |
| DP | dynamic phasor |
| IaaS | Infrastructure as a Service |
| SP | static phasor |
| EMT | electromagnetic transient |
| HPC | high-performance computing |
| MPI | message-passing interface |
| OMC | OpenModelica Compiler |
| PMU | phasor measurement unit |
| PDF | probability density function |
| RTE | Réseau de Transport d’Electricité |
| SCADA | supervisory control and data acquisition |
| SPMD | single program, multiple data |
| TSO | transmission system operator |
| XML | extensible markup language |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, J.; Razik, L.; Jakobsen, S.H.; D’Arco, S.; Benigni, A. An Open-Source Many-Scenario Approach for Power System Dynamic Simulation on HPC Clusters. Electronics 2021, 10, 1330. https://doi.org/10.3390/electronics10111330
