1. Introduction

The number of people aged over 60 is expected to quadruple within the next two decades [1], placing existing care provision under strain and driving the need for research into more effective and efficient ways of delivering care. Research [2] has shown that the vast majority of elderly people prefer to stay in their own homes whenever possible, rather than living in residential care or nursing homes. This view has been further reinforced by the COVID-19 pandemic, which highlighted the vulnerabilities of residential care homes [3]. Thus, the number of people who will need assistance to remain living independently in their own homes is expected to rise sharply in the coming years. It has long been recognised that technology can play a vital part in supporting people’s desire to maintain an independent life as they grow older. For example, sensors and actuators, together with intelligent software, can augment everyday objects to provide additional functionality to support those in need of care. Products that combine embedded computers, sensors/actuators, and intelligent software are referred to as ‘smart objects’, and form a fundamental building block of the Internet-of-Things (IoT) [4]. Advances in machine learning [5] have enabled these smart objects to work collaboratively, creating intelligent environments [6] that can learn how to adapt their collective behaviour to meet the needs of their occupants. As a result, IoT can be used to foster a uniquely supportive environment, which is particularly useful for elderly care settings, such as assisted living systems, where the environment can be aware of and sensitive to the particular needs of its occupants [7,8,9].

While much thought has gone into creating effective financial and care models, a significant ongoing research challenge is how best to leverage advances in smart technologies to develop context-aware systems that promote the well-being of elderly people by improving their day-to-day living experiences. To that end, monitoring a person’s physical state is an effective approach, as it can establish the functional status of an individual which, in turn, can be used to determine the level of assistance required, with minimum intervention [10,11,12]. In systems that monitor and gather behavioural data about people, inference techniques are commonly used [10,13], as no prior knowledge about the individuals is required. While applications designed to help older people perform their day-to-day tasks have increasingly gained recognition [14,15], the vast majority of these applications rely on “active monitoring” approaches to deliver key information. Generally, monitoring is classified as either active or passive. Active devices require physical user interaction to function, while passive devices monitor the local environment autonomously. Active devices are regarded as intrusive, interfering with the user’s normal routine in order to function, whereas passive devices are seen as non-intrusive, since they place no physical or mental load on the user. Moreover, active devices, such as an alert button, have the disadvantage of requiring the user to be conscious and physically able to operate them, which, depending on the status of the individual at the time when assistance is required, may not be possible. While active participation can offer benefits within a tele-medical monitoring system [16,17], we argue that passive or ambient environmental monitoring has the potential to support a comprehensive assisted living scenario in a less intrusive and less costly way. This can be achieved by combining Artificial Intelligence (AI) with IoT to create an environment that responds to the occupant’s needs without relying on their manual input, which overcomes issues such as incapacity, by exploiting the capability of passive sensors [7]. Developments in ambient assisted monitoring include fall-detection and ‘lack of mobility’ alerts which, for example, use passive infrared (PIR) motion sensors working in tandem with accelerometers built into furniture and flooring [13]. Other techniques, such as ubiquitous sensing driven by a specific knowledge-driven framework, have also shown promising results [18]. Thus, it has been recognised that pervasive passive monitoring is a good way forward to enable elderly people to sustain greater independence and enjoy a better quality of life [19,20]. The pervasive nature of noise in the modern domestic environment means that monitoring ambient sound levels offers good potential for detecting abnormal patterns of behaviour. Furthermore, since this approach needs only a few strategically placed sound sensors around a person’s home, it is easy and cheap to install. However, while this is a most promising approach, there is a scarcity of research on this topic, with no off-the-shelf solutions available. Furthermore, the few existing examples (discussed in Section 2) generally have built-in transceivers, with many being designed to be carried by users, which limits their practicality.

An overview of the most common unobtrusive wellness monitoring methods is given in [21], but to be accurate, they need to be backed by ontology-based algorithms [22], especially when the activities to be recognised are similar [23]. These issues are even more critical when designers have access to only a reduced training data set.

Thus, investigating these issues with the aim of overcoming existing barriers (especially those caused by the lack of training data) is the main focus of the work reported in this paper. More precisely, we investigate whether sound (acoustic signals) can be used as the primary ambient source to create a relatively low-cost IoT system for identifying abnormal situations in domestic homes where elderly people may need external assistance.

Clearly, the focus of any research project (in our case, the AI methods) will contain bespoke and novel methods and technologies. However, in research aimed at practical deployment or commercial exploitation, for the findings to be convincing to a broader audience (e.g., industry), it is helpful if the supporting technologies and standards are readily available to those who might seek to exploit and deploy the work. Thus, for such secondary platforms, we sought to build on widely used industry standards. For networking, we used Wi-Fi (IEEE 802.11), one of the most widely deployed wireless standards. Likewise, for middleware, we chose the popular open (and free) standard LinkSmart, developed by the FP7 EU project EBBITS. In this way, our results (theoretical and practical contributions) can be more readily appreciated by the wider ambient intelligence community. The system functionality requirements were gathered by consulting residents in a residential home for the elderly operated by a UK local government authority. Initial experiments were conducted in a domestic home to assess the capability of the sound sensors for gathering suitable data (the distribution of sensors and its effect on live/dead spots) in areas of interest (e.g., kitchens) based on typical events (e.g., walking, boiling a kettle) that relate to the care of elderly people. In this work, we employed a Neural Network (NN) classifier combined with a context reasoning system to analyse and classify the acoustic data. In addition, we employed a goal-based approach to determine whether an alert should be triggered when an abnormal event was detected.

The remainder of this paper is structured as follows:

Section 2—Low-Cost Wireless Sensor IoT Network Design;

Section 3—Methodology, Design and Implementation;

Section 4—System Prototyping and Discussions;

Section 5—Experimental Setup and Results Analysis;

Section 6—Conclusions.

2. Low-Cost Wireless Sensor IoT Network Design

The Internet-of-Things (IoT) comprises a network of embedded computers (implanted into everyday ‘things’) that are able to sense the environment, as well as communicate and interact with people and each other [24]. The falling costs of embedded devices and advanced networking have led to a proliferation of IoT applications in industries, cities, homes, and the natural world, all of which enhance people’s lives [25]. Consequently, the ‘assisted living’ community is investing much ongoing research and development into the use of IoT. For example, an important area of IoT research needed to realise the ‘assisted living’ vision is inter-device communication, especially that relating to the development of the wireless sensor network (WSN). WSNs are networks that consist of multiple sensor nodes, with a gateway and a wireless transmission path. Generally, node communication is supported by technologies such as Wi-Fi, Bluetooth Low Energy, ZigBee, 6LoWPAN, and 4G/5G.

Table 1 presents a comparison of four main wireless technologies (Wi-Fi, Bluetooth, ZigBee, Z-Wave) typically used to create WSN-based smart environments, as a function of six characteristics: (1) standards, (2) indoor/smart home market share, (3) data throughput, (4) range, (5) reliability, and (6) ease of use. It can be seen that the Wi-Fi protocol offers the highest data throughput for the transmission range (∼10 m) required in this study, and therefore the lowest communication latency and highest.

In addition to the connectivity requirements, another key IoT element that requires special attention when designing assisted living systems is the environmental monitoring module [26,27].

To date, most of the approaches taken have been fairly conventional, relying on sensor networks to measure parameters such as temperature or humidity [28,29,30,31] to predict and maintain occupant comfort [26]. The use of IoT ambient acoustic systems for human activity prediction and recognition is somewhat rare in environmental monitoring. For example, in 1995, Reynolds [32] investigated the Gaussian Mixture Model (GMM) technique to predict users’ activities or events. Environmental sound generated by users’ activities/events has been explored by a number of scholars, including Virone and Istrate in 2007 [33], who used an environmental sound sensor for monitoring home activities using a simulator. Later, in 2009, Shaikh et al. [34] investigated sound virtualization in a virtual world, where they tried to capture environmental sound and interpret the associated activities. While Virone and Istrate’s research demonstrated validity via simulation, they did not investigate an IoT implementation. Virone and Istrate’s work on identifying types of sound was further developed by Segura–Garcia et al. [35] eight years later in 2015, where the new team focused on analysing urban noise levels. Segura–Garcia et al. used Zwicker’s annoyance model to analyse road traffic noise in the City of Barcelona, utilising a WSN similar to the technological set-up of the IoT application we developed in this study [35]. However, our setting and focus differ from this earlier work in that our application is aimed at a domestic home environment occupied by elderly people. Nevertheless, the conclusions drawn by Segura–Garcia et al. [35] added weight to our hypothesis that an acoustic sensing approach had the potential to deliver a low-cost solution to the provision of care within a domestic setting.

4. System Prototyping and Discussion

The main concern gathered from interviewing elderly people (see Section 3.1.2) was the cost, which is understandable as they were all retired and living on small pensions.

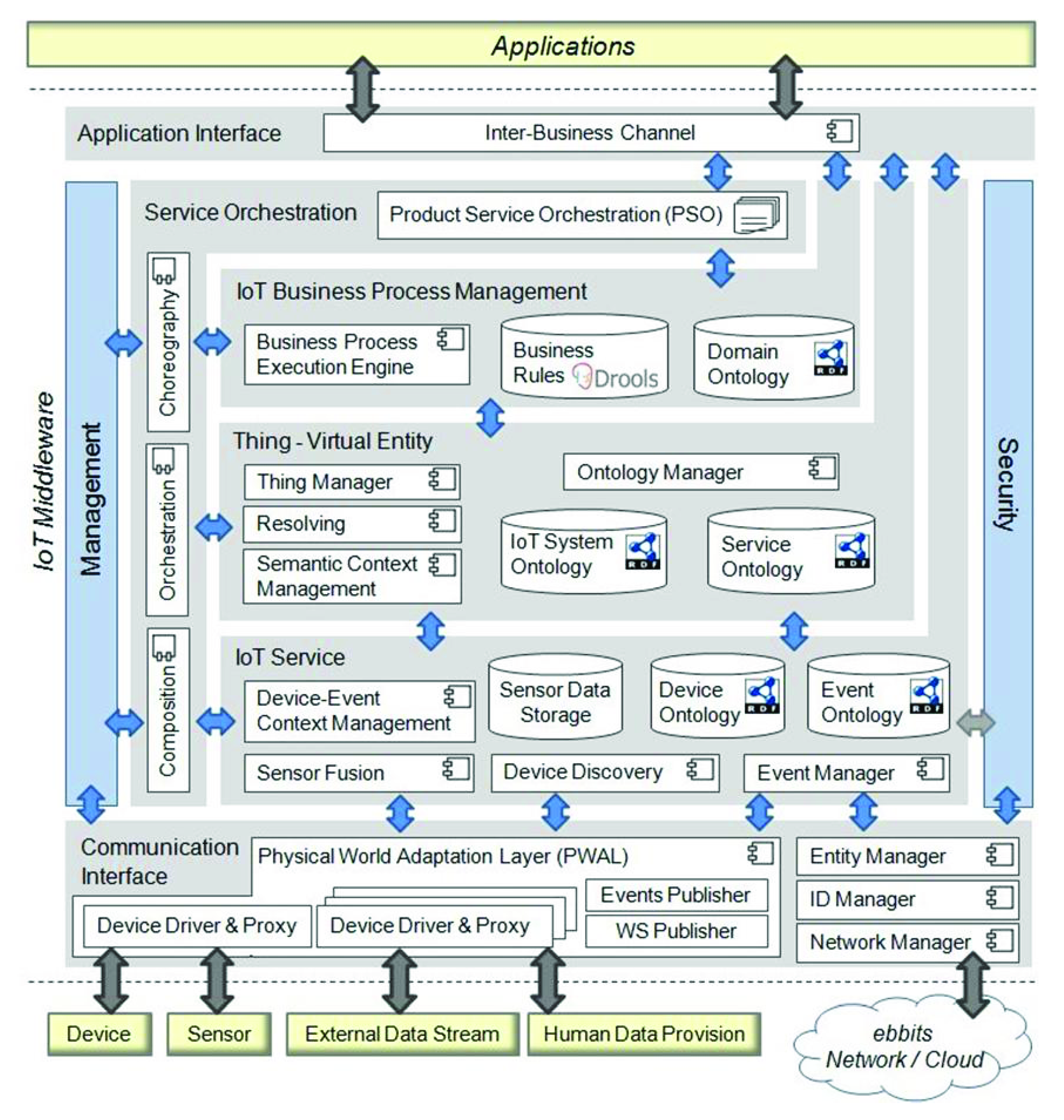

Our investigation into the development of a low-cost unobtrusive monitoring system that can passively monitor domestic environments by processing their ambient acoustic information could help with this concern. As explained earlier, we adopted the IoT-enabled LinkSmart semantic middleware (Figure 1), an open source technology developed by the FP7 EU project EBBITS, which provides open interoperable capabilities suitable for applications using distributed, communicating physical devices. In addition, the LinkSmart middleware has a modular framework, making it readily customisable. Furthermore, its ontological approach, which provides a semantic representation of devices in the form of services, offers great flexibility and robustness, making it suitable for low-cost implementation.

As explained in Section 2, a Wi-Fi-based WSN was chosen for our prototype implementation. In our prototype, the LinkSmart Virtual Entity module acted as the main semantic virtualization mechanism for representing and handling all the data on the WSN, while the coordinator node provided the data via the IoT Service (Figure 1).

Nodes were distributed and discoverable via LinkSmart’s device discovery feature and distributed architecture. LinkSmart is a popular standard, adopted by a number of other projects, including ELLIOT to create smart offices [45] for business use. Our study is the first to explore its use in a domestic assisted-living environment.

The development of a WSN for monitoring construction noise was investigated previously by Hughes et al. [46]. Their work examined different transmission modes, comparing the effectiveness of a Bluetooth Low-Energy (BLE) network with that of a ZigBee network, specifically for an outdoor construction site setting. In their study, Beaglebone, Arduino, Raspberry Pi and Pi Zero computers were assessed for cost and availability. ZigBee was chosen for the node-to-gateway data transmission route, although BLE was considered. Other examples of low-cost IoT WSNs for environmental monitoring include the work by Ferdoush and Li [47], who analysed the combination of Raspberry Pi and ZigBee components in a multi-node network. They concluded [47] that a WSN with a Raspberry Pi base enabled the prototyping of a low-cost, flexible WSN. In addition, Ahmed et al. [48] investigated various sensor types using Raspberry Pi and ZigBee. Their work emphasized the flexibility of the Raspberry Pi, particularly as the foundation of a WSN, and they concluded that it was a robust platform suitable for a wide variety of WSN locations. However, ZigBee has slightly higher power consumption and a low data rate, and it is not suitable for tasks requiring high bandwidth, such as gathering ambient acoustic signals.

In addition to the cost concerns raised by the participants, a literature review showed that the adoption of smart environment technology had many other limiting factors, such as ease of implementation and perceived usefulness [49]. These factors were taken into consideration in the design of our prototype, which used low-cost devices: two Raspberry Pi 3 boards were selected to act as WSN nodes, one equipped with a USB mini-microphone and the other with a speaker. The Pi 3 has a built-in Wi-Fi module, and data were transmitted between the nodes and the gateway over Wi-Fi (Figure 2).

IoT Hardware and Sensor Nodes Implementation

The prototype development was based on the principles employed in our previous study [28], the key aspects of which can be summarised as:

The design of the Wireless Sensor Network (WSN) should be based around affordable devices with high-capacity processing [25].

In line with IoT principles, a distributed network of devices should be employed to collect data independently and autonomously of other devices on the network [50].

Raw data should be collated by a coordinator (control) node.

On-node data processing should be restricted and the data transmission rate balanced against power consumption to work within the limits of the IoT devices.

The overall system architecture is shown in Figure 2. It uses a modular framework comprising hardware/device-level workflow, IoT middleware communication, NN learning modules, and GUIs (as explained in Section 3.2). All sensors/nodes communicated directly with the coordinator (control) node via Wi-Fi. The coordinator (control) node communicated via the LinkSmart middleware. As mentioned earlier, the main benefit of adopting LinkSmart was device interoperability, while all nodes were implemented using the low-cost Raspberry Pi. A wireless LAN adapter and an antenna are built into the Pi 3 board, reducing the number of USB ports required and allowing for a smaller enclosure. All nodes were powered from the mains to ensure a continuous and reliable data feed. SD cards of 8 GB were configured with Raspbian (Jessie), a Debian-based Linux distribution, to provide an operating system for the Raspberry Pis. In addition, for the gateway node, an Apache server was used to present the data in graphical form. The prototype WSN nodes used low-cost, off-the-shelf microphones from Kinobo, which were easily obtained through online retailers.

As mentioned, our aim was to use cheap components; hence, the range was limited. However, the sound levels recorded by the sensor node were sufficient for prototyping purposes. Consistency in the recordings from the microphones was ensured through comparative testing. Ambient acoustic data were gathered by a node placed in the kitchen, before being passed to the central controller node via Wi-Fi for analysis.

5. Experimental Setup and Results

For this study, we followed an experimental setup similar to the one used in our previous work [28], but with two main differences: the WSN was based on Wi-Fi, and the setup consisted of two nodes. The experiment was conducted in a domestic home with one bedroom, a kitchen, a living/dining room, and a shower room, measuring approximately 50 square metres in total (Figure 3).

One node (acting as a gateway node with an attached speaker) was placed in the living room and another node (a sensor node with an attached microphone) was placed in the kitchen area (Figure 4). The data that were collected informed the size of the different networks, as the optimal number of nodes for different locations varied due to the location/room size and its ambient noise level. The gateway node received the transmissions from the sensor node and unpacked the data. A Python-based NN library was installed on the gateway node, which was used to test the data and generate predictions.

5.1. Experimental Procedure

The experiment involved two phases. The first phase was to gather data for training purposes. In this phase, the data were gathered in one participant’s home. A total of three sequences of activities, each repeated 10 times, were recorded. The three sequences of activities were:

Walking, grasping the kettle, going to the tap, filling the kettle with water, switching the kettle on, the kettle boiling;

Walking, grasping the water filter jug, going to the tap, filling the jug with water, pouring the water into the kettle, switching the kettle on, the kettle boiling;

Walking, opening the fridge, closing the fridge, walking.

The data gathered were processed offline on a Dell Optiplex computer and used to train the NN model and context reasoning system described in Section 3.2 and Section 3.3. Once the models were fully trained, they were deployed to the gateway node, ready for the second phase of the experiment. The preliminary data of the second phase were then collected and subsequently analysed. We used the methodology developed in our previous work [28].

This involved using the same data collection and transmission script, which ran automatically from boot on the sensor node (the recording, analysis of the recording, and transmission of the analysis ran in an infinite loop). A new recording and analysis started every second. The on-node analysis of recordings was done using SoX, a cross-platform audio-processing command-line utility. The audio data were then sent via Wi-Fi to the gateway node for further processing and testing. The gateway node stored the data in a local database, and the data were then visualized through real-time rendering on a graphical interface. This allowed quick visual comparisons of consecutive time periods. The participants were first asked to repeat the same sequence of activities as normal, and then to perform ‘walking three steps and stopping’ (remaining quiet).
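The record, analyse, and transmit loop described above can be sketched roughly as follows. This is only an illustration under stated assumptions: the paper does not say which SoX effect or output fields were used, so the use of SoX's `stat` summary, the one-second `rec` invocation, the JSON message layout, and all function names (`parse_sox_stat`, `build_payload`, `analyse_one_second`, `run_forever`) are hypothetical choices rather than the authors' script.

```python
import json
import subprocess
import time


def parse_sox_stat(text):
    """Turn the 'name: value' lines printed by `sox FILE -n stat` into a dict."""
    stats = {}
    for line in text.splitlines():
        name, sep, value = line.rpartition(":")
        if not sep:
            continue  # no colon, so not a statistics line
        try:
            stats[" ".join(name.split())] = float(value)
        except ValueError:
            continue  # skip anything whose value is not a plain number
    return stats


def build_payload(node_id, stats, timestamp):
    """Package one analysis result as a JSON message (layout is an assumption)."""
    return json.dumps(
        {"node": node_id, "t": timestamp,
         "rms": stats.get("RMS amplitude"),
         "peak": stats.get("Maximum amplitude")},
        sort_keys=True,
    )


def analyse_one_second(path="clip.wav"):
    """Record one second with SoX's `rec`, then read the `stat` summary.

    SoX prints the `stat` summary on stderr, hence result.stderr below.
    """
    subprocess.run(["rec", "-q", "-c", "1", path, "trim", "0", "1"], check=True)
    result = subprocess.run(["sox", path, "-n", "stat"],
                            capture_output=True, text=True, check=True)
    return parse_sox_stat(result.stderr)


def run_forever(send, node_id="kitchen"):
    """Record, analyse, transmit, repeat; `send` is any callable taking a string."""
    while True:
        send(build_payload(node_id, analyse_one_second(), time.time()))
```

Keeping the parsing and packaging separate from the SoX calls means they can be checked on a desktop machine without a microphone; on the node, `run_forever` would be started at boot with a `send` function that forwards each message to the gateway over Wi-Fi.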

Figure 5 shows an example of the audio data gathered during the first phase of the experiment. The red circle indicates a ‘kettle boiling’ event as recognised by the NN classifier. The green circle indicates a ‘footstep’ event.

5.2. Results and Analysis

The data collected from the Phase 1 experiment were used to train an NN classifier and context reasoning system. For comparison purposes, the sound waveforms, the logarithm of the filter bank energies, and the mel-frequency cepstrum for the kettle and footstep sounds are displayed in Figure 6 and Figure 7, respectively.

The accuracy of the NN classifier was investigated. In the experiment, the aim was to identify the sound of the kettle boiling, as distinct from other distracting sounds such as walking, opening/closing the fridge, filling the kettle with water, switching the kettle on, and staying quiet. Initially, we used only the neural network classifier without the context reasoning. After training with 20 positive and 20 negative examples, we tested the system with 20 positive and 55 negative events based on the data gathered from one individual’s home. Next, we included the context reasoning. We trained another two neural network classifiers to identify the water tap sound and the footstep sound (again using 20 positive and 20 negative examples for each case). The logic of the context reasoning was that, if the water tap sound and footstep sound were not detected before a probable detection of the kettle boiling sound, then the probability (or confidence) of the kettle boiling detection was reduced.
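The context reasoning rule described above can be illustrated with a minimal sketch. The paper does not state by how much the confidence is reduced, nor whether both precursor sounds must be present, so the multiplicative `penalty`, the decision `threshold`, the requirement that both sounds be heard, and the event labels are all assumptions made purely for illustration.

```python
def contextual_confidence(kettle_conf, recent_events, penalty=0.5,
                          required=("footstep", "water_tap")):
    """Lower the kettle-boiling confidence unless every precursor sound was heard.

    kettle_conf   -- confidence from the kettle classifier, in [0, 1]
    recent_events -- labels detected shortly before the kettle candidate
    penalty       -- hypothetical multiplicative reduction (not from the paper)
    """
    if all(name in recent_events for name in required):
        return kettle_conf
    return kettle_conf * penalty


def is_kettle_boiling(kettle_conf, recent_events, threshold=0.5):
    """Accept the detection only if the context-adjusted confidence is high enough."""
    return contextual_confidence(kettle_conf, recent_events) >= threshold
```

With these illustrative values, a raw confidence of 0.8 is accepted when footsteps and the tap were heard first, but drops to 0.4 and is rejected when they were not, which is how such a rule can suppress false positives.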

The test results are shown in the confusion matrices in Table 4 for the NN without context reasoning, and in Table 5 for the NN with context reasoning. The tables show the performance of the NN trained to recognise the Boiling Kettle sound. A result is counted as a “True Positive” (TP) when the NN predicted Boiling Kettle and a Boiling Kettle sound was presented, a “True Negative” (TN) when the NN predicted non-Boiling Kettle and a non-Boiling Kettle sound was presented, a “False Positive” (FP) when the NN predicted Boiling Kettle and a non-Boiling Kettle sound was presented, and a “False Negative” (FN) when the NN predicted non-Boiling Kettle and a Boiling Kettle sound was presented. The confusion matrix was used to calculate the sensitivity (TP/(TP + FN)), specificity (TN/(TN + FP)), precision (TP/(TP + FP)), and accuracy ((TP + TN)/(TP + FP + TN + FN)).
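The four measures defined above translate directly into code. The short sketch below uses made-up counts: only the totals (20 positive and 55 negative test events) follow the text, while the split into TP, FN, FP, and TN is hypothetical and is not taken from Table 4 or Table 5.

```python
def confusion_metrics(tp, fp, tn, fn):
    """Compute the four measures from the counts of a binary confusion matrix."""
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "precision": tp / (tp + fp),
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }


# Hypothetical split of 20 positive and 55 negative test events.
example = confusion_metrics(tp=18, fp=5, tn=50, fn=2)
```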

Table 6 shows the performances of the NN without and with a context reasoning system. Analysing the statistical data indicates that performance improvement comes from a reduction in false positives by taking the context into account. We expect that the accuracy could be improved further by designing more complex context reasoning logic.

However, putting these prediction percentages in the context of Bayesian statistics, where the sensitivity and specificity are the conditional probabilities, the prevalence is the prior probability, and the positive/negative predictive values are the posterior probabilities, the model prediction usually involves unconditional events, and therefore the positive predictive value (PPV), defined in (5), can better characterise the overall performance of the classifier when the prevalence is not 50%.
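For reference, the standard Bayesian form of the positive predictive value, written in terms of the quantities named above, is given below. It is assumed here that this is the expression the text refers to as (5); the equation itself is not reproduced in this section.

```latex
\mathrm{PPV}
  = \frac{\text{sensitivity} \times \text{prevalence}}
         {\text{sensitivity} \times \text{prevalence}
          + (1 - \text{specificity}) \times (1 - \text{prevalence})}
```

When the prevalence equals the proportion of positive events in the test set (20 of 75 in the experiment described above, i.e. about 0.27), this expression reduces to TP/(TP + FP).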

The calculated PPV values are shown in Table 7. As expected, the value increased considerably, showing the importance of using a context reasoning system, especially with NNs trained on a small data set.

Collating the data from all our test experiments and applying the context reasoning system to our NNs trained to recognise Kettle Boiling, Tap Water, and Foot Step, the calculated accuracy and PPV values are displayed in Table 8.

When applied in the Phase 2 experiment, where the participant was asked to repeat the same habitual sequence of activities, the system correctly did not trigger any alarm (as the sequence was among the predefined sequences of activities), but when the participant walked three steps and stopped (a sequence not in the predefined list, and therefore treated as “abnormal”), an alarm was triggered. These results demonstrate that the system design is capable of recognising the sounds produced and classifying them as normal or abnormal.
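The alerting behaviour described above amounts to checking an observed sequence of recognised sounds against a list of predefined normal sequences. The sketch below is a simplified illustration, not the goal-based mechanism itself: the event labels, the shortened sequences, and the treatment of an unfinished sequence as acceptable while it is still a prefix of a normal one are all assumptions.

```python
# Hypothetical, shortened versions of the habitual sequences from Section 5.1.
NORMAL_SEQUENCES = [
    ("walking", "tap", "kettle_on", "kettle_boiling"),
    ("walking", "fridge_open", "fridge_close", "walking"),
]


def is_abnormal(observed, finished, normal_sequences=NORMAL_SEQUENCES):
    """Return True if an alert should be raised for the observed events.

    observed -- labels recognised so far, in order
    finished -- True once the activity has stopped (e.g. prolonged quiet)
    """
    observed = tuple(observed)
    if finished:
        # A completed sequence must match a predefined one exactly.
        return observed not in normal_sequences
    # While still in progress, tolerate any prefix of a normal sequence.
    return not any(seq[:len(observed)] == observed for seq in normal_sequences)
```

Under these assumptions, walking followed by silence is flagged once the sequence is considered finished, whereas the same single "walking" event is tolerated while the activity is still unfolding.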

6. Conclusions

The goals of the research, and how they were attained, are summarised as follows:

(i) The main goal of this study was to investigate a holistic approach using a combination of acoustic sensing, artificial intelligence, and the Internet-of-Things as a means of providing a cost-effective approach to alerting care providers or relatives when an abnormal event is detected. (ii) We investigated the use of low-cost IoT devices and NN methods to unobtrusively monitor an ambient acoustic domestic environment. A prototype was successfully developed for this purpose. In developing the prototype, we investigated the performance of a Wi-Fi WSN and the semantic LinkSmart IoT middleware as a platform to support on-node real-time audio sample processing and distributed logic in IoT systems, demonstrating its architectural viability for such purposes. (iii) We investigated NN techniques as part of our acoustic processing work and combined the context reasoning system with an NN classifier trained on a small data set, obtaining good overall accuracy. Moreover, our work has shown that by contextualising events within a stream of activities, the overall accuracy, and therefore the reliability of the alerting system, can be improved (i.e., the number of false alarms is reduced). Although we have left a more focused and detailed study of the sequential logic, and how it might be refined, for a follow-on project, the findings from this research confirm that it is possible to create a stable, low-cost wireless sensor network for monitoring and modelling ambient acoustic levels using an NN learning model on a distributed Raspberry Pi network over Wi-Fi and LinkSmart middleware.

As we explained earlier in this paper, our main goal was to explore the potential for a combination of low-cost IoT devices, NN models (trained using a small data set), and a context reasoning system to unobtrusively monitor ambient acoustic domestic environments for the purposes of providing care to elderly people. At the core of that work was establishing a set of hardware and software architectural principles (e.g., the nature and distribution of processing arrangements and AI models) capable of supporting a deployable home-care system, which we believe this work has achieved.

Clearly, there are many directions that this work could follow in the future; below, we suggest nine of them (numbered i–ix): (i) One direction could be the investigation of more complex acoustic profiles (e.g., by including prior knowledge and context). (ii) A wider range of sound measurements could be captured to allow for a more detailed analysis of different types of sound and a more refined alert system (e.g., associating footsteps with particular people). (iii) To improve the context reasoning system, the sequential logic might be refined through a larger-scale study covering many more events. Likewise, it would be beneficial (iv) to further refine the NN classifier by optimising the network structure, the number of hidden units, and the training features, or to make it more sustainable when scaling up the system, and (v) to replace the FF-BF NN with a CNN, which is a more accurate NN when recognising picture-type patterns (such as those the mel-frequency cepstrum provides). (vi) Another useful line of research would be to address the cocktail party problem (several sounds mixing with each other) by, say, using blind source separation or other techniques. (vii) HCI is another aspect worthy of further work.

Although the project has focused on how the system could be of benefit in ambient assisted living (AAL) settings, the demonstrated WSN could be easily adapted for different environments and applications that would benefit from remote ambient sound monitoring and alerts. For example, beyond the care of elderly people, (viii) the system might be applied to baby/child monitoring, monitoring children with ADHD (as proposed in [51], where accelerometers and proximity sensors were used), security systems, and even pest control.

Finally, (ix) concerning commercial deployment routes, given the ever-increasing popularity of smart-home voice control systems such as Amazon’s Echo, it may be possible to integrate the techniques presented in this paper into such systems, thereby endowing them with a broader sound analysis capability and enabling care services to benefit from the ‘economies of scale’ associated with commercial smart-home technologies.

Thus, we are confident that our results will encourage further research on applications using systems trained with small data sets and support the increasing interest in applying sound analysis to a range of emerging IoT and smart home applications. Such applications will benefit us all, and especially older people who, to date, have not been the main beneficiaries of the technology revolution that is changing our world at an unprecedented rate, which, in our opinion, has had a knock-on effect on the gathering of reliable behavioural data. Ultimately, such work would benefit all of us, since most of us are destined to be elderly later in our lives [51].