Side-Scan Sonar Image Classification Based on Style Transfer and Pre-Trained Convolutional Neural Networks

Abstract

1. Introduction

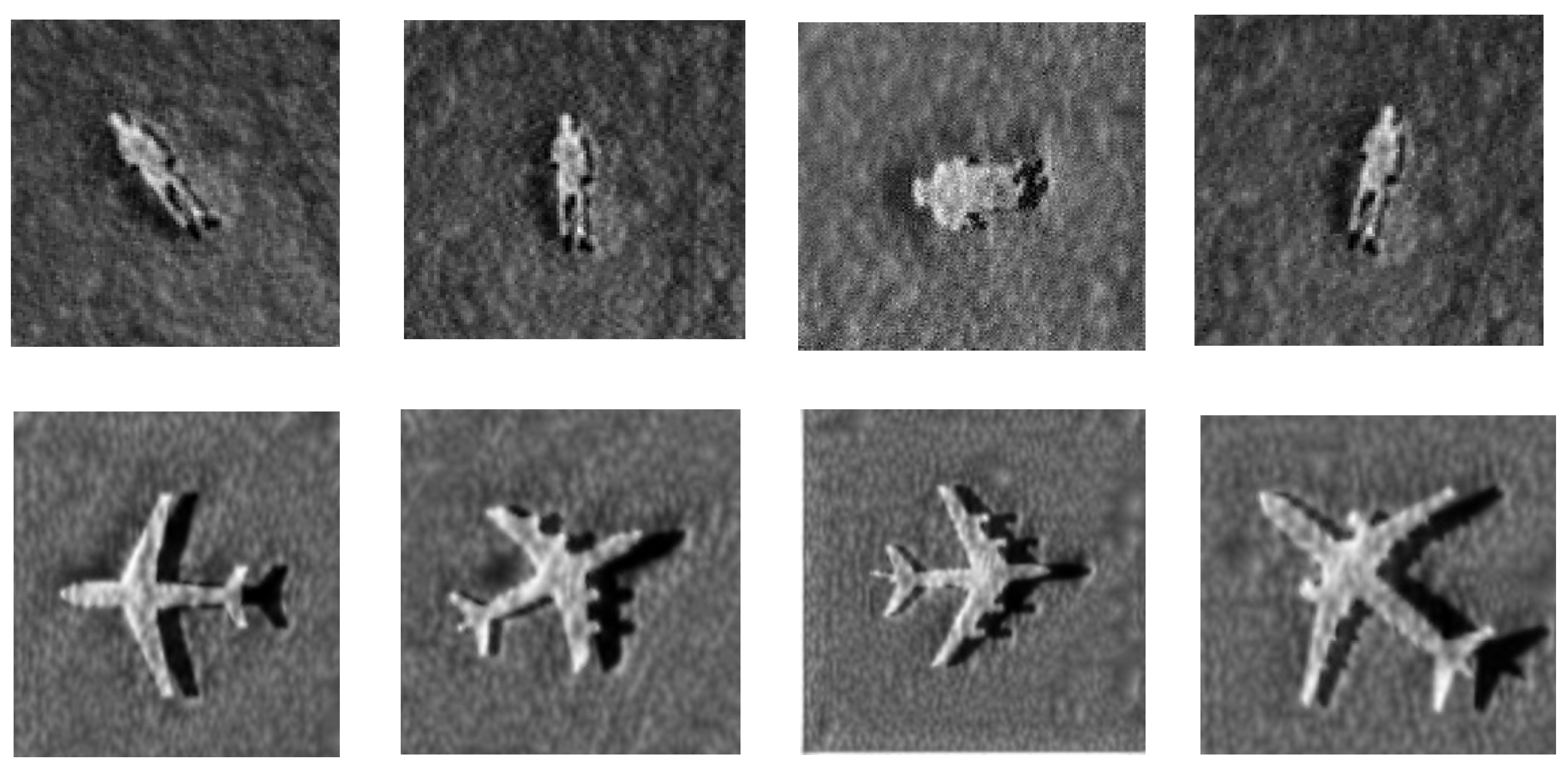

- To address the class imbalance among side-scan sonar samples, we propose a method that generates “simulated side-scan sonar images” from optical images by combining image segmentation with a style transfer network; the method is used to generate images of drowning victims and aircraft;

- We modified the image style transfer network and compared it experimentally with the unmodified network; the results show that the improved network generates clearer and more natural images;

- We fine-tuned pre-trained CNN models, such as VGG19, on 70% of the real side-scan sonar images together with the “simulated side-scan sonar images”; the remaining 30% of the real side-scan sonar images were then used to test the model. The final test accuracy reached up to 97.32%, which is better than the classification performance of the same model fine-tuned on real side-scan sonar images only.
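The 70%/30% split of the real images can be sketched as a per-class (stratified) split. This is a minimal illustration, not the authors' code: the class counts are taken from the dataset table in this article, while the function name `stratified_split`, the placeholder sample IDs, the fixed seed, and the choice to round the test share down are all assumptions. With that rounding, the per-class test sizes come out as 5, 18, 86, and 115, which equal the row sums of the confusion matrices reported later.

```python
import random

# Real-image counts per class, as listed in the dataset table of this article.
CLASS_COUNTS = {
    "drowning_victim": 18,
    "aircraft": 62,
    "seafloor": 289,
    "shipwreck": 385,
}


def stratified_split(class_counts, test_fraction=0.3, seed=0):
    """Split each class separately so the test set keeps the class proportions.

    Sample IDs are hypothetical placeholders standing in for image files.
    """
    rng = random.Random(seed)
    train, test = [], []
    for label, n in class_counts.items():
        ids = [f"{label}_{i:03d}" for i in range(n)]
        rng.shuffle(ids)
        n_test = int(n * test_fraction)  # assumption: round the test share down
        test += [(sample, label) for sample in ids[:n_test]]
        train += [(sample, label) for sample in ids[n_test:]]
    return train, test


train_real, test_real = stratified_split(CLASS_COUNTS)

# Number of held-out real images per class.
test_per_class = {
    label: sum(1 for _, l in test_real if l == label) for label in CLASS_COUNTS
}
print(len(train_real), len(test_real), test_per_class)
```

In this setup the simulated images would be appended to `train_real` only, so that the test set contains real side-scan sonar images exclusively, as the contribution above describes.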

2. Methods

2.1. Image Style Transfer Algorithm

2.2. Hybrid Dilated Convolution and K-Means Algorithm

2.3. Pre-Trained Convolutional Neural Network

3. Synthesis of “Simulated Side-Scan Sonar Images”

3.1. Image Synthesis Method

- The input optical images are clustered to separate the foreground from the background and highlight the target objects. In this study, the K-means algorithm is used to cluster the pixels of each optical image into two classes, namely, background and target object, which also removes the detailed features;

- A morphological opening operation is applied to the image to eliminate small and meaningless target objects, fill some holes, and eliminate small particle noise in the target region;

- The background color of the clustered image is changed to gray and the color of the target object is changed to white; then, the target object is extracted using binary threshold segmentation. Likewise, the background color of the clustered image is changed to gray and the image is expanded by a factor of 1.2 along the x-axis or y-axis to serve as the shadow region;

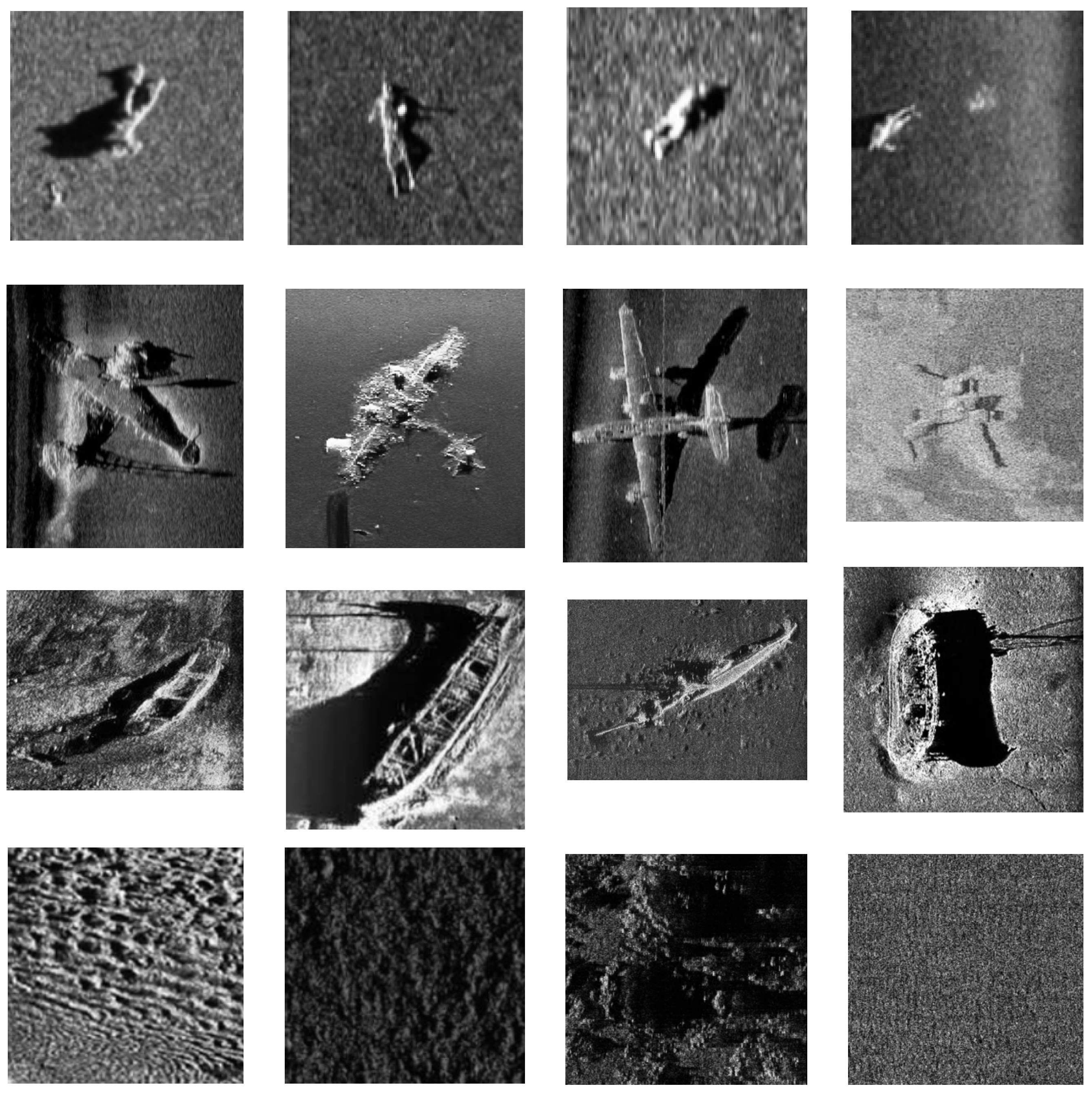

- The extracted target object is fused with the expanded image to obtain an image with a shadow region. This image, used as the content image, and a real side-scan sonar image, used as the style image, are input together into the modified style transfer network to generate the “simulated side-scan sonar images”, as shown in Figure 3.
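The content-image preparation described in the steps above can be sketched in plain Python on a small grayscale grid. This is an illustrative sketch under several assumptions, not the authors' implementation: the target is taken to be the brighter of the two K-means clusters, the structuring element is 3×3, the shadow is drawn in black, and the 1.2× expansion is a nearest-neighbour stretch along x anchored at the left edge. All function names are hypothetical, and the style transfer step itself is not shown.

```python
GRAY, WHITE, BLACK = 128, 255, 0


def kmeans_two_clusters(image, iters=20):
    """1-D K-means (k=2) on gray levels; returns a 0/1 mask, 1 = brighter cluster."""
    values = [v for row in image for v in row]
    lo, hi = float(min(values)), float(max(values))  # initial centroids
    for _ in range(iters):
        mid = (lo + hi) / 2.0  # nearest-centroid boundary in one dimension
        dark = [v for v in values if v <= mid]
        bright = [v for v in values if v > mid]
        if not dark or not bright:
            break
        new_lo, new_hi = sum(dark) / len(dark), sum(bright) / len(bright)
        if (new_lo, new_hi) == (lo, hi):
            break
        lo, hi = new_lo, new_hi
    mid = (lo + hi) / 2.0
    return [[1 if v > mid else 0 for v in row] for row in image]


def _filter3x3(mask, combine):
    """Apply `all` (erosion) or `any` (dilation) over a 3x3 window clipped at borders."""
    h, w = len(mask), len(mask[0])
    return [[int(combine(mask[j][i]
                         for j in range(max(0, y - 1), min(h, y + 2))
                         for i in range(max(0, x - 1), min(w, x + 2))))
             for x in range(w)] for y in range(h)]


def opening(mask):
    """Morphological opening: erosion followed by dilation."""
    return _filter3x3(_filter3x3(mask, all), any)


def stretch_x(mask, factor=1.2):
    """Nearest-neighbour stretch along x on the same canvas (assumed shadow model)."""
    return [[row[int(x / factor)] for x in range(len(row))] for row in mask]


def make_content_image(image, factor=1.2):
    """Gray background, black shadow, white target drawn on top."""
    target = opening(kmeans_two_clusters(image))
    shadow = stretch_x(target, factor)
    return [[WHITE if t else BLACK if s else GRAY for t, s in zip(t_row, s_row)]
            for t_row, s_row in zip(target, shadow)]


# Toy optical image: dark background, one bright 3x3 object, one isolated bright speck.
image = [[40] * 12 for _ in range(8)]
for y in range(2, 5):
    for x in range(2, 5):
        image[y][x] = 200
image[6][10] = 200

content = make_content_image(image)
for row in content:
    print(" ".join(f"{v:3d}" for v in row))
```

In the toy input, the isolated speck is removed by the opening while the 3×3 object survives, and the stretched copy of the object appears as a dark region beside it. In practice, a library such as OpenCV would replace the hand-written clustering and morphology.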

3.2. Improved Style Transfer Network

4. Experiment

4.1. Synthetic Data Ablation Experiment

4.2. Experiment Based on Transfer Learning and “Simulated Side-Scan Sonar Images”

4.2.1. Dataset

4.2.2. Experimental Environment

4.2.3. Results

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | convolutional neural networks |
| MRF | Markov random field |
| SVM | support vector machine |
| PCA | principal component analysis |
| ELM | extreme learning machine |
| KNN | k-nearest neighbor attractor neural network |


| Categories | Drowning Victim | Aircraft | Seafloor | Shipwreck |
|---|---|---|---|---|
| Numbers | 18 | 62 | 289 | 385 |

| Methods | OA (%) Using Real Data Only | OA (%) Using Real Data and Synthetic Data |
|---|---|---|
| VGG16 | 87.50 | 88.84 |
| ResNet18 | 89.02 | 90.18 |
| Transferred VGG19 | 95.98 | 96.88 |
| Transferred ResNet18 | 95.54 | 96.43 |

| True Class | Predicted: Drowning Victim | Predicted: Aircraft | Predicted: Seafloor | Predicted: Shipwreck |
|---|---|---|---|---|
| Drowning Victim | 5 | 0 | 0 | 0 |
| Aircraft | 0 | 11 | 0 | 7 |
| Seafloor | 0 | 0 | 85 | 1 |
| Shipwreck | 0 | 1 | 0 | 114 |

| True Class | Predicted: Drowning Victim | Predicted: Aircraft | Predicted: Seafloor | Predicted: Shipwreck |
|---|---|---|---|---|
| Drowning Victim | 5 | 0 | 0 | 0 |
| Aircraft | 0 | 13 | 0 | 5 |
| Seafloor | 0 | 0 | 85 | 1 |
| Shipwreck | 0 | 1 | 0 | 114 |
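As a worked check, overall accuracy (OA) and per-class recall can be computed directly from the two confusion matrices above, reading rows as true classes and columns as predicted classes. The variable and function names are illustrative. The first matrix gives 215/224 (about 95.98%) and the second 217/224 (about 96.88%); these equal the Transferred VGG19 entries in the OA table, so the matrices appear to correspond to training without and with synthetic data, respectively, although that pairing is inferred from the numbers rather than stated here.

```python
LABELS = ["Drowning Victim", "Aircraft", "Seafloor", "Shipwreck"]

# Rows: true class; columns: predicted class (same order as LABELS).
CM_FIRST = [
    [5, 0, 0, 0],
    [0, 11, 0, 7],
    [0, 0, 85, 1],
    [0, 1, 0, 114],
]
CM_SECOND = [
    [5, 0, 0, 0],
    [0, 13, 0, 5],
    [0, 0, 85, 1],
    [0, 1, 0, 114],
]


def overall_accuracy(cm):
    """Fraction of samples on the diagonal, i.e. correctly classified."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


def per_class_recall(cm):
    """Correct predictions for each true class divided by that class's row sum."""
    return [row[i] / sum(row) for i, row in enumerate(cm)]


for name, cm in [("first", CM_FIRST), ("second", CM_SECOND)]:
    recalls = ", ".join(
        f"{label}: {r:.3f}" for label, r in zip(LABELS, per_class_recall(cm))
    )
    print(f"{name}: OA = {100 * overall_accuracy(cm):.2f}% | {recalls}")
```

The only row that differs between the two matrices is Aircraft, where correct predictions rise from 11 to 13 of 18; the remaining errors in that row are aircraft images classified as shipwrecks.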

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ge, Q.; Ruan, F.; Qiao, B.; Zhang, Q.; Zuo, X.; Dang, L. Side-Scan Sonar Image Classification Based on Style Transfer and Pre-Trained Convolutional Neural Networks. Electronics 2021, 10, 1823. https://doi.org/10.3390/electronics10151823
