A Tilt Visible Light Positioning System Based on Double LEDs and Angle Sensors

Abstract

1. Introduction

2. Positioning System

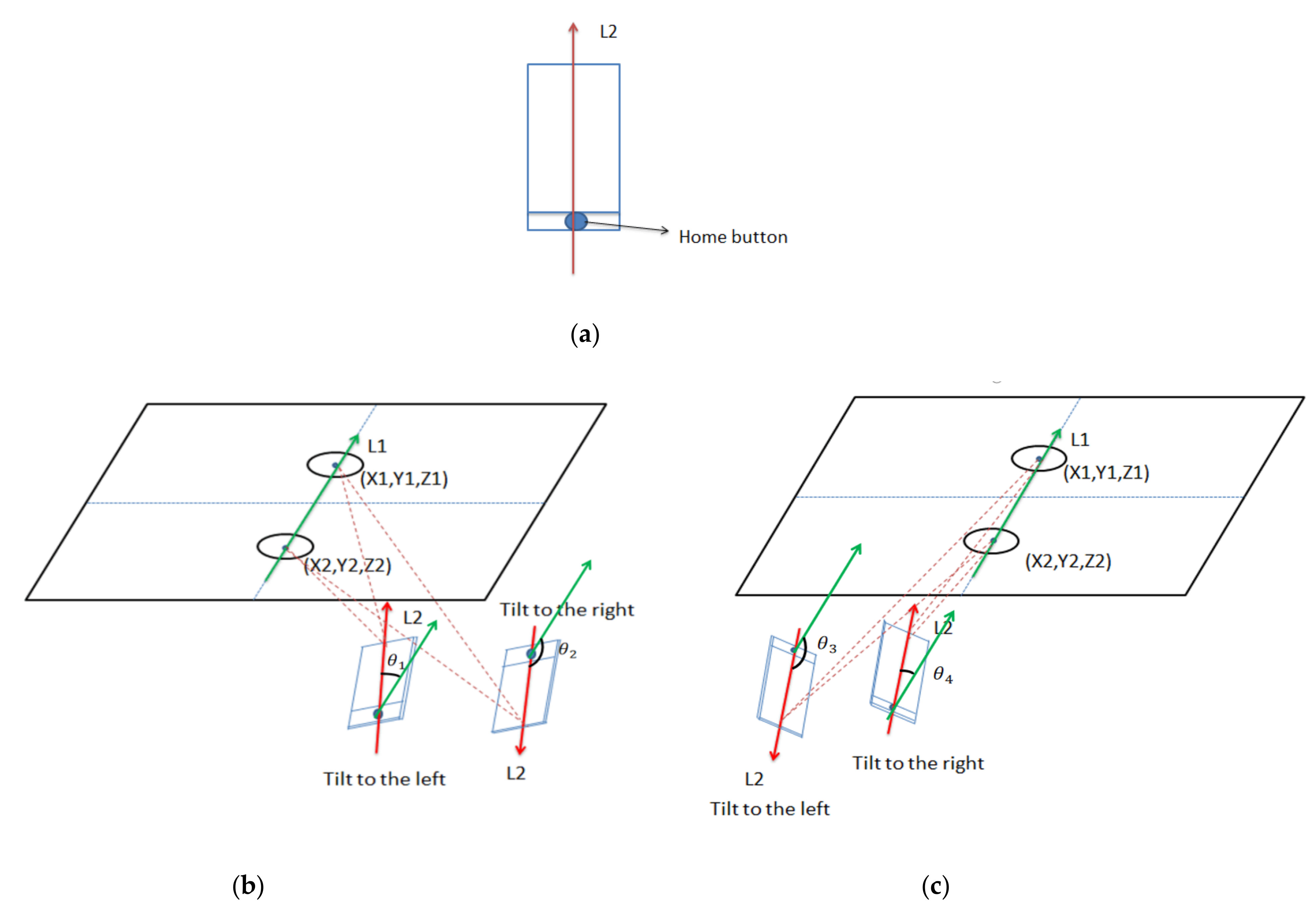

2.1. System Overview

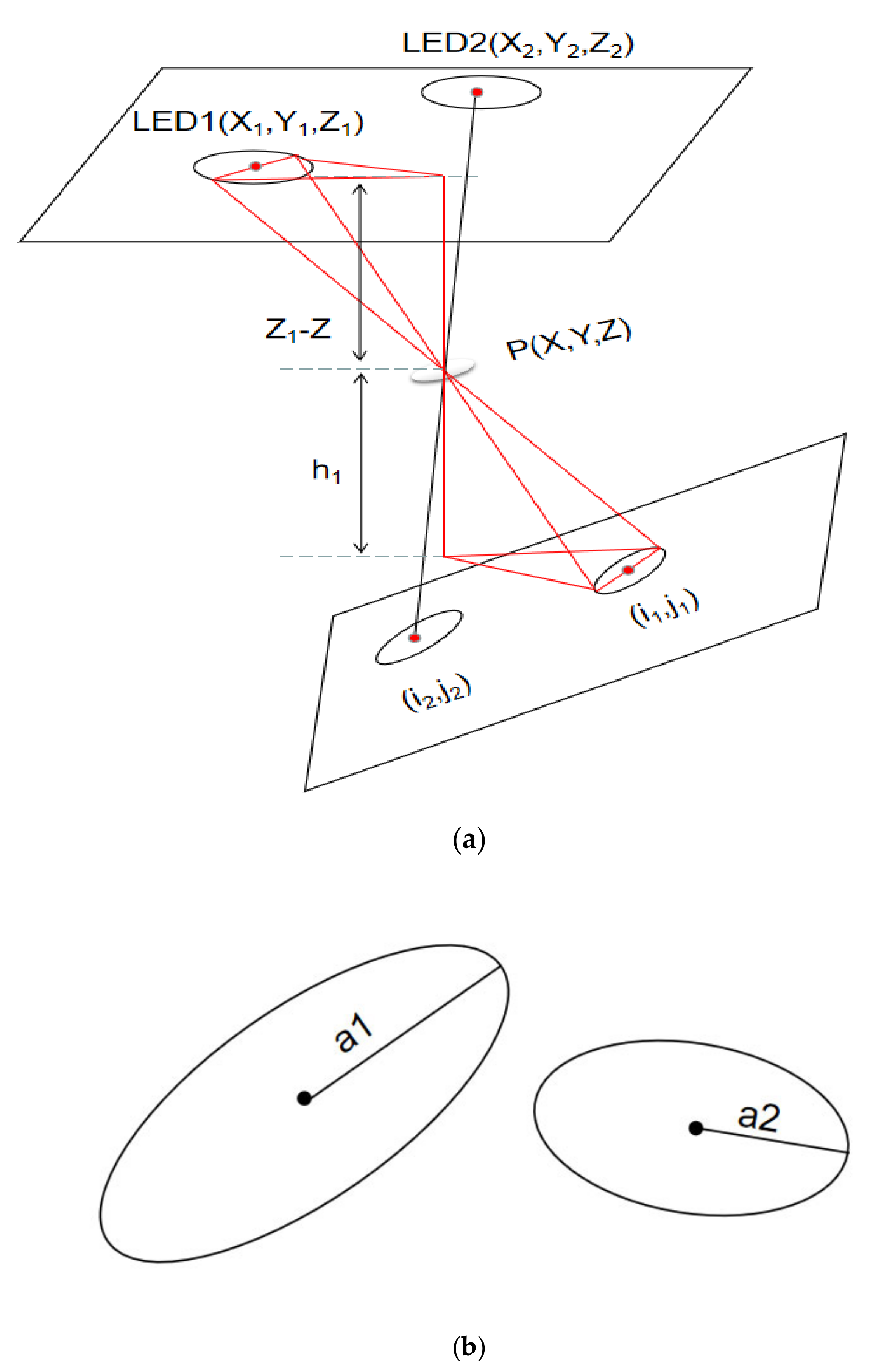

2.2. Principle of Positioning

2.2.1. Calculating Z Coordinate

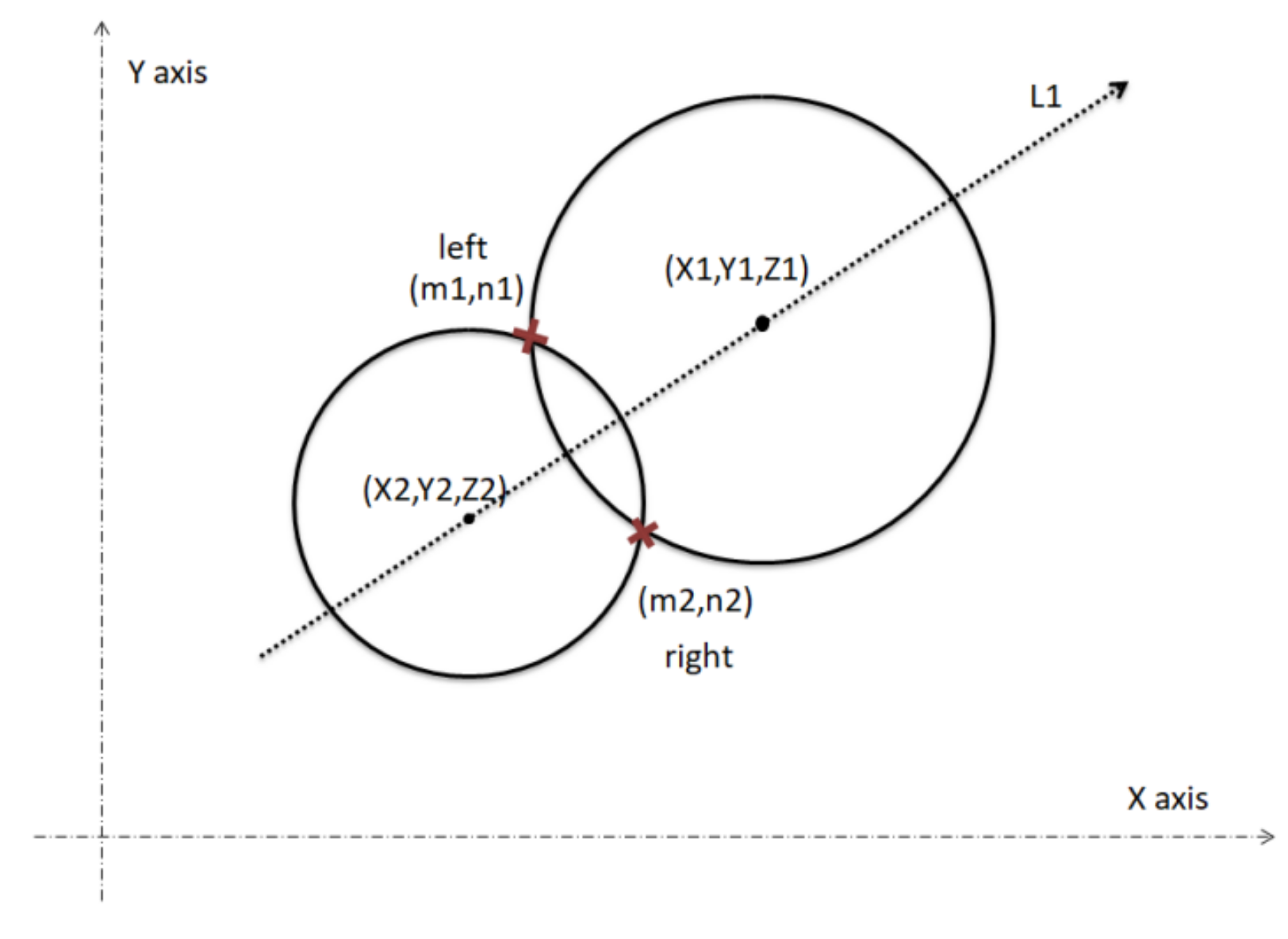
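A common way to obtain the Z coordinate with two ceiling LEDs and a tilted camera works in two steps. First, the roll and pitch reported by the angle sensor are used to re-project both LED images onto a virtual image plane parallel to the ceiling. Second, the pinhole similar-triangle relation Z = H - f*D/d is applied, where H is the LED height, D is the real LED spacing, f is the focal length, and d is the spacing of the corrected image points.

The Python sketch below is a minimal illustration under several assumptions:

- an R = Ry(pitch) @ Rx(roll) rotation convention;
- both LEDs mounted at the same height;
- image points already converted from pixels to sensor coordinates, measured from the principal point in the same unit as f.

The function names are hypothetical, and the sketch is not necessarily the exact formulation used in this paper.

```python
import math


def tilt_matrix(roll, pitch):
    """Rotation taking camera-frame vectors into a level frame (z vertical).

    Assumed convention: R = Ry(pitch) @ Rx(roll), angles in radians.
    """
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    return [
        [cp, sp * sr, sp * cr],
        [0.0, cr, -sr],
        [-sp, cp * sr, cp * cr],
    ]


def level_image_point(x, y, f, R):
    """Re-project sensor point (x, y), measured from the principal point in
    the same unit as f, onto a virtual image plane parallel to the ceiling."""
    ray = (x, y, f)  # ray direction in the tilted camera frame
    w = [sum(R[i][j] * ray[j] for j in range(3)) for i in range(3)]
    # w[2] > 0 as long as the LED lies above the lens
    return f * w[0] / w[2], f * w[1] / w[2]


def camera_z(p1, p2, f, R, led_spacing, led_height):
    """Z = H - f * D / d for two LEDs mounted at the same height H."""
    x1, y1 = level_image_point(p1[0], p1[1], f, R)
    x2, y2 = level_image_point(p2[0], p2[1], f, R)
    d = math.hypot(x2 - x1, y2 - y1)  # LED spacing on the level image plane
    h = f * led_spacing / d            # vertical lens-to-LED distance
    return led_height - h
```

Only roll and pitch enter this step. A rotation about the vertical axis leaves the distance d on the level plane unchanged, so yaw does not affect Z.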

2.2.2. Calculating X and Y Coordinates

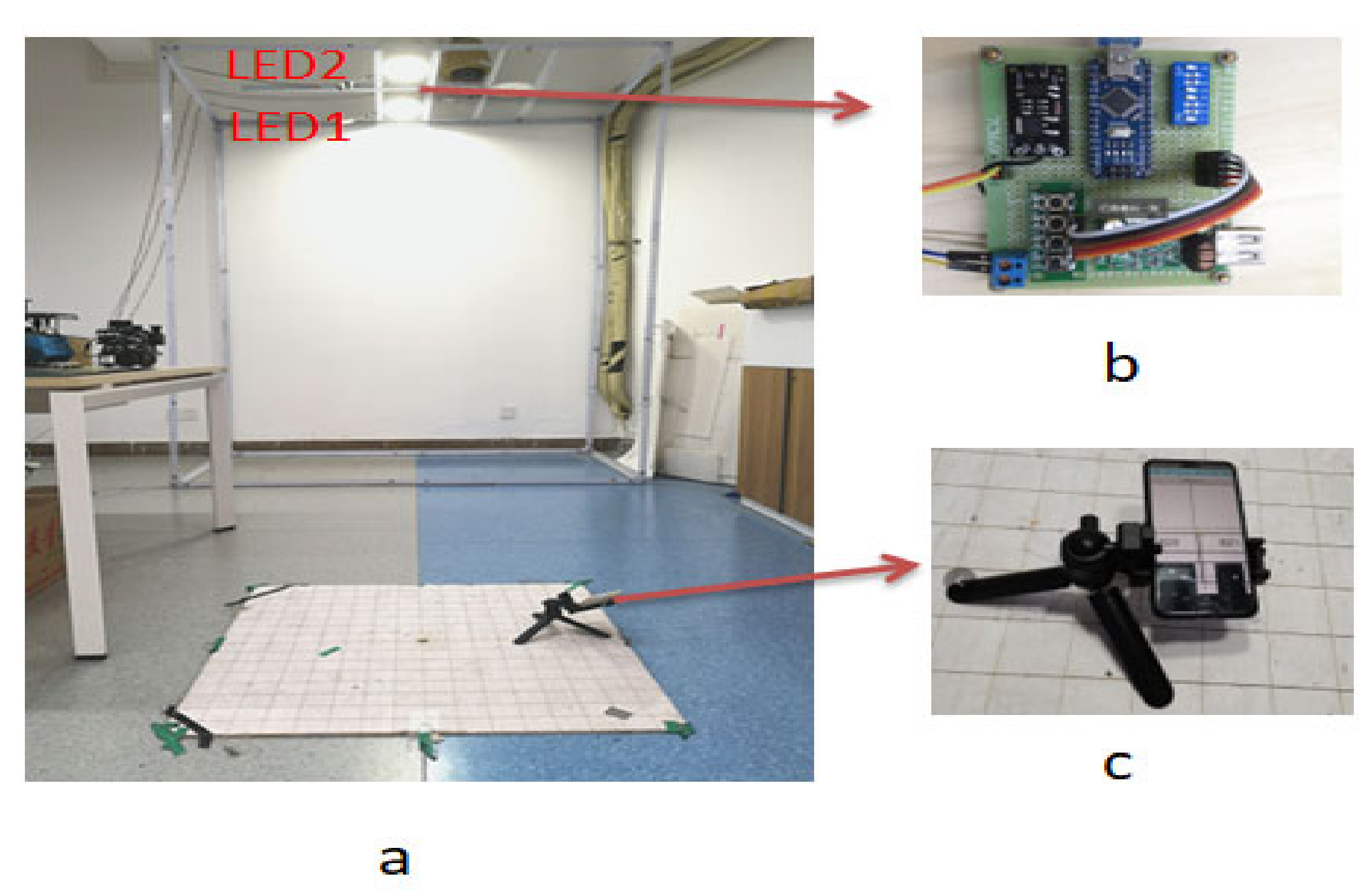

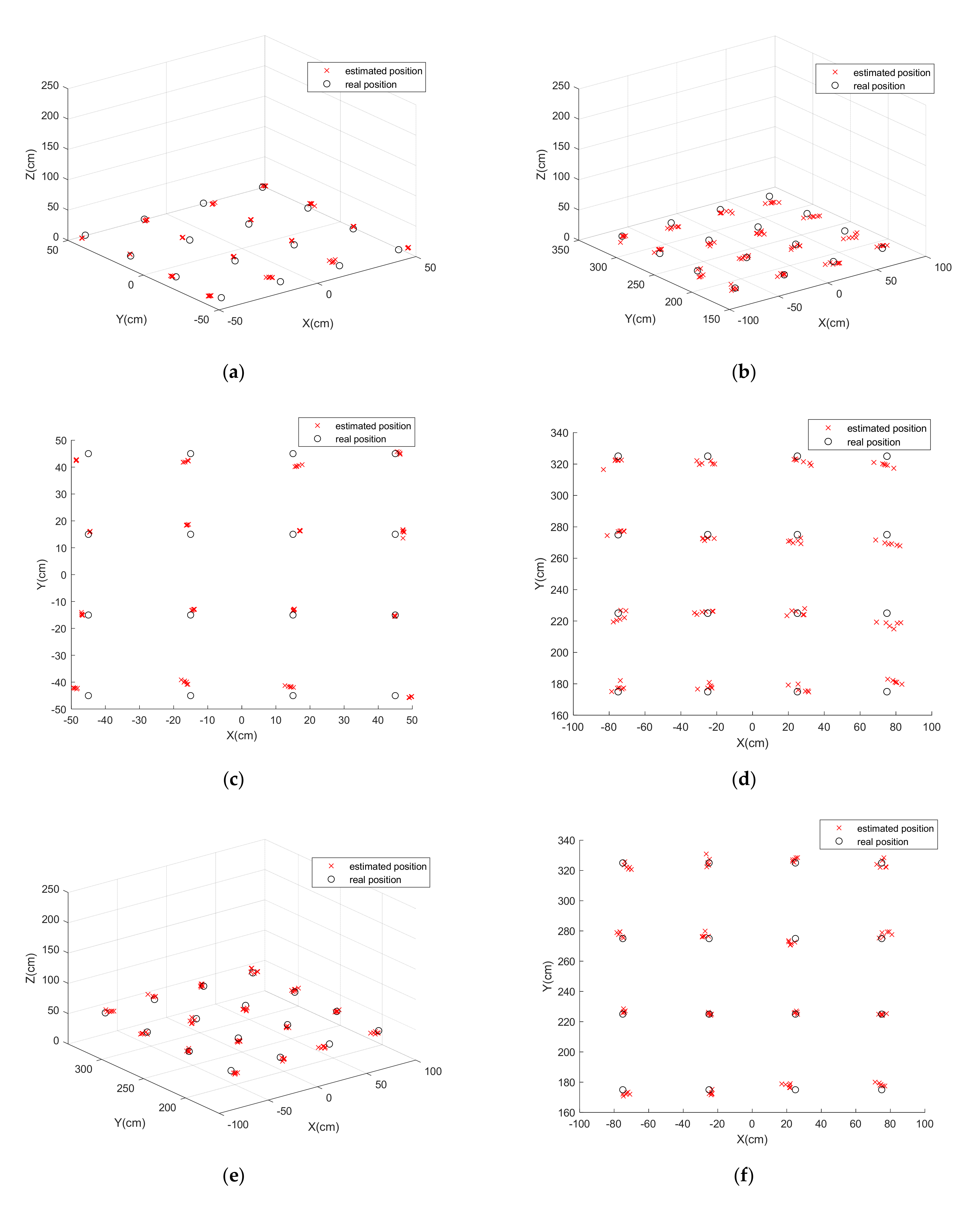

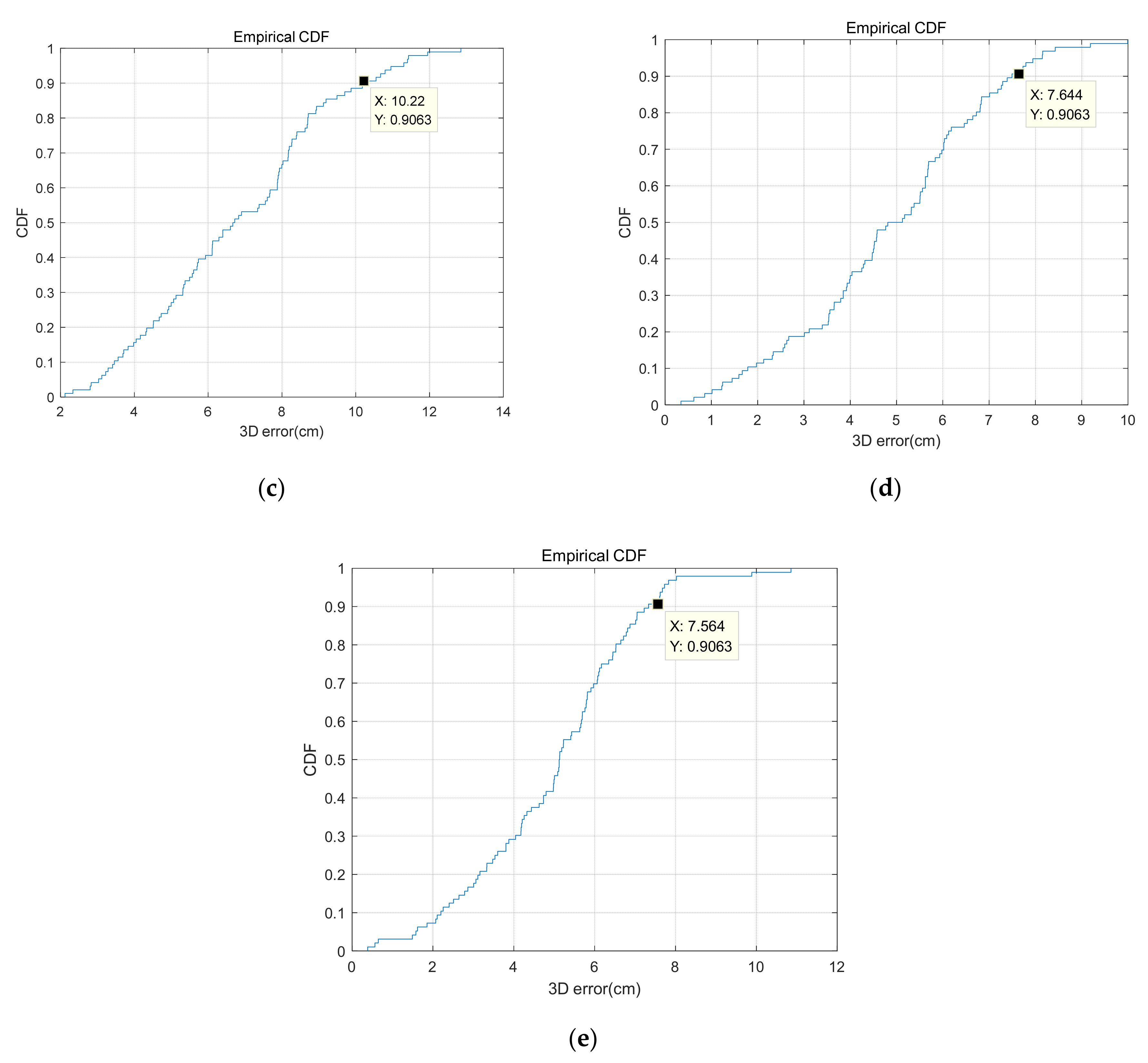
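Once the vertical distance h between the lens and the LED plane is known, X and Y can be recovered from the same level-plane geometry. The sketch below makes the following assumptions:

- the image points have already been corrected for roll and pitch onto a level image plane;
- the LED world coordinates are known;
- the camera azimuth is recovered from the orientation of the LED pair in the image. If the angle sensor also provides yaw, that reading could be used instead.

The function name and conventions are hypothetical, and the sketch is not presented as the authors' formulation.

```python
import math


def camera_xy(img1, img2, led1, led2, f, h):
    """Estimate camera (X, Y) from two LEDs imaged on a level image plane.

    img1, img2: tilt-compensated image points, same unit as f
    led1, led2: known (X, Y) world coordinates of the two LEDs
    h: vertical distance from the lens to the LED plane
    """
    # Azimuth: angle of the LED pair in the world minus its angle in the image
    psi = (math.atan2(led2[1] - led1[1], led2[0] - led1[0])
           - math.atan2(img2[1] - img1[1], img2[0] - img1[0]))
    c, s = math.cos(psi), math.sin(psi)
    xs, ys = [], []
    for (u, v), (X, Y) in ((img1, led1), (img2, led2)):
        # Rotate the image offset into world axes and scale by h / f
        dx = (c * u - s * v) * h / f
        dy = (s * u + c * v) * h / f
        xs.append(X - dx)
        ys.append(Y - dy)
    # Average the two per-LED estimates
    return sum(xs) / 2, sum(ys) / 2
```

Averaging the two per-LED estimates is a simple choice. With noisy image points, a weighted or least-squares combination may be preferable.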

3. Experiment and Analysis

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

| Parameter | Value |
|---|---|
| Resolution | 1920 × 1080 |
| Focal length | 18 mm |
| FOV of camera | ϕ ≈ |
| Power of each LED | 18 W |
| Camera exposure time | 0.05 ms |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

An, F.; Xu, H.; Wen, S.; Song, H.; Chen, Z.; Guan, W. A Tilt Visible Light Positioning System Based on Double LEDs and Angle Sensors. Electronics 2021, 10, 1923. https://doi.org/10.3390/electronics10161923