1. Introduction

Software vulnerabilities are regarded as a significant threat in information security. Programming languages without a memory reclamation mechanism (such as C/C++) carry the risk of memory leaks, which may pose irreparable risks [1]. With the increase in software complexity, it is impractical to reveal all abnormal software behaviors manually. Fuzz testing, or fuzzing, is a (semi-)automated technique to facilitate software testing. A fuzzing tool, or fuzzer, feeds random inputs to a target program while monitoring for unexpected behaviors during execution to detect vulnerabilities [2]. Among all fuzzers, coverage-guided greybox fuzzers (CGFs), e.g., AFL [3] and LibFuzzer [4], have become some of the most popular due to their high deployability and scalability. They have been successfully applied in practice to detect thousands of security vulnerabilities in open-source projects [5].

Coverage-guided greybox fuzzing relies on the assumption that more run-time bugs can be revealed if more program code is executed. To find bugs as quickly as possible, AFL and other CGFs try to maximize code coverage, because a bug at a specific program location can only be triggered if that location is covered by some test input. A CGF uses lightweight program transformation and dynamic program profiling to collect run-time coverage information. For example, AFL instruments the target program to record transitions at the basic block level. The actual fuzzing process starts with an initial corpus of seed inputs provided by the user. AFL generates a new set of test inputs by randomly mutating the seeds (e.g., by bit flipping). It then executes the program on the mutated inputs and records those that cover new execution paths. AFL continually repeats this process, but starts from the mutated inputs instead of the user-provided seed inputs. If any program crashes or hangs occur, for example, caused by memory errors, AFL also reports the corresponding inputs for further analysis.
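The loop described above can be sketched in a few lines of Python. This is only an illustrative toy under stated assumptions, not AFL's implementation: `target`, `flip_bit`, and `coverage_guided_fuzz` are hypothetical names, the "instrumentation" is a hand-written set of branch labels, and a crash is simulated with an exception.

```python
import random


def target(data: bytes) -> frozenset:
    """Hypothetical toy target: returns the branch labels it executed.

    A real CGF obtains this information from instrumentation; here it is
    hand-written. The simulated bug raises RuntimeError.
    """
    covered = {"entry"}
    if data[:1] == b"F":
        covered.add("F")
        if data[1:2] == b"U":
            covered.add("FU")
            if data[2:3] == b"Z":
                raise RuntimeError("simulated crash")
    return frozenset(covered)


def flip_bit(data: bytes, rng: random.Random) -> bytes:
    """Flip one randomly chosen bit of the input."""
    if not data:
        return data
    buf = bytearray(data)
    pos = rng.randrange(len(buf) * 8)
    buf[pos // 8] ^= 1 << (pos % 8)
    return bytes(buf)


def coverage_guided_fuzz(seeds, iterations, rng):
    """Keep mutated inputs only if they reach a branch not seen before."""
    queue, seen_coverage, crashes = [], set(), set()
    for seed in seeds:
        try:
            seen_coverage |= target(seed)
            queue.append(seed)
        except RuntimeError:
            crashes.add(seed)
    if not queue:
        return queue, seen_coverage, crashes
    for _ in range(iterations):
        child = flip_bit(rng.choice(queue), rng)
        try:
            coverage = target(child)
        except RuntimeError:
            crashes.add(child)          # report the crashing input
            continue
        if not coverage <= seen_coverage:
            seen_coverage |= coverage   # new coverage: keep for later mutation
            queue.append(child)
        # inputs without new coverage are discarded
    return queue, seen_coverage, crashes


if __name__ == "__main__":
    q, cov, found = coverage_guided_fuzz([b"AAA"], 20000, random.Random(0))
    print(len(q), sorted(cov), sorted(found))
```

Note the last branch of the loop: an input that adds no coverage is simply dropped, which is the behavior the rest of this paper revisits.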

When a CGF is applied, the fuzzing process does not terminate automatically. In practice, users need to decide when to end this process. In a typical scenario, a user sets a timer for each CGF run and the CGF stops right away when the timer expires. However, researchers have discovered empirically that, within a fixed time budget, exponentially more machines are needed to discover each new vulnerability [6]. With a limited number of machines, the CGF could rapidly reach a saturation state in which, by continuing the fuzzing, it is difficult to find new unique crashes (where exponentially more time is needed). Then, what can we do to improve the capability of CGF to find bugs with constraints on time and CPU power? In this work, we try to provide one solution to this question.

Existing CGFs are biased toward test inputs that explore new program execution paths. These inputs are prioritized in subsequent mutations. Inputs that do not discover new coverage are considered unimportant and are not selected for mutation. However, in practice, this extensive coverage-guided path exploration may hinder the discovery of, or even overlook, potential vulnerabilities on specific paths. The rationale is that an execution path that succeeds in one run may not be bug-free in all runs. Simply discarding “bad” inputs may cause insufficient testing of their corresponding execution paths. Rather, special attention should be paid to such inputs and paths. Intuitively, an input covering a path is more likely to cover the same path after mutation than an arbitrary input. Although an input may not trigger the bug on its path in one execution, it may do so after a few fine-grained mutations. In short, by focusing on new execution paths, CGFs can discover a number of vulnerabilities in a fixed time, but they also miss vulnerabilities that are found only when a specific execution path is tested repeatedly.

Based on this, we propose a lightweight extension of CGF, ReFuzz, that can effectively find tens of new crashes within a fixed amount of time on the same machines. The goal of ReFuzz is not to achieve as high code coverage as possible. Instead, it aims to detect new unique crashes on already-covered execution paths in a limited time. In ReFuzz, test inputs that do not explore new paths are regarded as favored. They are prioritized and mutated often to examine the same set of paths repeatedly. All other mutated inputs are omitted from execution. As a prototype, we implement ReFuzz on top of AFL. In our experiments, it successfully triggered 37, 59, and 54 new crashes in our benchmarks that were not found by AFL, using three different experimental settings, respectively. Finally, we discovered nine vulnerabilities accepted to the CVE database.

In particular, ReFuzz incorporates two stages. Firstly, in the initial stage, AFL is applied as usual to test the target program. The output of this stage is a set of crash reports and a corpus of mutated inputs used during fuzzing. In addition, we record the code coverage of this corpus. Secondly, in the exploration stage, we use the corpus and coverage from the previous stage as seed inputs and initial coverage, respectively. During the testing process, instead of rewarding inputs that cover new paths, ReFuzz only records and mutates those that converge to the initial coverage, i.e., they contribute no new coverage. To further improve the performance, we also review the validity of each mutated input before execution and promote non-deterministic mutations, if necessary. In practice, the second stage may last until the fuzzing process becomes saturated.
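The selection rule of the exploration stage can be illustrated with a small Python sketch. It is a simplified toy under stated assumptions, not the ReFuzz implementation: `run_target`, `mutate`, and `exploration_stage` are hypothetical names, the target is hand-written with a bug that depends on data values rather than on reaching a new branch, and the validity check and the promotion of non-deterministic mutations are omitted.

```python
import random


def run_target(data: bytes):
    """Hypothetical toy target. Returns (coverage, crashed)."""
    coverage = {"entry"}
    if data[:1] == b"H":
        coverage.add("header")
        # Bug on an already-covered path: same branches, different data.
        if len(data) > 1 and data[1] >= 0xF0:
            return frozenset(coverage), True
    if data[:1] == b"X":
        coverage.add("rare")
    return frozenset(coverage), False


def mutate(data: bytes, rng: random.Random) -> bytes:
    """Overwrite one randomly chosen byte with a random value."""
    if not data:
        return data
    buf = bytearray(data)
    buf[rng.randrange(len(buf))] = rng.randrange(256)
    return bytes(buf)


def exploration_stage(corpus, initial_coverage, iterations, rng):
    """Favor inputs that stay inside the coverage of the initial stage."""
    queue = list(corpus)
    seen = set(queue)
    crashes = set()
    for _ in range(iterations):
        child = mutate(rng.choice(queue), rng)
        if child in seen:
            continue
        seen.add(child)
        coverage, crashed = run_target(child)
        if crashed:
            crashes.add(child)
        elif coverage <= initial_coverage:
            queue.append(child)   # no new coverage: favored, mutated again
        # inputs that reach new coverage are not rewarded in this stage
    return queue, crashes


if __name__ == "__main__":
    initial = frozenset({"entry", "header"})
    q, found = exploration_stage([b"H\x00"], initial, 3000, random.Random(0))
    print(len(q), len(found))
```

The acceptance test is the inverse of the one in a conventional CGF loop: an input is kept when `coverage <= initial_coverage` holds, so mutation effort stays on paths that the initial stage already reached.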

Note that ReFuzz is not designed to replace CGF but as a complement and a remedy for saturation during fuzzing. In fact, the original unmodified AFL is used in the initial stage. The objective of the exploration stage is to verify whether new crashes can be found on execution paths that have already been covered by AFL and whether AFL and CGFs, in general, miss potential vulnerabilities on these paths while seeking to maximize code coverage.

We make the following contributions.

We propose an innovative idea: although an input may not trigger a bug in a single execution, it may do so after a few fine-grained mutations.

We propose a lightweight extension of CGF, ReFuzz, that can effectively find tens of new crashes within a fixed amount of time on the same machines.

We develop various supporting techniques, such as reviewing the validity of each mutated input before execution and promoting non-deterministic mutations if necessary, to further improve the performance.

We propose a new mutation strategy on top of AFL. If an input does not cover a new execution path, it is regarded as valuable, which helps to cover a specific execution path multiple times.

We evaluate ReFuzz on four real-world programs collected from prior related work [7]. It successfully triggered 37, 59, and 54 new unique crashes in the three different experimental configurations and discovered nine vulnerabilities accepted to the CVE database.

The rest of the paper is organized as follows.

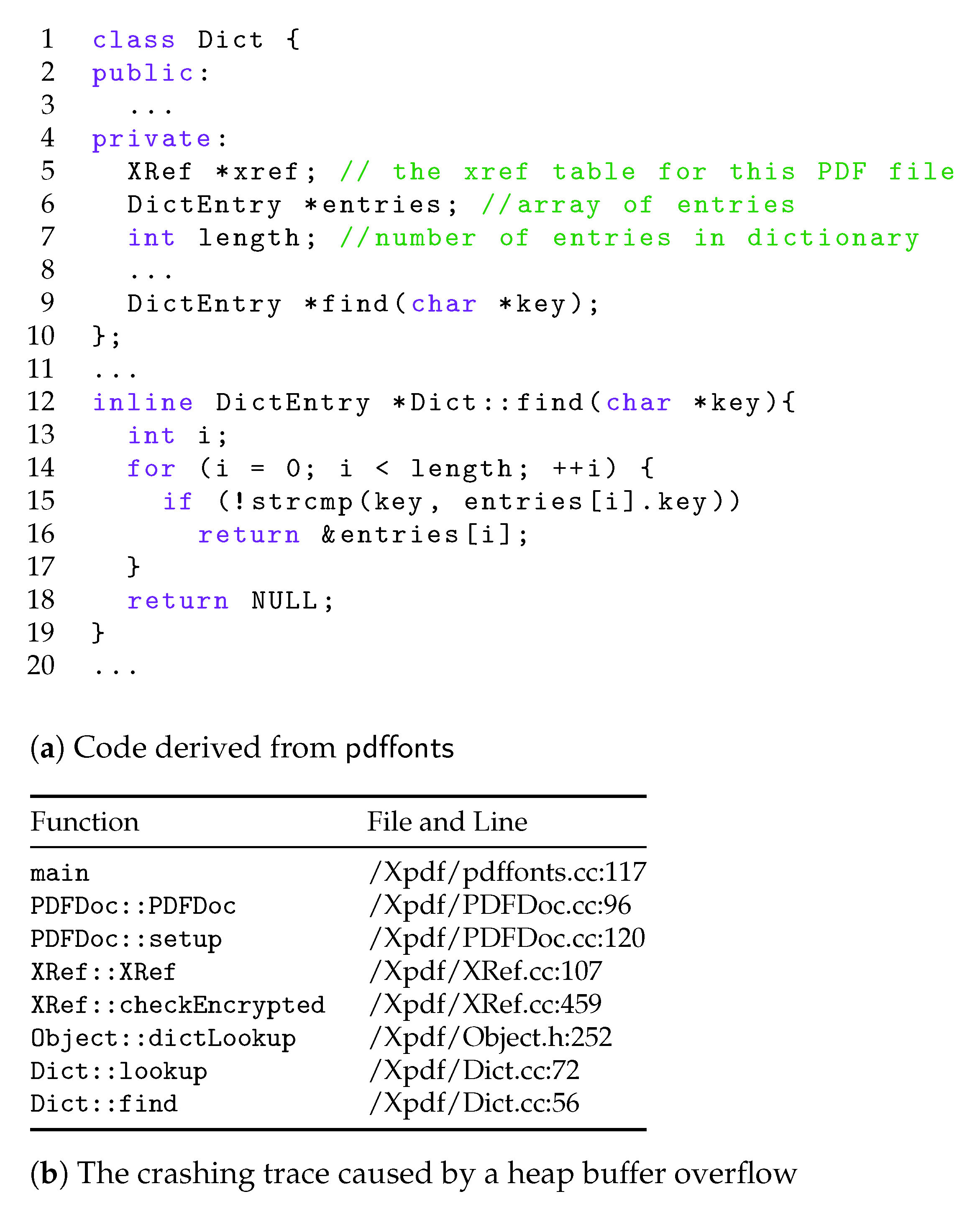

Section 2 introduces fuzzing and AFL, as well as a motivating example to illustrate CGFs mutating strategy limitations.

Section 3 describes the design details of

ReFuzz. We report the experimental results and discussion in

Section 4 and

Section 5.

Section 6 discusses the related work, and finally,

Section 7 concludes our work.

5. Discussion

ReFuzz is effective at finding new unique crashes that are hard to discover using AFL. This is because some execution paths need to be examined multiple times with different inputs to find hidden vulnerabilities. The coverage-first strategy in AFL and other CGFs tends to overlook executed paths, which may hinder further investigation of such paths. However, such questions as “when should we stop the initial stage in ReFuzz and enter the exploration stage to start the examination of these paths”, and “how long should we spend in the exploration stage of ReFuzz” remain unanswered.

How long should the initial stage take? As described in Section 4, we performed three different experiments with the duration of the initial stage set to 60, 50, and 40 h to gather empirical results. The intuition is that using the original AFL to find bugs would work best when the initial stage lasts 60 h, since more paths should be covered and more unique crashes triggered if the fuzzer is applied for a longer time in the initial stage. However, our experimental results in Table 3, Table 4 and Table 5 show that the fuzzing process is unpredictable. The total number of unique crashes triggered in the 60 h initial stage is close to that of the 40 h initial stage (308 vs. 303), while the number obtained in 50 h is lower than that of 40 h (246 vs. 303). In Algorithm 2, as well as in our implementation of the algorithm, we allow the user to decide when to stop the initial stage and to set its duration based on their experience and experiments. Generally, regarding the appropriate length of the initial stage, we suggest that users pay attention to the dynamic data in the fuzzer dashboard. If the code coverage remains stable, the color of the cycle counter (cycles done) changes from purple to green, or a long time has passed since the last unique crash was discovered (last uniq crash time), then continuing to test is unlikely to bring new discoveries. The most rigorous approach is to combine these pieces of reference information to determine whether the initial stage should be ended.
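One way to combine these three signals is sketched below in Python. Everything here is an assumption made for illustration: the `DashboardSnapshot` fields, the function name, and the thresholds (`window`, `tolerance`, `crash_gap_hours`) are hypothetical and are not values taken from AFL or from our experiments. Users would populate the snapshot by reading the fuzzer dashboard.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DashboardSnapshot:
    """Hypothetical container for values read off the fuzzer dashboard."""
    coverage_history: List[float]   # coverage samples taken at regular intervals
    cycle_color: str                # color of the "cycles done" counter
    hours_since_last_crash: float   # derived from "last uniq crash time"


def initial_stage_looks_saturated(
    snap: DashboardSnapshot,
    window: int = 6,                # assumed: number of recent samples to compare
    tolerance: float = 0.01,        # assumed: max coverage change counted as stable
    crash_gap_hours: float = 12.0,  # assumed: what counts as "a long time"
) -> bool:
    """Return True only if all three signals agree."""
    recent = snap.coverage_history[-window:]
    coverage_stable = (
        len(recent) == window and max(recent) - min(recent) <= tolerance
    )
    cycles_green = snap.cycle_color == "green"
    crash_stale = snap.hours_since_last_crash >= crash_gap_hours
    return coverage_stable and cycles_green and crash_stale


if __name__ == "__main__":
    snap = DashboardSnapshot([4.20] * 6, "green", 15.0)
    print(initial_stage_looks_saturated(snap))
```

Requiring all three conditions is the conservative choice; a user who prefers to switch stages earlier could relax the conjunction to any two of the three.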

How long should the exploration stage take? We conducted an extra experiment using ReFuzz with the corpus obtained from the 80 h run of AFL. We ran ReFuzz for 16 h and recorded the number of unique crashes per hour. In the experiment, each program was executed with ReFuzz for 12 trials. The raw results are shown in Figure 4 and the mean of the 12 trials is shown in Figure 5. In both figures, the x-axes show the number of bugs (i.e., unique crashes) and the y-axes show the execution time in hours. We can see that, given a fixed corpus of seed inputs, the performance of ReFuzz in the exploration stage varies considerably across the 12 trials. This is due to the nature of random mutations. Overall, the figures show that in the exploration stage, ReFuzz follows the empirical rule that finding a new vulnerability requires exponentially more time [6]. However, this does not negate the effectiveness of ReFuzz in finding new crashes. We suggest that the best time to terminate the remedial testing is still when the exploration reaches saturation; the guidelines given for the initial stage can also be considered here.

Is ReFuzz effective as a remedy for CGF? Many researchers have proposed remedial measures for CGFs. Driller [18] combines fuzzing and symbolic execution. When a fuzzer becomes stuck, symbolic execution can calculate valid inputs to explore deeper bugs. T-Fuzz [19] detects when a baseline mutational fuzzer becomes stuck and no longer produces inputs that extend the coverage. It then produces inputs that trigger deep program paths and, therefore, finds vulnerabilities (hidden bugs) in the program. The main cause of saturation is that AFL and other CGFs rely strongly on random mutation to generate new inputs that reach more execution paths. Our experimental results suggest that new unique crashes can actually be discovered if we leave code coverage aside and continue to examine the already covered execution paths by applying mutations (as shown in Table 3, Table 4 and Table 5). They also show that it is feasible and effective to use our approach, which can easily be applied to other existing CGFs, as a remedy for and an extension to AFL. While this conclusion may not hold for programs that we did not use in the experiments, our evaluation shows the potential of remedial testing based on re-evaluation of covered paths.

6. Related Work

Mutation-based fuzzers start from actual inputs, continuously mutate the test cases in the corpus during the fuzzing process, and continuously feed them to the target program. Code coverage is used as the key measure of fuzzer performance. AFL [3] uses compile-time instrumentation and genetic algorithms to find interesting test cases, and can find new edge coverage based on these inputs. VUzzer [20] uses an “intelligent” mutation strategy based on data flow and control flow to generate high-quality inputs through result feedback and by optimizing the input generation process. Its experiments show that it can effectively improve the efficiency and increase the depth of vulnerability mining. FairFuzz [21] increases the coverage of AFL by identifying rare branches, i.e., branches exercised by only a small number of AFL-generated inputs, and by using a mutation mask creation algorithm to bias mutations toward inputs that hit these rare branches. AFLFast [12] proposes a strategy that gears AFL toward low-frequency paths, giving them more opportunities, which effectively increases the coverage of AFL. LibFuzzer [4] uses SanitizerCoverage [22] to track basic block coverage information in order to generate more test cases that can cover new basic blocks. Sun et al. [23] proposed using the ant colony algorithm to control the screening of seed inputs in greybox fuzzing. By estimating the transition rate between basic blocks, their approach determines which seed input is more likely to be mutated. PerfFuzz [24] generates inputs through feedback-directed mutational fuzzing; it can find inputs that exercise different hot spots in the program and escapes local maxima to obtain inputs with longer execution paths. SPFuzz [25] implements three mutation strategies, namely, head, content, and sequence mutation strategies. They cover more paths by driving the fuzzing process, and provide a method of randomly assigning weights through messages and strategies. By continuously updating and improving the mutation strategy, the above research effectively improves the efficiency of fuzzing. In our work, if no new crashes have been found for a long time (longer than a preset threshold) and the fuzzer is currently performing deterministic mutation operations, it either moves on to the next deterministic mutation or enters the random mutation stage directly, which reduces unnecessary time consumption to a certain extent.

Generation-based fuzzers rely on a good understanding of the file format and interface specification of the target program. By establishing a model of the file format and interface specification, the fuzzer generates test cases according to the model. Dam et al. [26] established a deep learning model based on long short-term memory (LSTM), which automatically learns the semantic and syntactic features in code, and showed that its predictive ability is better than that of state-of-the-art vulnerability prediction models. Reddy et al. [27] proposed a reinforcement learning method to solve the diversification guidance problem, and evaluated their tool, RLCheck, against state-of-the-art testing tools. Godefroid et al. [28] proposed a machine learning technique based on neural networks to automatically generate grammatically well-formed test cases. AFL++ [29] provides a variety of novel functions that can extend the fuzzing process over multiple stages. With it, variants for specific targets can also be written by experienced security testers. Fioraldi et al. [30] proposed a new technique that can generate and mutate inputs automatically for the binary format of unknown basic blocks. This technique enables the input to meet the characteristics of certain formats during the initial analysis phase and enables deeper path access. You et al. [31] proposed a new fuzzing technique, which can generate effective seed inputs based on AFL to detect the validity of the input and record the input corresponding to this type of inspection. PMFuzz [32] automatically generates high-value test cases to detect crash consistency bugs in persistent memory (PM) programs. These efforts use syntax or semantic learning techniques to generate legitimate inputs. However, our work is not limited to using input format checking to screen legitimate inputs during the testing process, and we can obtain high coverage in a short time by using the corpus obtained from the AFL run as the initial corpus in the exploration phase.

Symbolic execution is an extremely effective software testing method that can generate inputs [33,34,35]. It analyzes the program to obtain inputs that drive execution to a specific code region. In other words, when a program is analyzed with symbolic execution, it takes symbolic values as input instead of the concrete values used in ordinary program execution. Symbolic execution is a heavyweight software testing method because analyzing the possible inputs of a program requires the support of the target source code. SAFL [36] is augmented with qualified seed generation and efficient coverage-directed mutation. Symbolic execution is used in a lightweight approach to generate qualified initial seeds. Valuable exploration directions are learned from the seeds to reach deep paths in the program state space earlier and more easily. However, for large software projects, it takes a lot of time to analyze the target source code. As ReFuzz is a lightweight extension of AFL, in order to repeatedly reach existing execution paths, we choose to add test inputs that fail to generate a new path to the corpus so that they participate in subsequent mutations.