Person Re-Identification Microservice over Artificial Intelligence Internet of Things Edge Computing Gateway

Abstract

1. Introduction

2. Person Re-Identification Microservice over Edge Computing (EC) Concepts

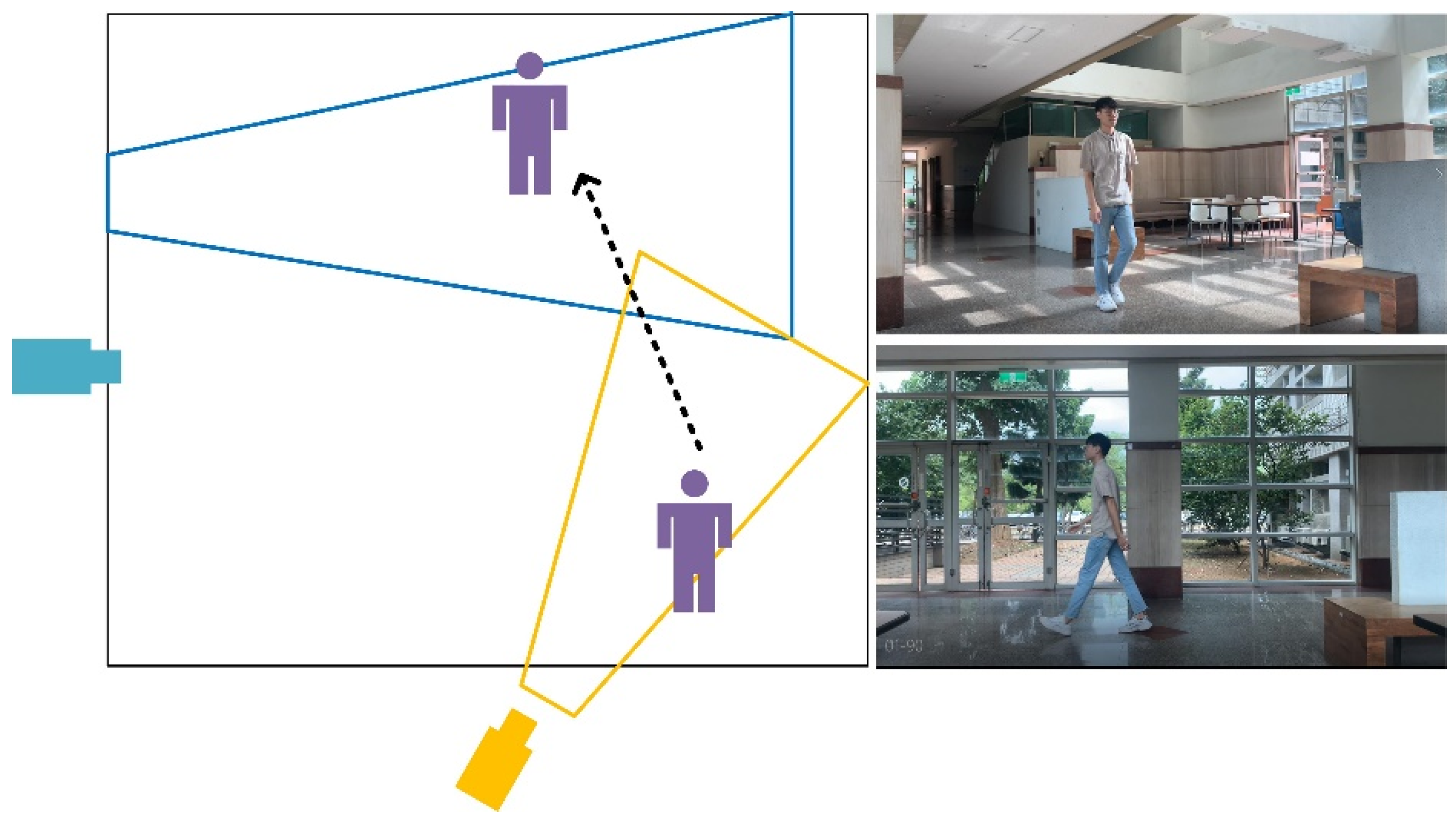

2.1. Person Re-Identification

1. Raw Data Collection: In addition to obtaining raw video data from different surveillance cameras, calibration or preliminary background filtering must be performed.

2. Bounding Box Generation: This step detects persons and extracts the bounding boxes that contain their images. Person pose detection technology can also be employed [15].

3. Training Data Annotation: Because images of the same person vary across cameras, cross-camera labels must be annotated for discriminative Re-ID model learning.

4. Model Training: This is the most extensively studied step of the Re-ID pipeline in the literature.

5. Pedestrian Retrieval: The learned Re-ID model is tested by retrieving matches for query images from an image gallery. Certain previous studies have also investigated ranking optimization to improve retrieval performance.
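The retrieval step can be illustrated with a minimal sketch, assuming each image has already been converted into a feature vector by the trained model. Gallery entries are ranked by cosine similarity to the query, and rank-k accuracy (one point of the cumulative matching characteristic, CMC, curve) counts how often the true identity appears in the top k results. The function names are hypothetical, and the sketch omits the usual benchmark rule that excludes same-camera matches of the query identity from the gallery.

```python
import math
from typing import List, Sequence, Tuple

Feature = Sequence[float]
Entry = Tuple[int, Feature]  # (person ID, feature vector)


def cosine_similarity(a: Feature, b: Feature) -> float:
    """Cosine similarity of two feature vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_gallery(query: Feature, gallery: List[Entry]) -> List[int]:
    """Person IDs of the gallery entries, ordered from most to least similar to the query."""
    ranked = sorted(gallery, key=lambda entry: cosine_similarity(query, entry[1]), reverse=True)
    return [pid for pid, _ in ranked]


def rank_k_accuracy(queries: List[Entry], gallery: List[Entry], k: int) -> float:
    """Share of queries whose true ID appears among the top-k results (CMC at rank k)."""
    hits = sum(1 for pid, feat in queries if pid in rank_gallery(feat, gallery)[:k])
    return hits / len(queries)
```

Ranking optimization methods such as re-ranking operate on the ordered list that `rank_gallery` returns.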

- (1) Heterogeneous Data: The data may come from different sources, such as infrared images, sketches, depth images, or text descriptions. The model and engine must therefore accept input data in multiple formats.

- (2) Bounding Box Generation from Videos or Images: Marking bounding boxes in videos requires more work than marking them in images.

- (3) Unavailable/Limited Labels: Real-world data often lack sufficient label annotations. Noisy annotations (i.e., incorrect labels) are another problem.

- (4) Real-world data might not have a dataset available for training.
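In an open-world setting the query person may not be in the gallery at all (the "Open-Set" case in the closed-world/open-world comparison table). A common way to handle this, sketched below under the assumption that features are compared by Euclidean distance, is to reject the best match when its distance exceeds a threshold. The threshold value and the function name are hypothetical; in practice the threshold would be tuned on validation data.

```python
import math
from typing import List, Optional, Sequence, Tuple

Entry = Tuple[int, Sequence[float]]  # (person ID, feature vector)


def match_or_reject(query: Sequence[float], gallery: List[Entry], threshold: float) -> Optional[int]:
    """Return the ID of the nearest gallery entry, or None when no entry is within threshold.

    Returning None models the open-set case in which the query person is not in the gallery.
    """
    if not gallery:
        return None
    best_id, best_feat = min(gallery, key=lambda entry: math.dist(query, entry[1]))
    return best_id if math.dist(query, best_feat) <= threshold else None
```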

- (1) Global feature representation learning: A global feature vector is extracted from the image of each person [16].

- (2) Local feature representation learning: Part- or region-aggregated features are learned. The body is divided into several small parts that are recognized individually to obtain a better recognition rate. This approach is sometimes combined with other local feature learning methods, such as human pose estimation or horizontally divided region features [17].

- (3) Auxiliary feature representation learning: Additional annotated information is exploited; for example, semantic attributes or samples generated by a generative adversarial network (GAN) are adopted for Re-ID feature representation learning [18].

- (4).
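The difference between global and horizontally divided local features can be sketched on a CNN feature map stored as nested lists indexed `[row][column][channel]`. The global feature averages every spatial cell, while the local variant splits the map into horizontal stripes (roughly head, torso, legs) and averages each stripe separately. This is an illustrative sketch in plain Python, not the implementation used in the cited works; `stripe_features` and `global_feature` are hypothetical names.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # indexed as [row][column][channel]


def stripe_features(fmap: FeatureMap, num_stripes: int) -> List[List[float]]:
    """Average-pool each of num_stripes horizontal bands into one vector per band."""
    height = len(fmap)
    if height % num_stripes:
        raise ValueError("feature-map height must be divisible by num_stripes")
    band = height // num_stripes
    channels = len(fmap[0][0])
    parts = []
    for s in range(num_stripes):
        # Collect every spatial cell that belongs to stripe s.
        cells = [cell for row in fmap[s * band:(s + 1) * band] for cell in row]
        parts.append([sum(c[k] for c in cells) / len(cells) for k in range(channels)])
    return parts


def global_feature(fmap: FeatureMap) -> List[float]:
    """Global average pooling over the whole map, i.e. the single-stripe case."""
    return stripe_features(fmap, 1)[0]
```

Concatenating the stripe vectors yields a longer descriptor that keeps coarse vertical body layout, which a single global vector discards.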

2.2. Omni-Scale Network

2.3. EC and the Fusion of Internet of Things (IoT), Artificial Intelligence (AI), and EC

- (1) Save bandwidth and cloud computing resources: Concentrating a large amount of data in the cloud requires either high bandwidth or a large data center at a certain location. Because bandwidth is a limited resource, it is reasonable to process data close to the edge. For example, placing a video streaming server close to a cellphone base station reduces backbone bandwidth usage.

- (2) Areas with no or limited internet access: Places such as rural villages may only have satellite connections, which can be disconnected in bad weather.

- (3) Data preprocessing: Data are processed at the edge before being uploaded to the cloud. For example, if the Re-ID features cannot be found in the database, only the feature data, without the image data, are uploaded to the cloud, which saves time and bandwidth.

- (4) Maintain privacy without transferring any data to the cloud: In certain situations, data can only be processed locally for privacy or security reasons, such as facial images or features of security personnel.

- (5) The resources of the entire cloud can be optimized by the edge computing nodes.
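The bandwidth argument in item (3) can be made concrete with a small sketch. It assumes a 512-dimensional float32 feature vector (the size of an OSNet-style embedding) and a 256 × 128 RGB person crop; both sizes are assumptions rather than values stated here. The text does not specify what happens when a local match is found, so the sketch assumes nothing is uploaded in that case.

```python
import struct
from typing import Optional, Sequence

FEATURE_DIM = 512            # assumption: embedding size, e.g. of an OSNet-style model
CROP_SHAPE = (256, 128, 3)   # assumption: height x width x RGB channels of a uint8 person crop


def upload_payload(feature: Sequence[float], found_in_local_db: bool) -> Optional[bytes]:
    """Bytes the edge node sends to the cloud for one detected person.

    The feature vector is serialized as little-endian float32; the image never leaves the edge.
    Uploading nothing when a local match exists is an assumption of this sketch.
    """
    if found_in_local_db:
        return None
    return struct.pack(f"<{len(feature)}f", *feature)


raw_crop_bytes = CROP_SHAPE[0] * CROP_SHAPE[1] * CROP_SHAPE[2]  # uncompressed image crop
feature_bytes = FEATURE_DIM * struct.calcsize("<f")             # 4 bytes per float32 value
```

Under these assumptions a raw crop occupies 98,304 bytes and the feature 2,048 bytes, a 48-fold reduction; compressed JPEG crops would narrow that gap, so the actual saving depends on the image encoding.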

2.4. Microservice Architecture and Container Technology

1. Namespaces: Namespaces isolate and virtualize system resources for a collection of processes. They can isolate processes, users, file systems, and other components and control which components are visible, which enhances a user's confidence in the container [44].

2. CGroups: Control groups (cgroups) account for and limit resource usage, such as CPU time, system memory, input/output (I/O), and network bandwidth. Limiting resource usage also helps prevent denial-of-service attacks between containers.

3. Capabilities: Traditionally, Linux implements a binary dichotomy between root and non-root processes. Capabilities divide root privileges into distinct units that can be granted individually; for example, a non-root application (e.g., nginx) can be allowed to bind to a privileged port (e.g., Transmission Control Protocol (TCP) port 80), which otherwise requires root authority.

4. Seccomp: Seccomp is a Linux kernel feature that filters the system calls a process can make to the kernel, which reduces the attack surface exposed through system calls.
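The effect of capabilities and seccomp can be inspected from inside a running container, because Linux reports them in `/proc/self/status`: `CapEff` is a hexadecimal bit mask of effective capabilities, and `Seccomp` is 0 (disabled), 1 (strict), or 2 (filter). The sketch below parses that text and checks bit 10, `CAP_NET_BIND_SERVICE`, which is the capability that lets a non-root nginx bind to port 80. The helper names are hypothetical; the parser takes a string so it can be tested on any platform.

```python
import os
from typing import Dict

CAP_NET_BIND_SERVICE = 10  # capability bit number from <linux/capability.h>
SECCOMP_MODES = {0: "disabled", 1: "strict", 2: "filter"}


def parse_status(text: str) -> Dict[str, str]:
    """Turn 'Key:\tvalue' lines from /proc/<pid>/status into a dictionary."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def has_capability(status: Dict[str, str], cap_bit: int) -> bool:
    """True if the given capability bit is set in the effective capability mask."""
    return bool((int(status["CapEff"], 16) >> cap_bit) & 1)


def seccomp_mode(status: Dict[str, str]) -> str:
    """Human-readable seccomp mode; a missing field is treated as disabled."""
    return SECCOMP_MODES.get(int(status.get("Seccomp", "0")), "unknown")


if __name__ == "__main__" and os.path.exists("/proc/self/status"):
    with open("/proc/self/status") as f:
        status = parse_status(f.read())
    print("CAP_NET_BIND_SERVICE:", has_capability(status, CAP_NET_BIND_SERVICE))
    print("seccomp mode:", seccomp_mode(status))
```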

1. Provision of high-availability and system backup services: The relatively harsh AIoT environment requires such services. Different amounts of hardware or functions can be used for different environments and applications; for example, a high-availability system can be built for applications that require multiple backups, and researchers can focus on important jobs, such as system monitoring or analysis.

2. Fast update deployment/zero downtime: The microservice design allows the system to update an application without stopping the service; the switch to the new version takes place after the update has been completed in the background.

3. Container: AIoT environments or applications require different libraries and setups. Containers make it possible to run each of these applications in its own environment.

4. Suitable for technology diversity: AIoT solutions come from different hardware and software vendors. Vendors can package their software solutions in containers and simply expose an API to their customers, which avoids certain library integration issues.

5. Machine-to-machine communication: Both microservices and AIoT systems require low-level machine-to-machine protocols for communication. AIoT objects typically exchange data through an API or another mechanism, and microservices implement several APIs for communication, especially through fast API frameworks. Thus, AIoT applications can rely on fast API frameworks for easy deployment to the microservice architecture.
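Zero-downtime updates are usually achieved with a rolling update: replicas are replaced a few at a time so that the rest keep serving. Kubernetes Deployments expose this through the `RollingUpdate` strategy and its `maxUnavailable` parameter. The sketch below is a simplified model of that idea, not the Kubernetes controller itself; it ignores `maxSurge` and readiness probes and assumes each new replica is ready before the next batch starts.

```python
from typing import List, Tuple


def rolling_update(replicas: List[str], new_version: str,
                   max_unavailable: int = 1) -> Tuple[List[str], List[int]]:
    """Return (final replica versions, number of replicas still serving during each batch)."""
    if max_unavailable < 1 or max_unavailable >= len(replicas):
        raise ValueError("max_unavailable must leave at least one replica serving")
    current = list(replicas)
    serving_during_batch = []
    for start in range(0, len(current), max_unavailable):
        batch = range(start, min(start + max_unavailable, len(current)))
        # Replicas in this batch are drained; all others keep handling requests.
        serving_during_batch.append(len(current) - len(batch))
        for i in batch:
            current[i] = new_version  # assumed ready before the next batch begins
    return current, serving_during_batch
```

With four inference replicas and `max_unavailable=1`, three replicas remain available throughout the update, which is what allows the service to keep running.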

3. Person Re-Identification AI IoT (AIoT) EC Gateway

- AI Accelerated Layer

- Application (Tracking Recognition) Layer

- Tracking Layer

- Camera Communication Layer

- Command

- Real-time People Re-ID Collection

- Monitor/Control

- Decision Support

- This architecture runs on embedded hardware, such as ARM64 systems.

- This architecture must have sufficient AI hardware resources to perform Re-ID functions on EC; if requirements change, it should be able to scale up and down easily.

- Additional AI hardware and software can be added flexibly to better support redundant systems.

- An AIoT protocol that transmits images efficiently and provides high-efficiency load balancing is implemented.
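One way to satisfy the "scale up and down easily" requirement on Kubernetes is the Horizontal Pod Autoscaler, whose documented rule is `desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric)`, skipped when the ratio is within a tolerance (0.1 by default). The text does not state that this autoscaler was used, so the sketch below is an assumption; the metric (for example requests per second per pod) and the cap of four replicas, one per Jetson TX2 worker, are hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 4,
                     tolerance: float = 0.1) -> int:
    """Replica count suggested by the Horizontal Pod Autoscaler formula, clamped to bounds."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid flapping
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For instance, two replicas each seeing 90 requests/s against a target of 50 would be scaled to `ceil(2 × 1.8) = 4`.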

3.1. Torchreid

- Data: The DataManager class includes the training and test data loaders that are responsible for loading and classifying data, sampling, and data augmentation. DataManager can also support multiple datasets as the training data.

- Engine: It is designed as a streamlined pipeline for training and evaluating deep Re-ID models. Based on this engine, the authors of Torchreid implemented the softmax loss and triplet loss learning paradigms. A visualization toolkit is also provided for visualizing the ranking results of a Re-ID CNN and its activation maps.

- Model: The model class provides multipurpose CNN architectures, which include common Re-ID models such as ResNet50.
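The triplet loss paradigm mentioned for the Engine pulls features of the same identity together and pushes different identities apart by at least a margin. Torchreid's version mines hard examples within each batch; the plain-Python sketch below follows that batch-hard idea for illustration only and is not Torchreid's code. The margin of 0.3 is an illustrative value.

```python
import math
from typing import List, Sequence


def batch_hard_triplet_loss(features: List[Sequence[float]], labels: List[int],
                            margin: float = 0.3) -> float:
    """Mean over anchors of max(0, d(anchor, hardest pos) - d(anchor, hardest neg) + margin)."""
    n = len(features)
    dist = [[math.dist(features[i], features[j]) for j in range(n)] for i in range(n)]
    total = 0.0
    for i in range(n):
        # Hardest positive: the farthest sample sharing the anchor's identity.
        hardest_pos = max(dist[i][j] for j in range(n) if labels[j] == labels[i])
        # Hardest negative: the closest sample with a different identity.
        negatives = [dist[i][j] for j in range(n) if labels[j] != labels[i]]
        if not negatives:
            raise ValueError("each batch must contain at least two identities")
        total += max(0.0, hardest_pos - min(negatives) + margin)
    return total / n
```

Well-separated identities give a loss of zero, while overlapping ones produce a positive loss whose gradient (in a real framework) moves the features apart.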

3.2. Kubernetes

- etcd cluster: a simple, distributed key-value store used to store cluster data;

- kube-apiserver: central management server that receives requests for pods, services, and other modifications;

- kube-scheduler: assigns pods to the various nodes according to resource utilization;

- kubelet: the main service on each worker node; it receives new or modified pod specifications and ensures that pods are healthy and running in the desired state;

- kube-proxy: a proxy service that runs on each worker node to expose services to the outside world;

- pod: a set of containers that should be controlled as a single application.
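A Kubernetes configuration file of the kind used in the deployment steps can be written in YAML or JSON. The sketch below builds a hypothetical Deployment for the Re-ID inference pods as a Python dictionary and prints it as JSON. The image name, labels, replica count, and port 50051 are assumptions; `kubernetes.io/arch` is the standard node label, and the `linkerd.io/inject` annotation asks Linkerd2 to add its proxy to each pod.

```python
import json


def reid_deployment(image: str, replicas: int = 4, port: int = 50051) -> dict:
    """Hypothetical Deployment manifest for the Re-ID inference service."""
    labels = {"app": "reid-inference"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "reid-inference"},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},  # must match the pod template labels
            "template": {
                "metadata": {
                    "labels": labels,
                    # Let Linkerd2 inject its sidecar proxy so gRPC calls are balanced per request.
                    "annotations": {"linkerd.io/inject": "enabled"},
                },
                "spec": {
                    # Restrict scheduling to ARM64 nodes; targeting only the Jetson boards
                    # would need an additional, custom node label.
                    "nodeSelector": {"kubernetes.io/arch": "arm64"},
                    "containers": [{
                        "name": "reid",
                        "image": image,
                        "ports": [{"containerPort": port, "name": "grpc"}],
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(reid_deployment("registry.example.com/reid-inference:arm64"), indent=2))
```

The printed JSON can be saved to a file and applied with `kubectl apply -f`, after which the kubelet on each selected node starts the pods.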

3.3. gRPC and Protobuf

3.4. Linkerd2 and Load Balancing

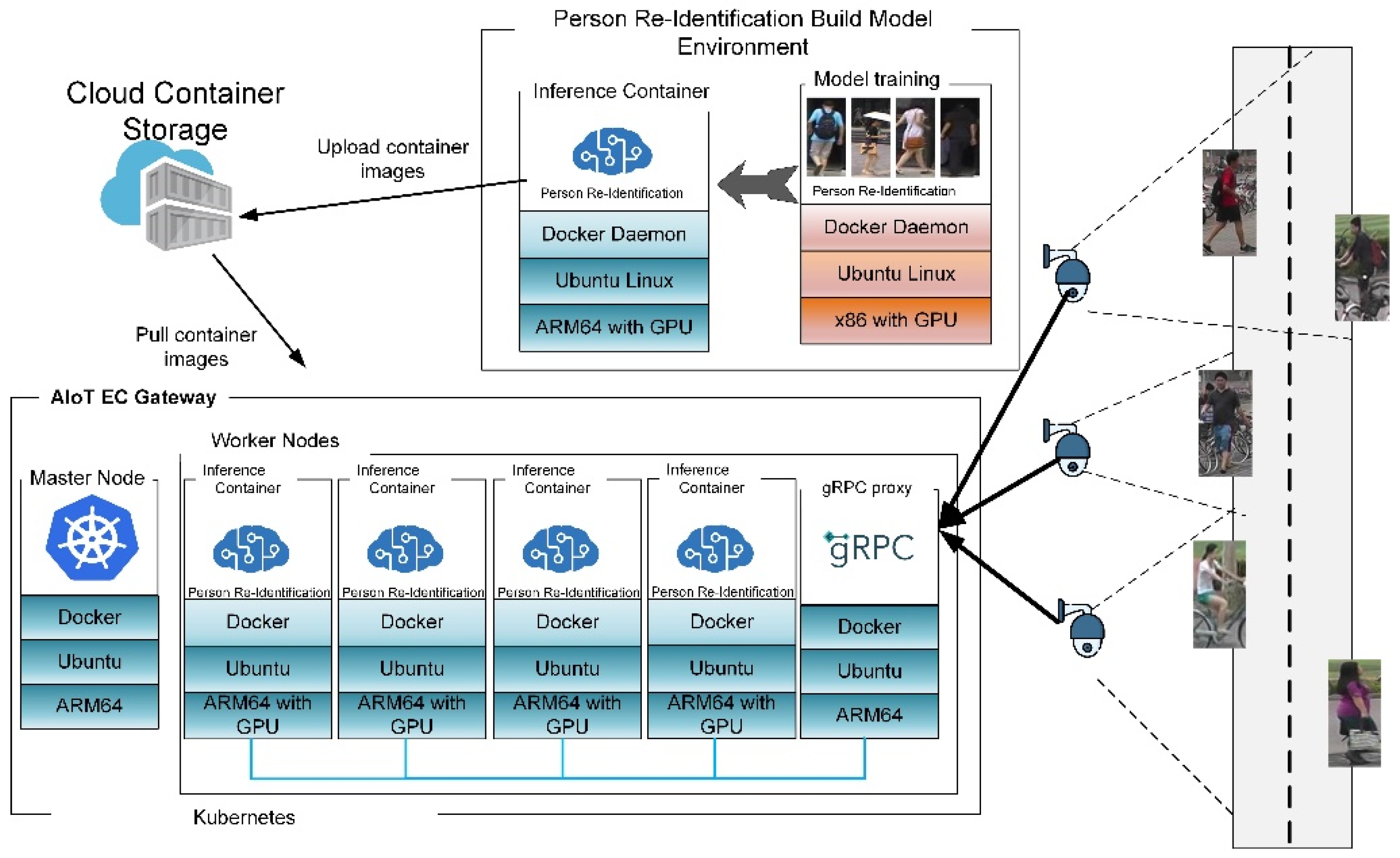
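Request-level balancing matters for gRPC because HTTP/2 multiplexes many requests over one long-lived TCP connection; connection-level balancing by kube-proxy therefore tends to send all of a client's requests to a single pod. Linkerd2 balances individual requests, and its documentation describes a latency-aware policy based on an exponentially weighted moving average (EWMA) of response times. The sketch below combines an EWMA with a "power of two choices" pick; the fixed smoothing factor and the cost formula (latency multiplied by outstanding requests plus one) are simplifying assumptions, not Linkerd2's exact algorithm.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class Endpoint:
    name: str
    ewma_latency_ms: float
    in_flight: int = 0

    def cost(self) -> float:
        """Estimated cost of sending one more request to this endpoint."""
        return self.ewma_latency_ms * (self.in_flight + 1)

    def observe(self, latency_ms: float, alpha: float = 0.3) -> None:
        """Blend a new latency sample into the moving average."""
        self.ewma_latency_ms = alpha * latency_ms + (1 - alpha) * self.ewma_latency_ms


def pick_p2c(endpoints: List[Endpoint], rng: random.Random) -> Endpoint:
    """Sample two distinct endpoints at random and route to the cheaper one."""
    a, b = rng.sample(endpoints, 2)
    return a if a.cost() <= b.cost() else b
```

Comparing only two random candidates keeps each decision cheap while still steering traffic away from slow or busy Re-ID inference pods.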

4. System Validation

1. Train the model, generate the feature data, and develop the inference software on the x86 hardware; this stage produces the model and the feature data.

2. Build and test the container images for person Re-ID and the gRPC proxy (nginx) on the ARM64 platform. The person Re-ID container includes the model and feature data from step 1. This step verifies that the person Re-ID container works correctly.

3. Upload the container images to cloud container storage, which is generally Docker Hub.

4. Prepare the Kubernetes configuration file and load it; the Kubernetes system then automatically downloads the containers and deploys them to their designated places in the system.

5. A real environment would use multiple cameras, but their load is difficult to control when measuring performance. Therefore, we use one x86 PC to simulate multiple cameras and measure the performance of the whole system. During testing, the PC sends multiple images to the gRPC proxy, which forwards the requests to the Re-ID inference servers.
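Step 5 can be approximated with a thread per simulated camera, each sending frames sequentially while the threads run concurrently. The sketch below measures overall throughput; `fake_send` is a hypothetical stand-in that sleeps instead of issuing a real gRPC call to the proxy, and all names are illustrative.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple

SendFn = Callable[[int, int], str]  # (camera_id, frame_id) -> reply


def fake_send(camera_id: int, frame_id: int) -> str:
    """Hypothetical stand-in for one gRPC call to the proxy; sleeps to mimic latency."""
    time.sleep(0.001)
    return f"cam{camera_id}-frame{frame_id}"


def simulate_cameras(send: SendFn, num_cameras: int,
                     frames_per_camera: int) -> Tuple[List[str], float]:
    """Run one thread per simulated camera; return all replies and throughput (requests/s)."""

    def camera_loop(camera_id: int) -> List[str]:
        # Each simulated camera sends its frames one after another, like a real stream.
        return [send(camera_id, frame) for frame in range(frames_per_camera)]

    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=num_cameras) as pool:
        per_camera = list(pool.map(camera_loop, range(num_cameras)))
    elapsed = time.perf_counter() - start
    replies = [reply for camera in per_camera for reply in camera]
    return replies, len(replies) / elapsed
```

Replacing `fake_send` with a real client call lets the same harness vary the number of simulated cameras and observe how throughput scales with the number of inference pods.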

4.1. AIoT EC Gateway Hardware Architecture

1. Raspberry Pi 4 (RPi4): The RPi4 is a popular embedded system that can run Kubernetes. It is powered by a quad-core 64-bit Cortex-A72 (ARMv8) processor and has 4 GB of RAM.

2. NVIDIA Jetson TX2: Four Jetson TX2 devices were used as Kubernetes worker nodes with an AI hardware engine. Each is powered by a quad-core ARMv8 A57 processor and a dual-core ARMv8 Denver processor, with 8 GB of integrated RAM and a 256-core Pascal GPU clocked at up to 1.3 GHz. The Jetson TX2 consumes at most 15 W and offers several power-efficiency modes, which makes it suitable as an embedded AI platform or for AIoT EC gateway applications [4].

- Container infrastructure: Kubernetes is primarily used to help manage containers and Re-ID services.

- AIoT communication protocol: gRPC, a high-efficiency, low-bandwidth protocol based on Protobuf, is used.

- Proxy server: nginx, a high-performance HTTP and reverse proxy server, is used as the gRPC proxy.

- Protocol-based load balancer: Linkerd2 is a service mesh that also performs HTTP/2 load balancing.

- AI container software: PyTorch, a popular machine learning framework based on the Torch library, was used to develop the software running in the AI containers and serves as the underlying AI Accelerated layer.
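Part of Protobuf's efficiency comes from its compact binary wire format: each field is written as a varint key `(field_number << 3) | wire_type` followed by its value, and strings and bytes use wire type 2 (length-delimited). The sketch below hand-encodes a hypothetical request message, `message ReIdRequest { string camera_id = 1; bytes image = 2; }`, to show the layout; a real service would use generated gRPC/Protobuf code instead.

```python
def encode_varint(value: int) -> bytes:
    """Protobuf base-128 varint: 7 bits per byte, low group first, high bit marks continuation."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)
        else:
            out.append(byte)
            return bytes(out)


def encode_length_delimited(field_number: int, payload: bytes) -> bytes:
    """Key (wire type 2), then payload length as a varint, then the raw payload."""
    key = (field_number << 3) | 2
    return encode_varint(key) + encode_varint(len(payload)) + payload


def encode_reid_request(camera_id: str, image: bytes) -> bytes:
    """Encode the hypothetical ReIdRequest message field by field."""
    return encode_length_delimited(1, camera_id.encode("utf-8")) + encode_length_delimited(2, image)
```

Apart from the image bytes themselves, the framing overhead is only a few bytes per field, which suits the bandwidth-constrained links between cameras and the gateway.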

4.2. Hardware Environment

- Two RPi4 devices: one serves as the Kubernetes master node and the other as the proxy server.

- Four Jetson TX2 devices: these were used as Kubernetes worker nodes with an AI-accelerated engine; by default, they run in Max-N mode.

- ASUS VC65 i5 x86_64: This was used to simulate AIoT device clients that can send simultaneous connections/requests.

- Intel Core i7-8700 CPU with an NVIDIA GTX 1080 Ti GPU and 32 GB of RAM: This machine was used to simulate the EC server that trains the Re-ID model and creates the database for AIoT EC gateway inference. It was also used to run an inference test to compare its performance with that of the AIoT EC gateway.

4.3. Experimental Evaluation and Power Consumption

4.4. x86 and AIoT EC Gateway Performance Comparison

1. Power consumption: According to Tables 2 and 3, the power consumption of the x86 GPU alone is approximately equal to that of the entire four-GPU gateway. If only three GPUs are used for comparison, the x86 system and the AIoT EC gateway consume 48 W and 36.5 W, respectively, even though the x86 figure counts only the GPU and excludes the rest of the system (e.g., CPU, disk, and DRAM).

2. Performance and flexibility: An x86 system can only use a specific GPU model, so its performance is fixed: excess performance is wasted, and insufficient performance requires replacing the hardware. The AIoT EC gateway, in contrast, can flexibly adjust the number of server devices to meet demand. The same architecture also meets the requirements of embedded systems, so there is no need to consider separate hardware, which reduces the cost of the embedded system.

3. Overall, comparing the AIoT EC gateway with the x86 system demonstrates the gateway's flexibility and performance advantages. In addition, the AIoT EC gateway has lower power consumption and produces less heat, so the system can occupy less space than the x86 system. These are additional benefits of migrating the system to the AIoT EC gateway.
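The power comparison can be extended to energy efficiency by dividing throughput by power. The sketch below does this for the rows of Table 3, assuming its "Inference Image Number (S)" column is throughput in images per second; the values are copied from the table and the function names are hypothetical.

```python
from typing import List, Tuple

# (configuration, throughput in images/s, power in W) copied from Table 3.
TABLE3 = [
    ("Jetson TX2 Max-N", 15.06, 10.42),
    ("Jetson TX2 Max-P", 14.43, 9.77),
    ("Jetson TX2 Max-P Core All", 13.76, 7.78),
    ("Jetson TX2 Max-Q", 12.38, 6.46),
    ("x86 + GTX 1080 Ti (GPU power only)", 36.70, 48.0),
]


def images_per_joule(images_per_second: float, watts: float) -> float:
    """Energy efficiency: (images/s) / (J/s) = images per joule."""
    return images_per_second / watts


def ranked_efficiency(rows=TABLE3) -> List[Tuple[str, float]]:
    """Configurations sorted from most to least energy-efficient."""
    scored = [(name, images_per_joule(ips, watts)) for name, ips, watts in rows]
    return sorted(scored, key=lambda item: item[1], reverse=True)


for name, eff in ranked_efficiency():
    print(f"{name:<36} {eff:.2f} images/J")
```

Under this reading, Max-Q mode processes about 1.92 images per joule versus about 0.76 for the x86 GPU, and the x86 value is an upper bound because CPU, disk, and DRAM power were excluded. Raw throughput still favors the x86 machine (36.70 vs. 15.06 images/s for a single TX2 in Max-N mode), which is why several TX2 boards are combined in the gateway.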

4.5. Architecture Performance Test

4.6. Alternative Model for Re-ID Performance Test

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Katz, J.S. AIoT: Thoughts on Artificial Intelligence and the Internet of Things. Available online: https://iot.ieee.org/conferences-events/wf-iot-2014-videos/56-newsletter/july-2019.html (accessed on 15 June 2021).

- Chen, C.-H.; Lin, M.-Y.; Liu, C.-C. Edge computing gateway of the industrial internet of things using multiple collaborative microcontrollers. IEEE Netw. 2018, 32, 24–32.

- Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge computing: Vision and challenges. IEEE Internet Things J. 2016, 3, 637–646.

- Blanco-Filgueira, B.; García-Lesta, D.; Fernández-Sanjurjo, M.; Brea, V.M.; López, P. Deep learning-based multiple object visual tracking on embedded system for IoT and mobile edge computing applications. IEEE Internet Things J. 2019, 6, 5423–5431.

- Kristiani, E.; Yang, C.-T.; Huang, C.-Y.; Ko, P.-C.; Fathoni, H. On construction of sensors, edge, and cloud (ISEC) framework for smart system integration and applications. IEEE Internet Things J. 2020, 8, 309–319.

- Chen, C.-H.; Liu, C.-T. A 3.5-tier container-based edge computing architecture. Comput. Electr. Eng. 2021, 93, 107227.

- Gheissari, N.; Sebastian, T.B.; Hartley, R. Person Reidentification Using Spatiotemporal Appearance. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17–22 June 2006; IEEE: Manhattan, NY, USA, 2006; pp. 1528–1535.

- Ye, M.; Shen, J.; Lin, G.; Xiang, T.; Shao, L.; Hoi, S.C. Deep learning for person re-identification: A survey and outlook. IEEE Trans. Pattern Anal. Mach. Intell. 2021.

- Bedagkar-Gala, A.; Shah, S.K. A survey of approaches and trends in person re-identification. Image Vis. Comput. 2014, 32, 270–286.

- Wang, X.; Doretto, G.; Sebastian, T.; Rittscher, J.; Tu, P. Shape and Appearance Context Modeling. In Proceedings of the 2007 IEEE 11th International Conference on Computer Vision, Rio de Janeiro, Brazil, 14–21 October 2007; IEEE: Manhattan, NY, USA, 2007; pp. 1–8.

- Javed, O.; Shafique, K.; Shah, M. Appearance Modeling for Tracking in Multiple Non-Overlapping Cameras. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; IEEE: Manhattan, NY, USA, 2005; pp. 26–33.

- Yi, D.; Lei, Z.; Liao, S.; Li, S.Z. Deep Metric Learning for Person Re-Identification. In Proceedings of the 2014 22nd International Conference on Pattern Recognition, Stockholm, Sweden, 24–28 August 2014; IEEE: Manhattan, NY, USA, 2014; pp. 34–39.

- Zheng, Z.; Zheng, L.; Yang, Y. A discriminatively learned cnn embedding for person reidentification. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2017, 14, 1–20.

- Cheng, D.; Gong, Y.; Zhou, S.; Wang, J.; Zheng, N. Person Re-Identification by Multi-Channel Parts-Based Cnn with Improved Triplet Loss Function. In Proceedings of the IEEE conference on computer vision and pattern recognition, Las Vegas, NV, USA, 27–30 June 2016; IEEE: Manhattan, NY, USA, 2016; pp. 1335–1344.

- Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.-E.; Sheikh, Y. OpenPose: Realtime multi-person 2D pose estimation using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 172–186.

- Zheng, L.; Zhang, H.; Sun, S.; Chandraker, M.; Yang, Y.; Tian, Q. Person Re-Identification in the Wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; IEEE: Manhattan, NY, USA, 2017; pp. 1367–1376.

- Yao, H.; Zhang, S.; Hong, R.; Zhang, Y.; Xu, C.; Tian, Q. Deep representation learning with part loss for person re-identification. IEEE Trans. Image Process. 2019, 28, 2860–2871.

- Lin, Y.; Zheng, L.; Zheng, Z.; Wu, Y.; Hu, Z.; Yan, C.; Yang, Y. Improving person re-identification by attribute and identity learning. Pattern Recognit. 2019, 95, 151–161.

- Dai, J.; Zhang, P.; Wang, D.; Lu, H.; Wang, H. Video person re-identification by temporal residual learning. IEEE Trans. Image Process. 2018, 28, 1366–1377.

- Wang, C.-Y.; Chen, P.-Y.; Chen, M.-C.; Hsieh, J.-W.; Liao, H.-Y.M. Real-Time Video-Based Person Re-Identification Surveillance with Light-Weight Deep Convolutional Networks. In Proceedings of the 2019 16th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Taipei, Taiwan, 18–21 September 2019; IEEE: Manhattan, NY, USA, 2019; pp. 1–8.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; IEEE: Manhattan, NY, USA, 2016; pp. 770–778.

- Wang, Y.; Chen, Z.; Wu, F.; Wang, G. Person Re-Identification with Cascaded Pairwise Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; IEEE: Manhattan, NY, USA, 2018; pp. 1470–1478.

- Chang, X.; Hospedales, T.M.; Xiang, T. Multi-Level Factorisation Net for Person Re-Identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; IEEE: Manhattan, NY, USA, 2018; pp. 2109–2118.

- Zhou, K.; Yang, Y.; Cavallaro, A.; Xiang, T. Omni-Scale Feature Learning for Person Re-Identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; IEEE: Manhattan, NY, USA, 2019; pp. 3702–3712.

- Bai, S.; Tang, P.; Torr, P.H.; Latecki, L.J. Re-Ranking Via Metric Fusion for Object Retrieval and Person Re-Identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; IEEE: Manhattan, NY, USA, 2019; pp. 740–749.

- Ye, M.; Liang, C.; Yu, Y.; Wang, Z.; Leng, Q.; Xiao, C.; Chen, J.; Hu, R. Person reidentification via ranking aggregation of similarity pulling and dissimilarity pushing. IEEE Trans. Multimed. 2016, 18, 2553–2566.

- Zheng, L.; Shen, L.; Tian, L.; Wang, S.; Wang, J.; Tian, Q. Scalable Person Re-Identification: A benchmark. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; IEEE: Manhattan, NY, USA, 2015; pp. 1116–1124.

- Satyanarayanan, M. The emergence of edge computing. Computer 2017, 50, 30–39.

- Li, H.; Ota, K.; Dong, M. Learning IoT in edge: Deep learning for the Internet of Things with edge computing. IEEE Netw. 2018, 32, 96–101.

- Pham, H.-T.; Nguyen, M.-A.; Sun, C.-C. AIoT Solution Survey and Comparison in Machine Learning on Low-cost Microcontroller. In Proceedings of the 2019 International Symposium on Intelligent Signal Processing and Communication Systems (ISPACS), Taipei, Taiwan, 3–6 December 2019; IEEE: Manhattan, NY, USA, 2019; pp. 1–2.

- Tran, T.X.; Hajisami, A.; Pandey, P.; Pompili, D. Collaborative mobile edge computing in 5G networks: New paradigms, scenarios, and challenges. IEEE Commun. Mag. 2017, 55, 54–61.

- Omoniwa, B.; Hussain, R.; Javed, M.A.; Bouk, S.H.; Malik, S.A. Fog/edge computing-based IoT (FECIoT): Architecture, applications, and research issues. IEEE Internet Things J. 2018, 6, 4118–4149.

- Chen, X.; Pu, L.; Gao, L.; Wu, W.; Wu, D. Exploiting massive D2D collaboration for energy-efficient mobile edge computing. IEEE Wirel. Commun. 2017, 24, 64–71.

- Gong, C.; Lin, F.; Gong, X.; Lu, Y. Intelligent cooperative edge computing in internet of things. IEEE Internet Things J. 2020, 7, 9372–9382.

- Chiu, T.-C.; Shih, Y.-Y.; Pang, A.-C.; Wang, C.-S.; Weng, W.; Chou, C.-T. Semisupervised Distributed Learning With Non-IID Data for AIoT Service Platform. IEEE Internet Things J. 2020, 7, 9266–9277.

- Thönes, J. Microservices. IEEE Softw. 2015, 32, 116.

- Zimmermann, O. Microservices tenets. Comput. Sci.-Res. Dev. 2017, 32, 301–310.

- Anderson, C. Docker [Software Engineering]. Available online: https://www.computer.org/csdl/magazine/so/2015/03/mso2015030102/13rRUy2YLWr (accessed on 14 May 2021).

- Morabito, R.; Kjällman, J.; Komu, M. Hypervisors Vs. Lightweight Virtualization: A Performance Comparison. In Proceedings of the 2015 IEEE International Conference on Cloud Engineering, Tempe, AZ, USA, 9–13 March 2015; IEEE: Manhattan, NY, USA, 2015; pp. 386–393.

- Joy, A.M. Performance Comparison between Linux Containers and Virtual Machines. In Proceedings of the 2015 International Conference on Advances in Computer Engineering and Applications, Ghaziabad, India, 19–20 March 2015; IEEE: Manhattan, NY, USA, 2015; pp. 342–346.

- Felter, W.; Ferreira, A.; Rajamony, R.; Rubio, J. An Updated Performance Comparison of Virtual Machines and Linux Containers. In Proceedings of the 2015 IEEE International Symposium on Performance Analysis of Systems and Software (ISPASS), Philadelphia, PA, USA, 29–31 March 2015; IEEE: Manhattan, NY, USA, 2015; pp. 171–172.

- Salah, T.; Zemerly, M.J.; Yeun, C.Y.; Al-Qutayri, M.; Al-Hammadi, Y. Performance Comparison between Container-Based and VM-Based Services. In Proceedings of the 2017 20th Conference on Innovations in Clouds, Internet and Networks (ICIN), Paris, France, 7–9 March 2017; IEEE: Manhattan, NY, USA, 2017; pp. 185–190.

- Sultan, S.; Ahmad, I.; Dimitriou, T. Container security: Issues, challenges, and the road ahead. IEEE Access 2019, 7, 52976–52996.

- Chandramouli, R.; Chandramouli, R. Security Assurance Requirements for Linux Application Container Deployments; US Department of Commerce, National Institute of Standards and Technology: Washington, DC, USA, 2017.

- Yu, G.; Chen, P.; Zheng, Z. Microscaler: Cost-effective scaling for microservice applications in the cloud with an online learning approach. IEEE Trans. Cloud Comput. 2020.

- Taherizadeh, S.; Stankovski, V.; Grobelnik, M. A capillary computing architecture for dynamic internet of things: Orchestration of microservices from edge devices to fog and cloud providers. Sensors 2018, 18, 2938.

- Chen, L. Microservices: Architecting for Continuous Delivery and Devops. In Proceedings of the 2018 IEEE International Conference on Software Architecture (ICSA), Seattle, WA, USA, 30 April–4 May 2018; IEEE: Manhattan, NY, USA, 2018; pp. 39–397.

- Debauche, O.; Mahmoudi, S.; Mahmoudi, S.A.; Manneback, P.; Lebeau, F. A new edge architecture for ai-iot services deployment. Procedia Comput. Sci. 2020, 175, 10–19.

- Fernandez, J.-M.; Vidal, I.; Valera, F. Enabling the orchestration of IoT slices through edge and cloud microservice platforms. Sensors 2019, 19, 2980.

- Li, J.; Cai, J.; Khan, F.; Rehman, A.U.; Balasubramaniam, V.; Sun, J.; Venu, P. A secured framework for sdn-based edge computing in IOT-enabled healthcare system. IEEE Access 2020, 8, 135479–135490.

- Zhou, K.; Xiang, T. Torchreid: A library for deep learning person re-identification in pytorch. arXiv 2019, arXiv:1910.10093.

- Menouer, T. KCSS: Kubernetes container scheduling strategy. J. Supercomput. 2021, 77, 4267–4293.

- Dizdarević, J.; Carpio, F.; Jukan, A.; Masip-Bruin, X. A survey of communication protocols for internet of things and related challenges of fog and cloud computing integration. ACM Comput. Surv. (CSUR) 2019, 51, 1–29.

- de Saxcé, H.; Oprescu, I.; Chen, Y. Is HTTP/2 Really Faster than HTTP/1.1? In Proceedings of the 2015 IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), Hong Kong, China, 26 April–1 May 2015; IEEE: Manhattan, NY, USA, 2015; pp. 293–299.

- Williams, M.; Benfield, C.; Warner, B.; Zadka, M.; Mitchell, D.; Samuel, K.; Tardy, P. Twisted and HTTP/2. In Expert Twisted; Springer: Berlin/Heidelberg, Germany, 2019; pp. 339–363.

| Closed-World | Open-World |
|---|---|
| Single-modality Data | Heterogeneous Data |
| Bounding Box Generation | Raw Images/Videos |
| Sufficient Annotated Data | Unavailable/Limited Labels |
| Correct Annotation | Noisy Annotation |
| Query Exists in Gallery | Open-Set |
| Less Computing/AI Resources | More Computing/AI Resources |

| Measurement Item | Raspberry Pi 4 | Jetson TX2 | Entire System |
|---|---|---|---|
| Power Off on All Boards | - | - | 2.28 W |
| 4 × Jetson TX2 Standby | 3 W | 3 W | 19.64 W |
| 4 × Jetson TX2 Full-Load Operation | 3.3 W | 9.87 W | 47.07 W |

| Measurement Item | Inference Throughput (images/s) | Power Consumption (W) |
|---|---|---|
| Jetson TX2 Max-N Mode (Denver × 2 @ 2.0 GHz, ARM A57 × 4 @ 2.0 GHz, GPU @ 1.3 GHz) | 15.06 | 10.42 |
| Jetson TX2 Max-P Mode (ARM A57 × 4 @ 1.12 GHz, GPU @ 1.12 GHz) | 14.43 | 9.77 |
| Jetson TX2 Max-P Core All Mode (Denver × 2 @ 1.4 GHz, ARM A57 × 4 @ 1.4 GHz, GPU @ 1.12 GHz) | 13.76 | 7.78 |
| Jetson TX2 Max-Q Mode (ARM A57 × 4 @ 1.2 GHz, GPU @ 0.85 GHz) | 12.38 | 6.46 |
| x86 System with GTX 1080 Ti | 36.70 | 48 (GPU only) |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, C.-H.; Liu, C.-T. Person Re-Identification Microservice over Artificial Intelligence Internet of Things Edge Computing Gateway. Electronics 2021, 10, 2264. https://doi.org/10.3390/electronics10182264
