Human–Machine Interaction in Driving Assistant Systems for Semi-Autonomous Driving Vehicles

Abstract

1. Introduction

2. Related Work

3. Proposed Method

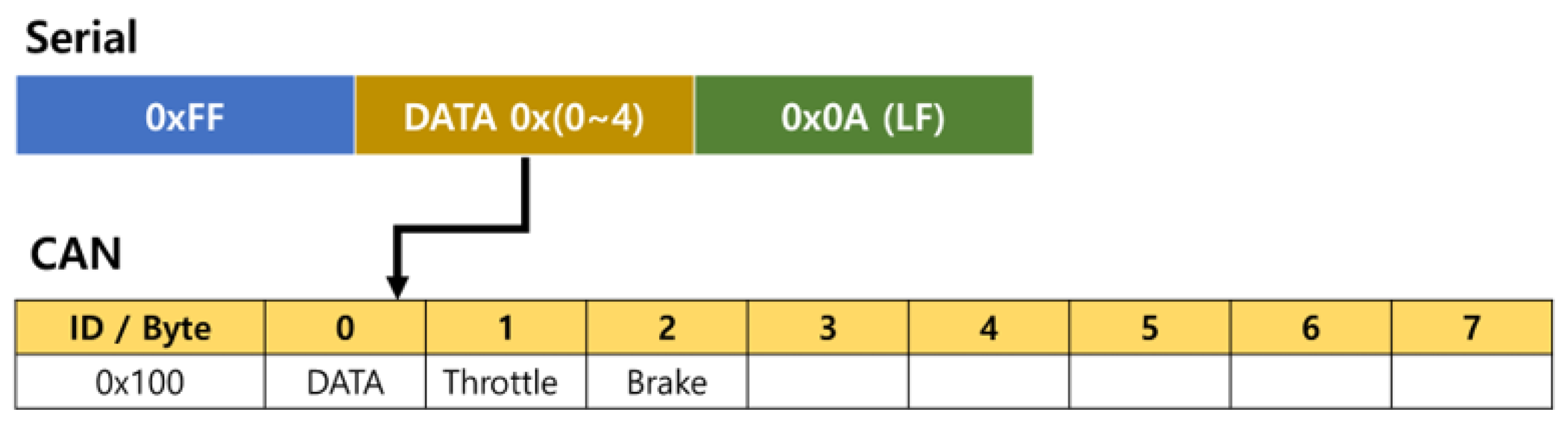

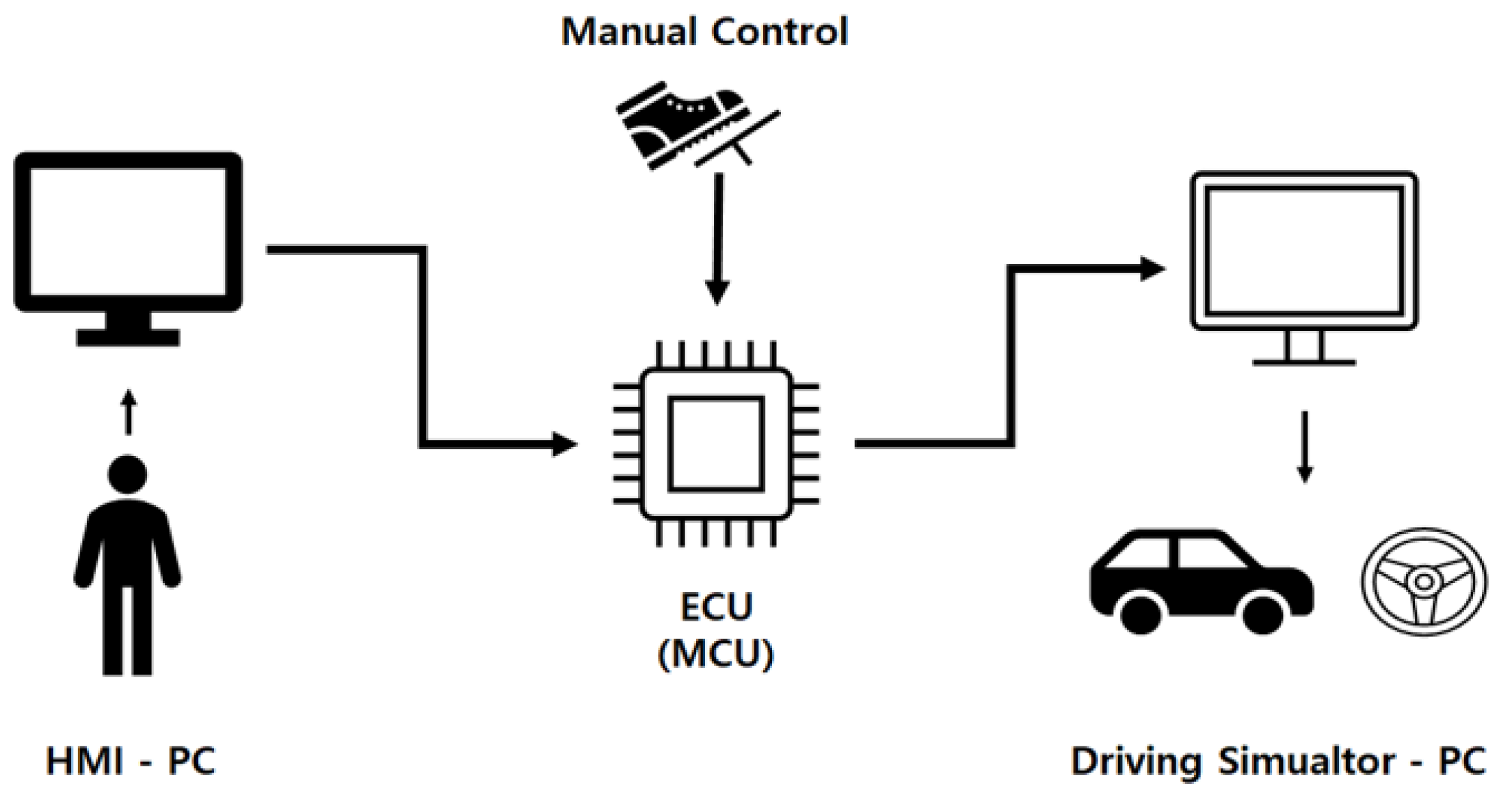

3.1. Semi-Autonomous Driving

3.2. Driver Assistance Systems

3.3. Human–Machine Interface Using Emotion Recognition

4. Experimental Results

4.1. Experimental Environment

4.2. Experimental Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

| Participants and Modalities | |
|---|---|
| Participants | Total 62 (males: 28 and females: 34) |
| Recorded signals | PPG (1 kHz), GSR (1 kHz) |
| Self-report | Arousal, valence |
| Session 1 | 5 videos (sad: 1, happy: 1, angry: 2, scared: 1) |
| PPG, GSR signals | |

| Device | Hardware | Software |
|---|---|---|
| Simulator PC | CPU: Intel® Core™ i5-7500; GPU: GeForce GTX 1080 8G; RAM: 32 GB; OS: Ubuntu 20.04.2 LTS | CARLA Simulator 0.9.10; ROS Noetic |
| HMI PC | CPU: Intel® Core™ i7-9700; GPU: GeForce GTX 2080TI 12G; RAM: 64 GB; OS: Windows 10 | Python 3.6.0; TensorFlow 2.4.0 |
| ECU (MCU) | Board: STM3240G-EVAL; Core: ARM® Cortex®-M4; Chip: STM32F407IGH6 | |

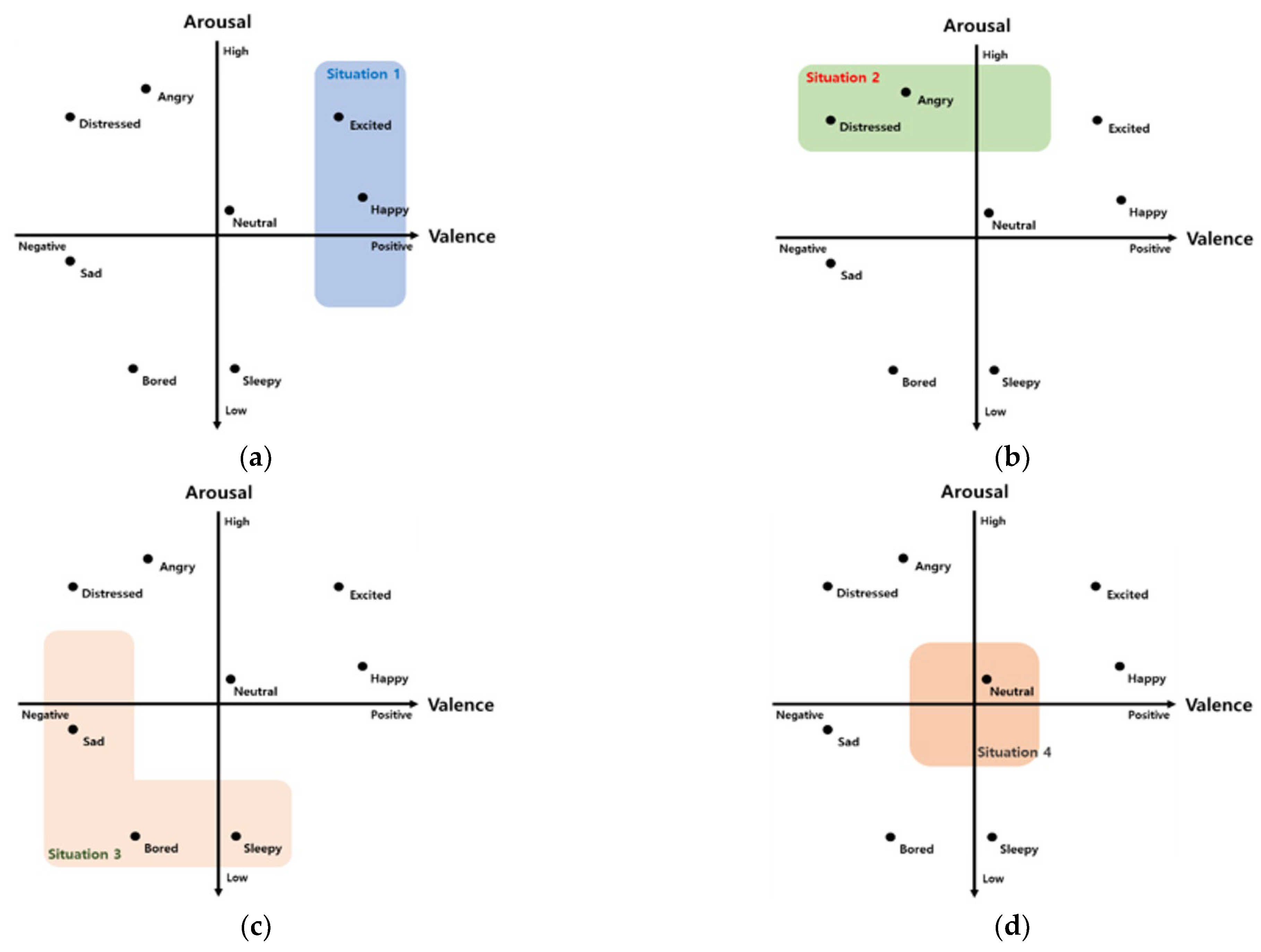

| Data | (a) Scenario 1 (speed perception ability) | (b) Scenario 2 (incident reaction ability) | (c) Scenario 3 (driving situation judgment ability) | (d) Scenario 4 (ability to drive normally) |
|---|---|---|---|---|
| Target | joy, happiness | angry, upset | tiredness, boredom | neutral feelings |
| Arousal | 33 to 100 | 33 to 100 | −100 to 33 | −33 to 33 |
| Valence | −33 to 100 | −100 to 33 | −100 to 33 | −33 to 33 |
| Total segments | 5400 segments in 1 pulse unit | 5400 segments in 1 pulse unit | 5400 segments in 1 pulse unit | 5400 segments in 1 pulse unit |
| Average accuracy (arousal) | 75% | 70% | 70% | 85% |
| Average accuracy (valence) | 72% | 82% | 84% | 70% |
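The arousal and valence ranges listed per scenario can be read as a lookup from a predicted (arousal, valence) pair to the scenario it targets. The following is a minimal sketch, assuming the ranges are inclusive and share one −100 to 100 scale; the names `SCENARIO_RANGES` and `matching_scenarios` are hypothetical and not taken from the paper. Because the listed ranges overlap, the function returns every matching scenario rather than a single label.

```python
from typing import Dict, List, Tuple

Range = Tuple[int, int]

# (arousal range, valence range) per scenario, copied from the table above.
# Treating the bounds as inclusive is an assumption of this sketch.
SCENARIO_RANGES: Dict[str, Tuple[Range, Range]] = {
    "Scenario 1": ((33, 100), (-33, 100)),    # joy, happiness
    "Scenario 2": ((33, 100), (-100, 33)),    # angry, upset
    "Scenario 3": ((-100, 33), (-100, 33)),   # tiredness, boredom
    "Scenario 4": ((-33, 33), (-33, 33)),     # neutral feelings
}


def matching_scenarios(arousal: float, valence: float) -> List[str]:
    """Return every scenario whose arousal and valence ranges contain the point."""
    matches = []
    for name, ((a_lo, a_hi), (v_lo, v_hi)) in SCENARIO_RANGES.items():
        if a_lo <= arousal <= a_hi and v_lo <= valence <= v_hi:
            matches.append(name)
    return matches


if __name__ == "__main__":
    print(matching_scenarios(80, 80))    # high arousal, positive valence
    print(matching_scenarios(0, 0))      # a point where listed ranges overlap
```

A system that needs exactly one label per sample would have to add a tie-breaking rule for the overlapping regions; the table alone does not specify one.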

| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Scenario 1 | 319 ms | 342 ms | 291 ms | 321 ms | 387 ms | 345 ms | 340 ms | 384 ms | 389 ms | 307 ms | 342.5 ms |
| | 332 ms | 356 ms | 315 ms | 280 ms | 323 ms | 334 ms | 319 ms | 358 ms | 348 ms | 326 ms | 329.1 ms |
| Scenario 2 | 352 ms | 376 ms | 354 ms | 410 ms | 307 ms | 362 ms | 329 ms | 338 ms | 368 ms | 383 ms | 357.9 ms |
| | 326 ms | 375 ms | 389 ms | 360 ms | 340 ms | 376 ms | 394 ms | 386 ms | 389 ms | 305 ms | 364 ms |
| Scenario 3 | 359 ms | 334 ms | 364 ms | 298 ms | 360 ms | 375 ms | 368 ms | 312 ms | 360 ms | 355 ms | 348.5 ms |
| | 387 ms | 307 ms | 370 ms | 319 ms | 332 ms | 354 ms | 385 ms | 358 ms | 323 ms | 303 ms | 343.8 ms |
| Scenario 4 | 398 ms | 389 ms | 362 ms | 378 ms | 308 ms | 356 ms | 381 ms | 343 ms | 396 ms | 355 ms | 366.6 ms |
| | 376 ms | 379 ms | 337 ms | 344 ms | 378 ms | 356 ms | 343 ms | 364 ms | 394 ms | 345 ms | 361.6 ms |

| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Scenario 1 | 62.7 ms | 68.1 ms | 60.7 ms | 72.4 ms | 63.4 ms | 68.4 ms | 62.1 ms | 61.8 ms | 64.8 ms | 71.8 ms | 65.62 ms |
| Scenario 2 | 64.6 ms | 66.4 ms | 64.6 ms | 73.1 ms | 65.6 ms | 73.5 ms | 68.4 ms | 66.1 ms | 60.1 ms | 70.3 ms | 67.27 ms |
| Scenario 3 | 67.1 ms | 65.8 ms | 61.2 ms | 68.5 ms | 64.7 ms | 61.3 ms | 74.2 ms | 65.4 ms | 60.9 ms | 73.1 ms | 66.22 ms |
| Scenario 4 | 63.5 ms | 67.1 ms | 70.2 ms | 64.3 ms | 64.9 ms | 61.2 ms | 68.9 ms | 60.3 ms | 60.8 ms | 72.7 ms | 65.39 ms |
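The Avg column of the table above appears to be the arithmetic mean of the ten per-trial values in each row. The sketch below recomputes it from the listed values so the column can be checked; the names `trials_ms` and `row_average` are illustrative only, and rounding to two decimals is an assumption made to match the precision shown in the table.

```python
from statistics import mean

# Per-trial values in milliseconds, copied from the table above.
trials_ms = {
    "Scenario 1": [62.7, 68.1, 60.7, 72.4, 63.4, 68.4, 62.1, 61.8, 64.8, 71.8],
    "Scenario 2": [64.6, 66.4, 64.6, 73.1, 65.6, 73.5, 68.4, 66.1, 60.1, 70.3],
    "Scenario 3": [67.1, 65.8, 61.2, 68.5, 64.7, 61.3, 74.2, 65.4, 60.9, 73.1],
    "Scenario 4": [63.5, 67.1, 70.2, 64.3, 64.9, 61.2, 68.9, 60.3, 60.8, 72.7],
}


def row_average(values):
    """Arithmetic mean of one table row, rounded to two decimals."""
    return round(mean(values), 2)


# Recomputed Avg column, keyed by scenario name.
averages = {name: row_average(values) for name, values in trials_ms.items()}

if __name__ == "__main__":
    for name, avg in averages.items():
        print(f"{name}: {avg} ms")
```

Under these assumptions the recomputed means agree with the reported Avg values of 65.62, 67.27, 66.22, and 65.39 ms.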

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lee, H.-G.; Kang, D.-H.; Kim, D.-H. Human–Machine Interaction in Driving Assistant Systems for Semi-Autonomous Driving Vehicles. Electronics 2021, 10, 2405. https://doi.org/10.3390/electronics10192405