1. Introduction

Spaceborne synthetic aperture radar (SAR) is a high-resolution microwave imaging technology that offers all-time, all-weather operation and a long detection distance. It uses the Doppler information generated by the relative motion between the radar platform and the detected target, together with signal processing methods, to synthesize a larger antenna aperture [

1,

2,

3]. As it can penetrate clouds, soil and vegetation, it has become more and more widely used in many important fields [

4,

5,

6]. Recent publications have reviewed the applications of satellite remote sensing techniques for hazards manifested by solid earth processes, including earthquakes, volcanoes, floods, landslides, and coastal inundation [

7,

8,

9].

The world’s first SAR imaging satellite, SEASAT-1, was launched in 1978, proving the feasibility of microwave imaging radar in Earth observation [

10]. Since then, many countries have scrambled to carry out spaceborne SAR research, and it has been used as an important means of Earth observation in recent years. For example, the Gaofen-3 satellite launched by China in 2016 is a C-band multi-polarization SAR satellite that can quickly detect and assess marine disasters, and it could improve China’s disaster prevention and mitigation capabilities [

11]. The Sentinel-1B satellite launched by the European Space Agency (ESA) in 2016 and the previously launched Sentinel-1A satellite belong to the Sentinel-1 SAR satellite mission [

12]. Its purpose is to provide an independent operational capability for continuous radar mapping of the Earth with enhanced revisit frequency, coverage, timeliness and reliability for operational services and applications requiring long time series. In 2019, SpaceX successfully launched the RADARSAT Constellation Mission (RCM) with a Falcon 9 rocket from Vandenberg Air Force Base in the United States [

13]. The mission has a wide range of applications, including ice and iceberg monitoring, marine wind measurement, oil pollution monitoring and response, and ship detection. In recent years, natural disasters have occurred in many places, and many countries have raised their performance requirements for SAR satellites. Future spaceborne SAR will develop toward multi-band, high-resolution and ultra-wide-swath operation. The National Aeronautics and Space Administration (NASA) plans to launch the NASA-Indian Space Research Organization (ISRO) Synthetic Aperture Radar (NISAR) mission satellite in 2022 [

14]. It will be the first satellite to use two different frequencies (L-band and S-band) to measure changes in the Earth’s surface of less than 1 cm. The German Aerospace Agency plans to launch TerraSAR-NG satellites in 2025, which can achieve a resolution of 0.25 m [

15,

16].

Most of the above-mentioned missions place high demands on the real-time performance of SAR data processing. Traditionally, the satellite stores the raw data and sends them to ground equipment for processing. As the resolution and latency requirements for spaceborne SAR continue to tighten, this approach can no longer meet these needs. In recent years, the rapid development of large-scale integrated circuit technology has made on-board real-time processing of spaceborne SAR possible. This technology can greatly reduce the pressure of satellite-to-ground data transmission, improve the efficiency of information acquisition, and enable policymakers to plan and respond quickly. Spaceborne SAR real-time processing has become a research focus in the field of aerospace remote sensing. In 2010, the ESA funded the National Aerospace Laboratory of the Netherlands to develop the next-generation multi-mode SAR On-board Payload Data Processor (OPDP) [

17]. The processor consists of a radiation-tolerant LEON2-FT processing chip, an FFTC ASIC chip and Synchronous Dynamic Random-Access Memory (SDRAM) protected by error detection and correction (EDAC), and can complete 1K × 1K granularity SAR imaging processing. It will be applied to the ESA EASCOREH2O mission, the BIOMASS mission and the panelSAR satellite. In 2016, the California Institute of Technology (CIT) completed an Unmanned Aerial Vehicle (UAV) SAR real-time processing system using a single Xilinx Virtex-5 space-grade Field-Programmable Gate Array (FPGA) chip [

18]. The system is the first that applies space-grade FPGA to SAR real-time signal processing. It provides a basis for the use of space-grade FPGA in spaceborne SAR on-board real-time processing scenarios. SpaceCube is a series of space processors developed by NASA in the United States. SpaceCube established a hybrid processing approach combining radiation-hardened and commercial components while emphasizing a novel architecture harmonizing the best capabilities of Central Processing Unit (CPU), Digital Signal Processor (DSP) and FPGA [

19]. The latest SpaceCube v3.0 processing board launched in 2019 is equipped with Xilinx Kintex UltraScale, Xilinx Zynq MPSoC, Double-Data-Rate Three Synchronous Dynamic Random-Access Memory (DDR3 SDRAM) and NAND Flash chips, which can complete on-board real-time data processing missions, and provide a processing solution for next-generation needs in both science and defense missions [

20]. In 2020, NASA’s Goddard Space Flight Center developed a prototype design for the NASA SpaceCube intelligent multi-purpose system (IMPS) to serve high-performance processing needs related to artificial intelligence (AI), on-board science data processing, communication and navigation, and cyber security applications [

21,

22].

As indicated by the development of spaceborne SAR real-time processing technology, a high-performance on-board real-time processing platform needs processors and memories that satisfy strict constraints on power, performance and capacity. CPU, DSP, FPGA, Graphics Processing Unit (GPU) and Application Specific Integrated Circuit (ASIC) each have strengths for real-time processing. Although CPU and DSP offer strong design flexibility, they cannot provide enough computing resources and become bottlenecks in massive data processing. GPU has strong processing capabilities, but its high power consumption makes it unsuitable for on-board scenarios. ASIC can be customized and has sufficient processing power, but its development time is long. FPGA offers advantages in on-chip storage resources, computing capability and reconfigurability, and can meet the requirements of large throughput and real-time processing under spaceborne conditions [

23,

24]. The on-chip storage resources of the above-mentioned processors are very limited, which cannot meet the requirements for massive data processing in the SAR algorithm. Taking Stripmap SAR imaging of 16,384 × 16,384 granularity raw data (5 m resolution) as an example, the required data storage space is up to 2 GB [

25]. Therefore, a high-speed, large-capacity external memory must be selected as the storage medium for SAR data. Hard Disk Drives (HDDs) have a higher storage capacity, but their stability is limited. Solid State Drives (SSDs) have strong stability, but a shorter lifetime. Flash memory is a non-volatile form of memory that can store data for a long time, but it has low data transmission efficiency and high power consumption. DDR3 SDRAM is a new type of memory chip developed on the basis of SDRAM chips [

26]. It has double data transmission rate and meets the requirements of on-board processing in terms of capacity, speed, volume, and power consumption [

27,

28,

29]. In summary, FPGA and DDR3 SDRAM are ideal processor and memory formats for on-board data processing.
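As a quick check of the 2 GB figure quoted above, assume each sample is a complex number stored as two single-precision floating-point values (8 bytes), which is the data format stated in the experimental settings:

$$16{,}384 \times 16{,}384 \times 8\ \mathrm{B} = 2^{14} \times 2^{14} \times 2^{3}\ \mathrm{B} = 2^{31}\ \mathrm{B} = 2\ \mathrm{GiB}$$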

Due to the frequent cross-row access in DDR3, the matrix transposition operation has become a bottleneck restricting SAR real-time imaging. In recent years, researchers have proposed a variety of hardware implementation methods for matrix transposition in SAR real-time systems. Berkin et al. [

30] optimized the memory access method for multi-dimensional FFT by using block data layout schemes. However, the method was optimized only for the multi-dimensional FFT algorithm and was not suitable for spaceborne SAR algorithms. Mario et al. [

31] optimized the matrix transposition in a continuous flow in a hardware system. However, the method was not suitable for non-square matrices. Yang et al. [

32] used the matrix-block linear mapping method to improve the data access bandwidth. However, the data controller in this method requires 8 cache RAMs, which occupies more on-chip storage resources. Li et al. [

33] made the range and azimuth data bandwidth reach complete equilibrium by using matrix-block cross mapping method. However, there is only one data channel in this method, which is less flexible. Sun et al. [

34] designed a dual-channel memory controller and reduced the use of cache resources. However, the data access efficiency in this method is low.

In order to solve the problem of inefficient matrix transposition due to the data transfer characteristics between FPGA and DDR3 SDRAM, an efficient dual-channel data storage and access method for spaceborne SAR real-time processing is proposed in this paper. First, we analyze the mapping method of the Chirp Scaling (CS) algorithm and find that the main efficiency bottleneck of the system occurs in the matrix transposition operation, owing to the activation and precharge time of the DDR3 SDRAM chip. Then, we propose an optimized storage scheme that achieves complete equilibrium of data bandwidth in range and azimuth. On this basis, a dual-channel pipeline access method is adopted to ensure a high data access bandwidth. In addition, we propose a hardware architecture to maintain high hardware efficiency. The main contributions of this paper are summarized as follows:

The reading and writing efficiency of the conventional matrix transposition method was modeled and calculated using the time parameters of DDR3, and we found that the cross-row read and write efficiency was reduced due to the active and precharge time in DDR3.

An optimized dual-channel data storage and access method is proposed. The matrix block three-dimensional mapping method is used to achieve bandwidth equilibrium between range access and azimuth access, the sub-matrix data cross-mapping is used to realize the efficient use of cache Random-Access Memories (RAMs), and the dual-channel pipeline processing is used to improve the processing efficiency.

An efficient hardware architecture is proposed to implement the above method. In this architecture, a register manager module is used to control the work mode, and an optimized address update method is used to reduce the utilization of on-chip computing resources.

We verified the proposed hardware architecture in a processing board equipped with Xilinx XC7VX690T FPGA (Xilinx, San Jose, CA, USA) and Micron MT41K512M16 DDR3 SDRAM (Micron Technology, Boise, ID, USA), and evaluated the data bandwidth. The experimental results show that the read data bandwidth is 10.24 GB/s and the write data bandwidth is 8.57 GB/s, which is better than the existing implementations.

The rest of this paper is organized as follows. Section 2 introduces the data access efficiency of the SAR algorithm. In Section 3, the optimized dual-channel data storage and access method is introduced in detail. The proposed hardware architecture is illustrated in Section 4. The experiments and results are shown in Section 5. Finally, the conclusions are presented in Section 6.

3. Optimized Dual-Channel Data Storage and Access Method

The conventional SAR data storage method makes data access in the azimuth direction extremely inefficient, and the processing engine sits in a waiting state while the reading/writing operation is performed, thereby affecting the real-time performance of the entire system. To solve this problem, we propose an optimized dual-channel SAR data storage and access method, which maps the logical addresses in the SAR data matrix to the physical addresses in the DDR3 chip by using a new mapping method instead of the conventional linear mapping method. With this method, both the range direction and the azimuth direction can reach high data bandwidths, which is more suitable for the real-time processing of spaceborne SAR.

3.1. The Matrix Block Three-dimensional Mapping

The matrix block three-dimensional mapping method makes full use of the feature of cross-bank priority data access in DDR3 chips [

38]. First of all, the SAR raw data matrix is divided into several sub-matrices of equal size. Then, a three-dimensional mapping method is used to map the continuous sub-matrices to different rows in different banks [

32,

33,

34]. This method can maximize the equilibrium of the two-dimensional access bandwidth of the data matrix and meet the real-time requirements of the system.

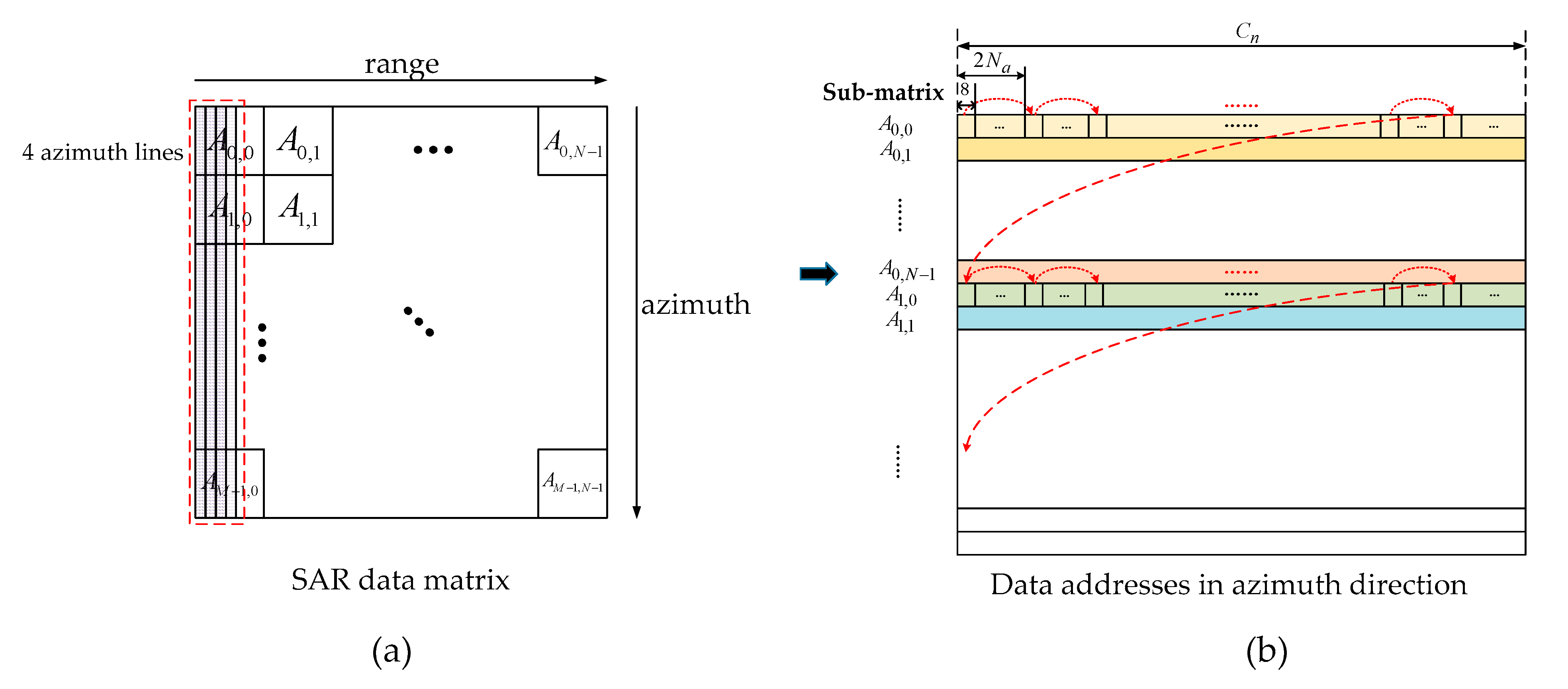

The SAR data matrix can be described as $A(x, y)$, $0 \le x < N_A$, $0 \le y < N_R$, where $N_A$ is the number of data in the azimuth direction of the matrix and $N_R$ is the number of data in the range direction of the matrix. As the SAR imaging algorithm requires multiple FFT operations, both $N_A$ and $N_R$ are positive integer powers of two.

The matrix is divided into $M \times N$ sub-matrices of the same shape. Each sub-matrix is denoted $A_{m,n}$, where $m$ and $n$ are the indices of the sub-matrix in the azimuth direction and range direction, as shown in Figure 6. The size of each sub-matrix is $N_a \times N_r$, thus $N_a = N_A / M$ and $N_r = N_R / N$. The data of one sub-matrix are mapped to one row in DDR3, so that $N_a \times N_r = C_n$, where $C_n$ is the number of columns in DDR3.

After the matrix is divided into various sub-matrices, each sub-matrix is mapped to a row of DDR3 through three-dimensional mapping method, as shown in

Figure 7. The three-dimensional mapping method maps consecutive sub-matrices to different rows in different banks by using the priority access feature of cross-bank data in DDR3, effectively improving data access efficiency [

38].
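The idea can be sketched in a few lines of Python. The exact mapping rule is not reproduced in this section, so the assignment below (bank = (m + n) mod 8, assuming eight banks) is an illustrative assumption, chosen only so that neighbouring sub-matrices in either direction fall in different banks. The names `submatrix_to_bank_row` and `NUM_BANKS` are hypothetical.

```python
NUM_BANKS = 8  # assumption: eight banks per DDR3 device


def submatrix_to_bank_row(m, n, n_sub_range):
    """Map sub-matrix (m, n) to a hypothetical (bank, row) pair.

    m, n        -- sub-matrix indices in the azimuth and range directions
    n_sub_range -- number of sub-matrices along range (multiple of NUM_BANKS)
    """
    # Moving one step in range OR in azimuth changes the bank, so the
    # next row activation can overlap with the current bank's precharge.
    bank = (m + n) % NUM_BANKS
    # Each group of NUM_BANKS range-adjacent sub-matrices shares one row index.
    row = m * (n_sub_range // NUM_BANKS) + n // NUM_BANKS
    return bank, row


# Example: a 16,384 x 16,384 matrix cut into 32 x 32 sub-matrices
# gives a 512 x 512 grid of sub-matrices.
GRID = 16384 // 32
print(submatrix_to_bank_row(0, 0, GRID))  # first sub-matrix
print(submatrix_to_bank_row(1, 0, GRID))  # azimuth neighbour, different bank
print(submatrix_to_bank_row(0, 1, GRID))  # range neighbour, different bank
```

Under this assumed rule every sub-matrix gets a distinct (bank, row) pair, and stepping along either dimension always switches banks, which is the property the three-dimensional mapping relies on.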

3.2. Sub-Matrix Cross-Storage Method

In the conventional method, the data in the sub-matrix are stored in a row in DDR3 using linear mapping. As DDR3 adopts the burst transmission mode, with a burst length of eight each read or write operation accesses eight consecutive memory cells in DDR3. Under linear mapping, the eight data accessed each time belong to the same range line and eight different azimuth lines. In the SAR imaging system, after the data are read, they are first cached in the on-chip RAMs, and then sent to the processing engine, such as an FFT processor, once an entire row or column of the SAR data matrix has been accessed. In range access mode, the eight data transmitted in a single burst belong to one range line, so only one RAM is needed for the cache. However, in azimuth access mode, since the eight data transmitted in a single burst belong to eight azimuth lines, eight RAMs are needed for data caching. Eight corresponding processing units must then be designed, which consumes more on-chip resources.

In this paper, we optimize the mapping of single burst transmission data by using the cross-storage method as shown in

Figure 8, which reduces the use of cache RAMs and on-chip computing resources. “Cross-storage” means that the data of two adjacent range lines are alternately mapped to one row of DDR3, rather than using the conventional linear mapping method. In the proposed method, the eight data in each burst transmission in DDR3 belong to two adjacent range lines and four adjacent azimuth lines. Therefore, only four RAMs are required for data caching, and the utilization of the RAMs in the range direction is 50% and, in the azimuth direction, 100%, which is significantly improved compared with the linear mapping method. In the SAR real-time imaging system, a reduction in cache resources means that more computing resources can work at the same time, giving the system higher parallel processing capabilities.
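A minimal sketch of such a cross-stored layout is given below. It is one arrangement consistent with the description (two adjacent range lines interleaved sample by sample), not necessarily the exact layout of Figure 8. The function names and the local indices `a` and `r` are hypothetical.

```python
BURST = 8  # DDR3 burst length used in the paper


def cross_column(a, r, n_r):
    """Column offset inside one DDR3 row for local sample (a, r).

    a   -- index of the range line inside the sub-matrix (azimuth position)
    r   -- index of the azimuth line inside the sub-matrix (range position)
    n_r -- samples per range line inside the sub-matrix (multiple of 4)
    """
    pair = a // 2  # which pair of adjacent range lines
    # Inside a pair, samples alternate between the two range lines.
    return pair * 2 * n_r + 2 * r + (a % 2)


def burst_contents(burst_index, n_a, n_r):
    """Return the (a, r) samples held by one aligned burst of eight columns."""
    lookup = {cross_column(a, r, n_r): (a, r)
              for a in range(n_a) for r in range(n_r)}
    first = burst_index * BURST
    return [lookup[c] for c in range(first, first + BURST)]


burst = burst_contents(0, 32, 32)
print(burst)
print("range lines:", sorted({a for a, _ in burst}))
print("azimuth lines:", sorted({r for _, r in burst}))
```

For a 32 × 32 sub-matrix, each burst in this layout touches two range lines and four azimuth lines, which is why four cache RAMs suffice in either access direction.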

According to the “cross-storage” method, the address mapping rule can be obtained. First, determine the sub-matrix

where the data logical address

is located:

where

,

.

Furthermore, the mapping rule between the logical address

and the physical storage address

is obtained:

where

i is the row address,

j is the column address,

k is the bank address, “

floor” means round down, and “mod” means take the remainder.

3.3. De-Cross Access and Caching Method

3.3.1. Range Data Access and Caching

In the range access mode, range lines are accessed one by one according to their order in the SAR data matrix. As the burst length is eight, each access can obtain data of two range lines. Since a sub-matrix with a size of $N_a \times N_r$ (azimuth by range samples) is mapped to a row of DDR3, there are $N_r$ data in each row of DDR3 on the same range line, and the data of two adjacent range lines are cross-stored in DDR3. When reading the data, read $4N_r$ consecutive data in a row of DDR3 (the $4N_r$ data belong to four consecutive range lines in the SAR data matrix), and then jump to the next row to read the remaining data on the four range lines until all data on these four lines have been read, as shown in Figure 9. When the range data are written back to DDR3 after being processed by the processing engine, the same address change method is used.

Since the data of two adjacent range lines are cross-stored in DDR3, among the eight data obtained by each burst transmission, two adjacent data belong to different range lines. When performing data caching, the burst data corresponding to the first two range lines need to be decross-mapped to RAM0 and RAM1, respectively, and the burst data corresponding to the next two range lines need to be decross-mapped to RAM2 and RAM3, respectively, as shown in Figure 10. As a result, the data storage order in the cache RAMs is consistent with the SAR data matrix, which is more convenient for the processing engine.

3.3.2. Azimuth Data Access and Caching

In the azimuth access mode, azimuth lines are accessed one by one according to their order in the SAR data matrix. As the burst length is eight, each burst transmission can obtain the data of four azimuth lines. Since a sub-matrix with a size of $N_a \times N_r$ (azimuth by range samples) is mapped to a row of DDR3, $N_a$ data in each row of DDR3 are on the same azimuth line, and the data of four adjacent azimuth lines are cross-stored in DDR3. When reading the data, read the $4N_a$ data in one row of DDR3 (the $4N_a$ data belong to the four consecutive azimuth lines in the SAR data matrix), and then jump to the next row to read the remaining data on these four azimuth lines until all data on these four lines have been read, as shown in

Figure 11. The distance between the addresses of the two adjacent burst transmissions of the same azimuth line is

columns. After reading a row, jump to the corresponding row to read the remaining data in this azimuth direction until the data of these four azimuth lines have been read. When the azimuth data are written back to DDR3 after being processed by the computing unit, the same address jump method is used.

Since the four adjacent azimuth lines are cross-stored in DDR3, among the eight data obtained by each burst transmission, each pair of adjacent data belongs to a different azimuth line. When performing data caching, the data need to be decross-mapped to RAM0, RAM1, RAM2 and RAM3, as shown in Figure 12. As a result, the data storage order in the cache RAMs is consistent with the SAR data matrix, which is convenient for the processing engine.
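The de-cross step for both access modes can be sketched as follows. The burst ordering assumed here (samples alternating between two range lines, so that each adjacent pair shares an azimuth line) follows the textual description. The helper names are hypothetical, and the RAM assignment is simplified to Python lists.

```python
def decross_range(burst):
    """Split one 8-sample burst into the two range lines it interleaves.

    Even positions belong to the first range line and odd positions to the
    second, so they go to two separate cache RAMs.
    """
    return burst[0::2], burst[1::2]


def decross_azimuth(burst):
    """Split one 8-sample burst into four azimuth lines (two samples each).

    Positions (0, 1) share an azimuth line, as do (2, 3), (4, 5) and (6, 7),
    so each pair goes to its own cache RAM (RAM0..RAM3).
    """
    return [burst[2 * k: 2 * k + 2] for k in range(4)]


# One burst written as (range-line index, azimuth-line index) labels.
burst = [(0, 0), (1, 0), (0, 1), (1, 1), (0, 2), (1, 2), (0, 3), (1, 3)]

ram0, ram1 = decross_range(burst)
print("range mode  :", ram0, ram1)
print("azimuth mode:", decross_azimuth(burst))
```

In range mode a burst fills two RAMs with four samples each, while in azimuth mode it fills four RAMs with two samples each. Four RAMs therefore cover both cases.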

Taking a 16,384 × 16,384 SAR matrix as an example, the size of the sub-matrix is 32 × 32. According to the above method, 128 data must be read in each row for range data access mode or for azimuth data access mode in DDR3. Thus, each row needs to complete 16 burst data transmissions, before proceeding to the next row. As the matrix block three-dimensional mapping method proposed in

Section 3.1 uses the priority access feature of cross-bank data in DDR3, there is no interval between the precharge command and the next active command, so there is no

in the efficiency calculation equations. The reading and writing efficiency when using the access method proposed in range access mode or in azimuth access mode can be calculated as follows:

From the comparison results in

Table 2, the method proposed can achieve complete equilibrium in terms of the two-dimensional data bandwidth. Although the range data efficiency is slightly reduced, the azimuth data reading and writing efficiencies are more than 6.7 and 7.8 times those of the conventional method, respectively. Thus, the azimuthal data access bandwidth is not a bottleneck restricting the real-time performance of the spaceborne SAR system in the proposed method.

3.4. Dual-Channel Pipeline Processing

With the continuous development of integrated circuit technology, the volume, power consumption, and reliability of memory chips have improved significantly. SAR imaging systems often have dual-channel memory units. “Dual-channel” means that two different data channels can be addressed and accessed separately without affecting each other. This section mainly discusses the dual-channel pipeline processing method.

The dual-channel pipeline processing method requires independent dual-channel DDR3 (hereinafter referred to as DDR3A and DDR3B), and a dual-channel cache RAM that matches the dual-channel DDR3. The schematic diagram of dual-channel pipeline processing is shown in

Figure 13; the four steps of this procedure are as follows:

Step 1: Store the SAR raw data in DDR3A using the mapping method proposed;

Step 2: Read the required data from DDR3A and save them to RAM for caching. Send data to the processing engine for calculation when the RAM is full; after the calculation is completed, the data are written into the RAM corresponding to DDR3B, and the next batch of data to be processed is read from DDR3A at the same time, so as to create a loop until the entire SAR data matrix is processed. The processing results are all stored in DDR3B;

Step 3: Read the required data from DDR3B and save them to the RAM for caching. Send data to the processing engine for calculation when the RAM is full; after the calculation is completed, the data are written into the RAM corresponding to DDR3A, and the next batch of data to be processed is read from DDR3B at the same time, so as to create a loop until the entire SAR data matrix is processed. The processing results are all stored in DDR3A;

Step 4: Repeat Step 2 and Step 3 until all calculations related to the SAR data matrix in the SAR algorithm flow are completed.

The dual-channel pipeline processing method has higher processing efficiency compared with the single-channel processing method. Assuming that the time for DDR3 to read a piece of data is , the time for writing a piece of data is , and the processing time for the processing engine to calculate a piece of data is . In the single-channel processing method, the data need to be written back to DDR3 after the calculation is completed, and then the next batch of data can be read. The total processing time of the entire data matrix is , where N is the number of batches of processed data. In dual-channel pipeline processing, the reading and writing of the two channels can be performed at the same time. At this time, the total processing time of the entire data matrix is , which is significantly improved compared with the single-channel processing method.
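The benefit can be illustrated with a simple timing model. This is a sketch under stated assumptions, not the paper's own expressions: the symbols `t_r`, `t_p` and `t_w` (read, processing and write time per batch) are hypothetical names, and the dual-channel model assumes only that writing batch i to one channel overlaps with reading batch i + 1 from the other, as described in Steps 2 and 3.

```python
def single_channel_time(n, t_r, t_p, t_w):
    """Total time when each batch is read, processed and written in sequence."""
    return n * (t_r + t_p + t_w)


def dual_channel_time(n, t_r, t_p, t_w):
    """Total time when the write of batch i overlaps the read of batch i + 1.

    Timeline: read(1), process(1), [write(1) || read(2)], process(2), ...,
    process(n), write(n).
    """
    overlapped = (n - 1) * max(t_r, t_w)  # n - 1 overlapped write/read phases
    return t_r + n * t_p + overlapped + t_w


# Hypothetical numbers in arbitrary time units.
n, t_r, t_p, t_w = 4, 2, 3, 2
print(single_channel_time(n, t_r, t_p, t_w))
print(dual_channel_time(n, t_r, t_p, t_w))
```

In this model the saving equals (n - 1) times the smaller of the read and write times, so the dual-channel scheme is never slower and the gain grows with the number of batches.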

4. Hardware Implementation

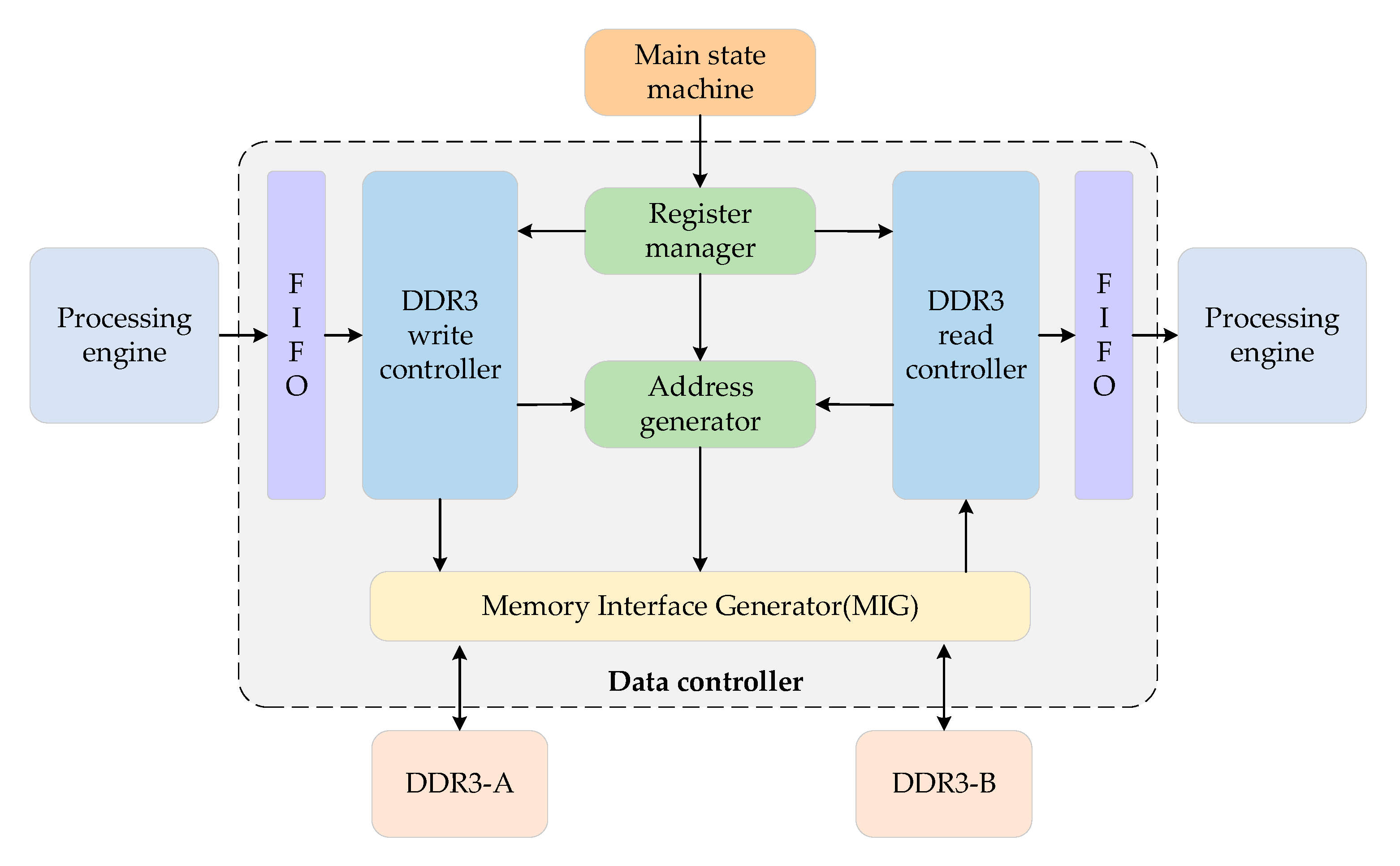

Based on the method proposed in

Section 3, this paper proposes a dual-channel data reading and writing controller, through which a two-dimensional read / write operation can be carried out from any position in the data matrix, and the bandwidth equilibrium of two-dimensional data access can be guaranteed to meet the efficient matrix transposition needs of SAR imaging. The overall structure of the controller, as shown in

Figure 14, consists of a register manager, an address generator, a DDR3 read/write module, and an input/output First-In-First-Out (FIFO) module. The controller invokes the Memory Interface Generator (MIG) IP core to control the dual-channel DDR3.

The data read from or written to the controller usually pass through the buffer RAM, so this side works under the system working clock sys_clk. The read or write operation between the controller and DDR3 is performed under the user clock ui_clk provided by the MIG IP core. Since sys_clk and ui_clk generally differ, a FIFO is needed for clock-domain crossing.

4.1. Register Manager

When using this controller to complete a read or write operation, four parameters need to be provided: the data access starting position, data access length, data access direction (range/azimuth), and data access mode (read/write). In the proposed controller, these parameters are held in a single 64-bit register, so that a read or write operation of any length and direction can start from any position. The specific definition of each bit of the register is shown in

Figure 15 and

Table 3:

4.2. Address Generator

The address generator generates the physical address (i.e., row address, column address and bank address) of the data through the address-mapping rules described in

Section 3 and outputs this address to the MIG IP core to complete the data reading/writing operation, as shown in

Figure 16. The calculation method for the address-mapping rules is very complicated: if each data address is calculated with the complete formula, a large delay is produced, which affects the real-time performance of the hardware system. To solve this problem, the address generator exploits the regular way in which physical addresses change across the matrix, so that address generation can be accomplished efficiently by simple judgment, addition and subtraction. The module consists of two sub-modules: the address initialization module and the address update module.

The address initialization module calculates the physical address of the starting position from the parameters provided in the register manager, and calculates the intermediate parameters required for subsequent address generation in the range or the azimuth working mode. The address update module includes a range direction address update module and an azimuth direction address update module. Based on the starting physical address calculated by the address initialization module and the required intermediate parameters, it continuously updates the physical address to be accessed and feeds it into the MIG IP core. Because the address calculation differs between the range direction and the azimuth direction, two corresponding sub-modules are needed. The process of address generation can be represented by pseudocode, as shown in Algorithm 1:

Algorithm 1. The address generation algorithm.

Address_initial: calculate row_addr, col_addr, bank_addr, subrange_cnt, subazimuth_cnt;

If mod=range, then

  Address_initial: calculate range_parameters;

Else if mod=azimuth, then

  Address_initial: calculate azimuth_parameters;

End if

If gen_en=1, then // gen_en is an address request signal

  If mod=range, then

    Address_update: update row_addr, col_addr, bank_addr; // range direction address update module

  Else if mod=azimuth, then

    Address_update: update row_addr, col_addr, bank_addr; // azimuth direction address update module

  End if

End if
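The split between a one-off initialization and a cheap incremental update can be sketched in Python for the range direction. The bank and row rule used here (bank = (m + n) mod 8, with the row increasing every eight sub-matrices) and the cross-stored column layout are illustrative assumptions, not the paper's exact formulas. The name `range_addresses` and its parameters are hypothetical.

```python
NUM_BANKS = 8  # assumption: eight banks
BURST = 8      # DDR3 burst length


def range_addresses(m, a_local, n_sub_range, n_r):
    """Yield (bank, row, col) for every burst of four adjacent range lines.

    m           -- azimuth index of the sub-matrix row being traversed
    a_local     -- first of the four range lines inside the sub-matrix
                   (multiple of 4)
    n_sub_range -- number of sub-matrices along range (multiple of NUM_BANKS)
    n_r         -- samples per range line inside one sub-matrix
    """
    # Address initialisation: done once, may use multiply and divide.
    bank = m % NUM_BANKS
    row = m * (n_sub_range // NUM_BANKS)
    col_start = (a_local // 2) * 2 * n_r
    col_end = col_start + 4 * n_r
    sub_cnt = 0  # sub-matrices visited since the last row increment

    # Address update: only comparisons, additions and resets from here on.
    for _ in range(n_sub_range):
        col = col_start
        while col < col_end:
            yield bank, row, col
            col += BURST
        bank += 1
        if bank == NUM_BANKS:
            bank = 0
        sub_cnt += 1
        if sub_cnt == NUM_BANKS:
            sub_cnt = 0
            row += 1


addresses = list(range_addresses(m=3, a_local=4, n_sub_range=16, n_r=32))
print(len(addresses), addresses[0], addresses[16])
```

The update loop needs no multipliers or dividers, which mirrors the motivation given above for keeping DSP resources free, although the real controller's counters and conditions may differ.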

4.3. DDR3 Read/Write Module

The DDR3 read/write module is connected to the register manager, the FIFO module and the address generator within the controller. First, the module determines the mode of data access (e.g., read/write, range/azimuth) by reading the data of each bit of the register from the register manager, and obtains relevant data parameters (e.g., starting coordinates, read/write length). Secondly, the module reads the data required for a burst transmission from the FIFO and obtains the corresponding address signal from the address generator. Finally, the dual-channel DDR3 data reading and writing is completed by establishing the correct MIG IP user interface timing. The module consists of two parts: the DDR3 write sub-module and the DDR3 read sub-module.

In the DDR3 write sub-module, the values in the register are constantly polled, and the write data process is initiated when the user issues a write data instruction. During the writing process, the write module obtains eight data from the input FIFO as a burst data transmission. After that, an address request signal is sent to the address generator to generate the write address. When the write address is output correctly, it is connected to the address signal of the MIG, and the control signal in the MIG is set up with the correct timing to perform the write operation. When all write operations are completed, a wr_over signal is sent to the register manager, the value of the register is zeroed, and the write process is completed.

In the DDR3 read sub-module, the values in the register are constantly polled, and the read data process is initiated when the user issues a read data instruction. In the read process, an address request signal is sent to the address generator, requiring a read address to be generated. When the read address is output correctly, it is connected to the address signal of the MIG and the control signal is set with the correct timing. When accepting a valid signal to read data from MIG, eight continuous data from MIG, which can be transmitted at one time, are written to the FIFO. When all read operations are completed, an rd_over signal is sent to the register manager, the value of the register is zeroed, and the read process is completed.

5. Experiments and Results

In this section, extensive experiments were conducted to evaluate the performance of the proposed optimized data storage and access method and hardware data controller. The evaluation experiments were divided into two parts. First, the hardware data controller based on the optimized dual-channel data mapping method proposed in

Section 4 is implemented in FPGA. Then, the processing performance of the SAR data matrix with the same granularity as the spaceborne conditions is tested. The experimental settings and detailed experimental results are described in the following subsections.

5.1. Experimental Settings

In order to verify the efficiency of the method proposed in this paper, we implemented a data controller based on a Xilinx Virtex-7 FPGA chip (Xilinx, San Jose, CA, USA). The hardware platform is a processing board equipped with a Xilinx XC7VX690T FPGA (Xilinx, San Jose, CA, USA) and two clusters of Micron MT41K512M16HA-125 DDR3 SDRAM (Micron Technology, Boise, ID, USA) chips. Each cluster of DDR3 SDRAM has a capacity of 8 GB and a bit width of 64 bit. A SAR data matrix with a granularity of 16,384 × 16,384 is used, and each datum is a complex number made up of two single-precision floating-point values (64 bit in total). Two independent data controllers are implemented in the FPGA, each connected to one cluster of DDR3.

The experiment was completed in the Vivado 2018.3 software with the Very-High-Speed Integrated Circuit Hardware Description Language (VHDL), and the Xilinx MIG v4.2 IP core (Xilinx, San Jose, CA, USA) was used. The parameters used in the experiment are as follows: the working clock of DDR3 is 800 MHz, the working clock of the data controller is 200 MHz, the working clock of the processing engine is 100 MHz, and the DDR3 burst transmission length is eight.

The experimental procedure is designed according to the standard CS algorithm. In the first step, the original data are stored in DDR3A by the FPGA in the range direction. The second step is to read data from DDR3A in the azimuth direction and, after caching, store them in DDR3B in the azimuth direction. The third step is to read data from DDR3B in the range direction and, after caching, write these data to DDR3A in the range direction. The fourth step is to read data from DDR3A in the azimuth direction. After completing all the above steps, we check whether the final read data are the same as the original data, and measure and record the data bandwidth of each step.

5.2. Hardware Resource Utilization

Through the synthesis and implementation steps in Vivado 2018.3, the resource utilization of the data controller in the FPGA can be obtained. The results are shown in Table 4.

The table shows the utilization of the on-chip LUT, register, RAM and DSP resources. The total number of available resources refers to the Xilinx XC7VX690T FPGA, and the resource utilization (%) is the number of each resource used divided by the total number available. It can be seen that the controller occupies only a small fraction of the LUT and storage resources. Since the address generation process is optimized in the controller, an address update requires only comparison, shift, addition and subtraction operations and uses none of the DSP resources on the FPGA chip, so the DSP utilization is 0. This leaves more FPGA computing resources available for the system's processing engine, which can further improve the computing capability of the system.

5.3. Performance Evaluation of the Data Controller

We deployed the proposed hardware architecture on the FPGA to demonstrate its efficiency. The peak theoretical bandwidth of the DDR3 on the existing processing board, with a bit width of 64 bits and a working clock of 800 MHz, is:

$$B_{peak} = 800\ \text{MHz} \times 2 \times 64\ \text{bit} = 102.4\ \text{Gb/s} = 12.8\ \text{GB/s}$$

When considering the efficiency loss caused by row activation and precharging, the actual bandwidth is:

$$B = \eta \times B_{peak}$$

where $\eta$ is the efficiency.

We recorded the actual measured access bandwidth and included it in the above equation to calculate the access efficiency, providing the indicators listed in Table 5. Compared with the theoretical calculation results in Section 3.3.2, the experimental data bandwidths are slightly lower than the theoretical results due to the hardware communication overhead.
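The efficiency calculation described above can be sketched as follows. The function names are illustrative, and the measured bandwidth used in the example is a hypothetical placeholder, not a value from Table 5.

```python
def peak_bandwidth_bytes_per_s(clock_hz: float, bus_width_bits: int,
                               transfers_per_clock: int = 2) -> float:
    """Peak DDR bandwidth: clock rate x transfers per clock x bus width in bytes."""
    return clock_hz * transfers_per_clock * bus_width_bits / 8


def access_efficiency(measured_bytes_per_s: float, peak_bytes_per_s: float) -> float:
    """Ratio of measured bandwidth to peak theoretical bandwidth."""
    return measured_bytes_per_s / peak_bytes_per_s


peak = peak_bandwidth_bytes_per_s(800e6, 64)   # 800 MHz clock, 64-bit bus
hypothetical_measured = 9.6e9                  # placeholder value, NOT a measured result
eta = access_efficiency(hypothetical_measured, peak)

print(peak / 1e9)   # 12.8 (GB/s)
print(eta)          # 0.75
```

Substituting the measured bandwidth of each access direction for the placeholder yields the corresponding efficiency.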

The FPGA uses dual-port RAM as a data buffer between the DDR3 and the processing engine. In the experiment, the DDR3 burst transmission length is eight. With the sub-matrix cross-storage method, every word of a DDR3 burst is useful, so the utilization rate of the burst data is 100%. For data access in the range direction, the 8 × 64 bit data read in one burst belong to two range lines, so two RAMs are needed to cache the data. For data access in the azimuth direction, the 8 × 64 bit data read in one burst belong to four azimuth lines, so four RAMs are needed to cache the data. To satisfy both the range and azimuth access requirements, four dual-port RAMs are included in the system design.
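The buffer count can be illustrated by splitting one burst into per-line chunks. This is a simplified sketch: the helper `burst_to_line_buffers` is hypothetical, and it assumes that the words belonging to the same line are contiguous within a burst, which the text does not specify.

```python
BURST_LENGTH = 8               # 64-bit words per DDR3 burst
RANGE_LINES_PER_BURST = 2      # from the text: one burst spans two range lines
AZIMUTH_LINES_PER_BURST = 4    # from the text: one burst spans four azimuth lines


def burst_to_line_buffers(burst, lines):
    """Split one burst into equal chunks, one chunk per line buffer (RAM)."""
    per_line = len(burst) // lines
    return [burst[i * per_line:(i + 1) * per_line] for i in range(lines)]


burst = list(range(BURST_LENGTH))   # word indices 0..7 stand in for the samples

range_chunks = burst_to_line_buffers(burst, RANGE_LINES_PER_BURST)
azimuth_chunks = burst_to_line_buffers(burst, AZIMUTH_LINES_PER_BURST)

# The design must serve both access directions, so the larger count applies.
rams_required = max(len(range_chunks), len(azimuth_chunks))

print(range_chunks)     # [[0, 1, 2, 3], [4, 5, 6, 7]]
print(azimuth_chunks)   # [[0, 1], [2, 3], [4, 5], [6, 7]]
print(rams_required)    # 4
```

Each burst therefore contributes four words to each of two range lines, or two words to each of four azimuth lines, and no word is discarded in either direction.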

The proposed data controller is also compared with several recent FPGA-based implementations, and the results are presented in Table 6. The “range” and “azimuth” entries in the table represent the performance during range direction access and azimuth direction access, respectively. “Matrix transposition time” refers to the time for reading in the range direction and then writing in the azimuth direction, which can be calculated from the DDR3 access bandwidth. The experimental results show that the proposed data controller achieves a very high data bandwidth in both the range and azimuth directions. Because the proposed data controller controls the two storage channels independently, it is highly flexible and can carry out read and write tasks on separate channels at the same time, doubling the throughput available to the processing engine. Compared with the previous FPGA-based implementations, the proposed data controller offers a higher data bandwidth and greater design flexibility while maintaining higher access efficiency. It strikes a balance between resource usage and speed, which makes it well suited to real-time SAR processing systems in spaceborne scenarios.
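As an illustration of how a transposition time follows from the access bandwidths, consider the sketch below. The function and its `overlapped` option are illustrative assumptions, and the bandwidth values are hypothetical placeholders rather than results from Table 6.

```python
MATRIX_BYTES = 16_384 * 16_384 * 8   # 16,384 x 16,384 samples, 8 bytes each


def transposition_time_s(matrix_bytes: float, read_bw: float, write_bw: float,
                         overlapped: bool = False) -> float:
    """Time to read the matrix in one direction and write it in the other.

    overlapped=False assumes the read and write phases run one after the other;
    overlapped=True assumes they run concurrently on two independent channels.
    """
    read_time = matrix_bytes / read_bw
    write_time = matrix_bytes / write_bw
    return max(read_time, write_time) if overlapped else read_time + write_time


# Hypothetical bandwidths (bytes per second), chosen only to give round numbers.
read_bw = 8 * 1024**3
write_bw = 8 * 1024**3

sequential = transposition_time_s(MATRIX_BYTES, read_bw, write_bw)
concurrent = transposition_time_s(MATRIX_BYTES, read_bw, write_bw, overlapped=True)

print(sequential)   # 0.5
print(concurrent)   # 0.25
```

Under the concurrent assumption, the slower of the two directions determines the total time, which is where independent control of the two channels would pay off.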