Correspondence Learning for Deep Multi-Modal Recognition and Fraud Detection

Abstract

1. Introduction

- We propose correspondence learning (CL), a novel and simple technique that explicitly learns the relationship among modalities;

- On sentiment analysis benchmarks, we show that adding CL as a simple auxiliary task significantly improves performance;

- In the garbage classification task, we show that single-modality models are vulnerable to fraudulent inputs and to objects of unseen classes (out-of-distribution), and that the learned correspondence can be used for fraud detection with high detection rates. We also show that material classification is possible with non-contact ultrasound sensors.
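
As a rough illustration of the auxiliary task named in the contributions above, the sketch below builds matched and mismatched modality pairs from an aligned batch and adds a binary correspondence loss to the main task loss. It rests on assumptions that are not taken from the paper: negatives are formed by pairing one modality with the other modality of a different sample, the correspondence head outputs a probability, and the two losses are combined through a hypothetical `weight`. The names `make_correspondence_pairs`, `binary_cross_entropy`, and `total_loss` are illustrative only.

```python
import math
import random


def make_correspondence_pairs(batch, rng):
    """Build correspondence-learning pairs from an aligned batch.

    batch: list of (feat_a, feat_b) tuples, one per sample, where the two
           features come from two modalities of the same object.
    Returns a list of (feat_a, feat_b, label): label 1 for aligned pairs,
    label 0 for pairs whose second modality comes from another sample.
    """
    n = len(batch)
    if n < 2:
        raise ValueError("need at least two samples to form a mismatched pair")
    pairs = [(a, b, 1) for a, b in batch]  # positives: aligned modalities
    for i, (a, _) in enumerate(batch):
        # Draw an index j != i uniformly from the remaining n - 1 samples.
        j = rng.randrange(n - 1)
        if j >= i:
            j += 1
        pairs.append((a, batch[j][1], 0))  # negative: mismatched modalities
    return pairs


def binary_cross_entropy(prob, label, eps=1e-7):
    """Cross-entropy of one predicted correspondence probability."""
    prob = min(max(prob, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))


def total_loss(task_loss, corr_probs, corr_labels, weight=1.0):
    """Main task loss plus the weighted mean correspondence loss (assumed form)."""
    cl = sum(binary_cross_entropy(p, y) for p, y in zip(corr_probs, corr_labels))
    return task_loss + weight * cl / len(corr_labels)
```

In a real model the features would be tensors produced by per-modality extractors and the probabilities would come from a learned correspondence head; plain Python values are used here only to keep the sketch self-contained. Note that a mismatched pair drawn this way may still share a class label, which a practical sampler might want to exclude.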

2. Related Works

3. Method: Correspondence Learning

3.1. Motivation and Initial Observations

3.2. Multi-Modal Correspondence Learning

Algorithm 1: Pseudo code for the proposed method.

4. Garbage Classification Task for Fraud Detection

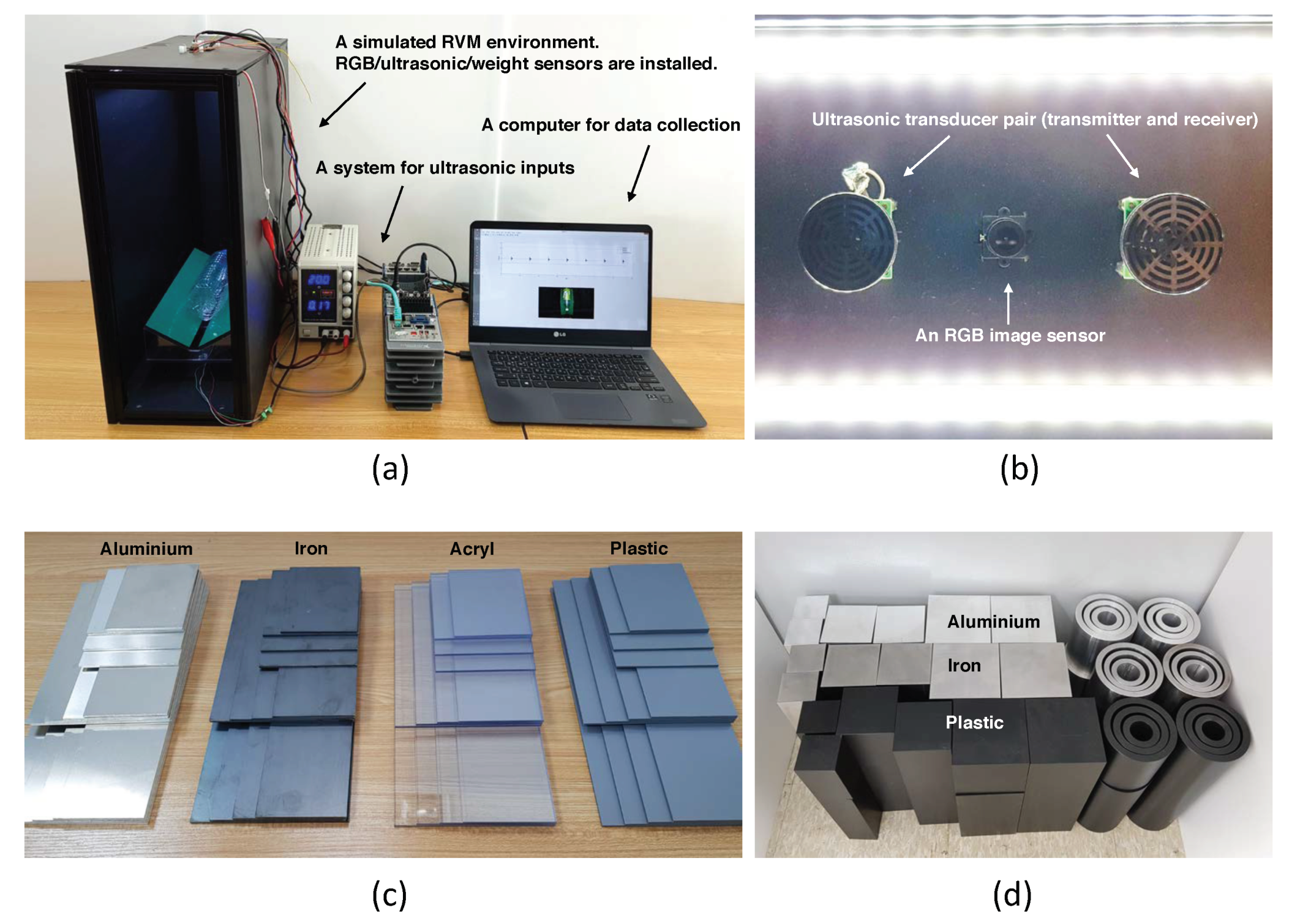

4.1. Hardware Settings

4.2. Dataset Composition

4.2.1. Raw Material Samples

4.2.2. Real World Targets and Fraud Samples

5. Experiment Setups

5.1. Datasets

5.2. CMU-MOSI and CMU-MOSEI Datasets

5.3. Baseline for the Garbage Classification Task

5.3.1. Feature Extractors

5.3.2. Attention Module

5.3.3. Classifiers

6. Experiment Results

6.1. CMU-MOSI and CMU-MOSEI

6.2. Raw Materials with Ultrasound

6.3. Fraud Detection Using Real-World Data

6.3.1. Multi-Modal Inputs

6.3.2. Multi-Modal Attention

6.3.3. Correspondence Learning

6.3.4. Final Model

7. Conclusions

8. Relevance to Electronics Journal

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.
2. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
3. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.
4. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 740–755.
5. Antol, S.; Agrawal, A.; Lu, J.; Mitchell, M.; Batra, D.; Lawrence Zitnick, C.; Parikh, D. Vqa: Visual question answering. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2425–2433.
6. Chen, T.; Li, M.; Li, Y.; Lin, M.; Wang, N.; Wang, M.; Xiao, T.; Xu, B.; Zhang, C.; Zhang, Z. MXNet: A Flexible and Efficient Machine Learning Library for Heterogeneous Distributed Systems. arXiv 2015, arXiv:1512.01274.
7. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Advances in Neural Information Processing Systems 32; Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: New York, NY, USA, 2019; pp. 8024–8035.
8. Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems. 2015. Available online: tensorflow.org (accessed on 23 February 2021).
9. Google Cloud Vision. Available online: https://cloud.google.com/vision/ (accessed on 21 December 2017).
10. Papago. Available online: https://papago.naver.com/ (accessed on 21 December 2017).
11. John, V.; Mita, S. Deep Feature-Level Sensor Fusion Using Skip Connections for Real-Time Object Detection in Autonomous Driving. Electronics 2021, 10, 424.
12. Choi, Y.; Kim, N.; Hwang, S.; Park, K.; Yoon, J.S.; An, K.; Kweon, I.S. KAIST Multi-spectral Day/Night Dataset for Autonomous and Assisted Driving. IEEE Trans. Intell. Transp. Syst. 2018, 19, 934–948.
13. Bednarek, M.; Kicki, P.; Walas, K. On Robustness of Multi-Modal Fusion—Robotics Perspective. Electronics 2020, 9, 1152.
14. Bodapati, J.D.; Naralasetti, V.; Shareef, S.N.; Hakak, S.; Bilal, M.; Maddikunta, P.K.R.; Jo, O. Blended Multi-Modal Deep ConvNet Features for Diabetic Retinopathy Severity Prediction. Electronics 2020, 9, 914.
15. Kay, W.; Carreira, J.; Simonyan, K.; Zhang, B.; Hillier, C.; Vijayanarasimhan, S.; Viola, F.; Green, T.; Back, T.; Natsev, P.; et al. The kinetics human action video dataset. arXiv 2017, arXiv:1705.06950.
16. Kuehne, H.; Jhuang, H.; Garrote, E.; Poggio, T.; Serre, T. HMDB: A large video database for human motion recognition. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2556–2563.
17. Soomro, K.; Zamir, A.R.; Shah, M. UCF101: A dataset of 101 human actions classes from videos in the wild. arXiv 2012, arXiv:1212.0402.
18. Monfort, M.; Andonian, A.; Zhou, B.; Ramakrishnan, K.; Bargal, S.A.; Yan, T.; Brown, L.; Fan, Q.; Gutfreund, D.; Vondrick, C.; et al. Moments in Time Dataset: One million videos for event understanding. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 502–508.
19. Kaiser, L.; Gomez, A.N.; Shazeer, N.; Vaswani, A.; Parmar, N.; Jones, L.; Uszkoreit, J. One model to learn them all. arXiv 2017, arXiv:1706.05137.
20. Zadeh, A.; Zellers, R.; Pincus, E.; Morency, L.P. Mosi: Multimodal corpus of sentiment intensity and subjectivity analysis in online opinion videos. arXiv 2016, arXiv:1606.06259.
21. Zadeh, A.; Liang, P.P.; Poria, S.; Vij, P.; Cambria, E.; Morency, L.P. Multi-attention recurrent network for human communication comprehension. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.
22. Zadeh, A.; Chen, M.; Poria, S.; Cambria, E.; Morency, L.P. Tensor Fusion Network for Multimodal Sentiment Analysis. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017; pp. 1103–1114.
23. Shenoy, A.; Sardana, A. Multilogue-Net: A Context Aware RNN for Multi-modal Emotion Detection and Sentiment Analysis in Conversation. arXiv 2020, arXiv:2002.08267.
24. Li, A.; Tan, Z.; Li, X.; Wan, J.; Escalera, S.; Guo, G.; Li, S.Z. Casia-surf cefa: A benchmark for multi-modal cross-ethnicity face anti-spoofing. arXiv 2020, arXiv:2003.05136.
25. Zhang, S.; Wang, X.; Liu, A.; Zhao, C.; Wan, J.; Escalera, S.; Shi, H.; Wang, Z.; Li, S.Z. A dataset and benchmark for large-scale multi-modal face anti-spoofing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 919–928.
26. Video Shows Galaxy S8 Facial Recognition Tricked By A Photo. Available online: http://www.gizmodo.co.uk/2017/03/video-shows-galaxy-s8-facial-recognition-tricked-by-a-photo/ (accessed on 4 February 2018).
27. Arandjelović, R.; Zisserman, A. Objects that Sound. arXiv 2017, arXiv:1712.06651.
28. Park, J.; Kim, M.H.; Choi, S.; Kweon, I.S.; Choi, D.G. Fraud detection with multi-modal attention and correspondence learning. In Proceedings of the 2019 International Conference on Electronics, Information, and Communication (ICEIC), Auckland, New Zealand, 22–25 January 2019; pp. 1–7.
29. Zadeh, A.B.; Liang, P.P.; Poria, S.; Cambria, E.; Morency, L.P. Multimodal language analysis in the wild: Cmu-mosei dataset and interpretable dynamic fusion graph. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; Volume 1: Long Papers, pp. 2236–2246.
30. Simonyan, K.; Zisserman, A. Two-stream convolutional networks for action recognition in videos. arXiv 2014, arXiv:1406.2199.
31. Crasto, N.; Weinzaepfel, P.; Alahari, K.; Schmid, C. Mars: Motion-augmented rgb stream for action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 7882–7891.
32. Feichtenhofer, C.; Pinz, A.; Zisserman, A. Convolutional two-stream network fusion for video action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1933–1941.
33. Kurakin, A.; Goodfellow, I.; Bengio, S. Adversarial Examples in the Physical World. In Proceedings of the ICLR Workshop, Toulon, France, 24–26 April 2017.
34. Athalye, A.; Engstrom, L.; Ilyas, A.; Kwok, K. Synthesizing Robust Adversarial Examples. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018.
35. Brown, T.; Mane, D.; Roy, A.; Abadi, M.; Gilmer, J. Adversarial Patch. arXiv 2017, arXiv:1712.09665.
36. Aytar, Y.; Vondrick, C.; Torralba, A. Soundnet: Learning sound representations from unlabeled video. arXiv 2016, arXiv:1610.09001.
37. Guo, C.; Pleiss, G.; Sun, Y.; Weinberger, K.Q. On calibration of modern neural networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; Volume 70, pp. 1321–1330.
38. TOMRA. Available online: https://www.tomra.com/en/ (accessed on 20 December 2017).
39. RVM Systems. Available online: http://www.reversevending.co.uk/ (accessed on 4 February 2018).
40. Superbin. Available online: http://www.superbin.co.kr/new/index.php (accessed on 20 December 2017).
41. Hou, M.; Tang, J.; Zhang, J.; Kong, W.; Zhao, Q. Deep multimodal multilinear fusion with high-order polynomial pooling. Adv. Neural Inf. Process. Syst. 2019, 32, 12136–12145.
42. Ohtani, K.; Baba, M. A Simple Identification Method for Object Shapes and Materials Using an Ultrasonic Sensor Array. In Proceedings of the 2006 IEEE Instrumentation and Measurement Technology Conference Proceedings, Sorrento, Italy, 24–27 April 2006; pp. 2138–2143.
43. Moritake, Y.; Hikawa, H. Category recognition system using two ultrasonic sensors and combinational logic circuit. Electron. Commun. Jpn. Part III Fundam. Electron. Sci. 2005, 88, 33–42.
44. Arandjelović, R.; Zisserman, A. Look, Listen and Learn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.
45. Miyato, T.; Maeda, S.i.; Koyama, M.; Ishii, S. Virtual adversarial training: A regularization method for supervised and semi-supervised learning. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 1979–1993.

| Method | Binary Acc (%) | Regression MAE | Regression r |
|---|---|---|---|
| Random | 50.1 | 1.86 | 0.057 |
| TFN [22] | 77.1 | 0.87 | 0.70 |
| TFN [22] + CL | 78.6 | 0.79 | 0.70 |
| HPFN [41] | 77.16 | 0.984 | 0.66 |
| HPFN [41] + CL | 77.97 | 0.995 | 0.63 |
| Human | 87.5 | 0.71 | 0.82 |

| Method | Binary Acc (%) | Regression MAE | Regression r |
|---|---|---|---|
| Multilogue-Net [23] | 78.06 | 0.609 | 0.46 |
| Multilogue-Net [23] + CL | 80.19 | 0.605 | 0.48 |
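
For readers less familiar with the Acc, MAE, and r columns above, the following sketch shows how such metrics are commonly computed for sentiment regression outputs. It is an illustration under assumptions rather than the evaluation code behind these tables: it assumes continuous sentiment scores, derives the binary label from the sign of the score with a threshold at 0, and takes r to be the Pearson correlation coefficient. All function names are hypothetical.

```python
import math


def binary_accuracy(pred, target):
    """Percentage of samples whose predicted and true scores share a sign.

    Assumes non-negative scores count as the positive class.
    """
    hits = sum((p >= 0) == (t >= 0) for p, t in zip(pred, target))
    return 100.0 * hits / len(target)


def mean_absolute_error(pred, target):
    """Average absolute difference between predicted and true scores."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(target)


def pearson_r(pred, target):
    """Pearson correlation coefficient between predictions and targets."""
    n = len(target)
    mean_p = sum(pred) / n
    mean_t = sum(target) / n
    cov = sum((p - mean_p) * (t - mean_t) for p, t in zip(pred, target))
    var_p = sum((p - mean_p) ** 2 for p in pred)
    var_t = sum((t - mean_t) ** 2 for t in target)
    return cov / math.sqrt(var_p * var_t)
```

Higher is better for accuracy and r, while lower is better for MAE, which is why a method can improve on one column and regress on another.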

| Material Type (2D shapes) | Accuracy (%) | Material Type (3D shapes) | Accuracy (%) |
|---|---|---|---|
| Acryl | 100.0 | Aluminum | 100.0 |
| Aluminum | 100.0 | Plastic | 91.6 |
| Iron | 100.0 | Iron | 91.8 |
| Plastic | 96.0 | | |
| Avg acc | 99.0 | Avg acc | 94.4 |

| Modality | Target (%) | VS (%) | Non-Target (%) |
|---|---|---|---|
| Image (IMG) | 98.0 | 8.3 | 6.9 |
| Ultrasound (US) | 82.3 | 15.0 | 6.9 |
| IMG + US | 96.5 | 15.0 | 6.9 |
| IMG + US + W | 97.5 | 18.3 | 13.7 |

| Modality | CL | Att | Target (%) | VS (%) | Non-Target (%) |
|---|---|---|---|---|---|
| IMG + US + W | - | - | 97.5 | 18.3 | 13.7 |
| IMG + US + W | - | √ | 99.5 | 21.7 | 20.7 |
| IMG + US + W | √ | - | 81.8 | 86.7 | 93.1 |
| IMG + US + W | √ | √ | 94.0 | 91.7 | 93.1 |
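
One way a learned correspondence score could be turned into the kind of fraud detection rates reported above is to reject any input whose modalities do not appear to match. The sketch below is a hedged illustration of that idea, not the paper's decision rule: the threshold of 0.5, the convention that a low score means a mismatch, and the names `decide` and `detection_rate` are all assumptions.

```python
def decide(class_probs, corr_score, threshold=0.5):
    """Return the predicted class index, or None if the input is rejected.

    class_probs: per-class probabilities from the classifier.
    corr_score:  assumed probability that the modalities correspond.
    An input is rejected as possible fraud when corr_score < threshold.
    """
    if corr_score < threshold:
        return None
    return max(range(len(class_probs)), key=class_probs.__getitem__)


def detection_rate(corr_scores, threshold=0.5):
    """Percentage of inputs rejected, given their correspondence scores.

    Applied to a set of fraud or non-target samples, this is the share
    that the threshold rule would flag.
    """
    rejected = sum(score < threshold for score in corr_scores)
    return 100.0 * rejected / len(corr_scores)
```

A stricter threshold would reject more fraud samples but would also reject more genuine targets, which is the same trade-off visible between the Target and the VS or Non-Target columns in the table.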

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Park, J.; Kim, M.-H.; Choi, D.-G. Correspondence Learning for Deep Multi-Modal Recognition and Fraud Detection. Electronics 2021, 10, 800. https://doi.org/10.3390/electronics10070800
