Map Merging with Suppositional Box for Multi-Robot Indoor Mapping

Abstract

1. Introduction

2. Related Works

2.1. Initial Pose Known

2.2. Initial Pose Unknown—Rendezvous

2.3. Initial Pose Unknown—Optimization

2.4. Initial Pose Unknown—Feature Matching

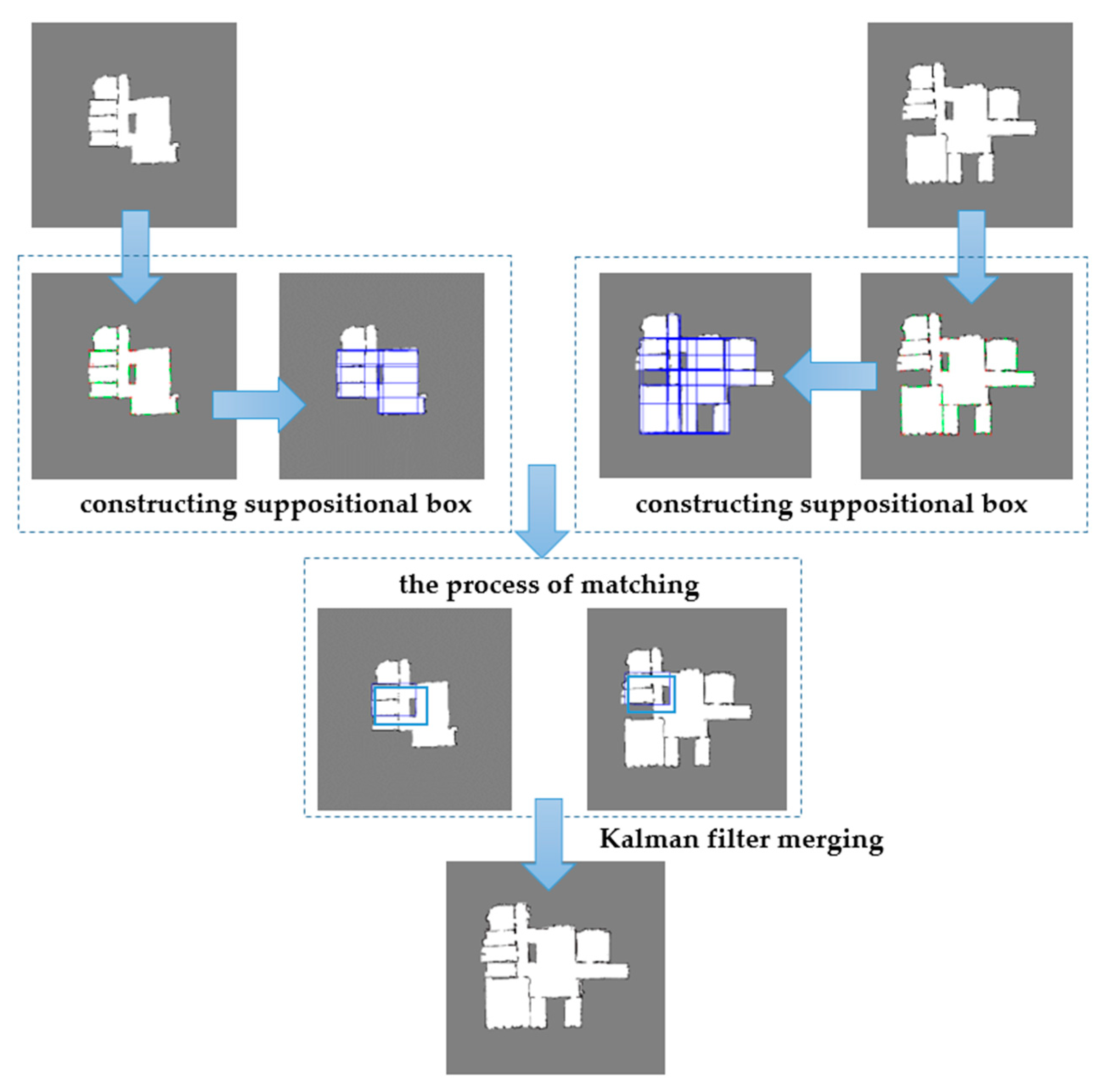

3. Method

3.1. The Construction of Suppositional Box

3.1.1. Vertical Point Extraction

3.1.2. Uncertainty Optimization of Vertical Points

3.1.3. Suppositional Box Building

| Algorithm 1 Suppositional box building |
|---|
| Input: the list of vertical points, |
| Output: the list of suppositional box descriptors: |
| 1: for = 1 → n do |
| 2: ← (.vx, .vy) |
| 3: ss ← True |
| 4: while ss |
| 5: if = finding (.,.) // Find a point with the same slope and intercept |
| 6: if = finding (.,.) // Find a point with the same slope and intercept |
| 7: if = finding (.,.) and intersection (,,,) // Find a point that has the same slope and intercept and satisfies the distance constraint |
| 8: ← (.vx, .vy) |
| 9: ← (.vx, .vy) |
| 10: ← (.vx, .vy) |
| 11: height = max(dis(, ), dis(, )) // Define the long side as the height |
| 12: width = min(dis(, ), dis(, )) // Define the short side as the width |
| 13: (cx, cy) = meancenter(,,,) // Compute the center point |
| 14: .append(,,, , height, width, cx, cy) |
| 15: q ← q + 1 |
| 16: else |
| 17: ss ← False |
| 18: end for |
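Steps 11 to 14 of Algorithm 1 reduce four corner points to a box descriptor (height, width and center). The sketch below illustrates only that reduction, under stated assumptions: the four corners arrive in traversal order, so p1-p2 and p2-p3 are adjacent sides; `dis` is the Euclidean distance; and `meancenter` is the arithmetic mean of the corners. The names `BoxDescriptor` and `box_descriptor` are hypothetical and are not the authors' code. The corner search in steps 5 to 7 is not reproduced here.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Point = Tuple[float, float]


@dataclass
class BoxDescriptor:
    # Hypothetical container mirroring the fields appended in step 14.
    corners: Tuple[Point, Point, Point, Point]
    height: float  # length of the longer side
    width: float   # length of the shorter side
    cx: float      # x coordinate of the mean center
    cy: float      # y coordinate of the mean center


def box_descriptor(p1: Point, p2: Point, p3: Point, p4: Point) -> BoxDescriptor:
    """Build a descriptor from four corners given in traversal order.

    Assumption: p1-p2 and p2-p3 are two adjacent sides of the box.
    """
    side_a = math.dist(p1, p2)
    side_b = math.dist(p2, p3)
    height = max(side_a, side_b)  # step 11: long side is the height
    width = min(side_a, side_b)   # step 12: short side is the width
    # Step 13: center taken as the mean of the four corners (assumed meaning of meancenter).
    cx = (p1[0] + p2[0] + p3[0] + p4[0]) / 4.0
    cy = (p1[1] + p2[1] + p3[1] + p4[1]) / 4.0
    return BoxDescriptor((p1, p2, p3, p4), height, width, cx, cy)


if __name__ == "__main__":
    # A 4 m x 2 m axis-aligned rectangle, corners listed in order.
    d = box_descriptor((0.0, 0.0), (4.0, 0.0), (4.0, 2.0), (0.0, 2.0))
    print(d.height, d.width, d.cx, d.cy)  # 4.0 2.0 2.0 1.0
```

Because height and width come from `max` and `min`, the descriptor does not depend on which side the traversal reaches first. This property is what lets boxes from two differently oriented maps be compared by size.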

3.2. Suppositional Box Matching

3.3. Map Merging

4. Experimental Results and Analysis

4.1. Experimental Verification under the Simulation Environment

4.2. Experimental Verification under the Real Environment

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| S1 (row) vs. S2 (column) | Occupied | Free | Unknown |
|---|---|---|---|
| Occupied | Occupied | Occupied | Occupied |
| Free | Occupied | Free | Free |
| Unknown | Occupied | Free | Unknown |
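The table encodes a per-cell fusion rule for two aligned occupancy grids S1 and S2. An occupied cell overrides every other state, free overrides unknown, and a cell stays unknown only when both inputs are unknown. The sketch below implements that rule, assuming both grids already share the same frame and resolution (the alignment itself is the job of suppositional box matching). The names `Cell`, `merge_cell` and `merge_grids` are hypothetical.

```python
from enum import Enum
from typing import List


class Cell(Enum):
    UNKNOWN = 0
    FREE = 1
    OCCUPIED = 2


def merge_cell(s1: Cell, s2: Cell) -> Cell:
    # Occupied dominates, free overrides unknown, unknown only if both are unknown.
    if Cell.OCCUPIED in (s1, s2):
        return Cell.OCCUPIED
    if Cell.FREE in (s1, s2):
        return Cell.FREE
    return Cell.UNKNOWN


def merge_grids(g1: List[List[Cell]], g2: List[List[Cell]]) -> List[List[Cell]]:
    # Assumes g2 has already been transformed into g1's frame (same shape).
    if len(g1) != len(g2) or any(len(r1) != len(r2) for r1, r2 in zip(g1, g2)):
        raise ValueError("grids must have the same shape")
    return [[merge_cell(a, b) for a, b in zip(r1, r2)] for r1, r2 in zip(g1, g2)]


if __name__ == "__main__":
    O, F, U = Cell.OCCUPIED, Cell.FREE, Cell.UNKNOWN
    merged = merge_grids([[O, F, U]], [[U, U, F]])
    print([[c.name for c in row] for row in merged])  # [['OCCUPIED', 'FREE', 'FREE']]
```

The rule is symmetric in S1 and S2. It is equivalent to taking the maximum under the ordering unknown < free < occupied, which favors keeping obstacles when the two maps disagree.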

| Wall No. | Actual Length (m) | (%), 0° | (%), 45° | (%), 90° | (%), 0° | (%), 45° | (%), 90° | Error in Merged Map (%), 0° | Error in Merged Map (%), 45° | Error in Merged Map (%), 90° |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 6.25 | 0.00 | 2.40 | 0.00 | 2.40 | 1.60 | 0.80 | 1.60 | 1.60 | 1.60 |
| 2 | 1.25 | 8.00 | 0.80 | 0.00 | 0.00 | 4.00 | 4.00 | 4.00 | 1.60 | 0.00 |
| 3 | 5.25 | 4.76 | 2.85 | 0.95 | 1.90 | 2.28 | 2.85 | 0.90 | 1.71 | 0.00 |
| 4 | 3.25 | 7.69 | 7.38 | 8.33 | 7.69 | 9.84 | 9.23 | 0.00 | 8.61 | 9.23 |
| 5 | 3.25 | 10.77 | 9.84 | 9.23 | 15.38 | 8.61 | 9.23 | 7.60 | 7.69 | 4.61 |
| 6 | 1.50 | 12.00 | 8.00 | 10.67 | 7.33 | 8.00 | 12.00 | 8.70 | 6.00 | 7.33 |
| 7 | 2.50 | 4.00 | 6.80 | 6.00 | 6.00 | 5.20 | 6.00 | 6.00 | 3.60 | 8.00 |
| 8 | 7.50 | 2.00 | 2.40 | 2.00 | 2.67 | 2.40 | 2.00 | 1.30 | 0.93 | 1.33 |
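The wall-length errors in the table appear to be relative errors, |measured - actual| / actual × 100. This reading is an assumption, since the formula is not stated in this section. Under that assumption, a percentage can be converted back into an absolute deviation in meters. For example, the 8.00% entry for wall 2 (1.25 m) corresponds to 0.10 m. The helper names below are hypothetical.

```python
def relative_error_pct(measured_m: float, actual_m: float) -> float:
    """Relative length error in percent (assumed definition)."""
    if actual_m <= 0:
        raise ValueError("actual length must be positive")
    return abs(measured_m - actual_m) / actual_m * 100.0


def deviation_m(actual_m: float, error_pct: float) -> float:
    """Absolute deviation in meters implied by a percentage error."""
    return actual_m * error_pct / 100.0


if __name__ == "__main__":
    # Wall 2: actual length 1.25 m, table entry 8.00 %.
    print(round(deviation_m(1.25, 8.00), 2))  # 0.1
    # Wall 5: actual length 3.25 m, largest table entry 15.38 %.
    print(round(deviation_m(3.25, 15.38), 2))  # 0.5
```

Reading the table in meters shows why the short walls (2, 6) can carry large percentages even when their absolute deviation is only about 0.1 m.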

| Method | E5 (%) | F5 (%) | HIH and KPT4A (%) |
|---|---|---|---|
| SURF + RANSAC | 25.00 | 25.00 | 0.00 |
| ORB + RANSAC | 25.00 | 0.00 | 0.00 |
| Hough spectrum | 25.00 | 25.00 | 50.00 |
| Our proposal | 75.00 | 66.66 | 50.00 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, B.; Li, S.; Zhao, H.; Liu, L. Map Merging with Suppositional Box for Multi-Robot Indoor Mapping. Electronics 2021, 10, 815. https://doi.org/10.3390/electronics10070815

Chen B, Li S, Zhao H, Liu L. Map Merging with Suppositional Box for Multi-Robot Indoor Mapping. Electronics. 2021; 10(7):815. https://doi.org/10.3390/electronics10070815

Chicago/Turabian Style: Chen, Baifan, Siyu Li, Haowu Zhao, and Limei Liu. 2021. "Map Merging with Suppositional Box for Multi-Robot Indoor Mapping" Electronics 10, no. 7: 815. https://doi.org/10.3390/electronics10070815