Integration of Single-Port Memory (ISPM) for Multiprecision Computation in Systolic-Array-Based Accelerators

Abstract

1. Introduction

- We propose an address transformation method that enables the effective integration of SPM and greatly reduces the on-chip area cost of systolic-array-based accelerators. We further propose a novel address transformation method adapted to multiple-precision computation, which can effectively improve computing efficiency.

- We design a small module for the existing AI accelerator that uses hardware-friendly logic. The module has two main functions: address mapping and conflict handling. The additional module adds a small computation time overhead, which is negligible, while preserving the correctness of the computation.

- We implement SPM-based designs in RTL with a 7 nm library and evaluate them with various real data. Experimental results show that our SPM-based designs achieve about 30% and 25% improvement in area cost without affecting the throughput of the accelerator.
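To make the terms "address mapping" and "conflict handling" concrete, the following toy model may help. It is not the paper's design: it assumes a generic even/odd bank interleaving (the hypothetical `bank_of` mapping), one read stream and one write stream, and a read-priority stall policy. Each bank is single-ported, so it serves at most one access per cycle.

```python
from collections import deque


def bank_of(addr: int, num_banks: int = 2) -> int:
    """Hypothetical address mapping: low-order address bits select the bank."""
    return addr % num_banks


def simulate(reads, writes, num_banks: int = 2):
    """Return (cycles, stalls) for one read stream and one write stream.

    Each single-port bank serves one access per cycle. When a read and a
    write target the same bank in the same cycle, the read proceeds and
    the write is retried in the next cycle (an assumed policy).
    """
    pending_reads = deque(reads)
    pending_writes = deque(writes)
    cycles = 0
    stalls = 0
    while pending_reads or pending_writes:
        cycles += 1
        busy = set()
        if pending_reads:
            busy.add(bank_of(pending_reads.popleft(), num_banks))
        if pending_writes:
            if bank_of(pending_writes[0], num_banks) in busy:
                stalls += 1  # conflict: the write waits one cycle
            else:
                pending_writes.popleft()
    return cycles, stalls


# Streams offset by one address always hit different banks.
print(simulate([0, 1, 2, 3], [1, 2, 3, 4]))
# Aligned streams collide in the first cycle and the write falls behind.
print(simulate([0, 1, 2, 3], [0, 1, 2, 3]))
```

In this sketch a mapping that keeps concurrent reads and writes in different banks removes conflicts entirely, while a single initial collision costs one extra cycle. That is the kind of small, bounded overhead the second contribution refers to.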

2. Background

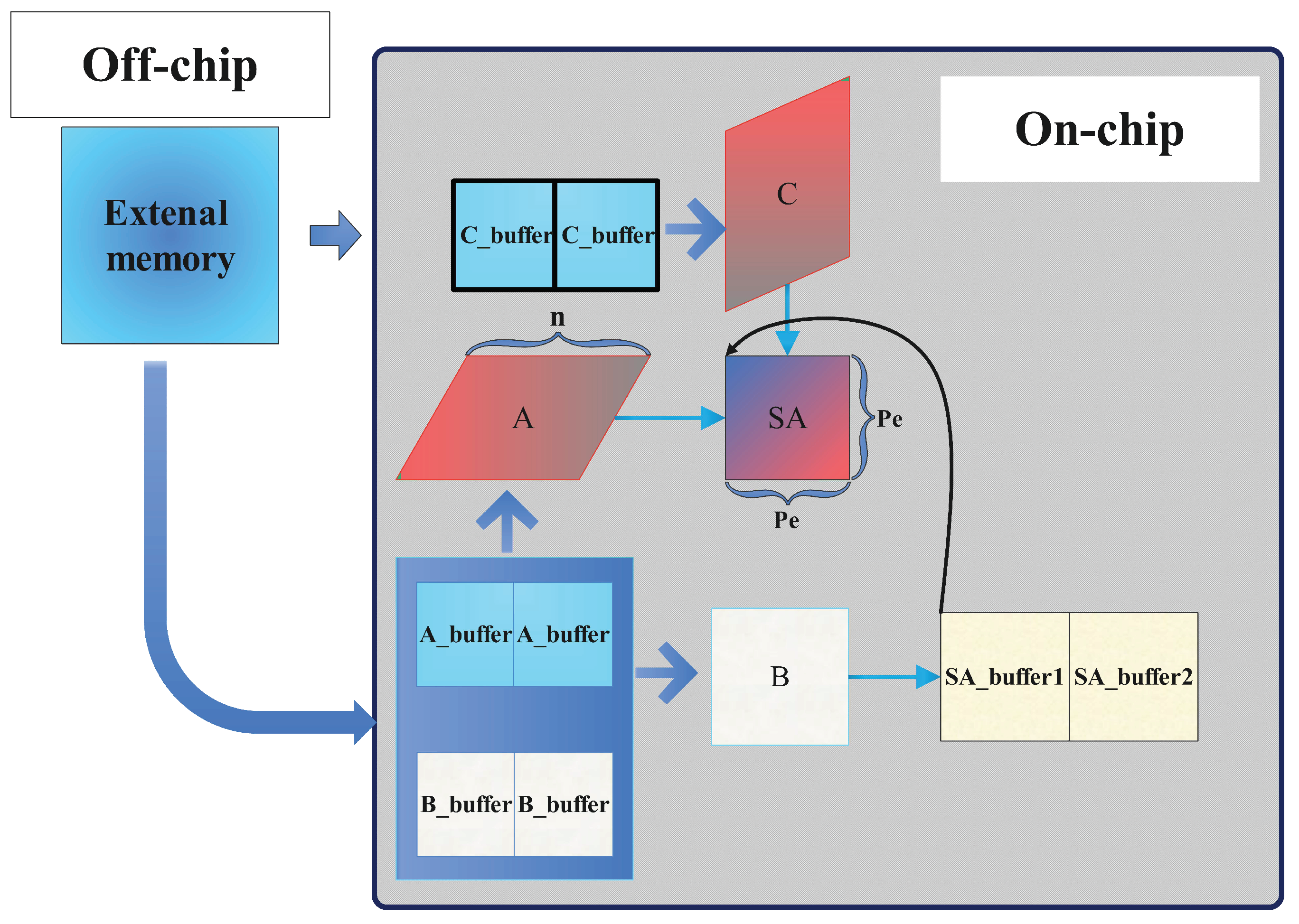

2.1. Systolic-Array-Based Accelerators Structure

2.2. The Conflicts of Memory Access to SPM

2.3. The Area Cost of SPM and TPM

2.4. Multiprecision Computation

3. Single-Port Memory Design Ideas for Both Single- and Multi-Precision Computing

3.1. Data Memory in External Memory and C-Buffer

3.2. The Impact of Data Arrangement on SPM and TPM

3.3. Conflict of Memory Access to the C-Buffer

4. ISPM

4.1. Architecture Overview

4.2. Address-Mapping Module Design for the Base Idea

4.3. Address-Mapping Module Design for Multiple Precision

4.4. Conflict Handling Design

4.5. Limitations

5. Evaluation

5.1. Metrics and Experimental Setting

5.2. Experiments and Results

6. Related Work

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Depth | Memory Type: SPM | Memory Type: TPM | Ratio |
|---|---|---|---|
| 64W144B | 1359 | 1654 | 17.8% |
| 128W144B | 1743 | 2437 | 28.5% |
| 256W144B | 2673 | 3952 | 32.4% |
| 448W144B | 3607 | 5764 | 37.4% |
| 512W144B | 4528 | 6559 | 31.0% |
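The Ratio column appears to be the area saved by SPM relative to TPM, that is (TPM − SPM) / TPM. The source does not state this formula; it is inferred because it reproduces every row. A short check, with a hypothetical helper name and the values copied from the table:

```python
# (SPM area, TPM area) per memory configuration, copied from the table.
areas = {
    "64W144B": (1359, 1654),
    "128W144B": (1743, 2437),
    "256W144B": (2673, 3952),
    "448W144B": (3607, 5764),
    "512W144B": (4528, 6559),
}


def area_saving(spm: float, tpm: float) -> float:
    """Fraction of TPM area saved by using SPM (inferred definition)."""
    return (tpm - spm) / tpm


for depth, (spm, tpm) in areas.items():
    print(f"{depth}: {area_saving(spm, tpm):.1%}")
```

Running this prints 17.8%, 28.5%, 32.4%, 37.4%, and 31.0%, matching the Ratio column.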

| Computing Precision | Peak Rates |
|---|---|
| Peak FP64 Tensor Core | 19.5 TFLOPS |
| Peak FP32 Tensor Core | 156 TFLOPS |
| Peak FP16 Tensor Core | 312 TFLOPS |

| Matrix Size | T (SPM) | T (TPM) | T (SPM (Multi-Pre)) | T (TPM (Multi-Pre)) |
|---|---|---|---|---|
| 1024 × 1024 × 1024 | 2,120,197.5 | 2,118,013.5 | 5,320,001 | 5,318,837.5 |
| 512 × 512 × 512 | 283,141.5 | 283,005.5 | 106,178.5 | 106,127 |
| 256 × 256 × 256 | 46,597.5 | 46,461.5 | 15,532.5 | 15,460 |
| 128 × 128 × 128 | 10,053.5 | 9981.5 | 5026.5 | 4990.5 |
| 112 × 112 × 112 | 7396.5 | 7279.5 | 4021.5 | 3940.5 |
| 96 × 96 × 96 | 5316 | 5229.5 | 3042.5 | 3002.5 |
| 80 × 80 × 80 | 3630.5 | 3567.5 | 2100.5 | 2073 |
| 64 × 64 × 64 | 2287 | 2245.5 | 1380 | 1360.5 |
| 48 × 48 × 48 | 1306.5 | 1279.5 | 1037 | 1017 |
| 40 × 40 × 40 | 990.5 | 966 | 818 | 800 |
| 36 × 36 × 36 | 999.5 | 1017 | 818 | 840 |
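One way to read the timing table is as the relative time overhead of SPM over TPM, (T_SPM − T_TPM) / T_TPM. The sketch below computes it for a few rows copied from the table. The helper name and the choice of rows are illustrative, and the T values keep whatever unit the original table uses.

```python
# (matrix size, T SPM, T TPM, T SPM multi-pre, T TPM multi-pre); subset of rows.
rows = [
    ("1024 x 1024 x 1024", 2120197.5, 2118013.5, 5320001.0, 5318837.5),
    ("128 x 128 x 128", 10053.5, 9981.5, 5026.5, 4990.5),
    ("40 x 40 x 40", 990.5, 966.0, 818.0, 800.0),
    ("36 x 36 x 36", 999.5, 1017.0, 818.0, 840.0),
]


def overhead(t_spm: float, t_tpm: float) -> float:
    """Relative extra time of the SPM design compared with the TPM design."""
    return (t_spm - t_tpm) / t_tpm


for size, t_spm, t_tpm, t_spm_mp, t_tpm_mp in rows:
    single = overhead(t_spm, t_tpm)
    multi = overhead(t_spm_mp, t_tpm_mp)
    print(f"{size}: single {single:+.2%}, multi-precision {multi:+.2%}")
```

For the largest matrix the single-precision overhead comes out at about 0.1%, and it grows to a few percent for the smallest sizes. In the 36 × 36 × 36 row the reported SPM time is lower than the TPM time, so the computed overhead is negative.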

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, R.; Shen, J.; Wen, M.; Cao, Y.; Li, Y. Integration of Single-Port Memory (ISPM) for Multiprecision Computation in Systolic-Array-Based Accelerators. Electronics 2022, 11, 1587. https://doi.org/10.3390/electronics11101587
